Installing Kubernetes from Binaries (Part 1): Environment Preparation, etcd Installation, and the etcd Management Tool etcdkeeper

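Lab environment:

Architecture diagram:

Host environment:

Operating system: Docker requires Linux kernel 3.8 or later, so every host in this deployment runs CentOS 7.6.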
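The kernel requirement can be checked on each host before installing anything. This is a minimal sketch, not part of the original procedure: the helper name kernel_at_least is made up for illustration, and it compares only the major and minor numbers reported by uname -r.

```shell
#!/bin/sh
# kernel_at_least RELEASE MAJOR MINOR  (hypothetical helper)
# Succeeds when RELEASE (for example "3.10.0-957.el7.x86_64") is >= MAJOR.MINOR.
kernel_at_least() {
    release=$1
    want_major=$2
    want_minor=$3
    major=${release%%.*}        # text before the first dot
    rest=${release#*.}          # text after the first dot
    minor=${rest%%[!0-9]*}      # leading digits of the remainder
    [ "$major" -gt "$want_major" ] && return 0
    [ "$major" -eq "$want_major" ] && [ "$minor" -ge "$want_minor" ]
}

# Report on the running kernel without aborting the script.
if kernel_at_least "$(uname -r)" 3 8; then
    echo "kernel $(uname -r) meets the 3.8 requirement"
else
    echo "kernel $(uname -r) is older than 3.8"
fi
```

A stock CentOS 7.6 kernel reports a 3.10 release string, which passes this check.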

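VM: 5 virtual machines, each with 2 cores, 2 GB RAM and a 50 GB disk. Note: later experiments deliver Java services to k8s, so the worker nodes are sized at 4 cores and 8 GB. If you do not plan to deliver services, 2 cores and 2 GB is enough for every machine.

IP and service plan:

Installation steps:

Install the EPEL repository on all machines:

# yum -y install epel-release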

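Disable the firewall and SELinux:

# systemctl stop firewalld

# systemctl disable firewalld

# setenforce 0

Note: setenforce 0 only switches SELinux to permissive mode until the next reboot. To disable it permanently, edit the configuration file:

# vi /etc/selinux/config

SELINUX=disabled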
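Editing the file by hand on five machines is error-prone, so the same change can be scripted. This is a sketch under assumptions: set_selinux_disabled is a hypothetical helper name, and the demonstration runs on a scratch file rather than the real /etc/selinux/config.

```shell
#!/bin/sh
# set_selinux_disabled FILE  (hypothetical helper)
# Rewrites the SELINUX= line of FILE to "SELINUX=disabled".
# Comments and the SELINUXTYPE= line are left untouched.
set_selinux_disabled() {
    tmp=$(mktemp)
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$1" > "$tmp" && cat "$tmp" > "$1"
    rm -f "$tmp"
}

# Demonstration on a scratch copy.
demo=$(mktemp)
printf '# sample config\nSELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo"
set_selinux_disabled "$demo"
grep '^SELINUX=' "$demo"    # prints: SELINUX=disabled
rm -f "$demo"
```

On a real host you would call set_selinux_disabled /etc/selinux/config as root; the change takes effect after the next reboot.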

Install BIND 9 on hdss7-11 (the first command installs some common tools):

# yum -y install wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils

# yum -y install bind

Configure BIND:

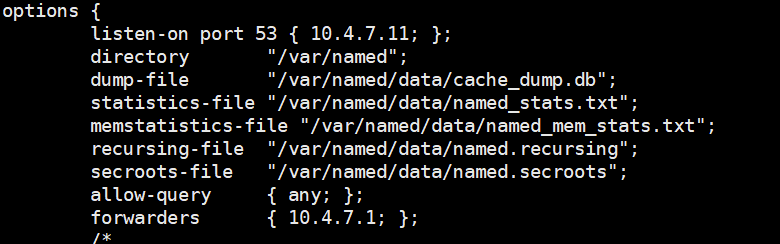

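# vi /etc/named.conf

Change the following options. Note that named is strict about configuration file syntax:

listen-on port 53 { 127.0.0.1; }; ----> listen-on port 53 { 10.4.7.11; }; # replace 127.0.0.1 with this host's IP

allow-query { localhost; }; ----> allow-query { any; }; # which clients may query this server

dnssec-enable yes; ----> dnssec-enable no; # DNSSEC support switch. Note: DNSSEC provides (1) origin authentication of DNS data, (2) data integrity verification, (3) authenticated denial of existence

dnssec-validation yes; ----> dnssec-validation no; # DNSSEC validation switch

Add the following option inside the options block:

forwarders { 10.4.7.1; }; # upstream DNS server, usually the gateway, so that public names can still be resolved

If you do not use IPv6, you can delete the following option:

listen-on-v6 port 53 { ::1; };

Screenshot of the configuration file (only the modified parts):

Check the configuration file:

# named-checkconf

Edit the zones file:

# vi /etc/named.rfc1912.zones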

At the end of the file, add the two DNS zones used in this deployment:

    zone "host.com" IN {
            type master;
            file "host.com.zone";
            allow-update { 10.4.7.11; };
    };

    zone "od.com" IN {
            type master;
            file "od.com.zone";
            allow-update { 10.4.7.11; };
    };

Create the zone files for the two zones just added, and put the A records we need into them:

# vi /var/named/host.com.zone

    $ORIGIN host.com.
    $TTL 600    ; 10 minutes
    @           IN SOA  dns.host.com. dnsadmin.host.com. (
                        2019111001 ; serial
                        10800      ; refresh (3 hours)
                        900        ; retry (15 minutes)
                        604800     ; expire (1 week)
                        86400      ; minimum (1 day)
                        )
                NS   dns.host.com.
    $TTL 60     ; 1 minute
    dns         A    10.4.7.11
    HDSS7-11    A    10.4.7.11
    HDSS7-12    A    10.4.7.12
    HDSS7-21    A    10.4.7.21
    HDSS7-22    A    10.4.7.22
    HDSS7-200   A    10.4.7.200

# vi /var/named/od.com.zone

    $ORIGIN od.com.
    $TTL 600    ; 10 minutes
    @           IN SOA  dns.od.com. dnsadmin.od.com. (
                        2019111001 ; serial
                        10800      ; refresh (3 hours)
                        900        ; retry (15 minutes)
                        604800     ; expire (1 week)
                        86400      ; minimum (1 day)
                        )
                NS   dns.od.com.
    $TTL 60     ; 1 minute
    dns         A    10.4.7.11
    harbor      A    10.4.7.200
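Typing A records by hand invites typos, so the record lines can be generated from a plain list of name and address pairs. This is only an illustrative sketch: render_a_records is a hypothetical helper, and the SOA and NS header still has to be written as shown in the zone files.

```shell
#!/bin/sh
# render_a_records  (hypothetical helper)
# Reads "name ip" pairs on stdin and prints one A record per pair.
render_a_records() {
    while read -r name ip; do
        # Skip blank lines and comment lines.
        case "$name" in ''|'#'*) continue ;; esac
        printf '%-20s A    %s\n' "$name" "$ip"
    done
}

# Records for the host.com zone, taken from the host plan in this article.
render_a_records <<'EOF'
dns        10.4.7.11
HDSS7-11   10.4.7.11
HDSS7-12   10.4.7.12
HDSS7-21   10.4.7.21
HDSS7-22   10.4.7.22
HDSS7-200  10.4.7.200
EOF
```

The output can be pasted below the $TTL 60 line of a zone file. After saving, named-checkzone host.com /var/named/host.com.zone validates the result.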

Point every host's DNS at the new BIND 9 server by changing nameserver (on all hosts):

# vim /etc/resolv.conf

    search host.com
    nameserver 10.4.7.11

If the NIC configuration sets DNS1, update it as well:

# vim /etc/sysconfig/network-scripts/ifcfg-eth0

DNS1=10.4.7.11

Restart networking (on all hosts) and the named service (on the BIND host):

# systemctl restart network

# systemctl enable named    # run on 10.4.7.11

# systemctl restart named   # run on 10.4.7.11

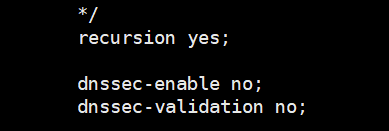
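Restarting the network service can regenerate /etc/resolv.conf from the NIC settings, so it is worth confirming that each host still points at 10.4.7.11 afterwards. A small sketch follows; first_nameserver is a hypothetical helper name, not something from the original procedure.

```shell
#!/bin/sh
# first_nameserver FILE  (hypothetical helper)
# Prints the first nameserver address listed in a resolv.conf-style file.
first_nameserver() {
    awk '$1 == "nameserver" { print $2; exit }' "$1"
}

expected=10.4.7.11
actual=$(first_nameserver /etc/resolv.conf 2>/dev/null || true)
if [ "$actual" = "$expected" ]; then
    echo "resolver OK: $actual"
else
    echo "resolver is '$actual', expected $expected"
fi
```

For an end-to-end check, dig -t A hdss7-21.host.com @10.4.7.11 +short (from the bind-utils package installed earlier) queries the new server directly.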

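Verify that public names resolve and that queries go through our own DNS server:

# nslookup www.baidu.com

Note: if the VMs run on your own Windows or Mac machine, point that machine's DNS at 10.4.7.11 too, so that you can check things in a browser later.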

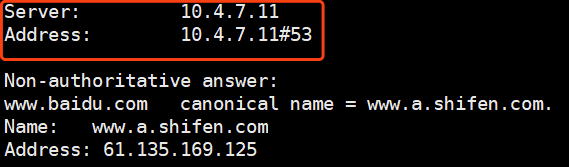

Set up the CA certificate service:

We use cfssl.

Run the following on hdss7-200:

# cd /usr/bin

# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/bin/cfssl

# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/bin/cfssl-json

# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/bin/cfssl-certinfo

Make them executable:

# chmod +x /usr/bin/cfssl*

Create a directory for the certificates (the location is up to you):

# mkdir /opt/certs

# cd /opt/certs

Create the certificate signing request file:

# vi /opt/certs/ca-csr.json

File contents. Note the "expiry": "175200h" key: a kubeadm installation issues certificates valid for 1 year by default, while this manual deployment sets 20 years. If the certificates expire, the whole k8s cluster stops working.

    {
        "CN": "OldboyEdu",
        "hosts": [
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "od",
                "OU": "ops"
            }
        ],
        "ca": {
            "expiry": "175200h"
        }
    }
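The expiry is given in hours, which is easy to misread. A quick arithmetic check, written as a sketch (hours_to_years is a hypothetical helper that counts whole 365-day years and ignores leap days):

```shell
#!/bin/sh
# hours_to_years HOURS  (hypothetical helper)
# Prints the number of whole 365-day years contained in HOURS.
hours_to_years() {
    echo $(( $1 / 24 / 365 ))
}

hours_to_years 175200   # the CA expiry used here; prints 20
hours_to_years 8760     # one year, for comparison; prints 1
```

So 175200h is 7300 days, which matches the 20 years stated in the text.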

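Generate the CA certificate:

# cfssl gencert -initca ca-csr.json | cfssl-json -bare ca

On success, ca.pem, ca-key.pem and ca.csr are created in /opt/certs.

Note: every certificate issued later in this series is signed by this CA.

Next, install Docker on hdss7-21, hdss7-22 and hdss7-200, then set up the private registry Harbor on hdss7-200:

# curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun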

Edit the Docker configuration file:

# mkdir -p /etc/docker

# mkdir -p /data/docker

# vi /etc/docker/daemon.json

    {
        "graph": "/data/docker",
        "storage-driver": "overlay2",
        "insecure-registries": ["registry.access.redhat.com","quay.io","harbor.od.com"],
        "registry-mirrors": ["https://q2gr04ke.mirror.aliyuncs.com"],
        "bip": "172.7.21.1/24",
        "exec-opts": ["native.cgroupdriver=systemd"],
        "live-restore": true
    }
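The bip value 172.7.21.1/24 appears to mirror the last two octets of the host address 10.4.7.21. The article does not state this rule, so treat the mapping below as an assumption inferred from that single value; bip_for_host is a hypothetical helper. If the assumption holds, each Docker host gets its own bridge subnet, which makes container addresses easy to trace back to a host.

```shell
#!/bin/sh
# bip_for_host IP  (hypothetical helper, ASSUMED convention)
# Maps a host address a.b.c.d to the bridge address 172.c.d.1/24.
bip_for_host() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the dotted quad into $1..$4
    IFS=$old_ifs
    echo "172.$3.$4.1/24"
}

for host_ip in 10.4.7.21 10.4.7.22 10.4.7.200; do
    echo "$host_ip -> $(bip_for_host "$host_ip")"
done
```

Whatever scheme you choose, the bridge subnets of different Docker hosts should not overlap.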

Start Docker and enable it at boot:

# systemctl start docker

# systemctl enable docker

On hdss7-200, create a directory under /opt for downloaded packages:

# mkdir /opt/src

# cd /opt/src

Now install the Harbor private registry.

Download and unpack it:

# wget https://github.com/goharbor/harbor/releases/download/v1.9.2/harbor-offline-installer-v1.9.2.tgz

# tar -zxf harbor-offline-installer-v1.9.2.tgz -C /opt/

# cd /opt/

# mv harbor harbor-1.9.2

# ln -s /opt/harbor-1.9.2 /opt/harbor    # the symlink makes version management easier

Edit the Harbor configuration file:

# vim /opt/harbor/harbor.yml

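Change the following settings:

hostname: harbor.od.com    # the name we added earlier to our own DNS on hdss7-11

port: 180    # the HTTP port (in the http: section); avoids a conflict with the nginx port

data_volume: /data/harbor

location: /data/harbor/logs    # the log directory (in the log: section)

Create the data and log directories:

# mkdir -p /data/harbor/logs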

Next, install docker-compose:

# yum install docker-compose -y    # may take a while, depending on your network

Run the Harbor install script:

# sh /opt/harbor/install.sh    # may take a while, depending on your network

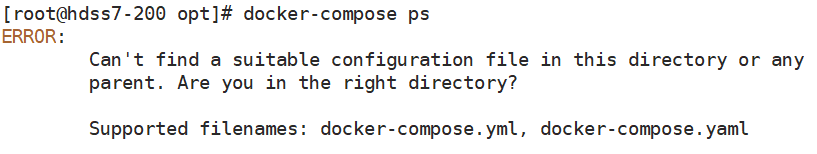

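Change into the harbor directory and run the following command. If it reports an error, check that you are in the right directory:

# cd /opt/harbor

# docker-compose ps

If every container shows Up, Harbor is running normally.

Install nginx:

# yum install nginx -y    # yum is used here; building from source also works

Edit the nginx configuration file:

# vi /etc/nginx/conf.d/harbor.od.com.conf

Reverse-proxy Harbor:

    server {
        listen 80;
        server_name harbor.od.com;
        client_max_body_size 1000m;
        location / {
            proxy_pass http://127.0.0.1:180;
        }
    }

Start nginx and enable it at boot:

# systemctl start nginx

# systemctl enable nginx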

Check the Harbor and nginx ports:

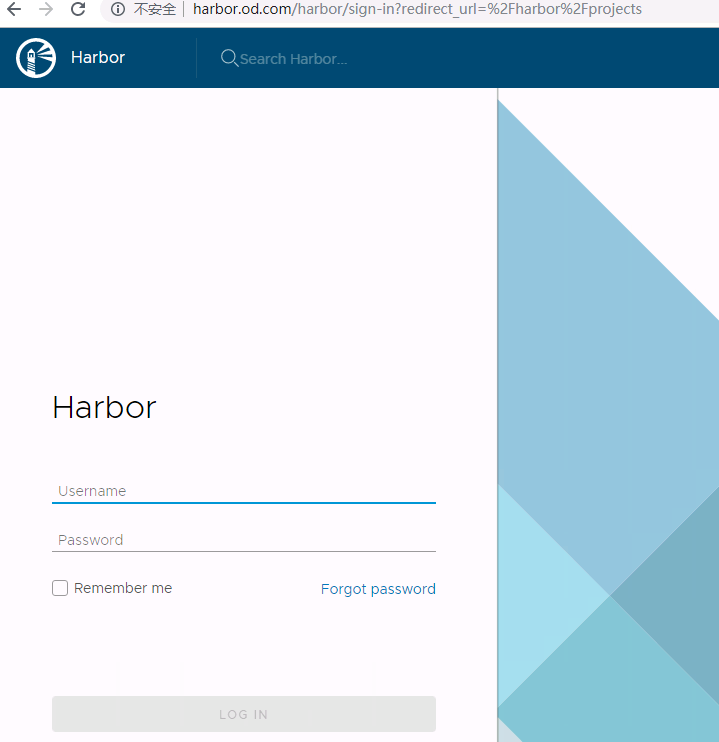

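Try opening harbor.od.com in a browser on the host machine. If it does not load, check that the machine's DNS is 10.4.7.11 (the server running BIND); adding an entry to the hosts file also works:

harbor.od.com

Default account: admin

Default password: Harbor12345

After logging in, create a new project to test with (the commands below push to a project named public):

Pull an nginx image with Docker and use it to test the private registry:

# docker pull nginx:1.7.9

# docker login harbor.od.com

# docker tag 84581e99d807 harbor.od.com/public/nginx:v1.7.9    # 84581e99d807 is the image ID; check yours with docker images

# docker push harbor.od.com/public/nginx:v1.7.9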
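Tagging by image ID works, but the ID has to be looked up each time. Tagging by name avoids that. The sketch below only prints the commands instead of running them; harbor_ref is a hypothetical helper, and the "v" prefix on the tag follows the naming used in this article.

```shell
#!/bin/sh
# harbor_ref REGISTRY PROJECT IMAGE:TAG  (hypothetical helper)
# Builds REGISTRY/PROJECT/IMAGE:vTAG. Assumes the source reference has a tag.
harbor_ref() {
    registry=$1
    project=$2
    name=${3%%:*}      # part before the colon
    tag=${3#*:}        # part after the colon
    echo "$registry/$project/$name:v$tag"
}

src=nginx:1.7.9
dst=$(harbor_ref harbor.od.com public "$src")
echo "docker tag $src $dst"
echo "docker push $dst"
```

Running the two printed commands after docker login harbor.od.com has the same effect as the ID-based commands above.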

Then check the private registry:

The image has been uploaded to the private registry.

Now we start deploying etcd.

First request a certificate. All certificate operations are done on hdss7-200.

# cd /opt/certs

# vi /opt/certs/ca-config.json

    {
        "signing": {
            "default": {
                "expiry": "175200h"
            },
            "profiles": {
                "server": {
                    "expiry": "175200h",
                    "usages": [
                        "signing",
                        "key encipherment",
                        "server auth"
                    ]
                },
                "client": {
                    "expiry": "175200h",
                    "usages": [
                        "signing",
                        "key encipherment",
                        "client auth"
                    ]
                },
                "peer": {
                    "expiry": "175200h",
                    "usages": [
                        "signing",
                        "key encipherment",
                        "server auth",
                        "client auth"
                    ]
                }
            }
        }
    }

# vi etcd-peer-csr.json

    {
        "CN": "k8s-etcd",
        "hosts": [
            "10.4.7.11",
            "10.4.7.12",
            "10.4.7.21",
            "10.4.7.22"
        ],
        "key": {
            "algo": "rsa",
            "size": 2048
        },
        "names": [
            {
                "C": "CN",
                "ST": "beijing",
                "L": "beijing",
                "O": "od",
                "OU": "ops"
            }
        ]
    }
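The hosts list becomes the certificate's subject alternative names, so every etcd node address must appear in it or TLS verification between members will fail. A quick pre-signing check, written as a sketch (csr_lists_host is a hypothetical helper that simply looks for the quoted address in the file):

```shell
#!/bin/sh
# csr_lists_host FILE IP  (hypothetical helper)
# Succeeds when "IP" appears as a quoted string in FILE.
# Matching on the quotes keeps 10.4.7.2 from matching 10.4.7.21.
csr_lists_host() {
    grep -qF "\"$2\"" "$1"
}

csr=/opt/certs/etcd-peer-csr.json
if [ -f "$csr" ]; then
    for node_ip in 10.4.7.12 10.4.7.21 10.4.7.22; do
        if csr_lists_host "$csr" "$node_ip"; then
            echo "$node_ip: listed"
        else
            echo "$node_ip: MISSING from hosts"
        fi
    done
else
    echo "$csr not found; run this on hdss7-200"
fi
```

The extra 10.4.7.11 entry in the list is harmless and leaves room to move an etcd member to that host without reissuing the certificate.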

Sign the certificate:

# cd /opt/certs

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssl-json -bare etcd-peer

Following the architecture design, deploy etcd on three hosts: hdss7-12, hdss7-21 and hdss7-22.

First create the etcd user:

# useradd -s /sbin/nologin -M etcd

Create a directory for downloaded packages:

# mkdir -p /opt/src

# cd /opt/src

Download etcd:

Releases: https://github.com/etcd-io/etcd/tags

# wget https://github.com/etcd-io/etcd/releases/download/v3.2.28/etcd-v3.2.28-linux-amd64.tar.gz

# tar -zxf etcd-v3.2.28-linux-amd64.tar.gz -C ../

# ln -s /opt/etcd-v3.2.28-linux-amd64/ /opt/etcd

# mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

Edit the etcd startup script:

# vim /opt/etcd/etcd-server-startup.sh

On the other two servers, change the parts marked in red (the node name and the IP addresses) to that host's own values:

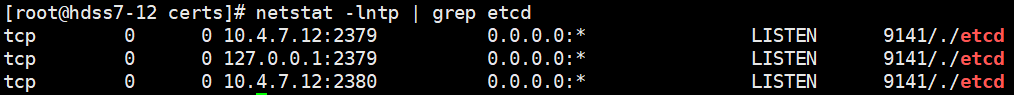

    #!/bin/sh
    ./etcd --name etcd-server-7-12 \
           --data-dir /data/etcd/etcd-server \
           --listen-peer-urls https://10.4.7.12:2380 \
           --listen-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \
           --quota-backend-bytes 8000000000 \
           --initial-advertise-peer-urls https://10.4.7.12:2380 \
           --advertise-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \
           --initial-cluster etcd-server-7-12=https://10.4.7.12:2380,etcd-server-7-21=https://10.4.7.21:2380,etcd-server-7-22=https://10.4.7.22:2380 \
           --ca-file ./certs/ca.pem \
           --cert-file ./certs/etcd-peer.pem \
           --key-file ./certs/etcd-peer-key.pem \
           --client-cert-auth \
           --trusted-ca-file ./certs/ca.pem \
           --peer-ca-file ./certs/ca.pem \
           --peer-cert-file ./certs/etcd-peer.pem \
           --peer-key-file ./certs/etcd-peer-key.pem \
           --peer-client-cert-auth \
           --peer-trusted-ca-file ./certs/ca.pem \
           --log-output stdout
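Only five flags differ between the three nodes; --initial-cluster and the certificate paths are identical everywhere. To make the per-host edits explicit, the sketch below prints those five flags for a given node address. The helper names etcd_name_for_ip and per_node_flags are made up for illustration, and the name pattern is taken from the etcd-server-7-12 style used in the script.

```shell
#!/bin/sh
# etcd_name_for_ip IP  (hypothetical helper)
# 10.4.7.12 -> etcd-server-7-12
etcd_name_for_ip() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the dotted quad into $1..$4
    IFS=$old_ifs
    echo "etcd-server-$3-$4"
}

# per_node_flags IP  (hypothetical helper)
# Prints the flags whose values must change on each node.
per_node_flags() {
    node_ip=$1
    cat <<EOF
--name $(etcd_name_for_ip "$node_ip")
--listen-peer-urls https://${node_ip}:2380
--listen-client-urls https://${node_ip}:2379,http://127.0.0.1:2379
--initial-advertise-peer-urls https://${node_ip}:2380
--advertise-client-urls https://${node_ip}:2379,http://127.0.0.1:2379
EOF
}

per_node_flags 10.4.7.21
```

The --name value must match one of the names listed in --initial-cluster, otherwise the member will not join.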

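Make the script executable:

# chmod +x /opt/etcd/etcd-server-startup.sh

Create the certificate directory (it already exists if you ran the mkdir -p above):

# mkdir -p /opt/etcd/certs

# cd /opt/etcd/certs

Copy the certificates:

# scp hdss7-200:/opt/certs/ca.pem ./

# scp hdss7-200:/opt/certs/etcd-peer.pem ./

# scp hdss7-200:/opt/certs/etcd-peer-key.pem ./

Set ownership of the directories:

# chown -R etcd:etcd /opt/etcd/certs /data/etcd /data/logs/etcd-server

Install supervisor to manage the service:

# yum install supervisor -y

Start it and enable it at boot:

# systemctl start supervisord

# systemctl enable supervisord

Create the supervisor program file for etcd; the part marked in red (the program name) depends on the host:

# vi /etc/supervisord.d/etcd-server.ini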

    [program:etcd-server-7-12]
    command=/opt/etcd/etcd-server-startup.sh                  ; the program (relative uses PATH, can take args)
    numprocs=1                                                ; number of processes copies to start (def 1)
    directory=/opt/etcd                                       ; directory to cwd to before exec (def no cwd)
    autostart=true                                            ; start at supervisord start (default: true)
    autorestart=true                                          ; restart at unexpected quit (default: true)
    startsecs=30                                              ; number of secs prog must stay running (def. 1)
    startretries=3                                            ; max # of serial start failures (default 3)
    exitcodes=0,2                                             ; 'expected' exit codes for process (default 0,2)
    stopsignal=QUIT                                           ; signal used to kill process (default TERM)
    stopwaitsecs=10                                           ; max num secs to wait b4 SIGKILL (default 10)
    user=etcd                                                 ; setuid to this UNIX account to run the program
    redirect_stderr=true                                      ; redirect proc stderr to stdout (default false)
    stdout_logfile=/data/logs/etcd-server/etcd.stdout.log     ; stdout log path, NONE for none; default AUTO
    stdout_logfile_maxbytes=64MB                              ; max # logfile bytes b4 rotation (default 50MB)
    stdout_logfile_backups=4                                  ; # of stdout logfile backups (default 10)
    stdout_capture_maxbytes=1MB                               ; number of bytes in 'capturemode' (default 0)
    stdout_events_enabled=false                               ; emit events on stdout writes (default false)
    killasgroup=true
    stopasgroup=true

Reload the supervisord configuration:

# supervisorctl update

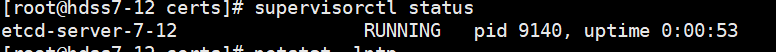

Check the status:

# supervisorctl status

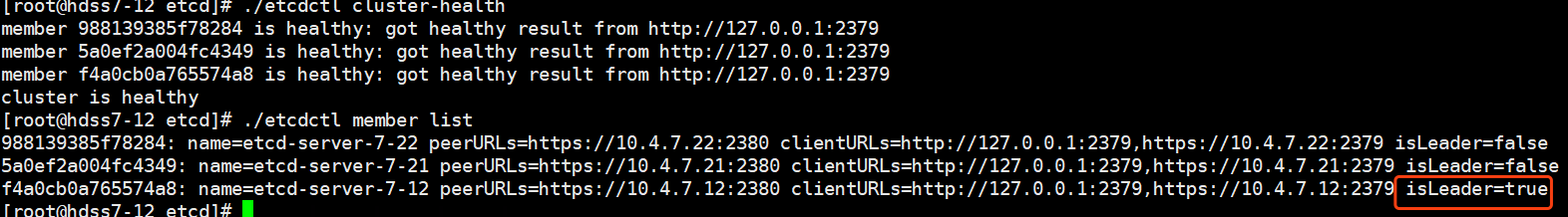

Check the state of the etcd cluster:

# cd /opt/etcd/

# ./etcdctl member list

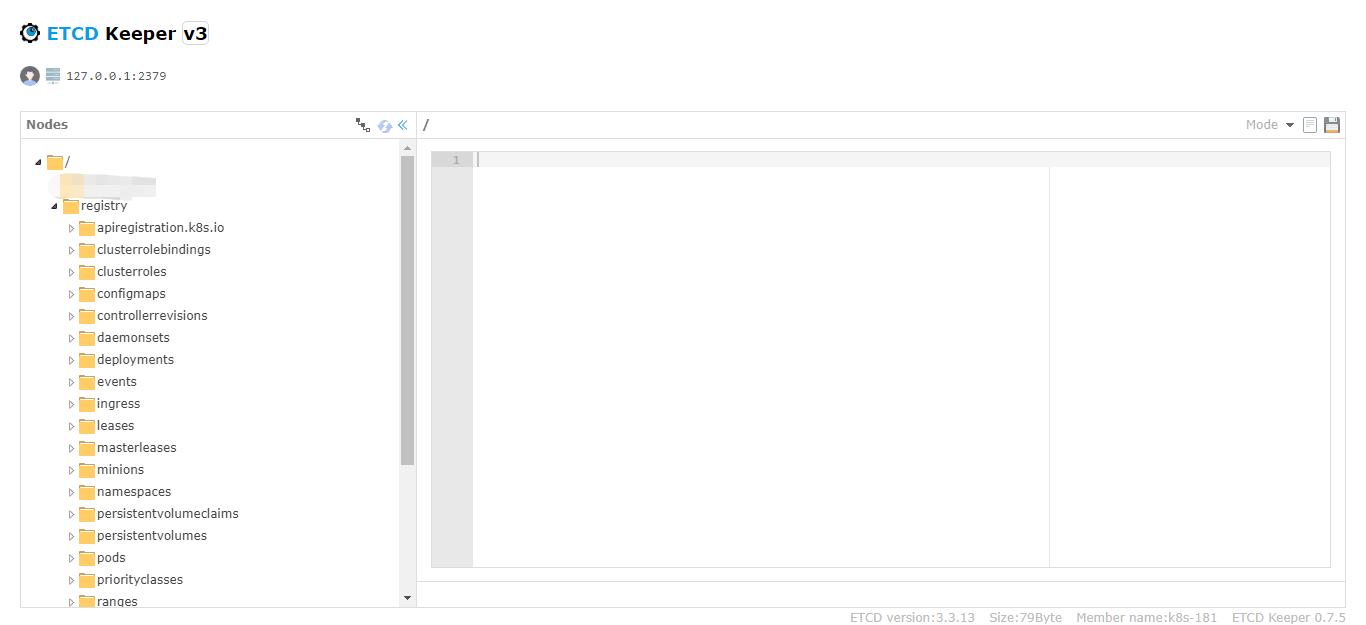
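The member list above talks to the local plain-HTTP listener on 127.0.0.1:2379. To confirm that the TLS client endpoints also answer, the v3 API of etcdctl can be pointed at all three nodes with the peer certificate, which carries the client auth usage. This is a sketch under assumptions: endpoints_for is a hypothetical helper, and the certificate paths are the ones used in this article.

```shell
#!/bin/sh
# endpoints_for IP...  (hypothetical helper)
# Joins the given addresses into a comma-separated list of https client URLs.
endpoints_for() {
    out=
    for node_ip in "$@"; do
        out="${out:+$out,}https://${node_ip}:2379"
    done
    echo "$out"
}

eps=$(endpoints_for 10.4.7.12 10.4.7.21 10.4.7.22)

if [ -x /opt/etcd/etcdctl ]; then
    # ETCDCTL_API=3 selects the v3 command set and its TLS flag names.
    ETCDCTL_API=3 /opt/etcd/etcdctl \
        --cacert=/opt/etcd/certs/ca.pem \
        --cert=/opt/etcd/certs/etcd-peer.pem \
        --key=/opt/etcd/certs/etcd-peer-key.pem \
        --endpoints="$eps" \
        endpoint health
else
    echo "etcdctl not found; endpoints would be: $eps"
fi
```

The v2 command ./etcdctl cluster-health gives a similar summary over the local HTTP listener.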

Once etcd is up, it stores a large number of keys. To view and manage them you can use a small tool called etcdkeeper:

Original article: https://www.cnblogs.com/gytangyao/p/11407205.html

Download etcdkeeper on one of the etcd nodes:

# cd /opt/src

# wget https://github.com/evildecay/etcdkeeper/releases/download/v0.7.5/etcdkeeper-v0.7.5-linux_x86_64.zip

Unpacking the archive requires unzip: yum install unzip -y

# unzip etcdkeeper-*-linux_x86_64.zip

# rm etcdkeeper-*-linux_x86_64.zip

# mv etcdkeeper ../etcdkeeper-0.7.5

# ln -s /opt/etcdkeeper-0.7.5/ /opt/etcdkeeper

# cd /opt/etcdkeeper

# chmod +x etcdkeeper

Write a service file

The service file runs etcdkeeper in the background so that it can serve HTTP.

# cd /lib/systemd/system

# vim etcdkeeper.service

    [Unit]
    Description=etcdkeeper service
    After=network.target

    [Service]
    Type=simple
    ExecStart=/opt/etcdkeeper/etcdkeeper -h 10.4.7.12 -p 8800
    ExecReload=/bin/kill -HUP $MAINPID
    KillMode=process
    Restart=on-failure
    PrivateTmp=true

    [Install]
    WantedBy=multi-user.target

-h sets the address etcdkeeper listens on for HTTP, here the IPv4 address 10.4.7.12

-p sets the port etcdkeeper listens on for HTTP

Controlling the service

# systemctl daemon-reload    # make systemd pick up the new unit file

# systemctl start etcdkeeper    # start the etcdkeeper service

# systemctl stop etcdkeeper    # stop the etcdkeeper service

# systemctl enable etcdkeeper.service    # start at boot

# systemctl disable etcdkeeper.service    # do not start at boot

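Access security

If etcd's own authentication is enabled, nothing more is needed.

If it is not enabled, consider putting etcdkeeper behind an Nginx reverse proxy with HTTP basic auth.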

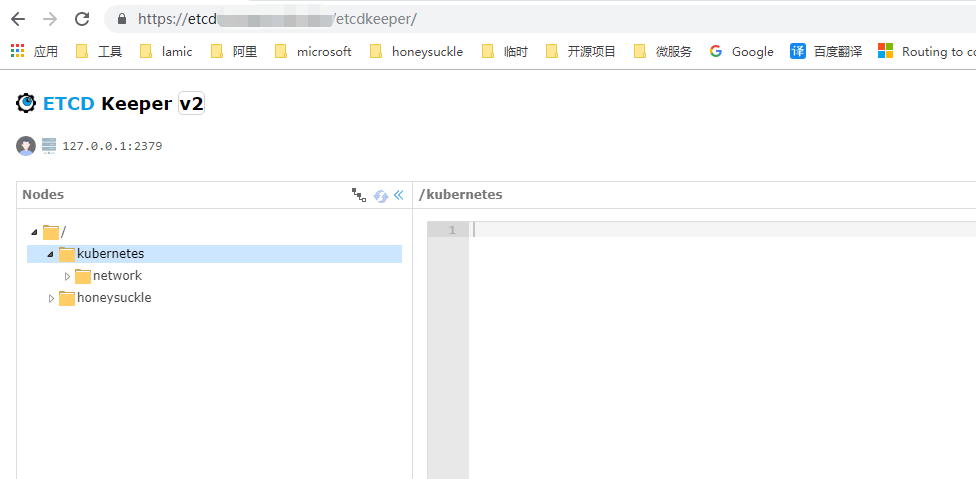
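The reverse proxy with basic auth can be sketched as follows. Everything specific here is an assumption for illustration: the helper render_etcdkeeper_proxy, the server name etcdkeeper.od.com (which would need its own A record in the od.com zone), and the password file path. The nginx directives auth_basic and auth_basic_user_file are standard.

```shell
#!/bin/sh
# render_etcdkeeper_proxy SERVER_NAME UPSTREAM HTPASSWD_FILE  (hypothetical helper)
# Prints an nginx server block that asks for a password before proxying.
render_etcdkeeper_proxy() {
    cat <<EOF
server {
    listen 80;
    server_name $1;
    location / {
        auth_basic "etcdkeeper";
        auth_basic_user_file $3;
        proxy_pass http://$2;
    }
}
EOF
}

# Example values (assumed, not from the article); redirect the output to a
# file under /etc/nginx/conf.d/ on the proxy host.
render_etcdkeeper_proxy etcdkeeper.od.com 10.4.7.12:8800 /etc/nginx/etcdkeeper.htpasswd

# A password entry can be created with, for example:
#   printf 'admin:%s\n' "$(openssl passwd -apr1)" >> /etc/nginx/etcdkeeper.htpasswd
```

The proxy only protects etcdkeeper if port 8800 is not reachable directly, for example by having etcdkeeper listen on 127.0.0.1 and running nginx on the same host.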

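Open issues

When etcdkeeper is published to a public network, v2 data is visible but v3 data is not. The cause has not been found yet.

Test access

http://10.4.7.12:8800

This completes the etcd cluster. Next we deploy kube-apiserver: etcd acts as the server and kube-apiserver as its client. Setting up kube-apiserver is covered in the next chapter.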