Hive Extensions: the HWI Web Interface

HWI is short for Hive Web Interface. It is a web-based alternative to the Hive CLI that lets you view data through web pages.

1. Switch user and start the cluster

2. Check the port status
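
The commands used for this step are not preserved in the post. As a minimal sketch, assuming bash is available, the helper below checks whether anything accepts TCP connections on a given port. The function name `port_open` is hypothetical, and 9999 is used only because it is the HWI port that appears in the browser URL further down.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if host:port accepts a TCP connection.
port_open() {
  local host="$1" port="$2"
  # /dev/tcp/<host>/<port> is a bash redirection feature, not a real file.
  # The subshell closes the socket as soon as it exits.
  (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null
}

if port_open 127.0.0.1 9999; then
  echo "port 9999 is open"
else
  echo "port 9999 is closed"
fi
```

On most Linux systems `ss -tlnp` gives a fuller picture, since it lists every listening TCP port together with the owning process.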

3. Install apache-hive-2.0.0-src.tar.gz

4. Unpack the archive, build the package, and edit the configuration files
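
The post does not show the commands for this step, so what follows is only a sketch of one common approach: bundle the HWI web sources from the source tree into a WAR file, place it in Hive's `lib` directory, and point `hive-site.xml` at it. The unpack location, the install path, the `hwi/web` source directory, and the function names `build_hwi_war` and `hwi_properties` are all assumptions. The three property names are Hive's HWI settings.

```shell
#!/usr/bin/env bash
HIVE_VERSION="2.0.0"                                         # version named in this post
SRC_DIR="${SRC_DIR:-$HOME/apache-hive-${HIVE_VERSION}-src}"  # assumed unpack location
HIVE_HOME="${HIVE_HOME:-/usr/local/hive}"                    # assumed Hive install path
WAR_NAME="hive-hwi-${HIVE_VERSION}.war"

# Assumed packaging step: bundle the HWI web sources into a WAR in Hive's lib directory.
# Defined here but not called, so pasting this block changes nothing on disk.
build_hwi_war() {
  (cd "${SRC_DIR}/hwi/web" && jar -cf "${HIVE_HOME}/lib/${WAR_NAME}" ./*)
}

# Print the hive-site.xml properties that tell Hive where the WAR is and where to listen.
hwi_properties() {
  cat <<EOF
<property>
  <name>hive.hwi.war.file</name>
  <value>lib/${WAR_NAME}</value>
</property>
<property>
  <name>hive.hwi.listen.host</name>
  <value>0.0.0.0</value>
</property>
<property>
  <name>hive.hwi.listen.port</name>
  <value>9999</value>
</property>
EOF
}

hwi_properties
```

The value of `hive.hwi.war.file` is a path relative to `HIVE_HOME`, which is why the WAR goes under `lib/`. The printed properties belong inside the `<configuration>` element of `hive-site.xml`.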

5. HWI is compiled with Apache Ant, so Ant must be installed

6. Unpack the archive, rename the directory, and give the hadoop user ownership of it

7. Configure the environment variables

8. Switch user and check the Ant information
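
The two Ant steps above might look roughly like this. It is a sketch, not the author's exact configuration: the install path `/usr/local/ant` is an assumption, so substitute the directory Ant was actually unpacked and renamed to.

```shell
#!/usr/bin/env bash
# Assumed install path; replace with the real Ant directory.
export ANT_HOME="${ANT_HOME:-/usr/local/ant}"
export PATH="${PATH}:${ANT_HOME}/bin"

# Confirm that the shell can find Ant and print its version.
if command -v ant >/dev/null 2>&1; then
  ant -version
else
  echo "ant not found; check ANT_HOME (${ANT_HOME})"
fi
```

To make the setting permanent, the two `export` lines would typically go into a profile file such as `/etc/profile` or the user's `~/.bash_profile`, followed by `source` on that file.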

9. Start the Hive HWI service

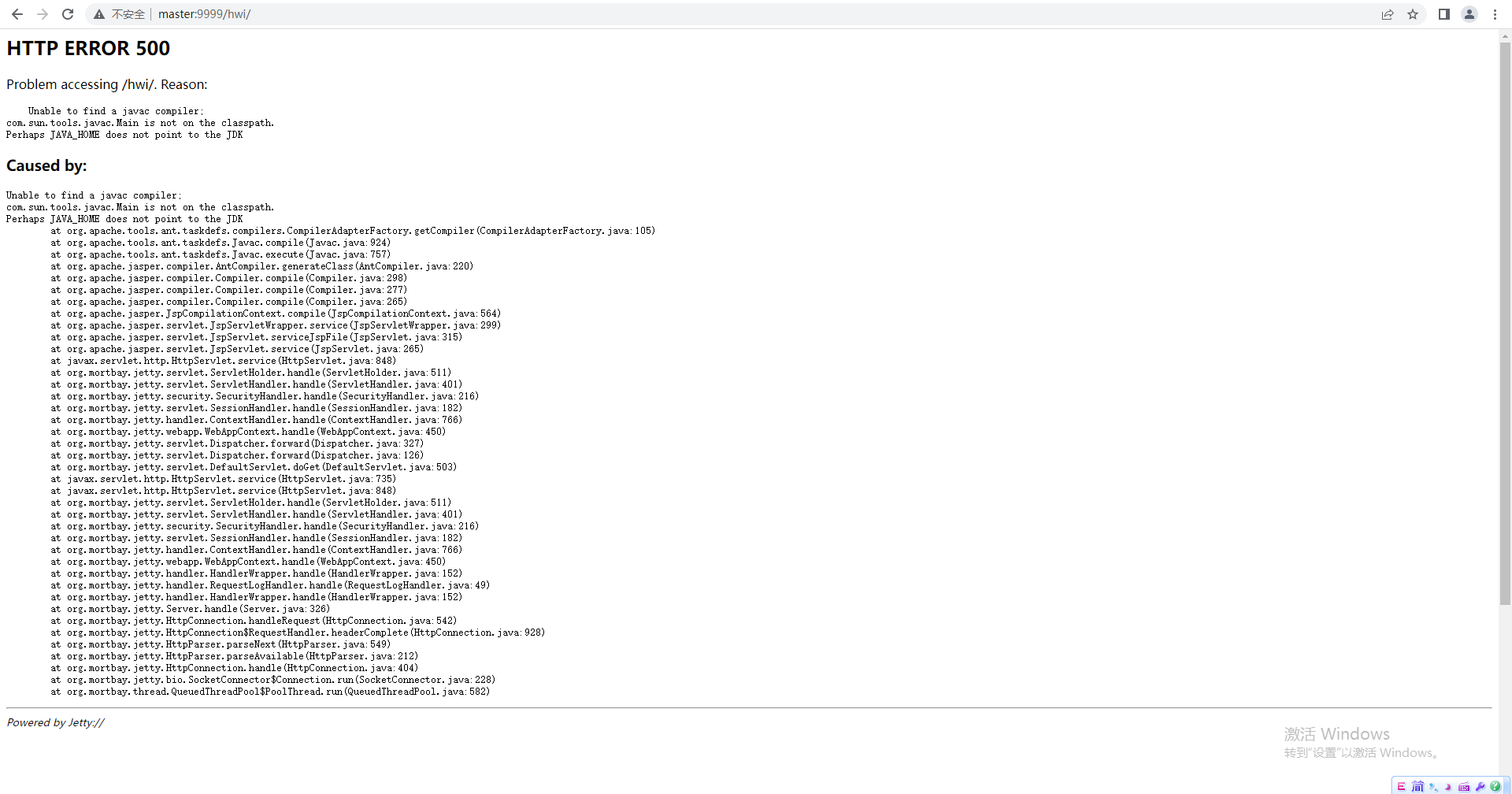
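
HWI is launched through the Hive launcher with `hive --service hwi`. The wrapper below is a small sketch that runs it in the background and sends output to a log file. The function name `start_hwi` and the log path are assumptions, not part of the original post.

```shell
#!/usr/bin/env bash
HWI_LOG="${HWI_LOG:-/tmp/hive-hwi.log}"   # assumed log location

# Start HWI in the background so the terminal stays usable.
start_hwi() {
  if ! command -v hive >/dev/null 2>&1; then
    echo "hive not found on PATH" >&2
    return 1
  fi
  hive --service hwi > "${HWI_LOG}" 2>&1 &
  echo "HWI starting (pid $!), log: ${HWI_LOG}"
}
```

Call `start_hwi` as the user that owns the Hive installation, then follow the log with `tail -f "$HWI_LOG"` to watch for startup errors.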

10. Open http://master:9999/hwi in a browser (if you have not set up a hostname mapping, replace master with the IP address)
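
The hostname-or-IP substitution can also be checked from the command line. This is only a sketch: `hwi_url` and `hwi_status` are hypothetical helper names, the IP address is a placeholder, and `hwi_status` assumes `curl` is installed.

```shell
#!/usr/bin/env bash
# Build the HWI URL from a host (name or IP) and an optional port (default 9999).
hwi_url() {
  local host="$1" port="${2:-9999}"
  echo "http://${host}:${port}/hwi"
}

# Print the HTTP status code that HWI returns for that URL.
hwi_status() {
  curl -s -o /dev/null -w '%{http_code}\n' "$(hwi_url "$@")"
}

hwi_url master          # prints http://master:9999/hwi
hwi_url 192.168.1.10    # placeholder IP for a machine without a hosts entry
```

Running `hwi_status master` on a machine that can reach the server shows whether the page responds before you open a browser.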

11. Copy ant.jar and tools.jar into the lib directory

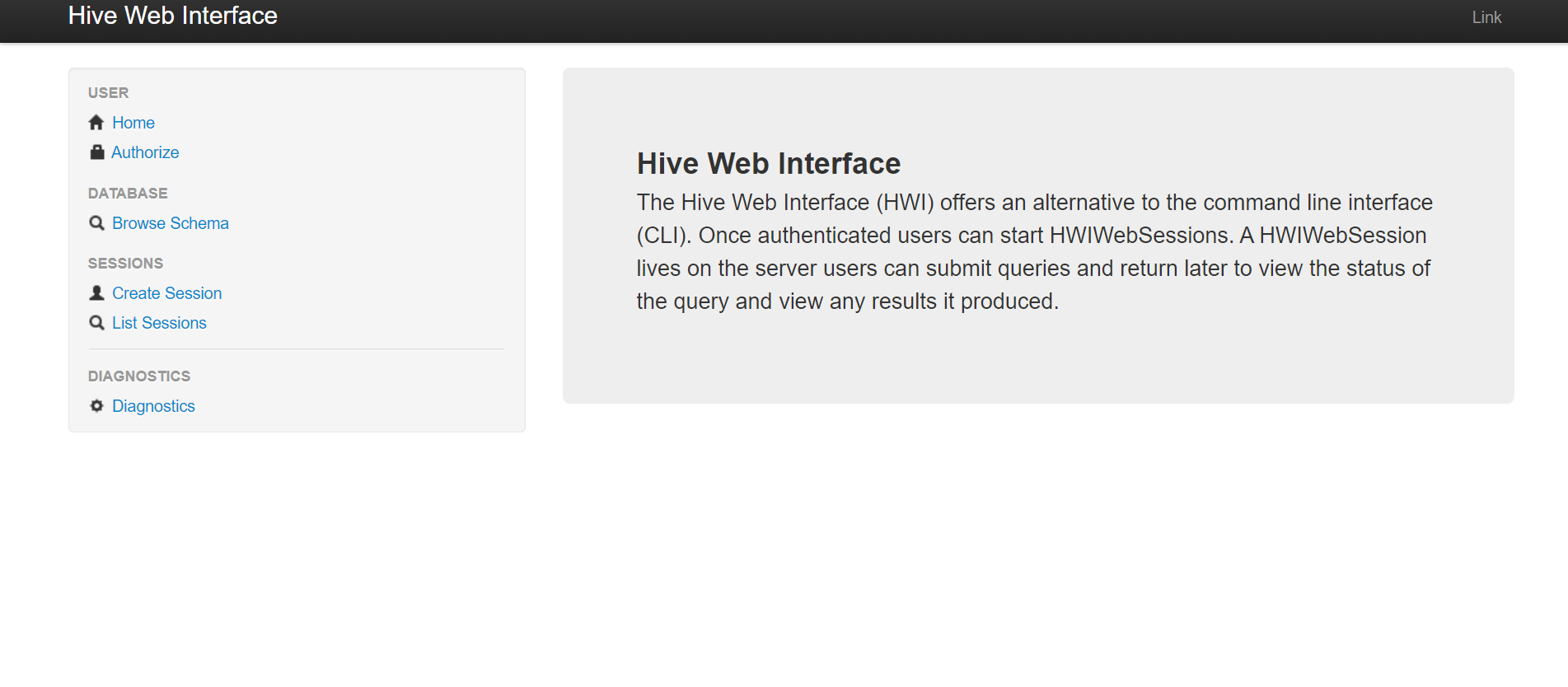
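
A sketch of the copy step, under several assumptions: that "the lib directory" means Hive's own `lib` directory, that `ant.jar` comes from Ant's `lib` directory, and that `tools.jar` comes from the JDK's `lib` directory (it exists in JDK 8 and earlier). The function name `copy_hwi_jars` is hypothetical.

```shell
#!/usr/bin/env bash
# Copy the two jars into Hive's lib directory.
# Arguments: Ant home, JDK home, Hive home.
copy_hwi_jars() {
  local ant_home="$1" java_home="$2" hive_home="$3"
  cp "${ant_home}/lib/ant.jar" "${java_home}/lib/tools.jar" "${hive_home}/lib/"
}

# Example call, assuming all three variables are set in the environment:
# copy_hwi_jars "$ANT_HOME" "$JAVA_HOME" "$HIVE_HOME"
```

HWI needs to be restarted after the jars are copied so that they are picked up on the classpath.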

12. Start hive-hwi again

13. Refresh the page in the browser; it may take several refreshes

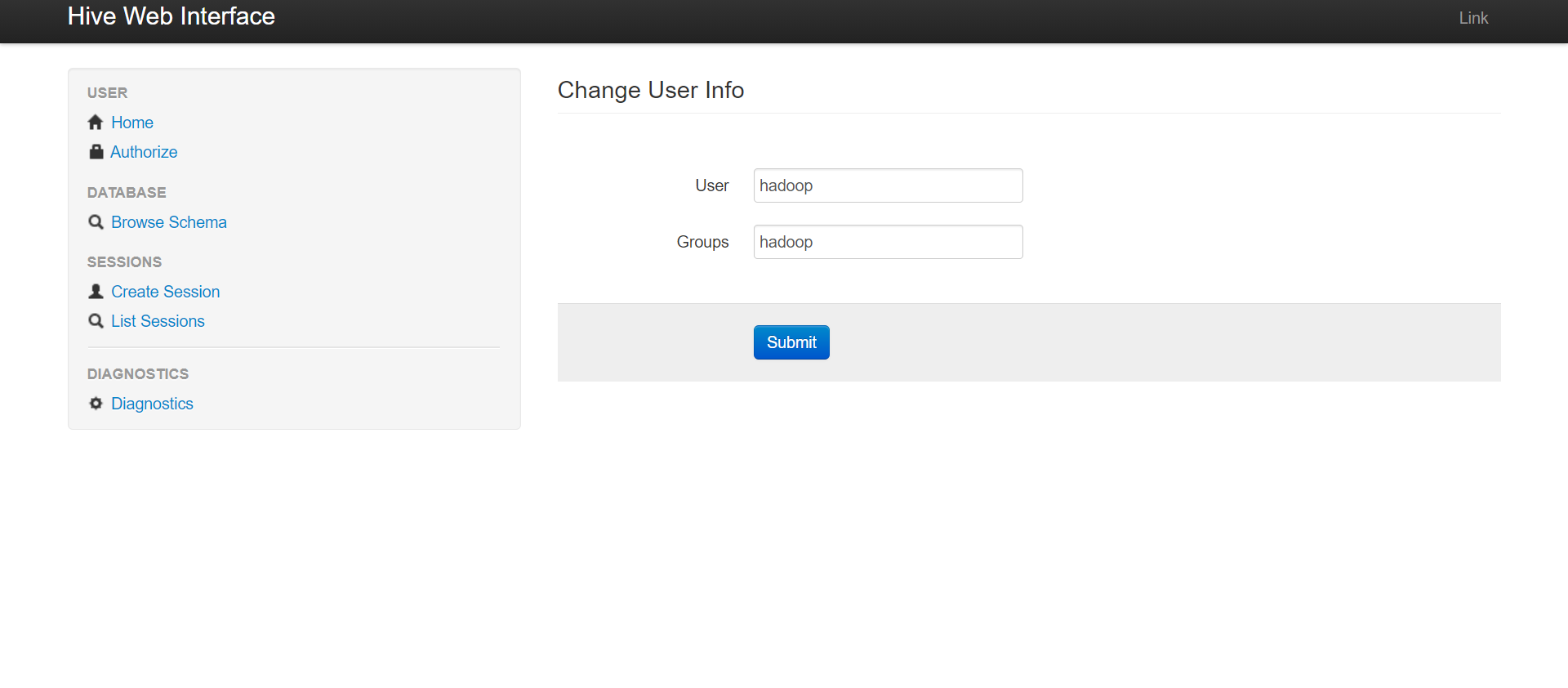

14. Grant Hadoop permissions


Author: SkyRainmom

Original link: https://www.cnblogs.com/skyrainmom/p/17489263.html


Copyright: unless otherwise stated, all articles on this blog are licensed under BY-NC-SA. Please credit the source when reposting.

