HBase Deployment

1|0 HBase Deployment: Using HBase's Bundled ZooKeeper

1|1 Synchronize time across nodes
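The original does not say which tool performs the synchronization (ntpd, chrony, or a manual `date`), so the sketch below only checks the result. It assumes passwordless SSH between nodes; the function name `clock_skew_ok` is hypothetical. HBase's default `hbase.master.maxclockskew` is 30000 ms, so a 30-second tolerance is used as the default.

```shell
# Succeeds when two epoch timestamps (in seconds) differ by at most max seconds.
# The default of 30 mirrors HBase's default hbase.master.maxclockskew (30000 ms).
clock_skew_ok() {
  local a=$1 b=$2 max=${3:-30}
  local diff=$(( a - b ))
  if [ "$diff" -lt 0 ]; then
    diff=$(( -diff ))
  fi
  [ "$diff" -le "$max" ]
}

# Usage on master (assumes passwordless SSH to the slaves):
#   for host in slave1 slave2; do
#     clock_skew_ok "$(date +%s)" "$(ssh "$host" date +%s)" \
#       || echo "clock on $host drifts too far from master"
#   done
```

A RegionServer whose clock is too far from the master's is rejected at startup, which is why this step comes first.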

1|2 Extract the installation package and rename the directory
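A minimal sketch of this step. The tarball location, the extracted directory name `hbase-1.2.1`, and the install prefix `/usr/local/src` are assumptions (the prefix is inferred from the ZooKeeper path that appears in the shutdown output later); `install_hbase` is a hypothetical helper name.

```shell
# Extract a tarball under a prefix and rename the extracted directory to "hbase".
# $1 = tarball, $2 = install prefix, $3 = directory name inside the tarball.
install_hbase() {
  local tarball=$1 prefix=$2 extracted=${3:-hbase-1.2.1}
  tar -zxf "$tarball" -C "$prefix" || return 1
  mv "$prefix/$extracted" "$prefix/hbase"
}

# Usage (paths are assumptions, adjust to your environment):
#   install_hbase /opt/software/hbase-1.2.1-bin.tar.gz /usr/local/src hbase-1.2.1
```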

1|3 Configure the HBase environment variables
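One way to do this, sketched under assumptions: a later step refers to an `hbase.sh` environment file, so the variables presumably live in a profile snippet such as `/etc/profile.d/hbase.sh`. That path, the install location, and the helper name `write_hbase_profile` are not stated in the original.

```shell
# Write a profile snippet that exports HBASE_HOME and adds its bin directory to PATH.
# $1 = file to write, $2 = HBase install directory.
write_hbase_profile() {
  local file=$1 home=$2
  cat > "$file" <<EOF
export HBASE_HOME=$home
export PATH=\$HBASE_HOME/bin:\$PATH
EOF
}

# Usage as root (both paths are assumptions):
#   write_hbase_profile /etc/profile.d/hbase.sh /usr/local/src/hbase
#   source /etc/profile.d/hbase.sh
```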

1|4 Configure hbase-site.xml on the master node
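The original configuration is not preserved, so the following is a sketch of what a fully distributed `hbase-site.xml` for this three-node layout might contain. The NameNode address `hdfs://master:9000`, the `tmp` directory path, and the helper name `write_hbase_site` are assumptions. `hbase.master.info.port` is set to 60010 because that is the port the tutorial opens in the browser. With the bundled ZooKeeper, `HBASE_MANAGES_ZK` in `hbase-env.sh` stays at its default of `true`.

```shell
# Write a minimal hbase-site.xml into the given conf directory.
write_hbase_site() {
  local conf_dir=$1
  cat > "$conf_dir/hbase-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <!-- Assumed NameNode address; must match fs.defaultFS in core-site.xml -->
    <value>hdfs://master:9000/hbase</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>master,slave1,slave2</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.clientPort</name>
    <value>2181</value>
  </property>
  <property>
    <name>hbase.tmp.dir</name>
    <!-- Assumed location of the directory created in step 1|6 -->
    <value>/usr/local/src/hbase/tmp</value>
  </property>
  <property>
    <name>hbase.master.info.port</name>
    <value>60010</value>
  </property>
</configuration>
EOF
}

# Usage (install path is an assumption):
#   write_hbase_site /usr/local/src/hbase/conf
```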

1|5 Edit the regionservers file on the master node
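The `regionservers` file lists one RegionServer host per line. The status output later in this post reports 2 servers, so the sketch assumes `slave1` and `slave2` are the RegionServers; `write_regionservers` is a hypothetical helper.

```shell
# Overwrite the regionservers file with one host name per line.
# $1 = file to write, remaining arguments = host names.
write_regionservers() {
  local file=$1
  shift
  printf '%s\n' "$@" > "$file"
}

# Usage (install path is an assumption):
#   write_regionservers /usr/local/src/hbase/conf/regionservers slave1 slave2
```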

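1|6 Create the hbase.tmp.dir directory on the master node

1|7 Sync the HBase installation from master to slave1 and slave2

1|8 Set permissions on the HBase directory on all nodes

1|9 Copy the hbase.sh environment file configured on master to the slave nodes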
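Steps 1|7 through 1|9 can be sketched as one dry run that prints the commands before anything is executed. Everything concrete here is an assumption: the install path, the profile file path, running as root over SSH, and `hadoop:hadoop` as the owner. `run` and `distribute_hbase` are hypothetical helpers.

```shell
# With DRY_RUN=1 (the default) commands are printed instead of executed.
DRY_RUN=${DRY_RUN:-1}
run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# $1 = HBase directory, $2 = profile file, remaining arguments = slave hosts.
distribute_hbase() {
  local home=$1 profile=$2 host
  shift 2
  # 1|7: copy the installation to each slave
  for host in "$@"; do
    run scp -r "$home" "root@$host:$(dirname "$home")/"
  done
  # 1|8: hand the directory to the hadoop user on every node
  run chown -R hadoop:hadoop "$home"
  for host in "$@"; do
    run ssh "root@$host" chown -R hadoop:hadoop "$home"
  done
  # 1|9: copy the environment file to each slave
  for host in "$@"; do
    run scp "$profile" "root@$host:$profile"
  done
}

distribute_hbase /usr/local/src/hbase /etc/profile.d/hbase.sh slave1 slave2
```

Once the printed commands look right, rerunning with `DRY_RUN=0` executes them.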

1|10 Switch to the hadoop user

1|11 Start HBase
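A sketch of the start sequence plus a small check of the result. The start commands are shown as comments because they need a live cluster. `missing_daemons` is a hypothetical helper that reports which expected Java processes are absent from a `jps` listing. With the bundled ZooKeeper, the ZooKeeper process started by HBase shows up in `jps` as `HQuorumPeer`.

```shell
# Start sequence on master (Hadoop must be running before HBase):
#   su - hadoop
#   start-all.sh
#   start-hbase.sh

# Print each expected daemon name that does not appear in a jps listing.
# $1 = jps output, remaining arguments = expected process names.
missing_daemons() {
  local listing=$1 name
  shift
  for name in "$@"; do
    printf '%s\n' "$listing" | awk '{print $2}' | grep -qx "$name" || echo "$name"
  done
}

# Usage on master:  missing_daemons "$(jps)" NameNode HMaster HQuorumPeer
# Usage on a slave: missing_daemons "$(jps)" DataNode HRegionServer HQuorumPeer
```

Empty output means every expected daemon is present.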

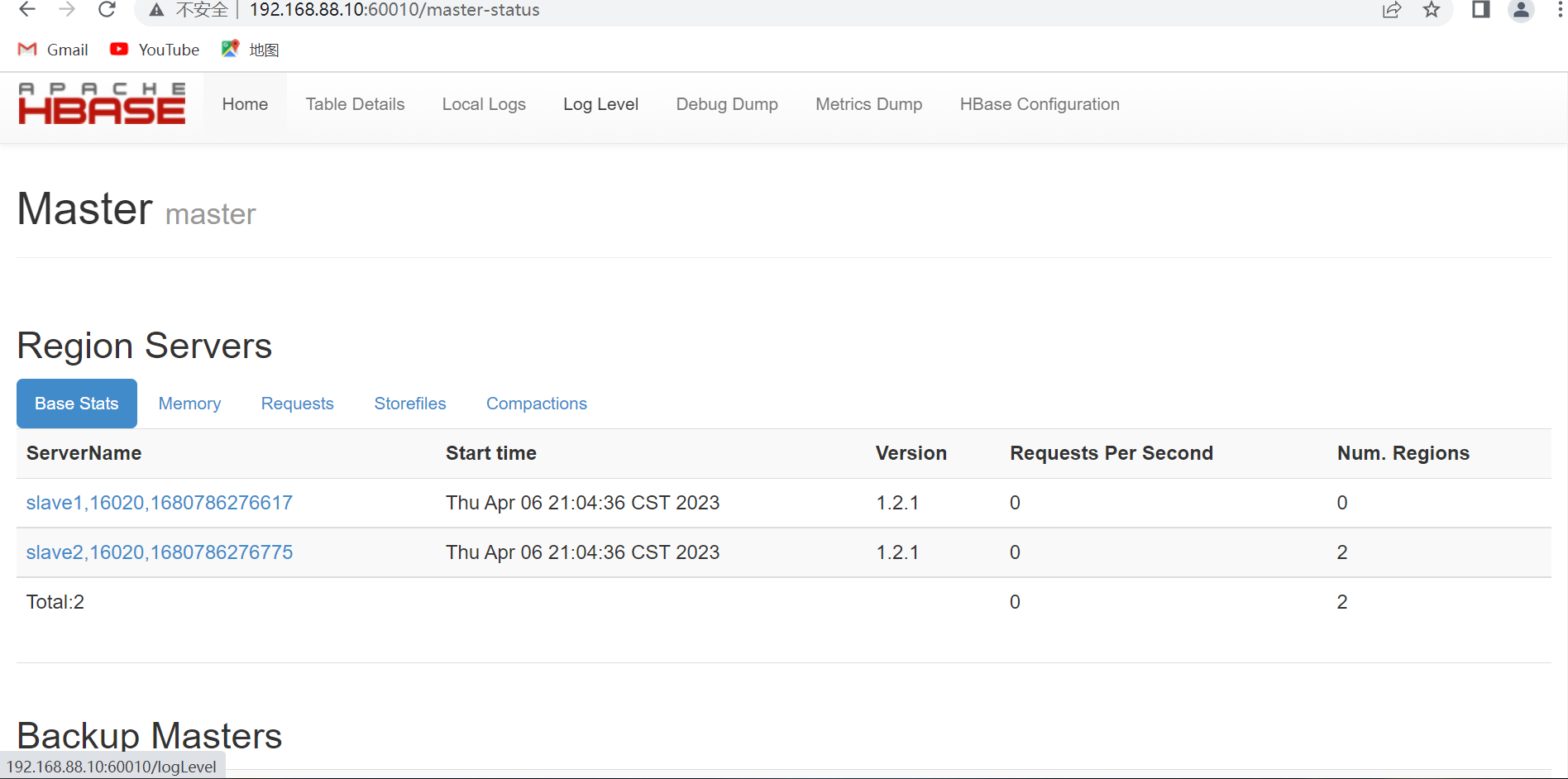

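1|12 Open master:60010 in a browser; the HBase Master page shown in the figure appears

Note: HBase 1.x serves the Master web UI on port 16010 by default, so reaching it on 60010 presumes that hbase.master.info.port is set to 60010 in hbase-site.xml.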

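2|0 HBase Deployment: Using a Host-Installed ZooKeeper

2|1 Synchronize time across nodes

2|2 Extract the installation package and rename the directory

2|3 Configure the HBase environment variables

2|4 Configure HBase

1|0 Configure hbase-site.xml on the master node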
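When ZooKeeper is installed separately on the hosts, `hbase.zookeeper.quorum` in `hbase-site.xml` points at that existing ensemble, and `hbase-env.sh` must tell HBase not to start its own ZooKeeper by setting `HBASE_MANAGES_ZK=false`. The sketch below handles the second part; the helper name `set_manages_zk` and the file path in the usage line are assumptions.

```shell
# Set HBASE_MANAGES_ZK in an hbase-env.sh file, replacing any earlier setting.
# $1 = path to hbase-env.sh, $2 = true or false.
set_manages_zk() {
  local env_file=$1 value=$2
  touch "$env_file"
  # Drop existing lines (commented or not) that mention the variable.
  grep -v 'HBASE_MANAGES_ZK' "$env_file" > "$env_file.tmp" || true
  echo "export HBASE_MANAGES_ZK=$value" >> "$env_file.tmp"
  mv "$env_file.tmp" "$env_file"
}

# Usage (install path is an assumption):
#   set_manages_zk /usr/local/src/hbase/conf/hbase-env.sh false
```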

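1|0 Edit the regionservers file on the master node

1|0 Create the hbase.tmp.dir directory on the master node

1|0 Sync the HBase installation from master to slave1 and slave2

1|0 Set permissions on the HBase directory on all nodes

1|0 Copy the hbase.sh environment file configured on master to the slave nodes

1|0 Switch to the hadoop user

1|0 Start the services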
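The shutdown section of this post stops HBase, then ZooKeeper, then Hadoop, so a plausible start order is the reverse. The sketch prints that order as a dry run. The `zkServer.sh` path comes from the shutdown output in this post; passwordless SSH for the hadoop user and the helper names `run` and `start_stack` are assumptions.

```shell
# With DRY_RUN=1 (the default) commands are printed instead of executed.
DRY_RUN=${DRY_RUN:-1}
run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

ZK=/usr/local/src/zookeeper/bin/zkServer.sh

# Run on master as the hadoop user; arguments = the other ZooKeeper hosts.
start_stack() {
  local host
  run start-all.sh                 # Hadoop: HDFS and YARN
  run "$ZK" start                  # ZooKeeper on master
  for host in "$@"; do
    run ssh "$host" "$ZK" start    # ZooKeeper on each slave
  done
  run start-hbase.sh               # HBase last
}

start_stack slave1 slave2
```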

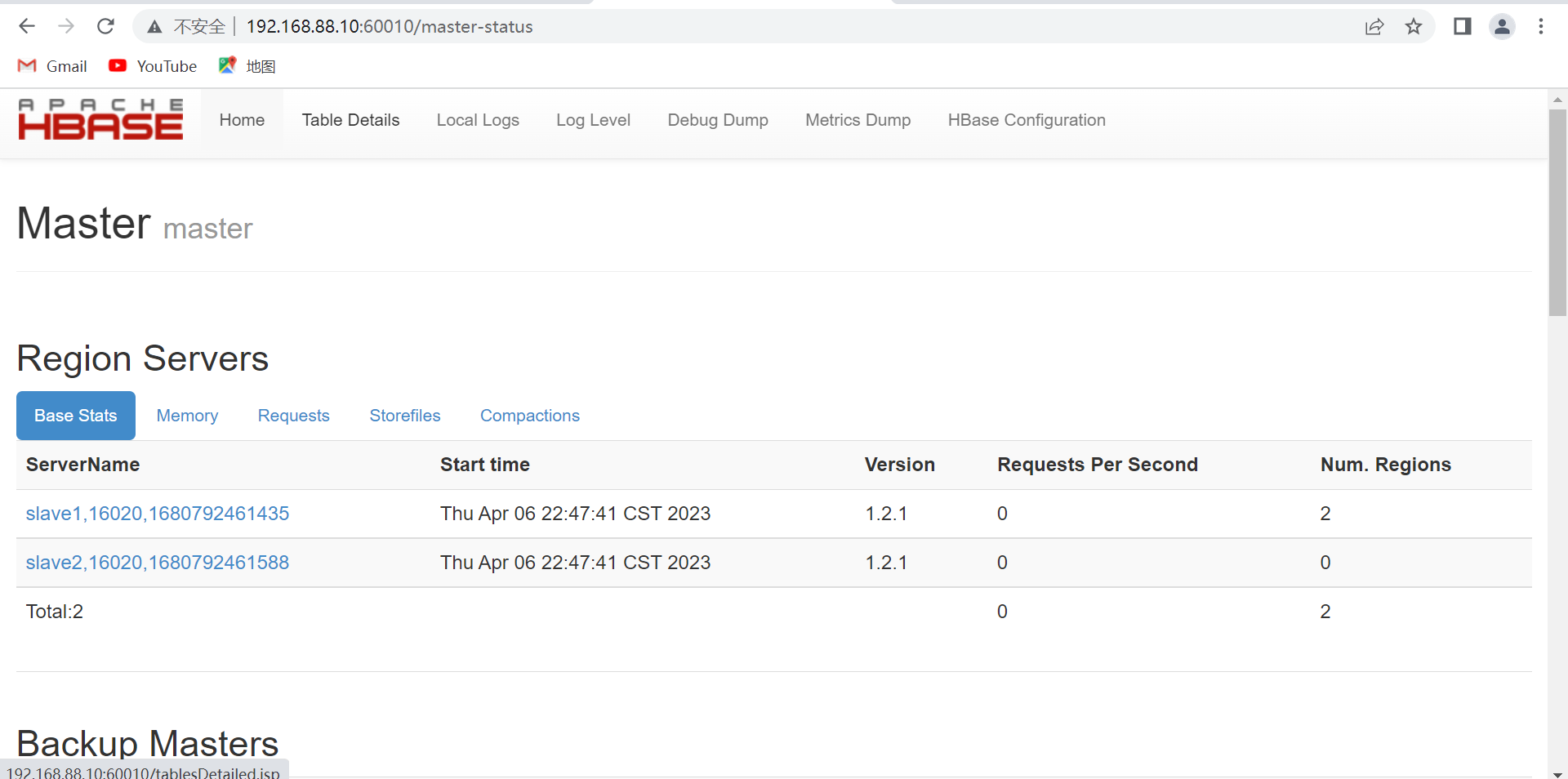

1|0 Open master:60010 in a browser; the HBase Master page shown in the figure appears

3|0HBase常用 Shell 命令

3|1进入 HBase 命令行

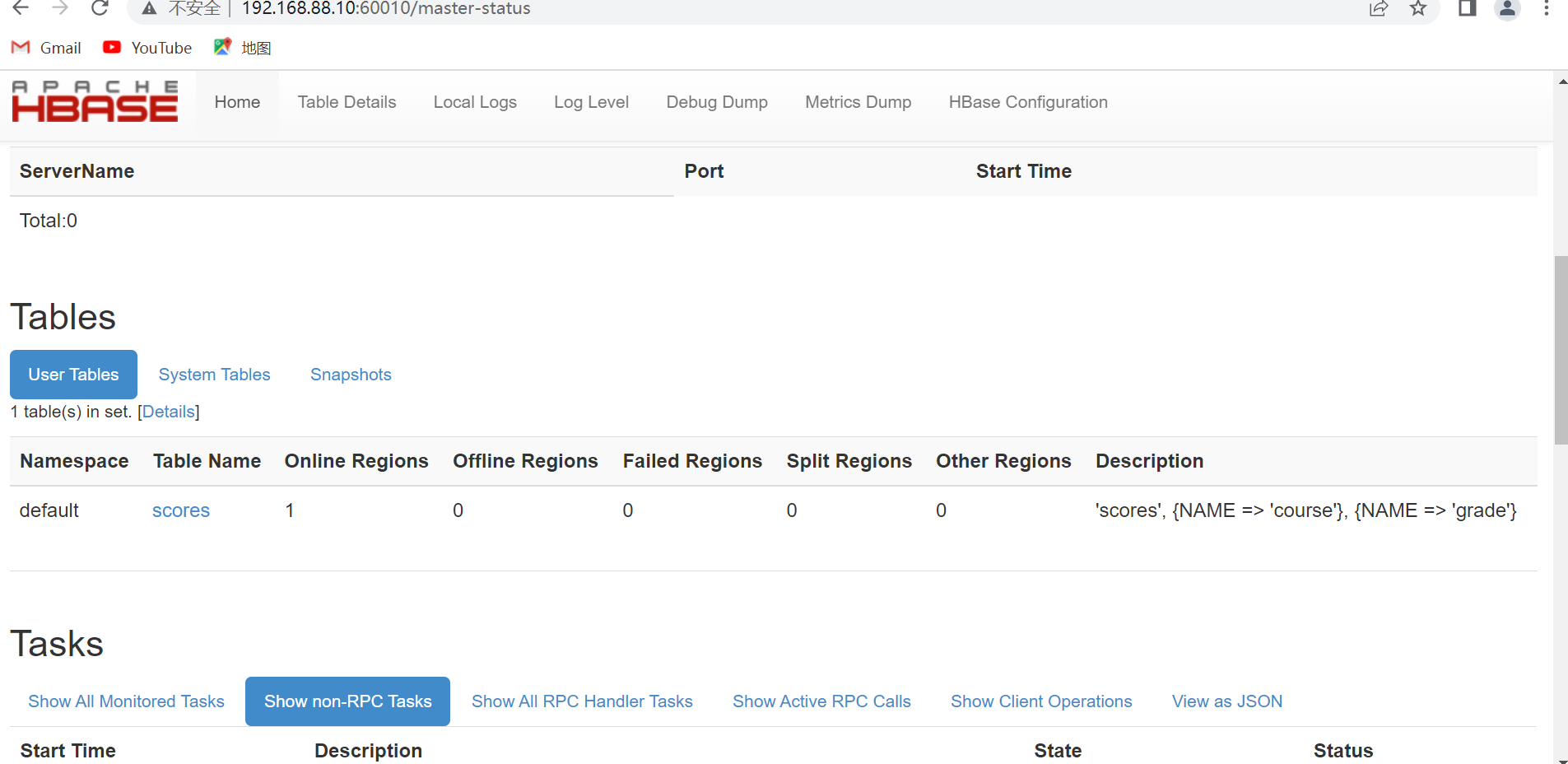

3|2建立表 scores,两个列簇:grade和course
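A minimal sketch of the table creation, assuming the `hbase` launcher is on PATH for the hadoop user. The commands are written to a temporary script and handed to `hbase shell`; typing the same lines at the `hbase(main)` prompt works equally well. `list` is included only to confirm the table exists.

```shell
# Write the HBase shell commands to a temporary script file.
create_cmds=$(mktemp)
cat > "$create_cmds" <<'EOF'
create 'scores', 'grade', 'course'
list
exit
EOF

# Run the script only where the hbase launcher is installed.
if command -v hbase >/dev/null 2>&1; then
  hbase shell "$create_cmds"
fi
```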

3|3 Check the database status

    hbase(main):002:0> status
    1 active master, 0 backup masters, 2 servers, 0 dead, 1.5000 average load

3|4 Check the database version

    hbase(main):003:0> version
    1.2.1, r8d8a7107dc4ccbf36a92f64675dc60392f85c015, Wed Mar 30 11:19:21 CDT 2016

3|5 List tables

3|6 Insert record 1: jie, grade:, 143cloud

3|7 Insert record 2: jie, course:math, 86

3|8 Insert record 3: jie, course:cloud, 92

3|9 Insert record 4: shi, grade:, 133soft

3|10 Insert record 5: shi, course:math, 87

3|11 Insert record 6: shi, course:cloud, 96
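The six inserts can be sketched as `put` commands. Two assumptions are made here: the class values (`143cloud`, `133soft`) go into the `grade` family with an empty qualifier, and the scores for shi belong in the `course` family, matching jie's records.

```shell
# Write the six put commands to a temporary HBase shell script.
put_cmds=$(mktemp)
cat > "$put_cmds" <<'EOF'
put 'scores', 'jie', 'grade:', '143cloud'
put 'scores', 'jie', 'course:math', '86'
put 'scores', 'jie', 'course:cloud', '92'
put 'scores', 'shi', 'grade:', '133soft'
put 'scores', 'shi', 'course:math', '87'
put 'scores', 'shi', 'course:cloud', '96'
exit
EOF

# Run the script only where the hbase launcher is installed.
if command -v hbase >/dev/null 2>&1; then
  hbase shell "$put_cmds"
fi
```

Each `put` takes the table, row key, column (`family:qualifier`), and value, in that order.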

3|12 Read jie's records

3|13 Read jie's class

3|14 View all records in the table

3|15 View table records by column
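The four read operations above can be sketched with `get` and `scan`. Restricting the column scan to the `course` family is an assumption; any family or `family:qualifier` can be given in `COLUMNS`.

```shell
# Write the read commands to a temporary HBase shell script.
read_cmds=$(mktemp)
cat > "$read_cmds" <<'EOF'
get 'scores', 'jie'
get 'scores', 'jie', 'grade'
scan 'scores'
scan 'scores', {COLUMNS => 'course'}
exit
EOF

# Run the script only where the hbase launcher is installed.
if command -v hbase >/dev/null 2>&1; then
  hbase shell "$read_cmds"
fi
```

`get` reads a single row (optionally narrowed to a column), while `scan` walks the whole table.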

3|16 Delete a specific record

3|17 Run scan after the deletion
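The original does not preserve which cell was deleted. As an illustration only, the sketch removes shi's class value (the `grade:` cell, assuming it was stored with an empty qualifier) and then scans the table to confirm that the cell is gone.

```shell
# Write the delete and the follow-up scan to a temporary HBase shell script.
delete_cmds=$(mktemp)
cat > "$delete_cmds" <<'EOF'
delete 'scores', 'shi', 'grade:'
scan 'scores'
exit
EOF

# Run the script only where the hbase launcher is installed.
if command -v hbase >/dev/null 2>&1; then
  hbase shell "$delete_cmds"
fi
```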

3|18 Add a new column family

3|19 View the table schema

3|20 Delete a column family
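Steps 3|18 through 3|20 can be sketched with `alter` and `describe`. The family name `age` is hypothetical; the original does not preserve the name that was used.

```shell
# Write the schema-change commands to a temporary HBase shell script.
alter_cmds=$(mktemp)
cat > "$alter_cmds" <<'EOF'
alter 'scores', NAME => 'age'
describe 'scores'
alter 'scores', NAME => 'age', METHOD => 'delete'
exit
EOF

# Run the script only where the hbase launcher is installed.
if command -v hbase >/dev/null 2>&1; then
  hbase shell "$alter_cmds"
fi
```

`describe` between the two `alter` calls should list `age` alongside `grade` and `course`.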

3|21 Drop the table
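HBase requires a table to be disabled before it can be dropped, so the step is a two-command sequence. A minimal sketch, with `list` added only to confirm the result:

```shell
# Write the disable/drop sequence to a temporary HBase shell script.
drop_cmds=$(mktemp)
cat > "$drop_cmds" <<'EOF'
disable 'scores'
drop 'scores'
list
exit
EOF

# Run the script only where the hbase launcher is installed.
if command -v hbase >/dev/null 2>&1; then
  hbase shell "$drop_cmds"
fi
```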

3|22 Exit

    hbase(main):023:0> quit
    [hadoop@master ~]$

3|23 Shut down all services

4|0 Stop HBase on the master node

    [hadoop@master ~]$ stop-hbase.sh
    stopping hbase...............
    [hadoop@master ~]$ jps
    1473 NameNode
    1827 ResourceManager
    1640 SecondaryNameNode
    2109 QuorumPeerMain
    3311 Jps
    [hadoop@slave1 ~]$ jps
    1301 DataNode
    1369 NodeManager
    1933 Jps
    1551 QuorumPeerMain
    [hadoop@slave2 ~]$ jps
    1296 DataNode
    1922 Jps
    1364 NodeManager
    1546 QuorumPeerMain

5|0 Stop ZooKeeper on all nodes

    [hadoop@master ~]$ zkServer.sh stop
    ZooKeeper JMX enabled by default
    Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg
    Stopping zookeeper ... STOPPED
    [hadoop@master ~]$ jps
    1473 NameNode
    1827 ResourceManager
    1640 SecondaryNameNode
    3338 Jps
    [hadoop@slave1 ~]$ zkServer.sh stop
    ZooKeeper JMX enabled by default
    Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg
    Stopping zookeeper ... STOPPED
    [hadoop@slave1 ~]$ jps
    1301 DataNode
    1369 NodeManager
    1964 Jps
    [hadoop@slave2 ~]$ zkServer.sh stop
    ZooKeeper JMX enabled by default
    Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg
    Stopping zookeeper ... STOPPED
    [hadoop@slave2 ~]$ jps
    1296 DataNode
    1953 Jps
    1364 NodeManager

6|0 Stop Hadoop on the master node

    [hadoop@master ~]$ stop-all.sh
    This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh
    Stopping namenodes on [master]
    master: stopping namenode
    192.168.88.20: stopping datanode
    192.168.88.30: stopping datanode
    Stopping secondary namenodes [0.0.0.0]
    0.0.0.0: stopping secondarynamenode
    stopping yarn daemons
    stopping resourcemanager
    192.168.88.20: stopping nodemanager
    192.168.88.30: stopping nodemanager
    no proxyserver to stop
    [hadoop@master ~]$ jps
    3771 Jps
    [hadoop@slave1 ~]$ jps
    2043 Jps
    [hadoop@slave2 ~]$ jps
    2031 Jps

Author: SkyRainmom

Link to this post: https://www.cnblogs.com/skyrainmom/p/17438944.html

Copyright notice: Unless otherwise stated, all posts on this blog are licensed under BY-NC-SA. Please credit the source when reposting.
