Hadoop Fully Distributed Deployment

Installation package download (Baidu Netdisk) link: https://pan.baidu.com/s/1XrnbpNNqcG20QG_hL4RJoQ?pwd=aec9

Extraction code: aec9

1|0 Basic configuration (all nodes)

1|1 Disable the firewall and the SELinux security subsystem
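The commands for this step are not listed in the post. A minimal sketch, assuming a systemd-based distribution that uses firewalld (for example CentOS 7) and the usual `/etc/selinux/config` path; both helper names are made up for illustration:

```shell
#!/bin/sh
# Assumption: firewalld is the active firewall and systemd manages services.
disable_firewall() {
  systemctl stop firewalld      # stop the running firewall
  systemctl disable firewalld   # keep it off after a reboot
}

# Persistently disable SELinux by rewriting the SELINUX= line of the given file.
# The change takes effect after a reboot; `setenforce 0` switches the running
# system to permissive mode immediately.
disable_selinux_in() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}
```

Run as root on every node, for example `disable_firewall; setenforce 0; disable_selinux_in /etc/selinux/config`.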

1|2 Network configuration

- master node IP: 192.168.88.10

- slave1 node IP: 192.168.88.20

- slave2 node IP: 192.168.88.30

1|3 Hostname configuration

1|4 Hostname mapping
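The mapping itself is not shown. A possible sketch, using the three IP addresses listed above and the host names `master`, `slave1` and `slave2` that appear in the shell prompts later in this post; the function name `hosts_entries` is hypothetical:

```shell
#!/bin/sh
# Print the host-name mappings for the three nodes.
hosts_entries() {
  cat <<'EOF'
192.168.88.10 master
192.168.88.20 slave1
192.168.88.30 slave2
EOF
}

hosts_entries
```

As root on each node, the host name could be set with `hostnamectl set-hostname master` (or `slave1`, `slave2`), and the mappings appended with `hosts_entries >> /etc/hosts`.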

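1|5 Create the hadoop user

1|6 Configure passwordless SSH login

1|0 Install and start the SSH service on every node

1|0 Switch to the hadoop user

1|0 Generate a key pair on every node, then distribute the public keys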
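One way to carry out this step is sketched below. It assumes an RSA key without a passphrase, the `hadoop` account on all three nodes, and that `ssh-keygen` and `ssh-copy-id` are installed; the function names and the `SSH_COPY_ID` override are illustrative only:

```shell
#!/bin/sh
NODES="master slave1 slave2"

# Create an RSA key pair without a passphrase unless one already exists.
generate_key() {
  mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
  [ -f "$HOME/.ssh/id_rsa" ] || ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
}

# Copy the public key to the hadoop account on every node.
# SSH_COPY_ID can be overridden (for example with `echo`) for a dry run.
distribute_key() {
  for node in $NODES; do
    "${SSH_COPY_ID:-ssh-copy-id}" -i "$HOME/.ssh/id_rsa.pub" "hadoop@$node"
  done
}
```

Running `generate_key && distribute_key` as the hadoop user on each node asks for the hadoop password of every target once; afterwards `ssh slave1` should log in without a prompt.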

1|0 Test passwordless login

2|0 Deploy the cluster services

2|1 Install the Java environment

1|0 Install the JDK
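After unpacking the JDK, the environment variables might look like the sketch below. The path `/usr/local/src/jdk` is taken from the `PATH` printed later in this post; which profile file the lines go into is not stated in the source.

```shell
#!/bin/sh
# Assumption: the JDK archive was extracted and renamed to /usr/local/src/jdk.
export JAVA_HOME=/usr/local/src/jdk
export PATH="$PATH:$JAVA_HOME/bin"
```

Once these lines are in a profile file (for example `/etc/profile`, an assumption) and the file has been sourced, `java -version` should print the installed version.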

1|0 Copy the configuration to the slave1 and slave2 nodes

2|2 Install the Hadoop environment

1|0 Install Hadoop

1|0 Edit the Hadoop configuration files
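The post does not reproduce the configuration files. The sketch below writes sample versions into a scratch directory so they can be reviewed before being copied to `/usr/local/src/hadoop/etc/hadoop`. The directory paths and worker IPs come from output shown later in this post; port 9000, the replication factor of 2, the Hadoop 2.x `slaves` file name, and the `CONF_DIR` variable are assumptions.

```shell
#!/bin/sh
# Write sample config files into CONF_DIR (a scratch directory by default).
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/core-site.xml" <<'EOF'
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>  <!-- port 9000 is an assumption -->
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/src/hadoop/tmp</value>
  </property>
</configuration>
EOF

cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/usr/local/src/hadoop/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/usr/local/src/hadoop/dfs/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>  <!-- assumption: one replica per DataNode -->
  </property>
</configuration>
EOF

# Worker list, by IP as in the start-up log shown later.
printf '%s\n' 192.168.88.20 192.168.88.30 > "$CONF_DIR/slaves"
echo "wrote sample files to $CONF_DIR"
```

The YARN and MapReduce settings (`yarn-site.xml`, `mapred-site.xml`) are left out here because the source gives no values for them.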

1|0 Create directories and files
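A small sketch of the directory layout, assuming it matches the `dfs/name`, `dfs/data` and `tmp` directories that show up under `/usr/local/src/hadoop` later in this post; `make_hadoop_dirs` is a hypothetical helper:

```shell
#!/bin/sh
# Create the NameNode, DataNode and working directories below a base path.
make_hadoop_dirs() {
  base="$1"
  mkdir -p "$base/dfs/name" "$base/dfs/data" "$base/tmp"
}
```

On the master this would be called as `make_hadoop_dirs /usr/local/src/hadoop`.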

1|0 Copy the configuration to the slave1 and slave2 nodes

1|0 Grant permissions on every node

2|3 Hadoop formatting

1|0 Format the NameNode

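Formatting wipes the data on the NameNode. Format before HDFS is started for the first time and do not format again on later starts, otherwise the DataNode process will be missing. In addition, once HDFS has run, the Hadoop working directory (set to /usr/local/src/hadoop/tmp in this setup) contains data. If you need to format again, delete the data under the working directory first, otherwise the format will run into problems.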
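The clean-up before a repeated format could be scripted roughly as follows. The helper names are hypothetical, and clearing `dfs/name` and `dfs/data` along with `tmp` is an assumption that goes beyond the working directory named above. Everything in those directories is deleted, so this only suits a cluster whose data can be discarded.

```shell
#!/bin/sh
# Empty a directory without removing the directory itself.
empty_dir() {
  if [ -d "$1" ]; then
    find "$1" -mindepth 1 -delete
  fi
}

# Clear old HDFS state below the given Hadoop base directory.
clean_hadoop_state() {
  base="$1"
  for d in tmp dfs/name dfs/data; do
    empty_dir "$base/$d"
  done
}
```

With the cluster stopped, `clean_hadoop_state /usr/local/src/hadoop` would be run on every node, followed by `hdfs namenode -format` on the master as the hadoop user.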

1|0 Start the cluster services

1|0 Check where HDFS stores its data

HDFS data is stored in two places: the NameNode and DataNode each have a directory under /usr/local/src/hadoop/dfs, and the SecondaryNameNode has a directory under /usr/local/src/hadoop/tmp/.

    [hadoop@master ~]$ ll /usr/local/src/hadoop/dfs/
    total 0
    drwxrwxr-x. 2 hadoop hadoop  6 May 12 15:40 data
    drwxrwxr-x. 3 hadoop hadoop 40 May 12 15:43 name
    [hadoop@master ~]$ ll /usr/local/src/hadoop/tmp/dfs/
    total 0
    drwxrwxr-x. 3 hadoop hadoop 40 May 12 15:44 namesecondary

1|0 View the HDFS report

1|0 Files in HDFS

1|0 Run the WordCount example to count the frequency of each word in the data file

The MapReduce command specifies /output as the output directory. If /output already exists in HDFS, the MapReduce command fails. So unless this is the first MapReduce run, first list the files in HDFS to check whether /output exists, and delete it if it does.
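The check-and-delete step can be wrapped in a small helper. This is a sketch that assumes the `hdfs` client is on the `PATH`; the function name and the `HDFS` override variable are made up for illustration.

```shell
#!/bin/sh
HDFS="${HDFS:-hdfs}"   # override to stub the client in a dry run

# Remove an HDFS directory only if it exists.
remove_hdfs_dir_if_exists() {
  if "$HDFS" dfs -test -d "$1"; then
    "$HDFS" dfs -rm -r "$1"
  fi
}
```

A run could then look like `remove_hdfs_dir_if_exists /output` followed by `hadoop jar /usr/local/src/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar wordcount /input /output`, where `/input` stands for whatever HDFS directory holds the data file.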

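2|4 Install and configure the Hive component

1|0 Remove MariaDB from the node

1|0 Extract and install the MySQL packages on the master node

1|0 Configure the my.cnf file

1|0 Start MySQL and enable it at boot

1|0 Look up the default database password

1|0 Initialize the database

1|0 Log in to the database and grant the root user local and remote access to the MySQL databases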
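The authorization statements are not shown in the post. A possible form, assuming MySQL 5.7 syntax (`GRANT ... IDENTIFIED BY` no longer works in MySQL 8.0); the function name and the `MYSQL_ROOT_PASSWORD` variable are hypothetical, and the password is read from the environment so it is not hard-coded:

```shell
#!/bin/sh
# Print the authorization statements for local and remote root access.
grant_sql() {
  pw="${MYSQL_ROOT_PASSWORD:?set MYSQL_ROOT_PASSWORD first}"
  cat <<EOF
GRANT ALL PRIVILEGES ON *.* TO 'root'@'localhost' IDENTIFIED BY '$pw' WITH GRANT OPTION;
GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY '$pw' WITH GRANT OPTION;
FLUSH PRIVILEGES;
EOF
}
```

The output can be pasted into a `mysql -uroot -p` session or piped into it. Opening root to every host (`'%'`) is convenient on a lab cluster but is a broad grant.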

1|0 Extract and install the Hive packages

1|0 Configure the Hive environment variables

1|0 Configure the Hive parameters
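The metastore connection settings can be inferred from the `schematool` output later in this post (connection URL, driver class and user). The sketch below writes a matching `hive-site.xml` into a scratch directory; the `OUT_DIR` variable and the `CHANGE_ME` password placeholder are assumptions, and the remaining Hive parameters are not covered.

```shell
#!/bin/sh
# Write a sample hive-site.xml into OUT_DIR (a scratch directory by default).
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

cat > "$OUT_DIR/hive-site.xml" <<'EOF'
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>CHANGE_ME</value>  <!-- placeholder, not from the source -->
  </property>
</configuration>
EOF
echo "wrote $OUT_DIR/hive-site.xml"
```

Note that `&` in the JDBC URL has to be written as `&amp;` inside the XML file. After review, the file would go into `/usr/local/src/hive/conf`.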

1|0 Create the operation_logs and resources directories

1|0 Initialize the Hive metastore

- Copy the MySQL JDBC driver (/opt/software/mysql-connector-java-5.1.46.jar) into the lib directory of the Hive installation and change its ownership

      [root@master ~]# cp mysql-connector-java-5.1.46.jar /usr/local/src/hive/lib/
      # Make sure the jar is owned by the hadoop user; change its ownership
      [root@master ~]# chown -R hadoop.hadoop /usr/local/src/
      [root@master ~]# ll /usr/local/src/hive/lib/mysql-connector-java-5.1.46.jar
      -rw-r--r-- 1 hadoop hadoop 1004838 Mar 25 18:24 /usr/local/src/hive/lib/mysql-connector-java-5.1.46.jar

- Start Hadoop

      [hadoop@master ~]$ start-all.sh
      This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
      Starting namenodes on [master]
      master: starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-namenode-master.out
      192.168.88.20: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave1.out
      192.168.88.30: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave2.out
      Starting secondary namenodes [0.0.0.0]
      0.0.0.0: starting secondarynamenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-secondarynamenode-master.out
      starting yarn daemons
      starting resourcemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-resourcemanager-master.out
      192.168.88.20: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave1.out
      192.168.88.30: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave2.out

      [hadoop@master ~]$ jps
      4081 SecondaryNameNode
      4498 Jps
      4238 ResourceManager
      3887 NameNode

      [hadoop@slave1 ~]$ jps
      1521 Jps
      1398 NodeManager
      1289 DataNode

      [hadoop@slave2 ~]$ jps
      1298 DataNode
      1530 Jps
      1407 NodeManager

- Initialize the database

      [hadoop@master conf]$ schematool -initSchema -dbType mysql
      which: no hbase in (/home/hadoop/.local/bin:/home/hadoop/bin:/usr/local/src/hive/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/usr/local/src/jdk/bin)
      SLF4J: Class path contains multiple SLF4J bindings.
      SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]
      SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
      SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
      SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
      SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
      Metastore connection URL: jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true&useSSL=false
      Metastore Connection Driver : com.mysql.jdbc.Driver
      Metastore connection User: root
      Starting metastore schema initialization to 2.0.0
      Initialization script hive-schema-2.0.0.mysql.sql
      Initialization script completed
      schemaTool completed

- Start Hive

      [hadoop@master ~]$ hive
      which: no hbase in (/home/hadoop/.local/bin:/home/hadoop/bin:/usr/local/src/hive/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/usr/local/src/jdk/bin)
      SLF4J: Class path contains multiple SLF4J bindings.
      SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/hive-jdbc-2.0.0-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]
      SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
      SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
      SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
      SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
      Logging initialized using configuration in jar:file:/usr/local/src/hive/lib/hive-common-2.0.0.jar!/hive-log4j2.properties
      Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
      hive>

2|5 Install and configure the Sqoop component

1|0 Extract and install the Sqoop packages

1|0 Edit the sqoop-env.sh file
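The edited lines are not shown. A minimal sketch of what `sqoop-env.sh` might contain, assuming the installation paths `/usr/local/src/hadoop` and `/usr/local/src/hive` that appear elsewhere in this post (HBase and ZooKeeper are not installed here, so their variables stay unset):

```shell
#!/bin/sh
# Tell Sqoop where Hadoop and Hive live.
export HADOOP_COMMON_HOME=/usr/local/src/hadoop
export HADOOP_MAPRED_HOME=/usr/local/src/hadoop
export HIVE_HOME=/usr/local/src/hive
```

Leaving `HBASE_HOME` and the other optional variables unset is what produces the "does not exist" warnings in the Sqoop output further down; they are harmless for MySQL and Hive transfers.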

1|0 Configure the Sqoop environment variables

1|0 Connect to the database

1|0 Change the owner of the installation directory

1|0 Start Sqoop

- Start Hadoop

      # Switch user
      [root@master ~]# su - hadoop
      Last login: Wed Apr 12 13:16:11 CST 2023 on pts/0
      # Start Hadoop
      [hadoop@master ~]$ start-all.sh
      This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
      Starting namenodes on [master]
      master: starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-namenode-master.out
      192.168.88.20: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave1.out
      192.168.88.30: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-datanode-slave2.out
      Starting secondary namenodes [0.0.0.0]
      0.0.0.0: starting secondarynamenode, logging to /usr/local/src/hadoop/logs/hadoop-hadoop-secondarynamenode-master.out
      starting yarn daemons
      starting resourcemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-resourcemanager-master.out
      192.168.88.20: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave1.out
      192.168.88.30: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-hadoop-nodemanager-slave2.out
      # Check the cluster status
      [hadoop@master ~]$ jps
      1538 SecondaryNameNode
      1698 ResourceManager
      1957 Jps
      1343 NameNode

- Test whether Sqoop can connect to the MySQL database

      [hadoop@master ~]$ sqoop list-databases --connect jdbc:mysql://master:3306/ --username root -P
      Warning: /usr/local/src/sqoop/../hbase does not exist! HBase imports will fail.
      Please set $HBASE_HOME to the root of your HBase installation.
      Warning: /usr/local/src/sqoop/../hcatalog does not exist! HCatalog jobs will fail.
      Please set $HCAT_HOME to the root of your HCatalog installation.
      Warning: /usr/local/src/sqoop/../accumulo does not exist! Accumulo imports will fail.
      Please set $ACCUMULO_HOME to the root of your Accumulo installation.
      Warning: /usr/local/src/sqoop/../zookeeper does not exist! Accumulo imports will fail.
      Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.
      23/05/15 18:23:30 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7
      Enter password:
      23/05/15 18:23:37 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.
      Mon May 15 18:23:37 CST 2023 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.
      information_schema
      hive
      mysql
      performance_schema
      sys

- Connect to Hive

      # For Sqoop to connect to Hive, hive-common-2.0.0.jar from /usr/local/src/hive/lib
      # must also be placed in the lib directory of the Sqoop installation
      [hadoop@master ~]$ cp /usr/local/src/hive/lib/hive-common-2.0.0.jar /usr/local/src/sqoop/lib/
      # Give ownership to the hadoop user
      [root@master ~]# chown -R hadoop.hadoop /usr/local/src/

3|0 Hadoop cluster verification

1|0 Hadoop cluster running status

    [hadoop@master ~]$ jps
    1609 SecondaryNameNode
    3753 Jps
    1404 NameNode
    1772 ResourceManager

    [hadoop@slave1 ~]$ jps
    1265 DataNode
    1387 NodeManager
    5516 Jps

    [hadoop@slave2 ~]$ jps
    1385 NodeManager
    5325 Jps
    1263 DataNode

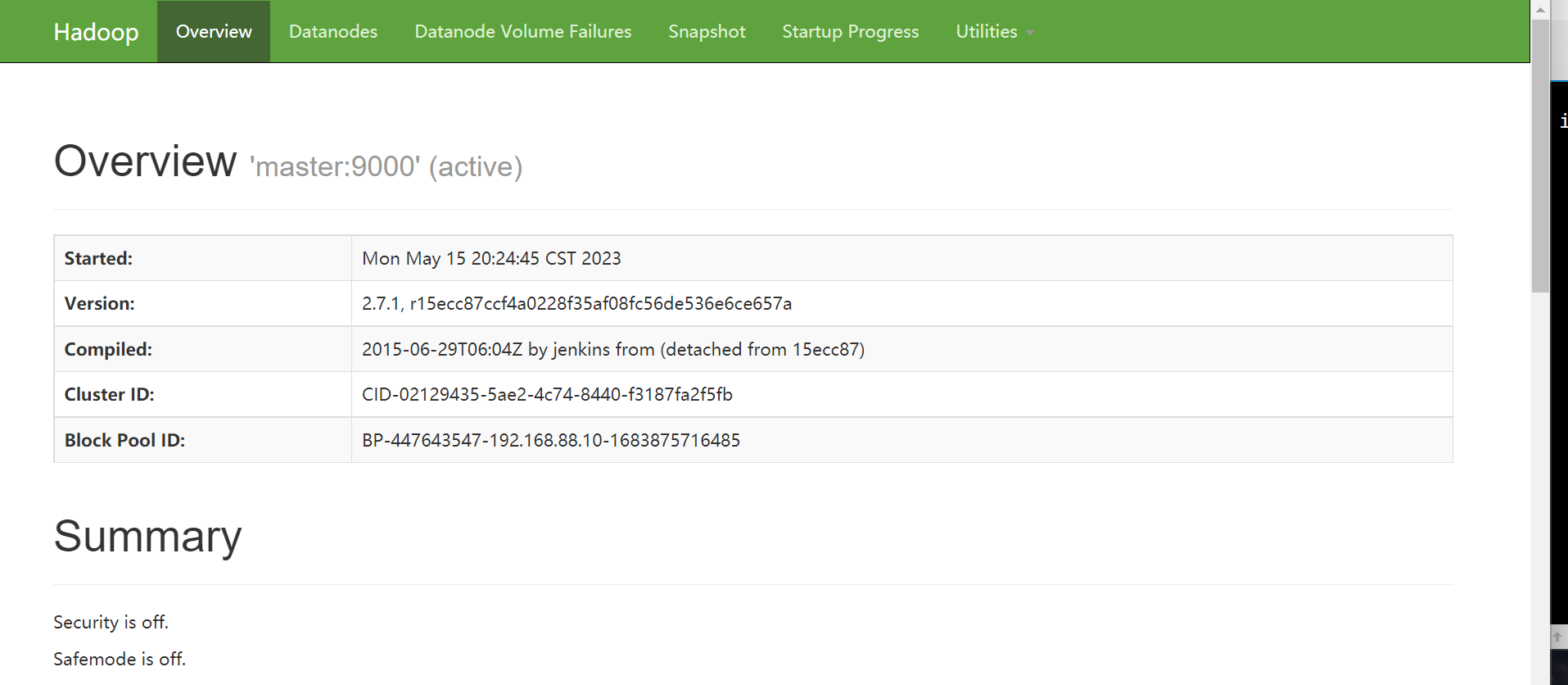

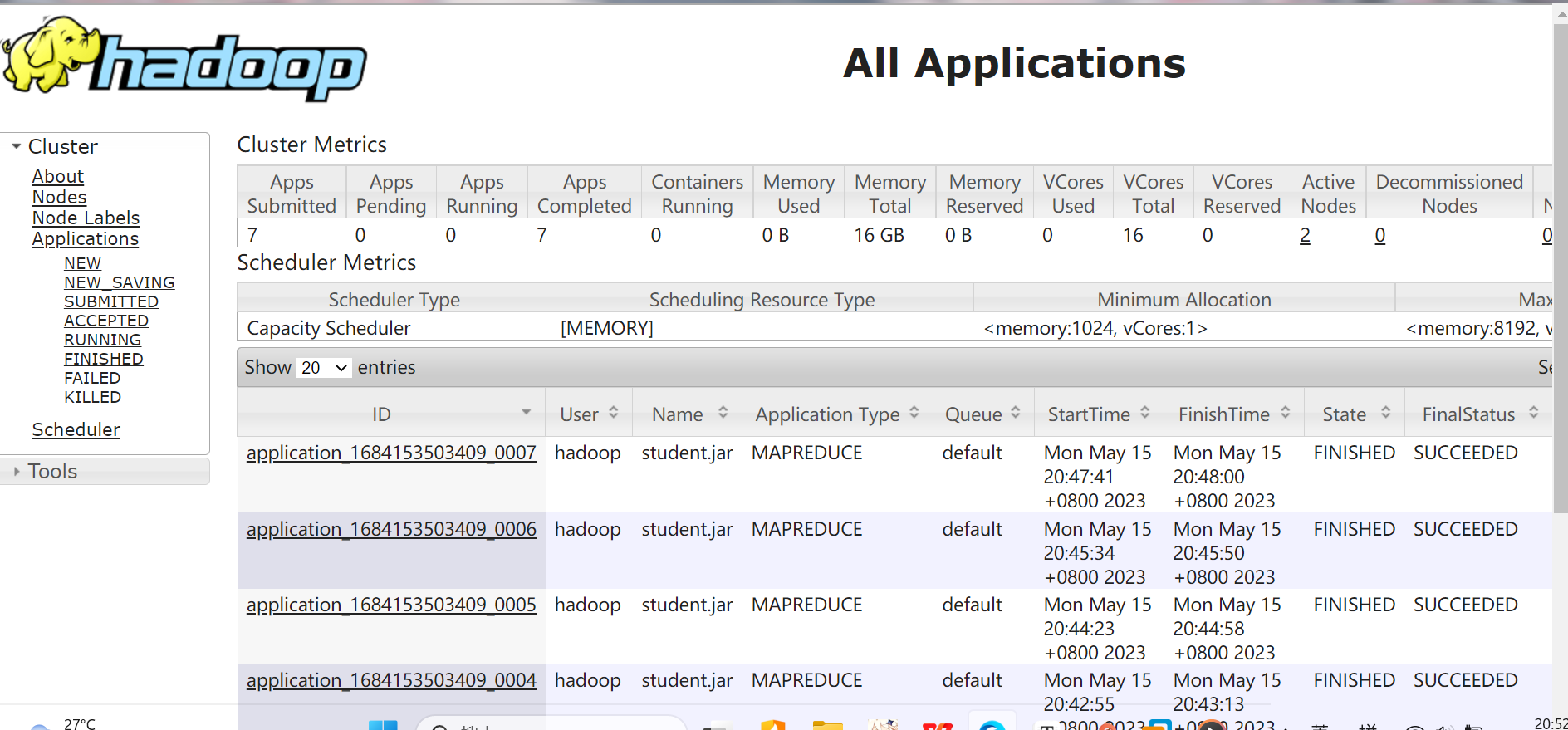

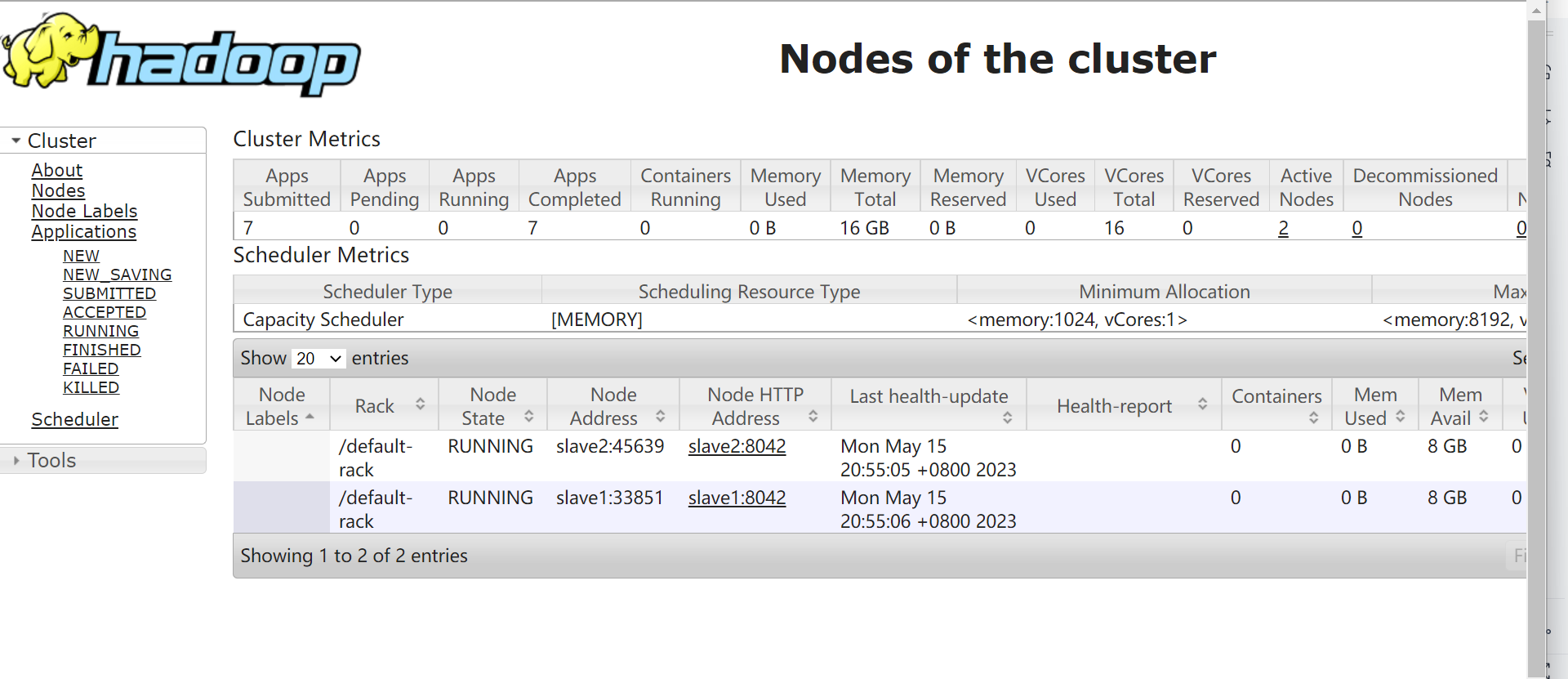

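1|0 Web monitoring interfaces

Open http://master:50070 in a browser on the master node. If the NameNode status page is shown, the system started normally.

Open http://master:8088. If all applications can be viewed, the system started normally.

Being able to browse the Nodes page likewise shows that the system started normally.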

1|0 Check the Sqoop version

1|0 Connect Sqoop to the MySQL database

1|0 Export HDFS data to MySQL with Sqoop
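The export command is not shown in the post. A hedged sketch using `sqoop export` with the same connection style as the `list-databases` call above; the function name, the `SQOOP` override variable, the comma delimiter and the example database, table and directory names are all assumptions, and the target table must already exist in MySQL:

```shell
#!/bin/sh
SQOOP="${SQOOP:-sqoop}"   # override to stub the client in a dry run

# export_to_mysql <database> <table> <hdfs_dir>
# Push comma-separated files from an HDFS directory into an existing MySQL table.
export_to_mysql() {
  "$SQOOP" export \
    --connect "jdbc:mysql://master:3306/$1?useSSL=false" \
    --username root -P \
    --table "$2" \
    --export-dir "$3" \
    --input-fields-terminated-by ','
}
```

For example, `export_to_mysql sample student /user/test` would prompt for the MySQL root password and load the files under `/user/test` into the `student` table of the `sample` database (all three names are placeholders).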

1|0 Verify and initialize the Hive component

1|0 Start Hive

Article link: https://www.cnblogs.com/skyrainmom/p/17438858.html

Copyright notice: unless otherwise stated, all articles on this blog are licensed under BY-NC-SA. Please credit the source when reposting.
