Getting started with Python: reading and writing Hive data with PySpark

Environment setup

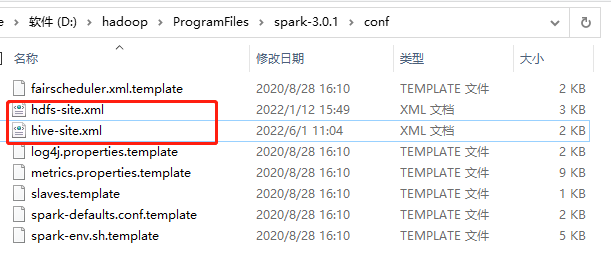

Copy hdfs-site.xml and hive-site.xml into the spark\conf directory.
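The copy step can also be scripted. This is a minimal sketch that assumes you pass in the full paths of the two site files and the Spark installation directory. `copy_to_spark_conf` is a hypothetical helper, not part of Spark.

```python
import shutil
from pathlib import Path


def copy_to_spark_conf(config_files, spark_home):
    """Copy each config file (e.g. hdfs-site.xml, hive-site.xml)
    into <spark_home>/conf and return the destination paths.

    Hypothetical helper: the source paths depend on where your
    Hadoop and Hive configuration lives.
    """
    conf_dir = Path(spark_home) / "conf"
    conf_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in map(Path, config_files):
        if not src.is_file():
            # Fail early: without hive-site.xml Spark falls back to a
            # local metastore instead of raising an error.
            raise FileNotFoundError(src)
        dest = conf_dir / src.name
        shutil.copy2(src, dest)  # copy2 also preserves file timestamps
        copied.append(dest)
    return copied
```

For example, `copy_to_spark_conf([r"C:\hadoop\etc\hadoop\hdfs-site.xml", r"C:\hive\conf\hive-site.xml"], r"C:\spark")`, where all three paths are placeholders for your own installation.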

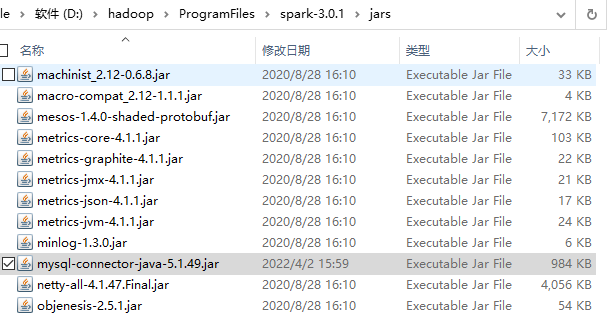

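Copy mysql-connector-java-5.1.49.jar into the spark\jars directory. This is the MySQL JDBC driver, which Spark needs to reach the Hive metastore database when that database is MySQL.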
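To confirm that the driver ended up in the right place, you can look for it in the jars directory. This small sketch assumes the jar keeps its standard `mysql-connector-java-*.jar` file name. `find_jdbc_driver` is a hypothetical helper, not part of Spark.

```python
from pathlib import Path


def find_jdbc_driver(spark_home, pattern="mysql-connector-java-*.jar"):
    """Return the names of MySQL JDBC driver jars in <spark_home>/jars.

    An empty list means the driver has not been copied yet.
    """
    jars_dir = Path(spark_home) / "jars"
    if not jars_dir.is_dir():
        return []
    return sorted(p.name for p in jars_dir.glob(pattern))
```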

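Remember to set the user that connects to Hadoop/Hive. If you do not, it defaults to the current Windows user:

```python
import os
os.environ['HADOOP_USER_NAME'] = 'hadoop'  # set the connecting user
```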
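When the script is started with plain `python`, PySpark launches the JVM with a copy of `os.environ`. The variable therefore has to be set before the SparkSession (and its JVM) is created. The sketch below uses `os.environ.setdefault`, so a value already exported in the shell takes precedence. The helper name and the `"hadoop"` default are assumptions.

```python
import os


def ensure_hadoop_user(default_user="hadoop"):
    """Set HADOOP_USER_NAME unless it is already defined; return the value in effect.

    Call this before SparkSession.builder...getOrCreate(): the JVM
    started by PySpark only sees the environment as it was at launch.
    """
    return os.environ.setdefault("HADOOP_USER_NAME", default_user)
```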

Code

```python
#!/usr/bin/env python
# author: Simple-Sir
# create_time: 2022/6/7 10:20
import os

from pyspark.sql import SparkSession

os.environ['HADOOP_USER_NAME'] = 'hadoop'  # set the connecting user (before the session starts)
# enableHiveSupport() is required to use the external Hive metastore;
# without it Spark uses its own built-in catalog instead.
spark = SparkSession.builder.enableHiveSupport().master("local[*]").appName("sparkSql").getOrCreate()

# SQL statements
spark.sql('show databases').show()
spark.sql('use lzh')
spark.sql("show tables").show()
spark.sql('drop table if exists tmp_20220531')  # IF EXISTS avoids an error when the table is absent
spark.sql("show tables").show()
spark.sql('''
create table tmp_20220531(
    name string,
    age int
)ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' LINES TERMINATED BY '\n'
''')
spark.sql('select * from tmp_20220531').show()
# 'local' means user.txt is read from the local filesystem (here relative to the working directory)
spark.sql('''
load data local inpath 'user.txt' into table tmp_20220531
''')
spark.sql('select * from tmp_20220531').show()

spark.stop()
```
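The `load data local inpath` statement expects `user.txt` to contain one record per line, with the two fields separated by a comma, matching the table's `ROW FORMAT`. Here is a sketch for producing such a file. `write_user_file` and the sample names are hypothetical.

```python
def write_user_file(path, rows):
    """Write (name, age) pairs as 'name,age' lines for tmp_20220531.

    The delimited format has no quoting, so a comma or newline inside
    a name would corrupt the row; such names are rejected.
    """
    # newline="\n" stops Windows from writing "\r\n" line endings
    with open(path, "w", encoding="utf-8", newline="\n") as f:
        for name, age in rows:
            if "," in name or "\n" in name:
                raise ValueError(f"delimiter in name: {name!r}")
            f.write(f"{name},{int(age)}\n")
```

For example: `write_user_file("user.txt", [("zhangsan", 18), ("lisi", 20)])`. The explicit `newline="\n"` matters on Windows. A `\r\n` ending would leave a stray `\r` after every `age` value, which would likely make that column come back as NULL.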

The same steps can also be run through the older SparkContext + HiveContext API. HiveContext has been deprecated since Spark 2.0 in favor of `SparkSession.builder.enableHiveSupport()`.

```python
#!/usr/bin/env python
# author: Simple-Sir
# create_time: 2022/6/2 14:20
import os

from pyspark import SparkContext, SparkConf, HiveContext

os.environ['HADOOP_USER_NAME'] = 'hadoop'  # set the connecting user
sparkConf = SparkConf().setMaster("local[*]").setAppName("sparkSql")
sc = SparkContext(conf=sparkConf)
sc.setLogLevel('WARN')
hc = HiveContext(sc)
hc.sql('use lzh')
hc.sql("show tables").show()
hc.sql('drop table if exists tmp_20220531')  # IF EXISTS avoids an error when the table is absent
hc.sql("show tables").show()
hc.sql('''
create table tmp_20220531(
    name string,
    age int
)ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' LINES TERMINATED BY '\n'
''')
hc.sql('select * from tmp_20220531').show()
hc.sql('''
load data local inpath 'user.txt' into table tmp_20220531
''')
hc.sql('select * from tmp_20220531').show()
sc.stop()
```
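Besides `spark.sql`, Hive tables can also be read and written through the DataFrame API. This minimal sketch assumes that `spark` is a SparkSession built with `enableHiveSupport()` and that `lzh.tmp_20220531` already exists. The function name and the two sample rows are hypothetical.

```python
def append_and_read(spark, table="lzh.tmp_20220531"):
    """Append two rows to an existing Hive table and return the whole table."""
    # Explicit DDL schema so the column types match the table (string, int).
    new_rows = spark.createDataFrame(
        [("wangwu", 21), ("zhaoliu", 22)], "name string, age int"
    )
    # insertInto appends by default and matches columns by position, not by name.
    new_rows.write.insertInto(table)
    # Read the table back as a DataFrame.
    return spark.table(table)
```

Usage: `append_and_read(spark).show()`. `insertInto` fits here because the table already exists. To create a new table from a DataFrame, use `DataFrameWriter.saveAsTable` instead.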

Run results

posted on 2022-06-06 18:03 Simple-Sir

浙公网安备 33010602011771号
