First Steps with Python: Word Count with PySpark

Code

```python
#!/usr/bin/env python
# author: Simple-Sir
# create_time: 2022/6/6 14:20
from pyspark import SparkConf, SparkContext

sparkConf = SparkConf().setMaster("local[*]").setAppName("wc")
sc = SparkContext.getOrCreate(sparkConf)
# sc = SparkContext(master="local[*]", appName="wc")

# Create an RDD from a Python list
rdd = sc.parallelize([1, 2, 3, 4, 5])
# Number of partitions: local[*] uses every CPU core, so this printed 8 on the author's machine
print(rdd.getNumPartitions())
# Print the data grouped by partition: [[2, 4], [1, 3, 5]]
print(rdd.repartition(2).glom().collect())

# Build an RDD by reading a text file
rdd0 = sc.textFile("wordcount.txt")
print(rdd0.getNumPartitions())  # number of partitions: 2
# Print the data grouped by partition: [['hello world', 'hello python'], ['hello spark']]
print(rdd0.repartition(2).glom().collect())

# Count the words: split lines into words, pair each word with 1, sum the 1s per word
rdd0.flatMap(lambda x: x.split(" ")).map(lambda x: (x, 1)).reduceByKey(lambda x, y: x + y).foreach(print)

sc.stop()
```
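To make the three transformations in the counting line easier to follow, here is a minimal pure-Python sketch that mimics `flatMap`, `map` and `reduceByKey` on an ordinary list. It does not use Spark at all; the helper names `add` and `word_count` are hypothetical, and the sample lines are simply the file contents printed by the `glom()` call in the code.

```python
from itertools import chain


def add(x, y):
    # Same combining function as lambda x, y: x + y in the Spark code
    return x + y


def word_count(lines):
    # flatMap: split each line on spaces and flatten into one stream of words
    words = chain.from_iterable(line.split(" ") for line in lines)
    # map: pair every word with the count 1
    pairs = ((word, 1) for word in words)
    # reduceByKey: merge the values of pairs that share a key using add()
    counts = {}
    for word, value in pairs:
        counts[word] = add(counts[word], value) if word in counts else value
    return counts


lines = ["hello world", "hello python", "hello spark"]
for word, count in word_count(lines).items():
    print((word, count))
# ('hello', 3)
# ('world', 1)
# ('python', 1)
# ('spark', 1)
```

The counts match what Spark computes, but Spark's `foreach(print)` runs per partition, so the order of its printed pairs is not guaranteed.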

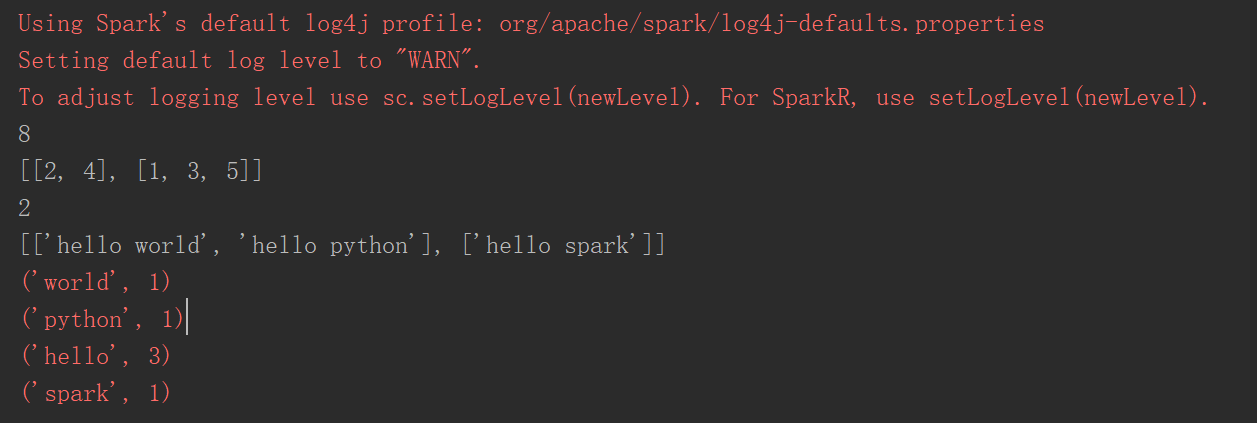

Run result
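The `glom()` calls in the code print each partition as its own list, which is why the output is a list of lists. The sketch below imitates that shape in plain Python by cutting a list into `num_partitions` contiguous slices. The helper `glom_like` is hypothetical and the contiguous split is only an assumption for illustration; Spark's real placement can differ, as the `repartition(2)` output `[[2, 4], [1, 3, 5]]` in the code shows.

```python
def glom_like(data, num_partitions):
    """Split data into num_partitions contiguous chunks, returned as a list of lists."""
    n = len(data)
    return [
        data[i * n // num_partitions:(i + 1) * n // num_partitions]
        for i in range(num_partitions)
    ]


print(glom_like([1, 2, 3, 4, 5], 2))  # [[1, 2], [3, 4, 5]]
# More partitions than elements leaves some partitions empty
print(glom_like([1, 2, 3, 4, 5], 8))  # [[], [1], [], [2], [3], [], [4], [5]]
```

Whatever the split, flattening the partitions gives back every element exactly once, which is the property `collect()` relies on.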

Note:

If you see this warning: UserWarning: Please install psutil to have better support with spilling

install psutil on Windows: pip install psutil
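To check beforehand whether `psutil` (the package the warning asks for) can be imported in the Python environment that runs PySpark, a small check with the standard library's `importlib.util.find_spec` is enough. This is a sketch; the function name `has_psutil` is hypothetical.

```python
import importlib.util


def has_psutil():
    """Return True if the psutil package can be found in this environment."""
    return importlib.util.find_spec("psutil") is not None


if has_psutil():
    print("psutil is installed")
else:
    print("psutil is missing; install it with: pip install psutil")
```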

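However crafty and devious the ways of the world, the honest and sincere person endures in the end;

though a decadent age prizes show and splendor, it is in quiet simplicity that the deepest pleasure is found.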

Posted on 2022-06-06 14:29 by Simple-Sir

Zhejiang Public Security Network Record No. 33010602011771
