Spark: Operating on Hive Data with Spark SQL

Steps

1. Copy hive-site.xml and hdfs-site.xml into the project's resources directory.

2. Add the dependencies:

```xml
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-hive_2.12</artifactId>
    <version>3.0.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-exec</artifactId>
    <version>1.2.1</version>
</dependency>
<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>5.1.27</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>2.7.3</version>
</dependency>
```

3. Enable Hive support with enableHiveSupport().
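You can confirm the three steps above before starting Spark by checking the classpath directly. The sketch below is hypothetical: the `HivePreflight` object and its helpers are not from the original project. It looks for `hive-site.xml` and `hdfs-site.xml` as classpath resources, which is where files under `src/main/resources` end up at runtime. It also tries to load one representative class from each dependency. The class names are assumptions for the versions listed above. As far as I know, `HiveSessionStateBuilder` and `HiveConf` are the classes `enableHiveSupport()` itself requires, and `com.mysql.jdbc.Driver` is the driver class in Connector/J 5.1.

```scala
// Hypothetical pre-flight check for the setup steps above.
// It only inspects the classpath; it does not contact HDFS or the metastore.
object HivePreflight {
  // Step 1: client config files expected under src/main/resources.
  val configFiles: Seq[String] = Seq("hive-site.xml", "hdfs-site.xml")

  // Step 2: one representative class per dependency
  // (class names assumed for the versions in the pom above).
  val dependencyClasses: Seq[(String, String)] = Seq(
    "spark-hive"           -> "org.apache.spark.sql.hive.HiveSessionStateBuilder",
    "hive-exec"            -> "org.apache.hadoop.hive.conf.HiveConf",
    "mysql-connector-java" -> "com.mysql.jdbc.Driver",
    "hadoop-client"        -> "org.apache.hadoop.conf.Configuration"
  )

  // Prefer the thread's context class loader, fall back to this object's loader.
  private def loader: ClassLoader =
    Option(Thread.currentThread().getContextClassLoader).getOrElse(getClass.getClassLoader)

  // Returns the resource names that cannot be found on the classpath.
  def missingResources(names: Seq[String]): Seq[String] =
    names.filter(name => loader.getResource(name) == null)

  // Returns the class names that cannot be loaded (static initializers are not run).
  def missingClasses(names: Seq[String]): Seq[String] =
    names.filter { name =>
      try { Class.forName(name, false, loader); false }
      catch { case _: ClassNotFoundException | _: LinkageError => true }
    }

  def main(args: Array[String]): Unit = {
    missingResources(configFiles).foreach(f => println(s"missing config file: $f"))
    val missing = missingClasses(dependencyClasses.map(_._2)).toSet
    dependencyClasses.foreach { case (artifact, cls) =>
      if (missing.contains(cls)) println(s"missing dependency: $artifact ($cls)")
    }
  }
}
```

Running `HivePreflight.main` from the same module as `conHive` prints nothing when everything is in place. Otherwise it prints one line per missing file or dependency.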

Implementation

package com.lzh.sql.数据加载保存

/*

spark连接hive步骤

1.将hive-site.xml、hdfs-site.xml文件复制到项目的resources目录中

没有hdfs-site.xml文件,会报错: java.net.UnknownHostException: ns1

2.导入依赖

spark-hive

hive-exec

mysql-connector-java

hadoop-client

3.启用Hive的支持 enableHiveSupport()

错误信息:

不导入hadoop-client : Exception in thread "main" java.lang.NoSuchMethodError: org.apache.hadoop.conf.Configuration.getPassword(Ljava/lang/String;)

不设置访问hive的用户 :exception: org.apache.hadoop.security.AccessControlException Permission denied: user=Administrator, access=WRITE

*/

import org.apache.spark.SparkConf

import org.apache.spark.sql.SparkSession

object conHive {

def main(args: Array[String]): Unit = {

// 设置访问hive的用户,默认本机用户

/*不设置会报错

exception: org.apache.hadoop.security.AccessControlException Permission denied: user=Administrator, access=WRITE

*/

System.setProperty("HADOOP_USER_NAME", "hadoop")

val sparkConf = new SparkConf().setMaster("local[*]").setAppName("conHive")

val spark = SparkSession.builder().enableHiveSupport().config(sparkConf).getOrCreate()

// 使用SparkSQL连接外置的Hive

// 1. 拷贝Hive-size.xml文件到classpath下

// 2. 启用Hive的支持

// 3. 增加对应的依赖关系(包含MySQL驱动)

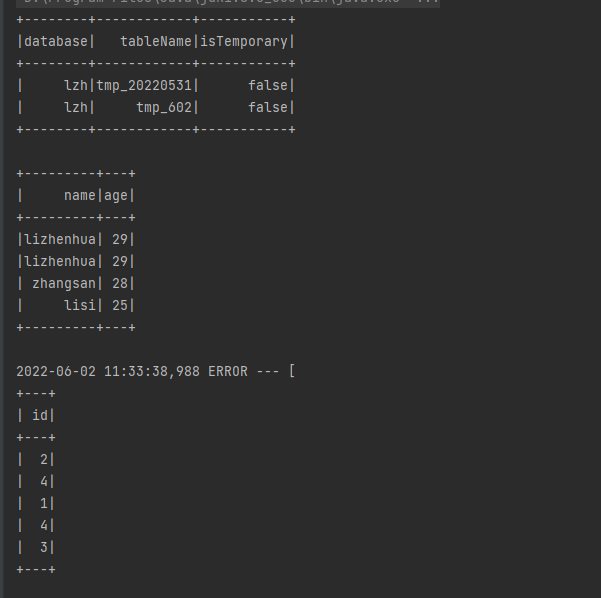

spark.sql("use lzh")

spark.sql("show tables").show()

spark.sql("select * from tmp_20220531").show()

spark.sql(

"""

|create table if not exists tmp_602(

|id int

|)

|""".stripMargin)

spark.sql(

"""

|insert into tmp_602 values(1)

|""".stripMargin)

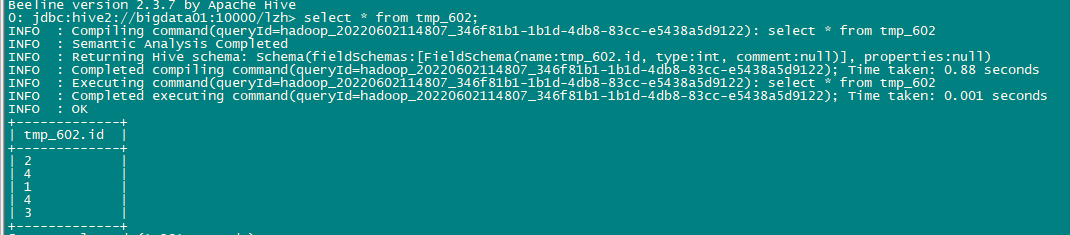

spark.sql("select * from tmp_602").show()

spark.close()

}

}
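The SQL strings in `conHive` use Scala's triple-quoted literals with `stripMargin`. This removes the leading whitespace and the `|` at the start of each line. The small sketch below shows the exact text that reaches `spark.sql` for the `create table` statement; the `StripMarginDemo` object exists only for illustration. The leading and trailing line breaks are harmless to the SQL parser.

```scala
// Illustration only: shows what the triple-quoted string + stripMargin
// pattern used in conHive produces before it is passed to spark.sql.
object StripMarginDemo {
  val createSql: String =
    """
      |create table if not exists tmp_602(
      |id int
      |)
      |""".stripMargin

  def main(args: Array[String]): Unit = {
    // Escape the line breaks so the exact SQL text is visible on one line.
    println(createSql.replace("\n", "\\n"))
  }
}
```

Because only a leading `|` (after optional whitespace) is stripped, you can indent the SQL freely to match the surrounding Scala code.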

Run results

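Error messages

Without hdfs-site.xml: java.net.UnknownHostException: ns1 (ns1 is presumably the HDFS nameservice defined in hdfs-site.xml; without that file the client treats it as an ordinary host name)

Without hadoop-client: Exception in thread "main" java.lang.NoSuchMethodError: org.apache.hadoop.conf.Configuration.getPassword(Ljava/lang/String;) (a NoSuchMethodError usually means an older Hadoop version ended up on the classpath; declaring hadoop-client explicitly pins the version)

Without setting the Hive access user: exception: org.apache.hadoop.security.AccessControlException Permission denied: user=Administrator, access=WRITE (Administrator is the local Windows login user, which is not allowed to write to the Hive warehouse on HDFS)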
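The `Permission denied: user=Administrator` error appears because, without Kerberos, Hadoop acts as whatever user name it resolves on the client. Here that is the Windows login user. The sketch below is a simplified model, not Hadoop's actual code, and `HadoopUserResolution` and `effectiveUser` are hypothetical names. As far as I know, Hadoop first checks the `HADOOP_USER_NAME` environment variable. It then checks the JVM system property of the same name, which is what `System.setProperty` in `conHive` sets. Otherwise it falls back to the OS user.

```scala
// Simplified model (assumption) of how Hadoop picks the client user name
// in a non-Kerberos setup: env var first, then the JVM system property,
// otherwise the OS login user.
object HadoopUserResolution {
  val Key = "HADOOP_USER_NAME"

  def effectiveUser(env: Map[String, String], props: Map[String, String], osUser: String): String =
    env.get(Key).orElse(props.get(Key)).getOrElse(osUser)

  def main(args: Array[String]): Unit = {
    // Same call as in conHive; it must happen before Spark/Hadoop log in.
    System.setProperty(Key, "hadoop")
    val user = effectiveUser(sys.env, sys.props.toMap, System.getProperty("user.name"))
    println(s"Hadoop would act as: $user")
  }
}
```

Note that under this model an exported `HADOOP_USER_NAME` environment variable would win over the system property. Because the user is resolved when Hadoop first logs in, set the property before creating the SparkSession, as `conHive` does.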

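The run output also contains this error: Could not find uri with key [dfs.encryption.key.provider.uri] to create a keyProvider. According to reports online, this is a bug in the HDFS client that does not affect normal operation and has been fixed since version 2.8.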

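However cunning and deceitful the world may be, the honest and sincere stand firm in the end;

though a vulgar age prizes extravagance, the charm of plainness lasts longest after all.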

posted on 2022-06-02 11:27 Simple-Sir

Zhejiang Public Security Network Record No. 33010602011771
