Redis stress testing and QPS monitoring

1 Background

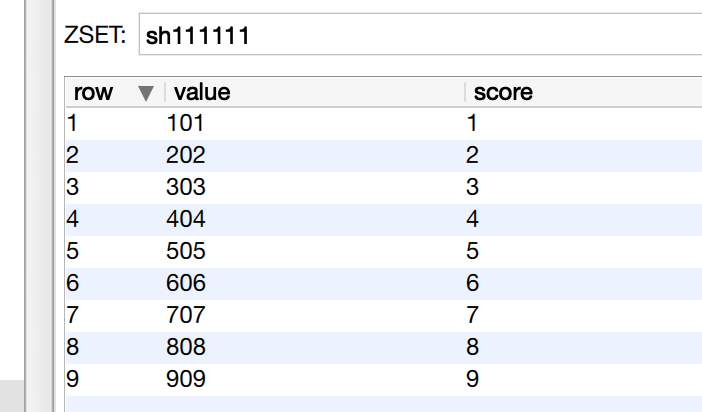

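Our stock order-matching engine uses a ZSET (sorted set) to execute orders once the price is reached, so here we stress-test the ZRANGEBYSCORE command directly.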
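To make the benchmarked query concrete, here is a minimal in-memory analogue of `ZRANGEBYSCORE sh111111 3 9` built on the JDK's `TreeMap` instead of Redis. It is only a sketch under stated assumptions: scores stand for prices, members for order IDs, the class name `PriceZSet` is hypothetical, and the data mirrors `initData()` in the test code (scores 1 to 9, members `100` to `900`). It is not the author's matching engine.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical in-memory stand-in for a Redis sorted set (score -> members).
final class PriceZSet {
    private final NavigableMap<Double, TreeSet<String>> byScore = new TreeMap<>();

    // Like ZADD for a new member. Simplification: moving an existing member
    // to a different score is not handled.
    void add(double score, String member) {
        byScore.computeIfAbsent(score, s -> new TreeSet<>()).add(member);
    }

    // Like ZRANGEBYSCORE key min max: inclusive bounds, ascending score,
    // members with equal scores in lexicographic order.
    List<String> rangeByScore(double min, double max) {
        List<String> result = new ArrayList<>();
        if (min > max) {
            return result; // Redis returns an empty list; TreeMap.subMap would throw
        }
        for (TreeSet<String> members : byScore.subMap(min, true, max, true).values()) {
            result.addAll(members);
        }
        return result;
    }

    public static void main(String[] args) {
        PriceZSet zset = new PriceZSet();
        for (int i = 1; i <= 9; ++i) {
            zset.add(i, String.valueOf(i * 100)); // same data as initData()
        }
        System.out.println(zset.rangeByScore(3, 9)); // [300, 400, 500, 600, 700, 800, 900]
    }
}
```

The `TreeMap` makes the range query a logarithmic seek followed by an in-order walk, which is the same access pattern a sorted set offers.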

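How large should the Redis thread pool be, and why use 10 Disruptor consumer threads (i.e. 10 Redis connections)?

1) IO-bound workload on 4 cores: 2 × (n + 1) = 2 × (4 + 1) = 10;

2) The local benchmark results in section 2 show that 10 threads already reach about 80% of the peak QPS;

3) Too many Disruptor consumer threads is counterproductive.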
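Rule 1) fits in a one-line helper. This is a sketch assuming the "2 × (cores + 1)" heuristic for IO-bound pools; the class and method names are hypothetical, and `Runtime.availableProcessors()` reports logical CPUs, which may differ from the core count the rule was applied to.

```java
// Hypothetical helper for rule 1): IO-bound pools get 2 * (cores + 1) threads.
final class IoThreadSizing {
    static int ioBoundThreads(int cores) {
        if (cores < 1) {
            throw new IllegalArgumentException("cores must be >= 1");
        }
        return 2 * (cores + 1);
    }

    public static void main(String[] args) {
        // Logical CPUs visible to the JVM (hyper-threads count as separate CPUs).
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("this machine: " + cores + " CPUs -> " + ioBoundThreads(cores) + " threads");
        System.out.println("4 cores -> " + ioBoundThreads(4) + " threads"); // 4 cores -> 10 threads
    }
}
```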

2 First, a round of local benchmarks

Test machine: MacBook (2016, 12-inch)

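2.1 Docker

Redis in Docker on port 63790. `-c` (number of parallel connections) was set to each row's thread count; the command below shows `-c 100`:

    redis-benchmark -h 127.0.0.1 -p 63790 -c 100 -n 10000 script load "redis.call('zrangebyscore','sh111111','3','9')"

Note that `SCRIPT LOAD` only compiles and caches the Lua script and returns its SHA1 digest; it does not execute ZRANGEBYSCORE, so the redis-benchmark column measures script loading rather than the range query. To exercise the command itself, pass it to redis-benchmark directly, e.g. `redis-benchmark -h 127.0.0.1 -p 63790 -c 100 -n 10000 zrangebyscore sh111111 3 9`.

| Threads | Java benchmark (JMH) | Java code (hand-written threads) | redis-benchmark CLI |
|---|---|---|---|
| 1 | 807 | 729 | 866 |
| 10 | 3115 | 3115 | 3187 |
| 50 | 4467 | 4235 | 4640 |
| 100 | 4375 | 4417 | 5238 |
| 500 | 5747 | | |

All values are QPS (requests per second).

*Both the Java benchmark and the Java code borrow a connection from the pool (and return it) on every call.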

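2.2 Native

Redis running directly on the Mac, port 6379; again `-c` was set per row (shown: `-c 1`):

    redis-benchmark -h 127.0.0.1 -p 6379 -c 1 -n 10000 script load "redis.call('zrangebyscore','sh111111','3','9')"

| Threads | Java benchmark (JMH) | Java code (hand-written threads) | redis-benchmark CLI |
|---|---|---|---|
| 1 | 11729 | 6050 | 10131 |
| 10 | 28597 | 18653 | 21000 |
| 50 | 31943 | 29056 | 23584 |
| 100 | 29476 | 28438 | 24875 |
| 500 | 24937 | | |

All values are QPS (requests per second).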

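So in the LAN and Docker tests, where traffic presumably has to pass through a network interface, 10 threads reach only about 3k QPS, far below the 100k QPS that Redis officially claims. I therefore looked up other people's benchmark results online.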

3 Other references:

3.1 OpenResty and Redis: QPS comparison across network environments

http://blog.sina.cn/dpool/blog/s/blog_6145ed810102vefe.html?from=groupmessage&isappinstalled=0

| Network environment | Redis (requests per second) |
|---|---|
| Local | 52631.58 |
| LAN | 3105.59 (on par with my Docker test) |
| Public internet (New York node) | 169.95 |

3.2 Memcache, Redis and Tair performance comparison report

http://blog.sina.cn/dpool/blog/s/blog_6145ed810102vefe.html?from=groupmessage&isappinstalled=0

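Single thread: fetch data from the server through each cache client's get call, comparing the time taken for 10,000 operations.

Redis, 1 KB objects: 1260 QPS

1000 concurrent threads: fetch data from the server through the cache client's get call, with each thread completing 10,000 operations.

Redis, 1 KB objects: 11430 QPS, roughly three times what I measured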

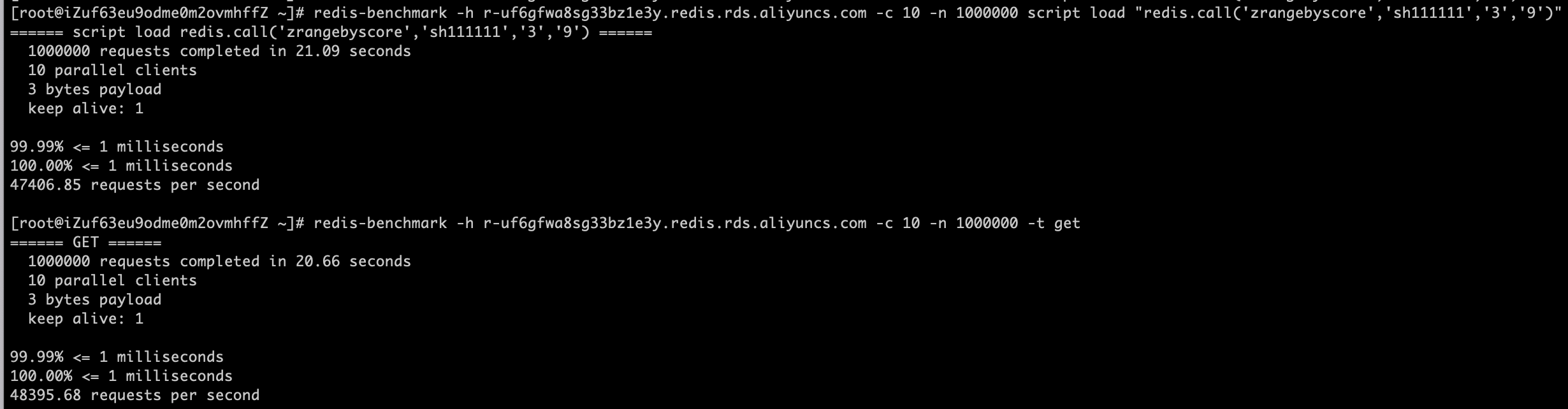

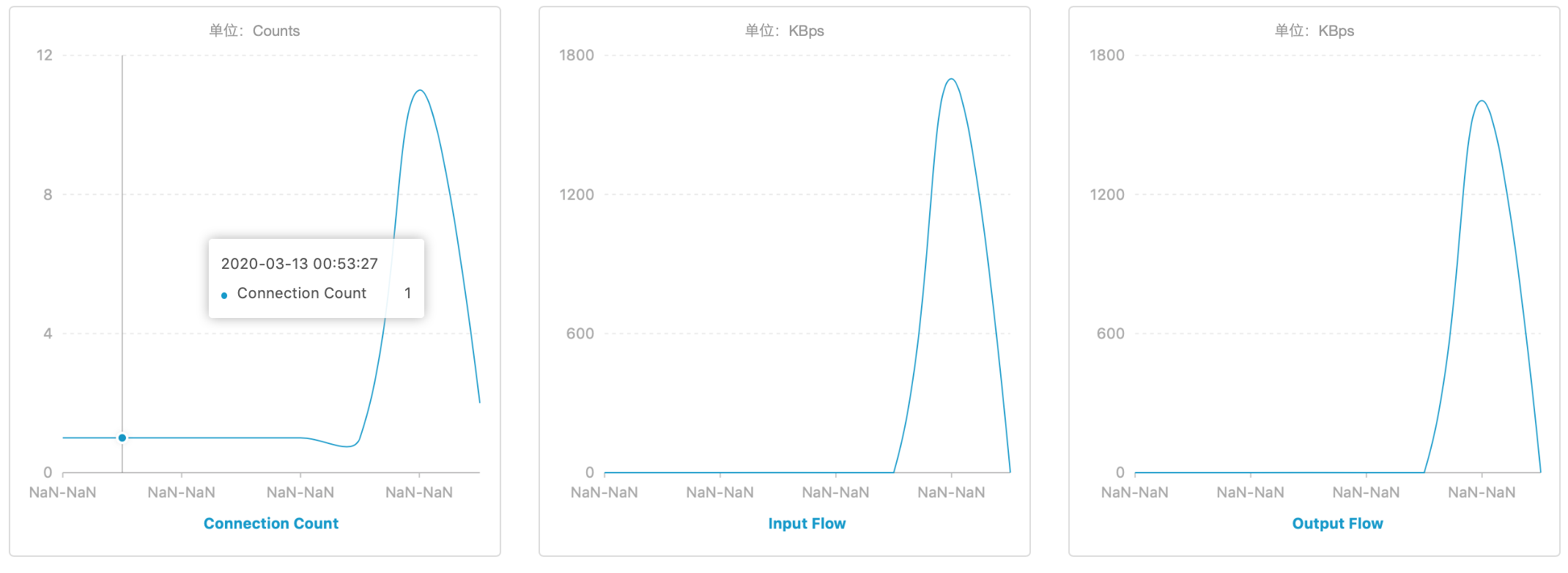

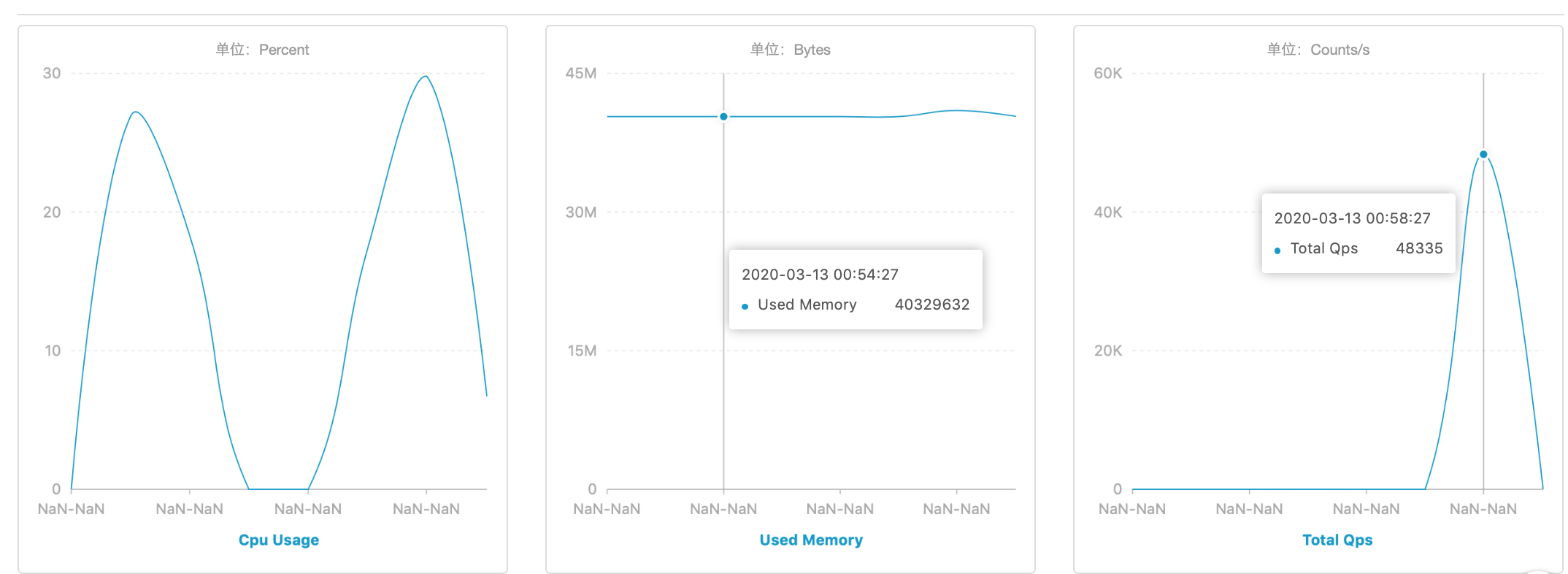

4 Alibaba Cloud Redis: 10 threads, 47,000 QPS

https://zhuanlan.zhihu.com/p/78034665?utm_source=wechat_session&utm_medium=social&utm_oi=1003056052560101376&from=singlemessage&isappinstalled=0&wechatShare=1&s_s_i=Nxnfuuur16PoKq5S8w%2Bv7CqmqZ5fwF2fxQZXH9O4%2FPM%3D&s_r=1

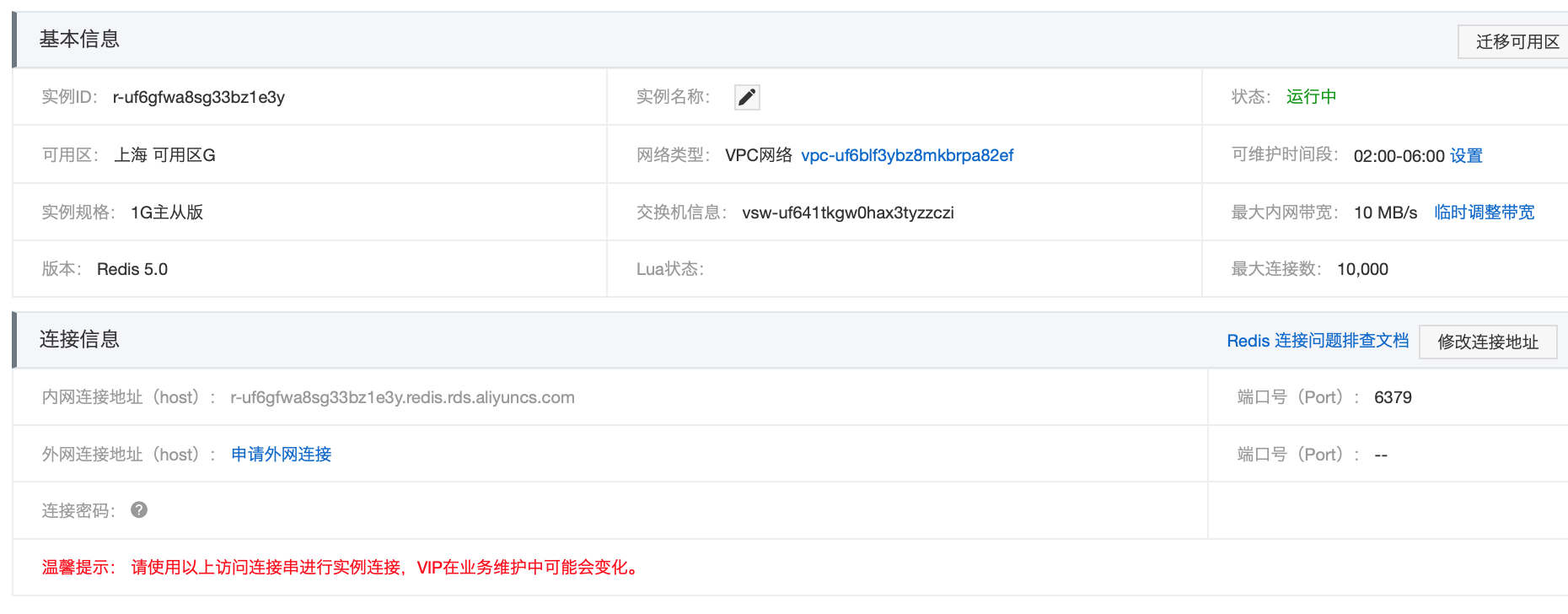

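Alibaba Cloud Community Edition

Community Edition, standard dual-replica, 1 GB master-replica, Redis 5.0: advertised at 80,000 QPS (cluster with 256 shards: 25.6 million QPS); Enterprise Edition: 240,000 QPS (cluster: 61.44 million QPS). Source: https://help.aliyun.com/document_detail/26350.html

Load generator: 4 vCPU, 8 GiB (I/O optimized), ecs.c6.xlarge, 10 Mbps (peak)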

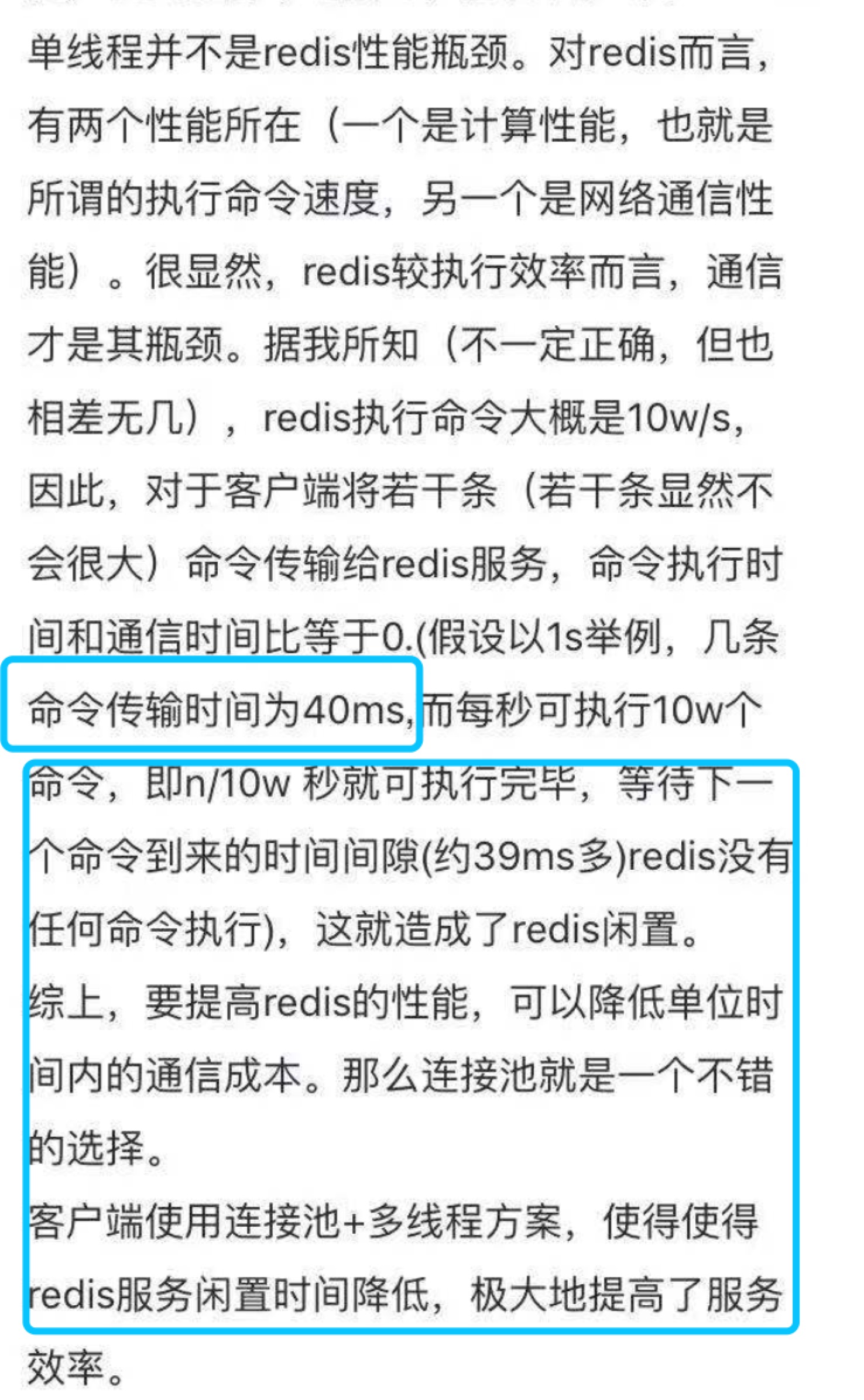

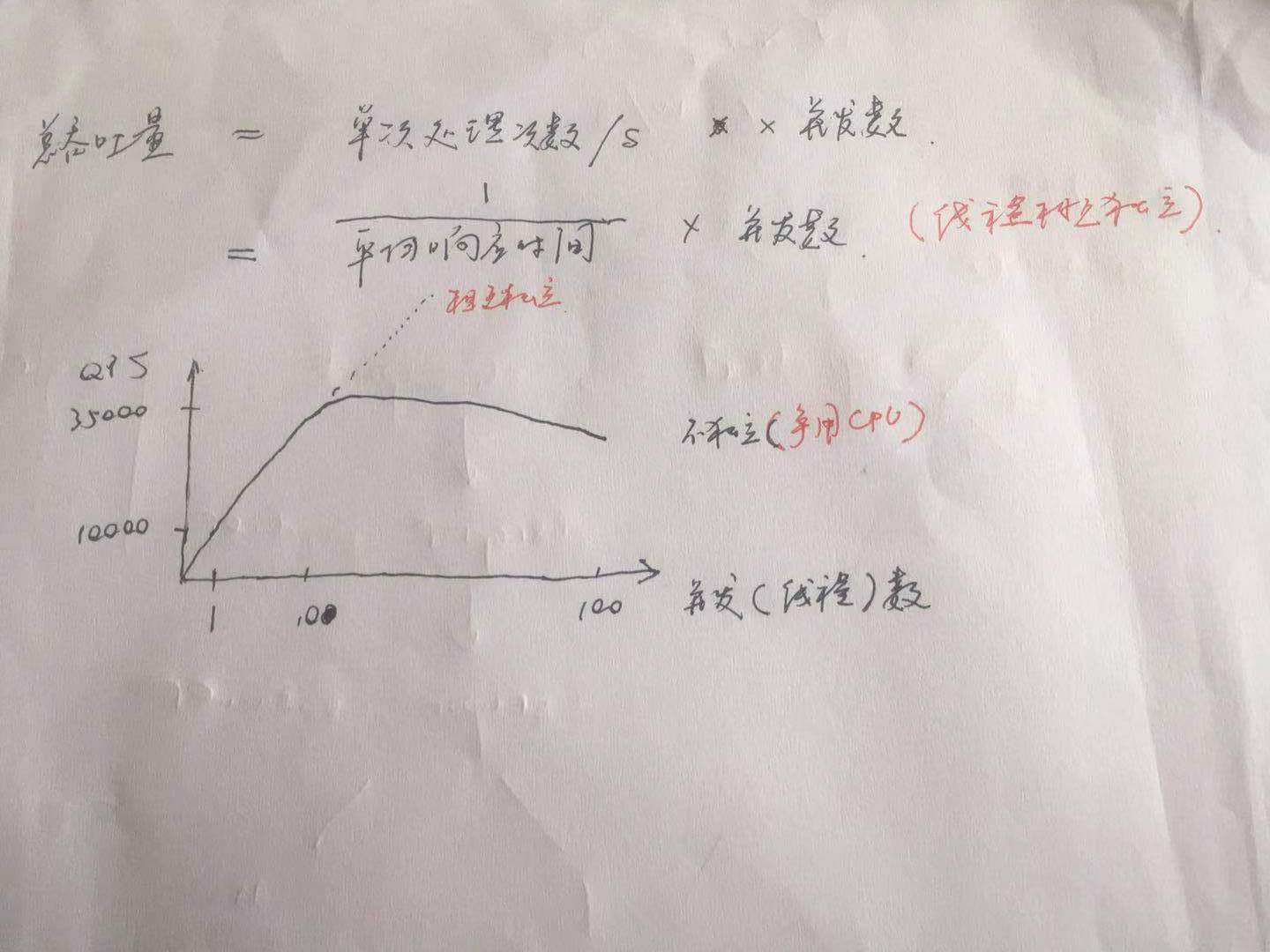

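5 Afterword: why a multi-threaded Redis client achieves higher QPS, and by how much

An example. Assume that one command (borrowing and returning the pooled connection plus Jedis executing the command, including the network round trip) takes about 1 ms on average, so one connection sustains about 1000 QPS, and that the business needs 50,000 QPS. The theoretical pool size is then 50000 / 1000 = 50 connections. This is only a theoretical value: in practice you reserve some headroom, so maxTotal is usually set somewhat above it.

Bigger is not always better, though. Too many connections consume resources on both the client and the server, and for a high-QPS server like Redis, one blocking large command stalls everything no matter how large the pool is.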

https://cloud.tencent.com/developer/article/1425158
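The pool-sizing arithmetic above, as a small sketch. Assumptions: the helper names are hypothetical, and the 20% headroom in the example is an illustrative choice, since the text only says maxTotal can be somewhat larger than the theoretical value. Running the formula backwards on the Alibaba Cloud result from section 4 (10 threads, 47,000 QPS) gives the average time per command that figure implies, assuming every connection is kept continuously busy.

```java
// Hypothetical helpers for the pool-sizing estimate above.
final class PoolSizing {
    // QPS one connection sustains if each command (borrow/return + execution incl. network)
    // takes avgCommandMillis on average.
    static double qpsPerConnection(double avgCommandMillis) {
        return 1000.0 / avgCommandMillis;
    }

    // Theoretical pool size: target QPS / per-connection QPS, rounded up.
    static int theoreticalPoolSize(double targetQps, double avgCommandMillis) {
        return (int) Math.ceil(targetQps / qpsPerConnection(avgCommandMillis));
    }

    // maxTotal = theoretical size plus a headroom percentage, rounded up (integer math).
    static int maxTotalWithHeadroom(int theoreticalSize, int headroomPercent) {
        return (theoreticalSize * (100 + headroomPercent) + 99) / 100;
    }

    // Inverse estimate: average ms per command when N always-busy connections deliver totalQps.
    static double impliedAvgMillis(int connections, double totalQps) {
        return connections * 1000.0 / totalQps;
    }

    public static void main(String[] args) {
        int size = theoreticalPoolSize(50_000, 1.0);
        System.out.println("theoretical pool size: " + size); // 50
        System.out.println("maxTotal with 20% headroom: " + maxTotalWithHeadroom(size, 20)); // 60
        System.out.printf("implied ms/command (10 connections, 47000 QPS): %.3f%n",
                impliedAvgMillis(10, 47_000));
    }
}
```

By this estimate the Alibaba Cloud setup spent roughly 0.2 ms per command, which is why 10 connections there go much further than 10 connections in the Docker test.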

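Note that multi-threaded Redis QPS does not follow the idealized formula QPS(N threads) = QPS(1 thread) × N (which resembles load balancing): the threads pay context-switch costs and compete for the same throughput, so QPS grows nonlinearly with the thread count.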
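One way to quantify that nonlinearity is to compare measured QPS with ideal linear scaling, efficiency = QPS(n) / (n × QPS(1)). The sketch below applies this standard definition to the "Java benchmark" column of the native results in section 2.2; the class name is hypothetical and the efficiency figure is derived from the tables, not measured separately.

```java
// Hypothetical helper: how far measured QPS falls short of linear scaling.
final class ScalingEfficiency {
    // 1.0 means perfectly linear: n threads deliver n times the single-thread QPS.
    static double efficiency(double singleThreadQps, double measuredQps, int threads) {
        return measuredQps / (singleThreadQps * threads);
    }

    public static void main(String[] args) {
        // Native Redis, "Java benchmark" column of the table in section 2.2.
        int[] threads = {1, 10, 50, 100};
        double[] qps = {11729, 28597, 31943, 29476};
        for (int i = 0; i < threads.length; i++) {
            System.out.printf("%3d threads: %6.0f QPS, efficiency %.3f%n",
                    threads[i], qps[i], efficiency(qps[0], qps[i], threads[i]));
        }
    }
}
```

With these numbers, 10 threads come out at roughly 0.24 of linear scaling, and the efficiency keeps falling as more threads are added.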

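6 Performance monitoring:

Reference 1: https://www.cnblogs.com/cheyunhua/p/9068029.html, on setting Redis's maximum memory (maxmemory), analogous to -Xmx for the Java heap

Reference 2: https://blog.csdn.net/z644041867/article/details/77965521, on performance monitoring metrics

    # number of connected clients
    redis-cli info | grep -w "connected_clients" | awk -F':' '{print $2}'
    # memory held by Redis as seen by the OS (RSS); roughly analogous to committed/used in Java JMX memory monitoring
    redis-cli info | grep -w "used_memory_rss_human" | awk -F':' '{print $2}'
    # peak memory used by Redis
    redis-cli info | grep -w "used_memory_peak_human" | awk -F':' '{print $2}'
    # commands processed per second at this moment
    redis-cli info | grep -w "instantaneous_ops_per_sec" | awk -F':' '{print $2}'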
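Each grep/awk pipeline above pulls one `field:value` line out of the `INFO` output. From Java, the same text is what a client such as Jedis returns from `info()`, and a small parser can extract every field in one pass. This is a sketch with hypothetical names; the sample input in `main` is illustrative, not captured from the test machine.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical parser for the text returned by the Redis INFO command.
final class RedisInfoParser {
    // INFO lines look like "field:value"; "# Section" headers and blank lines are skipped.
    static Map<String, String> parse(String info) {
        Map<String, String> fields = new HashMap<>();
        for (String raw : info.split("\r?\n")) {
            String line = raw.trim();
            if (line.isEmpty() || line.startsWith("#")) {
                continue;
            }
            int colon = line.indexOf(':');
            if (colon > 0) {
                // Split at the first colon only, so the value is kept whole.
                fields.put(line.substring(0, colon), line.substring(colon + 1));
            }
        }
        return fields;
    }

    public static void main(String[] args) {
        // Illustrative INFO excerpt (values made up for the example).
        String sample = "# Clients\r\nconnected_clients:11\r\n\r\n"
                + "# Memory\r\nused_memory_rss_human:2.00M\r\nused_memory_peak_human:1.50M\r\n\r\n"
                + "# Stats\r\ninstantaneous_ops_per_sec:10421\r\n";
        Map<String, String> info = parse(sample);
        long ops = Long.parseLong(info.get("instantaneous_ops_per_sec"));
        System.out.println("connected_clients=" + info.get("connected_clients") + ", ops/sec=" + ops);
    }
}
```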

Example: while the following benchmark was running, `instantaneous_ops_per_sec` was sampled repeatedly. The benchmark was stopped with Ctrl-C, at which point it showed 10026.05 requests per second:

    redis-benchmark -h 127.0.0.1 -p 6379 -c 1 -n 1000000 script load "redis.call('zrangebyscore','sh111111','3','9')"
    script load redis.call('zrangebyscore','sh111111','3','9'): 10026.05

Sampling command, run once per reading (prompt `JoycedeMacBook:redis-5.0.5 joyce$`):

    redis-cli info | grep -w "instantaneous_ops_per_sec" | awk -F':' '{print $2}'

Readings, in order: 0, 9666, 9473, 10249, 10590, 10486, 10421, 10450, 10673, 10707, 10655, 10530, 10570, 10396, 9595, 9010, 9414
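The readings above can be summarised with the JDK's `IntSummaryStatistics`. This sketch assumes the leading `0` was sampled before the benchmark's traffic reached the server and therefore drops zero readings; the class name is hypothetical.

```java
import java.util.Arrays;
import java.util.IntSummaryStatistics;

// Hypothetical summary of the instantaneous_ops_per_sec samples recorded above.
final class OpsSampleSummary {
    static IntSummaryStatistics summarize(int[] samples) {
        // Zero readings are treated as idle samples (taken before load started) and skipped.
        return Arrays.stream(samples).filter(v -> v > 0).summaryStatistics();
    }

    public static void main(String[] args) {
        int[] samples = {0, 9666, 9473, 10249, 10590, 10486, 10421, 10450, 10673,
                10707, 10655, 10530, 10570, 10396, 9595, 9010, 9414};
        IntSummaryStatistics s = summarize(samples);
        System.out.println("n=" + s.getCount() + " min=" + s.getMin() + " max=" + s.getMax()
                + " avg=" + s.getAverage()); // n=16 min=9010 max=10707 avg=10180.3125
    }
}
```

The average of about 10,180 operations per second is consistent with the 10026.05 requests per second that redis-benchmark reported when it was interrupted.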

7 Test code:

    package redis;

    import com.alibaba.fastjson.JSON;
    import com.alibaba.fastjson.JSONObject;
    import com.google.protobuf.InvalidProtocolBufferException;
    import org.openjdk.jmh.annotations.*;
    import org.openjdk.jmh.runner.Runner;
    import org.openjdk.jmh.runner.options.Options;
    import org.openjdk.jmh.runner.options.OptionsBuilder;
    import redis.clients.jedis.Jedis;
    import redis.clients.jedis.JedisPool;
    import redis.clients.jedis.JedisPoolConfig;
    import serial.MyBaseBean;
    import serial.MyBaseProto;

    import java.util.Set;
    import java.util.concurrent.CountDownLatch;
    import java.util.concurrent.TimeUnit;

    /**
     * Created by joyce on 2019/10/24.
     */
    @BenchmarkMode(Mode.Throughput)     // benchmark type: throughput (operations per time unit)
    @OutputTimeUnit(TimeUnit.SECONDS)   // report results per second
    @Threads(10)                        // number of benchmark threads (IO-bound)
    @State(Scope.Benchmark)             // one state shared by all threads, so the static pool is created once
    public class YaliRedis {

        private static JedisPool jedisPool;
        private static final int threadCount = 10;

        // true: run through JMH; false: run the hand-written multi-threaded loop in main()
        private static final boolean USE_JMH = false;

        @Setup
        public static void init() {
            JedisPoolConfig config = new JedisPoolConfig();
            config.setMaxTotal(800);
            config.setMaxIdle(800);
            // Docker instance: port 63790, 2000 ms timeout, password "test"
            jedisPool = new JedisPool(config, "localhost", 63790, 2000, "test");
            // Optional sanity check:
            // try (Jedis jedis = jedisPool.getResource()) {
            //     System.out.println(jedis.get("testkey"));
            //     System.out.println(jedis.zrangeByScore("sh111111", 3, 9).size());
            // }
        }

        @TearDown
        public static void destroy() {
            jedisPool.close();
        }

        public static void main(String[] args) throws Exception {
            // initData();
            if (USE_JMH) {
                Options opt = new OptionsBuilder()
                        .include(YaliRedis.class.getSimpleName())
                        .forks(1)
                        .warmupIterations(1)
                        .measurementIterations(3)
                        .build();
                new Runner(opt).run();
                return;
            }

            init();
            final int perThread = 10000;
            CountDownLatch countDownLatchMain = new CountDownLatch(threadCount); // counts finished workers
            CountDownLatch countDownLatchSub = new CountDownLatch(1);           // start gate for all workers
            for (int i = 0; i < threadCount; ++i) {
                new Thread(() -> {
                    try {
                        countDownLatchSub.await();
                        Set<String> set = null;
                        for (int j = 0; j < perThread; ++j) {
                            set = testZSet();
                        }
                        System.out.println(set.size());
                    } catch (Exception e) {
                        e.printStackTrace();
                    } finally {
                        countDownLatchMain.countDown();
                    }
                }).start();
            }

            long st = System.currentTimeMillis();
            countDownLatchSub.countDown();  // release all workers at the same moment
            countDownLatchMain.await();     // wait until every worker has finished
            long elapsedMs = System.currentTimeMillis() - st;  // read the clock once for both outputs
            System.out.println(elapsedMs);
            System.out.println((long) threadCount * perThread * 1000 / elapsedMs);  // QPS
            destroy();
        }

        @Benchmark
        public static Set<String> testZSet() {
            // try-with-resources returns the connection to the pool even if the command throws
            try (Jedis jedis = jedisPool.getResource()) {
                return jedis.zrangeByScore("sh111111", 3, 9);
            }
        }

        // @Benchmark
        public static String test() {
            try (Jedis jedis = jedisPool.getResource()) {
                return jedis.get("testkey");
            }
        }

        // @Benchmark
        public static MyBaseBean testJson() {
            try (Jedis jedis = jedisPool.getResource()) {
                String xx = jedis.get("testjson");
                JSONObject userJson = JSONObject.parseObject(xx);
                return JSON.toJavaObject(userJson, MyBaseBean.class);
            }
        }

        // @Benchmark
        public static MyBaseProto.BaseProto testPb() {
            try (Jedis jedis = jedisPool.getResource()) {
                byte[] bytes = jedis.get("testpb".getBytes());
                return MyBaseProto.BaseProto.parseFrom(bytes);
            } catch (InvalidProtocolBufferException e) {
                e.printStackTrace();
                return null;
            }
        }

        // Seeds the zset: scores 1..9 with members "100".."900"
        public static void initData() {
            try (Jedis jedis = new Jedis("localhost", 63790)) {
                jedis.auth("test");
                for (int i = 1; i <= 9; ++i) {
                    jedis.zadd("sh111111", i, String.valueOf(i * 100));
                }
            }
        }
    }

8 Backup data:

1 redis-benchmark vs. Java benchmark (Jedis), in QPS

1) Redis on the host

    redis-benchmark -h 127.0.0.1 -p 6379 -c 1000 -n 10000 script load "redis.call('zrangebyscore','sh111111','3','9')"

| Threads | redis-benchmark | Java benchmark (Jedis) |
|---|---|---|
| 1 | 10000 | 11500 |
| 50 | 24000 | 33000 |
| 100 | 25000 | 30000 |
| 500 | 26000 | 20000 |
| 1000 | 24000 | |

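2) Docker

    redis-benchmark -h 127.0.0.1 -p 63790 -c 100 -n 10000 script load "redis.call('zrangebyscore','sh111111','3','9')"

| Threads | redis-benchmark | Java benchmark (Jedis) | Note |
|---|---|---|---|
| 1 | 640 | 700 | |
| 50 | 3900 | 3300 | |
| 100 | 4400 | 3800 | |
| 500 | 6000 | 4500 | about 80% |
| 1000 | 5300 | | |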