tensorrt学习(三)

继续整理tensorrt的学习资料,方便后续查看. (文章内容大部分摘取于网络资源)

1 int8量化

int8量化就是将网络的权重由float32类型缩放为int8类型,同时记录缩放的scale。为了尽可能的不丢失精度,需要采用标定图片来确定缩放的范围。

1.1 int8量化原理

标定过程

- 标定的原理,是通过输入标定图像I,使用参数W(Int8)推理得到输出结果P(Int8),然后不断调整W(Int8),使得输出P(Int8)与原始参数w(float32)输出结果P(Float32)越接近越好

- 因此标定时通常需要使用一些图像,正常发布时,一般使用100张图左右即可

int8 加速原因

计算机中的float计算量是非常大的,而改成int8后,计算量相比可以提升数倍

- 对于实际操作时,input[float32], w[int8], bias[float32], output[float32] (w[int8], bias[float32]为量化后的网络权重)

- 步骤如下:

- input[int8] = to_int8(input[float32])

- y[int16] = input[int8] * w[int8] # 此处乘法会由计算机转换为int16,保证精度

- output[float32] = to_float32(y[int16]) + bias[float32]

- 所以整个过程的只是为了减少float32的乘法数量以实现提速

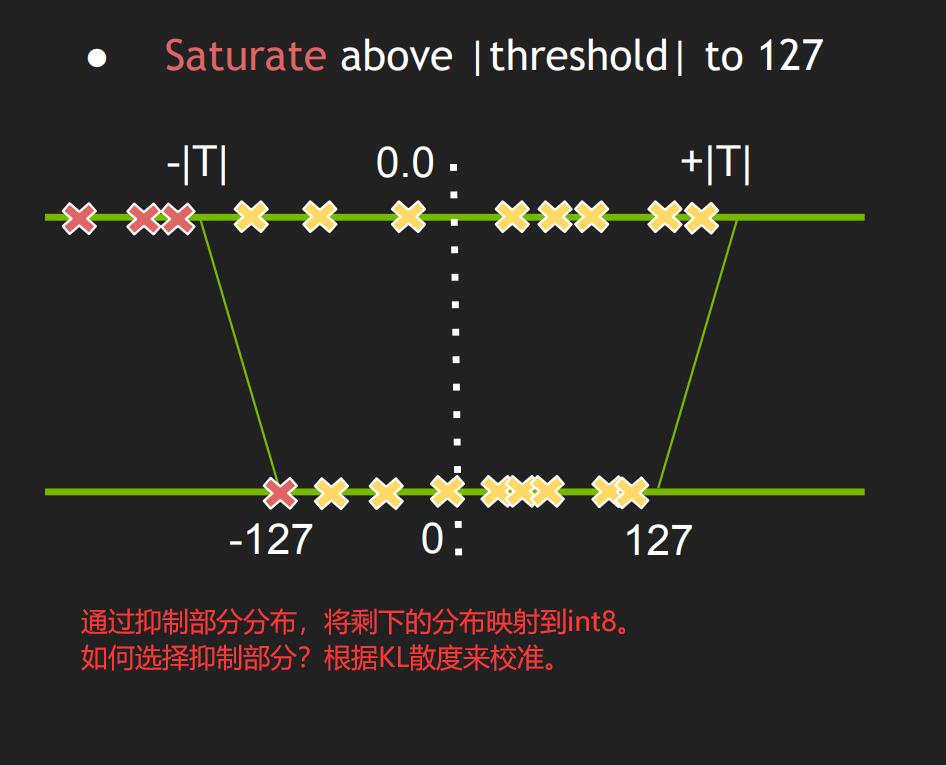

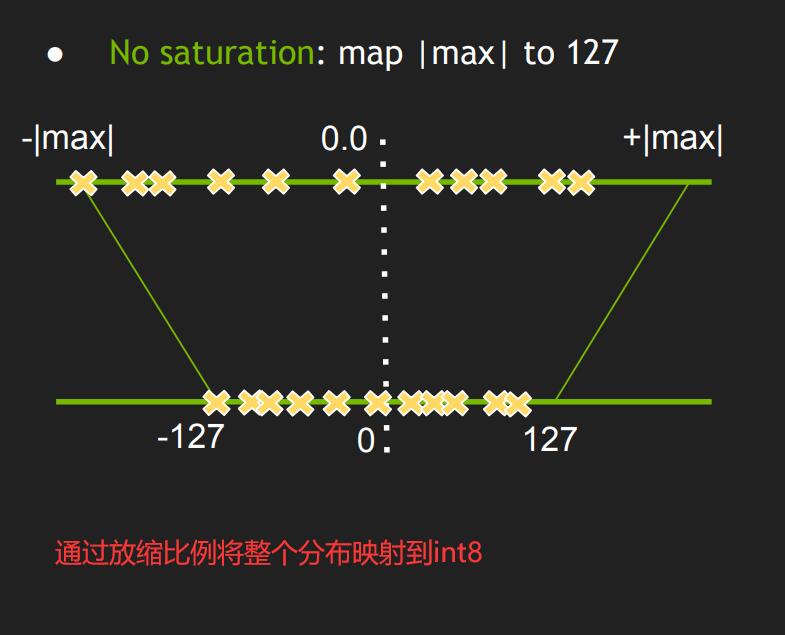

- 对于to_int8的过程,并不是直接的线性缩放,而是经过KL散度计算最合适的截断点(最大、最小值),进而进行缩放,使 布尽可能小的被改变

- 可以参照这个地址:https://on-demand.gputechconf.com/gtc/2017/presentation/s7310-8-bit-inference-with-tensorrt.pdf

1.2 int8量化实现

tensorrt中实现int8量化,是在build模型时完成,主要包括两步:

-

- 继承和实现一个Calibrator, 用来读取标定图片,选取合适的范围将float32权重转变为int8权重。常用的Calibrator包括下面两类:

Int8EntropyCalibrator2

熵校准选择张量的比例因子来优化量化张量的信息论内容,通常会抑制分布中的异常值。这是当前推荐的熵校准器。默认情况下,校准发生在图层融合之前。推荐用于基于 CNN 的网络。

.jpg)Iint8MinMaxCalibrator

该校准器使用激活分布的整个范围来确定比例因子。它似乎更适合NLP任务。默认情况下,校准发生在图层融合之前。推荐用于NVIDIA BERT等网络。

-

-

build时

config->setFlag()需要配置nvinfer1::BuilderFlag::kINT8,并且配置config->setInt8Calibratorconfig->setFlag(nvinfer1::BuilderFlag::kINT8); shared_ptr<Int8EntropyCalibrator> calib(new Int8EntropyCalibrator({"kej.jpg"}, input_dims, preprocess)); config->setInt8Calibrator(calib.get());

-

下面是采用Int8EntropyCalibrator2量化resnet50的示例代码:

#include <NvInfer.h>

#include <NvOnnxParser.h>

#include <NvInferRuntime.h>

#include <cuda_runtime.h>

#include <iostream>

#include <stdio.h>

#include <memory>

#include <functional>

#include <vector>

#include <cassert>

#include <Shlwapi.h>

#include <fstream>

#include <opencv2/opencv.hpp>

#define checkRuntime(op) __check_cuda_runtime((op), #op, __FILE__, __LINE__)

bool __check_cuda_runtime(cudaError_t code, const char* op, const char* file, int line) {

if (code != cudaSuccess) {

const char* err_name = cudaGetErrorName(code);

const char* err_message = cudaGetErrorString(code);

printf("runtime error %s:%d %s failed. \n code = %s, message = %s\n", file, line, op, err_name, err_message);

return false;

}

return true;

}

inline const char* severity_string(nvinfer1::ILogger::Severity t) {

switch (t) {

case nvinfer1::ILogger::Severity::kINTERNAL_ERROR: return "internal_error";

case nvinfer1::ILogger::Severity::kERROR: return "error";

case nvinfer1::ILogger::Severity::kWARNING: return "warning";

case nvinfer1::ILogger::Severity::kINFO: return "info";

case nvinfer1::ILogger::Severity::kVERBOSE: return "verbose";

default: return "unknow";

}

}

class TRTLogger : public nvinfer1::ILogger {

public:

virtual void log(Severity severity, nvinfer1::AsciiChar const* msg) noexcept override {

if (severity <= Severity::kINFO) {

// 打印带颜色的字符,格式如下:

// printf("\033[47;33m打印的文本\033[0m");

// 其中 \033[ 是起始标记

// 47 是背景颜色

// ; 分隔符

// 33 文字颜色

// m 开始标记结束

// \033[0m 是终止标记

// 其中背景颜色或者文字颜色可不写

// 部分颜色代码 https://blog.csdn.net/ericbar/article/details/79652086

if (severity == Severity::kWARNING) {

printf("\033[33m%s: %s\033[0m\n", severity_string(severity), msg);

}

else if (severity <= Severity::kERROR) {

printf("\033[31m%s: %s\033[0m\n", severity_string(severity), msg);

}

else {

printf("%s: %s\n", severity_string(severity), msg);

}

}

}

};

typedef std::function<void(

int current, int count, const std::vector<std::string>& files, nvinfer1::Dims dims, float* ptensor

)> Int8Process;

// int8熵校准器:用于评估量化前后的分布改变

class Int8EntropyCalibrator :public nvinfer1::IInt8EntropyCalibrator2 {

public:

Int8EntropyCalibrator(const std::vector<std::string>& imagefiles, nvinfer1::Dims dims, const Int8Process& preprocess)

{

assert(preprocess != nullptr);

this->dims_ = dims;

this->allimgs_ = imagefiles;

this->preprocess_ = preprocess;

this->fromCalibratorData_ = false;

files_.resize(dims.d[0]); //dims.d[0]对应batch_size

}

// 这个构造函数,是允许从缓存数据中加载标定结果,这样不用重新读取图像处理

Int8EntropyCalibrator(const std::vector<uint8_t>& entropyCalibratorData, nvinfer1::Dims dims, const Int8Process& preprocess)

{

assert(preprocess != nullptr);

this->dims_ = dims;

this->entropyCalibratorData_ = entropyCalibratorData;

this->preprocess_ = preprocess;

this->fromCalibratorData_ = false;

files_.resize(dims.d[0]);

}

virtual ~Int8EntropyCalibrator()

{

if (tensor_host_ != nullptr) {

checkRuntime(cudaFreeHost(tensor_host_));

checkRuntime(cudaFree(tensor_device_));

tensor_host_ = nullptr;

tensor_device_ = nullptr;

}

}

// 想要按照多少的batch进行标定

int getBatchSize() const noexcept {

return dims_.d[0];

}

bool next() {

int batch_size = dims_.d[0];

if (cursor_ + batch_size > allimgs_.size()) {

return false;

}

for (int i = 0; i < batch_size; ++i) {

files_[i] = allimgs_[cursor_++];

}

if (tensor_host_ == nullptr) {

size_t volume = 1;

for (int j = 0; j < dims_.nbDims; ++j) {

volume *= dims_.d[j];

}

bytes_ = volume * sizeof(float);

checkRuntime(cudaMallocHost(&tensor_host_, bytes_));

checkRuntime(cudaMalloc(&tensor_device_, bytes_));

}

preprocess_(cursor_, allimgs_.size(), files_, dims_, tensor_host_);

checkRuntime(cudaMemcpy(tensor_device_, tensor_host_, bytes_, cudaMemcpyHostToDevice));

return true;

}

bool getBatch(void* bindings[], const char* names[], int nBindings) noexcept{

if (!next()) return false;

bindings[0] = tensor_device_;

return true;

}

const std::vector<uint8_t>& getEntropyCalibratorData() {

return entropyCalibratorData_;

}

const void* readCalibrationCache(size_t& length) noexcept {

if (fromCalibratorData_) {

length = this->entropyCalibratorData_.size();

return this->entropyCalibratorData_.data();

}

return nullptr;

}

virtual void writeCalibrationCache(const void* cache, size_t length) noexcept{

entropyCalibratorData_.assign((uint8_t*)cache, (uint8_t*)cache + length);

}

private:

Int8Process preprocess_;

std::vector<std::string> allimgs_;

size_t batchCudaSize_ = 0;

int cursor_ = 0;

size_t bytes_ = 0;

nvinfer1::Dims dims_;

std::vector<std::string> files_;

float* tensor_host_ = nullptr;

float* tensor_device_ = nullptr;

std::vector<uint8_t> entropyCalibratorData_;

bool fromCalibratorData_ = false;

};

// 通过智能指针管理nv返回的指针参数

// 内存自动释放,避免泄漏

template<typename _T>

static std::shared_ptr<_T> make_nvshared(_T* ptr) {

return std::shared_ptr<_T>(ptr, [](_T* p) {p->destroy(); });

}

static bool exists(const std::string& path) {

#ifdef _WIN32

return ::PathFileExistsA(path.c_str()); // <Shlwapi.h>头文件中

#else

return access(path.c_str(), R_OK) == 0;

#endif // !_WIN32

}

bool build_model() {

//if (exists("resnet18_classifier.trtmodel")) {

//printf("resnet18_classifier.trtmodel has exists.\n");

//return true;

//}

TRTLogger logger;

auto builder = make_nvshared(nvinfer1::createInferBuilder(logger));

auto config = make_nvshared(builder->createBuilderConfig());

// createNetworkV2(1)表示采用显性batch size,新版tensorRT(>=7.0)时,不建议采用0非显性batch size

// 因此贯穿以后,请都采用createNetworkV2(1)而非createNetworkV2(0)或者createNetwork

auto network = make_nvshared(builder->createNetworkV2(1));

auto parser = make_nvshared(nvonnxparser::createParser(*network, logger));

if (!parser->parseFromFile("resnet18_classifier.onnx", 1)) {

printf("parse onnx file failed\n");

return false;

}

int maxBatchSize = 10;

printf("Workspace Size = %.2f MB\n", (1 << 28) / 1024.0f / 1024.0f);

config->setMaxWorkspaceSize(1 << 28);

auto profile = builder->createOptimizationProfile();

auto input_tensor = network->getInput(0);

auto input_dims = input_tensor->getDimensions();

input_dims.d[0] = 1;

// 设置int8量化

config->setFlag(nvinfer1::BuilderFlag::kINT8);

auto preprocess = [](

int current, int count, const std::vector<std::string>& files,

nvinfer1::Dims dims, float* ptensor

){

printf("Preprocess %d / %d\n", count, current);

// 标定所采用的数据预处理必须与推理时一样

int width = dims.d[3];

int height = dims.d[2];

float mean[] = { 0.406, 0.456, 0.485 };

float std[] = { 0.225, 0.224, 0.229 };

for (int i = 0; i < files.size(); ++i) {

auto image = cv::imread(files[i]);

cv::resize(image, image, cv::Size(width, height));

int image_area = width * height;

unsigned char* pimage = image.data;

float* phost_b = ptensor + image_area * 0;

float* phost_g = ptensor + image_area * 1;

float* phost_r = ptensor + image_area * 2;

for (int j = 0; j < image_area; ++j, pimage += 3) {

// 注意这里的顺序rgb调换了

*phost_r++ = (pimage[0] / 255.0 - mean[0]) / std[0];

*phost_g++ = (pimage[1] / 255.0 - mean[1]) / std[1];

*phost_b++ = (pimage[2] / 255.0 - mean[2]) / std[2];

}

ptensor += image_area * 3;

}

};

// 配置int8标定数据读取工具

std::shared_ptr<Int8EntropyCalibrator> calibrator(

new Int8EntropyCalibrator({ "kej.jpg" }, input_dims, preprocess)

);

config->setInt8Calibrator(calibrator.get());

// 配置最小允许batch

input_dims.d[0] = 1;

profile->setDimensions(input_tensor->getName(), nvinfer1::OptProfileSelector::kMIN, input_dims);

profile->setDimensions(input_tensor->getName(), nvinfer1::OptProfileSelector::kOPT, input_dims);

// 配置最大允许batch

// if networkDims.d[i] != -1, then minDims.d[i] == optDims.d[i] == maxDims.d[i] == networkDims.d[i]

input_dims.d[0] = maxBatchSize;

profile->setDimensions(input_tensor->getName(), nvinfer1::OptProfileSelector::kMAX, input_dims);

config->addOptimizationProfile(profile);

auto engine = make_nvshared(builder->buildEngineWithConfig(*network, *config));

if (engine == nullptr) {

printf("build engine falied\n");

return false;

}

auto model_data = make_nvshared(engine->serialize());

FILE* f = fopen("resnet18_classifier.trtmodel", "wb");

fwrite(model_data->data(), 1, model_data->size(), f);

fclose(f);

f = fopen("calib.txt", "wb");

auto calib_data = calibrator->getEntropyCalibratorData();

fwrite(calib_data.data(), 1, calib_data.size(), f);

fclose(f);

printf("Done\n");

return true;

}

std::vector<unsigned char> load_file(const std::string& file) {

std::ifstream in(file, std::ios::in | std::ios::binary);

if (!in.is_open()) {

return {};

}

in.seekg(0, std::ios::end);

size_t length = in.tellg();

std::vector<unsigned char> result;

if (length > 0) {

result.resize(length);

in.seekg(0, std::ios::beg);

in.read((char*)result.data(), length);

}

in.close();

return result;

}

std::vector<std::string> load_labels(const char* file) {

std::vector<std::string> lines;

std::ifstream in(file, std::ios::in | std::ios::binary);

if (!in.is_open()) {

printf("open %d failed.\n", file);

return lines;

}

std::string line;

while (std::getline(in, line)) {

lines.push_back(line);

}

in.close();

return lines;

}

void inference() {

TRTLogger logger;

auto runtime = make_nvshared(nvinfer1::createInferRuntime(logger));

auto model_data = load_file("resnet18_classifier.trtmodel");

auto engine = make_nvshared(runtime->deserializeCudaEngine(model_data.data(), model_data.size()));

if (engine == nullptr) {

printf("Deserialize cuda engine failed.\n");

return;

}

auto context = make_nvshared(engine->createExecutionContext());

cudaStream_t stream = nullptr;

checkRuntime(cudaStreamCreate(&stream));

int input_batch = 1;

int input_channel = 3;

int input_height = 224;

int input_width = 224;

int input_numel = input_batch * input_channel * input_height * input_width;

float* input_data_host = nullptr;

float* input_data_device = nullptr;

checkRuntime(cudaMallocHost(&input_data_host, input_numel * sizeof(float)));

checkRuntime(cudaMalloc(&input_data_device, input_numel * sizeof(float)));

// image to float

auto image = cv::imread("kej.jpg");

float mean[] = { 0.406, 0.456, 0.485 };

float std[] = { 0.225, 0.224, 0.229 };

cv::resize(image, image, cv::Size(input_width, input_height));

int image_area = image.cols * image.rows;

unsigned char* pimage = image.data;

float* phost_b = input_data_host + image_area * 0;

float* phost_g = input_data_host + image_area * 1;

float* phost_r = input_data_host + image_area * 2;

for (int i = 0; i < image_area; ++i, pimage += 3) {

// 注意这里的顺序rgb调换了

*phost_r++ = (pimage[0] / 255.0f - mean[0]) / std[0];

*phost_g++ = (pimage[1] / 255.0f - mean[1]) / std[1];

*phost_b++ = (pimage[2] / 255.0f - mean[2]) / std[2];

}

checkRuntime(cudaMemcpyAsync(input_data_device, input_data_host, input_numel * sizeof(float),

cudaMemcpyHostToDevice, stream));

// 3x3输入,对应3x3输出

const int num_classes = 1000;

float output_data_host[num_classes];

float* output_data_device = nullptr;

checkRuntime(cudaMalloc(&output_data_device, sizeof(output_data_host)));

// 明确当前推理时,使用的数据输入大小

auto input_dims = context->getBindingDimensions(0);

input_dims.d[0] = input_batch;

context->setBindingDimensions(0, input_dims);

float* bindings[] = { input_data_device, output_data_device };

bool success = context->enqueueV2((void**)bindings, stream, nullptr);

checkRuntime(cudaMemcpyAsync(output_data_host, output_data_device, sizeof(output_data_host),

cudaMemcpyDeviceToHost, stream));

checkRuntime(cudaStreamSynchronize(stream));

float* prob = output_data_host;

int predict_label = std::max_element(prob, prob + num_classes) - prob;

auto labels = load_labels("labels.imagenet.txt");

auto predict_name = labels[predict_label];

float confidence = prob[predict_label];

printf("Predict: %s, confidence = %f, label = %d\n", predict_name.c_str(), confidence, predict_label);

checkRuntime(cudaStreamDestroy(stream));

checkRuntime(cudaFreeHost(input_data_host));

checkRuntime(cudaFree(input_data_device));

checkRuntime(cudaFree(output_data_device));

}

int main() {

if (!build_model()) {

std::cin.get();

return -1;

}

inference();

std::cin.get();

return 0;

}