MTCNN人脸检测模型

mtcnn: Multitask

数据集:

Wider Face数据集:http://shuoyang1213.me/WIDERFACE/

CelebA数据集: http://mmlab.ie.cuhk.edu.hk/projects/CelebA.html

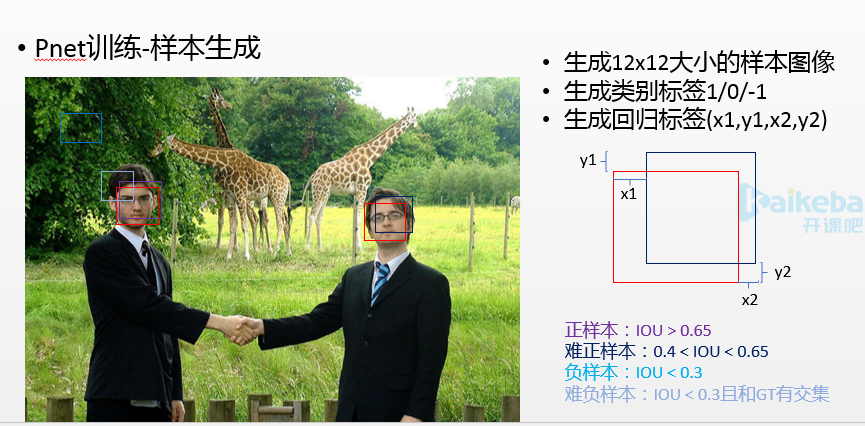

Pnet:

pnet网络:

import torch import torch.nn as nn import torch.nn.functional as F def weights_init(m): if isinstance(m, nn.Conv2d) or isinstance(m, nn.Linear): nn.init.xavier_uniform(m.weight.data) nn.init.constant(m.bias, 0.1) class LossFn: def __init__(self, cls_factor=1, box_factor=1, landmark_factor=1): # loss function self.cls_factor = cls_factor self.box_factor = box_factor self.land_factor = landmark_factor self.loss_cls = nn.BCELoss() # binary cross entropy self.loss_box = nn.MSELoss() # mean square error self.loss_landmark = nn.MSELoss() def cls_loss(self,gt_label,pred_label): pred_label = torch.squeeze(pred_label) gt_label = torch.squeeze(gt_label) # get the mask element which >= 0, only 0 and 1 can effect the detection loss mask = torch.ge(gt_label,0) valid_gt_label = torch.masked_select(gt_label,mask) valid_pred_label = torch.masked_select(pred_label,mask) return self.loss_cls(valid_pred_label,valid_gt_label)*self.cls_factor def box_loss(self,gt_label,gt_offset,pred_offset): pred_offset = torch.squeeze(pred_offset) gt_offset = torch.squeeze(gt_offset) gt_label = torch.squeeze(gt_label) #get the mask element which != 0 unmask = torch.eq(gt_label,0) mask = torch.eq(unmask,0) #convert mask to dim index chose_index = torch.nonzero(mask.data) chose_index = torch.squeeze(chose_index) #only valid element can effect the loss valid_gt_offset = gt_offset[chose_index,:] valid_pred_offset = pred_offset[chose_index,:] return self.loss_box(valid_pred_offset,valid_gt_offset)*self.box_factor def landmark_loss(self,gt_label,gt_landmark,pred_landmark): pred_landmark = torch.squeeze(pred_landmark) gt_landmark = torch.squeeze(gt_landmark) gt_label = torch.squeeze(gt_label) mask = torch.eq(gt_label,-2) chose_index = torch.nonzero(mask.data) chose_index = torch.squeeze(chose_index) valid_gt_landmark = gt_landmark[chose_index, :] valid_pred_landmark = pred_landmark[chose_index, :] return self.loss_landmark(valid_pred_landmark,valid_gt_landmark)*self.land_factor class PNet(nn.Module): ''' PNet ''' def __init__(self, is_train=False, use_cuda=True): super(PNet, self).__init__() self.is_train = is_train self.use_cuda = use_cuda # backend self.pre_layer = nn.Sequential( nn.Conv2d(3, 10, kernel_size=3, stride=1), # conv1 nn.PReLU(), # PReLU1 nn.MaxPool2d(kernel_size=2, stride=2), # pool1 nn.Conv2d(10, 16, kernel_size=3, stride=1), # conv2 nn.PReLU(), # PReLU2 nn.Conv2d(16, 32, kernel_size=3, stride=1), # conv3 nn.PReLU() # PReLU3 ) # detection self.conv4_1 = nn.Conv2d(32, 1, kernel_size=1, stride=1) # bounding box regresion self.conv4_2 = nn.Conv2d(32, 4, kernel_size=1, stride=1) # landmark localization self.conv4_3 = nn.Conv2d(32, 10, kernel_size=1, stride=1) # weight initiation with xavier self.apply(weights_init) def forward(self, x): x = self.pre_layer(x) label = F.sigmoid(self.conv4_1(x)) offset = self.conv4_2(x) # landmark = self.conv4_3(x) if self.is_train is True: # label_loss = LossUtil.label_loss(self.gt_label,torch.squeeze(label)) # bbox_loss = LossUtil.bbox_loss(self.gt_bbox,torch.squeeze(offset)) return label,offset #landmark = self.conv4_3(x) return label, offset class RNet(nn.Module): ''' RNet ''' def __init__(self,is_train=False, use_cuda=True): super(RNet, self).__init__() self.is_train = is_train self.use_cuda = use_cuda # backend self.pre_layer = nn.Sequential( nn.Conv2d(3, 28, kernel_size=3, stride=1), # conv1 nn.PReLU(), # prelu1 nn.MaxPool2d(kernel_size=3, stride=2), # pool1 nn.Conv2d(28, 48, kernel_size=3, stride=1), # conv2 nn.PReLU(), # prelu2 nn.MaxPool2d(kernel_size=3, stride=2), # pool2 nn.Conv2d(48, 64, kernel_size=2, stride=1), # conv3 nn.PReLU() # prelu3 ) self.conv4 = nn.Linear(64*2*2, 128) # conv4 self.prelu4 = nn.PReLU() # prelu4 # detection self.conv5_1 = nn.Linear(128, 1) # bounding box regression self.conv5_2 = nn.Linear(128, 4) # lanbmark localization self.conv5_3 = nn.Linear(128, 10) # weight initiation weih xavier self.apply(weights_init) def forward(self, x): # backend x = self.pre_layer(x) x = x.view(x.size(0), -1) x = self.conv4(x) x = self.prelu4(x) # detection det = torch.sigmoid(self.conv5_1(x)) box = self.conv5_2(x) # landmark = self.conv5_3(x) if self.is_train is True: return det, box #landmard = self.conv5_3(x) return det, box class ONet(nn.Module): ''' RNet ''' def __init__(self,is_train=False, use_cuda=True): super(ONet, self).__init__() self.is_train = is_train self.use_cuda = use_cuda # backend self.pre_layer = nn.Sequential( nn.Conv2d(3, 32, kernel_size=3, stride=1), # conv1 nn.PReLU(), # prelu1 nn.MaxPool2d(kernel_size=3, stride=2), # pool1 nn.Conv2d(32, 64, kernel_size=3, stride=1), # conv2 nn.PReLU(), # prelu2 nn.MaxPool2d(kernel_size=3, stride=2), # pool2 nn.Conv2d(64, 64, kernel_size=3, stride=1), # conv3 nn.PReLU(), # prelu3 nn.MaxPool2d(kernel_size=2,stride=2), # pool3 nn.Conv2d(64,128,kernel_size=2,stride=1), # conv4 nn.PReLU() # prelu4 ) self.conv5 = nn.Linear(128*2*2, 256) # conv5 self.prelu5 = nn.PReLU() # prelu5 # detection self.conv6_1 = nn.Linear(256, 1) # bounding box regression self.conv6_2 = nn.Linear(256, 4) # lanbmark localization self.conv6_3 = nn.Linear(256, 10) # weight initiation weih xavier self.apply(weights_init) def forward(self, x): # backend x = self.pre_layer(x) x = x.view(x.size(0), -1) x = self.conv5(x) x = self.prelu5(x) # detection det = torch.sigmoid(self.conv6_1(x)) box = self.conv6_2(x) landmark = self.conv6_3(x) if self.is_train is True: return det, box, landmark #landmard = self.conv5_3(x) return det, box, landmark # Residual Block class ResidualBlock(nn.Module): def __init__(self, in_channels, out_channels, stride=1, downsample=None): super(ResidualBlock, self).__init__() self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride) self.bn1 = nn.BatchNorm2d(out_channels) self.relu = nn.ReLU(inplace=True) self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=stride) self.bn2 = nn.BatchNorm2d(out_channels) self.downsample = downsample def forward(self, x): residual = x out = self.conv1(x) out = self.bn1(out) out = self.relu(out) out = self.conv2(out) out = self.bn2(out) if self.downsample: residual = self.downsample(x) out += residual out = self.relu(out) return out # ResNet Module class ResNet(nn.Module): def __init__(self, block, num_classes=10): super(ResNet, self).__init__() self.in_channels = 16 self.conv = nn.Conv2d(3, 16,kernel_size=3) self.bn = nn.BatchNorm2d(16) self.relu = nn.ReLU(inplace=True) self.layer1 = self.make_layer(block, 16, 3) self.layer2 = self.make_layer(block, 32, 3, 2) self.layer3 = self.make_layer(block, 64, 3, 2) self.avg_pool = nn.AvgPool2d(8) self.fc = nn.Linear(64, num_classes) def make_layer(self, block, out_channels, blocks, stride=1): downsample = None if (stride != 1) or (self.in_channels != out_channels): downsample = nn.Sequential( nn.Conv2d(self.in_channels, out_channels, kernel_size=3, stride=stride), nn.BatchNorm2d(out_channels)) layers = [] layers.append(block(self.in_channels, out_channels, stride, downsample)) self.in_channels = out_channels for i in range(1, blocks): layers.append(block(out_channels, out_channels)) return nn.Sequential(*layers) def forward(self, x): out = self.conv(x) out = self.bn(out) out = self.relu(out) out = self.layer1(out) out = self.layer2(out) out = self.layer3(out) out = self.avg_pool(out) out = out.view(out.size(0), -1) out = self.fc(out) return out

产生样本代码:

""" 2018-10-20 15:50:20 generate positive, negative, positive images whose size are 12*12 and feed into PNet """ import sys import numpy as np import cv2 import os sys.path.append(os.getcwd()) import numpy as np from mtcnn.data_preprocess.utils import IoU prefix = '' anno_file = "./anno_store/anno_train.txt" im_dir = "./data_set/face_detection/WIDERFACE/WIDER_train/WIDER_train/images" pos_save_dir = "./data_set/train/12/positive" part_save_dir = "./data_set/train/12/part" neg_save_dir = './data_set/train/12/negative' if not os.path.exists(pos_save_dir): os.mkdir(pos_save_dir) if not os.path.exists(part_save_dir): os.mkdir(part_save_dir) if not os.path.exists(neg_save_dir): os.mkdir(neg_save_dir) # store labels of positive, negative, part images f1 = open(os.path.join('./anno_store', 'pos_12.txt'), 'w') f2 = open(os.path.join('./anno_store', 'neg_12.txt'), 'w') f3 = open(os.path.join('./anno_store', 'part_12.txt'), 'w') # anno_file: store labels of the wider face training data with open(anno_file, 'r') as f: annotations = f.readlines() num = len(annotations) print("%d pics in total" % num) p_idx = 0 # positive n_idx = 0 # negative d_idx = 0 # dont care idx = 0 box_idx = 0 for annotation in annotations: annotation = annotation.strip().split(' ') im_path = os.path.join(prefix, annotation[0]) print(im_path) bbox = list(map(float, annotation[1:])) boxes = np.array(bbox, dtype=np.int32).reshape(-1, 4) img = cv2.imread(im_path) idx += 1 if idx % 100 == 0: print(idx, "images done") height, width, channel = img.shape neg_num = 0 while neg_num < 50: size = np.random.randint(12, min(width, height) / 2) nx = np.random.randint(0, width - size) ny = np.random.randint(0, height - size) crop_box = np.array([nx, ny, nx + size, ny + size]) Iou = IoU(crop_box, boxes) cropped_im = img[ny: ny + size, nx: nx + size, :] resized_im = cv2.resize(cropped_im, (12, 12), interpolation=cv2.INTER_LINEAR) if np.max(Iou) < 0.3: # Iou with all gts must below 0.3 save_file = os.path.join(neg_save_dir, "%s.jpg" % n_idx) f2.write(save_file + ' 0\n') cv2.imwrite(save_file, resized_im) n_idx += 1 neg_num += 1 for box in boxes: # box (x_left, y_top, x_right, y_bottom) x1, y1, x2, y2 = box # w = x2 - x1 + 1 # h = y2 - y1 + 1 w = x2 - x1 + 1 h = y2 - y1 + 1 # ignore small faces # in case the ground truth boxes of small faces are not accurate if max(w, h) < 40 or x1 < 0 or y1 < 0: continue # generate negative examples that have overlap with gt for i in range(5): size = np.random.randint(12, min(width, height) / 2) # delta_x and delta_y are offsets of (x1, y1) delta_x = np.random.randint(max(-size, -x1), w) delta_y = np.random.randint(max(-size, -y1), h) nx1 = max(0, x1 + delta_x) ny1 = max(0, y1 + delta_y) if nx1 + size > width or ny1 + size > height: continue crop_box = np.array([nx1, ny1, nx1 + size, ny1 + size]) Iou = IoU(crop_box, boxes) cropped_im = img[ny1: ny1 + size, nx1: nx1 + size, :] resized_im = cv2.resize(cropped_im, (12, 12), interpolation=cv2.INTER_LINEAR) if np.max(Iou) < 0.3: # Iou with all gts must below 0.3 save_file = os.path.join(neg_save_dir, "%s.jpg" % n_idx) f2.write(save_file + ' 0\n') cv2.imwrite(save_file, resized_im) n_idx += 1 # generate positive examples and part faces for i in range(20): size = np.random.randint(int(min(w, h) * 0.8), np.ceil(1.25 * max(w, h))) # delta here is the offset of box center delta_x = np.random.randint(-w * 0.2, w * 0.2) delta_y = np.random.randint(-h * 0.2, h * 0.2) nx1 = max(x1 + w / 2 + delta_x - size / 2, 0) ny1 = max(y1 + h / 2 + delta_y - size / 2, 0) nx2 = nx1 + size ny2 = ny1 + size if nx2 > width or ny2 > height: continue crop_box = np.array([nx1, ny1, nx2, ny2]) offset_x1 = (x1 - nx1) / float(size) offset_y1 = (y1 - ny1) / float(size) offset_x2 = (x2 - nx2) / float(size) offset_y2 = (y2 - ny2) / float(size) cropped_im = img[int(ny1): int(ny2), int(nx1): int(nx2), :] resized_im = cv2.resize(cropped_im, (12, 12), interpolation=cv2.INTER_LINEAR) box_ = box.reshape(1, -1) if IoU(crop_box, box_) >= 0.65: save_file = os.path.join(pos_save_dir, "%s.jpg" % p_idx) f1.write(save_file + ' 1 %.2f %.2f %.2f %.2f\n' % (offset_x1, offset_y1, offset_x2, offset_y2)) cv2.imwrite(save_file, resized_im) p_idx += 1 elif IoU(crop_box, box_) >= 0.4: save_file = os.path.join(part_save_dir, "%s.jpg" % d_idx) f3.write(save_file + ' -1 %.2f %.2f %.2f %.2f\n' % (offset_x1, offset_y1, offset_x2, offset_y2)) cv2.imwrite(save_file, resized_im) d_idx += 1 box_idx += 1 print("%s images done, pos: %s part: %s neg: %s" % (idx, p_idx, d_idx, n_idx)) f1.close() f2.close() f3.close()

正负样本组合代码:

import os import sys sys.path.append(os.getcwd()) import mtcnn.data_preprocess.assemble as assemble pnet_postive_file = './anno_store/pos_12.txt' pnet_part_file = './anno_store/part_12.txt' pnet_neg_file = './anno_store/neg_12.txt' pnet_landmark_file = './anno_store/landmark_12.txt' imglist_filename = './anno_store/imglist_anno_12.txt' if __name__ == '__main__': anno_list = [] anno_list.append(pnet_postive_file) anno_list.append(pnet_part_file) anno_list.append(pnet_neg_file) # anno_list.append(pnet_landmark_file) chose_count = assemble.assemble_data(imglist_filename ,anno_list) print("PNet train annotation result file path:%s" % imglist_filename)

import os import numpy.random as npr import numpy as np def assemble_data(output_file, anno_file_list=[]): #assemble the pos, neg, part annotations to one file size = 12 if len(anno_file_list)==0: return 0 if os.path.exists(output_file): os.remove(output_file) for anno_file in anno_file_list: with open(anno_file, 'r') as f: print(anno_file) anno_lines = f.readlines() base_num = 250000 if len(anno_lines) > base_num * 3: idx_keep = npr.choice(len(anno_lines), size=base_num * 3, replace=True) elif len(anno_lines) > 100000: idx_keep = npr.choice(len(anno_lines), size=len(anno_lines), replace=True) else: idx_keep = np.arange(len(anno_lines)) np.random.shuffle(idx_keep) chose_count = 0 with open(output_file, 'a+') as f: for idx in idx_keep: # write lables of pos, neg, part images f.write(anno_lines[idx]) chose_count+=1 return chose_count

训练代码:

import argparse import sys import os sys.path.append(os.getcwd()) from mtcnn.core.imagedb import ImageDB from mtcnn.train_net.train import train_pnet import mtcnn.config as config annotation_file = './anno_store/imglist_anno_12.txt' model_store_path = './model_store' end_epoch = 10 frequent = 200 lr = 0.01 batch_size = 512 use_cuda = True def train_net(annotation_file, model_store_path, end_epoch=16, frequent=200, lr=0.01, batch_size=128, use_cuda=False): imagedb = ImageDB(annotation_file) gt_imdb = imagedb.load_imdb() gt_imdb = imagedb.append_flipped_images(gt_imdb) train_pnet(model_store_path=model_store_path, end_epoch=end_epoch, imdb=gt_imdb, batch_size=batch_size, frequent=frequent, base_lr=lr, use_cuda=use_cuda) def parse_args(): parser = argparse.ArgumentParser(description='Train PNet', formatter_class=argparse.ArgumentDefaultsHelpFormatter) parser.add_argument('--anno_file', dest='annotation_file', default=os.path.join(config.ANNO_STORE_DIR,config.PNET_TRAIN_IMGLIST_FILENAME), help='training data annotation file', type=str) parser.add_argument('--model_path', dest='model_store_path', help='training model store directory', default=config.MODEL_STORE_DIR, type=str) parser.add_argument('--end_epoch', dest='end_epoch', help='end epoch of training', default=config.END_EPOCH, type=int) parser.add_argument('--frequent', dest='frequent', help='frequency of logging', default=200, type=int) parser.add_argument('--lr', dest='lr', help='learning rate', default=config.TRAIN_LR, type=float) parser.add_argument('--batch_size', dest='batch_size', help='train batch size', default=config.TRAIN_BATCH_SIZE, type=int) parser.add_argument('--gpu', dest='use_cuda', help='train with gpu', default=config.USE_CUDA, type=bool) parser.add_argument('--prefix_path', dest='', help='training data annotation images prefix root path', type=str) args = parser.parse_args() return args if __name__ == '__main__': # args = parse_args() print('train Pnet argument:') # print(args) train_net(annotation_file, model_store_path, end_epoch, frequent, lr, batch_size, use_cuda) # train_net(annotation_file=args.annotation_file, model_store_path=args.model_store_path, # end_epoch=args.end_epoch, frequent=args.frequent, lr=args.lr, batch_size=args.batch_size, use_cuda=args.use_cuda)

from mtcnn.core.image_reader import TrainImageReader import datetime import os from mtcnn.core.models import PNet,RNet,ONet,LossFn import torch from torch.autograd import Variable import mtcnn.core.image_tools as image_tools import numpy as np def compute_accuracy(prob_cls, gt_cls): prob_cls = torch.squeeze(prob_cls) gt_cls = torch.squeeze(gt_cls) #we only need the detection which >= 0 mask = torch.ge(gt_cls,0) #get valid element valid_gt_cls = torch.masked_select(gt_cls,mask) valid_prob_cls = torch.masked_select(prob_cls,mask) size = min(valid_gt_cls.size()[0], valid_prob_cls.size()[0]) prob_ones = torch.ge(valid_prob_cls,0.6).float() right_ones = torch.eq(prob_ones,valid_gt_cls).float() ## if size == 0 meaning that your gt_labels are all negative, landmark or part return torch.div(torch.mul(torch.sum(right_ones),float(1.0)),float(size)) ## divided by zero meaning that your gt_labels are all negative, landmark or part def train_pnet(model_store_path, end_epoch,imdb, batch_size,frequent=10,base_lr=0.01,use_cuda=True): if not os.path.exists(model_store_path): os.makedirs(model_store_path) lossfn = LossFn() net = PNet(is_train=True, use_cuda=use_cuda) net.train() if use_cuda: net.cuda() optimizer = torch.optim.Adam(net.parameters(), lr=base_lr) train_data=TrainImageReader(imdb,12,batch_size,shuffle=True) frequent = 10 for cur_epoch in range(1,end_epoch+1): train_data.reset() # shuffle for batch_idx,(image,(gt_label,gt_bbox,gt_landmark))in enumerate(train_data): im_tensor = [ image_tools.convert_image_to_tensor(image[i,:,:,:]) for i in range(image.shape[0]) ] im_tensor = torch.stack(im_tensor) im_tensor = Variable(im_tensor) gt_label = Variable(torch.from_numpy(gt_label).float()) gt_bbox = Variable(torch.from_numpy(gt_bbox).float()) # gt_landmark = Variable(torch.from_numpy(gt_landmark).float()) if use_cuda: im_tensor = im_tensor.cuda() gt_label = gt_label.cuda() gt_bbox = gt_bbox.cuda() # gt_landmark = gt_landmark.cuda() cls_pred, box_offset_pred = net(im_tensor) # all_loss, cls_loss, offset_loss = lossfn.loss(gt_label=label_y,gt_offset=bbox_y, pred_label=cls_pred, pred_offset=box_offset_pred) cls_loss = lossfn.cls_loss(gt_label,cls_pred) box_offset_loss = lossfn.box_loss(gt_label,gt_bbox,box_offset_pred) # landmark_loss = lossfn.landmark_loss(gt_label,gt_landmark,landmark_offset_pred) all_loss = cls_loss*1.0+box_offset_loss*0.5 if batch_idx %frequent==0: accuracy=compute_accuracy(cls_pred,gt_label) show1 = accuracy.data.cpu().numpy() show2 = cls_loss.data.cpu().numpy() show3 = box_offset_loss.data.cpu().numpy() # show4 = landmark_loss.data.cpu().numpy() show5 = all_loss.data.cpu().numpy() print("%s : Epoch: %d, Step: %d, accuracy: %s, det loss: %s, bbox loss: %s, all_loss: %s, lr:%s "%(datetime.datetime.now(),cur_epoch,batch_idx, show1,show2,show3,show5,base_lr)) optimizer.zero_grad() all_loss.backward() optimizer.step() torch.save(net.state_dict(), os.path.join(model_store_path,"pnet_epoch_%d.pt" % cur_epoch)) torch.save(net, os.path.join(model_store_path,"pnet_epoch_model_%d.pkl" % cur_epoch)) def train_rnet(model_store_path, end_epoch,imdb, batch_size,frequent=50,base_lr=0.01,use_cuda=True): if not os.path.exists(model_store_path): os.makedirs(model_store_path) lossfn = LossFn() net = RNet(is_train=True, use_cuda=use_cuda) net.train() if use_cuda: net.cuda() optimizer = torch.optim.Adam(net.parameters(), lr=base_lr) train_data=TrainImageReader(imdb,24,batch_size,shuffle=True) for cur_epoch in range(1,end_epoch+1): train_data.reset() for batch_idx,(image,(gt_label,gt_bbox,gt_landmark))in enumerate(train_data): im_tensor = [ image_tools.convert_image_to_tensor(image[i,:,:,:]) for i in range(image.shape[0]) ] im_tensor = torch.stack(im_tensor) im_tensor = Variable(im_tensor) gt_label = Variable(torch.from_numpy(gt_label).float()) gt_bbox = Variable(torch.from_numpy(gt_bbox).float()) gt_landmark = Variable(torch.from_numpy(gt_landmark).float()) if use_cuda: im_tensor = im_tensor.cuda() gt_label = gt_label.cuda() gt_bbox = gt_bbox.cuda() gt_landmark = gt_landmark.cuda() cls_pred, box_offset_pred = net(im_tensor) # all_loss, cls_loss, offset_loss = lossfn.loss(gt_label=label_y,gt_offset=bbox_y, pred_label=cls_pred, pred_offset=box_offset_pred) cls_loss = lossfn.cls_loss(gt_label,cls_pred) box_offset_loss = lossfn.box_loss(gt_label,gt_bbox,box_offset_pred) # landmark_loss = lossfn.landmark_loss(gt_label,gt_landmark,landmark_offset_pred) all_loss = cls_loss*1.0+box_offset_loss*0.5 if batch_idx%frequent==0: accuracy=compute_accuracy(cls_pred,gt_label) show1 = accuracy.data.cpu().numpy() show2 = cls_loss.data.cpu().numpy() show3 = box_offset_loss.data.cpu().numpy() # show4 = landmark_loss.data.cpu().numpy() show5 = all_loss.data.cpu().numpy() print("%s : Epoch: %d, Step: %d, accuracy: %s, det loss: %s, bbox loss: %s, all_loss: %s, lr:%s "%(datetime.datetime.now(), cur_epoch, batch_idx, show1, show2, show3, show5, base_lr)) optimizer.zero_grad() all_loss.backward() optimizer.step() torch.save(net.state_dict(), os.path.join(model_store_path,"rnet_epoch_%d.pt" % cur_epoch)) torch.save(net, os.path.join(model_store_path,"rnet_epoch_model_%d.pkl" % cur_epoch)) def train_onet(model_store_path, end_epoch,imdb, batch_size,frequent=50,base_lr=0.01,use_cuda=True): if not os.path.exists(model_store_path): os.makedirs(model_store_path) lossfn = LossFn() net = ONet(is_train=True) net.train() print(use_cuda) if use_cuda: net.cuda() optimizer = torch.optim.Adam(net.parameters(), lr=base_lr) train_data=TrainImageReader(imdb,48,batch_size,shuffle=True) for cur_epoch in range(1,end_epoch+1): train_data.reset() for batch_idx,(image,(gt_label,gt_bbox,gt_landmark))in enumerate(train_data): # print("batch id {0}".format(batch_idx)) im_tensor = [ image_tools.convert_image_to_tensor(image[i,:,:,:]) for i in range(image.shape[0]) ] im_tensor = torch.stack(im_tensor) im_tensor = Variable(im_tensor) gt_label = Variable(torch.from_numpy(gt_label).float()) gt_bbox = Variable(torch.from_numpy(gt_bbox).float()) gt_landmark = Variable(torch.from_numpy(gt_landmark).float()) if use_cuda: im_tensor = im_tensor.cuda() gt_label = gt_label.cuda() gt_bbox = gt_bbox.cuda() gt_landmark = gt_landmark.cuda() cls_pred, box_offset_pred, landmark_offset_pred = net(im_tensor) # all_loss, cls_loss, offset_loss = lossfn.loss(gt_label=label_y,gt_offset=bbox_y, pred_label=cls_pred, pred_offset=box_offset_pred) cls_loss = lossfn.cls_loss(gt_label,cls_pred) box_offset_loss = lossfn.box_loss(gt_label,gt_bbox,box_offset_pred) landmark_loss = lossfn.landmark_loss(gt_label,gt_landmark,landmark_offset_pred) all_loss = cls_loss*0.8+box_offset_loss*0.6+landmark_loss*1.5 if batch_idx%frequent==0: accuracy=compute_accuracy(cls_pred,gt_label) show1 = accuracy.data.cpu().numpy() show2 = cls_loss.data.cpu().numpy() show3 = box_offset_loss.data.cpu().numpy() show4 = landmark_loss.data.cpu().numpy() show5 = all_loss.data.cpu().numpy() print("%s : Epoch: %d, Step: %d, accuracy: %s, det loss: %s, bbox loss: %s, landmark loss: %s, all_loss: %s, lr:%s "%(datetime.datetime.now(),cur_epoch,batch_idx, show1,show2,show3,show4,show5,base_lr)) optimizer.zero_grad() all_loss.backward() optimizer.step() torch.save(net.state_dict(), os.path.join(model_store_path,"onet_epoch_%d.pt" % cur_epoch)) torch.save(net, os.path.join(model_store_path,"onet_epoch_model_%d.pkl" % cur_epoch))

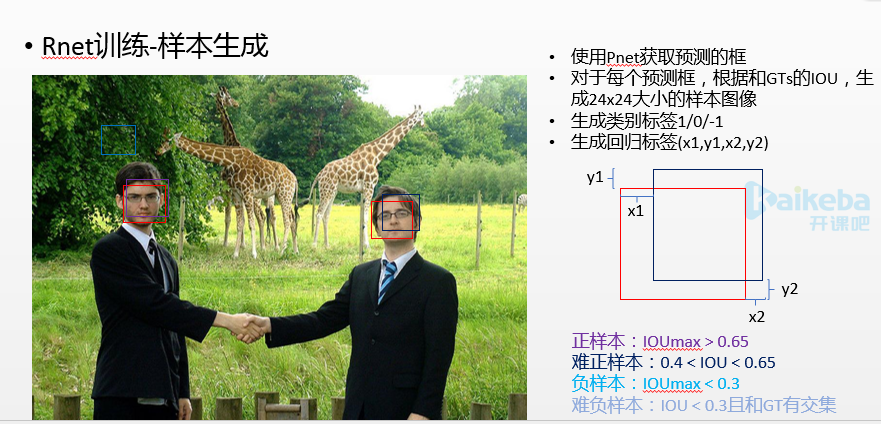

Rnet:

Rnet model:

import torch import torch.nn as nn import torch.nn.functional as F def weights_init(m): if isinstance(m, nn.Conv2d) or isinstance(m, nn.Linear): nn.init.xavier_uniform(m.weight.data) nn.init.constant(m.bias, 0.1) class LossFn: def __init__(self, cls_factor=1, box_factor=1, landmark_factor=1): # loss function self.cls_factor = cls_factor self.box_factor = box_factor self.land_factor = landmark_factor self.loss_cls = nn.BCELoss() # binary cross entropy self.loss_box = nn.MSELoss() # mean square error self.loss_landmark = nn.MSELoss() def cls_loss(self,gt_label,pred_label): pred_label = torch.squeeze(pred_label) gt_label = torch.squeeze(gt_label) # get the mask element which >= 0, only 0 and 1 can effect the detection loss mask = torch.ge(gt_label,0) valid_gt_label = torch.masked_select(gt_label,mask) valid_pred_label = torch.masked_select(pred_label,mask) return self.loss_cls(valid_pred_label,valid_gt_label)*self.cls_factor def box_loss(self,gt_label,gt_offset,pred_offset): pred_offset = torch.squeeze(pred_offset) gt_offset = torch.squeeze(gt_offset) gt_label = torch.squeeze(gt_label) #get the mask element which != 0 unmask = torch.eq(gt_label,0) mask = torch.eq(unmask,0) #convert mask to dim index chose_index = torch.nonzero(mask.data) chose_index = torch.squeeze(chose_index) #only valid element can effect the loss valid_gt_offset = gt_offset[chose_index,:] valid_pred_offset = pred_offset[chose_index,:] return self.loss_box(valid_pred_offset,valid_gt_offset)*self.box_factor def landmark_loss(self,gt_label,gt_landmark,pred_landmark): pred_landmark = torch.squeeze(pred_landmark) gt_landmark = torch.squeeze(gt_landmark) gt_label = torch.squeeze(gt_label) mask = torch.eq(gt_label,-2) chose_index = torch.nonzero(mask.data) chose_index = torch.squeeze(chose_index) valid_gt_landmark = gt_landmark[chose_index, :] valid_pred_landmark = pred_landmark[chose_index, :] return self.loss_landmark(valid_pred_landmark,valid_gt_landmark)*self.land_factor class PNet(nn.Module): ''' PNet ''' def __init__(self, is_train=False, use_cuda=True): super(PNet, self).__init__() self.is_train = is_train self.use_cuda = use_cuda # backend self.pre_layer = nn.Sequential( nn.Conv2d(3, 10, kernel_size=3, stride=1), # conv1 nn.PReLU(), # PReLU1 nn.MaxPool2d(kernel_size=2, stride=2), # pool1 nn.Conv2d(10, 16, kernel_size=3, stride=1), # conv2 nn.PReLU(), # PReLU2 nn.Conv2d(16, 32, kernel_size=3, stride=1), # conv3 nn.PReLU() # PReLU3 ) # detection self.conv4_1 = nn.Conv2d(32, 1, kernel_size=1, stride=1) # bounding box regresion self.conv4_2 = nn.Conv2d(32, 4, kernel_size=1, stride=1) # landmark localization self.conv4_3 = nn.Conv2d(32, 10, kernel_size=1, stride=1) # weight initiation with xavier self.apply(weights_init) def forward(self, x): x = self.pre_layer(x) label = F.sigmoid(self.conv4_1(x)) offset = self.conv4_2(x) # landmark = self.conv4_3(x) if self.is_train is True: # label_loss = LossUtil.label_loss(self.gt_label,torch.squeeze(label)) # bbox_loss = LossUtil.bbox_loss(self.gt_bbox,torch.squeeze(offset)) return label,offset #landmark = self.conv4_3(x) return label, offset class RNet(nn.Module): ''' RNet ''' def __init__(self,is_train=False, use_cuda=True): super(RNet, self).__init__() self.is_train = is_train self.use_cuda = use_cuda # backend self.pre_layer = nn.Sequential( nn.Conv2d(3, 28, kernel_size=3, stride=1), # conv1 nn.PReLU(), # prelu1 nn.MaxPool2d(kernel_size=3, stride=2), # pool1 nn.Conv2d(28, 48, kernel_size=3, stride=1), # conv2 nn.PReLU(), # prelu2 nn.MaxPool2d(kernel_size=3, stride=2), # pool2 nn.Conv2d(48, 64, kernel_size=2, stride=1), # conv3 nn.PReLU() # prelu3 ) self.conv4 = nn.Linear(64*2*2, 128) # conv4 self.prelu4 = nn.PReLU() # prelu4 # detection self.conv5_1 = nn.Linear(128, 1) # bounding box regression self.conv5_2 = nn.Linear(128, 4) # lanbmark localization self.conv5_3 = nn.Linear(128, 10) # weight initiation weih xavier self.apply(weights_init) def forward(self, x): # backend x = self.pre_layer(x) x = x.view(x.size(0), -1) x = self.conv4(x) x = self.prelu4(x) # detection det = torch.sigmoid(self.conv5_1(x)) box = self.conv5_2(x) # landmark = self.conv5_3(x) if self.is_train is True: return det, box #landmard = self.conv5_3(x) return det, box class ONet(nn.Module): ''' RNet ''' def __init__(self,is_train=False, use_cuda=True): super(ONet, self).__init__() self.is_train = is_train self.use_cuda = use_cuda # backend self.pre_layer = nn.Sequential( nn.Conv2d(3, 32, kernel_size=3, stride=1), # conv1 nn.PReLU(), # prelu1 nn.MaxPool2d(kernel_size=3, stride=2), # pool1 nn.Conv2d(32, 64, kernel_size=3, stride=1), # conv2 nn.PReLU(), # prelu2 nn.MaxPool2d(kernel_size=3, stride=2), # pool2 nn.Conv2d(64, 64, kernel_size=3, stride=1), # conv3 nn.PReLU(), # prelu3 nn.MaxPool2d(kernel_size=2,stride=2), # pool3 nn.Conv2d(64,128,kernel_size=2,stride=1), # conv4 nn.PReLU() # prelu4 ) self.conv5 = nn.Linear(128*2*2, 256) # conv5 self.prelu5 = nn.PReLU() # prelu5 # detection self.conv6_1 = nn.Linear(256, 1) # bounding box regression self.conv6_2 = nn.Linear(256, 4) # lanbmark localization self.conv6_3 = nn.Linear(256, 10) # weight initiation weih xavier self.apply(weights_init) def forward(self, x): # backend x = self.pre_layer(x) x = x.view(x.size(0), -1) x = self.conv5(x) x = self.prelu5(x) # detection det = torch.sigmoid(self.conv6_1(x)) box = self.conv6_2(x) landmark = self.conv6_3(x) if self.is_train is True: return det, box, landmark #landmard = self.conv5_3(x) return det, box, landmark # Residual Block class ResidualBlock(nn.Module): def __init__(self, in_channels, out_channels, stride=1, downsample=None): super(ResidualBlock, self).__init__() self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride) self.bn1 = nn.BatchNorm2d(out_channels) self.relu = nn.ReLU(inplace=True) self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=stride) self.bn2 = nn.BatchNorm2d(out_channels) self.downsample = downsample def forward(self, x): residual = x out = self.conv1(x) out = self.bn1(out) out = self.relu(out) out = self.conv2(out) out = self.bn2(out) if self.downsample: residual = self.downsample(x) out += residual out = self.relu(out) return out # ResNet Module class ResNet(nn.Module): def __init__(self, block, num_classes=10): super(ResNet, self).__init__() self.in_channels = 16 self.conv = nn.Conv2d(3, 16,kernel_size=3) self.bn = nn.BatchNorm2d(16) self.relu = nn.ReLU(inplace=True) self.layer1 = self.make_layer(block, 16, 3) self.layer2 = self.make_layer(block, 32, 3, 2) self.layer3 = self.make_layer(block, 64, 3, 2) self.avg_pool = nn.AvgPool2d(8) self.fc = nn.Linear(64, num_classes) def make_layer(self, block, out_channels, blocks, stride=1): downsample = None if (stride != 1) or (self.in_channels != out_channels): downsample = nn.Sequential( nn.Conv2d(self.in_channels, out_channels, kernel_size=3, stride=stride), nn.BatchNorm2d(out_channels)) layers = [] layers.append(block(self.in_channels, out_channels, stride, downsample)) self.in_channels = out_channels for i in range(1, blocks): layers.append(block(out_channels, out_channels)) return nn.Sequential(*layers) def forward(self, x): out = self.conv(x) out = self.bn(out) out = self.relu(out) out = self.layer1(out) out = self.layer2(out) out = self.layer3(out) out = self.avg_pool(out) out = out.view(out.size(0), -1) out = self.fc(out) return out

生成Rnet训练数据:

import argparse import cv2 import numpy as np import sys import os sys.path.append(os.getcwd()) from mtcnn.core.detect import MtcnnDetector,create_mtcnn_net from mtcnn.core.imagedb import ImageDB from mtcnn.core.image_reader import TestImageLoader import time from six.moves import cPickle from mtcnn.core.utils import convert_to_square,IoU import mtcnn.config as config import mtcnn.core.vision as vision prefix_path = '' traindata_store = './data_set/train' pnet_model_file = './model_store/pnet_epoch.pt' annotation_file = './anno_store/anno_train_test.txt' use_cuda = True def gen_rnet_data(data_dir, anno_file, pnet_model_file, prefix_path='', use_cuda=True, vis=False): """ :param data_dir: train data :param anno_file: :param pnet_model_file: :param prefix_path: :param use_cuda: :param vis: :return: """ # load trained pnet model pnet, _, _ = create_mtcnn_net(p_model_path=pnet_model_file, use_cuda=use_cuda) mtcnn_detector = MtcnnDetector(pnet=pnet,min_face_size=12) # load original_anno_file, length = 12880 imagedb = ImageDB(anno_file,mode="test",prefix_path=prefix_path) imdb = imagedb.load_imdb() image_reader = TestImageLoader(imdb,1,False) all_boxes = list() batch_idx = 0 print('size:%d' %image_reader.size) for databatch in image_reader: if batch_idx % 100 == 0: print ("%d images done" % batch_idx) im = databatch t = time.time() # obtain boxes and aligned boxes boxes, boxes_align = mtcnn_detector.detect_pnet(im=im) if boxes_align is None: all_boxes.append(np.array([])) batch_idx += 1 continue if vis: rgb_im = cv2.cvtColor(np.asarray(im), cv2.COLOR_BGR2RGB) vision.vis_two(rgb_im, boxes, boxes_align) t1 = time.time() - t t = time.time() all_boxes.append(boxes_align) batch_idx += 1 # if batch_idx == 100: # break # print("shape of all boxes {0}".format(all_boxes)) # time.sleep(5) # save_path = model_store_path() # './model_store' save_path = './model_store' if not os.path.exists(save_path): os.mkdir(save_path) save_file = os.path.join(save_path, "detections_%d.pkl" % int(time.time())) with open(save_file, 'wb') as f: cPickle.dump(all_boxes, f, cPickle.HIGHEST_PROTOCOL) gen_rnet_sample_data(data_dir, anno_file, save_file, prefix_path) def gen_rnet_sample_data(data_dir, anno_file, det_boxs_file, prefix_path): """ :param data_dir: :param anno_file: original annotations file of wider face data :param det_boxs_file: detection boxes file :param prefix_path: :return: """ neg_save_dir = os.path.join(data_dir, "24/negative") pos_save_dir = os.path.join(data_dir, "24/positive") part_save_dir = os.path.join(data_dir, "24/part") for dir_path in [neg_save_dir, pos_save_dir, part_save_dir]: # print(dir_path) if not os.path.exists(dir_path): os.makedirs(dir_path) # load ground truth from annotation file # format of each line: image/path [x1,y1,x2,y2] for each gt_box in this image with open(anno_file, 'r') as f: annotations = f.readlines() image_size = 24 net = "rnet" im_idx_list = list() gt_boxes_list = list() num_of_images = len(annotations) print ("processing %d images in total" % num_of_images) for annotation in annotations: annotation = annotation.strip().split(' ') im_idx = os.path.join(prefix_path,annotation[0]) # im_idx = annotation[0] boxes = list(map(float, annotation[1:])) boxes = np.array(boxes, dtype=np.float32).reshape(-1, 4) im_idx_list.append(im_idx) gt_boxes_list.append(boxes) # './anno_store' save_path = './anno_store' if not os.path.exists(save_path): os.makedirs(save_path) f1 = open(os.path.join(save_path, 'pos_%d.txt' % image_size), 'w') f2 = open(os.path.join(save_path, 'neg_%d.txt' % image_size), 'w') f3 = open(os.path.join(save_path, 'part_%d.txt' % image_size), 'w') # print(det_boxs_file) det_handle = open(det_boxs_file, 'rb') det_boxes = cPickle.load(det_handle) # an image contain many boxes stored in an array print(len(det_boxes), num_of_images) # assert len(det_boxes) == num_of_images, "incorrect detections or ground truths" # index of neg, pos and part face, used as their image names n_idx = 0 p_idx = 0 d_idx = 0 image_done = 0 for im_idx, dets, gts in zip(im_idx_list, det_boxes, gt_boxes_list): # if (im_idx+1) == 100: # break gts = np.array(gts, dtype=np.float32).reshape(-1, 4) if image_done % 100 == 0: print("%d images done" % image_done) image_done += 1 if dets.shape[0] == 0: continue img = cv2.imread(im_idx) # change to square dets = convert_to_square(dets) dets[:, 0:4] = np.round(dets[:, 0:4]) neg_num = 0 for box in dets: x_left, y_top, x_right, y_bottom, _ = box.astype(int) width = x_right - x_left + 1 height = y_bottom - y_top + 1 # ignore box that is too small or beyond image border if width < 20 or x_left < 0 or y_top < 0 or x_right > img.shape[1] - 1 or y_bottom > img.shape[0] - 1: continue # compute intersection over union(IoU) between current box and all gt boxes Iou = IoU(box, gts) cropped_im = img[y_top:y_bottom + 1, x_left:x_right + 1, :] resized_im = cv2.resize(cropped_im, (image_size, image_size), interpolation=cv2.INTER_LINEAR) # save negative images and write label # Iou with all gts must below 0.3 if np.max(Iou) < 0.3 and neg_num < 60: # save the examples save_file = os.path.join(neg_save_dir, "%s.jpg" % n_idx) # print(save_file) f2.write(save_file + ' 0\n') cv2.imwrite(save_file, resized_im) n_idx += 1 neg_num += 1 else: # find gt_box with the highest iou idx = np.argmax(Iou) assigned_gt = gts[idx] x1, y1, x2, y2 = assigned_gt # compute bbox reg label offset_x1 = (x1 - x_left) / float(width) offset_y1 = (y1 - y_top) / float(height) offset_x2 = (x2 - x_right) / float(width) offset_y2 = (y2 - y_bottom) / float(height) # save positive and part-face images and write labels if np.max(Iou) >= 0.65: save_file = os.path.join(pos_save_dir, "%s.jpg" % p_idx) f1.write(save_file + ' 1 %.2f %.2f %.2f %.2f\n' % ( offset_x1, offset_y1, offset_x2, offset_y2)) cv2.imwrite(save_file, resized_im) p_idx += 1 elif np.max(Iou) >= 0.4: save_file = os.path.join(part_save_dir, "%s.jpg" % d_idx) f3.write(save_file + ' -1 %.2f %.2f %.2f %.2f\n' % ( offset_x1, offset_y1, offset_x2, offset_y2)) cv2.imwrite(save_file, resized_im) d_idx += 1 f1.close() f2.close() f3.close() def model_store_path(): return os.path.dirname(os.path.dirname(os.path.dirname(os.path.realpath(__file__))))+"/model_store" if __name__ == '__main__': gen_rnet_data(traindata_store, annotation_file, pnet_model_file, prefix_path, use_cuda)

import cv2 import time import numpy as np import torch from torch.autograd.variable import Variable from mtcnn.core.models import PNet,RNet,ONet import mtcnn.core.utils as utils import mtcnn.core.image_tools as image_tools def create_mtcnn_net(p_model_path=None, r_model_path=None, o_model_path=None, use_cuda=True): pnet, rnet, onet = None, None, None if p_model_path is not None: pnet = PNet(use_cuda=use_cuda) if(use_cuda): print('p_model_path:{0}'.format(p_model_path)) pnet.load_state_dict(torch.load(p_model_path)) pnet.cuda() else: # forcing all GPU tensors to be in CPU while loading pnet.load_state_dict(torch.load(p_model_path, map_location=lambda storage, loc: storage)) pnet.eval() if r_model_path is not None: rnet = RNet(use_cuda=use_cuda) if (use_cuda): print('r_model_path:{0}'.format(r_model_path)) rnet.load_state_dict(torch.load(r_model_path)) rnet.cuda() else: rnet.load_state_dict(torch.load(r_model_path, map_location=lambda storage, loc: storage)) rnet.eval() if o_model_path is not None: onet = ONet(use_cuda=use_cuda) if (use_cuda): print('o_model_path:{0}'.format(o_model_path)) onet.load_state_dict(torch.load(o_model_path)) onet.cuda() else: onet.load_state_dict(torch.load(o_model_path, map_location=lambda storage, loc: storage)) onet.eval() return pnet,rnet,onet class MtcnnDetector(object): """ P,R,O net face detection and landmarks align """ def __init__(self, pnet = None, rnet = None, onet = None, min_face_size=12, stride=2, threshold=[0.6, 0.7, 0.7], scale_factor=0.709, ): self.pnet_detector = pnet self.rnet_detector = rnet self.onet_detector = onet self.min_face_size = min_face_size self.stride=stride self.thresh = threshold self.scale_factor = scale_factor def unique_image_format(self,im): if not isinstance(im,np.ndarray): if im.mode == 'I': im = np.array(im, np.int32, copy=False) elif im.mode == 'I;16': im = np.array(im, np.int16, copy=False) else: im = np.asarray(im) return im def square_bbox(self, bbox): """ convert bbox to square Parameters: ---------- bbox: numpy array , shape n x m input bbox Returns: ------- a square bbox """ square_bbox = bbox.copy() # x2 - x1 # y2 - y1 h = bbox[:, 3] - bbox[:, 1] + 1 w = bbox[:, 2] - bbox[:, 0] + 1 l = np.maximum(h,w) # x1 = x1 + w*0.5 - l*0.5 # y1 = y1 + h*0.5 - l*0.5 square_bbox[:, 0] = bbox[:, 0] + w*0.5 - l*0.5 square_bbox[:, 1] = bbox[:, 1] + h*0.5 - l*0.5 # x2 = x1 + l - 1 # y2 = y1 + l - 1 square_bbox[:, 2] = square_bbox[:, 0] + l - 1 square_bbox[:, 3] = square_bbox[:, 1] + l - 1 return square_bbox def generate_bounding_box(self, map, reg, scale, threshold): """ generate bbox from feature map Parameters: ---------- map: numpy array , n x m x 1 detect score for each position reg: numpy array , n x m x 4 bbox scale: float number scale of this detection threshold: float number detect threshold Returns: ------- bbox array """ stride = 2 cellsize = 12 # receptive field t_index = np.where(map > threshold) # print('shape of t_index:{0}'.format(len(t_index))) # print('t_index{0}'.format(t_index)) # time.sleep(5) # find nothing if t_index[0].size == 0: return np.array([]) # reg = (1, n, m, 4) # choose bounding box whose socre are larger than threshold dx1, dy1, dx2, dy2 = [reg[0, t_index[0], t_index[1], i] for i in range(4)] # print(dx1.shape) # time.sleep(5) reg = np.array([dx1, dy1, dx2, dy2]) # print('shape of reg{0}'.format(reg.shape)) # lefteye_dx, lefteye_dy, righteye_dx, righteye_dy, nose_dx, nose_dy, \ # leftmouth_dx, leftmouth_dy, rightmouth_dx, rightmouth_dy = [landmarks[0, t_index[0], t_index[1], i] for i in range(10)] # # landmarks = np.array([lefteye_dx, lefteye_dy, righteye_dx, righteye_dy, nose_dx, nose_dy, leftmouth_dx, leftmouth_dy, rightmouth_dx, rightmouth_dy]) # abtain score of classification which larger than threshold # t_index[0]: choose the first column of t_index # t_index[1]: choose the second column of t_index score = map[t_index[0], t_index[1], 0] # hence t_index[1] means column, t_index[1] is the value of x # hence t_index[0] means row, t_index[0] is the value of y boundingbox = np.vstack([np.round((stride * t_index[1]) / scale), # x1 of prediction box in original image np.round((stride * t_index[0]) / scale), # y1 of prediction box in original image np.round((stride * t_index[1] + cellsize) / scale), # x2 of prediction box in original image np.round((stride * t_index[0] + cellsize) / scale), # y2 of prediction box in original image # reconstruct the box in original image score, reg, # landmarks ]) return boundingbox.T def resize_image(self, img, scale): """ resize image and transform dimention to [batchsize, channel, height, width] Parameters: ---------- img: numpy array , height x width x channel input image, channels in BGR order here scale: float number scale factor of resize operation Returns: ------- transformed image tensor , 1 x channel x height x width """ height, width, channels = img.shape new_height = int(height * scale) # resized new height new_width = int(width * scale) # resized new width new_dim = (new_width, new_height) img_resized = cv2.resize(img, new_dim, interpolation=cv2.INTER_LINEAR) # resized image return img_resized def pad(self, bboxes, w, h): """ pad the the boxes Parameters: ---------- bboxes: numpy array, n x 5 input bboxes w: float number width of the input image h: float number height of the input image Returns : ------ dy, dx : numpy array, n x 1 start point of the bbox in target image edy, edx : numpy array, n x 1 end point of the bbox in target image y, x : numpy array, n x 1 start point of the bbox in original image ex, ex : numpy array, n x 1 end point of the bbox in original image tmph, tmpw: numpy array, n x 1 height and width of the bbox """ # width and height tmpw = (bboxes[:, 2] - bboxes[:, 0] + 1).astype(np.int32) tmph = (bboxes[:, 3] - bboxes[:, 1] + 1).astype(np.int32) numbox = bboxes.shape[0] dx = np.zeros((numbox, )) dy = np.zeros((numbox, )) edx, edy = tmpw.copy()-1, tmph.copy()-1 # x, y: start point of the bbox in original image # ex, ey: end point of the bbox in original image x, y, ex, ey = bboxes[:, 0], bboxes[:, 1], bboxes[:, 2], bboxes[:, 3] tmp_index = np.where(ex > w-1) edx[tmp_index] = tmpw[tmp_index] + w - 2 - ex[tmp_index] ex[tmp_index] = w - 1 tmp_index = np.where(ey > h-1) edy[tmp_index] = tmph[tmp_index] + h - 2 - ey[tmp_index] ey[tmp_index] = h - 1 tmp_index = np.where(x < 0) dx[tmp_index] = 0 - x[tmp_index] x[tmp_index] = 0 tmp_index = np.where(y < 0) dy[tmp_index] = 0 - y[tmp_index] y[tmp_index] = 0 return_list = [dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph] return_list = [item.astype(np.int32) for item in return_list] return return_list def detect_pnet(self, im): """Get face candidates through pnet Parameters: ---------- im: numpy array input image array one batch Returns: ------- boxes: numpy array detected boxes before calibration boxes_align: numpy array boxes after calibration """ # im = self.unique_image_format(im) # original wider face data h, w, c = im.shape net_size = 12 current_scale = float(net_size) / self.min_face_size # find initial scale # print('imgshape:{0}, current_scale:{1}'.format(im.shape, current_scale)) im_resized = self.resize_image(im, current_scale) # scale = 1.0 current_height, current_width, _ = im_resized.shape # fcn all_boxes = list() i = 0 while min(current_height, current_width) > net_size: # print(i) feed_imgs = [] image_tensor = image_tools.convert_image_to_tensor(im_resized) feed_imgs.append(image_tensor) feed_imgs = torch.stack(feed_imgs) feed_imgs = Variable(feed_imgs) if self.pnet_detector.use_cuda: feed_imgs = feed_imgs.cuda() # self.pnet_detector is a trained pnet torch model # receptive field is 12×12 # 12×12 --> score # 12×12 --> bounding box cls_map, reg = self.pnet_detector(feed_imgs) cls_map_np = image_tools.convert_chwTensor_to_hwcNumpy(cls_map.cpu()) reg_np = image_tools.convert_chwTensor_to_hwcNumpy(reg.cpu()) # print(cls_map_np.shape, reg_np.shape) # cls_map_np = (1, n, m, 1) reg_np.shape = (1, n, m 4) # time.sleep(5) # landmark_np = image_tools.convert_chwTensor_to_hwcNumpy(landmark.cpu()) # self.threshold[0] = 0.6 # print(cls_map_np[0,:,:].shape) # time.sleep(4) # boxes = [x1, y1, x2, y2, score, reg] boxes = self.generate_bounding_box(cls_map_np[ 0, :, :], reg_np, current_scale, self.thresh[0]) # generate pyramid images current_scale *= self.scale_factor # self.scale_factor = 0.709 im_resized = self.resize_image(im, current_scale) current_height, current_width, _ = im_resized.shape if boxes.size == 0: continue # non-maximum suppresion keep = utils.nms(boxes[:, :5], 0.5, 'Union') boxes = boxes[keep] # print(boxes.shape) all_boxes.append(boxes) # i+=1 if len(all_boxes) == 0: return None, None all_boxes = np.vstack(all_boxes) # print("shape of all boxes {0}".format(all_boxes.shape)) # time.sleep(5) # merge the detection from first stage keep = utils.nms(all_boxes[:, 0:5], 0.7, 'Union') all_boxes = all_boxes[keep] # boxes = all_boxes[:, :5] # x2 - x1 # y2 - y1 bw = all_boxes[:, 2] - all_boxes[:, 0] + 1 bh = all_boxes[:, 3] - all_boxes[:, 1] + 1 # landmark_keep = all_boxes[:, 9:].reshape((5,2)) boxes = np.vstack([all_boxes[:,0], all_boxes[:,1], all_boxes[:,2], all_boxes[:,3], all_boxes[:,4], # all_boxes[:, 0] + all_boxes[:, 9] * bw, # all_boxes[:, 1] + all_boxes[:,10] * bh, # all_boxes[:, 0] + all_boxes[:, 11] * bw, # all_boxes[:, 1] + all_boxes[:, 12] * bh, # all_boxes[:, 0] + all_boxes[:, 13] * bw, # all_boxes[:, 1] + all_boxes[:, 14] * bh, # all_boxes[:, 0] + all_boxes[:, 15] * bw, # all_boxes[:, 1] + all_boxes[:, 16] * bh, # all_boxes[:, 0] + all_boxes[:, 17] * bw, # all_boxes[:, 1] + all_boxes[:, 18] * bh ]) boxes = boxes.T # boxes = boxes = [x1, y1, x2, y2, score, reg] reg= [px1, py1, px2, py2] (in prediction) align_topx = all_boxes[:, 0] + all_boxes[:, 5] * bw align_topy = all_boxes[:, 1] + all_boxes[:, 6] * bh align_bottomx = all_boxes[:, 2] + all_boxes[:, 7] * bw align_bottomy = all_boxes[:, 3] + all_boxes[:, 8] * bh # refine the boxes boxes_align = np.vstack([ align_topx, align_topy, align_bottomx, align_bottomy, all_boxes[:, 4], # align_topx + all_boxes[:,9] * bw, # align_topy + all_boxes[:,10] * bh, # align_topx + all_boxes[:,11] * bw, # align_topy + all_boxes[:,12] * bh, # align_topx + all_boxes[:,13] * bw, # align_topy + all_boxes[:,14] * bh, # align_topx + all_boxes[:,15] * bw, # align_topy + all_boxes[:,16] * bh, # align_topx + all_boxes[:,17] * bw, # align_topy + all_boxes[:,18] * bh, ]) boxes_align = boxes_align.T return boxes, boxes_align def detect_rnet(self, im, dets): """Get face candidates using rnet Parameters: ---------- im: numpy array input image array dets: numpy array detection results of pnet Returns: ------- boxes: numpy array detected boxes before calibration boxes_align: numpy array boxes after calibration """ # im: an input image h, w, c = im.shape if dets is None: return None,None # (705, 5) = [x1, y1, x2, y2, score, reg] # print("pnet detection {0}".format(dets.shape)) # time.sleep(5) # return square boxes dets = self.square_bbox(dets) # rounds dets[:, 0:4] = np.round(dets[:, 0:4]) [dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph] = self.pad(dets, w, h) num_boxes = dets.shape[0] ''' # helper for setting RNet batch size batch_size = self.rnet_detector.batch_size ratio = float(num_boxes) / batch_size if ratio > 3 or ratio < 0.3: print "You may need to reset RNet batch size if this info appears frequently, \ face candidates:%d, current batch_size:%d"%(num_boxes, batch_size) ''' # cropped_ims_tensors = np.zeros((num_boxes, 3, 24, 24), dtype=np.float32) cropped_ims_tensors = [] for i in range(num_boxes): tmp = np.zeros((tmph[i], tmpw[i], 3), dtype=np.uint8) tmp[dy[i]:edy[i]+1, dx[i]:edx[i]+1, :] = im[y[i]:ey[i]+1, x[i]:ex[i]+1, :] crop_im = cv2.resize(tmp, (24, 24)) crop_im_tensor = image_tools.convert_image_to_tensor(crop_im) # cropped_ims_tensors[i, :, :, :] = crop_im_tensor cropped_ims_tensors.append(crop_im_tensor) feed_imgs = Variable(torch.stack(cropped_ims_tensors)) if self.rnet_detector.use_cuda: feed_imgs = feed_imgs.cuda() cls_map, reg = self.rnet_detector(feed_imgs) cls_map = cls_map.cpu().data.numpy() reg = reg.cpu().data.numpy() # landmark = landmark.cpu().data.numpy() keep_inds = np.where(cls_map > self.thresh[1])[0] if len(keep_inds) > 0: boxes = dets[keep_inds] cls = cls_map[keep_inds] reg = reg[keep_inds] # landmark = landmark[keep_inds] else: return None, None keep = utils.nms(boxes, 0.7) if len(keep) == 0: return None, None keep_cls = cls[keep] keep_boxes = boxes[keep] keep_reg = reg[keep] # keep_landmark = landmark[keep] bw = keep_boxes[:, 2] - keep_boxes[:, 0] + 1 bh = keep_boxes[:, 3] - keep_boxes[:, 1] + 1 boxes = np.vstack([ keep_boxes[:,0], keep_boxes[:,1], keep_boxes[:,2], keep_boxes[:,3], keep_cls[:,0], # keep_boxes[:,0] + keep_landmark[:, 0] * bw, # keep_boxes[:,1] + keep_landmark[:, 1] * bh, # keep_boxes[:,0] + keep_landmark[:, 2] * bw, # keep_boxes[:,1] + keep_landmark[:, 3] * bh, # keep_boxes[:,0] + keep_landmark[:, 4] * bw, # keep_boxes[:,1] + keep_landmark[:, 5] * bh, # keep_boxes[:,0] + keep_landmark[:, 6] * bw, # keep_boxes[:,1] + keep_landmark[:, 7] * bh, # keep_boxes[:,0] + keep_landmark[:, 8] * bw, # keep_boxes[:,1] + keep_landmark[:, 9] * bh, ]) align_topx = keep_boxes[:,0] + keep_reg[:,0] * bw align_topy = keep_boxes[:,1] + keep_reg[:,1] * bh align_bottomx = keep_boxes[:,2] + keep_reg[:,2] * bw align_bottomy = keep_boxes[:,3] + keep_reg[:,3] * bh boxes_align = np.vstack([align_topx, align_topy, align_bottomx, align_bottomy, keep_cls[:, 0], # align_topx + keep_landmark[:, 0] * bw, # align_topy + keep_landmark[:, 1] * bh, # align_topx + keep_landmark[:, 2] * bw, # align_topy + keep_landmark[:, 3] * bh, # align_topx + keep_landmark[:, 4] * bw, # align_topy + keep_landmark[:, 5] * bh, # align_topx + keep_landmark[:, 6] * bw, # align_topy + keep_landmark[:, 7] * bh, # align_topx + keep_landmark[:, 8] * bw, # align_topy + keep_landmark[:, 9] * bh, ]) boxes = boxes.T boxes_align = boxes_align.T return boxes, boxes_align def detect_onet(self, im, dets): """Get face candidates using onet Parameters: ---------- im: numpy array input image array dets: numpy array detection results of rnet Returns: ------- boxes_align: numpy array boxes after calibration landmarks_align: numpy array landmarks after calibration """ h, w, c = im.shape if dets is None: return None, None dets = self.square_bbox(dets) dets[:, 0:4] = np.round(dets[:, 0:4]) [dy, edy, dx, edx, y, ey, x, ex, tmpw, tmph] = self.pad(dets, w, h) num_boxes = dets.shape[0] # cropped_ims_tensors = np.zeros((num_boxes, 3, 24, 24), dtype=np.float32) cropped_ims_tensors = [] for i in range(num_boxes): tmp = np.zeros((tmph[i], tmpw[i], 3), dtype=np.uint8) # crop input image tmp[dy[i]:edy[i] + 1, dx[i]:edx[i] + 1, :] = im[y[i]:ey[i] + 1, x[i]:ex[i] + 1, :] crop_im = cv2.resize(tmp, (48, 48)) crop_im_tensor = image_tools.convert_image_to_tensor(crop_im) # cropped_ims_tensors[i, :, :, :] = crop_im_tensor cropped_ims_tensors.append(crop_im_tensor) feed_imgs = Variable(torch.stack(cropped_ims_tensors)) if self.rnet_detector.use_cuda: feed_imgs = feed_imgs.cuda() cls_map, reg, landmark = self.onet_detector(feed_imgs) cls_map = cls_map.cpu().data.numpy() reg = reg.cpu().data.numpy() landmark = landmark.cpu().data.numpy() keep_inds = np.where(cls_map > self.thresh[2])[0] if len(keep_inds) > 0: boxes = dets[keep_inds] cls = cls_map[keep_inds] reg = reg[keep_inds] landmark = landmark[keep_inds] else: return None, None keep = utils.nms(boxes, 0.7, mode="Minimum") if len(keep) == 0: return None, None keep_cls = cls[keep] keep_boxes = boxes[keep] keep_reg = reg[keep] keep_landmark = landmark[keep] bw = keep_boxes[:, 2] - keep_boxes[:, 0] + 1 bh = keep_boxes[:, 3] - keep_boxes[:, 1] + 1 align_topx = keep_boxes[:, 0] + keep_reg[:, 0] * bw align_topy = keep_boxes[:, 1] + keep_reg[:, 1] * bh align_bottomx = keep_boxes[:, 2] + keep_reg[:, 2] * bw align_bottomy = keep_boxes[:, 3] + keep_reg[:, 3] * bh align_landmark_topx = keep_boxes[:, 0] align_landmark_topy = keep_boxes[:, 1] boxes_align = np.vstack([align_topx, align_topy, align_bottomx, align_bottomy, keep_cls[:, 0], # align_topx + keep_landmark[:, 0] * bw, # align_topy + keep_landmark[:, 1] * bh, # align_topx + keep_landmark[:, 2] * bw, # align_topy + keep_landmark[:, 3] * bh, # align_topx + keep_landmark[:, 4] * bw, # align_topy + keep_landmark[:, 5] * bh, # align_topx + keep_landmark[:, 6] * bw, # align_topy + keep_landmark[:, 7] * bh, # align_topx + keep_landmark[:, 8] * bw, # align_topy + keep_landmark[:, 9] * bh, ]) boxes_align = boxes_align.T landmark = np.vstack([ align_landmark_topx + keep_landmark[:, 0] * bw, align_landmark_topy + keep_landmark[:, 1] * bh, align_landmark_topx + keep_landmark[:, 2] * bw, align_landmark_topy + keep_landmark[:, 3] * bh, align_landmark_topx + keep_landmark[:, 4] * bw, align_landmark_topy + keep_landmark[:, 5] * bh, align_landmark_topx + keep_landmark[:, 6] * bw, align_landmark_topy + keep_landmark[:, 7] * bh, align_landmark_topx + keep_landmark[:, 8] * bw, align_landmark_topy + keep_landmark[:, 9] * bh, ]) landmark_align = landmark.T return boxes_align, landmark_align def detect_face(self,img): """Detect face over image """ boxes_align = np.array([]) landmark_align =np.array([]) t = time.time() # pnet if self.pnet_detector: boxes, boxes_align = self.detect_pnet(img) if boxes_align is None: return np.array([]), np.array([]) t1 = time.time() - t t = time.time() # rnet if self.rnet_detector: boxes, boxes_align = self.detect_rnet(img, boxes_align) if boxes_align is None: return np.array([]), np.array([]) t2 = time.time() - t t = time.time() # onet if self.onet_detector: boxes_align, landmark_align = self.detect_onet(img, boxes_align) if boxes_align is None: return np.array([]), np.array([]) t3 = time.time() - t t = time.time() print("time cost " + '{:.3f}'.format(t1+t2+t3) + ' pnet {:.3f} rnet {:.3f} onet {:.3f}'.format(t1, t2, t3)) return boxes_align, landmark_align

正负样本:

import os import sys sys.path.append(os.getcwd()) import mtcnn.data_preprocess.assemble as assemble rnet_postive_file = './anno_store/pos_24.txt' rnet_part_file = './anno_store/part_24.txt' rnet_neg_file = './anno_store/neg_24.txt' rnet_landmark_file = './anno_store/landmark_24.txt' imglist_filename = './anno_store/imglist_anno_24.txt' if __name__ == '__main__': anno_list = [] anno_list.append(rnet_postive_file) anno_list.append(rnet_part_file) anno_list.append(rnet_neg_file) # anno_list.append(pnet_landmark_file) chose_count = assemble.assemble_data(imglist_filename ,anno_list) print("PNet train annotation result file path:%s" % imglist_filename)

import os import numpy.random as npr import numpy as np def assemble_data(output_file, anno_file_list=[]): #assemble the pos, neg, part annotations to one file size = 12 if len(anno_file_list)==0: return 0 if os.path.exists(output_file): os.remove(output_file) for anno_file in anno_file_list: with open(anno_file, 'r') as f: print(anno_file) anno_lines = f.readlines() base_num = 250000 if len(anno_lines) > base_num * 3: idx_keep = npr.choice(len(anno_lines), size=base_num * 3, replace=True) elif len(anno_lines) > 100000: idx_keep = npr.choice(len(anno_lines), size=len(anno_lines), replace=True) else: idx_keep = np.arange(len(anno_lines)) np.random.shuffle(idx_keep) chose_count = 0 with open(output_file, 'a+') as f: for idx in idx_keep: # write lables of pos, neg, part images f.write(anno_lines[idx]) chose_count+=1 return chose_count

训练:

import argparse import sys import os sys.path.append(os.getcwd()) from mtcnn.core.imagedb import ImageDB import mtcnn.train_net.train as train import mtcnn.config as config def train_net(annotation_file, model_store_path, end_epoch=16, frequent=200, lr=0.01, batch_size=128, use_cuda=False): imagedb = ImageDB(annotation_file) print(imagedb.num_images) gt_imdb = imagedb.load_imdb() gt_imdb = imagedb.append_flipped_images(gt_imdb) train.train_rnet(model_store_path=model_store_path, end_epoch=end_epoch, imdb=gt_imdb, batch_size=batch_size, frequent=frequent, base_lr=lr, use_cuda=use_cuda) def parse_args(): parser = argparse.ArgumentParser(description='Train RNet', formatter_class=argparse.ArgumentDefaultsHelpFormatter) parser.add_argument('--anno_file', dest='annotation_file', default=os.path.join(config.ANNO_STORE_DIR,config.RNET_TRAIN_IMGLIST_FILENAME), help='training data annotation file', type=str) parser.add_argument('--model_path', dest='model_store_path', help='training model store directory', default=config.MODEL_STORE_DIR, type=str) parser.add_argument('--end_epoch', dest='end_epoch', help='end epoch of training', default=config.END_EPOCH, type=int) parser.add_argument('--frequent', dest='frequent', help='frequency of logging', default=200, type=int) parser.add_argument('--lr', dest='lr', help='learning rate', default=config.TRAIN_LR, type=float) parser.add_argument('--batch_size', dest='batch_size', help='train batch size', default=config.TRAIN_BATCH_SIZE, type=int) parser.add_argument('--gpu', dest='use_cuda', help='train with gpu', default=config.USE_CUDA, type=bool) parser.add_argument('--prefix_path', dest='', help='training data annotation images prefix root path', type=str) args = parser.parse_args() return args if __name__ == '__main__': args = parse_args() print('train Rnet argument:') print(args) annotation_file = "./anno_store/imglist_anno_24.txt" model_store_path = "./model_store" end_epoch = 10 lr = 0.01 batch_size = 32 use_cuda = True frequent = 200 train_net(annotation_file, model_store_path, end_epoch, frequent, lr, batch_size, use_cuda) # train_net(annotation_file=args.annotation_file, model_store_path=args.model_store_path, # end_epoch=args.end_epoch, frequent=args.frequent, lr=args.lr, batch_size=args.batch_size, use_cuda=args.use_cuda)

from mtcnn.core.image_reader import TrainImageReader import datetime import os from mtcnn.core.models import PNet,RNet,ONet,LossFn import torch from torch.autograd import Variable import mtcnn.core.image_tools as image_tools import numpy as np def compute_accuracy(prob_cls, gt_cls): prob_cls = torch.squeeze(prob_cls) gt_cls = torch.squeeze(gt_cls) #we only need the detection which >= 0 mask = torch.ge(gt_cls,0) #get valid element valid_gt_cls = torch.masked_select(gt_cls,mask) valid_prob_cls = torch.masked_select(prob_cls,mask) size = min(valid_gt_cls.size()[0], valid_prob_cls.size()[0]) prob_ones = torch.ge(valid_prob_cls,0.6).float() right_ones = torch.eq(prob_ones,valid_gt_cls).float() ## if size == 0 meaning that your gt_labels are all negative, landmark or part return torch.div(torch.mul(torch.sum(right_ones),float(1.0)),float(size)) ## divided by zero meaning that your gt_labels are all negative, landmark or part def train_pnet(model_store_path, end_epoch,imdb, batch_size,frequent=10,base_lr=0.01,use_cuda=True): if not os.path.exists(model_store_path): os.makedirs(model_store_path) lossfn = LossFn() net = PNet(is_train=True, use_cuda=use_cuda) net.train() if use_cuda: net.cuda() optimizer = torch.optim.Adam(net.parameters(), lr=base_lr) train_data=TrainImageReader(imdb,12,batch_size,shuffle=True) frequent = 10 for cur_epoch in range(1,end_epoch+1): train_data.reset() # shuffle for batch_idx,(image,(gt_label,gt_bbox,gt_landmark))in enumerate(train_data): im_tensor = [ image_tools.convert_image_to_tensor(image[i,:,:,:]) for i in range(image.shape[0]) ] im_tensor = torch.stack(im_tensor) im_tensor = Variable(im_tensor) gt_label = Variable(torch.from_numpy(gt_label).float()) gt_bbox = Variable(torch.from_numpy(gt_bbox).float()) # gt_landmark = Variable(torch.from_numpy(gt_landmark).float()) if use_cuda: im_tensor = im_tensor.cuda() gt_label = gt_label.cuda() gt_bbox = gt_bbox.cuda() # gt_landmark = gt_landmark.cuda() cls_pred, box_offset_pred = net(im_tensor) # all_loss, cls_loss, offset_loss = lossfn.loss(gt_label=label_y,gt_offset=bbox_y, pred_label=cls_pred, pred_offset=box_offset_pred) cls_loss = lossfn.cls_loss(gt_label,cls_pred) box_offset_loss = lossfn.box_loss(gt_label,gt_bbox,box_offset_pred) # landmark_loss = lossfn.landmark_loss(gt_label,gt_landmark,landmark_offset_pred) all_loss = cls_loss*1.0+box_offset_loss*0.5 if batch_idx %frequent==0: accuracy=compute_accuracy(cls_pred,gt_label) show1 = accuracy.data.cpu().numpy() show2 = cls_loss.data.cpu().numpy() show3 = box_offset_loss.data.cpu().numpy() # show4 = landmark_loss.data.cpu().numpy() show5 = all_loss.data.cpu().numpy() print("%s : Epoch: %d, Step: %d, accuracy: %s, det loss: %s, bbox loss: %s, all_loss: %s, lr:%s "%(datetime.datetime.now(),cur_epoch,batch_idx, show1,show2,show3,show5,base_lr)) optimizer.zero_grad() all_loss.backward() optimizer.step() torch.save(net.state_dict(), os.path.join(model_store_path,"pnet_epoch_%d.pt" % cur_epoch)) torch.save(net, os.path.join(model_store_path,"pnet_epoch_model_%d.pkl" % cur_epoch)) def train_rnet(model_store_path, end_epoch,imdb, batch_size,frequent=50,base_lr=0.01,use_cuda=True): if not os.path.exists(model_store_path): os.makedirs(model_store_path) lossfn = LossFn() net = RNet(is_train=True, use_cuda=use_cuda) net.train() if use_cuda: net.cuda() optimizer = torch.optim.Adam(net.parameters(), lr=base_lr) train_data=TrainImageReader(imdb,24,batch_size,shuffle=True) for cur_epoch in range(1,end_epoch+1): train_data.reset() for batch_idx,(image,(gt_label,gt_bbox,gt_landmark))in enumerate(train_data): im_tensor = [ image_tools.convert_image_to_tensor(image[i,:,:,:]) for i in range(image.shape[0]) ] im_tensor = torch.stack(im_tensor) im_tensor = Variable(im_tensor) gt_label = Variable(torch.from_numpy(gt_label).float()) gt_bbox = Variable(torch.from_numpy(gt_bbox).float()) gt_landmark = Variable(torch.from_numpy(gt_landmark).float()) if use_cuda: im_tensor = im_tensor.cuda() gt_label = gt_label.cuda() gt_bbox = gt_bbox.cuda() gt_landmark = gt_landmark.cuda() cls_pred, box_offset_pred = net(im_tensor) # all_loss, cls_loss, offset_loss = lossfn.loss(gt_label=label_y,gt_offset=bbox_y, pred_label=cls_pred, pred_offset=box_offset_pred) cls_loss = lossfn.cls_loss(gt_label,cls_pred) box_offset_loss = lossfn.box_loss(gt_label,gt_bbox,box_offset_pred) # landmark_loss = lossfn.landmark_loss(gt_label,gt_landmark,landmark_offset_pred) all_loss = cls_loss*1.0+box_offset_loss*0.5 if batch_idx%frequent==0: accuracy=compute_accuracy(cls_pred,gt_label) show1 = accuracy.data.cpu().numpy() show2 = cls_loss.data.cpu().numpy() show3 = box_offset_loss.data.cpu().numpy() # show4 = landmark_loss.data.cpu().numpy() show5 = all_loss.data.cpu().numpy() print("%s : Epoch: %d, Step: %d, accuracy: %s, det loss: %s, bbox loss: %s, all_loss: %s, lr:%s "%(datetime.datetime.now(), cur_epoch, batch_idx, show1, show2, show3, show5, base_lr)) optimizer.zero_grad() all_loss.backward() optimizer.step() torch.save(net.state_dict(), os.path.join(model_store_path,"rnet_epoch_%d.pt" % cur_epoch)) torch.save(net, os.path.join(model_store_path,"rnet_epoch_model_%d.pkl" % cur_epoch)) def train_onet(model_store_path, end_epoch,imdb, batch_size,frequent=50,base_lr=0.01,use_cuda=True): if not os.path.exists(model_store_path): os.makedirs(model_store_path) lossfn = LossFn() net = ONet(is_train=True) net.train() print(use_cuda) if use_cuda: net.cuda() optimizer = torch.optim.Adam(net.parameters(), lr=base_lr) train_data=TrainImageReader(imdb,48,batch_size,shuffle=True) for cur_epoch in range(1,end_epoch+1): train_data.reset() for batch_idx,(image,(gt_label,gt_bbox,gt_landmark))in enumerate(train_data): # print("batch id {0}".format(batch_idx)) im_tensor = [ image_tools.convert_image_to_tensor(image[i,:,:,:]) for i in range(image.shape[0]) ] im_tensor = torch.stack(im_tensor) im_tensor = Variable(im_tensor) gt_label = Variable(torch.from_numpy(gt_label).float()) gt_bbox = Variable(torch.from_numpy(gt_bbox).float()) gt_landmark = Variable(torch.from_numpy(gt_landmark).float()) if use_cuda: im_tensor = im_tensor.cuda() gt_label = gt_label.cuda() gt_bbox = gt_bbox.cuda() gt_landmark = gt_landmark.cuda() cls_pred, box_offset_pred, landmark_offset_pred = net(im_tensor) # all_loss, cls_loss, offset_loss = lossfn.loss(gt_label=label_y,gt_offset=bbox_y, pred_label=cls_pred, pred_offset=box_offset_pred) cls_loss = lossfn.cls_loss(gt_label,cls_pred) box_offset_loss = lossfn.box_loss(gt_label,gt_bbox,box_offset_pred) landmark_loss = lossfn.landmark_loss(gt_label,gt_landmark,landmark_offset_pred) all_loss = cls_loss*0.8+box_offset_loss*0.6+landmark_loss*1.5 if batch_idx%frequent==0: accuracy=compute_accuracy(cls_pred,gt_label) show1 = accuracy.data.cpu().numpy() show2 = cls_loss.data.cpu().numpy() show3 = box_offset_loss.data.cpu().numpy() show4 = landmark_loss.data.cpu().numpy() show5 = all_loss.data.cpu().numpy() print("%s : Epoch: %d, Step: %d, accuracy: %s, det loss: %s, bbox loss: %s, landmark loss: %s, all_loss: %s, lr:%s "%(datetime.datetime.now(),cur_epoch,batch_idx, show1,show2,show3,show4,show5,base_lr)) optimizer.zero_grad() all_loss.backward() optimizer.step() torch.save(net.state_dict(), os.path.join(model_store_path,"onet_epoch_%d.pt" % cur_epoch)) torch.save(net, os.path.join(model_store_path,"onet_epoch_model_%d.pkl" % cur_epoch))

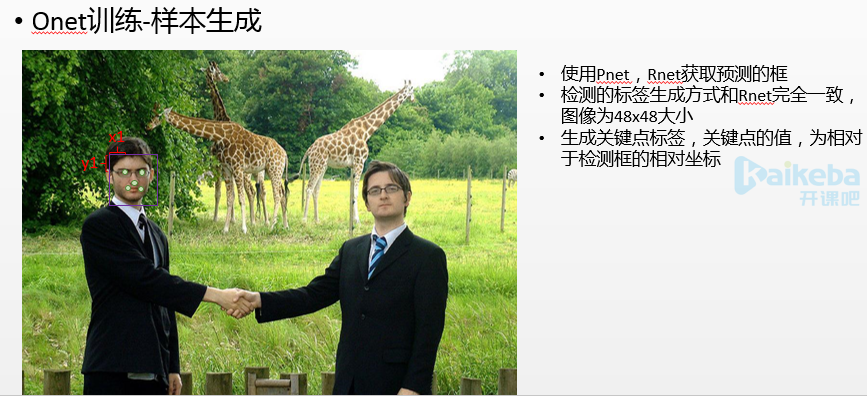

Onet:

onet model:

import torch import torch.nn as nn import torch.nn.functional as F def weights_init(m): if isinstance(m, nn.Conv2d) or isinstance(m, nn.Linear): nn.init.xavier_uniform(m.weight.data) nn.init.constant(m.bias, 0.1) class LossFn: def __init__(self, cls_factor=1, box_factor=1, landmark_factor=1): # loss function self.cls_factor = cls_factor self.box_factor = box_factor self.land_factor = landmark_factor self.loss_cls = nn.BCELoss() # binary cross entropy self.loss_box = nn.MSELoss() # mean square error self.loss_landmark = nn.MSELoss() def cls_loss(self,gt_label,pred_label): pred_label = torch.squeeze(pred_label) gt_label = torch.squeeze(gt_label) # get the mask element which >= 0, only 0 and 1 can effect the detection loss mask = torch.ge(gt_label,0) valid_gt_label = torch.masked_select(gt_label,mask) valid_pred_label = torch.masked_select(pred_label,mask) return self.loss_cls(valid_pred_label,valid_gt_label)*self.cls_factor def box_loss(self,gt_label,gt_offset,pred_offset): pred_offset = torch.squeeze(pred_offset) gt_offset = torch.squeeze(gt_offset) gt_label = torch.squeeze(gt_label) #get the mask element which != 0 unmask = torch.eq(gt_label,0) mask = torch.eq(unmask,0) #convert mask to dim index chose_index = torch.nonzero(mask.data) chose_index = torch.squeeze(chose_index) #only valid element can effect the loss valid_gt_offset = gt_offset[chose_index,:] valid_pred_offset = pred_offset[chose_index,:] return self.loss_box(valid_pred_offset,valid_gt_offset)*self.box_factor def landmark_loss(self,gt_label,gt_landmark,pred_landmark): pred_landmark = torch.squeeze(pred_landmark) gt_landmark = torch.squeeze(gt_landmark) gt_label = torch.squeeze(gt_label) mask = torch.eq(gt_label,-2) chose_index = torch.nonzero(mask.data) chose_index = torch.squeeze(chose_index) valid_gt_landmark = gt_landmark[chose_index, :] valid_pred_landmark = pred_landmark[chose_index, :] return self.loss_landmark(valid_pred_landmark,valid_gt_landmark)*self.land_factor class PNet(nn.Module): ''' PNet ''' def __init__(self, is_train=False, use_cuda=True): super(PNet, self).__init__() self.is_train = is_train self.use_cuda = use_cuda # backend self.pre_layer = nn.Sequential( nn.Conv2d(3, 10, kernel_size=3, stride=1), # conv1 nn.PReLU(), # PReLU1 nn.MaxPool2d(kernel_size=2, stride=2), # pool1 nn.Conv2d(10, 16, kernel_size=3, stride=1), # conv2 nn.PReLU(), # PReLU2 nn.Conv2d(16, 32, kernel_size=3, stride=1), # conv3 nn.PReLU() # PReLU3 ) # detection self.conv4_1 = nn.Conv2d(32, 1, kernel_size=1, stride=1) # bounding box regresion self.conv4_2 = nn.Conv2d(32, 4, kernel_size=1, stride=1) # landmark localization self.conv4_3 = nn.Conv2d(32, 10, kernel_size=1, stride=1) # weight initiation with xavier self.apply(weights_init) def forward(self, x): x = self.pre_layer(x) label = F.sigmoid(self.conv4_1(x)) offset = self.conv4_2(x) # landmark = self.conv4_3(x) if self.is_train is True: # label_loss = LossUtil.label_loss(self.gt_label,torch.squeeze(label)) # bbox_loss = LossUtil.bbox_loss(self.gt_bbox,torch.squeeze(offset)) return label,offset #landmark = self.conv4_3(x) return label, offset class RNet(nn.Module): ''' RNet ''' def __init__(self,is_train=False, use_cuda=True): super(RNet, self).__init__() self.is_train = is_train self.use_cuda = use_cuda # backend self.pre_layer = nn.Sequential( nn.Conv2d(3, 28, kernel_size=3, stride=1), # conv1 nn.PReLU(), # prelu1 nn.MaxPool2d(kernel_size=3, stride=2), # pool1 nn.Conv2d(28, 48, kernel_size=3, stride=1), # conv2 nn.PReLU(), # prelu2 nn.MaxPool2d(kernel_size=3, stride=2), # pool2 nn.Conv2d(48, 64, kernel_size=2, stride=1), # conv3 nn.PReLU() # prelu3 ) self.conv4 = nn.Linear(64*2*2, 128) # conv4 self.prelu4 = nn.PReLU() # prelu4 # detection self.conv5_1 = nn.Linear(128, 1) # bounding box regression self.conv5_2 = nn.Linear(128, 4) # lanbmark localization self.conv5_3 = nn.Linear(128, 10) # weight initiation weih xavier self.apply(weights_init) def forward(self, x): # backend x = self.pre_layer(x) x = x.view(x.size(0), -1) x = self.conv4(x) x = self.prelu4(x) # detection det = torch.sigmoid(self.conv5_1(x)) box = self.conv5_2(x) # landmark = self.conv5_3(x) if self.is_train is True: return det, box #landmard = self.conv5_3(x) return det, box class ONet(nn.Module): ''' RNet ''' def __init__(self,is_train=False, use_cuda=True): super(ONet, self).__init__() self.is_train = is_train self.use_cuda = use_cuda # backend self.pre_layer = nn.Sequential( nn.Conv2d(3, 32, kernel_size=3, stride=1), # conv1 nn.PReLU(), # prelu1 nn.MaxPool2d(kernel_size=3, stride=2), # pool1 nn.Conv2d(32, 64, kernel_size=3, stride=1), # conv2 nn.PReLU(), # prelu2 nn.MaxPool2d(kernel_size=3, stride=2), # pool2 nn.Conv2d(64, 64, kernel_size=3, stride=1), # conv3 nn.PReLU(), # prelu3 nn.MaxPool2d(kernel_size=2,stride=2), # pool3 nn.Conv2d(64,128,kernel_size=2,stride=1), # conv4 nn.PReLU() # prelu4 ) self.conv5 = nn.Linear(128*2*2, 256) # conv5 self.prelu5 = nn.PReLU() # prelu5 # detection self.conv6_1 = nn.Linear(256, 1) # bounding box regression self.conv6_2 = nn.Linear(256, 4) # lanbmark localization self.conv6_3 = nn.Linear(256, 10) # weight initiation weih xavier self.apply(weights_init) def forward(self, x): # backend x = self.pre_layer(x) x = x.view(x.size(0), -1) x = self.conv5(x) x = self.prelu5(x) # detection det = torch.sigmoid(self.conv6_1(x)) box = self.conv6_2(x) landmark = self.conv6_3(x) if self.is_train is True: return det, box, landmark #landmard = self.conv5_3(x) return det, box, landmark # Residual Block class ResidualBlock(nn.Module): def __init__(self, in_channels, out_channels, stride=1, downsample=None): super(ResidualBlock, self).__init__() self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride) self.bn1 = nn.BatchNorm2d(out_channels) self.relu = nn.ReLU(inplace=True) self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=stride) self.bn2 = nn.BatchNorm2d(out_channels) self.downsample = downsample def forward(self, x): residual = x out = self.conv1(x) out = self.bn1(out) out = self.relu(out) out = self.conv2(out) out = self.bn2(out) if self.downsample: residual = self.downsample(x) out += residual out = self.relu(out) return out # ResNet Module class ResNet(nn.Module): def __init__(self, block, num_classes=10): super(ResNet, self).__init__() self.in_channels = 16 self.conv = nn.Conv2d(3, 16,kernel_size=3) self.bn = nn.BatchNorm2d(16) self.relu = nn.ReLU(inplace=True) self.layer1 = self.make_layer(block, 16, 3) self.layer2 = self.make_layer(block, 32, 3, 2) self.layer3 = self.make_layer(block, 64, 3, 2) self.avg_pool = nn.AvgPool2d(8) self.fc = nn.Linear(64, num_classes) def make_layer(self, block, out_channels, blocks, stride=1): downsample = None if (stride != 1) or (self.in_channels != out_channels): downsample = nn.Sequential( nn.Conv2d(self.in_channels, out_channels, kernel_size=3, stride=stride), nn.BatchNorm2d(out_channels)) layers = [] layers.append(block(self.in_channels, out_channels, stride, downsample)) self.in_channels = out_channels for i in range(1, blocks): layers.append(block(out_channels, out_channels)) return nn.Sequential(*layers) def forward(self, x): out = self.conv(x) out = self.bn(out) out = self.relu(out) out = self.layer1(out) out = self.layer2(out) out = self.layer3(out) out = self.avg_pool(out) out = out.view(out.size(0), -1) out = self.fc(out) return out

生成训练数据:

import argparse import os import sys sys.path.append(os.getcwd()) import cv2 import numpy as np from mtcnn.core.detect import MtcnnDetector,create_mtcnn_net from mtcnn.core.imagedb import ImageDB from mtcnn.core.image_reader import TestImageLoader import time from six.moves import cPickle from mtcnn.core.utils import convert_to_square,IoU import mtcnn.core.vision as vision prefix_path = '' traindata_store = './data_set/train' pnet_model_file = './model_store/pnet_epoch.pt' rnet_model_file = './model_store/rnet_epoch.pt' annotation_file = './anno_store/anno_train_test.txt' use_cuda = True def gen_onet_data(data_dir, anno_file, pnet_model_file, rnet_model_file, prefix_path='', use_cuda=True, vis=False): pnet, rnet, _ = create_mtcnn_net(p_model_path=pnet_model_file, r_model_path=rnet_model_file, use_cuda=use_cuda) mtcnn_detector = MtcnnDetector(pnet=pnet, rnet=rnet, min_face_size=12) imagedb = ImageDB(anno_file,mode="test",prefix_path=prefix_path) imdb = imagedb.load_imdb() image_reader = TestImageLoader(imdb,1,False) all_boxes = list() batch_idx = 0 print('size:%d' % image_reader.size) for databatch in image_reader: if batch_idx % 50 == 0: print("%d images done" % batch_idx) im = databatch t = time.time() # pnet detection = [x1, y1, x2, y2, score, reg] p_boxes, p_boxes_align = mtcnn_detector.detect_pnet(im=im) # rnet detection boxes, boxes_align = mtcnn_detector.detect_rnet(im=im, dets=p_boxes_align) if boxes_align is None: all_boxes.append(np.array([])) batch_idx += 1 continue if vis: rgb_im = cv2.cvtColor(np.asarray(im), cv2.COLOR_BGR2RGB) vision.vis_two(rgb_im, boxes, boxes_align) t1 = time.time() - t t = time.time() all_boxes.append(boxes_align) batch_idx += 1 save_path = './model_store' if not os.path.exists(save_path): os.mkdir(save_path) save_file = os.path.join(save_path, "detections_%d.pkl" % int(time.time())) with open(save_file, 'wb') as f: cPickle.dump(all_boxes, f, cPickle.HIGHEST_PROTOCOL) gen_onet_sample_data(data_dir,anno_file,save_file,prefix_path) def gen_onet_sample_data(data_dir,anno_file,det_boxs_file,prefix): neg_save_dir = os.path.join(data_dir, "48/negative") pos_save_dir = os.path.join(data_dir, "48/positive") part_save_dir = os.path.join(data_dir, "48/part") for dir_path in [neg_save_dir, pos_save_dir, part_save_dir]: if not os.path.exists(dir_path): os.makedirs(dir_path) # load ground truth from annotation file # format of each line: image/path [x1,y1,x2,y2] for each gt_box in this image with open(anno_file, 'r') as f: annotations = f.readlines() image_size = 48 net = "onet" im_idx_list = list() gt_boxes_list = list() num_of_images = len(annotations) print("processing %d images in total" % num_of_images) for annotation in annotations: annotation = annotation.strip().split(' ') im_idx = os.path.join(prefix,annotation[0]) boxes = list(map(float, annotation[1:])) boxes = np.array(boxes, dtype=np.float32).reshape(-1, 4) im_idx_list.append(im_idx) gt_boxes_list.append(boxes) save_path = './anno_store' if not os.path.exists(save_path): os.makedirs(save_path) f1 = open(os.path.join(save_path, 'pos_%d.txt' % image_size), 'w') f2 = open(os.path.join(save_path, 'neg_%d.txt' % image_size), 'w') f3 = open(os.path.join(save_path, 'part_%d.txt' % image_size), 'w') det_handle = open(det_boxs_file, 'rb') det_boxes = cPickle.load(det_handle) print(len(det_boxes), num_of_images) # assert len(det_boxes) == num_of_images, "incorrect detections or ground truths" # index of neg, pos and part face, used as their image names n_idx = 0 p_idx = 0 d_idx = 0 image_done = 0 for im_idx, dets, gts in zip(im_idx_list, det_boxes, gt_boxes_list): if image_done % 100 == 0: print("%d images done" % image_done) image_done += 1 if dets.shape[0] == 0: continue img = cv2.imread(im_idx) dets = convert_to_square(dets) dets[:, 0:4] = np.round(dets[:, 0:4]) for box in dets: x_left, y_top, x_right, y_bottom = box[0:4].astype(int) width = x_right - x_left + 1 height = y_bottom - y_top + 1 # ignore box that is too small or beyond image border if width < 20 or x_left < 0 or y_top < 0 or x_right > img.shape[1] - 1 or y_bottom > img.shape[0] - 1: continue # compute intersection over union(IoU) between current box and all gt boxes Iou = IoU(box, gts) cropped_im = img[y_top:y_bottom + 1, x_left:x_right + 1, :] resized_im = cv2.resize(cropped_im, (image_size, image_size), interpolation=cv2.INTER_LINEAR) # save negative images and write label if np.max(Iou) < 0.3: # Iou with all gts must below 0.3 save_file = os.path.join(neg_save_dir, "%s.jpg" % n_idx) f2.write(save_file + ' 0\n') cv2.imwrite(save_file, resized_im) n_idx += 1 else: # find gt_box with the highest iou idx = np.argmax(Iou) assigned_gt = gts[idx] x1, y1, x2, y2 = assigned_gt # compute bbox reg label offset_x1 = (x1 - x_left) / float(width) offset_y1 = (y1 - y_top) / float(height) offset_x2 = (x2 - x_right) / float(width) offset_y2 = (y2 - y_bottom) / float(height) # save positive and part-face images and write labels if np.max(Iou) >= 0.65: save_file = os.path.join(pos_save_dir, "%s.jpg" % p_idx) f1.write(save_file + ' 1 %.2f %.2f %.2f %.2f\n' % ( offset_x1, offset_y1, offset_x2, offset_y2)) cv2.imwrite(save_file, resized_im) p_idx += 1 elif np.max(Iou) >= 0.4: save_file = os.path.join(part_save_dir, "%s.jpg" % d_idx) f3.write(save_file + ' -1 %.2f %.2f %.2f %.2f\n' % ( offset_x1, offset_y1, offset_x2, offset_y2)) cv2.imwrite(save_file, resized_im) d_idx += 1 f1.close() f2.close() f3.close() def model_store_path(): return os.path.dirname(os.path.dirname(os.path.dirname(os.path.realpath(__file__))))+"/model_store" if __name__ == '__main__': gen_onet_data(traindata_store, annotation_file, pnet_model_file, rnet_model_file, prefix_path, use_cuda)