SparkSql API

通过api使用sparksql

实现步骤:

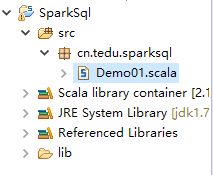

1)打开scala IDE开发环境,创建一个scala工程

2)导入spark相关依赖jar包

3)创建包路径以object类

4)写代码

代码示意:

package cn.tedu.sparksql

import org.apache.spark.SparkConf

import org.apache.spark.SparkContext

import org.apache.spark.sql.SQLContext

object Demo01 {

def main(args: Array[String]): Unit = {

val conf=new SparkConf().setMaster("spark://hadoop01:7077").setAppName("sqlDemo01");

val sc=new SparkContext(conf)

val sqlContext=new SQLContext(sc)

val rdd=sc.makeRDD(List((1,"zhang"),(2,"li"),(3,"wang")))

import sqlContext.implicits._

val df=rdd.toDF("id","name")

df.registerTempTable("tabx")

val df2=sqlContext.sql("select * from tabx order by name");

val rdd2=df2.toJavaRDD;

//将结果输出到linux的本地目录下,当然,也可以输出到HDFS上

rdd2.saveAsTextFile("file:///home/software/result");

}

}

5)打jar包,并上传到linux虚拟机上

6)在spark的bin目录下

执行:sh spark-submit --class cn.tedu.sparksql.Demo01 ./sqlDemo01.jar

7)最后检验