体验Kubernetes 1.19.3,部署Dashboard+Prometheus+Grafana+Alertmanager

1.安装要求

在开始之前,部署Kubernetes集群机器需要满足以下几个条件:

- 一台或多台机器,操作系统 CentOS7.6-86_x64

- 硬件配置:4GB或更多RAM,4个CPU或更多CPU,硬盘30GB或更多

- 集群中所有机器之间网络互通

- 可以访问外网,需要拉取镜像

- 禁止swap分区

- 同步时间服务器

2. 准备环境

安装基础包 yum -y install bash-completion wget ntpdate nfs-utils 关闭防火墙: systemctl stop firewalld systemctl disable firewalld 关闭selinux: sed -i 's/enforcing/disabled/' /etc/selinux/config setenforce 0 关闭swap: swapoff -a # 临时 sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab # 永久 时间同步: crontab -e 0 */2 * * * /usr/sbin/ntpdate time.windows.date

hostnamectl set-hostname k8s-master --static

添加主机名与IP对应关系(记得设置主机名):

$ cat >> /etc/hosts<<EOF 9.110.187.120 k8s-master 9.110.187.125 k8s-node1 9.110.187.126 k8s-node2 EOF

将桥接的IPv4流量传递到iptables的链:

$ cat > /etc/sysctl.d/k8s.conf << EOF net.ipv4.ip_nonlocal_bind = 1 net.ipv4.ip_forward = 1 net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.ipv4.ip_local_port_range = 10000 65000 fs.file-max = 2000000 vm.swappiness = 0 EOF $ sysctl --system

3. 所有节点安装Docker/kubeadm/kubelet

Kubernetes默认CRI(容器运行时)为Docker,因此先安装Docker。

3.1 安装Docker

$ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo $ yum -y install docker-ce-18.06.1.ce-3.el7 $ yum list docker-ce --showduplicates | sort -r # 确保网络模块开机自动加载 lsmod | grep overlay lsmod | grep br_netfilter # 若上面命令无返回值输出或提示文件不存在,需执行以下命令: cat > /etc/modules-load.d/docker.conf <<EOF overlay br_netfilter EOF modprobe overlay modprobe br_netfilter # 配置Docker mkdir /etc/docker # 修改cgroup驱动为systemd[k8s官方推荐]、限制容器日志量、修改存储类型,最后的docker家目录可修改 cat > /etc/docker/daemon.json <<EOF { "exec-opts": ["native.cgroupdriver=systemd"], "log-driver": "json-file", "log-opts": { "max-size": "100m" }, "storage-driver": "overlay2", "storage-opts": [ "overlay2.override_kernel_check=true" ], "registry-mirrors": ["https://7uuu3esz.mirror.aliyuncs.com"], "data-root": "/data/docker" } EOF #添加开机自启,立即启动 systemctl enable --now docker systemctl daemon-reload systemctl restart docker

3.2 添加阿里云YUM软件源

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF [kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64 enabled=1 gpgcheck=0 repo_gpgcheck=0 gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg EOF # 查看版本 yum list |grep kubelet yum list |grep kubeadm yum list |grep kubectl

3.3 安装kubeadm,kubelet和kubectl

由于版本更新频繁,这里指定版本号部署:

yum install -y kubelet-1.19.3 kubeadm-1.19.3 kubectl-1.19.3 systemctl enable kubelet

4. 部署Kubernetes Master

在9.110.187.120(Master)执行。

$ kubeadm init \ --apiserver-advertise-address=9.110.187.120 \ --image-repository registry.aliyuncs.com/google_containers \ --kubernetes-version v1.19.3 \ --service-cidr=10.1.0.0/16 \ --pod-network-cidr=10.244.0.0/16

由于默认拉取镜像地址k8s.gcr.io国内无法访问,这里指定阿里云镜像仓库地址。

使用kubectl工具:

$ mkdir -p $HOME/.kube $ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config $ sudo chown $(id -u):$(id -g) $HOME/.kube/config $ kubectl get nodes

5. 安装Pod网络插件(CNI)

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

如果下载失败,可以改成这个镜像地址:lizhenliang/flannel:v0.11.0-amd64

6. 加入Kubernetes Node

在9.110.187.125/126(Node)执行。

向集群添加新节点,执行在kubeadm init输出的kubeadm join命令:

$ kubeadm join 9.110.187.120:6443 --token esce21.q6hetwm8si29qxwn \ --discovery-token-ca-cert-hash sha256:00603a05805807501d7181c3d60b478788408cfe6cedefedb1f97569708be9c5

7. 测试kubernetes集群

在Kubernetes集群中创建一个pod,验证是否正常运行:

kubectl create deployment nginx --image=nginx:1.16 kubectl expose deployment nginx --port=80 --type=NodePort kubectl get pods -A -o wide kubectl describe pod nginx-86c57db685-frwwt

访问地址:http://NodeIP:Port

8. Kubernetes V1.19.3部署Dashboard V2.0(beta5)

$ wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta5/aio/deploy/recommended.yaml

修改recommended.yaml文件内容(vi recommended.yaml):

--- #增加直接访问端口 kind: Service apiVersion: v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard spec: type: NodePort #增加 ports: - port: 443 targetPort: 8443 nodePort: 30001 #增加 selector: k8s-app: kubernetes-dashboard --- #因为自动生成的证书很多浏览器无法使用,所以我们自己创建,注释掉kubernetes-dashboard-certs对象声明 #apiVersion: v1 #kind: Secret #metadata: # labels: # k8s-app: kubernetes-dashboard # name: kubernetes-dashboard-certs # namespace: kubernetes-dashboard #type: Opaque ---

创建证书 mkdir dashboard-certs cd dashboard-certs/ #创建命名空间 # kubectl create namespace kubernetes-dashboard # 创建key文件 openssl genrsa -out dashboard.key 2048 #证书请求 openssl req -days 36000 -new -out dashboard.csr -key dashboard.key -subj '/CN=dashboard-cert' #自签证书 openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt #创建kubernetes-dashboard-certs对象 kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard

部署Dashboard

涉及到的两个镜像可以先下载下来 #安装 kubectl apply -f ~/recommended.yaml #检查结果 kubectl get pods -A -o wide kubectl get service -n kubernetes-dashboard -o wide

9.创建dashboard管理员

cat >> dashboard-admin.yaml<<EOF apiVersion: v1 kind: ServiceAccount metadata: labels: k8s-app: kubernetes-dashboard name: dashboard-admin namespace: kubernetes-dashboard EOF kubectl apply -f dashboard-admin.yaml 为用户分配权限: cat >>dashboard-admin-bind-cluster-role.yaml<<EOF apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: dashboard-admin-bind-cluster-role labels: k8s-app: kubernetes-dashboard roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: cluster-admin subjects: - kind: ServiceAccount name: dashboard-admin namespace: kubernetes-dashboard EOF kubectl apply -f dashboard-admin-bind-cluster-role.yaml

访问地址:https://NodeIP:30000

创建service account并绑定默认cluster-admin管理员集群角色:

kubectl create serviceaccount dashboard-admin -n kube-system kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

使用输出的token登录Dashboard。

10.部署Prometheus+Grafana+Alertmanager

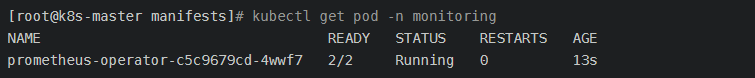

# git clone https://github.com/coreos/kube-prometheus # cd kube-prometheus/manifests/ # 修改镜像源 sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' setup/prometheus-operator-deployment.yaml sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' prometheus-prometheus.yaml sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' alertmanager-alertmanager.yaml sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' kube-state-metrics-deployment.yaml sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' node-exporter-daemonset.yaml sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' prometheus-adapter-deployment.yaml # 安装CRD和prometheus-operator [root@k8s-master manifests]# kubectl apply -f setup/ [root@k8s-master manifests]# kubectl get pod -n monitoring

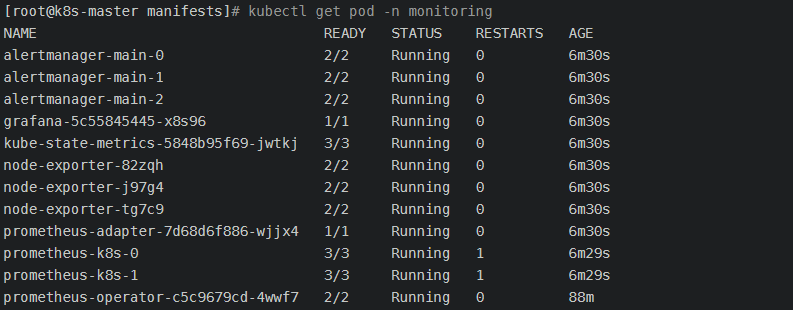

安装prometheus, alertmanager, grafana, kube-state-metrics, node-exporter等资源,时间较长 [root@k8s-master manifests]# kubectl apply -f . [root@k8s-master manifests]# kubectl get pod -n monitoring

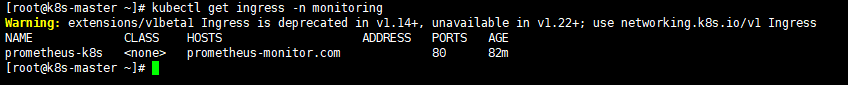

配置 ingress

cat nginx-ingress.yaml

# 如果打算用于生产环境,请参考 https://github.com/nginxinc/kubernetes-ingress/blob/v1.5.5/docs/installation.md 并根据您自己的情况做进一步定制 apiVersion: v1 kind: Namespace metadata: name: nginx-ingress --- apiVersion: v1 kind: ServiceAccount metadata: name: nginx-ingress namespace: nginx-ingress --- apiVersion: v1 kind: Secret metadata: name: default-server-secret namespace: nginx-ingress type: Opaque data: tls.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN2akNDQWFZQ0NRREFPRjl0THNhWFhEQU5CZ2txaGtpRzl3MEJBUXNGQURBaE1SOHdIUVlEVlFRRERCWk8KUjBsT1dFbHVaM0psYzNORGIyNTBjbTlzYkdWeU1CNFhEVEU0TURreE1qRTRNRE16TlZvWERUSXpNRGt4TVRFNApNRE16TlZvd0lURWZNQjBHQTFVRUF3d1dUa2RKVGxoSmJtZHlaWE56UTI5dWRISnZiR3hsY2pDQ0FTSXdEUVlKCktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQUwvN2hIUEtFWGRMdjNyaUM3QlBrMTNpWkt5eTlyQ08KR2xZUXYyK2EzUDF0azIrS3YwVGF5aGRCbDRrcnNUcTZzZm8vWUk1Y2Vhbkw4WGM3U1pyQkVRYm9EN2REbWs1Qgo4eDZLS2xHWU5IWlg0Rm5UZ0VPaStlM2ptTFFxRlBSY1kzVnNPazFFeUZBL0JnWlJVbkNHZUtGeERSN0tQdGhyCmtqSXVuektURXUyaDU4Tlp0S21ScUJHdDEwcTNRYzhZT3ExM2FnbmovUWRjc0ZYYTJnMjB1K1lYZDdoZ3krZksKWk4vVUkxQUQ0YzZyM1lma1ZWUmVHd1lxQVp1WXN2V0RKbW1GNWRwdEMzN011cDBPRUxVTExSakZJOTZXNXIwSAo1TmdPc25NWFJNV1hYVlpiNWRxT3R0SmRtS3FhZ25TZ1JQQVpQN2MwQjFQU2FqYzZjNGZRVXpNQ0F3RUFBVEFOCkJna3Foa2lHOXcwQkFRc0ZBQU9DQVFFQWpLb2tRdGRPcEsrTzhibWVPc3lySmdJSXJycVFVY2ZOUitjb0hZVUoKdGhrYnhITFMzR3VBTWI5dm15VExPY2xxeC9aYzJPblEwMEJCLzlTb0swcitFZ1U2UlVrRWtWcitTTFA3NTdUWgozZWI4dmdPdEduMS9ienM3bzNBaS9kclkrcUI5Q2k1S3lPc3FHTG1US2xFaUtOYkcyR1ZyTWxjS0ZYQU80YTY3Cklnc1hzYktNbTQwV1U3cG9mcGltU1ZmaXFSdkV5YmN3N0NYODF6cFErUyt1eHRYK2VBZ3V0NHh3VlI5d2IyVXYKelhuZk9HbWhWNThDd1dIQnNKa0kxNXhaa2VUWXdSN0diaEFMSkZUUkk3dkhvQXprTWIzbjAxQjQyWjNrN3RXNQpJUDFmTlpIOFUvOWxiUHNoT21FRFZkdjF5ZytVRVJxbStGSis2R0oxeFJGcGZnPT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo= tls.key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBdi91RWM4b1JkMHUvZXVJTHNFK1RYZUprckxMMnNJNGFWaEMvYjVyYy9XMlRiNHEvClJOcktGMEdYaVN1eE9ycXgrajlnamx4NXFjdnhkenRKbXNFUkJ1Z1B0ME9hVGtIekhvb3FVWmcwZGxmZ1dkT0EKUTZMNTdlT1l0Q29VOUZ4amRXdzZUVVRJVUQ4R0JsRlNjSVo0b1hFTkhzbysyR3VTTWk2Zk1wTVM3YUhudzFtMApxWkdvRWEzWFNyZEJ6eGc2clhkcUNlUDlCMXl3VmRyYURiUzc1aGQzdUdETDU4cGszOVFqVUFQaHpxdmRoK1JWClZGNGJCaW9CbTVpeTlZTW1hWVhsMm0wTGZzeTZuUTRRdFFzdEdNVWozcGJtdlFmazJBNnljeGRFeFpkZFZsdmwKMm82MjBsMllxcHFDZEtCRThCay90elFIVTlKcU56cHpoOUJUTXdJREFRQUJBb0lCQVFDZklHbXowOHhRVmorNwpLZnZJUXQwQ0YzR2MxNld6eDhVNml4MHg4Mm15d1kxUUNlL3BzWE9LZlRxT1h1SENyUlp5TnUvZ2IvUUQ4bUFOCmxOMjRZTWl0TWRJODg5TEZoTkp3QU5OODJDeTczckM5bzVvUDlkazAvYzRIbjAzSkVYNzZ5QjgzQm9rR1FvYksKMjhMNk0rdHUzUmFqNjd6Vmc2d2szaEhrU0pXSzBwV1YrSjdrUkRWYmhDYUZhNk5nMUZNRWxhTlozVDhhUUtyQgpDUDNDeEFTdjYxWTk5TEI4KzNXWVFIK3NYaTVGM01pYVNBZ1BkQUk3WEh1dXFET1lvMU5PL0JoSGt1aVg2QnRtCnorNTZud2pZMy8yUytSRmNBc3JMTnIwMDJZZi9oY0IraVlDNzVWYmcydVd6WTY3TWdOTGQ5VW9RU3BDRkYrVm4KM0cyUnhybnhBb0dCQU40U3M0ZVlPU2huMVpQQjdhTUZsY0k2RHR2S2ErTGZTTXFyY2pOZjJlSEpZNnhubmxKdgpGenpGL2RiVWVTbWxSekR0WkdlcXZXaHFISy9iTjIyeWJhOU1WMDlRQ0JFTk5jNmtWajJTVHpUWkJVbEx4QzYrCk93Z0wyZHhKendWelU0VC84ajdHalRUN05BZVpFS2FvRHFyRG5BYWkyaW5oZU1JVWZHRXFGKzJyQW9HQkFOMVAKK0tZL0lsS3RWRzRKSklQNzBjUis3RmpyeXJpY05iWCtQVzUvOXFHaWxnY2grZ3l4b25BWlBpd2NpeDN3QVpGdwpaZC96ZFB2aTBkWEppc1BSZjRMazg5b2pCUmpiRmRmc2l5UmJYbyt3TFU4NUhRU2NGMnN5aUFPaTVBRHdVU0FkCm45YWFweUNweEFkREtERHdObit3ZFhtaTZ0OHRpSFRkK3RoVDhkaVpBb0dCQUt6Wis1bG9OOTBtYlF4VVh5YUwKMjFSUm9tMGJjcndsTmVCaWNFSmlzaEhYa2xpSVVxZ3hSZklNM2hhUVRUcklKZENFaHFsV01aV0xPb2I2NTNyZgo3aFlMSXM1ZUtka3o0aFRVdnpldm9TMHVXcm9CV2xOVHlGanIrSWhKZnZUc0hpOGdsU3FkbXgySkJhZUFVWUNXCndNdlQ4NmNLclNyNkQrZG8wS05FZzFsL0FvR0FlMkFVdHVFbFNqLzBmRzgrV3hHc1RFV1JqclRNUzRSUjhRWXQKeXdjdFA4aDZxTGxKUTRCWGxQU05rMXZLTmtOUkxIb2pZT2pCQTViYjhibXNVU1BlV09NNENoaFJ4QnlHbmR2eAphYkJDRkFwY0IvbEg4d1R0alVZYlN5T294ZGt5OEp0ek90ajJhS0FiZHd6NlArWDZDODhjZmxYVFo5MWpYL3RMCjF3TmRKS2tDZ1lCbyt0UzB5TzJ2SWFmK2UwSkN5TGhzVDQ5cTN3Zis2QWVqWGx2WDJ1VnRYejN5QTZnbXo5aCsKcDNlK2JMRUxwb3B0WFhNdUFRR0xhUkcrYlNNcjR5dERYbE5ZSndUeThXczNKY3dlSTdqZVp2b0ZpbmNvVlVIMwphdmxoTUVCRGYxSjltSDB5cDBwWUNaS2ROdHNvZEZtQktzVEtQMjJhTmtsVVhCS3gyZzR6cFE9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo= --- kind: ConfigMap apiVersion: v1 metadata: name: nginx-config namespace: nginx-ingress data: server-names-hash-bucket-size: "1024" --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: nginx-ingress rules: - apiGroups: - "" resources: - services - endpoints verbs: - get - list - watch - apiGroups: - "" resources: - secrets verbs: - get - list - watch - apiGroups: - "" resources: - configmaps verbs: - get - list - watch - update - create - apiGroups: - "" resources: - pods verbs: - list - apiGroups: - "" resources: - events verbs: - create - patch - apiGroups: - extensions resources: - ingresses verbs: - list - watch - get - apiGroups: - "extensions" resources: - ingresses/status verbs: - update - apiGroups: - k8s.nginx.org resources: - virtualservers - virtualserverroutes verbs: - list - watch - get --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: nginx-ingress subjects: - kind: ServiceAccount name: nginx-ingress namespace: nginx-ingress roleRef: kind: ClusterRole name: nginx-ingress apiGroup: rbac.authorization.k8s.io --- apiVersion: apps/v1 kind: DaemonSet metadata: name: nginx-ingress namespace: nginx-ingress annotations: prometheus.io/scrape: "true" prometheus.io/port: "9113" spec: selector: matchLabels: app: nginx-ingress template: metadata: labels: app: nginx-ingress spec: serviceAccountName: nginx-ingress containers: - image: nginx/nginx-ingress:1.5.5 name: nginx-ingress ports: - name: http containerPort: 80 hostPort: 80 - name: https containerPort: 443 hostPort: 443 - name: prometheus containerPort: 9113 env: - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name args: - -nginx-configmaps=$(POD_NAMESPACE)/nginx-config - -default-server-tls-secret=$(POD_NAMESPACE)/default-server-secret #- -v=3 # Enables extensive logging. Useful for troubleshooting. #- -report-ingress-status #- -external-service=nginx-ingress #- -enable-leader-election - -enable-prometheus-metrics #- -enable-custom-resources

创建Prometheus ingress

cat prometheus-ingress.yaml

apiVersion: extensions/v1beta1 kind: Ingress metadata: name: prometheus-k8s namespace: default annotations: kubernetes.io/ingress.class: "nginx" spec: rules: - host: prometheus-monitor.com http: paths: - path: backend: serviceName: prometheus-k8s servicePort: 9090

11.安装metrics-server

在Node1/Node2上下载镜像文件:

docker pull bluersw/metrics-server-amd64:v0.3.6 docker tag bluersw/metrics-server-amd64:v0.3.6 k8s.gcr.io/metrics-server-amd64:v0.3.6

在Master上执行安装:

git clone https://github.com/kubernetes-incubator/metrics-server.git cd metrics-server/deploy/1.8+/ 修改metrics-server-deployment.yaml image: k8s.gcr.io/metrics-server-amd64 #在image下添加一下内容 command: - /metrics-server - --metric-resolution=30s - --kubelet-insecure-tls - --kubelet-preferred-address-types=InternalIP 查找runAsNonRoot: true 修改为runAsNonRoot: false kubectl create -f . 如果不能不能FQ拉取不到镜像可以更改image: mirrorgooglecontainers/metrics-server-amd64:v0.3.6

作者:一毛

本博客所有文章仅用于学习、研究和交流目的,欢迎非商业性质转载。

不管遇到了什么烦心事,都不要自己为难自己;无论今天发生多么糟糕的事,都不应该感到悲伤。记住一句话:越努力,越幸运。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· Linux系列:如何用heaptrack跟踪.NET程序的非托管内存泄露

· 开发者必知的日志记录最佳实践

· SQL Server 2025 AI相关能力初探

· Linux系列:如何用 C#调用 C方法造成内存泄露

· AI与.NET技术实操系列(二):开始使用ML.NET

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本

· C#/.NET/.NET Core优秀项目和框架2025年2月简报

· 什么是nginx的强缓存和协商缓存

· 一文读懂知识蒸馏

· Manus爆火,是硬核还是营销?