Hadoop Learning Path (2)

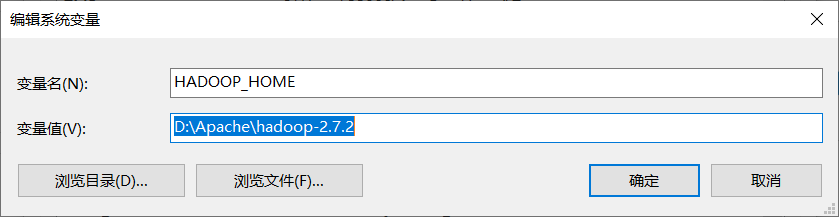

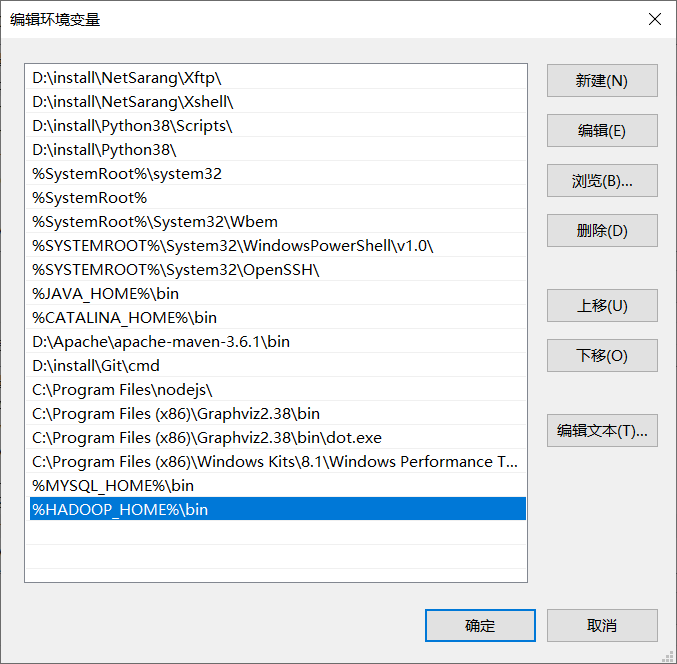

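1. Install Hadoop locally. Without a local Hadoop installation the client reports an error. It does not affect the remote environment, but the following error is still logged: Failed to locate the winutils binary in the hadoop binary path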

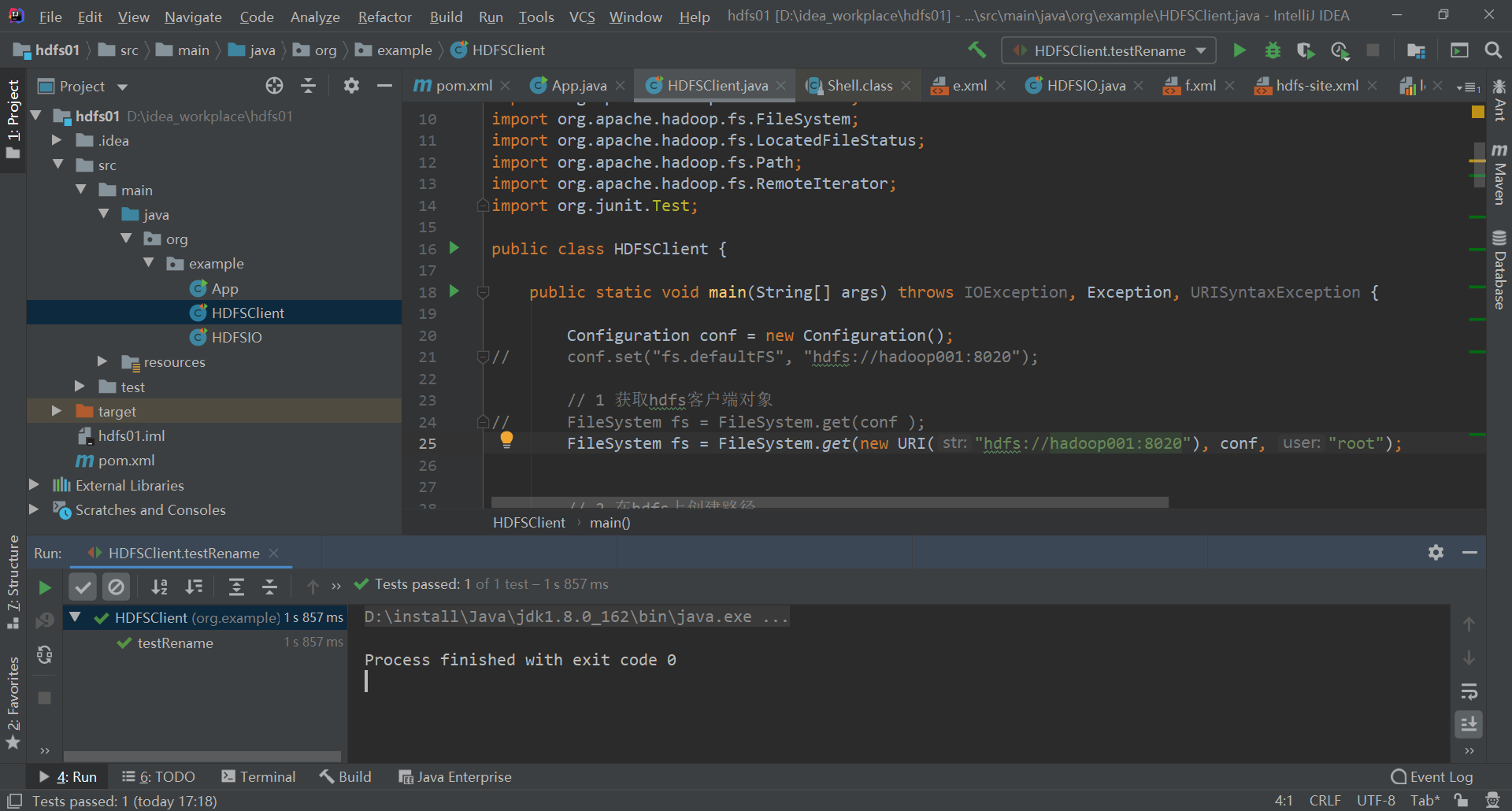

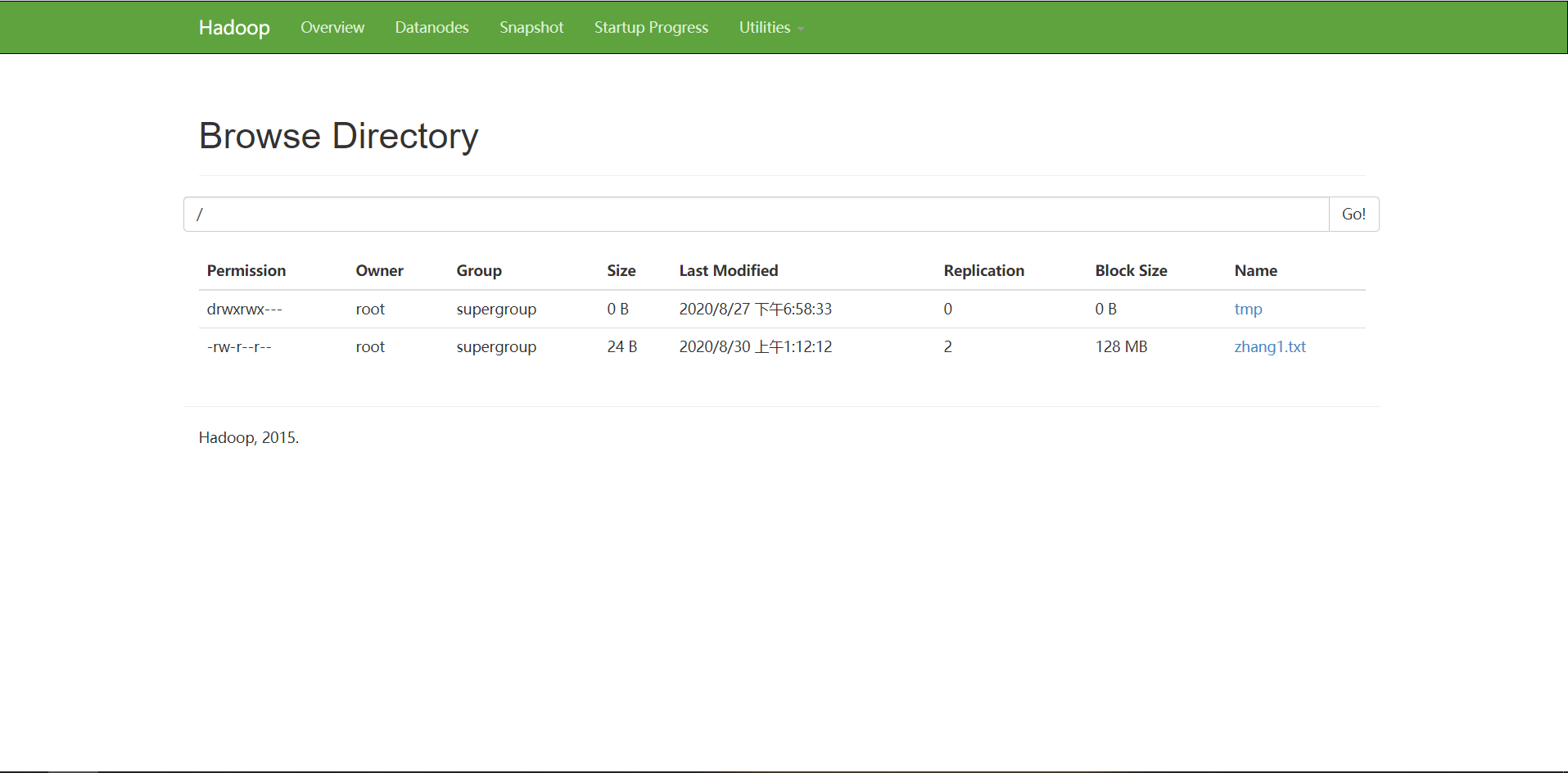
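
To check the local setup before running the tests, a small diagnostic along these lines may help. It is only a sketch: the class name `WinutilsCheck` is hypothetical, and it assumes the usual lookup order of the Hadoop client (the `hadoop.home.dir` system property first, then the `HADOOP_HOME` environment variable) and the usual `bin/winutils.exe` location under that directory.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class WinutilsCheck {

    // Builds the path where winutils.exe is expected under a given Hadoop home.
    static Path winutilsPath(String hadoopHome) {
        return Paths.get(hadoopHome, "bin", "winutils.exe");
    }

    // Returns the hadoop.home.dir system property if set,
    // otherwise the HADOOP_HOME environment variable (may be null).
    static String resolveHadoopHome() {
        String home = System.getProperty("hadoop.home.dir");
        return home != null ? home : System.getenv("HADOOP_HOME");
    }

    public static void main(String[] args) {
        String home = resolveHadoopHome();
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set");
            return;
        }
        Path winutils = winutilsPath(home);
        System.out.println(winutils + (Files.exists(winutils) ? " found" : " missing"));
    }
}
```

If the file is reported missing, the winutils error above is the likely result when the HDFS client starts on Windows.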

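2. Start the Hadoop environment (dfs and yarn), then test the code. The port in the `hdfs://` URI (8020 here) must match the NameNode RPC port configured on the Linux side (`fs.defaultFS` in core-site.xml).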
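
Before running the tests, it can be useful to confirm that the host and port used in the `hdfs://` URI accept TCP connections at all. The following is a rough sketch using only the JDK; the class name `NameNodePortCheck` and the 3-second timeout are assumptions made for illustration, and a successful connect only shows that something is listening, not that it is HDFS.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.URI;

public class NameNodePortCheck {

    // Attempts a plain TCP connect; returns true if the connection is accepted.
    static boolean reachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Covers refused connections, timeouts, and unknown hosts.
            return false;
        }
    }

    public static void main(String[] args) {
        // Same URI as in the test code of this post.
        URI uri = URI.create("hdfs://hadoop001:8020");
        boolean ok = reachable(uri.getHost(), uri.getPort(), 3000);
        System.out.println(uri.getHost() + ":" + uri.getPort() + " reachable: " + ok);
    }
}
```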

HDFSIO.java

    package org.example;

    import java.io.File;
    import java.io.FileInputStream;
    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.net.URI;
    import java.net.URISyntaxException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;
    import org.junit.Test;

    public class HDFSIO {

        // Upload zhang.txt from the local D: drive to the HDFS root directory
        @Test
        public void putFileToHDFS() throws IOException, InterruptedException, URISyntaxException {
            // 1 Get the FileSystem object
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop001:8020"), conf, "root");

            // 2 Open the input stream (local file)
            FileInputStream fis = new FileInputStream(new File("d:/zhang.txt"));

            // 3 Open the output stream (HDFS file)
            FSDataOutputStream fos = fs.create(new Path("/zhang.txt"));

            // 4 Copy from one stream to the other
            IOUtils.copyBytes(fis, fos, conf);

            // 5 Release resources
            IOUtils.closeStream(fos);
            IOUtils.closeStream(fis);
            fs.close();
        }

        // Download san.txt from HDFS to the local D: drive
        @Test
        public void getFileFromHDFS() throws IOException, InterruptedException, URISyntaxException {
            // 1 Get the FileSystem object
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop001:8020"), conf, "root");

            // 2 Open the input stream (HDFS file)
            FSDataInputStream fis = fs.open(new Path("/san.txt"));

            // 3 Open the output stream (local file)
            FileOutputStream fos = new FileOutputStream(new File("d:/san.txt"));

            // 4 Copy from one stream to the other
            IOUtils.copyBytes(fis, fos, conf);

            // 5 Release resources
            IOUtils.closeStream(fos);
            IOUtils.closeStream(fis);
            fs.close();
        }

        // Download the first block
        @Test
        public void readFileSeek1() throws IOException, InterruptedException, URISyntaxException {
            // 1 Get the FileSystem object
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop001:8020"), conf, "root");

            // 2 Open the input stream
            FSDataInputStream fis = fs.open(new Path("/hadoop-2.7.2.tar.gz"));

            // 3 Open the output stream
            FileOutputStream fos = new FileOutputStream(new File("d:/hadoop-2.7.2.tar.gz.part1"));

            // 4 Copy only the first 128 MB. read() may return fewer bytes than
            //   the buffer size, so write only the bytes actually read.
            byte[] buf = new byte[1024];
            long remaining = 128L * 1024 * 1024;
            while (remaining > 0) {
                int len = fis.read(buf, 0, (int) Math.min(buf.length, remaining));
                if (len == -1) {
                    break; // end of file reached before 128 MB
                }
                fos.write(buf, 0, len);
                remaining -= len;
            }

            // 5 Release resources
            IOUtils.closeStream(fos);
            IOUtils.closeStream(fis);
            fs.close();
        }

        // Download the second block
        @SuppressWarnings("resource")
        @Test
        public void readFileSeek2() throws IOException, InterruptedException, URISyntaxException {
            // 1 Get the FileSystem object
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop001:8020"), conf, "root");

            // 2 Open the input stream
            FSDataInputStream fis = fs.open(new Path("/hadoop-2.7.2.tar.gz"));

            // 3 Set the starting offset for reading (skip the first 128 MB)
            fis.seek(1024 * 1024 * 128);

            // 4 Open the output stream
            FileOutputStream fos = new FileOutputStream(new File("d:/hadoop-2.7.2.tar.gz.part2"));

            // 5 Copy the rest of the file
            IOUtils.copyBytes(fis, fos, conf);

            // 6 Release resources
            IOUtils.closeStream(fos);
            IOUtils.closeStream(fis);
            fs.close();
        }
    }
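
After `readFileSeek1` and `readFileSeek2` have run, the two part files can be joined back into one archive to confirm that nothing was lost at the 128 MB boundary (1024 × 1024 × 128 = 134,217,728 bytes). The sketch below uses only the JDK; the class name `MergeParts` and the `.merged` output file name are hypothetical, chosen for illustration.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardOpenOption;

public class MergeParts {

    // Appends all bytes of 'part' to the end of 'target' (created if absent).
    static void append(Path part, Path target) throws IOException {
        try (OutputStream out = Files.newOutputStream(target,
                StandardOpenOption.CREATE, StandardOpenOption.APPEND)) {
            Files.copy(part, out);
        }
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical output name; the part names match the tests in this post.
        Path merged = Paths.get("d:/hadoop-2.7.2.tar.gz.merged");
        Files.deleteIfExists(merged);
        append(Paths.get("d:/hadoop-2.7.2.tar.gz.part1"), merged);
        append(Paths.get("d:/hadoop-2.7.2.tar.gz.part2"), merged);
        System.out.println("merged size: " + Files.size(merged));
    }
}
```

If the merged size equals the length of the file in HDFS and the archive extracts normally, the two seek-based downloads together cover the whole file.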

HDFSClient.java

    package org.example;

    import java.io.IOException;
    import java.net.URI;
    import java.net.URISyntaxException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.BlockLocation;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.LocatedFileStatus;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.fs.RemoteIterator;
    import org.junit.Test;

    public class HDFSClient {

        public static void main(String[] args) throws IOException, InterruptedException, URISyntaxException {
            Configuration conf = new Configuration();
            // conf.set("fs.defaultFS", "hdfs://hadoop001:8020");

            // 1 Get the HDFS client object
            // FileSystem fs = FileSystem.get(conf);
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop001:8020"), conf, "root");

            // 2 Create a directory path on HDFS
            fs.mkdirs(new Path("/0529/dashen/zhang"));

            // 3 Release resources
            fs.close();
            System.out.println("over");
        }

        // 1 Upload a file
        @Test
        public void testCopyFromLocalFile() throws IOException, InterruptedException, URISyntaxException {
            // 1 Get the FileSystem object
            Configuration conf = new Configuration();
            conf.set("dfs.replication", "2");
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop001:8020"), conf, "root");

            // 2 Call the upload API
            fs.copyFromLocalFile(new Path("d:/zhang.txt"), new Path("/zhang.txt"));

            // 3 Release resources
            fs.close();
        }

        // 2 Download a file
        @Test
        public void testCopyToLocalFile() throws IOException, InterruptedException, URISyntaxException {
            // 1 Get the FileSystem object
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop001:8020"), conf, "root");

            // 2 Run the download
            // fs.copyToLocalFile(new Path("/zhang.txt"), new Path("d:/zhang1.txt"));
            // Arguments: delSrc = false (keep the source), useRawLocalFileSystem = true
            fs.copyToLocalFile(false, new Path("/zhang.txt"), new Path("d:/zhangzhang.txt"), true);

            // 3 Release resources
            fs.close();
        }

        // 3 Delete a file or directory
        @Test
        public void testDelete() throws IOException, InterruptedException, URISyntaxException {
            // 1 Get the FileSystem object
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop001:8020"), conf, "root");

            // 2 Delete recursively (second argument = true)
            fs.delete(new Path("/0529"), true);

            // 3 Release resources
            fs.close();
        }

        // 4 Rename a file
        @Test
        public void testRename() throws IOException, InterruptedException, URISyntaxException {
            // 1 Get the FileSystem object
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop001:8020"), conf, "root");

            // 2 Run the rename
            fs.rename(new Path("/zhang.txt"), new Path("/zhang1.txt"));

            // 3 Release resources
            fs.close();
        }

        // 5 View file details
        @Test
        public void testListFiles() throws IOException, InterruptedException, URISyntaxException {
            // 1 Get the FileSystem object
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop001:8020"), conf, "root");

            // 2 List files recursively and print their details
            RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);
            while (listFiles.hasNext()) {
                LocatedFileStatus fileStatus = listFiles.next();

                // Print name, permission, length, and block information
                System.out.println(fileStatus.getPath().getName()); // file name
                System.out.println(fileStatus.getPermission());     // file permission
                System.out.println(fileStatus.getLen());            // file length

                // Hosts that store each block of the file
                BlockLocation[] blockLocations = fileStatus.getBlockLocations();
                for (BlockLocation blockLocation : blockLocations) {
                    String[] hosts = blockLocation.getHosts();
                    for (String host : hosts) {
                        System.out.println(host);
                    }
                }
                System.out.println("------test separator--------");
            }

            // 3 Release resources
            fs.close();
        }

        // 6 Tell files and directories apart
        @Test
        public void testListStatus() throws IOException, InterruptedException, URISyntaxException {
            // 1 Get the FileSystem object
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop001:8020"), conf, "root");

            // 2 Check each entry in the root directory
            FileStatus[] listStatus = fs.listStatus(new Path("/"));
            for (FileStatus fileStatus : listStatus) {
                if (fileStatus.isFile()) {
                    // File
                    System.out.println("f:" + fileStatus.getPath().getName());
                } else {
                    // Directory
                    System.out.println("d:" + fileStatus.getPath().getName());
                }
            }

            // 3 Release resources
            fs.close();
        }
    }

pom.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>

      <groupId>org.example</groupId>
      <artifactId>hdfs01</artifactId>
      <version>1.0-SNAPSHOT</version>

      <name>hdfs01</name>
      <!-- FIXME change it to the project's website -->
      <url>http://www.example.com</url>

      <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
      </properties>

      <dependencies>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>RELEASE</version>
        </dependency>
        <dependency>
          <groupId>org.apache.logging.log4j</groupId>
          <artifactId>log4j-core</artifactId>
          <version>2.8.2</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-common</artifactId>
          <version>2.7.2</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-client</artifactId>
          <version>2.7.2</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-hdfs</artifactId>
          <version>2.7.2</version>
        </dependency>
        <!-- <dependency>-->
        <!--   <groupId>jdk.tools</groupId>-->
        <!--   <artifactId>jdk.tools</artifactId>-->
        <!--   <version>1.8</version>-->
        <!--   <scope>system</scope>-->
        <!--   <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>-->
        <!-- </dependency>-->
      </dependencies>

      <build>
        <pluginManagement><!-- lock down plugins versions to avoid using Maven defaults (may be moved to parent pom) -->
          <plugins>
            <!-- clean lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#clean_Lifecycle -->
            <plugin>
              <artifactId>maven-clean-plugin</artifactId>
              <version>3.1.0</version>
            </plugin>
            <!-- default lifecycle, jar packaging: see https://maven.apache.org/ref/current/maven-core/default-bindings.html#Plugin_bindings_for_jar_packaging -->
            <plugin>
              <artifactId>maven-resources-plugin</artifactId>
              <version>3.0.2</version>
            </plugin>
            <plugin>
              <artifactId>maven-compiler-plugin</artifactId>
              <version>3.8.0</version>
            </plugin>
            <plugin>
              <artifactId>maven-surefire-plugin</artifactId>
              <version>2.22.1</version>
            </plugin>
            <plugin>
              <artifactId>maven-jar-plugin</artifactId>
              <version>3.0.2</version>
            </plugin>
            <plugin>
              <artifactId>maven-install-plugin</artifactId>
              <version>2.5.2</version>
            </plugin>
            <plugin>
              <artifactId>maven-deploy-plugin</artifactId>
              <version>2.8.2</version>
            </plugin>
            <!-- site lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#site_Lifecycle -->
            <plugin>
              <artifactId>maven-site-plugin</artifactId>
              <version>3.7.1</version>
            </plugin>
            <plugin>
              <artifactId>maven-project-info-reports-plugin</artifactId>
              <version>3.0.0</version>
            </plugin>
          </plugins>
        </pluginManagement>
      </build>
    </project>