Ceph Setup and Configuration: Three Nodes

| Hostname | IP | Disks | Roles |
| --- | --- | --- | --- |
| ceph01 | 10.10.20.55 | /dev/sd{a,b,c,d} | osd, mon, mgr |
| ceph02 | 10.10.20.66 | /dev/sd{a,b,c,d} | osd, mon, mds |
| ceph03 | 10.10.20.77 | /dev/sd{a,b,c,d} | osd, mon, mds |

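1. The Aliyun, Tsinghua, and 163 mirrors all caused problems during yum installation, so I ended up using an approach shared by another user: pull the yum repository down and serve it as a local mirror.

Download the yum repository for the Ceph Nautilus release (the latest at the time of writing) and install an FTP server: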

yum install -y vsftpd wget lrzsz

systemctl start vsftpd

Download the RPMs matching 14.2.8-0.el7, the version used throughout the rest of this guide, from these two directories:

noarch/ 14-Jan-2020 23:21

x86_64/ 14-Jan-2020 23:24

1.1 Download the RPM files of the matching version from the x86_64 directory (on the physical machine):

]# mkdir /var/ftp/pub/ceph

]# cd /var/ftp/pub/ceph

ceph]# mkdir ceph noarch

ceph]# ls

ceph noarch

Change into /var/ftp/pub/ceph/ceph and create x86_64.txt:

ceph]# vim x86_64.txt

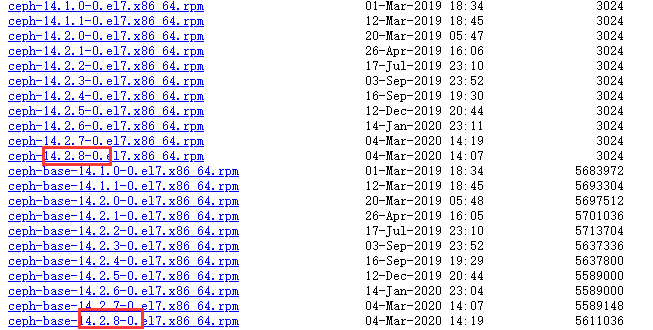

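Note: open the following page, select all of its text with the mouse, and copy it:

"https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/x86_64/"

Paste all of that text into x86_64.txt, as in the screenshot above.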

1.2 Write a script that pulls down the latest 14.2.8 packages

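[root@ceph01 ceph]# cat get.sh

    #!/bin/bash
    # $1 is the architecture directory, e.g. x86_64
    rpm_file=/var/ftp/pub/ceph/ceph/$1.txt
    rpm_netaddr=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/$1
    # Walk every whitespace-separated word of the pasted page text
    for i in `cat "$rpm_file"`
    do
        # Keep only rpm file names that belong to version 14.2.8-0
        if [[ $i =~ rpm ]] && [[ $i =~ 14.2.8-0 ]]
        then
            wget "$rpm_netaddr/$i"
        fi
    done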
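The selection step of get.sh can also be pulled out into a small filter, so the list of files can be inspected before anything is downloaded. The following is only a sketch under assumptions: `list_rpms` is a hypothetical helper name, and it assumes the pasted page text contains the file names as whitespace-separated words, as the script above does.

```shell
#!/bin/sh
# list_rpms LISTING VERSION
# Print every whitespace-separated word in LISTING that ends in ".rpm"
# and contains VERSION as a literal string.
# (Hypothetical helper; not part of the original get.sh.)
list_rpms() (
    listing=$1
    version=$2
    # tr puts one word per line; grep -F matches the version literally,
    # so the dots in "14.2.8-0" do not act as regex wildcards.
    tr -s ' \t' '\n\n' < "$listing" | grep '\.rpm$' | grep -F -- "$version"
)
```

For example, `list_rpms x86_64.txt 14.2.8-0` prints the candidate names one per line, and the output could then be fed to a `while read -r f; do wget ...; done` loop.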

1.3 Run the script to download the RPM files

ceph]# bash get.sh x86_64

Check the result:

ceph]# ls

ceph-14.2.8-0.el7.x86_64.rpm

ceph-base-14.2.8-0.el7.x86_64.rpm

ceph-common-14.2.8-0.el7.x86_64.rpm

ceph-debuginfo-14.2.8-0.el7.x86_64.rpm

cephfs-java-14.2.8-0.el7.x86_64.rpm

ceph-fuse-14.2.8-0.el7.x86_64.rpm

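1.4 Download the matching noarch RPM packages into the noarch directory in the same way. The text copied from the web page may be incomplete; add any missing file names yourself.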

[root@ceph01 ceph]# cd ../noarch/

You have new mail in /var/spool/mail/root

[root@ceph01 noarch]# cat get.sh

    #!/bin/bash
    # Usage: bash get.sh noarch
    rpm_file=/var/ftp/pub/ceph/noarch/$1.txt
    rpm_netaddr=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/$1
    # Walk every whitespace-separated word of the pasted page text
    for i in `cat "$rpm_file"`
    do
        # Keep only rpm file names that belong to version 14.2.8-0
        if [[ $i =~ rpm ]] && [[ $i =~ 14.2.8-0 ]]
        then
            wget "$rpm_netaddr/$i"
        fi
    done
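Because the copied listing can be incomplete, it may help to compare a list of expected file names against what actually landed in the directory. This is a minimal sketch; `missing_rpms` and the one-name-per-line list file are assumptions made for illustration and are not part of the original procedure.

```shell
#!/bin/sh
# missing_rpms NAMES_FILE DIR
# NAMES_FILE holds one expected file name per line; print each name
# that does not exist as a regular file in DIR.
# (Hypothetical helper for spotting gaps after a download.)
missing_rpms() (
    names=$1
    dir=$2
    # "|| [ -n "$name" ]" also handles a last line without a trailing newline.
    while IFS= read -r name || [ -n "$name" ]; do
        [ -n "$name" ] || continue              # skip blank lines
        [ -f "$dir/$name" ] || printf '%s\n' "$name"
    done < "$names"
)
```

Empty output means every listed package is present; any printed name still has to be downloaded by hand.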

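1.5 (Required on all nodes) Turn the downloaded RPM files into a local yum repository for the Ceph cluster VMs to use. (This local repo file is saved aside once installation finishes, and the new ceph.repo file points to the official repository.)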

[root@ceph01 ~]# cat /etc/yum.repos.d/ceph.repo

    [ceph]
    name=ceph repo
    baseurl=ftp://10.10.20.55/pub/ceph/ceph
    gpgcheck=0
    enabled=1

    [ceph-noarch]
    name=Ceph noarch packages
    baseurl=ftp://10.10.20.55/pub/ceph/noarch
    gpgcheck=0
    enabled=1
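Since the same repo file is needed on every node, it can be generated rather than typed by hand. The sketch below is illustrative only: `write_ceph_repo` and its parameters are hypothetical names, and it uses `enabled=1`, the spelling yum recognizes. A yum repository normally also needs a `repodata` directory (typically produced with `createrepo`); the original text does not show that step, so treat it as an assumption about this setup.

```shell
#!/bin/sh
# write_ceph_repo HOST OUTFILE
# Write a two-section yum repo file pointing at the FTP mirror on HOST.
# (Hypothetical helper; HOST and OUTFILE are illustrative parameters.)
write_ceph_repo() (
    host=$1
    out=$2
    cat > "$out" <<EOF
[ceph]
name=ceph repo
baseurl=ftp://$host/pub/ceph/ceph
gpgcheck=0
enabled=1

[ceph-noarch]
name=Ceph noarch packages
baseurl=ftp://$host/pub/ceph/noarch
gpgcheck=0
enabled=1
EOF
)
```

On a node this might be called as root with `write_ceph_repo 10.10.20.55 /etc/yum.repos.d/ceph.repo`, followed by `yum clean all` and `yum repolist` to confirm both sections are visible.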

2 Prepare the cluster environment

2.1 Confirm the hostnames and set up the hosts file

(Run on all nodes)

[root@ceph01 ~]# cat /etc/hosts

    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    10.10.20.55 ceph01
    10.10.20.66 ceph02
    10.10.20.77 ceph03
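When the same three entries have to be added on every node, an idempotent helper avoids duplicate lines if the step is repeated. This is a sketch, not the author's method; `add_host_entry` is a hypothetical name, and the target file is a parameter so the function can be tried on a scratch copy before touching /etc/hosts.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless some line already starts with IP
# and lists NAME among its host names.
# (Hypothetical helper; run as root when FILE is /etc/hosts.)
add_host_entry() (
    file=$1
    ip=$2
    name=$3
    # awk exits 0 only if a matching line was found.
    if ! awk -v ip="$ip" -v name="$name" '
            $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
            END { exit !found }
        ' "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
)
```

For instance, `add_host_entry /etc/hosts 10.10.20.55 ceph01` can be run any number of times and leaves a single ceph01 line.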

2.2 Create a user and set up passwordless login

(Run on all nodes) Create the user:

    useradd -d /home/admin -m admin
    echo "123456" | passwd admin --stdin
    # sudo privileges
    echo "admin ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/admin
    sudo chmod 0440 /etc/sudoers.d/admin

Set up passwordless login:

[root@ceph01 ~]# su - admin

[admin@ceph01 ~]$ ssh-keygen

[admin@ceph01 ~]$ ssh-copy-id admin@ceph01

[admin@ceph01 ~]$ ssh-copy-id admin@ceph02

[admin@ceph01 ~]$ ssh-copy-id admin@ceph03
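Before moving on it is worth confirming that key-based login really works for each node, since ceph-deploy relies on it. The sketch below assumes nothing beyond standard OpenSSH options; `check_passwordless` and the `SSH` override variable are hypothetical names introduced for illustration.

```shell
#!/bin/sh
# check_passwordless USER NODE...
# Print "ok NODE" or "FAIL NODE" depending on whether key-based login works.
# BatchMode=yes makes ssh fail instead of prompting for a password.
# SSH may be set to another command (for example a stub); it defaults to ssh.
check_passwordless() (
    user=$1
    shift
    status=0
    for node in "$@"; do
        if ${SSH:-ssh} -o BatchMode=yes -o ConnectTimeout=5 \
                "$user@$node" true </dev/null >/dev/null 2>&1; then
            echo "ok $node"
        else
            echo "FAIL $node"
            status=1
        fi
    done
    # The function's exit status is non-zero if any node failed.
    exit "$status"
)
```

Run as the admin user on ceph01, `check_passwordless admin ceph01 ceph02 ceph03` should report `ok` for all three nodes.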

2.3 Configure NTP time synchronization

(Run on all nodes)

    yum -y install ntpdate
    ntpdate -u cn.ntp.org.cn
    crontab -e
    # add the following entry in the editor:
    */20 * * * * ntpdate -u cn.ntp.org.cn > /dev/null 2>&1
    systemctl reload crond.service
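Editing the crontab interactively on three nodes is easy to get wrong, so the entry can instead be appended through a filter that leaves an existing crontab untouched when the line is already there. This is a sketch under assumptions; `ensure_cron_line` is a hypothetical helper name.

```shell
#!/bin/sh
# ensure_cron_line LINE
# Read an existing crontab on stdin and write it to stdout, appending LINE
# only if no identical line is already present.
# (Hypothetical helper; it never touches the real crontab by itself.)
ensure_cron_line() (
    line=$1
    current=$(cat)
    [ -n "$current" ] && printf '%s\n' "$current"
    # -x matches whole lines, -F treats LINE literally (it contains "*").
    if ! printf '%s\n' "$current" | grep -qxF -- "$line"; then
        printf '%s\n' "$line"
    fi
)
```

A possible invocation is `crontab -l 2>/dev/null | ensure_cron_line '*/20 * * * * ntpdate -u cn.ntp.org.cn > /dev/null 2>&1' | crontab -`, which installs the result as the new crontab.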

2.4 Prepare the disks (on all nodes)

Add three new disks to each VM:

/dev/sdb is used as the journal disk

/dev/sdc and /dev/sdd are used as data disks
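A quick way to confirm that the three new disks are visible on a node is to compare the kernel's device list with the expected names. The helper below is only a sketch; `missing_disks` is a hypothetical name, and it reads the device names from standard input so it can be fed by `lsblk`.

```shell
#!/bin/sh
# missing_disks NAME...
# Read device names on stdin (one per line, as printed by
# `lsblk -dn -o NAME`) and print each requested NAME that is absent.
# (Hypothetical helper for a pre-flight check.)
missing_disks() (
    present=$(cat)
    for want in "$@"; do
        # -x requires the whole line to match, so "sdb" does not match "sdb1".
        printf '%s\n' "$present" | grep -qx -- "$want" || echo "$want"
    done
)
```

On each node, `lsblk -dn -o NAME | missing_disks sdb sdc sdd` should print nothing if all three disks were attached.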

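3 Deploy the cluster

Install the deployment tool ceph-deploy

Create the Ceph cluster

Prepare the journal disk partitions

Create the OSD storage

Check the Ceph status to verify

3.1 Pre-deployment installation

Run on all nodes: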

]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

]# yum clean all;yum repolist

]# yum -y install ceph-release

]# rm -rf /etc/yum.repos.d/ceph.repo.rpmnew

On ceph01:

Create a working directory and install ceph-deploy:

]# su - admin

]$ mkdir ceph-cluster

]$ cd ceph-cluster/

]$ sudo yum install ceph-deploy python-pip python-setuptools -y

]$ ceph-deploy --version

2.0.1

Start deploying the cluster:

[admin@ceph01 ceph-cluster]$ ceph-deploy new ceph01 ceph02 ceph03

[admin@ceph01 ceph-cluster]$ ls

ceph.conf ceph-deploy-ceph.log ceph.mon.keyring

[admin@ceph01 ceph-cluster]$ cat ceph.conf

[global]

fsid = b1b27e22-fc76-4a56-8a45-30bb87f6207c

mon_initial_members = ceph01, ceph02, ceph03

mon_host = 10.10.20.55,10.10.20.66,10.10.20.77

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx


Edit the ceph.conf configuration file and add the following settings:

    public network = 10.10.20.0/24
    osd pool default size = 3
    osd pool default min size = 2
    osd pool default pg num = 128
    osd pool default pgp num = 128
    osd pool default crush rule = 0
    osd crush chooseleaf type = 1
    max open files = 131072
    ms bind ipv6 = false

    [mon]
    mon clock drift allowed = 10
    mon clock drift warn backoff = 30
    mon osd full ratio = .95
    mon osd nearfull ratio = .85
    mon osd down out interval = 600
    mon osd report timeout = 300
    mon allow pool delete = true

    [osd]
    osd recovery max active = 3
    osd max backfills = 5
    osd max scrubs = 2
    osd mkfs type = xfs
    osd mkfs options xfs = -f -i size=1024
    osd mount options xfs = rw,noatime,inode64,logbsize=256k,delaylog
    filestore max sync interval = 5
    osd op threads = 2
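After hand-editing ceph.conf, a small sanity check can catch a mistyped replication setting before the file is pushed to the nodes. The sketch below is an illustration only: `conf_get` and `check_replication` are hypothetical helpers, and they match option names exactly as written (with spaces), which is a simplification of how Ceph itself reads the file.

```shell
#!/bin/sh
# conf_get FILE SECTION KEY
# Print the value of "KEY = value" inside [SECTION] of an ini-style file.
conf_get() (
    awk -v section="$2" -v key="$3" '
        /^\[.*\]$/ { current = substr($0, 2, length($0) - 2); next }
        current == section {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1); v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k); gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { print v; exit }
        }
    ' "$1"
)

# check_replication FILE
# Succeed only if both replication settings exist in [global] and
# "osd pool default min size" does not exceed "osd pool default size".
check_replication() (
    size=$(conf_get "$1" global "osd pool default size")
    min=$(conf_get "$1" global "osd pool default min size")
    [ -n "$size" ] && [ -n "$min" ] && [ "$min" -le "$size" ]
)
```

With the settings above, `check_replication ceph.conf && echo ok` would be expected to print `ok`, because min size 2 is not larger than size 3.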

3.2 Install the Ceph packages

[admin@ceph01 ceph-cluster]$ sudo yum install -y ceph ceph-radosgw

[admin@ceph02 ~]$ sudo yum install -y ceph ceph-radosgw

[admin@ceph03 ~]$ sudo yum install -y ceph ceph-radosgw

Check the Ceph version:

[admin@ceph01 ceph-cluster]$ ceph --version

ceph version 14.2.8 (2d095e947a02261ce61424021bb43bd3022d35cb) nautilus (stable)

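Install the packages on all nodes (run from ceph01). Before running the command, check whether /etc/yum.repos.d/ contains ceph.repo.rpmnew or ceph.repo.rpmsave.

Because of network or mirror problems the command may not succeed the first time; run it again as often as needed, and delete the ceph.repo.rpmnew and ceph.repo.rpmsave files before each attempt.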

[admin@ceph01 ceph-cluster]$ ceph-deploy install ceph01 ceph02 ceph03
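The "delete the leftover files, then try again" routine described above can be wrapped in a small retry loop. This is a sketch rather than part of the original procedure: `retry_install` and the `SUDO` variable are hypothetical, and the cleanup only covers the node where the function runs, so the other nodes would still need the same files removed.

```shell
#!/bin/sh
# retry_install REPO_DIR TRIES COMMAND...
# Before each attempt, delete ceph.repo.rpmnew and ceph.repo.rpmsave from
# REPO_DIR, then run COMMAND. Stop at the first success or after TRIES attempts.
# Set SUDO=sudo if the current user cannot write to REPO_DIR.
retry_install() (
    repo_dir=$1
    tries=$2
    shift 2
    attempt=1
    while [ "$attempt" -le "$tries" ]; do
        ${SUDO:-} rm -f "$repo_dir/ceph.repo.rpmnew" "$repo_dir/ceph.repo.rpmsave"
        if "$@"; then
            exit 0
        fi
        echo "attempt $attempt of $tries failed" >&2
        attempt=$((attempt + 1))
    done
    exit 1
)
```

One possible call is `SUDO=sudo retry_install /etc/yum.repos.d 3 ceph-deploy install ceph01 ceph02 ceph03`, which gives up after three failed attempts.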

Deploy the initial monitors and gather the keys:

[admin@ceph01 ceph-cluster]$ ceph-deploy mon create-initial

[admin@ceph01 ceph-cluster]$ ceph-deploy mon create-initial [ceph_deploy.conf][DEBUG ] found configuration file at: /home/admin/.cephdeploy.conf [ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy mon create-initial [ceph_deploy.cli][INFO ] ceph-deploy options: [ceph_deploy.cli][INFO ] username : None [ceph_deploy.cli][INFO ] verbose : False [ceph_deploy.cli][INFO ] overwrite_conf : False [ceph_deploy.cli][INFO ] subcommand : create-initial [ceph_deploy.cli][INFO ] quiet : False [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f9d9dce1d88> [ceph_deploy.cli][INFO ] cluster : ceph [ceph_deploy.cli][INFO ] func : <function mon at 0x7f9d9df45410> [ceph_deploy.cli][INFO ] ceph_conf : None [ceph_deploy.cli][INFO ] default_release : False [ceph_deploy.cli][INFO ] keyrings : None [ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts ceph01 ceph02 ceph03 [ceph_deploy.mon][DEBUG ] detecting platform for host ceph01 ... [ceph01][DEBUG ] connection detected need for sudo [ceph01][DEBUG ] connected to host: ceph01 [ceph01][DEBUG ] detect platform information from remote host [ceph01][DEBUG ] detect machine type [ceph01][DEBUG ] find the location of an executable [ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.7.1908 Core [ceph01][DEBUG ] determining if provided host has same hostname in remote [ceph01][DEBUG ] get remote short hostname [ceph01][DEBUG ] deploying mon to ceph01 [ceph01][DEBUG ] get remote short hostname [ceph01][DEBUG ] remote hostname: ceph01 [ceph01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf [ceph01][DEBUG ] create the mon path if it does not exist [ceph01][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph01/done [ceph01][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-ceph01/done [ceph01][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-ceph01.mon.keyring [ceph01][DEBUG ] create the monitor keyring file [ceph01][INFO ] Running command: sudo ceph-mon --cluster ceph 
--mkfs -i ceph01 --keyring /var/lib/ceph/tmp/ceph-ceph01.mon.keyring --setuser 167 --setgroup 167 [ceph01][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-ceph01.mon.keyring [ceph01][DEBUG ] create a done file to avoid re-doing the mon deployment [ceph01][DEBUG ] create the init path if it does not exist [ceph01][INFO ] Running command: sudo systemctl enable ceph.target [ceph01][INFO ] Running command: sudo systemctl enable ceph-mon@ceph01 [ceph01][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph01.service to /usr/lib/systemd/system/ceph-mon@.service. [ceph01][INFO ] Running command: sudo systemctl start ceph-mon@ceph01 [ceph01][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph01.asok mon_status [ceph01][DEBUG ] ******************************************************************************** [ceph01][DEBUG ] status for monitor: mon.ceph01 [ceph01][DEBUG ] { [ceph01][DEBUG ] "election_epoch": 0, [ceph01][DEBUG ] "extra_probe_peers": [ [ceph01][DEBUG ] { [ceph01][DEBUG ] "addrvec": [ [ceph01][DEBUG ] { [ceph01][DEBUG ] "addr": "10.10.20.66:3300", [ceph01][DEBUG ] "nonce": 0, [ceph01][DEBUG ] "type": "v2" [ceph01][DEBUG ] }, [ceph01][DEBUG ] { [ceph01][DEBUG ] "addr": "10.10.20.66:6789", [ceph01][DEBUG ] "nonce": 0, [ceph01][DEBUG ] "type": "v1" [ceph01][DEBUG ] } [ceph01][DEBUG ] ] [ceph01][DEBUG ] }, [ceph01][DEBUG ] { [ceph01][DEBUG ] "addrvec": [ [ceph01][DEBUG ] { [ceph01][DEBUG ] "addr": "10.10.20.77:3300", [ceph01][DEBUG ] "nonce": 0, [ceph01][DEBUG ] "type": "v2" [ceph01][DEBUG ] }, [ceph01][DEBUG ] { [ceph01][DEBUG ] "addr": "10.10.20.77:6789", [ceph01][DEBUG ] "nonce": 0, [ceph01][DEBUG ] "type": "v1" [ceph01][DEBUG ] } [ceph01][DEBUG ] ] [ceph01][DEBUG ] } [ceph01][DEBUG ] ], [ceph01][DEBUG ] "feature_map": { [ceph01][DEBUG ] "mon": [ [ceph01][DEBUG ] { [ceph01][DEBUG ] "features": "0x3ffddff8ffacffff", [ceph01][DEBUG ] "num": 1, [ceph01][DEBUG ] "release": "luminous" 
[ceph01][DEBUG ] } [ceph01][DEBUG ] ] [ceph01][DEBUG ] }, [ceph01][DEBUG ] "features": { [ceph01][DEBUG ] "quorum_con": "0", [ceph01][DEBUG ] "quorum_mon": [], [ceph01][DEBUG ] "required_con": "0", [ceph01][DEBUG ] "required_mon": [] [ceph01][DEBUG ] }, [ceph01][DEBUG ] "monmap": { [ceph01][DEBUG ] "created": "2020-04-02 14:12:49.642933", [ceph01][DEBUG ] "epoch": 0, [ceph01][DEBUG ] "features": { [ceph01][DEBUG ] "optional": [], [ceph01][DEBUG ] "persistent": [] [ceph01][DEBUG ] }, [ceph01][DEBUG ] "fsid": "b1b27e22-fc76-4a56-8a45-30bb87f6207c", [ceph01][DEBUG ] "min_mon_release": 0, [ceph01][DEBUG ] "min_mon_release_name": "unknown", [ceph01][DEBUG ] "modified": "2020-04-02 14:12:49.642933", [ceph01][DEBUG ] "mons": [ [ceph01][DEBUG ] { [ceph01][DEBUG ] "addr": "10.10.20.55:6789/0", [ceph01][DEBUG ] "name": "ceph01", [ceph01][DEBUG ] "public_addr": "10.10.20.55:6789/0", [ceph01][DEBUG ] "public_addrs": { [ceph01][DEBUG ] "addrvec": [ [ceph01][DEBUG ] { [ceph01][DEBUG ] "addr": "10.10.20.55:3300", [ceph01][DEBUG ] "nonce": 0, [ceph01][DEBUG ] "type": "v2" [ceph01][DEBUG ] }, [ceph01][DEBUG ] { [ceph01][DEBUG ] "addr": "10.10.20.55:6789", [ceph01][DEBUG ] "nonce": 0, [ceph01][DEBUG ] "type": "v1" [ceph01][DEBUG ] } [ceph01][DEBUG ] ] [ceph01][DEBUG ] }, [ceph01][DEBUG ] "rank": 0 [ceph01][DEBUG ] }, [ceph01][DEBUG ] { [ceph01][DEBUG ] "addr": "0.0.0.0:0/1", [ceph01][DEBUG ] "name": "ceph02", [ceph01][DEBUG ] "public_addr": "0.0.0.0:0/1", [ceph01][DEBUG ] "public_addrs": { [ceph01][DEBUG ] "addrvec": [ [ceph01][DEBUG ] { [ceph01][DEBUG ] "addr": "0.0.0.0:0", [ceph01][DEBUG ] "nonce": 1, [ceph01][DEBUG ] "type": "v1" [ceph01][DEBUG ] } [ceph01][DEBUG ] ] [ceph01][DEBUG ] }, [ceph01][DEBUG ] "rank": 1 [ceph01][DEBUG ] }, [ceph01][DEBUG ] { [ceph01][DEBUG ] "addr": "0.0.0.0:0/2", [ceph01][DEBUG ] "name": "ceph03", [ceph01][DEBUG ] "public_addr": "0.0.0.0:0/2", [ceph01][DEBUG ] "public_addrs": { [ceph01][DEBUG ] "addrvec": [ [ceph01][DEBUG ] { [ceph01][DEBUG ] "addr": 
"0.0.0.0:0", [ceph01][DEBUG ] "nonce": 2, [ceph01][DEBUG ] "type": "v1" [ceph01][DEBUG ] } [ceph01][DEBUG ] ] [ceph01][DEBUG ] }, [ceph01][DEBUG ] "rank": 2 [ceph01][DEBUG ] } [ceph01][DEBUG ] ] [ceph01][DEBUG ] }, [ceph01][DEBUG ] "name": "ceph01", [ceph01][DEBUG ] "outside_quorum": [ [ceph01][DEBUG ] "ceph01" [ceph01][DEBUG ] ], [ceph01][DEBUG ] "quorum": [], [ceph01][DEBUG ] "rank": 0, [ceph01][DEBUG ] "state": "probing", [ceph01][DEBUG ] "sync_provider": [] [ceph01][DEBUG ] } [ceph01][DEBUG ] ******************************************************************************** [ceph01][INFO ] monitor: mon.ceph01 is running [ceph01][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph01.asok mon_status [ceph_deploy.mon][DEBUG ] detecting platform for host ceph02 ... [ceph02][DEBUG ] connection detected need for sudo [ceph02][DEBUG ] connected to host: ceph02 [ceph02][DEBUG ] detect platform information from remote host [ceph02][DEBUG ] detect machine type [ceph02][DEBUG ] find the location of an executable [ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.7.1908 Core [ceph02][DEBUG ] determining if provided host has same hostname in remote [ceph02][DEBUG ] get remote short hostname [ceph02][DEBUG ] deploying mon to ceph02 [ceph02][DEBUG ] get remote short hostname [ceph02][DEBUG ] remote hostname: ceph02 [ceph02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf [ceph02][DEBUG ] create the mon path if it does not exist [ceph02][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph02/done [ceph02][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-ceph02/done [ceph02][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-ceph02.mon.keyring [ceph02][DEBUG ] create the monitor keyring file [ceph02][INFO ] Running command: sudo ceph-mon --cluster ceph --mkfs -i ceph02 --keyring /var/lib/ceph/tmp/ceph-ceph02.mon.keyring --setuser 167 --setgroup 167 [ceph02][INFO ] unlinking keyring file 
/var/lib/ceph/tmp/ceph-ceph02.mon.keyring [ceph02][DEBUG ] create a done file to avoid re-doing the mon deployment [ceph02][DEBUG ] create the init path if it does not exist [ceph02][INFO ] Running command: sudo systemctl enable ceph.target [ceph02][INFO ] Running command: sudo systemctl enable ceph-mon@ceph02 [ceph02][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph02.service to /usr/lib/systemd/system/ceph-mon@.service. [ceph02][INFO ] Running command: sudo systemctl start ceph-mon@ceph02 [ceph02][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph02.asok mon_status [ceph02][DEBUG ] ******************************************************************************** [ceph02][DEBUG ] status for monitor: mon.ceph02 [ceph02][DEBUG ] { [ceph02][DEBUG ] "election_epoch": 0, [ceph02][DEBUG ] "extra_probe_peers": [ [ceph02][DEBUG ] { [ceph02][DEBUG ] "addrvec": [ [ceph02][DEBUG ] { [ceph02][DEBUG ] "addr": "10.10.20.55:3300", [ceph02][DEBUG ] "nonce": 0, [ceph02][DEBUG ] "type": "v2" [ceph02][DEBUG ] }, [ceph02][DEBUG ] { [ceph02][DEBUG ] "addr": "10.10.20.55:6789", [ceph02][DEBUG ] "nonce": 0, [ceph02][DEBUG ] "type": "v1" [ceph02][DEBUG ] } [ceph02][DEBUG ] ] [ceph02][DEBUG ] }, [ceph02][DEBUG ] { [ceph02][DEBUG ] "addrvec": [ [ceph02][DEBUG ] { [ceph02][DEBUG ] "addr": "10.10.20.77:3300", [ceph02][DEBUG ] "nonce": 0, [ceph02][DEBUG ] "type": "v2" [ceph02][DEBUG ] }, [ceph02][DEBUG ] { [ceph02][DEBUG ] "addr": "10.10.20.77:6789", [ceph02][DEBUG ] "nonce": 0, [ceph02][DEBUG ] "type": "v1" [ceph02][DEBUG ] } [ceph02][DEBUG ] ] [ceph02][DEBUG ] } [ceph02][DEBUG ] ], [ceph02][DEBUG ] "feature_map": { [ceph02][DEBUG ] "mon": [ [ceph02][DEBUG ] { [ceph02][DEBUG ] "features": "0x3ffddff8ffacffff", [ceph02][DEBUG ] "num": 1, [ceph02][DEBUG ] "release": "luminous" [ceph02][DEBUG ] } [ceph02][DEBUG ] ] [ceph02][DEBUG ] }, [ceph02][DEBUG ] "features": { [ceph02][DEBUG ] "quorum_con": "0", [ceph02][DEBUG ] 
"quorum_mon": [], [ceph02][DEBUG ] "required_con": "0", [ceph02][DEBUG ] "required_mon": [] [ceph02][DEBUG ] }, [ceph02][DEBUG ] "monmap": { [ceph02][DEBUG ] "created": "2020-04-02 14:12:52.614709", [ceph02][DEBUG ] "epoch": 0, [ceph02][DEBUG ] "features": { [ceph02][DEBUG ] "optional": [], [ceph02][DEBUG ] "persistent": [] [ceph02][DEBUG ] }, [ceph02][DEBUG ] "fsid": "b1b27e22-fc76-4a56-8a45-30bb87f6207c", [ceph02][DEBUG ] "min_mon_release": 0, [ceph02][DEBUG ] "min_mon_release_name": "unknown", [ceph02][DEBUG ] "modified": "2020-04-02 14:12:52.614709", [ceph02][DEBUG ] "mons": [ [ceph02][DEBUG ] { [ceph02][DEBUG ] "addr": "10.10.20.66:6789/0", [ceph02][DEBUG ] "name": "ceph02", [ceph02][DEBUG ] "public_addr": "10.10.20.66:6789/0", [ceph02][DEBUG ] "public_addrs": { [ceph02][DEBUG ] "addrvec": [ [ceph02][DEBUG ] { [ceph02][DEBUG ] "addr": "10.10.20.66:3300", [ceph02][DEBUG ] "nonce": 0, [ceph02][DEBUG ] "type": "v2" [ceph02][DEBUG ] }, [ceph02][DEBUG ] { [ceph02][DEBUG ] "addr": "10.10.20.66:6789", [ceph02][DEBUG ] "nonce": 0, [ceph02][DEBUG ] "type": "v1" [ceph02][DEBUG ] } [ceph02][DEBUG ] ] [ceph02][DEBUG ] }, [ceph02][DEBUG ] "rank": 0 [ceph02][DEBUG ] }, [ceph02][DEBUG ] { [ceph02][DEBUG ] "addr": "0.0.0.0:0/1", [ceph02][DEBUG ] "name": "ceph01", [ceph02][DEBUG ] "public_addr": "0.0.0.0:0/1", [ceph02][DEBUG ] "public_addrs": { [ceph02][DEBUG ] "addrvec": [ [ceph02][DEBUG ] { [ceph02][DEBUG ] "addr": "0.0.0.0:0", [ceph02][DEBUG ] "nonce": 1, [ceph02][DEBUG ] "type": "v1" [ceph02][DEBUG ] } [ceph02][DEBUG ] ] [ceph02][DEBUG ] }, [ceph02][DEBUG ] "rank": 1 [ceph02][DEBUG ] }, [ceph02][DEBUG ] { [ceph02][DEBUG ] "addr": "0.0.0.0:0/2", [ceph02][DEBUG ] "name": "ceph03", [ceph02][DEBUG ] "public_addr": "0.0.0.0:0/2", [ceph02][DEBUG ] "public_addrs": { [ceph02][DEBUG ] "addrvec": [ [ceph02][DEBUG ] { [ceph02][DEBUG ] "addr": "0.0.0.0:0", [ceph02][DEBUG ] "nonce": 2, [ceph02][DEBUG ] "type": "v1" [ceph02][DEBUG ] } [ceph02][DEBUG ] ] [ceph02][DEBUG ] }, 
[ceph02][DEBUG ] "rank": 2 [ceph02][DEBUG ] } [ceph02][DEBUG ] ] [ceph02][DEBUG ] }, [ceph02][DEBUG ] "name": "ceph02", [ceph02][DEBUG ] "outside_quorum": [ [ceph02][DEBUG ] "ceph02" [ceph02][DEBUG ] ], [ceph02][DEBUG ] "quorum": [], [ceph02][DEBUG ] "rank": 0, [ceph02][DEBUG ] "state": "probing", [ceph02][DEBUG ] "sync_provider": [] [ceph02][DEBUG ] } [ceph02][DEBUG ] ******************************************************************************** [ceph02][INFO ] monitor: mon.ceph02 is running [ceph02][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph02.asok mon_status [ceph_deploy.mon][DEBUG ] detecting platform for host ceph03 ... [ceph03][DEBUG ] connection detected need for sudo [ceph03][DEBUG ] connected to host: ceph03 [ceph03][DEBUG ] detect platform information from remote host [ceph03][DEBUG ] detect machine type [ceph03][DEBUG ] find the location of an executable [ceph_deploy.mon][INFO ] distro info: CentOS Linux 7.7.1908 Core [ceph03][DEBUG ] determining if provided host has same hostname in remote [ceph03][DEBUG ] get remote short hostname [ceph03][DEBUG ] deploying mon to ceph03 [ceph03][DEBUG ] get remote short hostname [ceph03][DEBUG ] remote hostname: ceph03 [ceph03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf [ceph03][DEBUG ] create the mon path if it does not exist [ceph03][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph03/done [ceph03][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-ceph03/done [ceph03][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-ceph03.mon.keyring [ceph03][DEBUG ] create the monitor keyring file [ceph03][INFO ] Running command: sudo ceph-mon --cluster ceph --mkfs -i ceph03 --keyring /var/lib/ceph/tmp/ceph-ceph03.mon.keyring --setuser 167 --setgroup 167 [ceph03][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-ceph03.mon.keyring [ceph03][DEBUG ] create a done file to avoid re-doing the mon deployment [ceph03][DEBUG ] create the init 
path if it does not exist [ceph03][INFO ] Running command: sudo systemctl enable ceph.target [ceph03][INFO ] Running command: sudo systemctl enable ceph-mon@ceph03 [ceph03][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph03.service to /usr/lib/systemd/system/ceph-mon@.service. [ceph03][INFO ] Running command: sudo systemctl start ceph-mon@ceph03 [ceph03][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph03.asok mon_status [ceph03][DEBUG ] ******************************************************************************** [ceph03][DEBUG ] status for monitor: mon.ceph03 [ceph03][DEBUG ] { [ceph03][DEBUG ] "election_epoch": 1, [ceph03][DEBUG ] "extra_probe_peers": [ [ceph03][DEBUG ] { [ceph03][DEBUG ] "addrvec": [ [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.55:3300", [ceph03][DEBUG ] "nonce": 0, [ceph03][DEBUG ] "type": "v2" [ceph03][DEBUG ] }, [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.55:6789", [ceph03][DEBUG ] "nonce": 0, [ceph03][DEBUG ] "type": "v1" [ceph03][DEBUG ] } [ceph03][DEBUG ] ] [ceph03][DEBUG ] }, [ceph03][DEBUG ] { [ceph03][DEBUG ] "addrvec": [ [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.66:3300", [ceph03][DEBUG ] "nonce": 0, [ceph03][DEBUG ] "type": "v2" [ceph03][DEBUG ] }, [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.66:6789", [ceph03][DEBUG ] "nonce": 0, [ceph03][DEBUG ] "type": "v1" [ceph03][DEBUG ] } [ceph03][DEBUG ] ] [ceph03][DEBUG ] } [ceph03][DEBUG ] ], [ceph03][DEBUG ] "feature_map": { [ceph03][DEBUG ] "mon": [ [ceph03][DEBUG ] { [ceph03][DEBUG ] "features": "0x3ffddff8ffacffff", [ceph03][DEBUG ] "num": 1, [ceph03][DEBUG ] "release": "luminous" [ceph03][DEBUG ] } [ceph03][DEBUG ] ] [ceph03][DEBUG ] }, [ceph03][DEBUG ] "features": { [ceph03][DEBUG ] "quorum_con": "0", [ceph03][DEBUG ] "quorum_mon": [], [ceph03][DEBUG ] "required_con": "0", [ceph03][DEBUG ] "required_mon": [] [ceph03][DEBUG ] }, [ceph03][DEBUG ] "monmap": { 
[ceph03][DEBUG ] "created": "2020-04-02 14:12:55.661627", [ceph03][DEBUG ] "epoch": 0, [ceph03][DEBUG ] "features": { [ceph03][DEBUG ] "optional": [], [ceph03][DEBUG ] "persistent": [] [ceph03][DEBUG ] }, [ceph03][DEBUG ] "fsid": "b1b27e22-fc76-4a56-8a45-30bb87f6207c", [ceph03][DEBUG ] "min_mon_release": 0, [ceph03][DEBUG ] "min_mon_release_name": "unknown", [ceph03][DEBUG ] "modified": "2020-04-02 14:12:55.661627", [ceph03][DEBUG ] "mons": [ [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.55:6789/0", [ceph03][DEBUG ] "name": "ceph01", [ceph03][DEBUG ] "public_addr": "10.10.20.55:6789/0", [ceph03][DEBUG ] "public_addrs": { [ceph03][DEBUG ] "addrvec": [ [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.55:3300", [ceph03][DEBUG ] "nonce": 0, [ceph03][DEBUG ] "type": "v2" [ceph03][DEBUG ] }, [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.55:6789", [ceph03][DEBUG ] "nonce": 0, [ceph03][DEBUG ] "type": "v1" [ceph03][DEBUG ] } [ceph03][DEBUG ] ] [ceph03][DEBUG ] }, [ceph03][DEBUG ] "rank": 0 [ceph03][DEBUG ] }, [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.66:6789/0", [ceph03][DEBUG ] "name": "ceph02", [ceph03][DEBUG ] "public_addr": "10.10.20.66:6789/0", [ceph03][DEBUG ] "public_addrs": { [ceph03][DEBUG ] "addrvec": [ [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.66:3300", [ceph03][DEBUG ] "nonce": 0, [ceph03][DEBUG ] "type": "v2" [ceph03][DEBUG ] }, [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.66:6789", [ceph03][DEBUG ] "nonce": 0, [ceph03][DEBUG ] "type": "v1" [ceph03][DEBUG ] } [ceph03][DEBUG ] ] [ceph03][DEBUG ] }, [ceph03][DEBUG ] "rank": 1 [ceph03][DEBUG ] }, [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.77:6789/0", [ceph03][DEBUG ] "name": "ceph03", [ceph03][DEBUG ] "public_addr": "10.10.20.77:6789/0", [ceph03][DEBUG ] "public_addrs": { [ceph03][DEBUG ] "addrvec": [ [ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.77:3300", [ceph03][DEBUG ] "nonce": 0, [ceph03][DEBUG ] "type": "v2" [ceph03][DEBUG ] }, 
[ceph03][DEBUG ] { [ceph03][DEBUG ] "addr": "10.10.20.77:6789", [ceph03][DEBUG ] "nonce": 0, [ceph03][DEBUG ] "type": "v1" [ceph03][DEBUG ] } [ceph03][DEBUG ] ] [ceph03][DEBUG ] }, [ceph03][DEBUG ] "rank": 2 [ceph03][DEBUG ] } [ceph03][DEBUG ] ] [ceph03][DEBUG ] }, [ceph03][DEBUG ] "name": "ceph03", [ceph03][DEBUG ] "outside_quorum": [], [ceph03][DEBUG ] "quorum": [], [ceph03][DEBUG ] "rank": 2, [ceph03][DEBUG ] "state": "electing", [ceph03][DEBUG ] "sync_provider": [] [ceph03][DEBUG ] } [ceph03][DEBUG ] ******************************************************************************** [ceph03][INFO ] monitor: mon.ceph03 is running [ceph03][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph03.asok mon_status [ceph_deploy.mon][INFO ] processing monitor mon.ceph01 [ceph01][DEBUG ] connection detected need for sudo [ceph01][DEBUG ] connected to host: ceph01 [ceph01][DEBUG ] detect platform information from remote host [ceph01][DEBUG ] detect machine type [ceph01][DEBUG ] find the location of an executable [ceph01][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph01.asok mon_status [ceph_deploy.mon][WARNIN] mon.ceph01 monitor is not yet in quorum, tries left: 5 [ceph_deploy.mon][WARNIN] waiting 5 seconds before retrying [ceph01][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph01.asok mon_status [ceph_deploy.mon][INFO ] mon.ceph01 monitor has reached quorum! [ceph_deploy.mon][INFO ] processing monitor mon.ceph02 [ceph02][DEBUG ] connection detected need for sudo [ceph02][DEBUG ] connected to host: ceph02 [ceph02][DEBUG ] detect platform information from remote host [ceph02][DEBUG ] detect machine type [ceph02][DEBUG ] find the location of an executable [ceph02][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph02.asok mon_status [ceph_deploy.mon][INFO ] mon.ceph02 monitor has reached quorum! 
[ceph_deploy.mon][INFO ] processing monitor mon.ceph03 [ceph03][DEBUG ] connection detected need for sudo [ceph03][DEBUG ] connected to host: ceph03 [ceph03][DEBUG ] detect platform information from remote host [ceph03][DEBUG ] detect machine type [ceph03][DEBUG ] find the location of an executable [ceph03][INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph03.asok mon_status [ceph_deploy.mon][INFO ] mon.ceph03 monitor has reached quorum! [ceph_deploy.mon][INFO ] all initial monitors are running and have formed quorum [ceph_deploy.mon][INFO ] Running gatherkeys... [ceph_deploy.gatherkeys][INFO ] Storing keys in temp directory /tmp/tmp5YLF2A [ceph01][DEBUG ] connection detected need for sudo [ceph01][DEBUG ] connected to host: ceph01 [ceph01][DEBUG ] detect platform information from remote host [ceph01][DEBUG ] detect machine type [ceph01][DEBUG ] get remote short hostname [ceph01][DEBUG ] fetch remote file [ceph01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --admin-daemon=/var/run/ceph/ceph-mon.ceph01.asok mon_status [ceph01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get client.admin [ceph01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get client.bootstrap-mds [ceph01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get client.bootstrap-mgr [ceph01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get client.bootstrap-osd [ceph01][INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. 
--keyring=/var/lib/ceph/mon/ceph-ceph01/keyring auth get client.bootstrap-rgw [ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring [ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring [ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring [ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists [ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring [ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring [ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmp5YLF2A
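The output above is long, and the lines that matter are the three "has reached quorum!" messages and the final "have formed quorum" line. A short check against the log file that ceph-deploy leaves in the working directory (ceph-deploy-ceph.log) might look like the sketch below. The function names are hypothetical, and since that log appears to accumulate across runs, earlier attempts may be counted as well.

```shell
#!/bin/sh
# mons_formed_quorum LOGFILE
# Succeed if the log contains the line reporting that all initial
# monitors are running and have formed a quorum.
mons_formed_quorum() {
    grep -q 'all initial monitors are running and have formed quorum' "$1"
}

# quorum_count LOGFILE
# Print how many "monitor has reached quorum!" lines the log contains.
quorum_count() {
    grep -c 'monitor has reached quorum!' "$1"
}
```

For a single successful run on this three-node cluster, `quorum_count ceph-deploy-ceph.log` would be expected to report 3.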

After this step, the following keyrings appear in the current directory:

[admin@ceph01 ceph-cluster]$ ls -l

    total 284
    -rw------- 1 admin admin 113 Apr 2 14:13 ceph.bootstrap-mds.keyring
    -rw------- 1 admin admin 113 Apr 2 14:13 ceph.bootstrap-mgr.keyring
    -rw------- 1 admin admin 113 Apr 2 14:13 ceph.bootstrap-osd.keyring
    -rw------- 1 admin admin 113 Apr 2 14:13 ceph.bootstrap-rgw.keyring
    -rw------- 1 admin admin 151 Apr 2 14:13 ceph.client.admin.keyring
    -rw-rw-r-- 1 admin admin 1083 Apr 2 13:40 ceph.conf
    -rw-rw-r-- 1 admin admin 167356 Apr 2 14:13 ceph-deploy-ceph.log
    -rw------- 1 admin admin 73 Apr 2 13:36 ceph.mon.keyring

Copy the configuration file and the keys to every node in the cluster with the following command:

[admin@ceph01 ceph-cluster]$ ceph-deploy admin ceph01 ceph02 ceph03

[admin@ceph01 ceph-cluster]$ ceph-deploy admin ceph01 ceph02 ceph03

    [ceph_deploy.conf][DEBUG ] found configuration file at: /home/admin/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy admin ceph01 ceph02 ceph03
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7ff2f76481b8>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] client : ['ceph01', 'ceph02', 'ceph03']
    [ceph_deploy.cli][INFO ] func : <function admin at 0x7ff2f7edf230>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph01
    [ceph01][DEBUG ] connection detected need for sudo
    [ceph01][DEBUG ] connected to host: ceph01
    [ceph01][DEBUG ] detect platform information from remote host
    [ceph01][DEBUG ] detect machine type
    [ceph01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph02
    [ceph02][DEBUG ] connection detected need for sudo
    [ceph02][DEBUG ] connected to host: ceph02
    [ceph02][DEBUG ] detect platform information from remote host
    [ceph02][DEBUG ] detect machine type
    [ceph02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph03
    [ceph03][DEBUG ] connection detected need for sudo
    [ceph03][DEBUG ] connected to host: ceph03
    [ceph03][DEBUG ] detect platform information from remote host
    [ceph03][DEBUG ] detect machine type
    [ceph03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

3.3 Deploy ceph-mgr (run on ceph01)

[admin@ceph01 ceph-cluster]$ ceph-deploy mgr create ceph01

3.4 Create the OSDs

Wipe the disks to initialize them (this only needs to be run from ceph01):

[admin@ceph01 ceph-cluster]$ ceph-deploy disk zap ceph01 /dev/sd{c,d}

[admin@ceph01 ceph-cluster]$ ceph-deploy disk zap ceph02 /dev/sd{c,d}

[admin@ceph01 ceph-cluster]$ ceph-deploy disk zap ceph03 /dev/sd{c,d}
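Zapping destroys whatever is on the disk, so it can be safer to print the commands for all nodes first and only run them after a look. The sketch below does exactly that and executes nothing itself; `zap_plan` is a hypothetical helper name.

```shell
#!/bin/sh
# zap_plan "DISKS" NODE...
# Print one "ceph-deploy disk zap" command per node. Nothing is executed,
# so the list can be reviewed before any disk is wiped.
# (Hypothetical helper; DISKS is a space-separated list of device paths.)
zap_plan() (
    disks=$1
    shift
    for node in "$@"; do
        echo "ceph-deploy disk zap $node $disks"
    done
)
```

`zap_plan "/dev/sdc /dev/sdd" ceph01 ceph02 ceph03` prints the three commands shown above in expanded form. Once they look right, the output can be piped to `sh` from the ceph-cluster directory. /dev/sdb is left out on purpose because section 2.4 reserves it as the journal disk.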

[admin@ceph01 ceph-cluster]$ ceph-deploy disk zap ceph01 /dev/sd{c,d}
[ceph_deploy.conf][DEBUG ] found configuration file at: /home/admin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy disk zap ceph01 /dev/sdc /dev/sdd
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] debug : False
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] subcommand : zap
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f70e7ca40e0>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] host : ceph01
[ceph_deploy.cli][INFO ] func : <function disk at 0x7f70e7eef938>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] disk : ['/dev/sdc', '/dev/sdd']
[ceph_deploy.osd][DEBUG ] zapping /dev/sdc on ceph01
[ceph01][DEBUG ] connection detected need for sudo
[ceph01][DEBUG ] connected to host: ceph01
[ceph01][DEBUG ] detect platform information from remote host
[ceph01][DEBUG ] detect machine type
[ceph01][DEBUG ] find the location of an executable
[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.7.1908 Core
[ceph01][DEBUG ] zeroing last few blocks of device
[ceph01][DEBUG ] find the location of an executable
[ceph01][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/sdc
[ceph01][WARNIN] --> Zapping: /dev/sdc
[ceph01][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table
[ceph01][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdc bs=1M count=10 conv=fsync
[ceph01][WARNIN] stderr: 10+0 records in
[ceph01][WARNIN] 10+0 records out
[ceph01][WARNIN] 10485760 bytes (10 MB) copied
[ceph01][WARNIN] stderr: , 0.0833592 s, 126 MB/s
[ceph01][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdc>
[ceph_deploy.osd][DEBUG ] zapping /dev/sdd on ceph01
[ceph01][DEBUG ] connection detected need for sudo
[ceph01][DEBUG ] connected to host: ceph01
[ceph01][DEBUG ] detect platform information from remote host
[ceph01][DEBUG ] detect machine type
[ceph01][DEBUG ] find the location of an executable
[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.7.1908 Core
[ceph01][DEBUG ] zeroing last few blocks of device
[ceph01][DEBUG ] find the location of an executable
[ceph01][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/sdd
[ceph01][WARNIN] --> Zapping: /dev/sdd
[ceph01][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table
[ceph01][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdd bs=1M count=10 conv=fsync
[ceph01][WARNIN] stderr: 10+0 records in
[ceph01][WARNIN] 10+0 records out
[ceph01][WARNIN] 10485760 bytes (10 MB) copied
[ceph01][WARNIN] stderr: , 0.0660085 s, 159 MB/s
[ceph01][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdd>

[admin@ceph01 ceph-cluster]$ ceph-deploy disk zap ceph02 /dev/sd{c,d}
[ceph_deploy.conf][DEBUG ] found configuration file at: /home/admin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy disk zap ceph02 /dev/sdc /dev/sdd
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] debug : False
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] subcommand : zap
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fc88a4cf0e0>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] host : ceph02
[ceph_deploy.cli][INFO ] func : <function disk at 0x7fc88a71a938>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] disk : ['/dev/sdc', '/dev/sdd']
[ceph_deploy.osd][DEBUG ] zapping /dev/sdc on ceph02
[ceph02][DEBUG ] connection detected need for sudo
[ceph02][DEBUG ] connected to host: ceph02
[ceph02][DEBUG ] detect platform information from remote host
[ceph02][DEBUG ] detect machine type
[ceph02][DEBUG ] find the location of an executable
[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.7.1908 Core
[ceph02][DEBUG ] zeroing last few blocks of device
[ceph02][DEBUG ] find the location of an executable
[ceph02][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/sdc
[ceph02][WARNIN] --> Zapping: /dev/sdc
[ceph02][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table
[ceph02][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdc bs=1M count=10 conv=fsync
[ceph02][WARNIN] stderr: 10+0 records in
[ceph02][WARNIN] 10+0 records out
[ceph02][WARNIN] 10485760 bytes (10 MB) copied
[ceph02][WARNIN] stderr: , 0.195467 s, 53.6 MB/s
[ceph02][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdc>
[ceph_deploy.osd][DEBUG ] zapping /dev/sdd on ceph02
[ceph02][DEBUG ] connection detected need for sudo
[ceph02][DEBUG ] connected to host: ceph02
[ceph02][DEBUG ] detect platform information from remote host
[ceph02][DEBUG ] detect machine type
[ceph02][DEBUG ] find the location of an executable
[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.7.1908 Core
[ceph02][DEBUG ] zeroing last few blocks of device
[ceph02][DEBUG ] find the location of an executable
[ceph02][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/sdd
[ceph02][WARNIN] --> Zapping: /dev/sdd
[ceph02][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table
[ceph02][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdd bs=1M count=10 conv=fsync
[ceph02][WARNIN] stderr: 10+0 records in
[ceph02][WARNIN] 10+0 records out
[ceph02][WARNIN] 10485760 bytes (10 MB) copied
[ceph02][WARNIN] stderr: , 0.0420581 s, 249 MB/s
[ceph02][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdd>

[admin@ceph01 ceph-cluster]$ ceph-deploy disk zap ceph03 /dev/sd{c,d}
[ceph_deploy.conf][DEBUG ] found configuration file at: /home/admin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy disk zap ceph03 /dev/sdc /dev/sdd
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] debug : False
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] subcommand : zap
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f0181a940e0>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] host : ceph03
[ceph_deploy.cli][INFO ] func : <function disk at 0x7f0181cdf938>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] disk : ['/dev/sdc', '/dev/sdd']
[ceph_deploy.osd][DEBUG ] zapping /dev/sdc on ceph03
[ceph03][DEBUG ] connection detected need for sudo
[ceph03][DEBUG ] connected to host: ceph03
[ceph03][DEBUG ] detect platform information from remote host
[ceph03][DEBUG ] detect machine type
[ceph03][DEBUG ] find the location of an executable
[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.7.1908 Core
[ceph03][DEBUG ] zeroing last few blocks of device
[ceph03][DEBUG ] find the location of an executable
[ceph03][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/sdc
[ceph03][WARNIN] --> Zapping: /dev/sdc
[ceph03][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table
[ceph03][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdc bs=1M count=10 conv=fsync
[ceph03][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdc>
[ceph_deploy.osd][DEBUG ] zapping /dev/sdd on ceph03
[ceph03][DEBUG ] connection detected need for sudo
[ceph03][DEBUG ] connected to host: ceph03
[ceph03][DEBUG ] detect platform information from remote host
[ceph03][DEBUG ] detect machine type
[ceph03][DEBUG ] find the location of an executable
[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.7.1908 Core
[ceph03][DEBUG ] zeroing last few blocks of device
[ceph03][DEBUG ] find the location of an executable
[ceph03][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/sdd
[ceph03][WARNIN] --> Zapping: /dev/sdd
[ceph03][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table
[ceph03][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdd bs=1M count=10 conv=fsync
[ceph03][WARNIN] stderr: 10+0 records in
[ceph03][WARNIN] 10+0 records out
[ceph03][WARNIN] 10485760 bytes (10 MB) copied
[ceph03][WARNIN] stderr: , 0.0704686 s, 149 MB/s
[ceph03][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdd>
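
The three zap runs above differ only in the host name, so they can be driven from a loop. The following is a minimal sketch, not part of the original notes: HOSTS, DISKS, DRY_RUN and zap_all are illustrative names, and by default the script only prints the commands it would run.

```shell
#!/bin/bash
# Sketch: zap the same data disks on every node with ceph-deploy.
# HOSTS, DISKS and DRY_RUN are illustrative names chosen for this example.
HOSTS="ceph01 ceph02 ceph03"
DISKS="/dev/sdc /dev/sdd"
DRY_RUN=${DRY_RUN:-1}   # 1 = only print the commands, anything else = run them

zap_all() {
    for host in $HOSTS; do
        if [ "$DRY_RUN" = 1 ]; then
            echo "ceph-deploy disk zap $host $DISKS"
        else
            # $DISKS is left unquoted on purpose so each disk is its own argument
            ceph-deploy disk zap "$host" $DISKS || return 1
        fi
    done
}

zap_all
```

Run it from the ceph-cluster directory with DRY_RUN=0 once the printed commands look right; zapping destroys the partition table on the listed disks.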

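With the BlueStore storage backend, first create the log disk (used here for block.db and block.wal).

Create the logical volumes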

[admin@ceph01 ceph-cluster]$ sudo vgcreate cache /dev/sdb
  Physical volume "/dev/sdb" successfully created.
  Volume group "cache" successfully created
[admin@ceph01 ceph-cluster]$ sudo lvcreate --size 10G --name db-lv-0 cache
  Logical volume "db-lv-0" created.
[admin@ceph01 ceph-cluster]$ sudo lvcreate --size 9.5G --name wal-lv-0 cache
  Logical volume "wal-lv-0" created.
[admin@ceph01 ceph-cluster]$ sudo lvs
  LV       VG    Attr       LSize  Pool Origin Data% Meta% Move Log Cpy%Sync Convert
  db-lv-0  cache -wi-a----- 10.00g
  wal-lv-0 cache -wi-a-----  9.50g
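
Each node has two data disks (sdc and sdd), and each BlueStore OSD needs its own DB and WAL volume, so a node would need two pairs of logical volumes rather than the single pair shown. A possible sketch follows. The sizes (5G and 4G) are assumptions picked so that four volumes fit in roughly the space the two original volumes occupy, and the names db-lv-1 and wal-lv-1 are hypothetical extensions of the original naming. The script only prints the lvcreate commands.

```shell
#!/bin/bash
# Sketch: print lvcreate commands for one DB and one WAL volume per data disk.
# VG matches the volume group created above; the sizes are assumed values.
VG=cache
OSD_COUNT=2      # data disks per node (sdc and sdd)
DB_SIZE=5G       # assumed size, adjust to the real capacity of /dev/sdb
WAL_SIZE=4G      # assumed size

lv_cmds() {
    i=0
    while [ "$i" -lt "$OSD_COUNT" ]; do
        echo "lvcreate --size $DB_SIZE --name db-lv-$i $VG"
        echo "lvcreate --size $WAL_SIZE --name wal-lv-$i $VG"
        i=$((i + 1))
    done
}

lv_cmds
```

The printed lines can be reviewed and then run with sudo on each node that hosts OSDs, after the volume group has been created there.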

Create the OSDs

# Note: each OSD needs its own block.db and block.wal volume. Only db-lv-0 and wal-lv-0 are created above, so the /dev/sdd commands below need a second pair of volumes on each host instead of reusing this pair.
ceph-deploy osd create --bluestore ceph01 --data /dev/sdc --block-db cache/db-lv-0 --block-wal cache/wal-lv-0
ceph-deploy osd create --bluestore ceph01 --data /dev/sdd --block-db cache/db-lv-0 --block-wal cache/wal-lv-0
ceph-deploy osd create --bluestore ceph02 --data /dev/sdc --block-db cache/db-lv-0 --block-wal cache/wal-lv-0
ceph-deploy osd create --bluestore ceph02 --data /dev/sdd --block-db cache/db-lv-0 --block-wal cache/wal-lv-0
ceph-deploy osd create --bluestore ceph03 --data /dev/sdc --block-db cache/db-lv-0 --block-wal cache/wal-lv-0
ceph-deploy osd create --bluestore ceph03 --data /dev/sdd --block-db cache/db-lv-0 --block-wal cache/wal-lv-0

[admin@ceph01 ceph-cluster]$ ceph-deploy osd create --bluestore ceph01 --data /dev/sdc --block-db cache/db-lv-0 --block-wal cache/wal-lv-0
[ceph_deploy.conf][DEBUG ] found configuration file at: /home/admin/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy osd create --bluestore ceph01 --data /dev/sdc --block-db cache/db-lv-0 --block-wal cache/wal-lv-0
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] bluestore : True
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7ffb7dcee200>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] fs_type : xfs
[ceph_deploy.cli][INFO ] block_wal : cache/wal-lv-0
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] journal : None
[ceph_deploy.cli][INFO ] subcommand : create
[ceph_deploy.cli][INFO ] host : ceph01
[ceph_deploy.cli][INFO ] filestore : None
[ceph_deploy.cli][INFO ] func : <function osd at 0x7ffb7df358c0>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] zap_disk : False
[ceph_deploy.cli][INFO ] data : /dev/sdc
[ceph_deploy.cli][INFO ] block_db : cache/db-lv-0
[ceph_deploy.cli][INFO ] dmcrypt : False
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] debug : False
[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sdc
[ceph01][DEBUG ] connection detected need for sudo
[ceph01][DEBUG ] connected to host: ceph01
[ceph01][DEBUG ] detect platform information from remote host
[ceph01][DEBUG ] detect machine type
[ceph01][DEBUG ] find the location of an executable
[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.7.1908 Core
[ceph_deploy.osd][DEBUG ] Deploying osd to ceph01
[ceph01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
[ceph01][WARNIN] osd keyring does not exist yet, creating one
[ceph01][DEBUG ] create a keyring file
[ceph01][DEBUG ] find the location of an executable
[ceph01][INFO ] Running command: sudo /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sdc --block.wal cache/wal-lv-0 --block.db cache/db-lv-0
[ceph01][WARNIN] Running command: /bin/ceph-authtool --gen-print-key
[ceph01][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 4115b164-ba03-42c8-9425-6c048ce0a44b
[ceph01][WARNIN] Running command: /sbin/vgcreate --force --yes ceph-8af2add5-a3f2-4d70-9d7a-171695238a61 /dev/sdc
[ceph01][WARNIN] stdout: Physical volume "/dev/sdc" successfully created.
[ceph01][WARNIN] stdout: Volume group "ceph-8af2add5-a3f2-4d70-9d7a-171695238a61" successfully created
[ceph01][WARNIN] Running command: /sbin/lvcreate --yes -l 100%FREE -n osd-block-4115b164-ba03-42c8-9425-6c048ce0a44b ceph-8af2add5-a3f2-4d70-9d7a-171695238a61
[ceph01][WARNIN] stdout: Logical volume "osd-block-4115b164-ba03-42c8-9425-6c048ce0a44b" created.
[ceph01][WARNIN] Running command: /bin/ceph-authtool --gen-print-key
[ceph01][WARNIN] Running command: /bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0
[ceph01][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/ceph-8af2add5-a3f2-4d70-9d7a-171695238a61/osd-block-4115b164-ba03-42c8-9425-6c048ce0a44b
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-2
[ceph01][WARNIN] Running command: /bin/ln -s /dev/ceph-8af2add5-a3f2-4d70-9d7a-171695238a61/osd-block-4115b164-ba03-42c8-9425-6c048ce0a44b /var/lib/ceph/osd/ceph-0/block
[ceph01][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap
[ceph01][WARNIN] stderr: 2020-04-02 15:11:50.646 7fc139825700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory
[ceph01][WARNIN] 2020-04-02 15:11:50.646 7fc139825700 -1 AuthRegistry(0x7fc134064fe8) no keyring found at /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx
[ceph01][WARNIN] stderr: got monmap epoch 1
[ceph01][WARNIN] Running command: /bin/ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQA0kIVe/oXiMxAAt30M1sP7TD5Y+tqPnqg2wQ==
[ceph01][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-0/keyring
[ceph01][WARNIN] added entity osd.0 auth(key=AQA0kIVe/oXiMxAAt30M1sP7TD5Y+tqPnqg2wQ==)
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/
[ceph01][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/cache/wal-lv-0
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-1
[ceph01][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/cache/db-lv-0
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-0
[ceph01][WARNIN] Running command: /bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --bluestore-block-wal-path /dev/cache/wal-lv-0 --bluestore-block-db-path /dev/cache/db-lv-0 --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid 4115b164-ba03-42c8-9425-6c048ce0a44b --setuser ceph --setgroup ceph
[ceph01][WARNIN] --> ceph-volume lvm prepare successful for: /dev/sdc
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
[ceph01][WARNIN] Running command: /bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-8af2add5-a3f2-4d70-9d7a-171695238a61/osd-block-4115b164-ba03-42c8-9425-6c048ce0a44b --path /var/lib/ceph/osd/ceph-0 --no-mon-config
[ceph01][WARNIN] Running command: /bin/ln -snf /dev/ceph-8af2add5-a3f2-4d70-9d7a-171695238a61/osd-block-4115b164-ba03-42c8-9425-6c048ce0a44b /var/lib/ceph/osd/ceph-0/block
[ceph01][WARNIN] Running command: /bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-2
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
[ceph01][WARNIN] Running command: /bin/ln -snf /dev/cache/db-lv-0 /var/lib/ceph/osd/ceph-0/block.db
[ceph01][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/cache/db-lv-0
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-0
[ceph01][WARNIN] Running command: /bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block.db
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-0
[ceph01][WARNIN] Running command: /bin/ln -snf /dev/cache/wal-lv-0 /var/lib/ceph/osd/ceph-0/block.wal
[ceph01][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/cache/wal-lv-0
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-1
[ceph01][WARNIN] Running command: /bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block.wal
[ceph01][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-1
[ceph01][WARNIN] Running command: /bin/systemctl enable ceph-volume@lvm-0-4115b164-ba03-42c8-9425-6c048ce0a44b
[ceph01][WARNIN] stderr: Created symlink from /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-4115b164-ba03-42c8-9425-6c048ce0a44b.service to /usr/lib/systemd/system/ceph-volume@.service.
[ceph01][WARNIN] Running command: /bin/systemctl enable --runtime ceph-osd@0
[ceph01][WARNIN] stderr: Created symlink from /run/systemd/system/ceph-osd.target.wants/ceph-osd@0.service to /usr/lib/systemd/system/ceph-osd@.service.
[ceph01][WARNIN] Running command: /bin/systemctl start ceph-osd@0
[ceph01][WARNIN] --> ceph-volume lvm activate successful for osd ID: 0
[ceph01][WARNIN] --> ceph-volume lvm create successful for: /dev/sdc
[ceph01][INFO ] checking OSD status...
[ceph01][DEBUG ] find the location of an executable
[ceph01][INFO ] Running command: sudo /bin/ceph --cluster=ceph osd stat --format=json
[ceph_deploy.osd][DEBUG ] Host ceph01 is now ready for osd use.
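
The six OSD creation commands follow one pattern: every host, every data disk, with a DB and a WAL volume. A rough sketch of generating them is below. It assumes, unlike the original, that each host has a volume group named cache holding one volume pair per data disk, named db-lv-N and wal-lv-N (the original only creates db-lv-0 and wal-lv-0 on ceph01). HOSTS, DISKS and osd_create_cmds are illustrative names, and the script only prints the commands.

```shell
#!/bin/bash
# Sketch: print one "ceph-deploy osd create" command per host and data disk,
# pairing the N-th data disk with db-lv-N and wal-lv-N (hypothetical volume names).
HOSTS="ceph01 ceph02 ceph03"
DISKS="/dev/sdc /dev/sdd"

osd_create_cmds() {
    for host in $HOSTS; do
        i=0
        for disk in $DISKS; do
            echo "ceph-deploy osd create --bluestore $host --data $disk --block-db cache/db-lv-$i --block-wal cache/wal-lv-$i"
            i=$((i + 1))
        done
    done
}

osd_create_cmds
```

Giving each data disk its own index keeps two OSDs on one host from being pointed at the same DB and WAL volume.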

systemctl stop ceph-mon@ceph01

systemctl stop ceph-mon@ceph02

systemctl stop ceph-mon@ceph03

[root@ceph02 ~]# parted /dev/sdb mklabel gpt

Information: You may need to update /etc/fstab.

[root@ceph02 ~]# parted /dev/sdb mkpart primary 1M 50%

Information: You may need to update /etc/fstab.

[root@ceph02 ~]# parted /dev/sdb mkpart primary 50% 100%

Information: You may need to update /etc/fstab.

[root@ceph02 ~]# chown ceph:ceph /dev/sdb1

[root@ceph02 ~]# chown ceph:ceph /dev/sdb2
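
The transcript above shows the partitioning on ceph02 only; the later OSD commands reference /dev/sdb1 and /dev/sdb2 on all three nodes, so the same steps are presumably needed on each. A minimal sketch that prints the commands for a given device is below. The function name journal_part_cmds is illustrative, and parted's -s (script mode) flag is added here so that it does not prompt.

```shell
#!/bin/bash
# Sketch: print the commands that split a journal device into two equal
# partitions and hand them to the ceph user. Nothing is executed here.
journal_part_cmds() {
    dev=$1
    echo "parted -s $dev mklabel gpt"
    echo "parted -s $dev mkpart primary 1M 50%"
    echo "parted -s $dev mkpart primary 50% 100%"
    echo "chown ceph:ceph ${dev}1 ${dev}2"
}

journal_part_cmds /dev/sdb
```

Ownership set with chown on a device node does not survive a reboot, so a udev rule is commonly used to make it permanent.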

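Create the OSD storage space (only needs to be run on node1, here ceph01)

# Create the OSD storage devices: sdc provides storage space for the cluster, sdb1 provides the journal cache

# Each storage device is paired with one cache device; the cache should be on an SSD and does not need to be large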

[root@ceph01 ceph-cluster]# ceph-deploy osd create ceph01 --data /dev/sdc --journal /dev/sdb1

[root@ceph01 ceph-cluster]# ceph-deploy osd create ceph01 --data /dev/sdd --journal /dev/sdb2

[root@ceph01 ceph-cluster]# ceph-deploy osd create ceph02 --data /dev/sdc --journal /dev/sdb1

[root@ceph01 ceph-cluster]# ceph-deploy osd create ceph02 --data /dev/sdd --journal /dev/sdb2

[root@ceph01 ceph-cluster]# ceph-deploy osd create ceph03 --data /dev/sdc --journal /dev/sdb1

[root@ceph01 ceph-cluster]# ceph-deploy osd create ceph03 --data /dev/sdd --journal /dev/sdb2

Verify: the OSD count has gone from 0 to 6 (the output below still shows 0 up and 0 in).

[root@ceph01 ceph-cluster]# ceph -s
  cluster:
    id:     fbc66f50-ced8-4ad1-93f7-2453cdbf59ba
    health: HEALTH_WARN
            no active mgr

  services:
    mon: 3 daemons, quorum ceph01,ceph02,ceph03 (age 10m)
    mgr: no daemons active
    osd: 6 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:
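
The osd line of the status output carries three numbers: OSDs known to the cluster, OSDs up, and OSDs in. A small sketch for pulling them out of the plain-text status is below. The helper name osd_counts is illustrative and the sample line is copied from the output above; on a live cluster the output of ceph -s would be piped in instead.

```shell
#!/bin/bash
# Sketch: extract "total up in" from the osd line of plain-text "ceph -s" output.
# The number before the word "up" and the number before "in" are taken, so
# extra text such as "(since 5m)" after those words does not matter.
osd_counts() {
    awk '$1 == "osd:" {
        for (i = 2; i <= NF; i++) {
            w = $i
            sub(/,$/, "", w)
            if (w == "up") up = $(i - 1)
            if (w == "in") inn = $(i - 1)
        }
        print $2, up, inn
    }'
}

sample='    osd: 6 osds: 0 up, 0 in'
counts=$(printf '%s\n' "$sample" | osd_counts)
set -- $counts          # $1 = total, $2 = up, $3 = in
if [ "$2" -lt "$1" ]; then
    echo "only $2 of $1 OSDs are up"
fi
```

For the sample line this prints "only 0 of 6 OSDs are up", which matches the status shown: the OSDs are registered but not yet running.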

The status reports a warning: no active mgr

Configure mgr

Create a mgr named mgr1 on ceph01; it is visible from all three nodes

[root@ceph01 ceph-cluster]# ceph-deploy mgr create ceph01:mgr1

[root@ceph01 ceph-cluster]# ceph-deploy mgr create ceph01:mgr1
[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mgr create ceph01:mgr1
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] mgr : [('ceph01', 'mgr1')]
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] subcommand : create
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f2b64aedd40>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] func : <function mgr at 0x7f2b65357cf8>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts ceph01:mgr1
[ceph01][DEBUG ] connected to host: ceph01
[ceph01][DEBUG ] detect platform information from remote host
[ceph01][DEBUG ] detect machine type
[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.7.1908 Core
[ceph_deploy.mgr][DEBUG ] remote host will use systemd
[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to ceph01
[ceph01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
[ceph01][WARNIN] mgr keyring does not exist yet, creating one
[ceph01][DEBUG ] create a keyring file
[ceph01][DEBUG ] create path recursively if it doesn't exist
[ceph01][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.mgr1 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-mgr1/keyring
[ceph01][INFO ] Running command: systemctl enable ceph-mgr@mgr1
[ceph01][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@mgr1.service to /usr/lib/systemd/system/ceph-mgr@.service.
[ceph01][INFO ] Running command: systemctl start ceph-mgr@mgr1
[ceph01][INFO ] Running command: systemctl enable ceph.target
You have new mail in /var/spool/mail/root

4.1 Create an image (node1)

List the storage pools

[root@ceph01 ceph-cluster]# ceph osd lspools

[root@ceph01 ceph-cluster]# ceph osd pool create pool-zk 100

pool 'pool-zk' created

Mark the pool for block device (rbd) use

[root@ceph01 ceph-cluster]# ceph osd pool application enable pool-zk rbd

enabled application 'rbd' on pool 'pool-zk'

Rename the pool to rbd

[root@ceph01 ceph-cluster]# ceph osd pool rename pool-zk rbd

pool 'pool-zk' renamed to 'rbd'

[root@ceph01 ceph-cluster]# ceph osd lspools

1 rbd
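
The pool above is created with a placement group count of 100. A commonly cited rule of thumb from the Ceph documentation is roughly (number of OSDs x 100) / replica count, rounded up to a power of two, as a budget across all pools. The sketch below computes that value. The function name pg_count is illustrative, and the replica count of 3 is an assumption (the usual default pool size), not something stated in these notes.

```shell
#!/bin/bash
# Sketch: rule-of-thumb placement group count.
# pg_count OSDS REPLICAS [TARGET_PER_OSD]; TARGET_PER_OSD defaults to 100.
pg_count() {
    osds=$1
    replicas=$2
    per_osd=${3:-100}
    raw=$(( osds * per_osd / replicas ))
    pg=1
    # round up to the next power of two
    while [ "$pg" -lt "$raw" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

pg_count 6 3    # 6 OSDs, 3 replicas (assumed): 6*100/3 = 200, rounded up to 256
```

With the six OSDs in this cluster and an assumed replica count of 3, the result is 256, which is the total to divide among pools rather than a value for each pool.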