python-scrapy环境配置

window下:

1.先安装well pip install wheel

2.先下载twisted 网址:https://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted

3.安装twisted pip install Twisted-20.3.0-cp38-cp38-win32.whl

4.安装pywin32 pip install pywin32

3.安装scrapy pip install scrapy

linux下:

直接安装scrapy pip install scrapy

创建爬虫项目MyProjectMovie

1.创建项目,以爬取https://www.1905.com/dianyinghao/为例 scrapy startproject MyProjectMovie

2.进入项目 cd MyProjectMovie

3.创建爬虫应用文件(必须放在spider目录下) scrapy genspider movie www.xxx.com

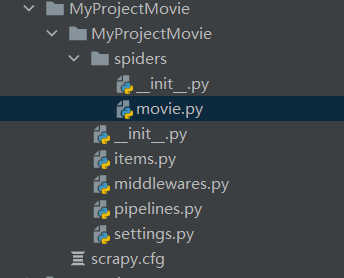

项目文件夹目录

4.movie.py文件修改

import scrapy

class MovieSpider(scrapy.Spider):

name = 'movie'

# allowed_domains = ['www.xxx.com']

start_urls = ['https://www.1905.com/dianyinghao/']

def parse(self, response):

print(response.text)

print(response)

5.settings文件配置

USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'

ROBOTSTXT_OBEY = False

LOG_LEVEL = 'ERROR'

6.程序运行

scrapy crawl movie

持久化存储

import scrapy

class QiubaiSpider(scrapy.Spider):

name = 'qiubai'

# allowed_domains = ['www.xxx.com']

start_urls = ['https://www.qiushibaike.com/text/']

def parse(self, response):

div_list = response.xpath('//*[@id="content"]/div/div[2]/div')

list_data = []

for div in div_list:

title = div.xpath('./div[1]/a[2]/h2/text()').extract_first()

content = div.xpath('./a/div/span[1]//text()').extract()

content = "".join(content)

dic = {

'title' : title,

'content' :content,

}

list_data.append(dic)

return list_data

方式1:基于终端指令的持久化存储,只可以将parse方法的返回值存储在指定的文件中,例如csv/lxl/json等,需要在终端下写代码 scrapy crawl qiubai -o qiubai.csv

方式2:基于管道的持久化存储(推荐)

items.py

import scrapy

class MyprojectqiubaiItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

title = scrapy.Field()

content = scrapy.Field()

qiubai.py

import scrapy

from MyProjectQiubai.items import MyprojectqiubaiItem

class QiubaiSpider(scrapy.Spider):

name = 'qiubai'

# allowed_domains = ['www.xxx.com']

start_urls = ['https://www.qiushibaike.com/text/']

def parse(self, response):

div_list = response.xpath('//*[@id="content"]/div/div[2]/div')

for div in div_list:

title = div.xpath('./div[1]/a[2]/h2/text()').extract_first()

content = div.xpath('./a/div/span[1]//text()').extract()

content = "".join(content)

item = MyprojectqiubaiItem()

item["title"] = title

item["content"] = content

yield item

pipelines.py

import pymysql

class MyprojectqiubaiPipeline:

fp = None

def open_spider(self,spider):

self.fp = open("qiubai.txt", mode="w", encoding="utf-8")

def process_item(self, item, spider):

content = item["content"]

title = item["title"]

self.fp.write(title+content)

return item

def close_spider(self,spider):

self.fp.close()

class MysqlPileline:

conn = None

cur = None

def open_spider(self,spider):

self.conn = pymysql.connect(

host="localhost",

port=3306,

user="root",

password="root",

database="student",

charset="utf8")

def process_item(self, item, spider):

content = item["content"]

title = item["title"]

sql = 'insert into qiubai(title,content)value("%s","%s")' % (title,content)

print(sql)

self.cur = self.conn.cursor()

try:

self.cur.execute(sql)

self.conn.commit()

except Exception as e:

print(e)

self.conn.rollback()

return item

settings.py

ITEM_PIPELINES = {

'MyProjectQiubai.pipelines.MyprojectqiubaiPipeline': 300,

'MyProjectQiubai.pipelines.MysqlPileline': 200,

}

执行项目 scrapy crawl qiubai

手动请求发送

yield scrapy.Request(url, callback=self.parse) 执行get请求

yield scrapy.FormRequest(url, callback=self.parse) 执行post请求

import scrapy

from MyProjectQiubai.items import MyprojectqiubaiItem

class QiubaiSpider(scrapy.Spider):

name = 'qiubai'

# allowed_domains = ['www.xxx.com']

start_urls = ['https://www.qiushibaike.com/text/']

url_new = 'https://www.qiushibaike.com/text/page/%d/'

page = 2

def parse(self, response):

div_list = response.xpath('//*[@id="content"]/div/div[2]/div')

for div in div_list:

title = div.xpath('./div[1]/a[2]/h2/text()').extract_first()

content = div.xpath('./a/div/span[1]//text()').extract()

content = "".join(content)

item = MyprojectqiubaiItem()

item["title"] = title

item["content"] = content

yield item

if self.page < 10:

url_new = format(self.url_new % self.page)

self.page += 1

yield scrapy.Request(url_new, callback=self.parse)