Deep Residual Network + Adaptively Parametric ReLU Activation Function (Tuning Record 14)

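This time I try once more to tackle overfitting. The number of residual modules is reduced to 2. The number of weights in the first fully connected layer inside the adaptively parametric ReLU (APReLU) activation function is cut to 1/8 of its previous value. The batch size is set to 1000, mainly to save time.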
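The short sketch below makes the "1/8" figure concrete. It counts the weights of the first fully connected layer in every APReLU of the two-block network built by the program below, ignoring bias terms. The channel list follows the order in which `residual_block` calls `aprelu`. It assumes, hypothetically, that the earlier version used `Dense(channels)` in this position, which is what a reduction to 1/8 implies. The helper name `first_dense_weights` is illustrative only.

```python
import math

# Channel counts seen by each aprelu() call, in order:
# block 1 (16 -> 32 channels): before conv1 (16), before conv2 (32)
# block 2 (32 -> 64 channels): before conv1 (32), before conv2 (64)
APRELU_CHANNELS = [16, 32, 32, 64]


def first_dense_weights(channels, divisor):
    """Weights (no bias) of the first Dense layer in an APReLU.

    Its input is the concatenation of two globally pooled vectors
    (2 * channels values), and its output size is channels // divisor.
    """
    return 2 * channels * (channels // divisor)


current = sum(first_dense_weights(c, 8) for c in APRELU_CHANNELS)
# Hypothetical earlier setting: Dense(channels), i.e. divisor 1
previous = sum(first_dense_weights(c, 1) for c in APRELU_CHANNELS)

print("current:", current)           # 1600
print("previous:", previous)         # 12800
print("ratio:", current / previous)  # 0.125

# CIFAR-10 has 50,000 training images, so batch_size=1000 gives
# this many parameter updates per epoch:
steps_per_epoch = math.ceil(50000 / 1000)
print("steps per epoch:", steps_per_epoch)  # 50
```

Every channel count here is divisible by 8, so the ratio is exactly 1/8 for each layer as well as in total. The 50 steps per epoch also agree with the log below: roughly 190 ms/step × 50 steps ≈ 9 to 10 s per epoch.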

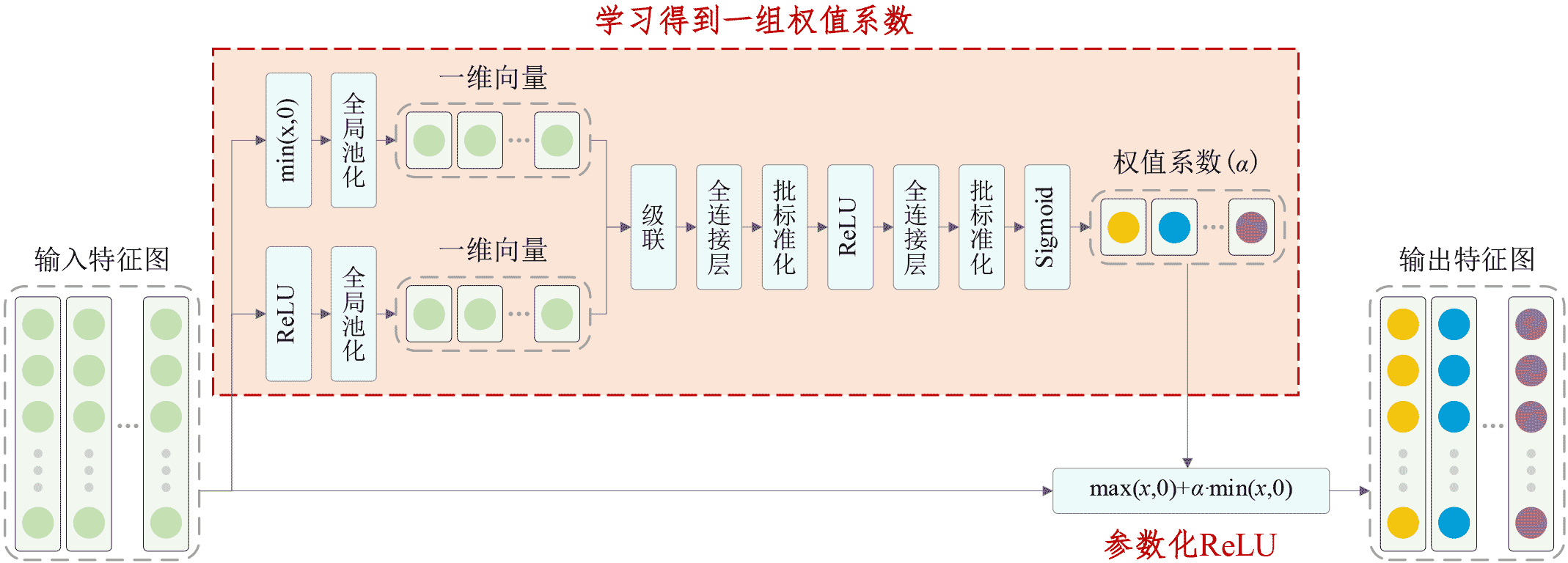

The basic principle of the adaptively parametric ReLU (APReLU) activation function is as follows:
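As implemented by `aprelu` in the program below, APReLU keeps the positive part of its input unchanged. It multiplies the negative part by a per-channel coefficient in (0, 1), which a small subnetwork computes from the globally pooled positive and negative features. The following NumPy sketch reproduces only the forward computation for a single feature map, under simplifying assumptions: the BatchNormalization layers are left out, the Dense weights are random placeholders rather than trained values, and the helper names are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def aprelu_forward(x, w1, b1, w2, b2):
    """APReLU forward pass for one feature map x of shape (H, W, C).

    Follows the structure of aprelu() in the Keras program, except that
    the BatchNormalization layers are omitted.
    """
    pos = np.maximum(x, 0.0)  # feature map with only positive features
    neg = np.minimum(x, 0.0)  # feature map with only negative features
    # Global average pooling over the spatial axes, negative part first
    pooled = np.concatenate([neg.mean(axis=(0, 1)), pos.mean(axis=(0, 1))])
    hidden = np.maximum(pooled @ w1 + b1, 0.0)  # Dense(C // 8) + ReLU
    alpha = sigmoid(hidden @ w2 + b2)           # Dense(C) + sigmoid, shape (C,)
    # alpha broadcasts over H and W: one coefficient per channel
    return pos + alpha * neg


rng = np.random.default_rng(0)
H, W, C = 4, 4, 16
x = rng.standard_normal((H, W, C))
w1 = rng.standard_normal((2 * C, C // 8)) * 0.1
b1 = np.zeros(C // 8)
w2 = rng.standard_normal((C // 8, C)) * 0.1
b2 = np.zeros(C)

y = aprelu_forward(x, w1, b1, w2, b2)
print(y.shape)  # (4, 4, 16)
```

A quick check of the behaviour: wherever `x > 0`, `y` equals `x`. Wherever `x < 0`, the ratio `y / x` is the sigmoid output for that channel and therefore lies strictly between 0 and 1. Unlike a fixed Leaky ReLU or a learned-but-static PReLU slope, this coefficient depends on the input sample.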

The Keras program is as follows:

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
Created on Tue Apr 14 04:17:45 2020
Implemented using TensorFlow 1.10.0 and Keras 2.2.1

Minghang Zhao, Shisheng Zhong, Xuyun Fu, Baoping Tang, Shaojiang Dong, Michael Pecht,
Deep Residual Networks with Adaptively Parametric Rectifier Linear Units for Fault Diagnosis,
IEEE Transactions on Industrial Electronics, 2020, DOI: 10.1109/TIE.2020.2972458

@author: Minghang Zhao
"""

from __future__ import print_function
import keras
import numpy as np
from keras.datasets import cifar10
from keras.layers import Dense, Conv2D, BatchNormalization, Activation, Minimum
from keras.layers import AveragePooling2D, Input, GlobalAveragePooling2D, Concatenate, Reshape
from keras.regularizers import l2
from keras import backend as K
from keras.models import Model
from keras import optimizers
from keras.preprocessing.image import ImageDataGenerator
from keras.callbacks import LearningRateScheduler

# Note: this is set before the model is built, so layers such as
# BatchNormalization are likely fixed in training mode for the whole script
K.set_learning_phase(1)

# The data, split between train and test sets
(x_train, y_train), (x_test, y_test) = cifar10.load_data()

# Scale pixels to [0, 1], then subtract the training-set mean
# (the test set is shifted by the same training-set mean)
x_train = x_train.astype('float32') / 255.
x_test = x_test.astype('float32') / 255.
x_test = x_test - np.mean(x_train)
x_train = x_train - np.mean(x_train)
print('x_train shape:', x_train.shape)
print(x_train.shape[0], 'train samples')
print(x_test.shape[0], 'test samples')

# Convert class vectors to binary class matrices
y_train = keras.utils.to_categorical(y_train, 10)
y_test = keras.utils.to_categorical(y_test, 10)

# Schedule the learning rate: multiply it by 0.1 every 1500 epochs
def scheduler(epoch):
    if epoch % 1500 == 0 and epoch != 0:
        lr = K.get_value(model.optimizer.lr)
        K.set_value(model.optimizer.lr, lr * 0.1)
        print("lr changed to {}".format(lr * 0.1))
    return K.get_value(model.optimizer.lr)

# An adaptively parametric rectifier linear unit (APReLU)
def aprelu(inputs):
    # get the number of channels
    channels = inputs.get_shape().as_list()[-1]
    # get a zero feature map
    zeros_input = keras.layers.subtract([inputs, inputs])
    # get a feature map with only positive features
    pos_input = Activation('relu')(inputs)
    # get a feature map with only negative features
    neg_input = Minimum()([inputs, zeros_input])
    # define a network to obtain the scaling coefficients
    scales_p = GlobalAveragePooling2D()(pos_input)
    scales_n = GlobalAveragePooling2D()(neg_input)
    scales = Concatenate()([scales_n, scales_p])
    # first fully connected layer: reduced to channels // 8 units in this run
    scales = Dense(channels//8, activation='linear', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(scales)
    scales = BatchNormalization(momentum=0.9, gamma_regularizer=l2(1e-4))(scales)
    scales = Activation('relu')(scales)
    scales = Dense(channels, activation='linear', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(scales)
    scales = BatchNormalization(momentum=0.9, gamma_regularizer=l2(1e-4))(scales)
    # sigmoid keeps each per-channel coefficient in (0, 1)
    scales = Activation('sigmoid')(scales)
    scales = Reshape((1, 1, channels))(scales)
    # apply a parametric relu
    neg_part = keras.layers.multiply([scales, neg_input])
    return keras.layers.add([pos_input, neg_part])

# Residual Block
def residual_block(incoming, nb_blocks, out_channels, downsample=False,
                   downsample_strides=2):
    residual = incoming
    in_channels = incoming.get_shape().as_list()[-1]
    for i in range(nb_blocks):
        identity = residual
        if not downsample:
            downsample_strides = 1
        residual = BatchNormalization(momentum=0.9, gamma_regularizer=l2(1e-4))(residual)
        residual = aprelu(residual)
        residual = Conv2D(out_channels, 3, strides=(downsample_strides, downsample_strides),
                          padding='same', kernel_initializer='he_normal',
                          kernel_regularizer=l2(1e-4))(residual)
        residual = BatchNormalization(momentum=0.9, gamma_regularizer=l2(1e-4))(residual)
        residual = aprelu(residual)
        residual = Conv2D(out_channels, 3, padding='same', kernel_initializer='he_normal',
                          kernel_regularizer=l2(1e-4))(residual)
        # Downsampling: a 1x1 average pooling with stride 2 subsamples the shortcut
        if downsample_strides > 1:
            identity = AveragePooling2D(pool_size=(1, 1), strides=(2, 2))(identity)
        # Zero-padding to match channels (assumes out_channels == 2 * in_channels)
        if in_channels != out_channels:
            zeros_identity = keras.layers.subtract([identity, identity])
            identity = keras.layers.concatenate([identity, zeros_identity])
            in_channels = out_channels
        residual = keras.layers.add([residual, identity])
    return residual

# define and train a model (only two residual blocks are active in this run)
inputs = Input(shape=(32, 32, 3))
net = Conv2D(16, 3, padding='same', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(inputs)
# net = residual_block(net, 3, 16, downsample=False)
net = residual_block(net, 1, 32, downsample=True)
# net = residual_block(net, 2, 32, downsample=False)
net = residual_block(net, 1, 64, downsample=True)
# net = residual_block(net, 2, 64, downsample=False)
net = BatchNormalization(momentum=0.9, gamma_regularizer=l2(1e-4))(net)
net = Activation('relu')(net)
net = GlobalAveragePooling2D()(net)
outputs = Dense(10, activation='softmax', kernel_initializer='he_normal', kernel_regularizer=l2(1e-4))(net)
model = Model(inputs=inputs, outputs=outputs)
sgd = optimizers.SGD(lr=0.1, decay=0., momentum=0.9, nesterov=True)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

# data augmentation
datagen = ImageDataGenerator(
    # randomly rotate images by up to 30 degrees
    rotation_range=30,
    # range for random zoom
    zoom_range=0.2,
    # shear angle in counter-clockwise direction in degrees
    shear_range=30,
    # randomly flip images horizontally
    horizontal_flip=True,
    # randomly shift images horizontally (fraction of total width)
    width_shift_range=0.125,
    # randomly shift images vertically (fraction of total height)
    height_shift_range=0.125)

reduce_lr = LearningRateScheduler(scheduler)
# fit the model on the batches generated by datagen.flow()
model.fit_generator(datagen.flow(x_train, y_train, batch_size=1000),
                    validation_data=(x_test, y_test), epochs=5000,
                    verbose=1, callbacks=[reduce_lr], workers=4)

# get results
K.set_learning_phase(0)
DRSN_train_score = model.evaluate(x_train, y_train, batch_size=1000, verbose=0)
print('Train loss:', DRSN_train_score[0])
print('Train accuracy:', DRSN_train_score[1])
DRSN_test_score = model.evaluate(x_test, y_test, batch_size=1000, verbose=0)
print('Test loss:', DRSN_test_score[0])
print('Test accuracy:', DRSN_test_score[1])
```
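The `scheduler` callback above multiplies the learning rate by 0.1 whenever the 0-based epoch index is a positive multiple of 1500. For this 5000-epoch run starting from `lr=0.1`, that is equivalent to the closed-form step schedule sketched below. The function name `step_lr` is hypothetical. The sketch is handy for checking which learning rate was in effect for a given log line. Keras prints epochs 1-based, so the log line `Epoch 2000/5000` corresponds to index 1999.

```python
def step_lr(epoch, base_lr=0.1, drop=0.1, every=1500):
    """Learning rate for a 0-based epoch index under the step schedule."""
    return base_lr * drop ** (epoch // every)


# Print the epoch ranges (1-based, as in the Keras log) and their learning rates
for start in range(0, 5000, 1500):
    end = min(start + 1500, 5000)
    print("epochs {}-{}: lr = {:g}".format(start + 1, end, step_lr(start)))
```

This prints learning rates of 0.1, 0.01, 0.001 and 0.0001 for epochs 1-1500, 1501-3000, 3001-4500 and 4501-5000. For example, `step_lr(1999)` gives 0.01, up to floating-point rounding. All epochs in the log excerpt below were therefore trained with a learning rate of 0.01.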

The experimental results are as follows (some of the equals signs in the progress bars were removed for readability):

Epoch 2000/5000 10s 191ms/step - loss: 0.5083 - acc: 0.8658 - val_loss: 0.5190 - val_acc: 0.8644

Epoch 2001/5000 10s 194ms/step - loss: 0.5102 - acc: 0.8651 - val_loss: 0.5203 - val_acc: 0.8656

Epoch 2002/5000 10s 192ms/step - loss: 0.5073 - acc: 0.8659 - val_loss: 0.5245 - val_acc: 0.8612

Epoch 2003/5000 10s 190ms/step - loss: 0.5105 - acc: 0.8646 - val_loss: 0.5181 - val_acc: 0.8636

Epoch 2004/5000 9s 186ms/step - loss: 0.5080 - acc: 0.8661 - val_loss: 0.5217 - val_acc: 0.8631

Epoch 2005/5000 9s 186ms/step - loss: 0.5074 - acc: 0.8641 - val_loss: 0.5237 - val_acc: 0.8614

Epoch 2006/5000 9s 186ms/step - loss: 0.5060 - acc: 0.8651 - val_loss: 0.5241 - val_acc: 0.8641

Epoch 2007/5000 10s 190ms/step - loss: 0.5096 - acc: 0.8651 - val_loss: 0.5185 - val_acc: 0.8660

Epoch 2008/5000 9s 190ms/step - loss: 0.5053 - acc: 0.8686 - val_loss: 0.5186 - val_acc: 0.8624

Epoch 2009/5000 10s 191ms/step - loss: 0.5057 - acc: 0.8670 - val_loss: 0.5208 - val_acc: 0.8636

Epoch 2010/5000 10s 190ms/step - loss: 0.5102 - acc: 0.8653 - val_loss: 0.5214 - val_acc: 0.8614

Epoch 2011/5000 9s 188ms/step - loss: 0.5091 - acc: 0.8651 - val_loss: 0.5211 - val_acc: 0.8629

Epoch 2012/5000 9s 187ms/step - loss: 0.5069 - acc: 0.8672 - val_loss: 0.5221 - val_acc: 0.8616

Epoch 2013/5000 9s 184ms/step - loss: 0.5098 - acc: 0.8652 - val_loss: 0.5241 - val_acc: 0.8625

Epoch 2014/5000 9s 187ms/step - loss: 0.5056 - acc: 0.8666 - val_loss: 0.5188 - val_acc: 0.8623

Epoch 2015/5000 9s 189ms/step - loss: 0.5050 - acc: 0.8672 - val_loss: 0.5237 - val_acc: 0.8621

Epoch 2016/5000 10s 193ms/step - loss: 0.5057 - acc: 0.8667 - val_loss: 0.5207 - val_acc: 0.8607

Epoch 2017/5000 10s 192ms/step - loss: 0.5092 - acc: 0.8642 - val_loss: 0.5172 - val_acc: 0.8637

Epoch 2018/5000 9s 190ms/step - loss: 0.5062 - acc: 0.8671 - val_loss: 0.5265 - val_acc: 0.8612

Epoch 2019/5000 9s 188ms/step - loss: 0.5112 - acc: 0.8648 - val_loss: 0.5256 - val_acc: 0.8617

Epoch 2020/5000 9s 185ms/step - loss: 0.5071 - acc: 0.8663 - val_loss: 0.5241 - val_acc: 0.8622

Epoch 2021/5000 9s 186ms/step - loss: 0.5079 - acc: 0.8660 - val_loss: 0.5212 - val_acc: 0.8627

Epoch 2022/5000 9s 185ms/step - loss: 0.5058 - acc: 0.8667 - val_loss: 0.5227 - val_acc: 0.8600

Epoch 2023/5000 9s 189ms/step - loss: 0.5070 - acc: 0.8661 - val_loss: 0.5259 - val_acc: 0.8608

Epoch 2024/5000 10s 193ms/step - loss: 0.5053 - acc: 0.8663 - val_loss: 0.5219 - val_acc: 0.8606

Epoch 2025/5000 10s 191ms/step - loss: 0.5106 - acc: 0.8655 - val_loss: 0.5205 - val_acc: 0.8625

Epoch 2026/5000 10s 191ms/step - loss: 0.5090 - acc: 0.8649 - val_loss: 0.5221 - val_acc: 0.8610

Epoch 2027/5000 9s 189ms/step - loss: 0.5103 - acc: 0.8648 - val_loss: 0.5242 - val_acc: 0.8631

Epoch 2028/5000 9s 189ms/step - loss: 0.5051 - acc: 0.8663 - val_loss: 0.5253 - val_acc: 0.8596

Epoch 2029/5000 9s 185ms/step - loss: 0.5091 - acc: 0.8654 - val_loss: 0.5237 - val_acc: 0.8612

Epoch 2030/5000 9s 189ms/step - loss: 0.5076 - acc: 0.8654 - val_loss: 0.5197 - val_acc: 0.8627

Epoch 2031/5000 9s 189ms/step - loss: 0.5058 - acc: 0.8657 - val_loss: 0.5226 - val_acc: 0.8625

Epoch 2032/5000 10s 194ms/step - loss: 0.5078 - acc: 0.8669 - val_loss: 0.5225 - val_acc: 0.8639

Epoch 2033/5000 10s 193ms/step - loss: 0.5101 - acc: 0.8643 - val_loss: 0.5195 - val_acc: 0.8632

Epoch 2034/5000 10s 191ms/step - loss: 0.5088 - acc: 0.8665 - val_loss: 0.5237 - val_acc: 0.8634

Epoch 2035/5000 10s 191ms/step - loss: 0.5091 - acc: 0.8644 - val_loss: 0.5182 - val_acc: 0.8652

Epoch 2036/5000 9s 186ms/step - loss: 0.5090 - acc: 0.8658 - val_loss: 0.5199 - val_acc: 0.8615

Epoch 2037/5000 9s 187ms/step - loss: 0.5056 - acc: 0.8670 - val_loss: 0.5256 - val_acc: 0.8600

Epoch 2038/5000 9s 187ms/step - loss: 0.5057 - acc: 0.8665 - val_loss: 0.5261 - val_acc: 0.8600

Epoch 2039/5000 9s 190ms/step - loss: 0.5088 - acc: 0.8647 - val_loss: 0.5242 - val_acc: 0.8625

Epoch 2040/5000 10s 192ms/step - loss: 0.5068 - acc: 0.8662 - val_loss: 0.5223 - val_acc: 0.8620

Epoch 2041/5000 10s 191ms/step - loss: 0.5068 - acc: 0.8655 - val_loss: 0.5189 - val_acc: 0.8594

Epoch 2042/5000 10s 191ms/step - loss: 0.5070 - acc: 0.8661 - val_loss: 0.5244 - val_acc: 0.8613

Epoch 2043/5000 9s 187ms/step - loss: 0.5008 - acc: 0.8683 - val_loss: 0.5238 - val_acc: 0.8603

Epoch 2044/5000 9s 189ms/step - loss: 0.5100 - acc: 0.8652 - val_loss: 0.5233 - val_acc: 0.8609

Epoch 2045/5000 9s 186ms/step - loss: 0.5007 - acc: 0.8692 - val_loss: 0.5206 - val_acc: 0.8651

Epoch 2046/5000 9s 188ms/step - loss: 0.5043 - acc: 0.8662 - val_loss: 0.5246 - val_acc: 0.8623

Epoch 2047/5000 10s 190ms/step - loss: 0.5084 - acc: 0.8644 - val_loss: 0.5211 - val_acc: 0.8620

Epoch 2048/5000 10s 192ms/step - loss: 0.5068 - acc: 0.8658 - val_loss: 0.5206 - val_acc: 0.8635

Epoch 2049/5000 10s 194ms/step - loss: 0.5033 - acc: 0.8669 - val_loss: 0.5230 - val_acc: 0.8589

Epoch 2050/5000 10s 192ms/step - loss: 0.5096 - acc: 0.8653 - val_loss: 0.5217 - val_acc: 0.8610

Epoch 2051/5000 10s 191ms/step - loss: 0.5081 - acc: 0.8651 - val_loss: 0.5238 - val_acc: 0.8600

Epoch 2052/5000 9s 186ms/step - loss: 0.5061 - acc: 0.8665 - val_loss: 0.5258 - val_acc: 0.8617

Epoch 2053/5000 9s 187ms/step - loss: 0.5036 - acc: 0.8683 - val_loss: 0.5233 - val_acc: 0.8606

Epoch 2054/5000 9s 187ms/step - loss: 0.5075 - acc: 0.8652 - val_loss: 0.5242 - val_acc: 0.8599

Epoch 2055/5000 9s 189ms/step - loss: 0.5105 - acc: 0.8656 - val_loss: 0.5201 - val_acc: 0.8621

Epoch 2056/5000 10s 191ms/step - loss: 0.5064 - acc: 0.8664 - val_loss: 0.5264 - val_acc: 0.8586

Epoch 2057/5000 10s 192ms/step - loss: 0.5049 - acc: 0.8655 - val_loss: 0.5225 - val_acc: 0.8621

Epoch 2058/5000 10s 190ms/step - loss: 0.5083 - acc: 0.8657 - val_loss: 0.5218 - val_acc: 0.8648

Epoch 2059/5000 9s 188ms/step - loss: 0.5118 - acc: 0.8657 - val_loss: 0.5233 - val_acc: 0.8627

Epoch 2060/5000 9s 187ms/step - loss: 0.5037 - acc: 0.8668 - val_loss: 0.5183 - val_acc: 0.8618

Epoch 2061/5000 9s 185ms/step - loss: 0.5092 - acc: 0.8661 - val_loss: 0.5207 - val_acc: 0.8642

Epoch 2062/5000 9s 188ms/step - loss: 0.5089 - acc: 0.8649 - val_loss: 0.5175 - val_acc: 0.8625

Epoch 2063/5000 10s 190ms/step - loss: 0.5056 - acc: 0.8667 - val_loss: 0.5175 - val_acc: 0.8635

Epoch 2064/5000 10s 192ms/step - loss: 0.5078 - acc: 0.8659 - val_loss: 0.5217 - val_acc: 0.8602

Epoch 2065/5000 10s 192ms/step - loss: 0.5065 - acc: 0.8685 - val_loss: 0.5195 - val_acc: 0.8627

Epoch 2066/5000 10s 190ms/step - loss: 0.5099 - acc: 0.8660 - val_loss: 0.5223 - val_acc: 0.8608

Epoch 2067/5000 10s 190ms/step - loss: 0.5048 - acc: 0.8663 - val_loss: 0.5188 - val_acc: 0.8629

Epoch 2068/5000 9s 187ms/step - loss: 0.5047 - acc: 0.8659 - val_loss: 0.5176 - val_acc: 0.8611

Epoch 2069/5000 9s 185ms/step - loss: 0.5055 - acc: 0.8680 - val_loss: 0.5182 - val_acc: 0.8636

Epoch 2070/5000 9s 186ms/step - loss: 0.5076 - acc: 0.8659 - val_loss: 0.5235 - val_acc: 0.8619

Epoch 2071/5000 9s 189ms/step - loss: 0.5100 - acc: 0.8664 - val_loss: 0.5185 - val_acc: 0.8630

Epoch 2072/5000 10s 192ms/step - loss: 0.5052 - acc: 0.8663 - val_loss: 0.5214 - val_acc: 0.8611

Epoch 2073/5000 10s 192ms/step - loss: 0.5052 - acc: 0.8662 - val_loss: 0.5250 - val_acc: 0.8630

Epoch 2074/5000 10s 193ms/step - loss: 0.5054 - acc: 0.8668 - val_loss: 0.5162 - val_acc: 0.8650

Epoch 2075/5000 9s 189ms/step - loss: 0.5081 - acc: 0.8647 - val_loss: 0.5238 - val_acc: 0.8598

Epoch 2076/5000 9s 189ms/step - loss: 0.5075 - acc: 0.8647 - val_loss: 0.5237 - val_acc: 0.8609

Epoch 2077/5000 9s 185ms/step - loss: 0.5127 - acc: 0.8642 - val_loss: 0.5209 - val_acc: 0.8662

Epoch 2078/5000 9s 186ms/step - loss: 0.5063 - acc: 0.8655 - val_loss: 0.5192 - val_acc: 0.8639

Epoch 2079/5000 9s 189ms/step - loss: 0.5113 - acc: 0.8650 - val_loss: 0.5193 - val_acc: 0.8613

Epoch 2080/5000 10s 191ms/step - loss: 0.5087 - acc: 0.8660 - val_loss: 0.5199 - val_acc: 0.8623

Epoch 2081/5000 10s 191ms/step - loss: 0.5097 - acc: 0.8648 - val_loss: 0.5187 - val_acc: 0.8611

Epoch 2082/5000 10s 191ms/step - loss: 0.5059 - acc: 0.8674 - val_loss: 0.5229 - val_acc: 0.8608

Epoch 2083/5000 10s 191ms/step - loss: 0.5100 - acc: 0.8641 - val_loss: 0.5251 - val_acc: 0.8599

Epoch 2084/5000 9s 187ms/step - loss: 0.5098 - acc: 0.8645 - val_loss: 0.5195 - val_acc: 0.8631

Epoch 2085/5000 9s 187ms/step - loss: 0.5023 - acc: 0.8680 - val_loss: 0.5185 - val_acc: 0.8638

Epoch 2086/5000 9s 187ms/step - loss: 0.5077 - acc: 0.8660 - val_loss: 0.5249 - val_acc: 0.8628

Epoch 2087/5000 9s 189ms/step - loss: 0.5076 - acc: 0.8645 - val_loss: 0.5219 - val_acc: 0.8599

Epoch 2088/5000 9s 189ms/step - loss: 0.5074 - acc: 0.8665 - val_loss: 0.5228 - val_acc: 0.8633

Epoch 2089/5000 10s 191ms/step - loss: 0.5064 - acc: 0.8658 - val_loss: 0.5219 - val_acc: 0.8626

Epoch 2090/5000 10s 191ms/step - loss: 0.5064 - acc: 0.8673 - val_loss: 0.5207 - val_acc: 0.8626

Epoch 2091/5000 9s 188ms/step - loss: 0.5064 - acc: 0.8673 - val_loss: 0.5229 - val_acc: 0.8616

Epoch 2092/5000 9s 190ms/step - loss: 0.5055 - acc: 0.8670 - val_loss: 0.5236 - val_acc: 0.8613

Epoch 2093/5000 9s 187ms/step - loss: 0.5075 - acc: 0.8657 - val_loss: 0.5197 - val_acc: 0.8627

Epoch 2094/5000 9s 186ms/step - loss: 0.5082 - acc: 0.8664 - val_loss: 0.5228 - val_acc: 0.8602

Epoch 2095/5000 9s 189ms/step - loss: 0.5072 - acc: 0.8672 - val_loss: 0.5258 - val_acc: 0.8618

Epoch 2096/5000 10s 192ms/step - loss: 0.5099 - acc: 0.8641 - val_loss: 0.5190 - val_acc: 0.8631

Epoch 2097/5000 10s 192ms/step - loss: 0.5069 - acc: 0.8662 - val_loss: 0.5212 - val_acc: 0.8603

Epoch 2098/5000 10s 191ms/step - loss: 0.5080 - acc: 0.8656 - val_loss: 0.5203 - val_acc: 0.8611

Epoch 2099/5000 10s 192ms/step - loss: 0.5051 - acc: 0.8663 - val_loss: 0.5177 - val_acc: 0.8604

Epoch 2100/5000 9s 186ms/step - loss: 0.5059 - acc: 0.8643 - val_loss: 0.5206 - val_acc: 0.8633

Epoch 2101/5000 9s 186ms/step - loss: 0.5081 - acc: 0.8651 - val_loss: 0.5215 - val_acc: 0.8622

Epoch 2102/5000 9s 188ms/step - loss: 0.5060 - acc: 0.8655 - val_loss: 0.5183 - val_acc: 0.8645

Epoch 2103/5000 10s 190ms/step - loss: 0.5062 - acc: 0.8650 - val_loss: 0.5229 - val_acc: 0.8610

Epoch 2104/5000 10s 192ms/step - loss: 0.5060 - acc: 0.8664 - val_loss: 0.5250 - val_acc: 0.8595

Epoch 2105/5000 10s 194ms/step - loss: 0.5072 - acc: 0.8653 - val_loss: 0.5233 - val_acc: 0.8634

Epoch 2106/5000 10s 193ms/step - loss: 0.5073 - acc: 0.8645 - val_loss: 0.5187 - val_acc: 0.8626

Epoch 2107/5000 9s 187ms/step - loss: 0.5070 - acc: 0.8644 - val_loss: 0.5202 - val_acc: 0.8636

Epoch 2108/5000 9s 186ms/step - loss: 0.5075 - acc: 0.8648 - val_loss: 0.5222 - val_acc: 0.8609

Epoch 2109/5000 9s 186ms/step - loss: 0.5049 - acc: 0.8649 - val_loss: 0.5200 - val_acc: 0.8623

Epoch 2110/5000 9s 188ms/step - loss: 0.5025 - acc: 0.8691 - val_loss: 0.5246 - val_acc: 0.8613

Epoch 2111/5000 10s 190ms/step - loss: 0.5086 - acc: 0.8646 - val_loss: 0.5212 - val_acc: 0.8598

Epoch 2112/5000 10s 191ms/step - loss: 0.5071 - acc: 0.8658 - val_loss: 0.5213 - val_acc: 0.8636

Epoch 2113/5000 10s 193ms/step - loss: 0.5063 - acc: 0.8657 - val_loss: 0.5229 - val_acc: 0.8599

Epoch 2114/5000 9s 190ms/step - loss: 0.5070 - acc: 0.8652 - val_loss: 0.5194 - val_acc: 0.8629

Epoch 2115/5000 10s 191ms/step - loss: 0.5089 - acc: 0.8648 - val_loss: 0.5208 - val_acc: 0.8608

Epoch 2116/5000 9s 188ms/step - loss: 0.5021 - acc: 0.8666 - val_loss: 0.5199 - val_acc: 0.8595

Epoch 2117/5000 9s 188ms/step - loss: 0.5027 - acc: 0.8682 - val_loss: 0.5228 - val_acc: 0.8613

Epoch 2118/5000 9s 186ms/step - loss: 0.5099 - acc: 0.8648 - val_loss: 0.5247 - val_acc: 0.8596

Epoch 2119/5000 10s 190ms/step - loss: 0.5082 - acc: 0.8667 - val_loss: 0.5223 - val_acc: 0.8598

Epoch 2120/5000 10s 191ms/step - loss: 0.5107 - acc: 0.8639 - val_loss: 0.5200 - val_acc: 0.8608

Epoch 2121/5000 10s 191ms/step - loss: 0.5075 - acc: 0.8651 - val_loss: 0.5216 - val_acc: 0.8601

Epoch 2122/5000 10s 191ms/step - loss: 0.5030 - acc: 0.8646 - val_loss: 0.5156 - val_acc: 0.8647

Epoch 2123/5000 9s 187ms/step - loss: 0.5031 - acc: 0.8668 - val_loss: 0.5280 - val_acc: 0.8588

Epoch 2124/5000 9s 187ms/step - loss: 0.5066 - acc: 0.8663 - val_loss: 0.5184 - val_acc: 0.8622

Epoch 2125/5000 9s 187ms/step - loss: 0.5025 - acc: 0.8660 - val_loss: 0.5189 - val_acc: 0.8624

Epoch 2126/5000 9s 189ms/step - loss: 0.5038 - acc: 0.8676 - val_loss: 0.5244 - val_acc: 0.8605

Epoch 2127/5000 10s 191ms/step - loss: 0.5106 - acc: 0.8634 - val_loss: 0.5201 - val_acc: 0.8634

Epoch 2128/5000 10s 193ms/step - loss: 0.5068 - acc: 0.8653 - val_loss: 0.5264 - val_acc: 0.8617

Epoch 2129/5000 10s 193ms/step - loss: 0.5054 - acc: 0.8635 - val_loss: 0.5238 - val_acc: 0.8604

Epoch 2130/5000 9s 189ms/step - loss: 0.5082 - acc: 0.8652 - val_loss: 0.5238 - val_acc: 0.8599

Epoch 2131/5000 10s 191ms/step - loss: 0.5055 - acc: 0.8659 - val_loss: 0.5204 - val_acc: 0.8627

Epoch 2132/5000 9s 186ms/step - loss: 0.5059 - acc: 0.8656 - val_loss: 0.5225 - val_acc: 0.8619

Epoch 2133/5000 9s 189ms/step - loss: 0.5044 - acc: 0.8671 - val_loss: 0.5210 - val_acc: 0.8617

Epoch 2134/5000 9s 187ms/step - loss: 0.5066 - acc: 0.8676 - val_loss: 0.5216 - val_acc: 0.8609

Epoch 2135/5000 10s 191ms/step - loss: 0.5031 - acc: 0.8667 - val_loss: 0.5206 - val_acc: 0.8612

Epoch 2136/5000 10s 190ms/step - loss: 0.5058 - acc: 0.8651 - val_loss: 0.5200 - val_acc: 0.8621

Epoch 2137/5000 10s 192ms/step - loss: 0.5065 - acc: 0.8653 - val_loss: 0.5189 - val_acc: 0.8634

Epoch 2138/5000 10s 191ms/step - loss: 0.5080 - acc: 0.8654 - val_loss: 0.5173 - val_acc: 0.8638

Epoch 2139/5000 9s 188ms/step - loss: 0.5008 - acc: 0.8676 - val_loss: 0.5229 - val_acc: 0.8609

Epoch 2140/5000 9s 188ms/step - loss: 0.5061 - acc: 0.8642 - val_loss: 0.5203 - val_acc: 0.8622

Epoch 2141/5000 9s 186ms/step - loss: 0.5077 - acc: 0.8655 - val_loss: 0.5212 - val_acc: 0.8614

Epoch 2142/5000 9s 187ms/step - loss: 0.5080 - acc: 0.8662 - val_loss: 0.5197 - val_acc: 0.8609

Epoch 2143/5000 9s 189ms/step - loss: 0.5042 - acc: 0.8669 - val_loss: 0.5261 - val_acc: 0.8592

Epoch 2144/5000 10s 192ms/step - loss: 0.5115 - acc: 0.8645 - val_loss: 0.5163 - val_acc: 0.8638

Epoch 2145/5000 10s 193ms/step - loss: 0.5032 - acc: 0.8663 - val_loss: 0.5202 - val_acc: 0.8608

Epoch 2146/5000 10s 192ms/step - loss: 0.5061 - acc: 0.8647 - val_loss: 0.5189 - val_acc: 0.8614

Epoch 2147/5000 10s 191ms/step - loss: 0.5073 - acc: 0.8665 - val_loss: 0.5224 - val_acc: 0.8612

Epoch 2148/5000 9s 186ms/step - loss: 0.5074 - acc: 0.8647 - val_loss: 0.5217 - val_acc: 0.8605

Epoch 2149/5000 9s 186ms/step - loss: 0.5077 - acc: 0.8646 - val_loss: 0.5221 - val_acc: 0.8611

Epoch 2150/5000 9s 186ms/step - loss: 0.5079 - acc: 0.8641 - val_loss: 0.5205 - val_acc: 0.8609

Epoch 2151/5000 10s 191ms/step - loss: 0.5036 - acc: 0.8665 - val_loss: 0.5173 - val_acc: 0.8648

Epoch 2152/5000 10s 193ms/step - loss: 0.5104 - acc: 0.8642 - val_loss: 0.5295 - val_acc: 0.8571

Epoch 2153/5000 10s 192ms/step - loss: 0.5030 - acc: 0.8656 - val_loss: 0.5240 - val_acc: 0.8589

Epoch 2154/5000 10s 192ms/step - loss: 0.5046 - acc: 0.8664 - val_loss: 0.5217 - val_acc: 0.8620

Epoch 2155/5000 9s 188ms/step - loss: 0.5104 - acc: 0.8644 - val_loss: 0.5199 - val_acc: 0.8598

Epoch 2156/5000 9s 187ms/step - loss: 0.5042 - acc: 0.8657 - val_loss: 0.5159 - val_acc: 0.8643

Epoch 2157/5000 9s 185ms/step - loss: 0.5019 - acc: 0.8681 - val_loss: 0.5242 - val_acc: 0.8617

Epoch 2158/5000 9s 188ms/step - loss: 0.5101 - acc: 0.8635 - val_loss: 0.5187 - val_acc: 0.8628

Epoch 2159/5000 9s 190ms/step - loss: 0.5064 - acc: 0.8645 - val_loss: 0.5147 - val_acc: 0.8623

Epoch 2160/5000 10s 191ms/step - loss: 0.5034 - acc: 0.8663 - val_loss: 0.5175 - val_acc: 0.8623

Epoch 2161/5000 10s 191ms/step - loss: 0.5051 - acc: 0.8670 - val_loss: 0.5236 - val_acc: 0.8593

Epoch 2162/5000 9s 189ms/step - loss: 0.5020 - acc: 0.8666 - val_loss: 0.5165 - val_acc: 0.8641

Epoch 2163/5000 9s 189ms/step - loss: 0.5059 - acc: 0.8657 - val_loss: 0.5250 - val_acc: 0.8592

Epoch 2164/5000 9s 186ms/step - loss: 0.5047 - acc: 0.8649 - val_loss: 0.5237 - val_acc: 0.8613

Epoch 2165/5000 9s 186ms/step - loss: 0.5071 - acc: 0.8654 - val_loss: 0.5203 - val_acc: 0.8624

Epoch 2166/5000 9s 185ms/step - loss: 0.5001 - acc: 0.8673 - val_loss: 0.5168 - val_acc: 0.8634

Epoch 2167/5000 9s 188ms/step - loss: 0.5043 - acc: 0.8654 - val_loss: 0.5185 - val_acc: 0.8608

Epoch 2168/5000 10s 193ms/step - loss: 0.5071 - acc: 0.8649 - val_loss: 0.5225 - val_acc: 0.8629

Epoch 2169/5000 10s 192ms/step - loss: 0.5060 - acc: 0.8655 - val_loss: 0.5231 - val_acc: 0.8597

Epoch 2170/5000 10s 191ms/step - loss: 0.5072 - acc: 0.8665 - val_loss: 0.5236 - val_acc: 0.8621

Epoch 2171/5000 9s 187ms/step - loss: 0.5050 - acc: 0.8676 - val_loss: 0.5193 - val_acc: 0.8618

Epoch 2172/5000 9s 187ms/step - loss: 0.5016 - acc: 0.8674 - val_loss: 0.5173 - val_acc: 0.8625

Epoch 2173/5000 9s 184ms/step - loss: 0.5027 - acc: 0.8657 - val_loss: 0.5197 - val_acc: 0.8621

Epoch 2174/5000 9s 185ms/step - loss: 0.5039 - acc: 0.8668 - val_loss: 0.5183 - val_acc: 0.8637

Epoch 2175/5000 9s 186ms/step - loss: 0.5056 - acc: 0.8670 - val_loss: 0.5257 - val_acc: 0.8614

Epoch 2176/5000 9s 189ms/step - loss: 0.5045 - acc: 0.8651 - val_loss: 0.5171 - val_acc: 0.8641

Epoch 2177/5000 9s 190ms/step - loss: 0.5045 - acc: 0.8661 - val_loss: 0.5215 - val_acc: 0.8637

Epoch 2178/5000 9s 190ms/step - loss: 0.5073 - acc: 0.8629 - val_loss: 0.5189 - val_acc: 0.8600

Epoch 2179/5000 9s 190ms/step - loss: 0.5054 - acc: 0.8658 - val_loss: 0.5240 - val_acc: 0.8620

Epoch 2180/5000 9s 185ms/step - loss: 0.5089 - acc: 0.8648 - val_loss: 0.5281 - val_acc: 0.8587

Epoch 2181/5000 9s 185ms/step - loss: 0.5047 - acc: 0.8660 - val_loss: 0.5253 - val_acc: 0.8614

Epoch 2182/5000 9s 185ms/step - loss: 0.5057 - acc: 0.8654 - val_loss: 0.5213 - val_acc: 0.8594

Epoch 2183/5000 9s 188ms/step - loss: 0.5088 - acc: 0.8657 - val_loss: 0.5214 - val_acc: 0.8608

Epoch 2184/5000 9s 189ms/step - loss: 0.5038 - acc: 0.8663 - val_loss: 0.5253 - val_acc: 0.8584

Epoch 2185/5000 9s 190ms/step - loss: 0.5070 - acc: 0.8658 - val_loss: 0.5223 - val_acc: 0.8584

Epoch 2186/5000 10s 190ms/step - loss: 0.5065 - acc: 0.8650 - val_loss: 0.5250 - val_acc: 0.8602

Epoch 2187/5000 9s 185ms/step - loss: 0.5032 - acc: 0.8669 - val_loss: 0.5148 - val_acc: 0.8636

Epoch 2188/5000 9s 184ms/step - loss: 0.5025 - acc: 0.8668 - val_loss: 0.5234 - val_acc: 0.8595

Epoch 2189/5000 9s 184ms/step - loss: 0.5077 - acc: 0.8638 - val_loss: 0.5249 - val_acc: 0.8600

Epoch 2190/5000 9s 185ms/step - loss: 0.5090 - acc: 0.8635 - val_loss: 0.5256 - val_acc: 0.8606

Epoch 2191/5000 9s 188ms/step - loss: 0.5072 - acc: 0.8648 - val_loss: 0.5211 - val_acc: 0.8605

Epoch 2192/5000 9s 187ms/step - loss: 0.5054 - acc: 0.8649 - val_loss: 0.5203 - val_acc: 0.8608

Epoch 2193/5000 9s 189ms/step - loss: 0.5024 - acc: 0.8666 - val_loss: 0.5207 - val_acc: 0.8618

Epoch 2194/5000 9s 190ms/step - loss: 0.5049 - acc: 0.8652 - val_loss: 0.5231 - val_acc: 0.8608

Epoch 2195/5000 9s 188ms/step - loss: 0.5061 - acc: 0.8658 - val_loss: 0.5225 - val_acc: 0.8606

Epoch 2196/5000 9s 185ms/step - loss: 0.5104 - acc: 0.8637 - val_loss: 0.5259 - val_acc: 0.8589

Epoch 2197/5000 9s 184ms/step - loss: 0.5042 - acc: 0.8657 - val_loss: 0.5193 - val_acc: 0.8633

Epoch 2198/5000 9s 185ms/step - loss: 0.5036 - acc: 0.8664 - val_loss: 0.5178 - val_acc: 0.8663

Epoch 2199/5000 9s 187ms/step - loss: 0.5066 - acc: 0.8675 - val_loss: 0.5172 - val_acc: 0.8633

Epoch 2200/5000 9s 188ms/step - loss: 0.5095 - acc: 0.8641 - val_loss: 0.5165 - val_acc: 0.8624

Epoch 2201/5000 10s 190ms/step - loss: 0.5089 - acc: 0.8634 - val_loss: 0.5183 - val_acc: 0.8635

Epoch 2202/5000 9s 189ms/step - loss: 0.5069 - acc: 0.8649 - val_loss: 0.5179 - val_acc: 0.8624

Epoch 2203/5000 9s 184ms/step - loss: 0.5116 - acc: 0.8632 - val_loss: 0.5259 - val_acc: 0.8591

Epoch 2204/5000 9s 187ms/step - loss: 0.5066 - acc: 0.8659 - val_loss: 0.5187 - val_acc: 0.8611

Epoch 2205/5000 9s 181ms/step - loss: 0.5052 - acc: 0.8655 - val_loss: 0.5187 - val_acc: 0.8618

Epoch 2206/5000 9s 186ms/step - loss: 0.5067 - acc: 0.8650 - val_loss: 0.5182 - val_acc: 0.8619

Epoch 2207/5000 9s 185ms/step - loss: 0.5091 - acc: 0.8644 - val_loss: 0.5177 - val_acc: 0.8624

Epoch 2208/5000 10s 192ms/step - loss: 0.5038 - acc: 0.8661 - val_loss: 0.5194 - val_acc: 0.8607

Epoch 2209/5000 10s 190ms/step - loss: 0.5070 - acc: 0.8640 - val_loss: 0.5176 - val_acc: 0.8623

Epoch 2210/5000 10s 190ms/step - loss: 0.5076 - acc: 0.8637 - val_loss: 0.5210 - val_acc: 0.8609

Epoch 2211/5000 9s 189ms/step - loss: 0.5018 - acc: 0.8666 - val_loss: 0.5191 - val_acc: 0.8606

Epoch 2212/5000 9s 186ms/step - loss: 0.5055 - acc: 0.8661 - val_loss: 0.5209 - val_acc: 0.8609

Epoch 2213/5000 9s 185ms/step - loss: 0.5056 - acc: 0.8640 - val_loss: 0.5210 - val_acc: 0.8596

Epoch 2214/5000 9s 184ms/step - loss: 0.5034 - acc: 0.8672 - val_loss: 0.5182 - val_acc: 0.8618

Epoch 2215/5000 9s 187ms/step - loss: 0.5066 - acc: 0.8649 - val_loss: 0.5201 - val_acc: 0.8603

Epoch 2216/5000 9s 189ms/step - loss: 0.5060 - acc: 0.8652 - val_loss: 0.5190 - val_acc: 0.8597

Epoch 2217/5000 10s 190ms/step - loss: 0.5062 - acc: 0.8649 - val_loss: 0.5234 - val_acc: 0.8583

Epoch 2218/5000 10s 192ms/step - loss: 0.5044 - acc: 0.8663 - val_loss: 0.5167 - val_acc: 0.8638

Epoch 2219/5000 9s 185ms/step - loss: 0.5063 - acc: 0.8647 - val_loss: 0.5253 - val_acc: 0.8575

Epoch 2220/5000 9s 186ms/step - loss: 0.5096 - acc: 0.8649 - val_loss: 0.5245 - val_acc: 0.8585

Epoch 2221/5000 9s 184ms/step - loss: 0.5083 - acc: 0.8634 - val_loss: 0.5212 - val_acc: 0.8588

Epoch 2222/5000 9s 187ms/step - loss: 0.5060 - acc: 0.8656 - val_loss: 0.5202 - val_acc: 0.8603

Epoch 2223/5000 9s 188ms/step - loss: 0.5032 - acc: 0.8669 - val_loss: 0.5179 - val_acc: 0.8603

Epoch 2224/5000 9s 190ms/step - loss: 0.5051 - acc: 0.8658 - val_loss: 0.5224 - val_acc: 0.8608

Epoch 2225/5000 10s 191ms/step - loss: 0.5022 - acc: 0.8663 - val_loss: 0.5204 - val_acc: 0.8615

Epoch 2226/5000 9s 189ms/step - loss: 0.5044 - acc: 0.8668 - val_loss: 0.5194 - val_acc: 0.8603

Epoch 2227/5000 9s 188ms/step - loss: 0.5066 - acc: 0.8658 - val_loss: 0.5194 - val_acc: 0.8612

Epoch 2228/5000 9s 183ms/step - loss: 0.5091 - acc: 0.8642 - val_loss: 0.5199 - val_acc: 0.8590

Epoch 2229/5000 9s 185ms/step - loss: 0.5023 - acc: 0.8648 - val_loss: 0.5202 - val_acc: 0.8637

Epoch 2230/5000 9s 185ms/step - loss: 0.5041 - acc: 0.8646 - val_loss: 0.5196 - val_acc: 0.8622

Epoch 2231/5000 9s 189ms/step - loss: 0.5014 - acc: 0.8668 - val_loss: 0.5193 - val_acc: 0.8612

Epoch 2232/5000 9s 188ms/step - loss: 0.5031 - acc: 0.8663 - val_loss: 0.5227 - val_acc: 0.8614

Epoch 2233/5000 10s 192ms/step - loss: 0.5037 - acc: 0.8661 - val_loss: 0.5181 - val_acc: 0.8610

Epoch 2234/5000 10s 192ms/step - loss: 0.5067 - acc: 0.8666 - val_loss: 0.5257 - val_acc: 0.8587

Epoch 2235/5000 9s 186ms/step - loss: 0.5068 - acc: 0.8649 - val_loss: 0.5163 - val_acc: 0.8618

Epoch 2236/5000 9s 186ms/step - loss: 0.5071 - acc: 0.8639 - val_loss: 0.5234 - val_acc: 0.8599

Epoch 2237/5000 9s 185ms/step - loss: 0.5042 - acc: 0.8648 - val_loss: 0.5147 - val_acc: 0.8623

Epoch 2238/5000 9s 185ms/step - loss: 0.5053 - acc: 0.8652 - val_loss: 0.5217 - val_acc: 0.8588

Epoch 2239/5000 9s 186ms/step - loss: 0.5070 - acc: 0.8636 - val_loss: 0.5205 - val_acc: 0.8624

Epoch 2240/5000 10s 192ms/step - loss: 0.5024 - acc: 0.8658 - val_loss: 0.5219 - val_acc: 0.8639

Epoch 2241/5000 10s 191ms/step - loss: 0.5069 - acc: 0.8651 - val_loss: 0.5211 - val_acc: 0.8619

Epoch 2242/5000 9s 188ms/step - loss: 0.5040 - acc: 0.8658 - val_loss: 0.5152 - val_acc: 0.8622

Epoch 2243/5000 9s 188ms/step - loss: 0.5036 - acc: 0.8651 - val_loss: 0.5222 - val_acc: 0.8599

Epoch 2244/5000 9s 185ms/step - loss: 0.5068 - acc: 0.8635 - val_loss: 0.5177 - val_acc: 0.8611

Epoch 2245/5000 9s 186ms/step - loss: 0.5059 - acc: 0.8650 - val_loss: 0.5247 - val_acc: 0.8600

Epoch 2246/5000 9s 184ms/step - loss: 0.5041 - acc: 0.8661 - val_loss: 0.5194 - val_acc: 0.8609

Epoch 2247/5000 9s 188ms/step - loss: 0.5058 - acc: 0.8652 - val_loss: 0.5250 - val_acc: 0.8607

Epoch 2248/5000 10s 191ms/step - loss: 0.5072 - acc: 0.8649 - val_loss: 0.5225 - val_acc: 0.8623

Epoch 2249/5000 10s 191ms/step - loss: 0.5071 - acc: 0.8650 - val_loss: 0.5214 - val_acc: 0.8609

Epoch 2250/5000 10s 192ms/step - loss: 0.5040 - acc: 0.8660 - val_loss: 0.5235 - val_acc: 0.8594

Epoch 2251/5000 9s 188ms/step - loss: 0.5052 - acc: 0.8667 - val_loss: 0.5213 - val_acc: 0.8615

Epoch 2252/5000 9s 187ms/step - loss: 0.5055 - acc: 0.8664 - val_loss: 0.5178 - val_acc: 0.8604

Epoch 2253/5000 9s 184ms/step - loss: 0.5074 - acc: 0.8666 - val_loss: 0.5228 - val_acc: 0.8605

Epoch 2254/5000 9s 187ms/step - loss: 0.5065 - acc: 0.8657 - val_loss: 0.5181 - val_acc: 0.8640

Epoch 2255/5000 9s 187ms/step - loss: 0.5033 - acc: 0.8668 - val_loss: 0.5194 - val_acc: 0.8625

Epoch 2256/5000 9s 190ms/step - loss: 0.5065 - acc: 0.8644 - val_loss: 0.5209 - val_acc: 0.8599

Epoch 2257/5000 9s 190ms/step - loss: 0.5064 - acc: 0.8645 - val_loss: 0.5248 - val_acc: 0.8588

Epoch 2258/5000 9s 188ms/step - loss: 0.5070 - acc: 0.8645 - val_loss: 0.5210 - val_acc: 0.8633

Epoch 2259/5000 9s 189ms/step - loss: 0.5039 - acc: 0.8642 - val_loss: 0.5231 - val_acc: 0.8633

Epoch 2260/5000 9s 185ms/step - loss: 0.5078 - acc: 0.8641 - val_loss: 0.5208 - val_acc: 0.8609

Epoch 2261/5000 9s 185ms/step - loss: 0.5001 - acc: 0.8675 - val_loss: 0.5218 - val_acc: 0.8633

Epoch 2262/5000 9s 186ms/step - loss: 0.5063 - acc: 0.8653 - val_loss: 0.5183 - val_acc: 0.8602

Epoch 2263/5000 9s 186ms/step - loss: 0.5039 - acc: 0.8665 - val_loss: 0.5264 - val_acc: 0.8582

Epoch 2264/5000 10s 190ms/step - loss: 0.5058 - acc: 0.8660 - val_loss: 0.5223 - val_acc: 0.8605

Epoch 2265/5000 9s 190ms/step - loss: 0.5027 - acc: 0.8659 - val_loss: 0.5194 - val_acc: 0.8629

Epoch 2266/5000 9s 189ms/step - loss: 0.5042 - acc: 0.8663 - val_loss: 0.5171 - val_acc: 0.8610

Epoch 2267/5000 9s 185ms/step - loss: 0.5032 - acc: 0.8662 - val_loss: 0.5224 - val_acc: 0.8594

Epoch 2268/5000 9s 186ms/step - loss: 0.5089 - acc: 0.8636 - val_loss: 0.5156 - val_acc: 0.8617

Epoch 2269/5000 9s 183ms/step - loss: 0.5065 - acc: 0.8629 - val_loss: 0.5170 - val_acc: 0.8615

Epoch 2270/5000 9s 185ms/step - loss: 0.5052 - acc: 0.8653 - val_loss: 0.5179 - val_acc: 0.8612

Epoch 2271/5000 9s 187ms/step - loss: 0.5058 - acc: 0.8646 - val_loss: 0.5214 - val_acc: 0.8610

Epoch 2272/5000 9s 188ms/step - loss: 0.5054 - acc: 0.8655 - val_loss: 0.5210 - val_acc: 0.8616

Epoch 2273/5000 10s 196ms/step - loss: 0.5026 - acc: 0.8650 - val_loss: 0.5182 - val_acc: 0.8612

Epoch 2274/5000 10s 194ms/step - loss: 0.5045 - acc: 0.8653 - val_loss: 0.5181 - val_acc: 0.8621

Epoch 2275/5000 9s 187ms/step - loss: 0.5019 - acc: 0.8674 - val_loss: 0.5220 - val_acc: 0.8617

Epoch 2276/5000 9s 185ms/step - loss: 0.5060 - acc: 0.8633 - val_loss: 0.5245 - val_acc: 0.8592

Epoch 2277/5000 9s 182ms/step - loss: 0.5051 - acc: 0.8664 - val_loss: 0.5211 - val_acc: 0.8593

Epoch 2278/5000 9s 186ms/step - loss: 0.5048 - acc: 0.8640 - val_loss: 0.5253 - val_acc: 0.8605

Epoch 2279/5000 9s 189ms/step - loss: 0.5082 - acc: 0.8631 - val_loss: 0.5198 - val_acc: 0.8642

Epoch 2280/5000 10s 192ms/step - loss: 0.5044 - acc: 0.8660 - val_loss: 0.5190 - val_acc: 0.8618

Epoch 2281/5000 10s 192ms/step - loss: 0.5053 - acc: 0.8660 - val_loss: 0.5215 - val_acc: 0.8586

Epoch 2282/5000 10s 191ms/step - loss: 0.5032 - acc: 0.8647 - val_loss: 0.5280 - val_acc: 0.8604

Epoch 2283/5000 9s 186ms/step - loss: 0.5086 - acc: 0.8635 - val_loss: 0.5234 - val_acc: 0.8564

Epoch 2284/5000 9s 187ms/step - loss: 0.5015 - acc: 0.8669 - val_loss: 0.5199 - val_acc: 0.8601

Epoch 2285/5000 9s 183ms/step - loss: 0.5049 - acc: 0.8642 - val_loss: 0.5206 - val_acc: 0.8607

Epoch 2286/5000 9s 187ms/step - loss: 0.5046 - acc: 0.8644 - val_loss: 0.5229 - val_acc: 0.8590

Epoch 2287/5000 9s 186ms/step - loss: 0.5042 - acc: 0.8663 - val_loss: 0.5194 - val_acc: 0.8618

Epoch 2288/5000 9s 188ms/step - loss: 0.5001 - acc: 0.8680 - val_loss: 0.5266 - val_acc: 0.8598

Epoch 2289/5000 10s 191ms/step - loss: 0.5044 - acc: 0.8660 - val_loss: 0.5180 - val_acc: 0.8626

Epoch 2290/5000
9s 189ms/step - loss: 0.5053 - acc: 0.8638 - val_loss: 0.5227 - val_acc: 0.8600 Epoch 2291/5000 9s 189ms/step - loss: 0.5054 - acc: 0.8645 - val_loss: 0.5171 - val_acc: 0.8617 Epoch 2292/5000 9s 182ms/step - loss: 0.5035 - acc: 0.8667 - val_loss: 0.5225 - val_acc: 0.8587 Epoch 2293/5000 9s 186ms/step - loss: 0.5067 - acc: 0.8657 - val_loss: 0.5227 - val_acc: 0.8621 Epoch 2294/5000 9s 185ms/step - loss: 0.4980 - acc: 0.8681 - val_loss: 0.5199 - val_acc: 0.8593 Epoch 2295/5000 9s 188ms/step - loss: 0.5036 - acc: 0.8653 - val_loss: 0.5232 - val_acc: 0.8627 Epoch 2296/5000 10s 190ms/step - loss: 0.5046 - acc: 0.8648 - val_loss: 0.5222 - val_acc: 0.8598 Epoch 2297/5000 10s 191ms/step - loss: 0.5052 - acc: 0.8657 - val_loss: 0.5163 - val_acc: 0.8621 Epoch 2298/5000 9s 189ms/step - loss: 0.5079 - acc: 0.8636 - val_loss: 0.5138 - val_acc: 0.8651 Epoch 2299/5000 9s 189ms/step - loss: 0.5021 - acc: 0.8646 - val_loss: 0.5184 - val_acc: 0.8637 Epoch 2300/5000 9s 186ms/step - loss: 0.5071 - acc: 0.8634 - val_loss: 0.5206 - val_acc: 0.8629 Epoch 2301/5000 9s 182ms/step - loss: 0.5054 - acc: 0.8657 - val_loss: 0.5240 - val_acc: 0.8620 Epoch 2302/5000 9s 185ms/step - loss: 0.5034 - acc: 0.8658 - val_loss: 0.5235 - val_acc: 0.8608 Epoch 2303/5000 9s 187ms/step - loss: 0.5092 - acc: 0.8627 - val_loss: 0.5228 - val_acc: 0.8604 Epoch 2304/5000 10s 191ms/step - loss: 0.5026 - acc: 0.8664 - val_loss: 0.5142 - val_acc: 0.8619 Epoch 2305/5000 9s 190ms/step - loss: 0.5031 - acc: 0.8655 - val_loss: 0.5167 - val_acc: 0.8627 Epoch 2306/5000 9s 188ms/step - loss: 0.5065 - acc: 0.8655 - val_loss: 0.5212 - val_acc: 0.8631 Epoch 2307/5000 9s 190ms/step - loss: 0.5036 - acc: 0.8675 - val_loss: 0.5190 - val_acc: 0.8609 Epoch 2308/5000 9s 184ms/step - loss: 0.5017 - acc: 0.8668 - val_loss: 0.5247 - val_acc: 0.8598 Epoch 2309/5000 9s 186ms/step - loss: 0.5043 - acc: 0.8639 - val_loss: 0.5142 - val_acc: 0.8641 Epoch 2310/5000 9s 185ms/step - loss: 0.5070 - acc: 0.8637 - val_loss: 0.5193 - val_acc: 
0.8622 Epoch 2311/5000 9s 188ms/step - loss: 0.5043 - acc: 0.8650 - val_loss: 0.5229 - val_acc: 0.8637 Epoch 2312/5000 9s 189ms/step - loss: 0.5068 - acc: 0.8645 - val_loss: 0.5190 - val_acc: 0.8607 Epoch 2313/5000 10s 190ms/step - loss: 0.5041 - acc: 0.8653 - val_loss: 0.5195 - val_acc: 0.8620 Epoch 2314/5000 10s 191ms/step - loss: 0.5037 - acc: 0.8640 - val_loss: 0.5208 - val_acc: 0.8613 Epoch 2315/5000 9s 186ms/step - loss: 0.5041 - acc: 0.8652 - val_loss: 0.5194 - val_acc: 0.8625 Epoch 2316/5000 9s 186ms/step - loss: 0.5075 - acc: 0.8632 - val_loss: 0.5149 - val_acc: 0.8620 Epoch 2317/5000 9s 185ms/step - loss: 0.5038 - acc: 0.8657 - val_loss: 0.5223 - val_acc: 0.8617 Epoch 2318/5000 9s 186ms/step - loss: 0.5053 - acc: 0.8643 - val_loss: 0.5190 - val_acc: 0.8634 Epoch 2319/5000 9s 187ms/step - loss: 0.5028 - acc: 0.8662 - val_loss: 0.5242 - val_acc: 0.8596 Epoch 2320/5000 9s 189ms/step - loss: 0.5017 - acc: 0.8669 - val_loss: 0.5195 - val_acc: 0.8606 Epoch 2321/5000 10s 191ms/step - loss: 0.5055 - acc: 0.8646 - val_loss: 0.5220 - val_acc: 0.8609 Epoch 2322/5000 10s 190ms/step - loss: 0.5034 - acc: 0.8655 - val_loss: 0.5179 - val_acc: 0.8606 Epoch 2323/5000 9s 189ms/step - loss: 0.5033 - acc: 0.8668 - val_loss: 0.5193 - val_acc: 0.8605 Epoch 2324/5000 9s 183ms/step - loss: 0.5032 - acc: 0.8663 - val_loss: 0.5221 - val_acc: 0.8610 Epoch 2325/5000 9s 183ms/step - loss: 0.5066 - acc: 0.8649 - val_loss: 0.5202 - val_acc: 0.8610 Epoch 2326/5000 9s 184ms/step - loss: 0.5042 - acc: 0.8660 - val_loss: 0.5222 - val_acc: 0.8597 Epoch 2327/5000 9s 187ms/step - loss: 0.5091 - acc: 0.8655 - val_loss: 0.5203 - val_acc: 0.8598 Epoch 2328/5000 9s 188ms/step - loss: 0.5090 - acc: 0.8636 - val_loss: 0.5196 - val_acc: 0.8603 Epoch 2329/5000 10s 192ms/step - loss: 0.5030 - acc: 0.8658 - val_loss: 0.5255 - val_acc: 0.8602 Epoch 2330/5000 9s 188ms/step - loss: 0.5063 - acc: 0.8650 - val_loss: 0.5205 - val_acc: 0.8606 Epoch 2331/5000 9s 188ms/step - loss: 0.5038 - acc: 0.8659 - 
val_loss: 0.5256 - val_acc: 0.8584 Epoch 2332/5000 9s 186ms/step - loss: 0.5077 - acc: 0.8642 - val_loss: 0.5186 - val_acc: 0.8618 Epoch 2333/5000 9s 183ms/step - loss: 0.5051 - acc: 0.8643 - val_loss: 0.5204 - val_acc: 0.8586 Epoch 2334/5000 9s 187ms/step - loss: 0.5008 - acc: 0.8670 - val_loss: 0.5186 - val_acc: 0.8607 Epoch 2335/5000 9s 185ms/step - loss: 0.5044 - acc: 0.8664 - val_loss: 0.5204 - val_acc: 0.8623 Epoch 2336/5000 10s 191ms/step - loss: 0.5059 - acc: 0.8646 - val_loss: 0.5191 - val_acc: 0.8616 Epoch 2337/5000 10s 191ms/step - loss: 0.5013 - acc: 0.8675 - val_loss: 0.5189 - val_acc: 0.8629 Epoch 2338/5000 9s 189ms/step - loss: 0.5069 - acc: 0.8645 - val_loss: 0.5244 - val_acc: 0.8630 Epoch 2339/5000 10s 190ms/step - loss: 0.5085 - acc: 0.8638 - val_loss: 0.5189 - val_acc: 0.8609 Epoch 2340/5000 9s 184ms/step - loss: 0.5009 - acc: 0.8653 - val_loss: 0.5210 - val_acc: 0.8609 Epoch 2341/5000 9s 187ms/step - loss: 0.5008 - acc: 0.8657 - val_loss: 0.5144 - val_acc: 0.8628 Epoch 2342/5000 9s 184ms/step - loss: 0.5017 - acc: 0.8661 - val_loss: 0.5251 - val_acc: 0.8606 Epoch 2343/5000 9s 186ms/step - loss: 0.5069 - acc: 0.8646 - val_loss: 0.5204 - val_acc: 0.8608 Epoch 2344/5000 9s 189ms/step - loss: 0.4997 - acc: 0.8675 - val_loss: 0.5203 - val_acc: 0.8651 Epoch 2345/5000 9s 189ms/step - loss: 0.5015 - acc: 0.8672 - val_loss: 0.5220 - val_acc: 0.8610 Epoch 2346/5000 10s 192ms/step - loss: 0.5005 - acc: 0.8656 - val_loss: 0.5200 - val_acc: 0.8620 Epoch 2347/5000 9s 186ms/step - loss: 0.5070 - acc: 0.8636 - val_loss: 0.5230 - val_acc: 0.8607 Epoch 2348/5000 9s 189ms/step - loss: 0.5018 - acc: 0.8657 - val_loss: 0.5175 - val_acc: 0.8611 Epoch 2349/5000 9s 182ms/step - loss: 0.5042 - acc: 0.8656 - val_loss: 0.5244 - val_acc: 0.8603 Epoch 2350/5000 9s 187ms/step - loss: 0.5062 - acc: 0.8656 - val_loss: 0.5182 - val_acc: 0.8614 Epoch 2351/5000 9s 184ms/step - loss: 0.5053 - acc: 0.8642 - val_loss: 0.5183 - val_acc: 0.8611 Epoch 2352/5000 10s 192ms/step - loss: 
0.5094 - acc: 0.8640 - val_loss: 0.5202 - val_acc: 0.8589 Epoch 2353/5000 10s 191ms/step - loss: 0.5077 - acc: 0.8648 - val_loss: 0.5176 - val_acc: 0.8630 Epoch 2354/5000 9s 189ms/step - loss: 0.5082 - acc: 0.8635 - val_loss: 0.5217 - val_acc: 0.8620 Epoch 2355/5000 9s 189ms/step - loss: 0.5024 - acc: 0.8656 - val_loss: 0.5174 - val_acc: 0.8627 Epoch 2356/5000 9s 185ms/step - loss: 0.5014 - acc: 0.8653 - val_loss: 0.5185 - val_acc: 0.8615 Epoch 2357/5000 9s 183ms/step - loss: 0.5070 - acc: 0.8650 - val_loss: 0.5207 - val_acc: 0.8630 Epoch 2358/5000 9s 182ms/step - loss: 0.5031 - acc: 0.8648 - val_loss: 0.5190 - val_acc: 0.8649 Epoch 2359/5000 9s 186ms/step - loss: 0.5080 - acc: 0.8630 - val_loss: 0.5172 - val_acc: 0.8636 Epoch 2360/5000 9s 189ms/step - loss: 0.5041 - acc: 0.8661 - val_loss: 0.5220 - val_acc: 0.8597 Epoch 2361/5000 10s 191ms/step - loss: 0.5029 - acc: 0.8654 - val_loss: 0.5226 - val_acc: 0.8602 Epoch 2362/5000 9s 188ms/step - loss: 0.4963 - acc: 0.8706 - val_loss: 0.5257 - val_acc: 0.8605 Epoch 2363/5000 9s 189ms/step - loss: 0.4999 - acc: 0.8673 - val_loss: 0.5233 - val_acc: 0.8563 Epoch 2364/5000 9s 184ms/step - loss: 0.5031 - acc: 0.8658 - val_loss: 0.5160 - val_acc: 0.8612 Epoch 2365/5000 9s 184ms/step - loss: 0.5002 - acc: 0.8673 - val_loss: 0.5206 - val_acc: 0.8605 Epoch 2366/5000 9s 185ms/step - loss: 0.5039 - acc: 0.8649 - val_loss: 0.5190 - val_acc: 0.8608 Epoch 2367/5000 9s 185ms/step - loss: 0.5082 - acc: 0.8639 - val_loss: 0.5184 - val_acc: 0.8623 Epoch 2368/5000 9s 189ms/step - loss: 0.5051 - acc: 0.8658 - val_loss: 0.5175 - val_acc: 0.8611 Epoch 2369/5000 10s 191ms/step - loss: 0.5079 - acc: 0.8635 - val_loss: 0.5172 - val_acc: 0.8612 Epoch 2370/5000 9s 189ms/step - loss: 0.5056 - acc: 0.8647 - val_loss: 0.5243 - val_acc: 0.8609 Epoch 2371/5000 9s 186ms/step - loss: 0.5034 - acc: 0.8654 - val_loss: 0.5149 - val_acc: 0.8632 Epoch 2372/5000 9s 185ms/step - loss: 0.5055 - acc: 0.8625 - val_loss: 0.5204 - val_acc: 0.8613 Epoch 2373/5000 9s 
183ms/step - loss: 0.5015 - acc: 0.8663 - val_loss: 0.5233 - val_acc: 0.8620 Epoch 2374/5000 9s 185ms/step - loss: 0.5034 - acc: 0.8649 - val_loss: 0.5199 - val_acc: 0.8624 Epoch 2375/5000 9s 186ms/step - loss: 0.5043 - acc: 0.8667 - val_loss: 0.5198 - val_acc: 0.8643 Epoch 2376/5000 10s 190ms/step - loss: 0.5060 - acc: 0.8655 - val_loss: 0.5159 - val_acc: 0.8641 Epoch 2377/5000 10s 190ms/step - loss: 0.5025 - acc: 0.8661 - val_loss: 0.5171 - val_acc: 0.8600 Epoch 2378/5000 9s 190ms/step - loss: 0.5058 - acc: 0.8643 - val_loss: 0.5229 - val_acc: 0.8596 Epoch 2379/5000 9s 186ms/step - loss: 0.5067 - acc: 0.8649 - val_loss: 0.5204 - val_acc: 0.8585 Epoch 2380/5000 9s 185ms/step - loss: 0.5039 - acc: 0.8648 - val_loss: 0.5211 - val_acc: 0.8616 Epoch 2381/5000 9s 186ms/step - loss: 0.5031 - acc: 0.8650 - val_loss: 0.5184 - val_acc: 0.8609 Epoch 2382/5000 9s 185ms/step - loss: 0.5069 - acc: 0.8644 - val_loss: 0.5213 - val_acc: 0.8594 Epoch 2383/5000 9s 189ms/step - loss: 0.5071 - acc: 0.8639 - val_loss: 0.5227 - val_acc: 0.8589 Epoch 2384/5000 9s 189ms/step - loss: 0.5073 - acc: 0.8663 - val_loss: 0.5190 - val_acc: 0.8623 Epoch 2385/5000 10s 193ms/step - loss: 0.5056 - acc: 0.8662 - val_loss: 0.5171 - val_acc: 0.8633 Epoch 2386/5000 9s 188ms/step - loss: 0.5086 - acc: 0.8638 - val_loss: 0.5144 - val_acc: 0.8637 Epoch 2387/5000 9s 188ms/step - loss: 0.4997 - acc: 0.8667 - val_loss: 0.5166 - val_acc: 0.8624 Epoch 2388/5000 9s 184ms/step - loss: 0.4999 - acc: 0.8663 - val_loss: 0.5198 - val_acc: 0.8641 Epoch 2389/5000 9s 185ms/step - loss: 0.5057 - acc: 0.8638 - val_loss: 0.5206 - val_acc: 0.8614 Epoch 2390/5000 9s 185ms/step - loss: 0.5030 - acc: 0.8666 - val_loss: 0.5173 - val_acc: 0.8608 Epoch 2391/5000 9s 188ms/step - loss: 0.5021 - acc: 0.8650 - val_loss: 0.5237 - val_acc: 0.8592 Epoch 2392/5000 9s 190ms/step - loss: 0.5059 - acc: 0.8660 - val_loss: 0.5201 - val_acc: 0.8632 Epoch 2393/5000 10s 192ms/step - loss: 0.5029 - acc: 0.8650 - val_loss: 0.5208 - val_acc: 
0.8596 Epoch 2394/5000 10s 191ms/step - loss: 0.5014 - acc: 0.8664 - val_loss: 0.5260 - val_acc: 0.8575 Epoch 2395/5000 9s 185ms/step - loss: 0.5004 - acc: 0.8677 - val_loss: 0.5158 - val_acc: 0.8635 Epoch 2396/5000 9s 187ms/step - loss: 0.5001 - acc: 0.8665 - val_loss: 0.5234 - val_acc: 0.8596 Epoch 2397/5000 9s 182ms/step - loss: 0.5038 - acc: 0.8644 - val_loss: 0.5157 - val_acc: 0.8631 Epoch 2398/5000 9s 187ms/step - loss: 0.5047 - acc: 0.8655 - val_loss: 0.5172 - val_acc: 0.8619 Epoch 2399/5000 9s 190ms/step - loss: 0.5061 - acc: 0.8647 - val_loss: 0.5225 - val_acc: 0.8602 Epoch 2400/5000 10s 191ms/step - loss: 0.5054 - acc: 0.8632 - val_loss: 0.5205 - val_acc: 0.8616 Epoch 2401/5000 10s 193ms/step - loss: 0.5061 - acc: 0.8649 - val_loss: 0.5271 - val_acc: 0.8580

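The program has not finished running yet, but judging from the log so far, the model is probably underfitting: training accuracy hovers around 0.865 and validation accuracy around 0.861, so the two are nearly equal, and neither has improved noticeably over these epochs.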
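To make the underfitting judgement above a little more reproducible, here is a minimal sketch that parses Keras epoch lines like the ones in this log and compares mean training and validation accuracy over the last few epochs. The regex, the hypothetical helpers `parse_log` and `diagnose`, the 50-epoch window and the 0.02 gap threshold are my own assumptions, not part of the original program. Note that a small gap only rules out overfitting; calling it underfitting additionally assumes the accuracy itself is unsatisfactory.

```python
import re
from statistics import mean

# Matches one Keras epoch summary, e.g.
# "Epoch 2228/5000 9s 183ms/step - loss: 0.5091 - acc: 0.8642 - val_loss: 0.5199 - val_acc: 0.8590"
# The lazy ".*?" stops at the first "loss:", which is the training loss.
EPOCH_RE = re.compile(
    r"Epoch (\d+)/\d+.*?"
    r"loss: ([\d.]+) - acc: ([\d.]+) - "
    r"val_loss: ([\d.]+) - val_acc: ([\d.]+)"
)


def parse_log(text):
    """Hypothetical helper: return (epoch, loss, acc, val_loss, val_acc) tuples."""
    rows = []
    for m in EPOCH_RE.finditer(text):
        loss, acc, val_loss, val_acc = (float(g) for g in m.groups()[1:])
        rows.append((int(m.group(1)), loss, acc, val_loss, val_acc))
    return rows


def diagnose(rows, last_n=50, gap_threshold=0.02):
    """Hypothetical helper: compare mean train/val accuracy over the last epochs.

    last_n and gap_threshold are assumed values, not taken from the original post.
    """
    recent = rows[-last_n:]
    train_acc = mean(r[2] for r in recent)
    val_acc = mean(r[4] for r in recent)
    gap = train_acc - val_acc
    if gap > gap_threshold:
        verdict = "overfitting: training accuracy is well above validation accuracy"
    else:
        verdict = "gap is small: if accuracy is also too low, the model is likely underfitting"
    return train_acc, val_acc, gap, verdict


# The last four epochs of the log above.
SAMPLE_LOG = """
Epoch 2398/5000 9s 187ms/step - loss: 0.5047 - acc: 0.8655 - val_loss: 0.5172 - val_acc: 0.8619
Epoch 2399/5000 9s 190ms/step - loss: 0.5061 - acc: 0.8647 - val_loss: 0.5225 - val_acc: 0.8602
Epoch 2400/5000 10s 191ms/step - loss: 0.5054 - acc: 0.8632 - val_loss: 0.5205 - val_acc: 0.8616
Epoch 2401/5000 10s 193ms/step - loss: 0.5061 - acc: 0.8649 - val_loss: 0.5271 - val_acc: 0.8580
"""

rows = parse_log(SAMPLE_LOG)
train_acc, val_acc, gap, verdict = diagnose(rows)
print(f"train acc {train_acc:.4f}, val acc {val_acc:.4f}, gap {gap:.4f} -> {verdict}")
```

If the `History` object returned by `model.fit` is still available, its `history['acc']` and `history['val_acc']` lists carry the same numbers, so the regex step can be skipped.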

Minghang Zhao, Shisheng Zhong, Xuyun Fu, Baoping Tang, Shaojiang Dong, Michael Pecht, Deep Residual Networks with Adaptively Parametric Rectifier Linear Units for Fault Diagnosis, IEEE Transactions on Industrial Electronics, 2020, DOI: 10.1109/TIE.2020.2972458