Hive Installation and Connecting with Beeline

1. Download

wget https://mirrors.tuna.tsinghua.edu.cn/apache/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz
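Before extracting, it can be worth checking the archive against the SHA-256 checksum that Apache projects normally publish next to their release archives. This is a minimal sketch that assumes GNU coreutils `sha256sum` is available. `verify_sha256` is a hypothetical helper name, and you must copy the expected hash yourself because this guide does not give the checksum file's exact name or location:

```shell
#!/bin/sh
# verify_sha256 FILE EXPECTED_HASH (hypothetical helper, not part of Hive)
# Returns 0 if the SHA-256 digest of FILE equals EXPECTED_HASH, 1 otherwise.
verify_sha256() {
    file=$1
    expected=$2
    # sha256sum prints "<hash>  <file>"; keep only the hash field
    actual=$(sha256sum "$file" | cut -d ' ' -f 1)
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
        return 0
    fi
    echo "MISMATCH: $file (got $actual)" >&2
    return 1
}

# Example (the expected hash is a placeholder you must fill in):
# verify_sha256 apache-hive-3.1.2-bin.tar.gz <expected-sha256>
```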

2. Extract and install

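The archive downloaded above is apache-hive-3.1.2-bin.tar.gz and extracts to apache-hive-3.1.2-bin, so rename the directory to match HIVE_HOME below:

tar -zxvf apache-hive-3.1.2-bin.tar.gz -C /usr/local

mv /usr/local/apache-hive-3.1.2-bin /usr/local/hive-3.1.2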

vi /etc/profile

export HIVE_HOME=/usr/local/hive-3.1.2

export PATH=$PATH:$HIVE_HOME/bin

source /etc/profile
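Editing /etc/profile by hand a second time (for example when repeating the install) easily leaves duplicate export lines. Below is a small idempotent alternative sketched in POSIX sh. `append_once` is a hypothetical helper name and is not part of Hive:

```shell
#!/bin/sh
# append_once FILE LINE (hypothetical helper)
# Appends LINE to FILE only if FILE does not already contain it as a whole line.
append_once() {
    # -q quiet, -x match the whole line, -F treat LINE as a fixed string (no regex)
    if ! grep -qxF -- "$2" "$1" 2>/dev/null; then
        printf '%s\n' "$2" >> "$1"
    fi
}

# Example (run as root). Single quotes keep $PATH and $HIVE_HOME unexpanded,
# so they are evaluated later, when /etc/profile is sourced:
# append_once /etc/profile 'export HIVE_HOME=/usr/local/hive-3.1.2'
# append_once /etc/profile 'export PATH=$PATH:$HIVE_HOME/bin'
# . /etc/profile
```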

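3. Configure

Edit $HIVE_HOME/conf/hive-site.xml (create it if it does not exist yet) and add the following properties inside its <configuration> root element: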

<property>
  <name>javax.jdo.option.ConnectionURL</name>
  <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionDriverName</name>
  <value>com.mysql.jdbc.Driver</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionUserName</name>
  <value>root</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionPassword</name>
  <value>123456</value>
</property>
<!-- HiveServer2 web UI host -->
<property>
  <name>hive.server2.webui.host</name>
  <value>0.0.0.0</value>
</property>
<!-- HiveServer2 web UI port -->
<property>
  <name>hive.server2.webui.port</name>
  <value>10002</value>
</property>
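Because hive-site.xml needs a single <configuration> root element, the property list for this step cannot be pasted into an empty file as-is. The sketch below writes a complete file containing the same six properties, with the web UI host property spelled hive.server2.webui.host. `write_hive_site` is a hypothetical helper, and the MySQL user and password are this guide's example values:

```shell
#!/bin/sh
# write_hive_site PATH (hypothetical helper)
# Writes a complete hive-site.xml containing the properties from step 3.
# The quoted 'EOF' delimiter stops the shell from expanding anything inside.
write_hive_site() {
    cat > "$1" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
  </property>
  <!-- HiveServer2 web UI host -->
  <property>
    <name>hive.server2.webui.host</name>
    <value>0.0.0.0</value>
  </property>
  <!-- HiveServer2 web UI port -->
  <property>
    <name>hive.server2.webui.port</name>
    <value>10002</value>
  </property>
</configuration>
EOF
}

# Example: write_hive_site "$HIVE_HOME/conf/hive-site.xml"
```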

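4. Copy the MySQL JDBC driver

Upload the connector jar to the Hive host (run this on the machine that holds the jar):

scp mysql-connector-java-5.1.28.jar root@192.168.2.27:/root

Then, on the Hive host, move it into Hive's lib directory:

mv ~/mysql-connector-java-5.1.28.jar /usr/local/hive-3.1.2/lib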
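Schema initialization in the next step fails if the connector jar is not on Hive's classpath, so a quick check beforehand can help. This is a POSIX sh sketch. `has_mysql_driver` is a hypothetical helper, and the `mysql-connector-java-*.jar` pattern assumes the jar keeps its original file name:

```shell
#!/bin/sh
# has_mysql_driver LIBDIR (hypothetical helper)
# Returns 0 if LIBDIR contains at least one mysql-connector-java-*.jar file.
has_mysql_driver() {
    for jar in "$1"/mysql-connector-java-*.jar; do
        # If nothing matches, the shell leaves the pattern unexpanded,
        # so confirm the path really is a file.
        [ -f "$jar" ] && return 0
    done
    return 1
}

# Example:
# has_mysql_driver "$HIVE_HOME/lib" || echo "MySQL driver missing from $HIVE_HOME/lib" >&2
```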

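5. Initialize the metastore schema

Run this from $HIVE_HOME. The MySQL server configured in step 3 must already be running:

bin/schematool -dbType mysql -initSchema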

6. Start the Hive CLI

hive

7. Start the services for Beeline

# Start HiveServer2

nohup hive --service hiveserver2 &

# Start the metastore

nohup hive --service metastore &
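HiveServer2 can take some time after `nohup` returns before it accepts connections on port 10000, so an immediate Beeline attempt may fail. Below is a generic retry loop in POSIX sh. `retry` is a hypothetical helper, and the usage line assumes that `beeline ... -e` exits with a non-zero status when it cannot connect:

```shell
#!/bin/sh
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...] (hypothetical helper)
# Runs COMMAND up to ATTEMPTS times, sleeping DELAY_SECONDS between failures.
# Returns 0 on the first success, 1 if every attempt fails.
retry() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    while [ "$n" -le "$attempts" ]; do
        "$@" && return 0
        # Do not sleep after the final failed attempt
        if [ "$n" -lt "$attempts" ]; then
            sleep "$delay"
        fi
        n=$((n + 1))
    done
    return 1
}

# Example: wait up to about a minute for HiveServer2, then run one statement
# retry 30 2 beeline -u jdbc:hive2://192.168.2.27:10000 -n root -e 'show databases;'
```

The command is run directly via `"$@"`, not through `eval`, so arguments containing spaces or quotes reach `beeline` unchanged.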

8. Verify the Beeline connection

beeline -u jdbc:hive2://192.168.2.27:10000 -n root

A successful connection prints:

Connecting to jdbc:hive2://192.168.2.27:10000
Connected to: Apache Hive (version 3.1.2)
Driver: Hive JDBC (version 3.1.2)
Transaction isolation: TRANSACTION_REPEATABLE_READ
Beeline version 3.1.2 by Apache Hive
0: jdbc:hive2://192.168.2.27:10000>

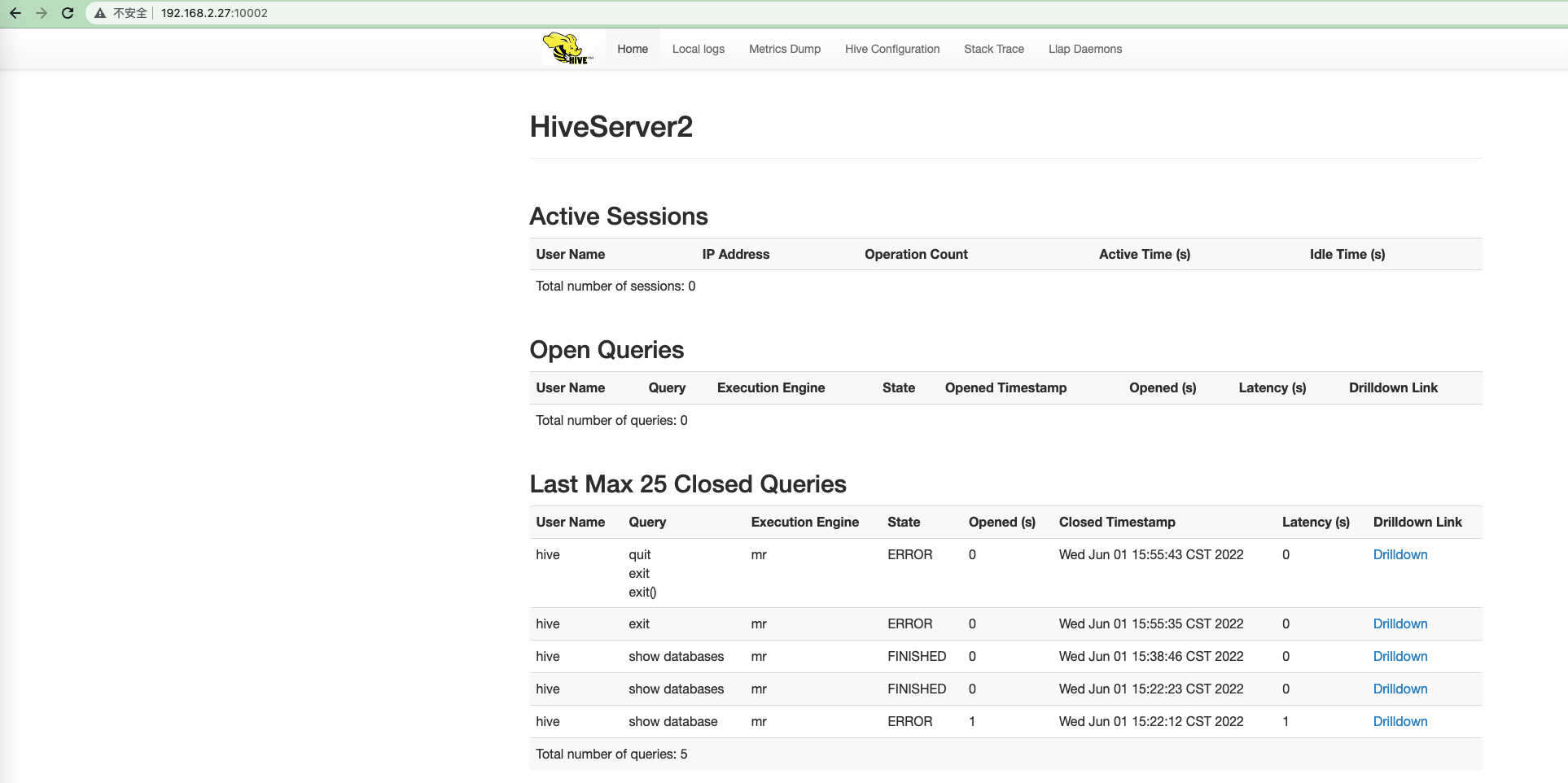
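The URL passed to `beeline -u` has the form jdbc:hive2://<host>:<port>/<database>. When the database part is omitted, as in the command above, the session uses the `default` database. Below is a tiny URL builder plus a non-interactive usage line with `-e`. `hive2_url` is a hypothetical helper, and port 10000 is HiveServer2's default Thrift port:

```shell
#!/bin/sh
# hive2_url HOST [PORT] [DATABASE] (hypothetical helper)
# Prints a HiveServer2 JDBC URL; PORT defaults to 10000, DATABASE to "default".
hive2_url() {
    printf 'jdbc:hive2://%s:%s/%s\n' "$1" "${2:-10000}" "${3:-default}"
}

# Example: run a single statement non-interactively, then exit
# beeline -u "$(hive2_url 192.168.2.27)" -n root -e 'show databases;'
```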

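9. Verify the HiveServer2 web UI

Open http://ip:port in a browser, where port is the hive.server2.webui.port value set in step 3 (10002), to view the HiveServer2 web UI.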
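On a headless server, the web UI can also be probed from the command line. This is a sketch that assumes `curl` is installed. `http_status` is a hypothetical helper, and it only reports whether something answered on the port, not that every UI page works:

```shell
#!/bin/sh
# http_status URL (hypothetical helper)
# Prints the HTTP status code returned by URL, or 000 if no response arrived.
http_status() {
    # -s hides progress output, -o /dev/null discards the body,
    # --max-time bounds the wait, -w prints only the status code
    curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$1"
}

# Example (10002 is hive.server2.webui.port from step 3); any code other
# than 000 means an HTTP server is listening there:
# http_status http://192.168.2.27:10002/
```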

10. Possible problems

Hive error: User: root is not allowed to impersonate xxx

Solution: add the following properties to Hadoop's core-site.xml, then restart Hadoop:

<property>
  <name>hadoop.proxyuser.root.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.root.groups</name>
  <value>*</value>
</property>

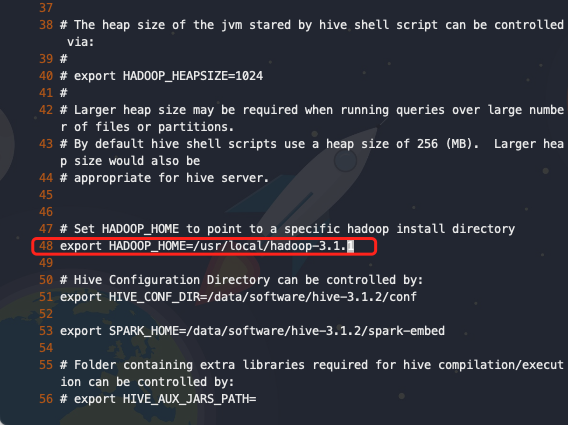
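Before restarting Hadoop, it can help to confirm that both proxyuser properties landed in the right file with the right user name. This grep-based sanity check is a sketch that assumes each <name> element sits on a single line, as above. `has_proxyuser_conf` is a hypothetical helper, and $HADOOP_HOME/etc/hadoop/core-site.xml is the usual location for Hadoop 3.x:

```shell
#!/bin/sh
# has_proxyuser_conf CORE_SITE USER (hypothetical helper)
# Returns 0 if CORE_SITE names both hadoop.proxyuser.USER.hosts and
# hadoop.proxyuser.USER.groups. This is a plain text match, not an XML
# parse, so a commented-out property would still count.
has_proxyuser_conf() {
    grep -qF "<name>hadoop.proxyuser.$2.hosts</name>" "$1" &&
        grep -qF "<name>hadoop.proxyuser.$2.groups</name>" "$1"
}

# Example:
# has_proxyuser_conf "$HADOOP_HOME/etc/hadoop/core-site.xml" root || echo "proxyuser settings missing" >&2
```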

Error: Cannot find hadoop installation: $HADOOP_HOME or $HADOOP_PREFIX must be set or hadoop must be in the path

Solution: in $HIVE_HOME/conf, create hive-env.sh from its template and set HADOOP_HOME:

cp hive-env.sh.template hive-env.sh

vi hive-env.sh

export HADOOP_HOME=/usr/local/hadoop-3.1.1

source hive-env.sh
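A wrong HADOOP_HOME in hive-env.sh produces the same error, so it is worth checking that the path actually contains Hadoop. This is a minimal sketch. `check_hadoop_home` is a hypothetical helper, and it only tests for an executable bin/hadoop, which is the launcher script found in Hadoop distributions:

```shell
#!/bin/sh
# check_hadoop_home DIR (hypothetical helper)
# Returns 0 if DIR/bin/hadoop exists and is executable;
# otherwise prints a message to stderr and returns 1.
check_hadoop_home() {
    if [ -x "$1/bin/hadoop" ]; then
        return 0
    fi
    echo "no executable bin/hadoop under: $1" >&2
    return 1
}

# Example, using the path exported in hive-env.sh above:
# check_hadoop_home /usr/local/hadoop-3.1.1
```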