Java 8 New Features - Reference Streams - distinct

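distinct removes duplicate objects from a stream. Duplicates are detected with equals (and, where a hash-based set is used, hashCode), so the element class must override both equals and hashCode if objects with the same field values are to be treated as duplicates.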
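To make the requirement concrete, here is a minimal sketch that contrasts a class relying on the inherited identity-based equals with one that overrides equals and hashCode. The class names `PlainUser`, `ValueUser` and `EqualsHashCodeDemo`, and the fields `name` and `age`, are assumptions made for illustration only.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Objects;
import java.util.stream.Collectors;

// Hypothetical class that does NOT override equals/hashCode:
// two instances are equal only if they are the same object.
class PlainUser {
    final String name;
    final int age;

    PlainUser(String name, int age) {
        this.name = name;
        this.age = age;
    }
}

// Hypothetical class with value-based equality on name and age.
final class ValueUser {
    private final String name;
    private final int age;

    ValueUser(String name, int age) {
        this.name = name;
        this.age = age;
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof ValueUser)) return false;
        ValueUser other = (ValueUser) o;
        return age == other.age && Objects.equals(name, other.name);
    }

    // Must agree with equals: equal objects return equal hash codes.
    @Override
    public int hashCode() {
        return Objects.hash(name, age);
    }
}

class EqualsHashCodeDemo {
    // Runs distinct() over the list and collects the surviving elements.
    static <T> List<T> dedupe(List<T> items) {
        return items.stream().distinct().collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<PlainUser> plain = Arrays.asList(
                new PlainUser("Wang Wu", 20), new PlainUser("Wang Wu", 20));
        List<ValueUser> value = Arrays.asList(
                new ValueUser("Wang Wu", 20), new ValueUser("Wang Wu", 20));
        System.out.println(dedupe(plain).size()); // prints 2: distinct instances
        System.out.println(dedupe(value).size()); // prints 1: equal by name and age
    }
}
```

Without the overrides, distinct still runs, but it only removes repeated references to the same object.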

Example:

    List<User> users = new ArrayList<>();
    users.add(new User("张三", 30));
    users.add(new User("李四", 39));
    users.add(new User("王五", 20));
    users.add(new User("王五", 20));

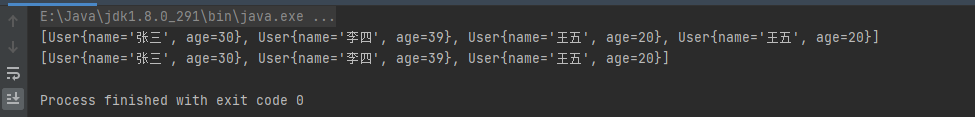

    System.out.println(users);
    List<User> collect = users.stream().distinct().collect(Collectors.toList());
    System.out.println(collect);

Output:

Source code analysis

ReferencePipeline#distinct()

    public final Stream<P_OUT> distinct() {
        return DistinctOps.makeRef(this);
    }

Next, look at DistinctOps.makeRef.

DistinctOps#makeRef

    static <T> ReferencePipeline<T, T> makeRef(AbstractPipeline<?, T, ?> upstream) {
        return new ReferencePipeline.StatefulOp<T, T>(upstream, StreamShape.REFERENCE,
                StreamOpFlag.IS_DISTINCT | StreamOpFlag.NOT_SIZED) {

            <P_IN> Node<T> reduce(PipelineHelper<T> helper, Spliterator<P_IN> spliterator) {
                // If the stream is SORTED then it should also be ORDERED so the following will also
                // preserve the sort order
                TerminalOp<T, LinkedHashSet<T>> reduceOp
                        = ReduceOps.<T, LinkedHashSet<T>>makeRef(LinkedHashSet::new, LinkedHashSet::add,
                                LinkedHashSet::addAll);
                return Nodes.node(reduceOp.evaluateParallel(helper, spliterator));
            }

            @Override
            <P_IN> Node<T> opEvaluateParallel(PipelineHelper<T> helper,
                    Spliterator<P_IN> spliterator,
                    IntFunction<T[]> generator) {
                if (StreamOpFlag.DISTINCT.isKnown(helper.getStreamAndOpFlags())) {
                    // No-op
                    return helper.evaluate(spliterator, false, generator);
                }
                else if (StreamOpFlag.ORDERED.isKnown(helper.getStreamAndOpFlags())) {
                    return reduce(helper, spliterator);
                }
                else {
                    // Holder of null state since ConcurrentHashMap does not support null values
                    AtomicBoolean seenNull = new AtomicBoolean(false);
                    ConcurrentHashMap<T, Boolean> map = new ConcurrentHashMap<>();
                    TerminalOp<T, Void> forEachOp = ForEachOps.makeRef(t -> {
                        if (t == null)
                            seenNull.set(true);
                        else
                            map.putIfAbsent(t, Boolean.TRUE);
                    }, false);
                    forEachOp.evaluateParallel(helper, spliterator);

                    // If null has been seen then copy the key set into a HashSet that supports null values
                    // and add null
                    Set<T> keys = map.keySet();
                    if (seenNull.get()) {
                        // TODO Implement a more efficient set-union view, rather than copying
                        keys = new HashSet<>(keys);
                        keys.add(null);
                    }
                    return Nodes.node(keys);
                }
            }

            @Override
            <P_IN> Spliterator<T> opEvaluateParallelLazy(PipelineHelper<T> helper, Spliterator<P_IN> spliterator) {
                if (StreamOpFlag.DISTINCT.isKnown(helper.getStreamAndOpFlags())) {
                    // No-op
                    return helper.wrapSpliterator(spliterator);
                }
                else if (StreamOpFlag.ORDERED.isKnown(helper.getStreamAndOpFlags())) {
                    // Not lazy, barrier required to preserve order
                    return reduce(helper, spliterator).spliterator();
                }
                else {
                    // Lazy
                    return new StreamSpliterators.DistinctSpliterator<>(helper.wrapSpliterator(spliterator));
                }
            }

            @Override
            Sink<T> opWrapSink(int flags, Sink<T> sink) {
                Objects.requireNonNull(sink);

                if (StreamOpFlag.DISTINCT.isKnown(flags)) {
                    return sink;
                } else if (StreamOpFlag.SORTED.isKnown(flags)) {
                    return new Sink.ChainedReference<T, T>(sink) {
                        boolean seenNull;
                        T lastSeen;

                        @Override
                        public void begin(long size) {
                            seenNull = false;
                            lastSeen = null;
                            downstream.begin(-1);
                        }

                        @Override
                        public void end() {
                            seenNull = false;
                            lastSeen = null;
                            downstream.end();
                        }

                        @Override
                        public void accept(T t) {
                            if (t == null) {
                                if (!seenNull) {
                                    seenNull = true;
                                    downstream.accept(lastSeen = null);
                                }
                            } else if (lastSeen == null || !t.equals(lastSeen)) {
                                downstream.accept(lastSeen = t);
                            }
                        }
                    };
                } else {
                    return new Sink.ChainedReference<T, T>(sink) {
                        Set<T> seen;

                        @Override
                        public void begin(long size) {
                            seen = new HashSet<>();
                            downstream.begin(-1);
                        }

                        @Override
                        public void end() {
                            seen = null;
                            downstream.end();
                        }

                        @Override
                        public void accept(T t) {
                            if (!seen.contains(t)) {
                                seen.add(t);
                                downstream.accept(t);
                            }
                        }
                    };
                }
            }
        };
    }
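The ordered parallel branch in the listing above collects elements into a LinkedHashSet, which should keep the first occurrence of each element in encounter order. The following minimal sketch observes that behavior from the outside; the class name `ParallelDistinctDemo` and the method `distinctInParallel` are hypothetical names chosen for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class ParallelDistinctDemo {
    // A List is an ORDERED source, so even in parallel the result keeps
    // the first occurrence of each element in encounter order.
    static List<Integer> distinctInParallel(List<Integer> input) {
        return input.parallelStream().distinct().collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(distinctInParallel(Arrays.asList(3, 1, 3, 2, 1))); // prints [3, 1, 2]
    }
}
```

For an unordered parallel stream the ConcurrentHashMap branch applies instead, and no particular order of the surviving elements should be relied on.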

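Focus on the opWrapSink method overridden in the anonymous ReferencePipeline.StatefulOp subclass, which is the path the sequential example above goes through.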

ReferencePipeline#StatefulOp#opWrapSink

    Sink<T> opWrapSink(int flags, Sink<T> sink) {
        Objects.requireNonNull(sink);

        if (StreamOpFlag.DISTINCT.isKnown(flags)) {
            return sink;
        } else if (StreamOpFlag.SORTED.isKnown(flags)) {
            return new Sink.ChainedReference<T, T>(sink) {
                boolean seenNull;
                T lastSeen;

                @Override
                public void begin(long size) {
                    seenNull = false;
                    lastSeen = null;
                    downstream.begin(-1);
                }

                @Override
                public void end() {
                    seenNull = false;
                    lastSeen = null;
                    downstream.end();
                }

                @Override
                public void accept(T t) {
                    if (t == null) {
                        if (!seenNull) {
                            seenNull = true;
                            downstream.accept(lastSeen = null);
                        }
                    } else if (lastSeen == null || !t.equals(lastSeen)) {
                        downstream.accept(lastSeen = t);
                    }
                }
            };
        } else {
            return new Sink.ChainedReference<T, T>(sink) {
                Set<T> seen;

                @Override
                public void begin(long size) {
                    seen = new HashSet<>();
                    downstream.begin(-1);
                }

                @Override
                public void end() {
                    seen = null;
                    downstream.end();
                }

                @Override
                public void accept(T t) {
                    if (!seen.contains(t)) {
                        seen.add(t);
                        downstream.accept(t);
                    }
                }
            };
        }
    }

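The method handles three cases, depending on the stream's flags. If the stream has the DISTINCT flag, it is already known to contain no duplicates, so the downstream sink is returned unchanged.

If the stream has the SORTED flag, its encounter order follows the natural ordering of its comparable elements. The stream is already sorted, so equal elements are expected to arrive next to each other, and it is enough to remember the previous element and handle null separately. As the accept method in the middle branch of the code above shows, each incoming element is checked for null and otherwise compared with lastSeen, the last element passed downstream. The seenNull field makes sure that only the first null encountered is passed on.

If the stream has neither the DISTINCT nor the SORTED flag, the else branch of the code above applies, and a HashSet is used to remove duplicates.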
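The two deduplication strategies can be sketched outside the stream machinery. This is a simplified illustration that works on plain lists, not the JDK implementation; the class name `DistinctStrategies` and both method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class DistinctStrategies {
    // Sorted input: equal elements are adjacent, so remembering the last
    // emitted element (plus a flag for null) is enough.
    static <T> List<T> distinctSorted(List<T> sorted) {
        List<T> out = new ArrayList<>();
        boolean seenNull = false;
        T lastSeen = null;
        for (T t : sorted) {
            if (t == null) {
                if (!seenNull) {
                    // Only the first null is emitted.
                    seenNull = true;
                    lastSeen = null;
                    out.add(null);
                }
            } else if (lastSeen == null || !t.equals(lastSeen)) {
                lastSeen = t;
                out.add(t);
            }
        }
        return out;
    }

    // Unsorted input: every element seen so far is tracked in a HashSet.
    // Set.add returns false for an element that is already present, so it
    // stands in for the contains-then-add pair used in the JDK listing.
    static <T> List<T> distinctHashed(List<T> input) {
        Set<T> seen = new HashSet<>();
        List<T> out = new ArrayList<>();
        for (T t : input) {
            if (seen.add(t)) {
                out.add(t);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(distinctSorted(Arrays.asList(1, 1, 2, 3, 3))); // prints [1, 2, 3]
        System.out.println(distinctHashed(Arrays.asList(3, 1, 3, 2, 1))); // prints [3, 1, 2]
    }
}
```

The sorted variant needs constant extra memory, while the hashed variant keeps every distinct element in the set; that trade-off is a plausible reason for checking the SORTED flag first.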