Evaluation metrics for classification

Accuracy/Error rate

ACC = (TP+TN)/(P+N)

ERR = (FP+FN)/(P+N) = 1-ACC
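As a minimal sketch of the two formulas above, the snippet below computes both values from raw confusion-matrix counts. The function name `accuracy_and_error` and the example counts are illustrative assumptions, not part of the original notes; it takes P = TP+FN and N = TN+FP.

```python
def accuracy_and_error(tp, tn, fp, fn):
    """Return (ACC, ERR) from confusion-matrix counts (hypothetical helper)."""
    total = tp + tn + fp + fn  # equals P + N
    if total == 0:
        raise ValueError("at least one instance is required")
    acc = (tp + tn) / total  # fraction of correct predictions
    err = (fp + fn) / total  # fraction of wrong predictions
    return acc, err


# Made-up counts, for illustration only.
acc, err = accuracy_and_error(tp=40, tn=45, fp=5, fn=10)
print(acc, err)
```

Because every instance is either classified correctly or not, the two values always sum to 1.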

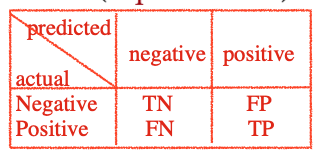

Confusion matrix

Precision/Recall/F1

Precision = TP/(TP+FP) (positive predictive value)

Recall = TP/(TP+FN) (true positive rate)

F1 = 2/(1/precision + 1/recall) = 2*precision*recall/(precision+recall), the harmonic mean of precision and recall
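The sketch below computes the three quantities from counts, with F1 taken as the harmonic mean 2/(1/precision + 1/recall). The helper name `precision_recall_f1`, the example counts, and the choice to return 0.0 when a denominator is zero are assumptions made for illustration.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from counts (hypothetical helper)."""
    # Assumption: an undefined ratio (zero denominator) is reported as 0.0.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision == 0.0 or recall == 0.0:
        f1 = 0.0
    else:
        # Harmonic mean of precision and recall.
        f1 = 2.0 / (1.0 / precision + 1.0 / recall)
    return precision, recall, f1


# Made-up counts, for illustration only.
precision, recall, f1 = precision_recall_f1(tp=40, fp=5, fn=10)
print(precision, recall, f1)
```

The harmonic mean stays close to the smaller of the two values, so a classifier cannot reach a high F1 by maximizing only precision or only recall.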

ROC

True positive rate (TPR): the ratio of positive instances that are correctly classified as positive

TPR = TP/(TP+FN) = recall

True negative rate (TNR): the ratio of negative instances that are correctly classified as negative

TNR = TN/(TN+FP) = specificity

False positive rate (FPR): the ratio of negative instances that are incorrectly classified as positive.

FPR = FP/(TN+FP) = 1 - specificity

ROC curve: TPR (y-axis) plotted against FPR (x-axis) as the classification threshold varies
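To make the curve concrete, here is a small sketch that sweeps a decision threshold over predicted scores and records one (FPR, TPR) point per threshold, then estimates the area under the curve with the trapezoidal rule. The function names `roc_points` and `auc`, the labels, and the scores are all hypothetical; a prediction counts as positive when its score is at or above the threshold.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) points, one per distinct threshold, starting at (0, 0)."""
    p = sum(1 for y in y_true if y == 1)  # number of positives
    n = len(y_true) - p                   # number of negatives
    if p == 0 or n == 0:
        raise ValueError("need at least one positive and one negative instance")
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= thr and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= thr and y == 0)
        points.append((fp / n, tp / p))
    return points


def auc(points):
    """Area under the piecewise-linear curve through the points (trapezoidal rule)."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Made-up labels (1 = positive) and classifier scores, for illustration only.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
points = roc_points(y_true, scores)
print(points)
print(auc(points))
```

A classifier that ranks every positive above every negative gives an area of 1, while random scoring gives about 0.5.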

Matthews correlation coefficient

Logarithm loss/cross entropy
