Installing k8s v1.22.5 with kubeadm (config-file method)

1. Preparation

Two machines: do not make them too small, ideally 4C8G or more

192.168.10.100 master

192.168.10.101 worker

Disable the firewall, SELinux, and swap (on both machines)

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

swapoff -a
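Note that setenforce 0 and swapoff -a only last until the next reboot. A minimal sketch of making both settings persistent, assuming the usual CentOS locations /etc/selinux/config and /etc/fstab; the function names are made up for illustration, and each takes the file path as an argument so it can be tried on a copy first:

```shell
# Comment out every active swap entry in an fstab-style file.
comment_out_swap() {
    fstab="$1"
    # Prefix uncommented lines that contain a "swap" field with '#'
    sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Switch SELINUX=enforcing to permissive in an SELinux config file.
persist_selinux_permissive() {
    conf="$1"
    sed -i -E 's/^SELINUX=enforcing$/SELINUX=permissive/' "$conf"
}

# On a real node (as root), after reviewing the files:
# comment_out_swap /etc/fstab
# persist_selinux_permissive /etc/selinux/config
```

Both functions only edit the file they are given, so running them against a copy and diffing the result is a cheap way to check the patterns before touching the live files.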

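Set the hostname on each machine and add both entries to /etc/hosts on every server (this guide uses each node's IP as its hostname)

# on the master

hostnamectl set-hostname 192.168.10.100 && su

# on the worker

hostnamectl set-hostname 192.168.10.101 && su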

cat >> /etc/hosts << EOF

192.168.10.100 192.168.10.100

192.168.10.101 192.168.10.101

EOF

Pass bridged IPv4 traffic to the iptables chains

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

fs.inotify.max_user_watches = 1048576

net.ipv4.ip_forward = 1

EOF

sysctl --system
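The net.bridge.* keys only exist while the br_netfilter kernel module is loaded, so it is worth running modprobe br_netfilter before sysctl --system and then checking that the values took effect. A small sketch of such a check; the function name is hypothetical, and the sysctl root is a parameter (default /proc/sys) purely so the function can be exercised against a test directory:

```shell
# Report whether each kernel parameter from k8s.conf is set to 1.
# $1: root of the sysctl tree, defaults to /proc/sys
check_k8s_sysctls() {
    root="${1:-/proc/sys}"
    rc=0
    for key in net/bridge/bridge-nf-call-iptables \
               net/bridge/bridge-nf-call-ip6tables \
               net/ipv4/ip_forward; do
        if [ "$(cat "$root/$key" 2>/dev/null)" = "1" ]; then
            echo "ok      $key"
        else
            # Missing file (module not loaded) or a value other than 1
            echo "NOT SET $key"
            rc=1
        fi
    done
    return "$rc"
}

# On a node:
# modprobe br_netfilter && sysctl --system && check_k8s_sysctls
```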

Synchronize the time

yum -y install ntpdate

ntpdate time.windows.com

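If the time zone is wrong, run the following and then synchronize again

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

(to set the clock manually instead: date -s "<YYYY-MM-DD> 00:00:00" && hwclock --systohc)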

Enable ipvs for kube-proxy: skip this step if you use the default iptables mode

# load the netfilter module

[root@k8s-master01 ~]# modprobe br_netfilter

# add the module-loading script

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

# make the script executable and load the modules

[root@k8s-master01 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

nf_conntrack_ipv4 20480 0

nf_defrag_ipv4 16384 1 nf_conntrack_ipv4

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 147456 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 114688 2 ip_vs,nf_conntrack_ipv4

libcrc32c 16384 2 xfs,ip_vs
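One caveat: upstream kernels from 4.19 on merged nf_conntrack_ipv4 into nf_conntrack, so the last modprobe in the script fails there. A hedged sketch of a fallback; load_conntrack is a made-up helper, and the loader command is a parameter (default modprobe) only so the logic can be tested without root:

```shell
# Load the conntrack module, preferring the old split module name and
# falling back to the merged one on newer kernels.
# $1: command used to load modules, defaults to modprobe
load_conntrack() {
    loader="${1:-modprobe}"
    if "$loader" -- nf_conntrack_ipv4 2>/dev/null; then
        echo "loaded nf_conntrack_ipv4"
    else
        "$loader" -- nf_conntrack && echo "loaded nf_conntrack"
    fi
}

# On a node (as root):
# load_conntrack
```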

2. Install docker (both nodes)

yum -y install yum-utils device-mapper-persistent-data lvm2

cd /etc/yum.repos.d/

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum -y install docker-ce

systemctl enable docker && systemctl start docker

sudo mkdir -p /etc/docker

systemctl start docker && systemctl enable docker

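# JSON has no comment syntax, so the optional line
#   "exec-opts": ["native.cgroupdriver=systemd"],
# is left out of the file below instead of being commented out with "#"; see the note after this step.

cat > /etc/docker/daemon.json << EOF

{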

"storage-driver": "overlay2",

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],

"ip-forward": true,

"live-restore": false,

"log-opts": {

"max-size": "100m",

"max-file":"3"

},

"default-ulimits": {

"nproc": {

"Name": "nproc",

"Hard": 32768,

"Soft": 32768

},

"nofile": {

"Name": "nofile",

"Hard": 32768,

"Soft": 32768

}

}

}

EOF

sed -i 's/-H fd:\/\/ //g' /usr/lib/systemd/system/docker.service

systemctl daemon-reload && systemctl restart docker

systemctl status docker

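Adding "exec-opts": ["native.cgroupdriver=systemd"], to daemon.json switches docker's Cgroup Driver to systemd (without it the driver stays cgroupfs). Whichever driver docker uses, the kubelet's cgroupDriver must be set to the same value; this guide keeps cgroupfs on both sides.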

3. Configure the Aliyun K8S repo (both nodes)

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum clean all && yum makecache

4. Install kubeadm, kubelet, kubectl (both nodes)

yum install -y kubelet-1.22.5 kubeadm-1.22.5 kubectl-1.22.5

systemctl enable kubelet

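5. Deploy the Kubernetes master node (run on the master)

Pull the images

# list the required images (this reads kubeadm-config.yaml, which is generated in the next step)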

kubeadm config images list --config kubeadm-config.yaml

#----------------------------------------------------------------------------

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.5

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.5

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.22.5

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.5

docker pull registry.aliyuncs.com/google_containers/etcd:3.5.0-0

docker pull registry.aliyuncs.com/google_containers/coredns:v1.8.4

docker pull registry.aliyuncs.com/google_containers/pause:3.5

Generate kubeadm-config.yaml and modify the settings you need

[root@master ~]# kubeadm config print init-defaults > kubeadm-config.yaml

[root@master ~]# ls

kubeadm-config.yaml

[root@master ~]# vim kubeadm-config.yaml

[root@master ~]# cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.10.100
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  imagePullPolicy: IfNotPresent
  name: node
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.22.5
networking:
  dnsDomain: cluster.local
  podSubnet: 100.80.0.0/16
  serviceSubnet: 10.254.0.0/16
scheduler: {}
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: cgroupfs
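The cgroupDriver value in the KubeletConfiguration has to agree with the driver docker actually runs with, otherwise the kubelet will not start cleanly. A rough way to compare the two before running kubeadm init; the helper names are made up for this sketch, while docker info --format '{{.CgroupDriver}}' is the standard way to read docker's side:

```shell
# Print the cgroupDriver value from a kubelet/kubeadm config file.
kubelet_cgroup_driver() {
    awk '$1 == "cgroupDriver:" { print $2; exit }' "$1"
}

# Compare docker's driver ($1) with the kubelet's driver ($2).
compare_cgroup_drivers() {
    if [ "$1" = "$2" ]; then
        echo "match: $1"
    else
        echo "MISMATCH: docker=$1 kubelet=$2"
        return 1
    fi
}

# On the master (needs a running docker daemon):
# compare_cgroup_drivers "$(docker info --format '{{.CgroupDriver}}')" \
#                        "$(kubelet_cgroup_driver kubeadm-config.yaml)"
```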

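To use ipvs, append the following document to kubeadm-config.yaml (the ipvs kernel modules must already be loaded). The SupportIPVSProxyMode feature gate seen in older guides is left out, since ipvs mode has been GA since Kubernetes 1.11.

---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs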

Initialize the cluster with the config file

kubeadm init \

--config ./kubeadm-config.yaml \

--node-name=${HOST_IP} \

--upload-certs

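# HOST_IP is this node's IP (192.168.10.100 on the master)

# --upload-certs lets the certificates be distributed automatically when further control-plane nodes join later

# append "| tee kubeadm-init.log" to the command to save its output as a log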

#--------------------------------------------------------

[root@192 ~]# kubeadm init --config ./kubeadm-config.yaml --node-name=192.168.10.100 --upload-certs

W0811 13:28:53.847936 11658 strict.go:55] error unmarshaling configuration schema.GroupVersionKind{Group:"kubeadm.k8s.io", Version:"v1beta3", Kind:"ClusterConfiguration"}: error unmarshaling JSON: while decoding JSON: json: unknown field "type"

[init] Using Kubernetes version: v1.22.5

......

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.10.100:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:3b85c420b13aec838cb004b9390009bb4ed61fda09178ddca7cfbd6776f20c43
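The bootstrap token above expires after the ttl set in kubeadm-config.yaml (24h), so a join command copied from this log stops working a day later. kubeadm token create --print-join-command prints a fresh one; the hash part can also be recomputed from the CA certificate with the openssl pipeline from the kubeadm documentation. A sketch that wraps that pipeline in a function (the function name is an assumption of this example, and it expects an RSA CA key, which is what kubeadm generates by default):

```shell
# Print the sha256 hash of the public key of a CA certificate, in the
# form expected after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# On the master:
# echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
# kubeadm token create --print-join-command
```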

Set up kubectl (on the master node)

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the node status

[root@192 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.10.100 NotReady control-plane,master 79s v1.22.5

[root@192 ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

controller-manager Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

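## Note: the scheduler shows Unhealthy because of the newer version, most likely because kube-scheduler is now started with the insecure port 10251 disabled, which is the port kubectl get cs probes; the component itself is running. Reference:

## https://wenku.baidu.com/view/61cacb4ea75177232f60ddccda38376bae1fe058.html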

6. Join the worker node to the cluster

node01

[root@192 ~]# kubeadm join 192.168.10.100:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:3b85c420b13aec838cb004b9390009bb4ed61fda09178ddca7cfbd6776f20c43

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Check on the master

[root@192 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.10.100 NotReady control-plane,master 3m52s v1.22.5

192.168.10.101 NotReady <none> 82s v1.22.5
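Both nodes stay NotReady at this point because no network plugin is installed yet; they should switch to Ready once the plugin from the next section is running. A small filter for spotting nodes that are still not ready, as a sketch (not_ready_nodes is a hypothetical helper that reads kubectl get nodes --no-headers output on stdin):

```shell
# Print the names of nodes whose STATUS column does not start with "Ready".
not_ready_nodes() {
    awk '$2 !~ /^Ready/ { print $1 }'
}

# On the master:
# kubectl get nodes --no-headers | not_ready_nodes
# or block until every node is Ready:
# kubectl wait --for=condition=Ready nodes --all --timeout=300s
```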

7. Install a network plugin

If the nodes carry taints

kubectl taint nodes --all node.kubernetes.io/not-ready-

kubectl taint nodes --all node-role.kubernetes.io/master-

flannel

wget http://120.78.77.38/file/kube-flannel.yaml

sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.12.0-amd64#g" kube-flannel.yaml

kubectl apply -f kube-flannel.yaml

calico

# use the image versions listed in the yaml file; they can be pulled in advance

docker pull docker.io/calico/cni:v3.23.3

docker pull docker.io/calico/kube-controllers:v3.23.3

curl https://docs.projectcalico.org/manifests/calico.yaml -O

kubectl apply -f calico.yaml

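### Note 1

This calico manifest mounts /sys/fs/bpf, a system directory that may fail to mount; the calico pod then shows 0/1 Init:0/3

You can use sed to change the mount point in the yaml file to another path, for example /data/calico/bpf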

mkdir -p /data/calico/bpf

sed -i -r "s#/sys/fs#/data/calico#g" calico.yaml

sed -i -r "s#sys-fs#data-calico#g" calico.yaml

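### Note 2

CALICO_IPV4POOL_CIDR can be set to match the pod subnet assigned when the k8s cluster was installed (podSubnet, 100.80.0.0/16 in this guide); the field is commented out by default and can also be left unchanged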
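If you do want the pool to match podSubnet, the two commented lines can be enabled with sed. This sketch assumes the manifest carries them as "# - name: CALICO_IPV4POOL_CIDR" followed by "#   value: "192.168.0.0/16"", which should be checked against the downloaded calico.yaml first; set_calico_pool_cidr is a made-up helper name:

```shell
# Uncomment CALICO_IPV4POOL_CIDR in a calico manifest and set its value.
# $1: path to calico.yaml   $2: pod CIDR to use
set_calico_pool_cidr() {
    manifest="$1"
    cidr="$2"
    # Drop the "# " marker while keeping the leading indentation, so the
    # entry lines up with the other env entries in the list.
    sed -i -E \
        -e 's|^( *)# - name: CALICO_IPV4POOL_CIDR|\1- name: CALICO_IPV4POOL_CIDR|' \
        -e "s|^( *)#   value: \"192\\.168\\.0\\.0/16\"|\\1  value: \"$cidr\"|" \
        "$manifest"
}

# Before kubectl apply -f calico.yaml:
# set_calico_pool_cidr calico.yaml 100.80.0.0/16
```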

Check the pods

[root@192 ~]# kubectl get pods,ds,deploy,svc -o wide -n kube-system | grep calico

pod/calico-kube-controllers-58f755f869-9dvjb 1/1 Running 0 50s 10.244.195.129 192.168.10.101 <none> <none>

pod/calico-node-pcrfm 1/1 Running 0 50s 192.168.10.100 192.168.10.100 <none> <none>

pod/calico-node-rvlsn 1/1 Running 0 50s 192.168.10.101 192.168.10.101 <none> <none>

daemonset.apps/calico-node 2 2 2 2 2 kubernetes.io/os=linux 50s calico-node docker.io/calico/node:v3.23.3 k8s-app=calico-node

deployment.apps/calico-kube-controllers 1/1 1 1 50s calico-kube-controllers docker.io/calico/kube-controllers:v3.23.3 k8s-app=calico-kube-controllers

8. Install the Harbor registry

8.1 Deploying harbor with docker-compose

(1) Install docker-compose

wget https://github.com/docker/compose/releases/download/v2.9.0/docker-compose-linux-x86_64

chmod +x docker-compose-linux-x86_64

mv docker-compose-linux-x86_64 /usr/local/bin/docker-compose

docker-compose -v

(2) Install harbor

Download the harbor offline installer

wget https://github.com/goharbor/harbor/releases/download/v2.4.3/harbor-offline-installer-v2.4.3.tgz

wget http://49.232.8.65/harbor/harbor-offline-installer-v2.4.3.tgz

Extract it to the target directory

tar zxvf harbor-offline-installer-v2.4.3.tgz -C /usr/local

Edit the configuration file

cd /usr/local/harbor/

cp harbor.yml.tmpl harbor.yml

vim harbor.yml

hostname: 192.168.10.100

harbor_admin_password: Harbor12345

data_volume: /data/harbor # data directory; create it manually if it does not exist

self_registration: off # added to disable user self-registration

## comment out the https section

# https related config

#https:

# https port for harbor, default is 443

#port: 443

# The path of cert and key files for nginx

#certificate: /your/certificate/path

#private_key: /your/private/key/path

Start

cd /usr/local/harbor/

./install.sh

docker images

docker ps -a

docker-compose ps

Enable start on boot

echo "/usr/local/bin/docker-compose -f /usr/local/harbor/docker-compose.yml up -d" >> /etc/rc.local

chmod +x /etc/rc.local /etc/rc.d/rc.local

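(3) Changing harbor.yml after installation

docker-compose down # stop and remove the Harbor containers; adding -v also removes the volumes attached to the containers

vim harbor.yml

./install.sh

docker-compose up -d # create and start the Harbor containers; -d runs them in the background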

docker-compose start | stop | restart

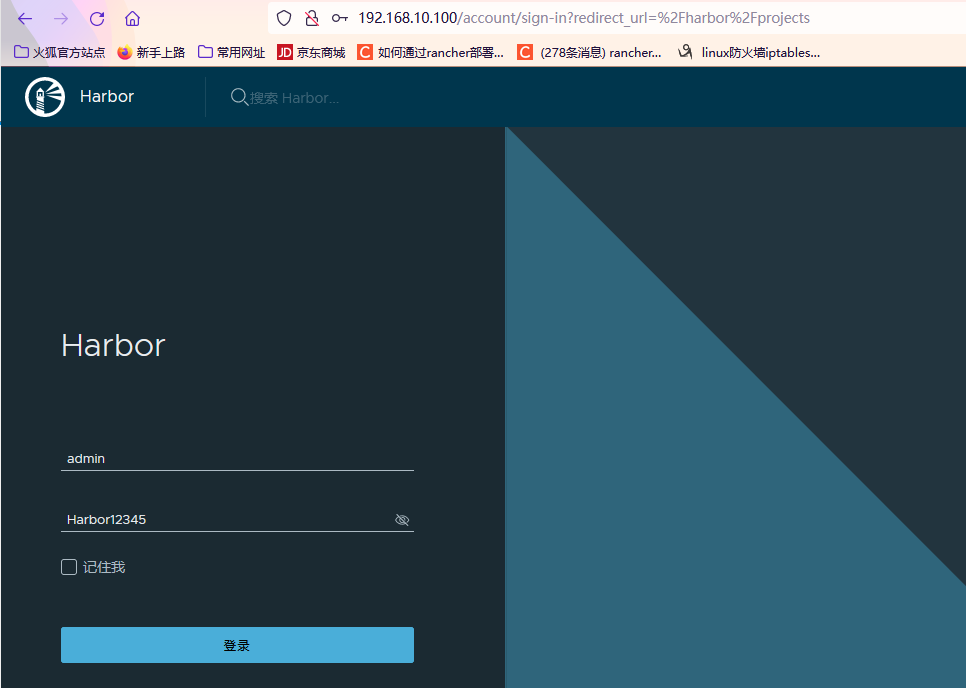

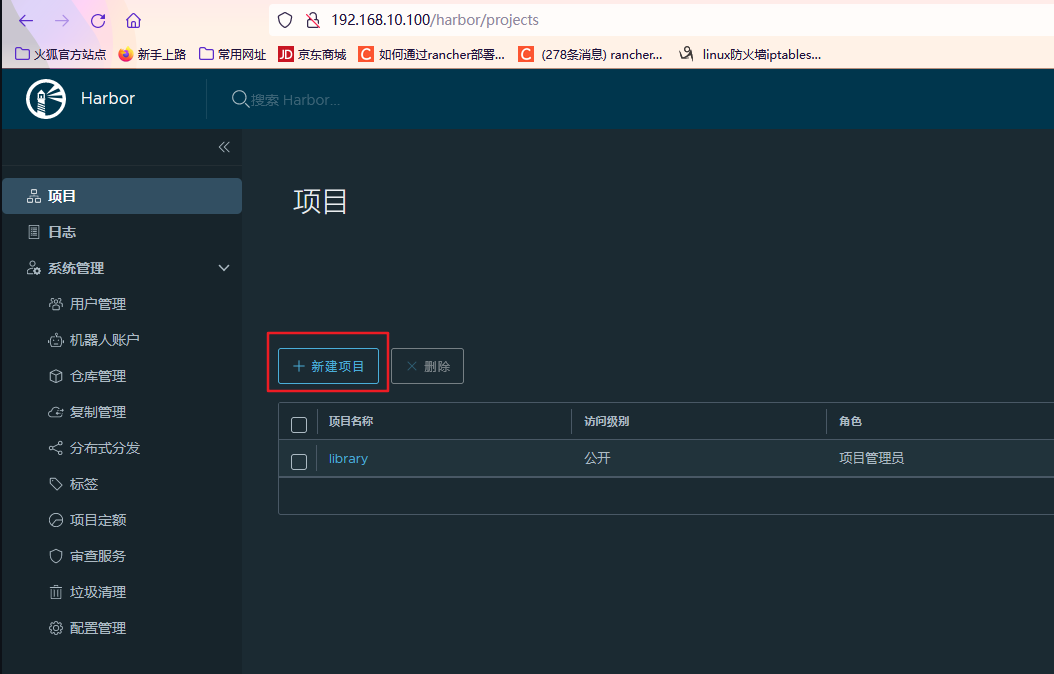

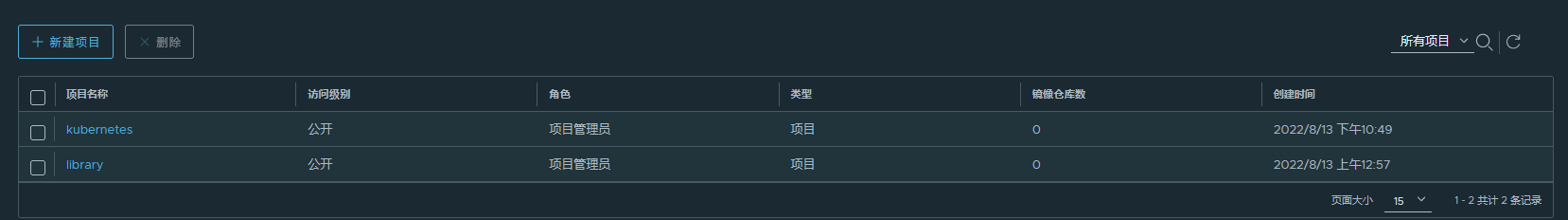

(4) Log in to harbor through the web UI

admin/Harbor12345

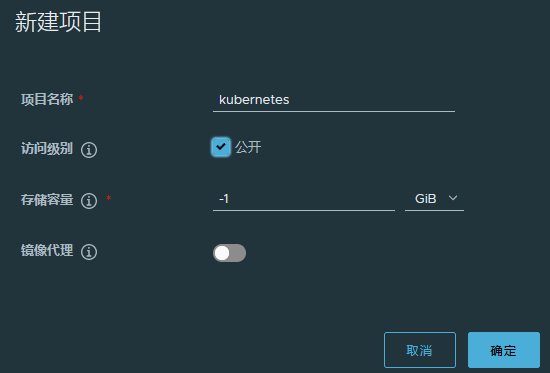

Create a new public project

(5) Logging in to the harbor registry from other nodes

Method 1: edit daemon.json -> "insecure-registries"

Method 2: edit docker.service -> ExecStart -> --insecure-registry

# edit daemon.json on the node that logs in to harbor

[root@192 ~]# cat /etc/docker/daemon.json

{

"storage-driver": "overlay2",

"insecure-registries": ["192.168.10.100"],

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],

"ip-forward": true,

"live-restore": false,

"log-opts": {

"max-size": "100m",

"max-file":"3"

},

"default-ulimits": {

"nproc": {

"Name": "nproc",

"Hard": 32768,

"Soft": 32768

},

"nofile": {

"Name": "nofile",

"Hard": 32768,

"Soft": 32768

}

}

}

[root@192 ~]# systemctl daemon-reload

[root@192 ~]# systemctl restart docker

[root@192 ~]# docker login 192.168.10.100

Username: admin

Password: Harbor12345

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

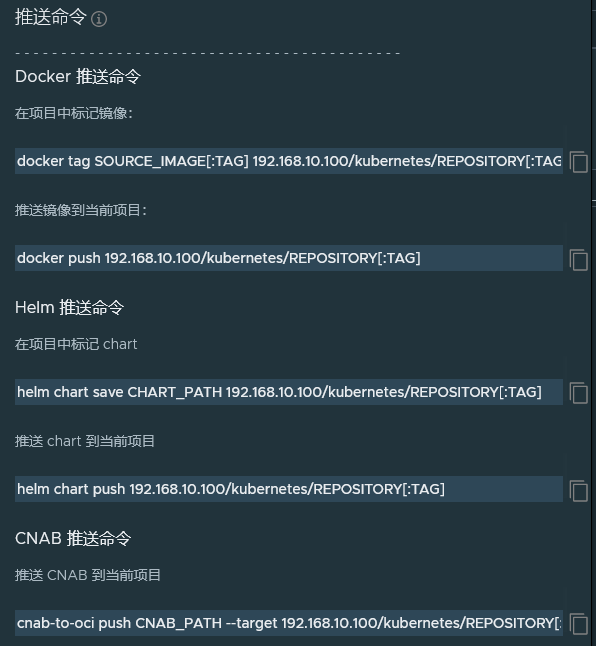

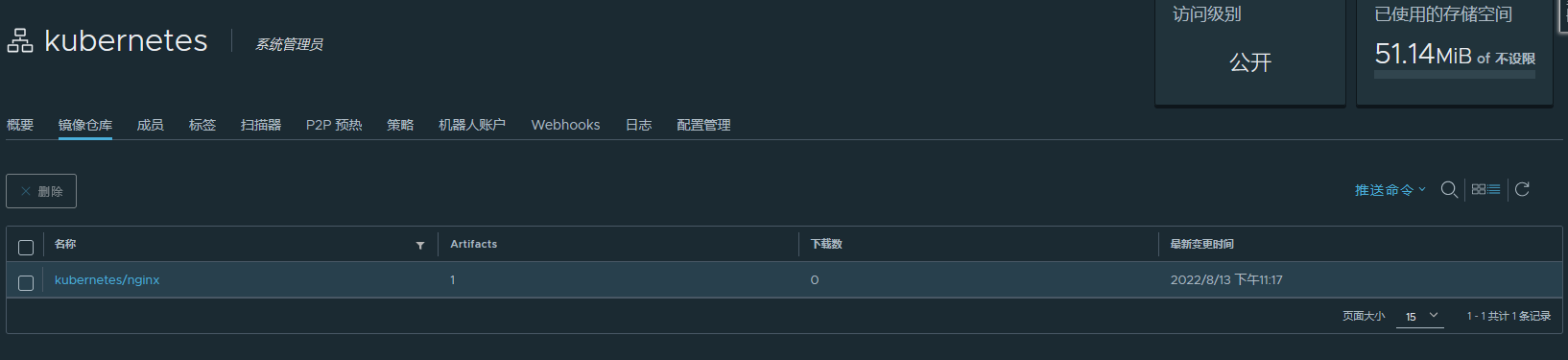

(6) Pushing and pulling images

Push

docker images | grep nginx | grep 1.18.0

docker tag nginx:1.18.0 192.168.10.100/kubernetes/nginx:1.18.0

docker images | grep nginx | grep 1.18.0

docker push 192.168.10.100/kubernetes/nginx:1.18.0

Pull

docker rmi 192.168.10.100/kubernetes/nginx:1.18.0

docker pull 192.168.10.100/kubernetes/nginx:1.18.0

docker images | grep nginx

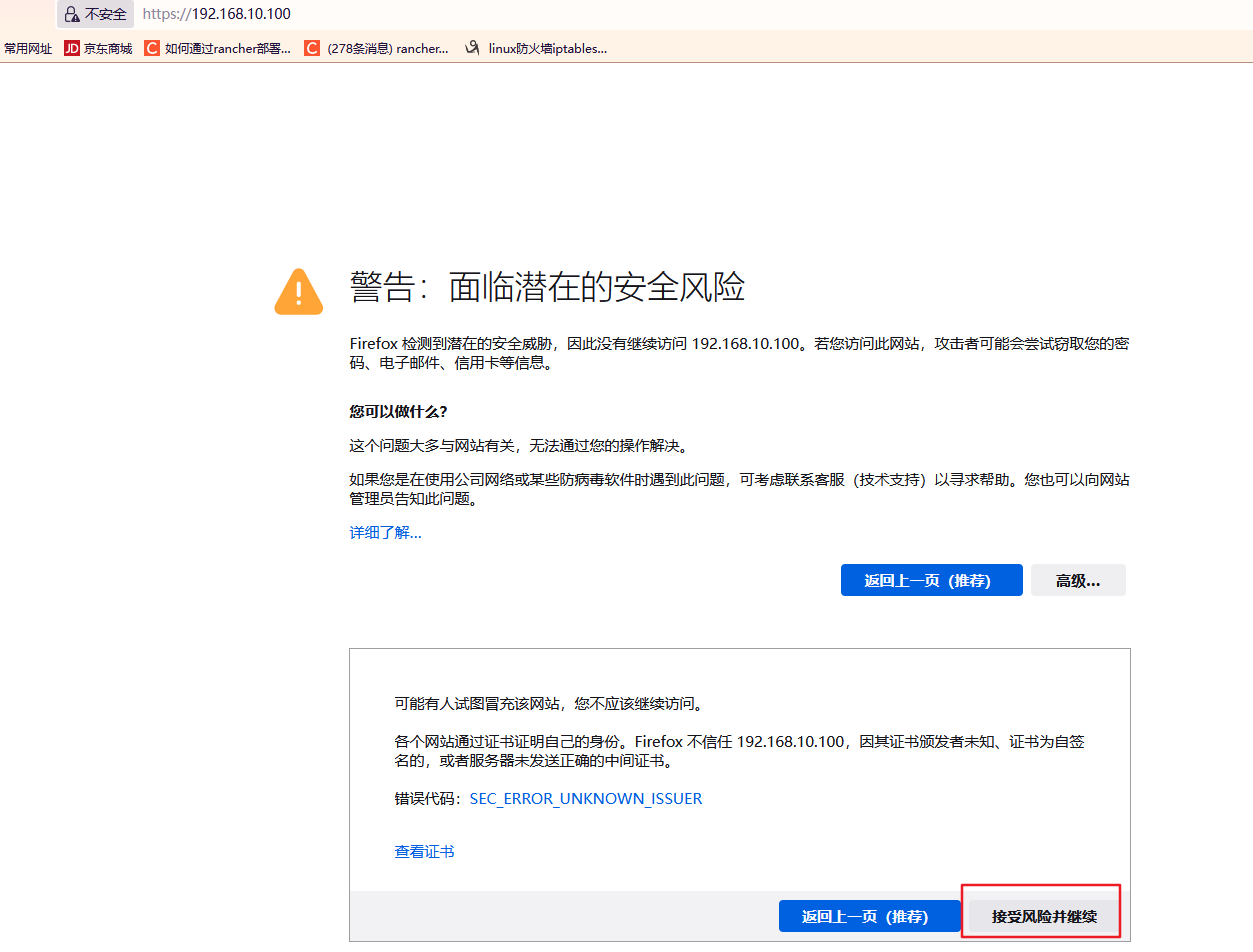

(7) Generate TLS certificates for configuring HTTPS on Harbor

Create a working directory for the certificates

mkdir -p /data/harbor/cert

cd !$

Create a self-signed root certificate

openssl genrsa -out ca.key 4096

openssl req -x509 -new -nodes -sha512 -days 3650 -subj "/C=CN/ST=JS/L=NJ/O=example/OU=Personal/CN=192.168.10.100" -key ca.key -out ca.crt

If the following error appears

Can't load /root/.rnd into RNG

140496635077056:error:2406F079:random number generator:RAND_load_file:Cannot open file:../crypto/rand/randfile.c:88:Filename=/root/.rnd

# fix

cd /root

openssl rand -writerand .rnd

Generate the certificate signing request

openssl genrsa -out 192.168.10.100.key 4096

openssl req -sha512 -new -subj "/C=CN/ST=JS/L=NJ/O=example/OU=Personal/CN=192.168.10.100" -key 192.168.10.100.key -out 192.168.10.100.csr

List the generated files

[root@192 /data/harbor/cert]# ls

192.168.10.100.csr 192.168.10.100.key ca.crt ca.key

Generate the certificate for the registry host

cat > v3.ext <<-EOF

authorityKeyIdentifier=keyid,issuer

basicConstraints=CA:FALSE

keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment

extendedKeyUsage = serverAuth

subjectAltName = @alt_names

[alt_names]

IP.1=192.168.10.100

DNS.1=harbor

EOF

openssl x509 -req -sha512 -days 3650 -extfile v3.ext -CA ca.crt -CAkey ca.key -CAcreateserial -in 192.168.10.100.csr -out 192.168.10.100.crt

openssl x509 -inform PEM -in 192.168.10.100.crt -out 192.168.10.100.cert

List the generated files

[root@192 /data/harbor/cert]# ls

192.168.10.100.cert 192.168.10.100.crt 192.168.10.100.csr 192.168.10.100.key ca.crt ca.key ca.srl v3.ext

Create Harbor's certificate directory and copy the registry certificate and key into it

mkdir -p /data/harbor/harbor-cert

cp 192.168.10.100.crt /data/harbor/harbor-cert

cp 192.168.10.100.key /data/harbor/harbor-cert
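For docker clients that should trust this self-signed CA rather than rely on insecure-registries, docker looks for a CA file under /etc/docker/certs.d/<registry host>/ca.crt. A minimal sketch of installing it there; install_registry_ca is a hypothetical helper, and the certs.d root is an optional third argument only so the function can be tried in a scratch directory:

```shell
# Copy a CA certificate to the per-registry directory docker reads.
# $1: CA file   $2: registry host (add :port if not 443)   $3: certs.d root
install_registry_ca() {
    ca="$1"
    registry="$2"
    root="${3:-/etc/docker/certs.d}"
    mkdir -p "$root/$registry"
    cp "$ca" "$root/$registry/ca.crt"
}

# On each docker client (as root), after copying ca.crt over from the harbor host:
# install_registry_ca ca.crt 192.168.10.100
```

The directory name has to match the registry address exactly as it is used in docker login and in image names.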

(8) Configure HTTPS on Harbor

Go to Harbor's installation directory

cd /usr/local/harbor/

Stop and remove the Harbor containers

docker-compose down

Edit the harbor.yml configuration file

vim harbor.yml

......

# enable the https section

https:

# https port for harbor, default is 443

port: 443

# The path of cert and key files for nginx

certificate: /data/harbor/harbor-cert/192.168.10.100.crt

private_key: /data/harbor/harbor-cert/192.168.10.100.key

......

Regenerate the configuration files

./prepare

Reload the systemd unit files

systemctl daemon-reload

Restart docker

systemctl restart docker

Create and start the harbor containers

docker-compose up -d

docker-compose ps

(9) Access harbor over https

https://192.168.10.100:443

Enabling https does not prevent other hosts from logging in to harbor

8.2 Deploying harbor with helm

(1) Install nfs

| IP | ROLE |

|---|---|

| 192.168.10.100 | nfs-server |

| 192.168.10.101 | nfs-client |

nfs-server

yum -y install nfs-utils

echo "/harbor *(insecure,rw,sync,no_root_squash)" > /etc/exports

mkdir -p /harbor/{chartmuseum,jobservice,registry,database,redis,trivy}

chmod -R 777 /harbor

exportfs -rv # apply the configuration

exportfs # check that the export is active

systemctl enable rpcbind && systemctl start rpcbind

systemctl enable nfs && systemctl start nfs

nfs-client

yum -y install nfs-utils

mkdir -p /harbor

systemctl start rpcbind && systemctl enable rpcbind

systemctl enable nfs

showmount -e 192.168.10.100

mount -t nfs 192.168.10.100:/harbor /harbor

ll /harbor/

(2) Install helm

curl http://49.232.8.65/shell/helm/helm-v3.5.0_install.sh | bash

helm list

(3) Pull the harbor chart with helm

Add the helm repository

helm repo add harbor https://helm.goharbor.io

helm repo list

List all harbor chart versions

helm search repo harbor/harbor --versions

Pull the specified chart version and unpack it

helm pull harbor/harbor --version 1.8.3 --untar

Check the result

[root@192 ~]# ls

harbor harbor-offline-installer-v2.4.3.tgz helm-v3.5.0-linux-amd64.tar.gz linux-amd64

[root@192 ~]# cd harbor/

[root@192 ~/harbor]# ls

cert Chart.yaml conf LICENSE README.md templates values.yaml

(4) Create the pv and pvc for harbor

harbor-pv.yaml: replace the IP address in spec.nfs.server with your own.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-chartmuseum
  labels:
    app: harbor
    component: chartmuseum
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: harbor-chartmuseum
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: 192.168.10.100
    path: /harbor/chartmuseum
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-jobservice
  labels:
    app: harbor
    component: jobservice
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: harbor-jobservice
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: 192.168.10.100
    path: /harbor/jobservice
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-registry
  labels:
    app: harbor
    component: registry
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: harbor-registry
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: 192.168.10.100
    path: /harbor/registry
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-database
  labels:
    app: harbor
    component: database
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: harbor-database
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: 192.168.10.100
    path: /harbor/database
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-redis
  labels:
    app: harbor
    component: redis
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: harbor-redis
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: 192.168.10.100
    path: /harbor/redis
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-trivy
  labels:
    app: harbor
    component: trivy
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: harbor-trivy
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: 192.168.10.100
    path: /harbor/trivy
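The six PersistentVolumes differ only in component name and size, so the file can also be generated instead of edited by hand. A sketch under that assumption; harbor_pv and generate_harbor_pvs are made-up helper names, and the NFS server defaults to the address used in this guide:

```shell
# Print one PersistentVolume manifest for a harbor component.
# $1: component name   $2: size   $3: NFS server (optional)
harbor_pv() {
    component="$1"
    size="$2"
    server="${3:-192.168.10.100}"
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-$component
  labels:
    app: harbor
    component: $component
spec:
  capacity:
    storage: $size
  accessModes:
    - ReadWriteOnce
  storageClassName: harbor-$component
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: $server
    path: /harbor/$component
---
EOF
}

# Print all six volumes with the sizes used in this guide.
generate_harbor_pvs() {
    for spec in chartmuseum:5Gi jobservice:1Gi registry:5Gi \
                database:1Gi redis:1Gi trivy:5Gi; do
        harbor_pv "${spec%%:*}" "${spec##*:}"
    done
}

# generate_harbor_pvs > harbor-pv.yaml
# kubectl apply -f harbor-pv.yaml -f harbor-pvc.yaml
```

Either way, both harbor-pv.yaml and harbor-pvc.yaml need to be applied with kubectl apply -f before the chart is installed, so that the existingClaim entries in values.yaml resolve.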

harbor-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-chartmuseum
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  storageClassName: harbor-chartmuseum
  selector:
    matchLabels:
      app: "harbor"
      component: "chartmuseum"
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-jobservice
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: harbor-jobservice
  selector:
    matchLabels:
      app: "harbor"
      component: "jobservice"
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-registry
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  storageClassName: harbor-registry
  selector:
    matchLabels:
      app: "harbor"
      component: "registry"
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-database
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: harbor-database
  selector:
    matchLabels:
      app: "harbor"
      component: "database"
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-redis
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: harbor-redis
  selector:
    matchLabels:
      app: "harbor"
      component: "redis"
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: harbor-trivy
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
  storageClassName: harbor-trivy
  selector:
    matchLabels:
      app: "harbor"
      component: "trivy"

(5) Customize the harbor install values

[root@192 ~]# cd harbor/

[root@192 ~/harbor]# ls

cert Chart.yaml conf LICENSE README.md templates values.yaml

[root@192 ~/harbor]# cp values.yaml values.yaml.bak

[root@192 ~/harbor]# vim values.yaml

# only the modified parts are shown; everything else keeps its default value
expose:
  type: nodePort
  tls:
    # http is used here, so set this to false
    enabled: false
......
# change to your own cluster ip
externalURL: http://192.168.10.100:30002
......
persistence:
  enabled: true
  resourcePolicy: "keep"
  persistentVolumeClaim:
    registry:
      existingClaim: "harbor-registry"
      storageClass: "harbor-registry"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
    chartmuseum:
      existingClaim: "harbor-chartmuseum"
      storageClass: "harbor-chartmuseum"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
    jobservice:
      existingClaim: "harbor-jobservice"
      storageClass: "harbor-jobservice"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    database:
      existingClaim: "harbor-database"
      storageClass: "harbor-database"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    redis:
      existingClaim: "harbor-redis"
      storageClass: "harbor-redis"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    trivy:
      existingClaim: "harbor-trivy"
      storageClass: "harbor-trivy"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
......
harborAdminPassword: "Harbor12345"
......

(6) Deploy the chart

Required images (pull them in advance)

docker pull goharbor/nginx-photon:v2.4.3

docker pull goharbor/harbor-portal:v2.4.3

docker pull goharbor/harbor-core:v2.4.3

docker pull goharbor/harbor-jobservice:v2.4.3

docker pull goharbor/registry-photon:v2.4.3

docker pull goharbor/chartmuseum-photon:v2.4.3

docker pull goharbor/trivy-adapter-photon:v2.4.3

docker pull goharbor/notary-server-photon:v2.4.3

docker pull goharbor/notary-signer-photon:v2.4.3

docker pull goharbor/harbor-db:v2.4.3

docker pull goharbor/redis-photon:v2.4.3

docker pull goharbor/harbor-exporter:v2.4.3

# to install into a namespace: -n harbor

# not specified here, because the pvcs are in default

[root@192 ~]# helm install my-harbor ./harbor/

NAME: my-harbor

LAST DEPLOYED: Sun Aug 14 12:48:40 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Please wait for several minutes for Harbor deployment to complete.

Then you should be able to visit the Harbor portal at http://192.168.10.100:30002

For more details, please visit https://github.com/goharbor/harbor

Uninstall:

helm uninstall my-harbor

Check the deployment status: the images have to be pulled, preferably in advance, otherwise network problems may cause ImagePullBackOff

[root@192 ~]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/my-harbor-chartmuseum-6b4d55cf89-xtvq7 1/1 Running 0 60s

pod/my-harbor-core-5dcb856896-wq8n5 1/1 Running 0 60s

pod/my-harbor-database-0 1/1 Running 0 60s

pod/my-harbor-jobservice-698895fd4f-zmphr 1/1 Running 0 60s

pod/my-harbor-nginx-5cb655b57b-bw2ql 1/1 Running 0 59s

pod/my-harbor-notary-server-8bd7cfbcd-jjzsf 1/1 Running 0 60s

pod/my-harbor-notary-signer-6cb7f95879-xfbzq 1/1 Running 0 59s

pod/my-harbor-portal-cf97c9c56-gk77j 1/1 Running 0 60s

pod/my-harbor-redis-0 1/1 Running 0 60s

pod/my-harbor-registry-757968bb8b-npq65 2/2 Running 0 59s

pod/my-harbor-trivy-0 1/1 Running 0 60s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/harbor NodePort 10.111.91.121 <none> 80:30002/TCP,4443:30004/TCP 60s

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d1h

service/my-harbor-chartmuseum ClusterIP 10.100.225.69 <none> 80/TCP 60s

service/my-harbor-core ClusterIP 10.107.50.224 <none> 80/TCP 60s

service/my-harbor-database ClusterIP 10.103.119.136 <none> 5432/TCP 60s

service/my-harbor-jobservice ClusterIP 10.100.46.16 <none> 80/TCP 60s

service/my-harbor-notary-server ClusterIP 10.106.56.70 <none> 4443/TCP 60s

service/my-harbor-notary-signer ClusterIP 10.111.206.134 <none> 7899/TCP 60s

service/my-harbor-portal ClusterIP 10.98.184.40 <none> 80/TCP 60s

service/my-harbor-redis ClusterIP 10.109.152.32 <none> 6379/TCP 60s

service/my-harbor-registry ClusterIP 10.102.210.222 <none> 5000/TCP,8080/TCP 60s

service/my-harbor-trivy ClusterIP 10.96.4.244 <none> 8080/TCP 60s

Upgrade (example only)

[root@192 ~]# helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

my-harbor default 1 2022-08-14 14:55:50.412454926 +0800 CST deployed harbor-1.8.3 2.4.3

[root@192 ~]# helm upgrade my-harbor ./harbor/

Release "my-harbor" has been upgraded. Happy Helming!

NAME: my-harbor

LAST DEPLOYED: Sun Aug 14 14:58:11 2022

NAMESPACE: default

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

Please wait for several minutes for Harbor deployment to complete.

Then you should be able to visit the Harbor portal at http://192.168.10.100:30002

For more details, please visit https://github.com/goharbor/harbor

[root@192 ~]# helm repo list

NAME URL

harbor https://helm.goharbor.io

(7) harbor Login

[root@c7-1 ~]#cat /etc/docker/daemon.json

{

"insecure-registries":["192.168.10.100","http://192.168.10.100:30002","https://6kx4zyno.mirror.aliyuncs.com"]

}

# admin/Harbor12345

[root@c7-1 ~]#docker login https://192.168.10.100:30002

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

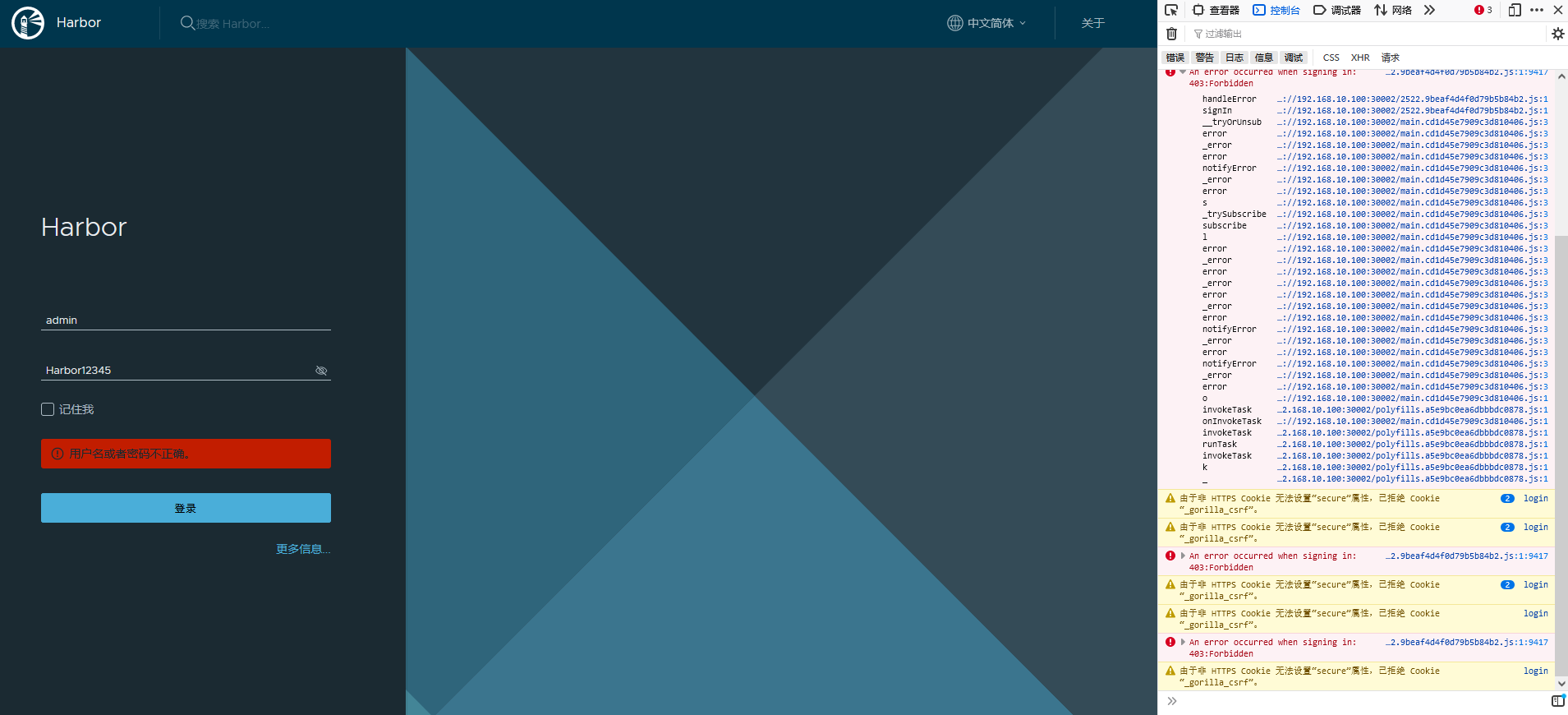

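(8) Access harbor through the web UI

The login page reports a wrong username or password, which is suspected to be caused by a bug.

Workaround:

Set up ingress-nginx with a TLS certificate and access harbor through ingress-nginx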