Installing and Using Helm v3 on a K3S Cluster

1. Introduction to Helm

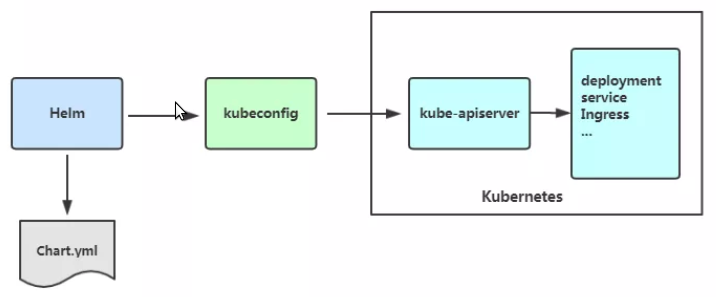

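Helm is the package manager for Kubernetes. It works like apt on Ubuntu, yum on CentOS or pip for Python, letting you quickly find, download and install software packages. Helm v2 consisted of a client component (helm) and a server component (Tiller). Helm v3 removed Tiller, so only the client is needed. Helm bundles many scattered Kubernetes resources into one package that can be managed as a unit, and it is the best way to find, share and use software built for Kubernetes.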

Key Helm concepts:

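- Chart: the collection of information needed to create an instance of a Kubernetes application, i.e. a Helm package. It contains the resource definitions needed to run an application on Kubernetes, including Service definitions where required, together with references to the images and dependencies it uses. It is similar to an RPM file for yum.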

- Repository: a chart repository that stores and distributes charts centrally.

- Config: the configuration applied when a chart is installed as an application instance.

- Release: a running instance of a chart, combined with a specific config.

Prerequisites for using Helm:

- A K8S/K3S cluster

- The Helm client (Helm v2 also requires the Tiller server; Helm v3 does not)

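Helm client

A command-line tool for end users. It is responsible for:

- Local chart development

- Managing repositories

- In Helm v2, interacting with the Tiller server: sending charts to be installed, requesting information about releases, and requesting upgrades or uninstallation of installed releases. In Helm v3 the client talks to the Kubernetes API server directly.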

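Tiller server (Helm v2 only; removed in v3)

Tiller is Helm's server-side component and normally runs inside the Kubernetes cluster. It gets its own ServiceAccount, which a ClusterRoleBinding binds to the cluster-admin role. That binding gives Tiller the highest privileges across the whole cluster. The Tiller server is responsible for:

- Listening for requests from the Helm client

- Combining a chart and its configuration to build a release

- Installing charts into Kubernetes and tracking the resulting releases

- Upgrading or uninstalling charts by interacting with Kubernetes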

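Changes in Helm v3 compared with v2

a. The most obvious change: Tiller has been removed

b. Release names can be reused in different namespaces

c. Charts can be pushed to container registries such as Docker registries (OCI support, experimental in early Helm 3 releases)

d. Chart values can be validated with a JSON Schema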

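① Some Helm CLI commands were renamed to better match the wording of other package managers:

helm delete was renamed to helm uninstall

helm inspect was renamed to helm show

helm fetch was renamed to helm pull

The old command names still work for now.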

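② The helm serve command, which ran a temporary local chart repository, has been removed.

③ Namespaces are no longer created automatically. When you installed a release into a namespace that did not exist, Helm 2 created the namespace for you. Helm 3 follows the behavior of other Kubernetes objects and returns an error if the namespace does not exist. Since Helm 3.2, helm install --create-namespace creates it explicitly.

④ requirements.yaml is no longer needed; dependencies are defined directly in Chart.yaml.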
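Old Helm 2 scripts can apply the renames listed in ① mechanically. The sketch below is illustrative only. helm3_subcommand is a hypothetical helper name, and it covers just those three renames. It does not translate flags or Tiller-only commands such as helm init.

```shell
#!/bin/sh
# Hypothetical helper: map a Helm 2 subcommand name to its Helm 3 name.
# Only the three renames listed above are handled; any other name is printed unchanged.
helm3_subcommand() {
  case "$1" in
    delete)  echo "uninstall" ;;
    inspect) echo "show" ;;
    fetch)   echo "pull" ;;
    *)       echo "$1" ;;
  esac
}

# Usage (requires helm):
#   helm "$(helm3_subcommand delete)" my-release
```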

2. Installing a K8S/K3S cluster

3. Installing Helm (K3S cluster)

[root@master ~]#wget http://49.232.8.65/helm/helm-v3.5.0-linux-amd64.tar.gz

[root@master ~]#ls

helm-v3.5.0-linux-amd64.tar.gz

[root@master ~]#tar zxvf helm-v3.5.0-linux-amd64.tar.gz

linux-amd64/

linux-amd64/README.md

linux-amd64/LICENSE

linux-amd64/helm

[root@master ~]#chmod 755 linux-amd64/helm

[root@master ~]#cp linux-amd64/helm /usr/bin/

[root@master ~]#cp linux-amd64/helm /usr/local/bin/

[root@master ~]#export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

[root@master ~]#echo 'source <(helm completion bash)' >> /etc/profile

[root@master ~]#. /etc/profile

[root@master ~]#helm version

version.BuildInfo{Version:"v3.5.0", GitCommit:"32c22239423b3b4ba6706d450bd044baffdcf9e6", GitTreeState:"clean", GoVersion:"go1.15.6"}
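A script that depends on Helm 3 behavior can check the client's major version before doing anything else. A minimal sketch, assuming helm version --short prints a string of the form v3.5.0+g32c2223. The helper name helm_major_version is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the major version from a string such as "v3.5.0+g32c2223".
helm_major_version() {
  local v="${1#v}"          # drop the leading "v"
  printf '%s\n' "${v%%.*}"  # keep everything before the first dot
}

# Usage (requires helm on PATH):
#   if [ "$(helm_major_version "$(helm version --short)")" != "3" ]; then
#     echo "Helm 3 is required" >&2
#     exit 1
#   fi
```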

Configure overseas Helm repositories

helm repo remove stable

helm repo add stable https://charts.helm.sh/stable # official repository; "stable" is a name of your choosing

helm repo update

helm list

helm search repo stable/prometheus-operator

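# Microsoft Azure China mirror (recommended). Use a separate name such as azure, because the name stable is already taken by the repository added above.

helm repo add azure http://mirror.azure.cn/kubernetes/charts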

helm repo update

Configure domestic (China) Helm repositories (recommended). You can add several, for example azure and aliyun:

helm repo remove stable

helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

helm repo update

helm list

helm search repo stable/prometheus-operator
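Re-running repository setup can fail, because recent Helm 3 releases refuse to re-add an existing repository name with a different URL. Below is a minimal sketch of an idempotent check. repo_exists is a hypothetical helper, and it assumes the NAME/URL table printed by helm repo list.

```shell
#!/bin/sh
# Hypothetical helper: succeed if repository NAME appears in "helm repo list" output read from stdin.
repo_exists() {
  # Skip the header line, then compare the first column with the requested name.
  awk -v name="$1" 'NR > 1 && $1 == name { found = 1 } END { exit !found }'
}

# Usage (requires helm): add the Aliyun mirror only if "stable" is not configured yet.
#   helm repo list 2>/dev/null | repo_exists stable \
#     || helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
#   helm repo update
```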

# ------------------------ #

[root@master ~]#helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

"stable" has been added to your repositories

[root@master ~]#helm repo list

NAME URL

stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

[root@master ~]#helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈Happy Helming!⎈

[root@master ~]#helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

Test commands

[root@master ~]#helm search hub wordpress

URL CHART VERSION APP VERSION DESCRIPTION

https://artifacthub.io/packages/helm/kube-wordp... 0.1.0 1.1 this is my wordpress package

......

[root@master ~]#helm search hub mysql

URL CHART VERSION APP VERSION DESCRIPTION

https://artifacthub.io/packages/helm/cloudnativ... 5.0.1 8.0.16 Chart to create a Highly available MySQL cluster

......

# ---------------------- #

helm install happy-panda stable/mariadb

helm status happy-panda

helm show values stable/mariadb

4. Using Helm v3

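| Command | Description |
|---|---|
| create | Create a new chart with the given name |
| install | Install a chart |
| uninstall | Uninstall a release |
| upgrade | Upgrade a release |
| rollback | Roll a release back to a previous revision |
| version | Show the Helm client version |
| dependency | Manage a chart's dependencies |
| get | Download extended information about a named release. Subcommands: all, hooks, manifest, notes, values |
| history | Fetch a release's history |
| list | List releases |
| package | Package a chart directory into a chart archive |
| pull | Download a chart from a remote repository and optionally unpack it locally, e.g. helm pull stable/mysql --untar |
| repo | Add, list, remove, update and index chart repositories. Subcommands: add, index, list, remove, update |
| search | Search for charts by keyword. Subcommands: hub, repo |
| show | Show chart information. Subcommands: all, chart, readme, values |
| status | Show the status of a named release |
| template | Render chart templates locally |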

Query

# List deployed releases

helm ls

# Show the status of a specific release

helm status <release_name>

# List releases that were uninstalled but kept in history (uninstalled with --keep-history)

helm ls --uninstalled

Install

# Basic install

helm install <release_name> <chart_path>

# Install with values set on the command line

helm install --set image.tag=*** <release_name> <chart_path>

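Upgrade

# Basic upgrade

helm upgrade [flags] <release_name> <chart_path>

# Upgrade with values files (the rightmost file takes precedence)

helm upgrade -f myvalues.yaml -f override.yaml <release_name> <chart_path>

# Upgrade with values set on the command line (for a repeated key the last --set wins, so foo ends up as newbar)

helm upgrade --set foo=bar --set foo=newbar redis ./redis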

Uninstall

# Remove a release without keeping its history

helm uninstall <release_name>

# Remove a release but keep its history

helm uninstall <release_name> --keep-history

# List uninstalled releases whose history was kept

helm ls --uninstalled

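Rollback

# Roll back to the given revision; if the revision is omitted (or 0), Helm rolls back to the previous one

helm rollback <release> [revision]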

4.1 Deploying an application from a chart

# Search for a chart

[root@master ~]#helm search repo mysql

NAME CHART VERSION APP VERSION DESCRIPTION

azure/mysql 1.6.9 5.7.30 DEPRECATED - Fast, reliable, scalable, and easy...

azure/mysqldump 2.6.2 2.4.1

......

# Show the chart's configurable values

[root@master ~]#helm show values azure/mysql

......

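# Install the chart

# A chart can be installed into the same cluster many times. Each installation creates a new release, and each release can be managed and upgraded independently.

[root@master ~]#helm install db azure/mysql # db is the release name you choose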

WARNING: This chart is deprecated

NAME: db

LAST DEPLOYED: Wed Jun 22 14:07:47 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

MySQL can be accessed via port 3306 on the following DNS name from within your cluster:

db-mysql.default.svc.cluster.local

To get your root password run:

......

# Check the release status

[root@master ~]#helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

db default 1 2022-06-22 14:07:47.233583511 +0800 CST deployed mysql-1.6.9 5.7.30

[root@master ~]#helm status db

......

[root@master ~]#kubectl get secret --namespace default db-mysql -o jsonpath="{.data.mysql-root-password}" | base64 --decode; echo

xAn4Rw12RY

[root@master ~]#kubectl get Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

db-mysql 1/1 1 1 10m

[root@master ~]#kubectl get pods,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/db-mysql-864bfb89bb-rh8m8 1/1 Running 0 10m 10.42.1.3 node01 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 4h44m <none>

service/db-mysql ClusterIP 10.43.198.37 <none> 3306/TCP 10m app=db-mysql

[root@master ~]#kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-938130aa-ee96-422a-8a2c-2798a7719e5e 8Gi RWO Delete Bound default/db-mysql local-path 5m17s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/db-mysql Bound pvc-938130aa-ee96-422a-8a2c-2798a7719e5e 8Gi RWO local-path 5m37s

[root@master ~]#kubectl exec -it db-mysql-864bfb89bb-rh8m8 -- /bin/bash

root@db-mysql-864bfb89bb-rh8m8:/# mysql -uroot -pxAn4Rw12RY

mysql: [Warning] Using a password on the command line interface can be insecure.

..........

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

4 rows in set (0.00 sec)

mysql> exit

Bye

root@db-mysql-864bfb89bb-rh8m8:/# exit

exit

# Uninstall the release

[root@master ~]#helm uninstall db

release "db" uninstalled

[root@master ~]#kubectl get pods,svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 4h53m <none>

[root@master ~]#kubectl get pv,pvc

No resources found in default namespace.

[root@master ~]#kubectl get deploy

No resources found in default namespace.

[root@master ~]#helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

# The MySQL release has been removed completely (Deployment, Service and PVC)

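4.2 Customizing chart configuration before installing

There are two ways to pass configuration data when installing a chart:

- --values (or -f): specify a YAML file with overrides. It can be given multiple times, and the rightmost file takes precedence.

- --set: specify overrides on the command line.

If both are used, --set takes precedence.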
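Think of the precedence rule as applying sources from lowest to highest priority: chart defaults first, then each -f file from left to right, then each --set from left to right. Whichever source writes a key last wins. The toy resolver below illustrates only that ordering. It treats values as flat key=value pairs, whereas Helm deep-merges nested YAML maps, and resolve_value is a hypothetical name.

```shell
#!/bin/sh
# Toy model of Helm's value precedence for flat "key=value" pairs (not Helm's real merge logic).
# Pass the pairs in increasing priority: chart defaults, -f files left to right, then --set flags.
resolve_value() {
  local key="$1" result="" pair
  shift
  for pair in "$@"; do
    case "$pair" in
      "$key="*) result="${pair#*=}" ;;  # a later match overwrites an earlier one
    esac
  done
  printf '%s\n' "$result"
}

# Example mirroring "helm upgrade --set foo=bar --set foo=newbar redis ./redis":
#   resolve_value foo foo=bar foo=newbar   # prints newbar
```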

[root@master ~]#cat config.yaml # keep these keys consistent with the values the chart defines (see helm show values azure/mysql)

mysqlUser: "k8s"

mysqlPassword: "123456"

mysqlDatabase: "k8s"

[root@master ~]#helm install mysql azure/mysql -f config.yaml

......

[root@master ~]#helm ls

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

mysql default 1 2022-06-22 14:53:01.891424978 +0800 CST deployed mysql-1.6.9 5.7.30

[root@master ~]#kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-afe437fb-e5cd-4568-92fc-47aebf15c08d 8Gi RWO Delete Bound default/mysql local-path 15s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/mysql Bound pvc-afe437fb-e5cd-4568-92fc-47aebf15c08d 8Gi RWO local-path 18s

[root@master ~]#kubectl get pods,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/mysql-5554bc7c4-vtjjh 1/1 Running 0 32s 10.42.1.6 node01 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 5h19m <none>

service/mysql ClusterIP 10.43.207.2 <none> 3306/TCP 32s app=mysql

[root@master ~]#kubectl get secret --namespace default mysql -o jsonpath="{.data.mysql-root-password}" | base64 --decode; echo

NBQk9NlXlu

[root@master ~]#kubectl exec -it mysql-5554bc7c4-vtjjh -- /bin/bash

root@mysql-5554bc7c4-vtjjh:/# mysql -uk8s -p123456

......

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| k8s |

+--------------------+

2 rows in set (0.00 sec)

......

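4.3 Custom Helm chart templates

4.3.1 Chart directory structure

A chart is a directory organized in a specific layout. The directory name is the chart name and does not include version information. In the generated layout, charts/ and templates/ (plus templates/tests/) are directories and everything else is a file. The basic layout is: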

[root@master ~]#yum -y install tree

......

[root@master ~]#helm create mychart

Creating mychart

[root@master ~]#ls

mychart

[root@master ~]#tree mychart/

mychart/

├── charts

├── Chart.yaml

├── templates

│ ├── deployment.yaml

│ ├── _helpers.tpl

│ ├── hpa.yaml

│ ├── ingress.yaml

│ ├── NOTES.txt

│ ├── serviceaccount.yaml

│ ├── service.yaml

│ └── tests

│ └── test-connection.yaml

└── values.yaml

3 directories, 10 files

# ------------------------------------ #

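# Chart.yaml: basic information about the chart, such as its name, description and version.

# values.yaml: values for the variables used by the template files in templates/.

# templates/: directory holding all the YAML template files.

# charts/: directory holding all subcharts this chart depends on.

# NOTES.txt: help text for the chart, shown to the user after helm install, e.g. how to use the chart or which defaults are set.

# _helpers.tpl: a place for template helpers that can be reused throughout the chart.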
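Before packaging, a script can confirm that a chart directory still has the entries described above. Below is a minimal sketch. check_chart_layout is a hypothetical helper, and it only checks for the layout that helm create generates. Helm itself treats several of these entries as optional, and helm lint remains the real validation step.

```shell
#!/bin/sh
# Hypothetical helper: report entries of the helm-create layout that are missing from DIR.
# Prints one "missing: PATH" line per absent entry and returns 1 if anything is missing.
check_chart_layout() {
  local dir="$1" missing=0 entry
  for entry in Chart.yaml values.yaml templates charts; do
    if [ ! -e "$dir/$entry" ]; then
      echo "missing: $dir/$entry"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_chart_layout mychart && helm lint mychart
```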

Package the chart (for example, to push it to a chart repository)

[root@master ~]#helm package mychart/

Successfully packaged chart and saved it to: /root/mychart-0.1.0.tgz

[root@master ~]#ls

mychart mychart-0.1.0.tgz
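helm package names the archive <name>-<version>.tgz from the name and version fields of Chart.yaml. That is why mychart 0.1.0 became mychart-0.1.0.tgz. The hypothetical helper below predicts that file name for use in scripts. It is a sketch that assumes simple top-level name: and version: lines; it is not a full YAML parser.

```shell
#!/bin/sh
# Hypothetical helper: print the archive name that "helm package DIR" is expected to create,
# based on the top-level name: and version: fields of DIR/Chart.yaml.
chart_archive_name() {
  awk '
    /^name:/    { n = $2 }   # only top-level keys (no indentation) match
    /^version:/ { v = $2 }
    END {
      gsub(/"/, "", n)       # drop double quotes, e.g. version: "0.1.0"
      gsub(/"/, "", v)
      print n "-" v ".tgz"
    }
  ' "$1/Chart.yaml"
}

# Usage:
#   helm package mychart/ && ls -l "$(chart_archive_name mychart)"
```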

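Upgrade, rollback and uninstall

# Use helm upgrade when you release a new version of a chart or want to change a release's configuration (a chart generated by helm create reads the image tag from image.tag)

helm upgrade --set image.tag=1.17 web mychart

Or pass a values file:

helm upgrade -f values.yaml web mychart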

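4.3.2 Chart template configuration

Templates are the core of Helm: they are templated Kubernetes manifest files, essentially Go templates. On top of Go templates, Helm adds custom metadata, an extended function library, and programming-style constructs such as conditionals and pipelines. Together these make templates considerably more expressive.

(1) Referencing chart values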

# Create a chart

[root@master ~]#helm create nginx

Creating nginx

[root@master ~]#ls

deployment.yml nginx service.yaml

[root@master ~]#helm install web nginx

NAME: web

LAST DEPLOYED: Wed Jun 22 16:34:09 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

......

[root@master ~]#helm list

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

web default 1 2022-06-22 16:34:09.068833757 +0800 CST deployed nginx-0.1.0 1.16.0

[root@master ~]#kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-nginx-55cc868c48-2xw47 1/1 Running 0 19s 10.42.2.4 node02 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 7h <none>

service/web-nginx ClusterIP 10.43.70.195 <none> 80/TCP 19s app.kubernetes.io/instance=web,app.kubernetes.io/name=nginx

# The nginx version is 1.16.0

[root@master ~]#curl -I 10.43.70.195

HTTP/1.1 200 OK

Server: nginx/1.16.0

Date: Wed, 22 Jun 2022 08:34:45 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 23 Apr 2019 10:18:21 GMT

Connection: keep-alive

ETag: "5cbee66d-264"

Accept-Ranges: bytes

# Change the image version

[root@master ~]#vim nginx/values.yaml

[root@master ~]#cat nginx/values.yaml | grep tag

# Overrides the image tag whose default is the chart appVersion.

tag: "1.17"

targetCPUUtilizationPercentage: 80

# targetMemoryUtilizationPercentage: 80

# Upgrade the release

[root@master ~]#helm upgrade web nginx

Release "web" has been upgraded. Happy Helming!

NAME: web

LAST DEPLOYED: Wed Jun 22 16:36:03 2022

NAMESPACE: default

STATUS: deployed

REVISION: 2

NOTES:

......

[root@master ~]#kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-nginx-7f4f88cdc7-8wq88 1/1 Running 0 23s 10.42.1.13 node01 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 7h2m <none>

service/web-nginx ClusterIP 10.43.70.195 <none> 80/TCP 2m17s app.kubernetes.io/instance=web,app.kubernetes.io/name=nginx

# After the upgrade the version is 1.17.10

[root@master ~]#curl -I 10.43.70.195

HTTP/1.1 200 OK

Server: nginx/1.17.10

Date: Wed, 22 Jun 2022 08:36:36 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 14 Apr 2020 14:19:26 GMT

Connection: keep-alive

ETag: "5e95c66e-264"

Accept-Ranges: bytes

# View the release history

[root@master ~]#helm history web

REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION

1 Wed Jun 22 16:34:09 2022 superseded nginx-0.1.0 1.16.0 Install complete

2 Wed Jun 22 16:36:03 2022 deployed nginx-0.1.0 1.16.0 Upgrade complete

# Roll back to revision 1

[root@master ~]#helm rollback web 1

Rollback was a success! Happy Helming!

# The version is back to 1.16.0

[root@master ~]#kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-nginx-55cc868c48-4qzsx 1/1 Running 0 17s 10.42.2.5 node02 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 7h6m <none>

service/web-nginx ClusterIP 10.43.70.195 <none> 80/TCP 6m15s app.kubernetes.io/instance=web,app.kubernetes.io/name=nginx

[root@master ~]#curl -I 10.43.70.195

HTTP/1.1 200 OK

Server: nginx/1.16.0

Date: Wed, 22 Jun 2022 08:40:33 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 23 Apr 2019 10:18:21 GMT

Connection: keep-alive

ETag: "5cbee66d-264"

Accept-Ranges: bytes

(2) Building your own chart templates

To see the resource files that a release's templates actually rendered to, use:

[root@master ~]#helm get manifest web # view the rendered manifest
---
# Source: nginx/templates/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: web-nginx
  labels:
    helm.sh/chart: nginx-0.1.0
    app.kubernetes.io/name: nginx
    app.kubernetes.io/instance: web
    app.kubernetes.io/version: "1.16.0"
    app.kubernetes.io/managed-by: Helm
---
# Source: nginx/templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: web-nginx
  labels:
    helm.sh/chart: nginx-0.1.0
    app.kubernetes.io/name: nginx
    app.kubernetes.io/instance: web
    app.kubernetes.io/version: "1.16.0"
    app.kubernetes.io/managed-by: Helm
spec:
  type: ClusterIP
  ports:
    - port: 80
      targetPort: http
      protocol: TCP
      name: http
  selector:
    app.kubernetes.io/name: nginx
    app.kubernetes.io/instance: web
---
# Source: nginx/templates/deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-nginx
  labels:
    helm.sh/chart: nginx-0.1.0
    app.kubernetes.io/name: nginx
    app.kubernetes.io/instance: web
    app.kubernetes.io/version: "1.16.0"
    app.kubernetes.io/managed-by: Helm
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: nginx
      app.kubernetes.io/instance: web
  template:
    metadata:
      labels:
        app.kubernetes.io/name: nginx
        app.kubernetes.io/instance: web
    spec:
      serviceAccountName: web-nginx
      securityContext: {}
      containers:
        - name: nginx
          securityContext: {}
          image: "nginx:1.16.0"
          imagePullPolicy: IfNotPresent
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /
              port: http
          readinessProbe:
            httpGet:
              path: /
              port: http
          resources: {}

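To use one deployment.yaml for several applications, parameterize these fields: image, labels, replica count, resource limits, environment variables, ports and resource names.

Helm built-in objects: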

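| Built-in object (Release) | Description |
|---|---|
| Release.Name | Release name |
| Release.Time | Release time (Helm 2 only; not available in Helm 3) |
| Release.Namespace | Namespace the release is installed into |
| Release.Service | Name of the service rendering the release (always "Helm" in Helm 3) |
| Release.Revision | Revision number of this release, starting at 1 and incremented on each upgrade or rollback |
| Release.IsUpgrade | true if the current operation is an upgrade or rollback |
| Release.IsInstall | true if the current operation is an install |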

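The Values object supplies values to chart templates. Its values come from four sources:

- The chart's own values.yaml file

- The parent chart's values.yaml file (when this chart is used as a subchart)

- A custom YAML file passed to helm install or helm upgrade with -f or --values

- Values passed with --set

Values from the chart's values.yaml can be overridden by a user-supplied values file. That file can in turn be overridden by --set parameters.

Deploying nginx with chart templates:

(omitted)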

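4.3.3 Template functions and pipelines

Template functions

To render a value read from .Values as a quoted string, use the quote template function. The following templates/configmap.yaml assumes that values.yaml sets course.k8s to devops and course.python to django; those are the values that appear in the rendered output below.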

apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-configmap
data:
  myvalue: "Hello World"
  k8s: {{ quote .Values.course.k8s }}
  python: {{ .Values.course.python }}

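Template functions are called with the syntax functionName arg1 arg2 .... In the template above, quote .Values.course.k8s calls the quote function and passes the value that follows as its argument. As a result, k8s is rendered as a quoted string and python is not. The rendered result is shown below.

The output appears to have been captured with Helm 2; note the tunnel line and the random release name masked-saola. In Helm 3, helm install --dry-run needs a release name or --generate-name, e.g. helm install --dry-run --debug --generate-name . (alternatively, use helm template .).

$ helm install --dry-run --debug .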

[debug] Created tunnel using local port: '39405'

......

---
# Source: mychart/templates/configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: masked-saola-configmap
data:
  myvalue: "Hello World"
  k8s: "devops"
  python: django

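Helper templates

Sometimes you want reusable pieces in a chart, whether blocks or partial templates, and it is usually cleaner to keep them in files of their own. In the templates/ directory, files whose names begin with an underscore (_) are not expected to output Kubernetes manifests. By convention, helper templates and partials therefore go in _helpers.tpl.

4.3.4 Helm flow control

4.4 Creating your own chart

The general workflow for developing a chart:

1. Create a skeleton with helm create demo.
2. Edit Chart.yaml and values.yaml, adding commonly used variables.
3. Create the YAML files needed to deploy the image under templates/, replacing frequently changing fields with variable references.

Create the skeleton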

[root@master ~]#helm create demo

Creating demo

[root@master ~]#ls

demo

[root@master ~]#tree

.

└── demo

├── charts

├── Chart.yaml

├── templates

│ ├── deployment.yaml

│ ├── _helpers.tpl

│ ├── hpa.yaml

│ ├── ingress.yaml

│ ├── NOTES.txt

│ ├── serviceaccount.yaml

│ ├── service.yaml

│ └── tests

│ └── test-connection.yaml

└── values.yaml

4 directories, 10 files

[root@master ~]#cd demo/templates/

[root@master ~/demo/templates]#ls

deployment.yaml hpa.yaml NOTES.txt service.yaml

_helpers.tpl ingress.yaml serviceaccount.yaml tests

[root@master ~/demo/templates]#rm -rf *

[root@master ~/demo/templates]#ls

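[root@master ~/demo/templates]#kubectl create deployment web --image=lizhenliang/java-demo --dry-run=client -o yaml > deployment.yaml

[root@master ~/demo/templates]#kubectl expose deployment web --port=80 --target-port=8080 --dry-run=client -o yaml > service.yaml

Error from server (NotFound): deployments.apps "web" not found

# kubectl expose needs an existing Deployment in the cluster, so it fails here and leaves an empty service.yaml, which is rewritten by hand below. To generate a Service offline, use: kubectl create service clusterip web --tcp=80:8080 --dry-run=client -o yaml > service.yaml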

[root@master ~/demo/templates]#ls

deployment.yaml service.yaml

[root@master ~/demo/templates]#vim ingress.yaml

[root@master ~/demo/templates]#cat ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: rpdns.com
spec:
  rules:
  - host: paas.rpdns.com
    http:
      paths:
      - backend:
          serviceName: java-demo
          servicePort: 80

[root@master ~/demo/templates]#vim _helpers.tpl

[root@master ~/demo/templates]#cat _helpers.tpl

{{- define "demo.fullname" -}}

{{- .Chart.Name -}}-{{ .Release.Name }}

{{- end -}}

{{/*

Common labels

*/}}

{{- define "demo.labels" -}}

app: {{ template "demo.fullname" . }}

chart: "{{ .Chart.Name }}-{{ .Chart.Version }}"

release: "{{ .Release.Name }}"

{{- end -}}

{{/*

Selector labels

*/}}

{{- define "demo.selectorLabels" -}}

app: {{ template "demo.fullname" . }}

release: "{{ .Release.Name }}"

{{- end -}}

[root@master ~/demo/templates]#cd ..

[root@master ~/demo]#ls

charts Chart.yaml templates values.yaml

[root@master ~/demo]#vim values.yaml

[root@master ~/demo]#cat values.yaml

image:
  pullPolicy: IfNotPresent
  repository: lizhenliang/java-demo
  tag: latest
imagePullSecrets: []
ingress:
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: 100m
    nginx.ingress.kubernetes.io/proxy-connect-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
  enabled: true
  host: example.ctnrs.com
  tls:
    secretName: example-ctnrs-com-tls
nodeSelector: {}
replicaCount: 3
resources:
  limits:
    cpu: 1000m
    memory: 1Gi
  requests:
    cpu: 100m
    memory: 128Mi
service:
  port: 80
  type: ClusterIP
tolerations: []

[root@master ~/demo]#vim templates/deployment.yaml

# Replace the hard-coded values for the application, Service and Ingress with variables

[root@master ~/demo]#cat templates/deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "demo.fullname" . }}
  labels:
    {{- include "demo.labels" . | nindent 4 }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      {{- include "demo.selectorLabels" . | nindent 6 }}
  template:
    metadata:
      labels:
        {{- include "demo.selectorLabels" . | nindent 8 }}
    spec:
      {{- with .Values.imagePullSecrets }}
      imagePullSecrets:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      containers:
        - name: {{ .Chart.Name }}
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          ports:
            - name: http
              containerPort: 8080
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /
              port: http
          readinessProbe:
            httpGet:
              path: /
              port: http
          resources:
            {{- toYaml .Values.resources | nindent 12 }}
      {{- with .Values.nodeSelector }}
      nodeSelector:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      {{- with .Values.tolerations }}
      tolerations:
        {{- toYaml . | nindent 8 }}
      {{- end }}

[root@master ~/demo]#vim templates/service.yaml

[root@master ~/demo]#cat templates/service.yaml

apiVersion: v1
kind: Service
metadata:
  name: {{ include "demo.fullname" . }}
  labels:
    {{- include "demo.labels" . | nindent 4 }}
spec:
  type: {{ .Values.service.type }}
  ports:
    - port: {{ .Values.service.port }}
      targetPort: http
      protocol: TCP
      name: http
  selector:
    {{- include "demo.selectorLabels" . | nindent 4 }}

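[root@master ~/demo]#vim templates/ingress.yaml

# Note: Ingress under networking.k8s.io/v1beta1 (and extensions/v1beta1) is only served up to Kubernetes 1.21. On 1.22 and later use networking.k8s.io/v1. There the backend is written as service.name / service.port.number, and every path needs a pathType.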

[root@master ~/demo]#cat templates/ingress.yaml

{{- if .Values.ingress.enabled -}}
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: {{ include "demo.fullname" . }}
  labels:
    {{- include "demo.labels" . | nindent 4 }}
  {{- with .Values.ingress.annotations }}
  annotations:
    {{- toYaml . | nindent 4 }}
  {{- end }}
spec:
  {{- if .Values.ingress.tls }}
  tls:
    - hosts:
        - {{ .Values.ingress.host }}
      secretName: {{ .Values.ingress.tls.secretName }}
  {{- end }}
  rules:
    - host: {{ .Values.ingress.host }}
      http:
        paths:
          - path: /
            backend:
              serviceName: {{ include "demo.fullname" . }}
              servicePort: {{ .Values.service.port }}
{{- end }}

[root@master ~/demo]#vim templates/NOTES.txt

# Add usage notes

[root@master ~/demo]#cat templates/NOTES.txt

Access URL:

{{- if .Values.ingress.enabled }}

http{{ if $.Values.ingress.tls }}s{{ end }}://{{ .Values.ingress.host }}

{{- end }}

{{- if contains "NodePort" .Values.service.type }}

export NODE_PORT=$(kubectl get --namespace {{ .Release.Namespace }} -o jsonpath="{.spec.ports[0].nodePort}" services {{ include "demo.fullname" . }})

export NODE_IP=$(kubectl get nodes --namespace {{ .Release.Namespace }} -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT

{{- end }}

# Check the chart for problems with a dry run

[root@master ~/demo]#helm install java-demo --dry-run ../demo/

NAME: java-demo

LAST DEPLOYED: Wed Jun 22 23:50:29 2022

NAMESPACE: default

STATUS: pending-install

REVISION: 1

TEST SUITE: None

HOOKS:

MANIFEST:

---
# Source: demo/templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: demo-java-demo
  labels:
    app: demo-java-demo
    chart: "demo-0.1.0"
    release: "java-demo"
spec:
  type: ClusterIP
  ports:
    - port: 80
      targetPort: http
      protocol: TCP
      name: http
  selector:
    app: demo-java-demo
    release: "java-demo"
---
# Source: demo/templates/deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-java-demo
  labels:
    app: demo-java-demo
    chart: "demo-0.1.0"
    release: "java-demo"
spec:
  replicas: 3
  selector:
    matchLabels:
      app: demo-java-demo
      release: "java-demo"
  template:
    metadata:
      labels:
        app: demo-java-demo
        release: "java-demo"
    spec:
      containers:
        - name: demo
          image: "lizhenliang/java-demo:latest"
          imagePullPolicy: IfNotPresent
          ports:
            - name: http
              containerPort: 8080
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /
              port: http
          readinessProbe:
            httpGet:
              path: /
              port: http
          resources:
            limits:
              cpu: 1000m
              memory: 1Gi
            requests:
              cpu: 100m
              memory: 128Mi
---
# Source: demo/templates/ingress.yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: demo-java-demo
  labels:
    app: demo-java-demo
    chart: "demo-0.1.0"
    release: "java-demo"
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: 100m
    nginx.ingress.kubernetes.io/proxy-connect-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
spec:
  tls:
    - hosts:
        - example.ctnrs.com
      secretName: example-ctnrs-com-tls
  rules:
    - host: example.ctnrs.com
      http:
        paths:
          - path: /
            backend:
              serviceName: demo-java-demo
              servicePort: 80

NOTES:

Access URL:

https://example.ctnrs.com

[root@master ~/demo]#helm install java-demo ../demo/

NAME: java-demo

LAST DEPLOYED: Wed Jun 22 23:50:49 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Access URL:

https://example.ctnrs.com

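# The release is running, but by default the Service is ClusterIP and not exposed outside the cluster. Upgrade the release to expose it through a NodePort Service.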

[root@master ~/demo]#helm upgrade java-demo --set service.type=NodePort ../demo/

Release "java-demo" has been upgraded. Happy Helming!

NAME: java-demo

LAST DEPLOYED: Wed Jun 22 23:50:58 2022

NAMESPACE: default

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

Access URL:

https://example.ctnrs.com

export NODE_PORT=$(kubectl get --namespace default -o jsonpath="{.spec.ports[0].nodePort}" services demo-java-demo)

export NODE_IP=$(kubectl get nodes --namespace default -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT


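# Change the replica count. When new values are passed, helm upgrade drops values set in earlier upgrades (here service.type=NodePort) unless --reuse-values is given, so add it to keep the NodePort Service.

[root@master ~/demo]#helm upgrade java-demo --reuse-values --set replicaCount=2 ../demo/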

[root@master ~/demo]#kubectl get pods,svc -o wide

......

[root@master ~/demo]#kubectl get ingress

# Open the application in a browser to verify that the Java demo is running

Problem I ran into

kubectl describe pod ***

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled <unknown> default-scheduler Successfully assigned default/demo-java-demo-7d6c585c5-cfz6q to node01

Warning FailedCreatePodContainer 18m kubelet, node01 unable to ensure pod container exists: failed to create container for [kubepods burstable pode116a439-29ca-4685-9364-4277e577ee31] : failed to write 1 to memory.kmem.limit_in_bytes: write /sys/fs/cgroup/memory/kubepods/burstable/pode116a439-29ca-4685-9364-4277e577ee31/memory.kmem.limit_in_bytes: operation not supported

Normal Pulling 18m kubelet, node01 Pulling image "lizhenliang/java-demo:latest"

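# The pod could not run. In my case the container runtime is containerd and the image was not available in the registry. Note that the FailedCreatePodContainer event above also reports a cgroup error (writing memory.kmem.limit_in_bytes is not supported). That error may point to a kernel/cgroup compatibility problem on the node as well.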

4.5 Using Harbor as a chart repository

4.5.1 Installing Harbor

# Install Docker (version 17.06.0 or later)

[root@c7-4 ~]#cat docker.sh

#!/bin/bash

# Environment setup

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

# Install dependencies

yum -y install yum-utils device-mapper-persistent-data lvm2

# Use the Aliyun Docker CE repository

cd /etc/yum.repos.d/

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install docker-ce, the Community Edition (the Enterprise Edition, docker-ee, is paid)

yum -y install docker-ce

# Configure the Aliyun registry mirror (preferably your own accelerator address)

# See https://help.aliyun.com/document_detail/60750.html

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://4iv7219l.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

# Network tuning

cat >> /etc/sysctl.conf <<EOF

net.ipv4.ip_forward=1

EOF

sysctl -p

systemctl restart network

systemctl enable docker && systemctl restart docker

[root@c7-4 ~]#bash docker.sh

[root@c7-4 ~]#systemctl status docker

# Install docker-compose

[root@c7-4 ~]#wget http://101.34.22.188/docker-compose/docker-compose -P /usr/local/bin

.....

[root@c7-4 ~]#chmod +x /usr/local/bin/docker-compose

[root@c7-4 ~]#docker-compose -v

docker-compose version 1.21.1, build 5a3f1a3

# Install Harbor

[root@c7-4 ~]#wget http://101.34.22.188/harbor/harbor-offline-installer-v1.9.2.tgz -P /opt

.....

[root@c7-4 ~]#cd /opt

[root@c7-4 /opt]#ls

harbor-offline-installer-v1.9.2.tgz rh

[root@c7-4 /opt]#tar zxvf harbor-offline-installer-v1.9.2.tgz -C /usr/local/

.....

[root@c7-4 /usr/local/harbor]#ls

harbor.v1.9.2.tar.gz harbor.yml install.sh LICENSE prepare

[root@c7-4 /opt]#vim /usr/local/harbor/harbor.yml

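# Line 5: set hostname to the IP address or domain name of the Harbor server (harbor.yml is YAML, so use key: value)

hostname: 192.168.10.50

# Line 59: initial administrator password; the default username/password is admin/Harbor12345

harbor_admin_password: Harbor12345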
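Because harbor.yml is YAML, the hostname edit above can also be scripted. Below is a minimal sketch. set_harbor_hostname is a hypothetical helper, and it makes two assumptions: the hostname: key starts at the beginning of a line, as in the stock harbor.yml, and the new value contains no slashes.

```shell
#!/bin/sh
# Hypothetical helper: set the top-level "hostname:" key in a harbor.yml file.
# Writes to a temporary file first so it does not depend on GNU "sed -i".
set_harbor_hostname() {
  local file="$1" host="$2"
  sed "s/^hostname:.*/hostname: ${host}/" "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Usage:
#   set_harbor_hostname /usr/local/harbor/harbor.yml 192.168.10.50
```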

4.5.2 启用 Harbor 的 Chart 仓库服务

# With --with-chartmuseum enabled, newly created projects also provide a Helm chart repository

[root@c7-4 /usr/local/harbor]#./install.sh --with-chartmuseum

......

......

✔ ----Harbor has been installed and started successfully.----

Now you should be able to visit the admin portal at http://192.168.10.50.

For more details, please visit https://github.com/goharbor/harbor .

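Open http://192.168.10.50/ in a browser and log in to the Harbor web UI. The default administrator username and password are admin/Harbor12345.

Create a project named javademo and make it public.

4.5.3 Installing the push plugin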

[root@master ~]#wget http://49.232.8.65/helm/plugin/helm-push_0.10.1_linux_amd64.tar.gz

......

[root@master ~]#ls

demo helm-push_0.10.1_linux_amd64.tar.gz

[root@master ~]#mkdir -p helm-push/

[root@master ~]#tar -xzf helm-push_0.10.1_linux_amd64.tar.gz -C helm-push/

[root@master ~]#helm env

HELM_BIN="helm"

HELM_CACHE_HOME="/root/.cache/helm"

HELM_CONFIG_HOME="/root/.config/helm"

HELM_DATA_HOME="/root/.local/share/helm"

HELM_DEBUG="false"

HELM_KUBEAPISERVER=""

HELM_KUBEASGROUPS=""

HELM_KUBEASUSER=""

HELM_KUBECAFILE=""

HELM_KUBECONTEXT=""

HELM_KUBETOKEN=""

HELM_MAX_HISTORY="10"

HELM_NAMESPACE="default"

HELM_PLUGINS="/root/.local/share/helm/plugins"

HELM_REGISTRY_CONFIG="/root/.config/helm/registry.json"

HELM_REPOSITORY_CACHE="/root/.cache/helm/repository"

HELM_REPOSITORY_CONFIG="/root/.config/helm/repositories.yaml"

[root@master ~]#ls

demo helm-push helm-push_0.10.1_linux_amd64.tar.gz

[root@master ~]#cd helm-push/

[root@master ~/helm-push]#ls

bin LICENSE plugin.yaml

[root@master ~/helm-push]#mkdir -p /root/.local/share/helm/plugins/helm-push

[root@master ~/helm-push]#chmod +x bin/*

[root@master ~/helm-push]#mv bin/ plugin.yaml /root/.local/share/helm/plugins/helm-push

[root@master ~/helm-push]#helm plugin list

NAME VERSION DESCRIPTION

cm-push 0.10.1 Push chart package to ChartMuseum

4.5.4 Adding the repository

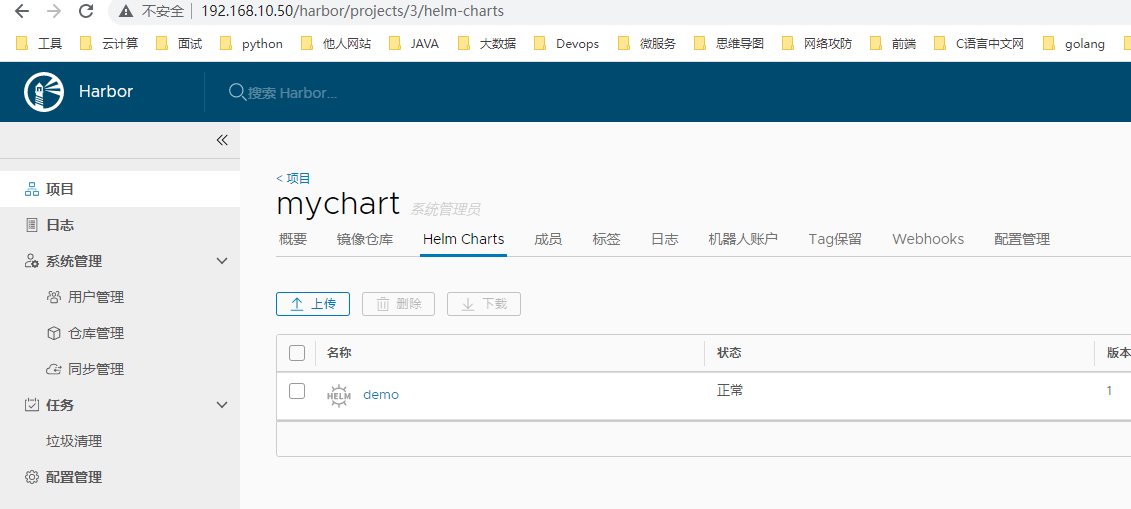

# My Harbor instance is accessed over HTTP; create the mychart project first

[root@master ~]#helm repo add harbor_lc_chart --username admin --password Harbor12345 http://192.168.10.50/chartrepo/mychart

"harbor_lc_chart" has been added to your repositories

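The repository URL format is:

http(s)://{Harbor domain or IP}:{port}/chartrepo/{project}, where :{port} can be omitted for the default ports 443 and 80, and {project} is the Harbor project name (mychart here)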
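The URL rule above can be captured in a small helper for scripting helm repo add. This is a sketch under the stated format. harbor_chartrepo_url is a hypothetical name, and it omits the port when the port is empty or the scheme's default (80 for http, 443 for https).

```shell
#!/bin/sh
# Hypothetical helper: build a Harbor ChartMuseum repository URL from scheme, host, port and project,
# leaving out the port when it is empty or the default for the scheme.
harbor_chartrepo_url() {
  local scheme="$1" host="$2" port="$3" project="$4"
  case "$scheme:$port" in
    http:|http:80|https:|https:443)
      printf '%s://%s/chartrepo/%s\n' "$scheme" "$host" "$project" ;;
    *)
      printf '%s://%s:%s/chartrepo/%s\n' "$scheme" "$host" "$port" "$project" ;;
  esac
}

# Usage (matches the repository added above):
#   helm repo add harbor_lc_chart --username admin --password Harbor12345 \
#     "$(harbor_chartrepo_url http 192.168.10.50 '' mychart)"
```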

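Check whether your Harbor instance was set up with HTTPS or HTTP. With HTTP, the command above works as is. With HTTPS you also need to pass certificates. Using the server certificate and key that Harbor was started with, the command looks like this: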

helm repo add --ca-file harbor.devopstack.cn.cert --cert-file harbor.devopstack.cn.crt --key-file harbor.devopstack.cn.key --username admin --password Harbor12345 myrepo https://192.168.10.20:443/chartrepo/chart/

Verify

[root@master ~]#helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "harbor_lc_chart" chart repository

...Successfully got an update from the "stable" chart repository

...Successfully got an update from the "azure" chart repository

Update Complete. ⎈Happy Helming!⎈

[root@master ~]#helm repo list

NAME URL

stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

azure http://mirror.azure.cn/kubernetes/charts

harbor_lc_chart http://192.168.10.50/chartrepo/mychart

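4.5.5 Configuring Harbor as an insecure registry for the K8S/K3S cluster

This is needed when cluster nodes pull images from a Harbor instance that does not present a trusted certificate: plain HTTP, as in this setup, or HTTPS with a self-signed certificate.

See: Configuring Harbor as an insecure-registry for a k8s cluster (separate article).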
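On K3s, containerd reads registry mirror settings from /etc/rancher/k3s/registries.yaml on each node; Docker's insecure-registries option does not apply. The hedged sketch below prints such a file for a plain-HTTP Harbor. k3s_registries_yaml is a hypothetical helper. Check the schema against the K3s private registry documentation for your version.

```shell
#!/bin/sh
# Hypothetical helper: print a K3s registries.yaml that sends image pulls for HOST to http://HOST.
k3s_registries_yaml() {
  local host="$1"
  cat <<EOF
mirrors:
  "${host}":
    endpoint:
      - "http://${host}"
EOF
}

# Usage on every K3s node, then restart K3s so containerd picks up the file:
#   k3s_registries_yaml 192.168.10.50 > /etc/rancher/k3s/registries.yaml
#   systemctl restart k3s        # on agent nodes: systemctl restart k3s-agent
```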

4.5.6 Pushing and installing the chart

[root@master ~]#helm package demo/

Successfully packaged chart and saved it to: /root/demo-0.1.0.tgz

[root@master ~]#ls

demo demo-0.1.0.tgz helm-push helm-push_0.10.1_linux_amd64.tar.gz

[root@master ~]#helm cm-push demo-0.1.0.tgz --username=admin --password=Harbor12345 http://192.168.10.50/chartrepo/mychart

Pushing demo-0.1.0.tgz to http://192.168.10.50/chartrepo/mychart...

Done.

The chart package has now been pushed to the Harbor repository.

[root@master ~]#helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "harbor_lc_chart" chart repository

...Successfully got an update from the "stable" chart repository

...Successfully got an update from the "azure" chart repository

Update Complete. ⎈Happy Helming!⎈

[root@master ~]#helm search repo harbor_lc_chart/demo

NAME CHART VERSION APP VERSION DESCRIPTION

harbor_lc_chart/demo 0.1.0 1.16.0 A Helm chart for Kubernetes

# Pull the chart locally, then install it from the archive

[root@master ~]#helm pull --version 0.1.0 harbor_lc_chart/demo

......

[root@master ~]#helm install web demo-0.1.0.tgz -n default

......

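# Or install directly from the repository. This is an alternative to the previous step: release names must be unique within a namespace, so uninstall web first or use a different name.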

[root@master ~]#helm install web --version 0.1.0 harbor_lc_chart/demo -n default

......