K8S Persistent Storage: PV/PVC

1. Persistent storage with NFS

1.1 Configure NFS

| Role | Host |
|---|---|
| nfs-server | master (192.168.10.20) |
| nfs-client | node01 (192.168.10.30), node02 (192.168.10.40) |

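Install NFS on every node. Both the server and the clients need the package, which in a k8s cluster means all nodes. (nfs-common is the Debian/Ubuntu package name; on yum-based systems the package is nfs-utils.)

yum install -y nfs-utils rpcbind

Create the shared directories on the master node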

[root@master ~]#mkdir -p /data/v{1..5}

[root@master ~]#chmod 777 -R /data/*

Edit the exports file

[root@master ~]#vim /etc/exports

/data/v1 *(insecure,rw,no_root_squash,sync,no_subtree_check)

/data/v2 *(insecure,rw,no_root_squash,sync,no_subtree_check)

/data/v3 *(insecure,rw,no_root_squash,sync,no_subtree_check)

/data/v4 *(insecure,rw,no_root_squash,sync,no_subtree_check)

/data/v5 *(insecure,rw,no_root_squash,sync,no_subtree_check)

# Apply the configuration

[root@master ~]#exportfs -rv

......

Start rpcbind and nfs (in this order)

[root@master ~]#systemctl start rpcbind && systemctl enable rpcbind

[root@master ~]#systemctl start nfs && systemctl enable nfs # start on all nodes

List the directories exported by this host

[root@master ~]#showmount -e

Create test pages on the master

echo '11111' > /data/v1/index.html

echo '22222' > /data/v2/index.html

echo '33333' > /data/v3/index.html

echo '44444' > /data/v4/index.html

echo '55555' > /data/v5/index.html

1.2 Create the PVs

vim pv-demo.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv001
  labels:
    name: pv001
spec:
  nfs:
    path: /data/v1
    server: 192.168.10.20
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 1Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv002
  labels:
    name: pv002
spec:
  nfs:
    path: /data/v2
    server: 192.168.10.20
  accessModes: ["ReadWriteOnce"]
  capacity:
    storage: 2Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv003
  labels:
    name: pv003
spec:
  nfs:
    path: /data/v3
    server: 192.168.10.20
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 2Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv004
  labels:
    name: pv004
spec:
  nfs:
    path: /data/v4
    server: 192.168.10.20
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 4Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv005
  labels:
    name: pv005
spec:
  nfs:
    path: /data/v5
    server: 192.168.10.20
  accessModes: ["ReadWriteMany","ReadWriteOnce"]
  capacity:
    storage: 5Gi

Apply the PVs and check them

[root@master ~]#kubectl apply -f pv-demo.yaml

persistentvolume/pv001 created

persistentvolume/pv002 created

persistentvolume/pv003 created

persistentvolume/pv004 created

persistentvolume/pv005 created

[root@master ~]#kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv001 1Gi RWO,RWX Retain Available 8s

pv002 2Gi RWO Retain Available 8s

pv003 2Gi RWO,RWX Retain Available 8s

pv004 4Gi RWO,RWX Retain Available 8s

pv005 5Gi RWO,RWX Retain Available

[root@master ~]#showmount -e 192.168.10.20

Export list for 192.168.10.20:

/data/v5 192.168.10.0/24

/data/v4 192.168.10.0/24

/data/v3 192.168.10.0/24

/data/v2 192.168.10.0/24

/data/v1 192.168.10.0/24

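1.3 Define the PVC

The PVC requests the ReadWriteMany access mode, and a PV can only match if that mode is among the access modes it declares. The PVC asks for 2Gi, so it binds to a PV that supports ReadWriteMany and has a capacity of at least 2Gi, preferring the smallest one that fits. Here pv002 is 2Gi but only ReadWriteOnce, so pv003 is chosen. Once the match succeeds, the PVC status becomes Bound.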

vim pvc-demo.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 2Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: pv-pvc
spec:
  containers:
  - name: myapp
    image: nginx
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html
  volumes:
  - name: html
    persistentVolumeClaim:
      claimName: mypvc

Apply and check

[root@master ~]#kubectl apply -f pvc-demo.yaml

persistentvolumeclaim/mypvc created

pod/pv-pvc created

[root@master ~]#kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pv001 1Gi RWO,RWX Retain Available 5m54s

persistentvolume/pv002 2Gi RWO Retain Available 5m54s

persistentvolume/pv003 2Gi RWO,RWX Retain Bound default/mypvc 5m54s

persistentvolume/pv004 4Gi RWO,RWX Retain Available 5m54s

persistentvolume/pv005 5Gi RWO,RWX Retain Available 5m54s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/mypvc Bound pv003 2Gi RWO,RWX 10s

[root@master ~]#kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pv-pvc 1/1 Running 0 58s 10.244.1.3 node01 <none> <none>

Test access

[root@master ~]#curl 10.244.1.3

33333
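Each PV above carries a name label that the claim never uses. As a sketch of how a claim could target one specific PV instead of relying only on size and access-mode matching, the manifest below adds a label selector. The claim name mypvc-pinned is hypothetical, and the example assumes pv004 is still Available.

```yaml
# Hypothetical variant of mypvc: bind to the PV labelled name=pv004.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc-pinned          # illustrative name, not part of the original files
spec:
  accessModes: ["ReadWriteMany"]
  selector:
    matchLabels:
      name: pv004             # label defined on pv004 in pv-demo.yaml
  resources:
    requests:
      storage: 2Gi            # must not exceed the 4Gi capacity of pv004
```

With the selector in place, the access mode and capacity checks still apply, but only PVs carrying the label are considered.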

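Test multi-pod read/write:

- Reuse the same volume and change only the pod name

cp pvc-demo.yaml 1.yaml

cp pvc-demo.yaml 2.yaml

- After changing the pod name in each file, apply them

kubectl apply -f 1.yaml

kubectl apply -f 2.yaml

- Look up the pod IPs

kubectl get pod -o wide

- curl each pod to check that they all serve the same content from the shared volume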
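One of the copied files could look like the sketch below. Only the Pod is shown, since the mypvc claim already exists and stays unchanged; the pod name pv-pvc-2 is a hypothetical choice.

```yaml
# Second pod mounting the same claim; only metadata.name differs from pv-pvc.
apiVersion: v1
kind: Pod
metadata:
  name: pv-pvc-2              # illustrative name
spec:
  containers:
  - name: myapp
    image: nginx
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html
  volumes:
  - name: html
    persistentVolumeClaim:
      claimName: mypvc        # same claim as the first pod, bound to pv003
```

If both pods return 33333 when curled, they are reading from the same NFS directory.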

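2. Dynamic PV/PVC provisioning with a StorageClass

Note: PVs are cluster-scoped, PVCs are isolated by namespace, and StorageClasses are cluster-scoped.

--- Steps to deploy a StorageClass: https://blog.51cto.com/jiangxl/5076635

1. Write the RBAC ServiceAccount and roles for the nfs-client-provisioner program

2. Write the Deployment manifest for nfs-client-provisioner and bind it to the RBAC account, so that nfs-client-provisioner can create, delete, update, and read PVs and PVCs

3. Create a StorageClass that points at nfs-client; PVs created automatically are then stored on the NFS export behind nfs-client

4. Write a PVC yaml file to verify that a PV is created automatically

5. Write a manifest for a stateless workload that mounts data through the PVC

6. Reference the StorageClass in a StatefulSet so that every pod gets its own PVC

2.1 Create the NFS export that backs the PVs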

[root@master ~]#mkdir /nfsdata

[root@master ~]#chmod 777 /nfsdata

[root@master ~]#vim /etc/exports

/data/v1 *(insecure,rw,no_root_squash,sync,no_subtree_check)

/data/v2 *(insecure,rw,no_root_squash,sync,no_subtree_check)

/data/v3 *(insecure,rw,no_root_squash,sync,no_subtree_check)

/data/v4 *(insecure,rw,no_root_squash,sync,no_subtree_check)

/data/v5 *(insecure,rw,no_root_squash,sync,no_subtree_check)

/nfsdata *(insecure,rw,no_root_squash,sync,no_subtree_check)

[root@master ~]#exportfs -rv

exporting 192.168.10.0/24:/nfsdata

exporting 192.168.10.0/24:/data/v5

exporting 192.168.10.0/24:/data/v4

exporting 192.168.10.0/24:/data/v3

exporting 192.168.10.0/24:/data/v2

exporting 192.168.10.0/24:/data/v1

[root@master ~]#showmount -e

Export list for master:

/nfsdata 192.168.10.0/24

/data/v5 192.168.10.0/24

/data/v4 192.168.10.0/24

/data/v3 192.168.10.0/24

/data/v2 192.168.10.0/24

/data/v1 192.168.10.0/24

[root@master ~]#echo 'this is a test' > /nfsdata/index.html

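2.2 Test the StorageClass

The RBAC objects and the nfs-client-provisioner controller must live in the same namespace. Treat them as a prerequisite add-on; what we ultimately need is the PVC. On k8s v1.20.x and later, create both in the default namespace.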

rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

prvisor-deployment.yaml

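1️⃣ This Deployment must be in the default namespace. The StorageClass looks for the provisioner in default, and if it cannot find it the PVC stays Pending. This may be down to how k8s v1.20.x and later implement it.

2️⃣ The ReplicaSet depends on the ServiceAccount; if the ServiceAccount cannot be found, the ReplicaSet cannot create pods either.

3️⃣ After I enabled istio sidecar injection in default, the Deployment could not be created; the cause was that the ReplicaSet could not create pods. Still unresolved.

Reference: https://blog.csdn.net/weixin_43905458/article/details/104060485#t6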

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  # selector appears only once; a duplicated key is invalid YAML
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/syhj/public:nfs-client-provisioner_D20241016
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: wuchang-nfs-storage
            - name: NFS_SERVER
              value: 192.168.10.20 # NFS server IP address
            - name: NFS_PATH
              value: /nfsdata # NFS export path
          securityContext:
            runAsUser: 0 # run as the root user
            runAsGroup: 0 # run as the root group
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.10.20 # NFS server IP address
            path: /nfsdata # NFS export path

storageclass.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: wuchang-nfs-storage
# Allow PVCs to be expanded after creation
allowVolumeExpansion: true
parameters:
  # When archiveOnDelete is "true", the PV and its files on the NAS are only renamed, not deleted.
  # When archiveOnDelete is "false", the PV and its files on the NAS are actually deleted.
  archiveOnDelete: "true"

test-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
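The volume.beta.kubernetes.io/storage-class annotation is the legacy way of selecting a class. A minimal equivalent that uses the spec.storageClassName field instead, assuming the same claim name and class, might look like this:

```yaml
# Same claim as test-pvc.yaml, with the class set through spec.storageClassName.
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
spec:
  storageClassName: managed-nfs-storage   # name of the StorageClass defined above
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
```

Use one form or the other in a given claim rather than both.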

pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
  - image: ikubernetes/myapp:v1
    name: test-container
    volumeMounts:
    - mountPath: /test-pd
      name: nfs-pvc
  volumes:
  - name: nfs-pvc
    persistentVolumeClaim:
      claimName: test-claim # must match the PVC name

Apply the yaml files

# Apply them one at a time and wait a moment after each, so you can watch the resources come up

kubectl apply -f rbac.yaml

kubectl apply -f prvisor-deployment.yaml

kubectl apply -f storageclass.yaml # create the StorageClass

kubectl apply -f test-pvc.yaml # test PVC: test-claim

kubectl apply -f pod-demo.yaml # test pod: test-pd

Test

[root@master ~]#kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/nfs-client-provisioner-555df7ccd5-z86nn 1/1 Running 0 105s

pod/pv-pvc 1/1 Running 0 33m

pod/test-pd 1/1 Running 0 20s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d16h

[root@master ~]#kubectl exec -it test-pd -- /bin/sh

/ # ls

bin etc lib mnt root sbin sys tmp var

dev home media proc run srv test-pd usr

/ # cd test-pd/

/test-pd # ls

/test-pd # touch zc syhj

/test-pd # ls

syhj zc

/test-pd # exit

[root@master ~]#cd /nfsdata/

[root@master /nfsdata]#ls

default-test-claim-pvc-3474333e-1f03-4ed5-8e7f-83cf3b97b50f index.html

[root@master /nfsdata]#cd default-test-claim-pvc-3474333e-1f03-4ed5-8e7f-83cf3b97b50f/

[root@master /nfsdata/default-test-claim-pvc-3474333e-1f03-4ed5-8e7f-83cf3b97b50f]#ls

syhj zc

Troubleshooting:

The PVC is not created successfully:

kubectl get pvc -n efk shows Pending. This happens on newer Kubernetes versions: from v1.20 on, selfLink is disabled by default, and this provisioner depends on it. (selfLink is the URL through which a resource can be reached via the API; for example, a Pod's link might be /api/v1/namespaces/ns36aa8455/pods/sc-cluster-test-1-6bc58d44d6-r8hld.)

Fix:

[root@k8sm storage]# vi /etc/kubernetes/manifests/kube-apiserver.yaml
apiVersion: v1
···
    - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
    - --feature-gates=RemoveSelfLink=false # add this flag; it was removed in k8s 1.24, so this provisioner may not work on 1.24 and later

Restart kube-apiserver:

# If k8s was installed from binaries, run
systemctl restart kube-apiserver && systemctl restart kubelet

# If k8s was installed with kubeadm
[root@k8sm manifests]# systemctl restart kubelet
[root@k8sm manifests]# ps aux|grep kube-apiserver
[root@k8sm manifests]# kill -9 [Pid] # it may restart automatically
[root@k8sm manifests]# kubectl apply -f /etc/kubernetes/manifests/kube-apiserver.yaml
...
[root@master ~]# kubectl get pods -A | grep kube-apiserver-master # check the pod age
......
[root@k8sm storage]# kubectl get pvc # the PVC now shows Bound
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
my-pvc Bound pvc-ae9f6d4b-fc4c-4e19-8854-7bfa259a3a04 1Gi RWX example-nfs 13m
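On clusters where RemoveSelfLink can no longer be switched off, a commonly used alternative is to run the newer nfs-subdir-external-provisioner image, which does not rely on selfLink. The fragment below is only a sketch: the image reference and tag are assumptions that should be checked against the upstream project before use, and everything else in the Deployment is assumed to stay as in prvisor-deployment.yaml.

```yaml
# Fragment of spec.template.spec in the provisioner Deployment; only the image changes.
containers:
  - name: nfs-client-provisioner
    # Assumed image reference and tag; verify against the upstream release notes.
    image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
```

The PROVISIONER_NAME, NFS_SERVER and NFS_PATH environment variables keep the same meaning, so the StorageClass does not need to change.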

Testing the StorageClass with a StatefulSet
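A minimal sketch of such a test is shown below, assuming the managed-nfs-storage class defined earlier. The Service and StatefulSet names, the labels, and the 1Gi request are hypothetical; the point is the volumeClaimTemplates section, which makes the controller create one PVC per replica.

```yaml
# Headless Service required by the StatefulSet (illustrative name).
apiVersion: v1
kind: Service
metadata:
  name: nginx-headless
spec:
  clusterIP: None
  selector:
    app: nginx-sts
  ports:
  - port: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web                     # illustrative name
spec:
  serviceName: nginx-headless
  replicas: 2
  selector:
    matchLabels:
      app: nginx-sts
  template:
    metadata:
      labels:
        app: nginx-sts
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  # One PVC is created per pod from this template: www-web-0, www-web-1.
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: managed-nfs-storage
      resources:
        requests:
          storage: 1Gi
```

After applying it, kubectl get pvc should list one claim per replica, and each claim should have its own directory under /nfsdata on the NFS server.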

3. Using PV and PVC for MySQL persistent storage

3.1 Create the PV and PVC for MySQL

Create the mount point

[root@master ~]#mkdir -p /nfsdata/mysql

[root@master ~]#chmod 777 -R /nfsdata/mysql/

[root@master ~]#vim /etc/exports

/nfsdata/mysql 192.168.10.0/24(rw,no_root_squash,sync)

[root@master ~]#exportfs -rv

...

[root@master ~]#systemctl restart nfs rpcbind

[root@master ~]#showmount -e

Export list for master:

/nfsdata/mysql 192.168.10.0/24

/nfsdata 192.168.10.0/24

kubectl apply -f mysql-pv.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 1Gi
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata/mysql
    server: 192.168.10.20

kubectl apply -f mysql-pvc.yml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs

Check

[root@master ~]#kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/mysql-pv 1Gi RWO Retain Bound default/mysql-pvc nfs 12s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/mysql-pvc Bound mysql-pv 1Gi RWO nfs 9s

3.2 Deploy the MySQL pod

kubectl apply -f mysql.yml

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
  selector:
    app: mysql
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - image: daocloud.io/library/mysql:5.7.5-m15 # the image must be pullable; you can docker pull it on the nodes first
        name: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: password
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-persistent-storage
        persistentVolumeClaim:
          claimName: mysql-pvc

The PV mysql-pv, bound to the PVC mysql-pvc, is mounted at the MySQL data directory /var/lib/mysql.

[root@master ~]#kubectl get pods,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/mysql-6654fcb867-xnfjq 1/1 Running 0 20s 10.244.1.3 node01 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d22h <none>

service/mysql ClusterIP 10.96.245.196 <none> 3306/TCP 20s app=mysql

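3.3 Simulate a failure

① Connect to the mysql database

② Create a database and a table

③ Insert a row

④ Confirm the data has been written

⑤ Shut down node01 to simulate a node failure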

[root@master ~]#kubectl exec -it mysql-6654fcb867-xnfjq -- /bin/bash

root@mysql-6654fcb867-xnfjq:/# mysql -uroot -p

Enter password: # password

..........

mysql> create database my_db;

Query OK, 1 row affected (0.02 sec)

mysql> create table my_db.t1(id int);

Query OK, 0 rows affected (0.05 sec)

mysql> insert into my_db.t1 values(2);

Query OK, 1 row affected (0.01 sec)

Simulate the node01 failure

[root@node01 ~]#poweroff

...

Verify data consistency

Since node01 is down, node02 takes over the workload. Moving the pod takes a while; in my case it took about 10 minutes.

[root@master ~]#kubectl get pods,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/mysql-6654fcb867-5ln28 1/1 Running 0 12m 10.244.2.3 node02 <none> <none>

pod/mysql-6654fcb867-z86nn 1/1 Terminating 0 19m 10.244.1.3 node01 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d23h <none>

service/mysql ClusterIP 10.96.158.55 <none> 3306/TCP 19m app=mysql

Log in to the new pod and check that the data is still there

[root@master ~]#kubectl exec -it mysql-6654fcb867-5ln28 -- /bin/bash

root@mysql-6654fcb867-5ln28:/# mysql -uroot -p

Enter password:

......

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| my_db |

| mysql |

| performance_schema |

+--------------------+

4 rows in set (0.03 sec)

mysql> select * from my_db.t1;

+------+

| id |

+------+

| 2 |

+------+

1 row in set (0.04 sec)

The MySQL service has recovered and the data is intact.
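Much of the wait during failover comes from the default tolerations that Kubernetes adds to every pod, which keep it bound to a not-ready or unreachable node for 300 seconds before eviction. As a sketch only, the fragment below shortens that window for the mysql Deployment; the 30-second value is an arbitrary assumption and should be tuned to how long a node may be briefly unreachable in your environment.

```yaml
# Fragment for spec.template.spec of the mysql Deployment.
tolerations:
- key: node.kubernetes.io/not-ready
  operator: Exists
  effect: NoExecute
  tolerationSeconds: 30       # assumed value; the default is 300
- key: node.kubernetes.io/unreachable
  operator: Exists
  effect: NoExecute
  tolerationSeconds: 30       # assumed value; the default is 300
```

The node still has to be marked NotReady first, so the total delay is this value plus the time the control plane needs to detect the failure.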

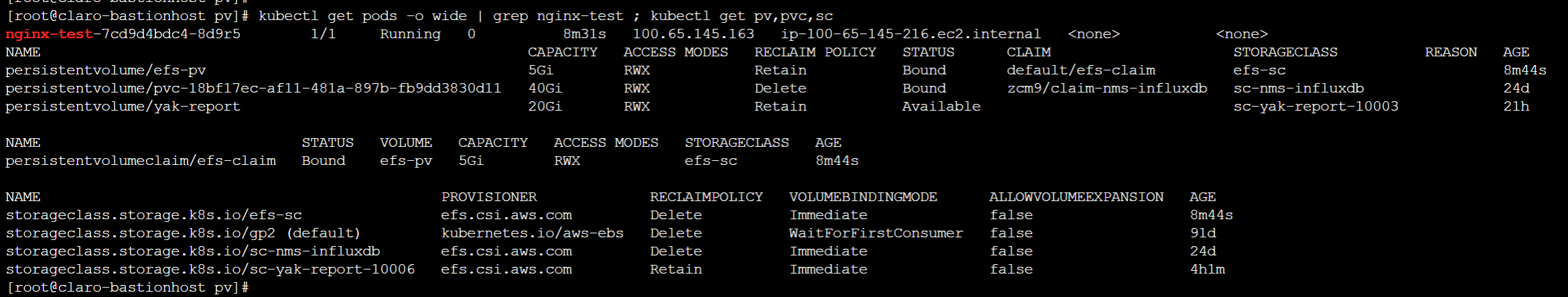

4. Using EFS on AWS EKS

nginx-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-test
  namespace: default
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nginx-test
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: nginx-test
    spec:
      containers:
      - image: 163335386826.dkr.ecr.us-east-1.amazonaws.com/zcm9/nginx:1.19.hk
        imagePullPolicy: IfNotPresent
        name: nginx-test
        volumeMounts:
        - mountPath: /etc/localtime
          name: localtime
        - name: persistent-storage
          mountPath: /data
      volumes:
      - hostPath:
          path: /etc/localtime
          type: ""
        name: localtime
      - name: persistent-storage
        persistentVolumeClaim:
          claimName: efs-claim
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30

pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: efs-pv
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: efs-sc
  csi:
    driver: efs.csi.aws.com
    volumeHandle: fs-068d80072c18bc5c9

pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-claim
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-sc
  resources:
    requests:
      storage: 5Gi

sc.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: efs-sc
provisioner: efs.csi.aws.com
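The manifests above provision the EFS volume statically: the PV is written by hand and the StorageClass only serves as a matching name. For comparison, a dynamically provisioning class might look like the sketch below. The class name efs-sc-dynamic and the directoryPerms value are assumptions, the file system ID is reused from pv.yaml, and the parameters should be checked against the EFS CSI driver version installed in the cluster.

```yaml
# Hypothetical StorageClass for dynamic provisioning through EFS access points.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: efs-sc-dynamic                  # illustrative name
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap              # create one EFS access point per PVC
  fileSystemId: fs-068d80072c18bc5c9    # file system ID taken from pv.yaml
  directoryPerms: "700"                 # assumed permissions for the created directory
```

A PVC that names this class would then get its PV created automatically, so no pv.yaml is needed.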

https://zhuanlan.zhihu.com/p/477495419