CentOS 7: deploying a single-master cluster with kubeadm (1.22.5)

1. Preparation

Three machines:

192.168.10.100 master

192.168.10.101 node01

192.168.10.102 node02

Disable the firewall, SELinux and swap (on all three machines):

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

swapoff -a
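`setenforce 0` and `swapoff -a` only affect the running system and are undone by a reboot. Below is a minimal sketch of making both settings persistent. It assumes the stock CentOS 7 layout of `/etc/fstab` and `/etc/selinux/config`. The function name `persist_k8s_prereqs` is hypothetical, and both files are passed as arguments so the edit can be tried on copies first. SELinux is set to permissive, which is the mode `setenforce 0` switches to.

```shell
# Hypothetical helper: make "swap off" and "SELinux off" survive a reboot.
# $1: fstab file (normally /etc/fstab)
# $2: SELinux config file (normally /etc/selinux/config)
persist_k8s_prereqs() {
  fstab=$1
  selinux_cfg=$2
  # Comment out active (not already commented) entries whose fields include "swap".
  sed -i '/^[^#].*[[:space:]]swap[[:space:]]/s/^/#/' "$fstab"
  # Persist permissive mode; SELINUXTYPE= is untouched because the pattern needs "SELINUX=".
  sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$selinux_cfg"
}
```

Usage on a node: `persist_k8s_prereqs /etc/fstab /etc/selinux/config`. Running it twice is harmless, because lines that are already commented out no longer match.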

Set the hostname on each machine, then add all three entries to /etc/hosts on every server:

hostnamectl set-hostname master && su    # on master

hostnamectl set-hostname node01 && su    # on node01

hostnamectl set-hostname node02 && su    # on node02

cat >> /etc/hosts << EOF

192.168.10.100 master

192.168.10.101 node01

192.168.10.102 node02

EOF
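Appending with `cat >>` adds the three lines again every time this step is repeated. Below is a small sketch of an idempotent alternative. `add_hosts_entries` is a hypothetical helper that appends each "IP hostname" line only if the file does not already contain that exact line.

```shell
# Hypothetical helper: append "IP hostname" lines to a hosts file, skipping
# any line that is already present verbatim, so re-runs add no duplicates.
# $1: hosts file (normally /etc/hosts); remaining args: "IP hostname" strings.
add_hosts_entries() {
  hosts_file=$1
  shift
  for entry in "$@"; do
    # -x: whole-line match, -F: fixed string (dots in IPs are not regex wildcards)
    grep -qxF "$entry" "$hosts_file" || printf '%s\n' "$entry" >> "$hosts_file"
  done
}
```

Usage on each of the three machines: `add_hosts_entries /etc/hosts "192.168.10.100 master" "192.168.10.101 node01" "192.168.10.102 node02"`.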

Pass bridged IPv4 traffic to the iptables chains:

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

fs.inotify.max_user_watches = 1048576

net.ipv4.ip_forward = 1

EOF

sysctl --system
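The `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded. If the module is not loaded yet, `sysctl --system` reports those keys as missing. Below is a sketch under that assumption, following the modules-load.d pattern used in the Kubernetes container runtime documentation. `write_br_netfilter_conf` (hypothetical) persists the module load through systemd's `/etc/modules-load.d/`. `check_k8s_sysctl` (also hypothetical) confirms that the sysctl file sets the two keys kubeadm's preflight checks expect to be 1.

```shell
# Hypothetical helper: load br_netfilter at every boot so the net.bridge.* keys exist.
# $1: modules-load file (normally /etc/modules-load.d/k8s.conf)
write_br_netfilter_conf() {
  printf '%s\n' br_netfilter > "$1"
}

# Hypothetical helper: succeed only if the sysctl file sets both keys to 1.
# $1: sysctl file (normally /etc/sysctl.d/k8s.conf)
check_k8s_sysctl() {
  for key in net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward; do
    if ! grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$1"; then
      echo "missing or not 1: $key" >&2
      return 1
    fi
  done
}
```

Usage: `write_br_netfilter_conf /etc/modules-load.d/k8s.conf && modprobe br_netfilter && check_k8s_sysctl /etc/sysctl.d/k8s.conf && sysctl --system`.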

Synchronize the time:

yum -y install ntpdate

ntpdate time.windows.com

If the time zone is wrong, run the following command first and then sync again:

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

(To set the clock manually instead: date -s "YYYY-MM-DD HH:MM:SS" && hwclock --systohc)

2. Install Docker (all three nodes)

yum -y install yum-utils device-mapper-persistent-data lvm2

cd /etc/yum.repos.d/

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum -y install docker-ce

systemctl enable docker && systemctl start docker

sudo mkdir -p /etc/docker

cat > /etc/docker/daemon.json << EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"storage-driver": "overlay2",

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],

"ip-forward": true,

"live-restore": false,

"log-opts": {

"max-size": "100m",

"max-file":"3"

},

"default-ulimits": {

"nproc": {

"Name": "nproc",

"Hard": 32768,

"Soft": 32768

},

"nofile": {

"Name": "nofile",

"Hard": 32768,

"Soft": 32768

}

}

}

EOF

sed -i 's/-H fd:\/\/ //g' /usr/lib/systemd/system/docker.service

systemctl daemon-reload && systemctl restart docker

systemctl status docker
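To confirm that the restarted daemon actually picked up the systemd cgroup driver, here is a small sketch that reads the text output of `docker info`. The helper names are hypothetical. The parsing assumes the ` Cgroup Driver: ...` line format that Docker 20.10 prints.

```shell
# Hypothetical helper: print the cgroup driver from `docker info` text on stdin.
cgroup_driver() {
  # Split on ": " so the second field is the driver name.
  awk -F': ' '/Cgroup Driver:/ {print $2; exit}'
}

# Hypothetical helper: succeed only when the reported driver is systemd.
require_systemd_driver() {
  [ "$(cgroup_driver)" = systemd ] || { echo "Docker cgroup driver is not systemd" >&2; return 1; }
}
```

Usage: `docker info 2>/dev/null | require_systemd_driver`. Alternatively, `docker info --format '{{.CgroupDriver}}'` prints the driver name directly.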

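With "exec-opts": ["native.cgroupdriver=systemd"] added, Docker's cgroup driver becomes systemd. Docker defaults to cgroupfs, while kubeadm 1.22 and later set up the kubelet with systemd, and the two drivers must match.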

3. Configure the Aliyun Kubernetes yum repo (all three nodes)

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum clean all && yum makecache

4. Install kubeadm, kubelet and kubectl (all three nodes)

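# For 1.22.5 (the version in the title and in the outputs below), install these instead and pass --kubernetes-version v1.22.5 to kubeadm init:

# yum install -y kubelet-1.22.5 kubeadm-1.22.5 kubectl-1.22.5

yum -y install kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0 --nogpgcheck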

systemctl enable kubelet
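kubeadm, kubelet and kubectl should be the same version, and the value passed to --kubernetes-version in step 5 should match it. Here is a hedged sketch for checking the installed packages. `rpm_version` and `same_kube_versions` are hypothetical helpers that parse rpm's name-version-release.arch strings.

```shell
# Hypothetical helper: extract the version from an rpm NVRA string,
# e.g. "kubeadm-1.22.5-0.x86_64" -> "1.22.5".
rpm_version() {
  printf '%s\n' "$1" | sed -E 's/^[a-z]+-([0-9][0-9.]*)-.*/\1/'
}

# Hypothetical helper: succeed only if all NVRA strings carry the same version.
same_kube_versions() {
  first=$(rpm_version "$1")
  for pkg in "$@"; do
    [ "$(rpm_version "$pkg")" = "$first" ] || { echo "version mismatch: $pkg" >&2; return 1; }
  done
}
```

Usage: `same_kube_versions $(rpm -q kubeadm kubelet kubectl)`.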

5. Deploy the Kubernetes master (run on the master node)

Initialize with kubeadm:

kubeadm init \
--apiserver-advertise-address=192.168.10.100 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version v1.18.0 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16

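Parameter notes (a comment cannot follow a trailing "\", so the notes are listed separately):

--apiserver-advertise-address=192.168.10.100: the address the master listens on; change it to your own master's IP

--image-repository registry.aliyuncs.com/google_containers: pull images from the Aliyun mirror; a mirror inside China is strongly preferred

--kubernetes-version v1.18.0: the Kubernetes version, which must match the installed kubeadm version; 1.18.0 is fairly stable

--service-cidr=10.96.0.0/12: the cluster-internal Service network

--pod-network-cidr=10.244.0.0/16: the Pod network

It is best to keep these values. In particular, if pod-network-cidr is changed, the Network value in kube-flannel.yaml has to be changed to match (flannel's default is 10.244.0.0/16).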
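The Service CIDR, the Pod CIDR and the hosts' own network (assumed here to be 192.168.10.0/24) must not overlap. Below is a minimal sketch of that check in plain shell arithmetic. `ip_to_int` and `cidrs_overlap` are hypothetical helpers, and only IPv4 is handled.

```shell
# Hypothetical helper: convert a dotted IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1            # split "a.b.c.d" into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: return 0 if two IPv4 CIDRs overlap, 1 otherwise.
cidrs_overlap() {
  a_net=$(ip_to_int "${1%/*}"); a_len=${1#*/}
  b_net=$(ip_to_int "${2%/*}"); b_len=${2#*/}
  # Compare both networks under the shorter (wider) prefix length.
  len=$(( a_len < b_len ? a_len : b_len ))
  mask=$(( (4294967295 << (32 - len)) & 4294967295 ))
  [ $(( a_net & mask )) -eq $(( b_net & mask )) ]
}
```

Two CIDR blocks overlap exactly when one contains the other, which is the case when they agree on the prefix bits of the wider block. That is why a single mask is enough. Usage: `cidrs_overlap 10.96.0.0/12 10.244.0.0/16 && echo "CIDRs overlap"`.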

When "Your Kubernetes control-plane has initialized successfully!" appears, initialization has succeeded. If it fails, find the cause first.

Newer versions such as v1.22.5 may fail to initialize. The usual fix is to add "exec-opts": ["native.cgroupdriver=systemd"] to /etc/docker/daemon.json, restart Docker, clear the kubeadm state, and then initialize again:

vim /etc/docker/daemon.json    # on master and nodes: add "exec-opts": ["native.cgroupdriver=systemd"],

systemctl restart docker

kubeadm reset -f    # on master

Nodes join the cluster with the token printed by kubeadm init. The token is valid for 24 hours; after it expires, create a new one with kubeadm token create --print-join-command

Run the following commands to use the kubectl management tool:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 111m v1.22.5

[root@master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

Note: on newer versions the scheduler may be reported as unhealthy; this is caused by the version. Reference:

https://wenku.baidu.com/view/61cacb4ea75177232f60ddccda38376bae1fe058.html
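A commonly cited cause, assumed here rather than taken from the linked reference, is the `--port=0` flag. On versions whose static Pod manifests start kube-scheduler and kube-controller-manager with that flag, the insecure health port queried by `kubectl get cs` is disabled. Below is a sketch of commenting that flag out. `comment_port_zero` is a hypothetical helper. It would be run on the master against `/etc/kubernetes/manifests/kube-scheduler.yaml` and `/etc/kubernetes/manifests/kube-controller-manager.yaml`. Since ComponentStatus is deprecated, simply ignoring the status is also reasonable.

```shell
# Hypothetical helper: comment out a "- --port=0" argument line in a static
# Pod manifest, keeping its indentation so the YAML stays valid.
comment_port_zero() {
  sed -i 's/^\([[:space:]]*\)- --port=0[[:space:]]*$/\1# - --port=0/' "$1"
}
```

The kubelet watches the manifests directory and recreates the static Pod after the file changes.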

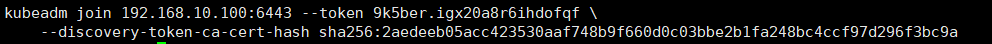

6. Join the worker nodes to the master (run on both nodes)

If joining fails, regenerate the token on the master.

node01

[root@node01 ~]# kubeadm join 192.168.10.100:6443 --token 9k5ber.igx20a8r6ihdofqf \

> --discovery-token-ca-cert-hash sha256:2aedeeb05acc423530aaf748b9f660d0c03bbe2b1fa248bc4ccf97d296f3bc9a

......

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

node02

[root@node02 ~]# kubeadm join 192.168.10.100:6443 --token 9k5ber.igx20a8r6ihdofqf \

> --discovery-token-ca-cert-hash sha256:2aedeeb05acc423530aaf748b9f660d0c03bbe2b1fa248bc4ccf97d296f3bc9a

......

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Check on the master:

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 24m v1.15.1

node01 NotReady <none> 97s v1.15.1

node02 NotReady <none> 91s v1.15.1

The nodes show NotReady because a network plugin still has to be installed.
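While the network plugin comes up, node readiness can be polled. In this sketch, `count_not_ready` (hypothetical) reads `kubectl get nodes` output and counts rows whose STATUS is not exactly `Ready`.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# how many nodes are not Ready (the header line is skipped).
count_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}
```

Usage: `until [ "$(kubectl get nodes | count_not_ready)" -eq 0 ]; do sleep 10; done`. kubectl can also wait by itself with `kubectl wait --for=condition=Ready nodes --all --timeout=300s`.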

If the token has expired, generate a new one on the master:

kubeadm token create --print-join-command

If a node fails to join, clear its state with: kubeadm reset -f

7. Install the Pod network plugin (CNI plugin, on the master)

Download the plugin manifest:

# Overseas site (saves the file as kube-flannel.yml)

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Mirror inside China (saves the file as kube-flannel.yaml)

wget http://120.78.77.38/file/kube-flannel.yaml

Apply it:

# Installation takes a while

kubectl apply -f kube-flannel.yaml

If kubectl get pods -n kube-system shows errors, it is because quay.io is currently unreachable from mainland China, so the images cannot be downloaded. For solutions see: https://blog.csdn.net/K_520_W/article/details/116566733

A convenient workaround:

# Point the flannel manifest at a mirrored image. This flannel version is fairly old; for newer Kubernetes versions prefer a newer flannel release

sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.12.0-amd64#g" kube-flannel.yaml

kubectl apply -f kube-flannel.yaml

kubectl get pods -n kube-system

kubectl get node    # once the network plugin is deployed, the nodes become Ready

Installing flannel v0.14.0:

wget http://49.232.8.65/yml/flannel-v0.14.0/kube-flannel.yml

sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.14.0-amd64#g" kube-flannel.yml

kubectl apply -f kube-flannel.yml
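Whichever flannel manifest is used, the Network value in its net-conf.json must equal the --pod-network-cidr passed to kubeadm init (10.244.0.0/16 here). Below is a sketch that reads that value. `flannel_network` is a hypothetical helper. It assumes the `"Network": "..."` line format of the upstream manifest.

```shell
# Hypothetical helper: print the first "Network" CIDR found in a kube-flannel manifest.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}
```

Usage: `[ "$(flannel_network kube-flannel.yml)" = 10.244.0.0/16 ] || echo "edit net-conf.json to match --pod-network-cidr"`.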

Remove the taint so that Pods can also be scheduled on the master:

kubectl taint nodes --all node-role.kubernetes.io/master-

Check:

[root@master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f6cbbb7b8-drzqc 1/1 Running 0 19m

kube-system coredns-7f6cbbb7b8-prcwv 1/1 Running 0 19m

kube-system etcd-master 1/1 Running 0 19m

kube-system kube-apiserver-master 1/1 Running 0 19m

kube-system kube-controller-manager-master 1/1 Running 0 16m

kube-system kube-flannel-ds-8g92b 1/1 Running 0 27s

kube-system kube-flannel-ds-qzvxn 1/1 Running 0 27s

kube-system kube-flannel-ds-x6lkb 1/1 Running 0 27s

kube-system kube-proxy-5xx5c 1/1 Running 0 15m

kube-system kube-proxy-fsp8p 1/1 Running 0 15m

kube-system kube-proxy-gldb2 1/1 Running 0 19m

kube-system kube-scheduler-master 1/1 Running 0 16m

[root@master ~]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready control-plane,master 22m v1.22.5 192.168.10.100 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://20.10.7

node01 Ready <none> 18m v1.22.5 192.168.10.101 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://20.10.7

node02 Ready <none> 18m v1.22.5 192.168.10.102 <none> CentOS Linux 7 (Core) 3.10.0-693.el7.x86_64 docker://20.10.7

Test the cluster by creating a Pod and verifying that it runs:

[root@master ~]# kubectl get pod    # the default namespace has no Pods yet

No resources found.

[root@master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort    # expose the port for external access

service/nginx exposed

[root@master ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-554b9c67f9-295wt 0/1 ContainerCreating 0 22s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 55m

service/nginx NodePort 10.106.243.55 <none> 80:32141/TCP 10s

[root@master ~]# curl 192.168.10.100:32141    # nginx is exposed on port 32141; access it at http://nodeIP:port using any node's IP

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>
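Because a NodePort is opened on every node, the same test can be run against all three IPs. In this sketch, `nodeport_from_ports` and `nodeport_urls` are hypothetical helpers. The first parses the PORT(S) column shown above, and the second prints one URL per node.

```shell
# Hypothetical helper: turn a PORT(S) value such as "80:32141/TCP" into "32141".
nodeport_from_ports() {
  ports=${1%%/*}       # drop "/TCP" -> "80:32141"
  echo "${ports#*:}"   # drop "80:"  -> "32141"
}

# Hypothetical helper: print http://IP:PORT/ for each node IP given.
nodeport_urls() {
  port=$1
  shift
  for ip in "$@"; do
    printf 'http://%s:%s/\n' "$ip" "$port"
  done
}
```

Usage: fetch the port with `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`, then run `curl` against each URL that `nodeport_urls <port> 192.168.10.100 192.168.10.101 192.168.10.102` prints.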

Installing the calico network plugin (an alternative to flannel; use one or the other):

curl https://docs.projectcalico.org/manifests/calico.yaml -O

kubectl apply -f calico.yaml

How long this takes depends on the network.
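The downloaded calico.yaml may leave the default IPv4 pool at 192.168.0.0/16. If it does, that range overlaps the node network 192.168.10.0/24 used here, so setting the pool to the --pod-network-cidr before running `kubectl apply` seems prudent. The sketch below assumes the manifest contains the two commented-out lines quoted in the code. `set_calico_pool_cidr` is hypothetical, and the manifest should be checked by hand after the edit.

```shell
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set it
# to the given CIDR. Assumes the manifest ships these two lines commented out:
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
set_calico_pool_cidr() {
  manifest=$1
  cidr=$2
  # Removing "# " keeps the env entry aligned with its siblings; "#" becomes
  # two spaces on the value line so "value:" stays under "name:".
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "s|#   value: \"192.168.0.0/16\"|  value: \"$cidr\"|" \
    "$manifest"
}
```

Usage: `set_calico_pool_cidr calico.yaml 10.244.0.0/16 && kubectl apply -f calico.yaml`.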

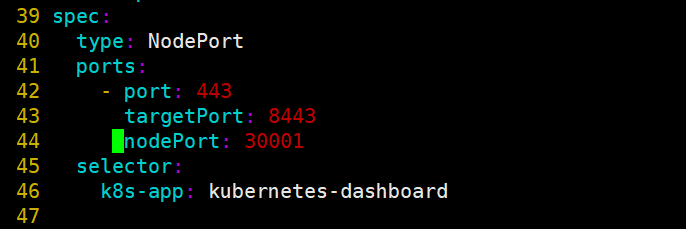

8. Install the Dashboard web UI (on the master)

Download the manifest:

wget http://120.78.77.38/file/kubernetes-dashboard.yaml

Edit the file:

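Edit kubernetes-dashboard.yaml and add the line nodePort: 30001 to the Service, as shown in the figure (the cursor marks the added line). Choose any port that does not conflict: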

nodePort: 30001
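A nodePort only takes effect on a Service of type NodePort (or LoadBalancer). By default it must also fall within 30000-32767, kube-apiserver's default --service-node-port-range. Below is a sketch of a range check, with the expected Service shape as a comment. `valid_nodeport` is hypothetical. The port/targetPort values 443/8443 come from the upstream recommended.yaml and are assumed to match the downloaded file.

```shell
# Expected shape of the Dashboard Service after the edit (assumed from the
# upstream recommended.yaml):
#   spec:
#     type: NodePort
#     ports:
#       - port: 443
#         targetPort: 8443
#         nodePort: 30001

# Hypothetical helper: succeed if $1 is a number in the default NodePort range.
valid_nodeport() {
  case $1 in
    ''|*[!0-9]*) return 1 ;;   # empty or not a plain number
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}
```

Usage: `valid_nodeport 30001 && echo ok`.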

Apply it:

[root@master ~]# kubectl apply -f kubernetes-dashboard.yaml

......

[root@master ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-6c554969c6-r5kmx 0/1 ContainerCreating 0 14s

kubernetes-dashboard-56c5f95c6b-j7xgb 0/1 ContainerCreating 0 14s

[root@master ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-6c554969c6-r5kmx 1/1 Running 0 52s

kubernetes-dashboard-56c5f95c6b-j7xgb 1/1 Running 0 52s

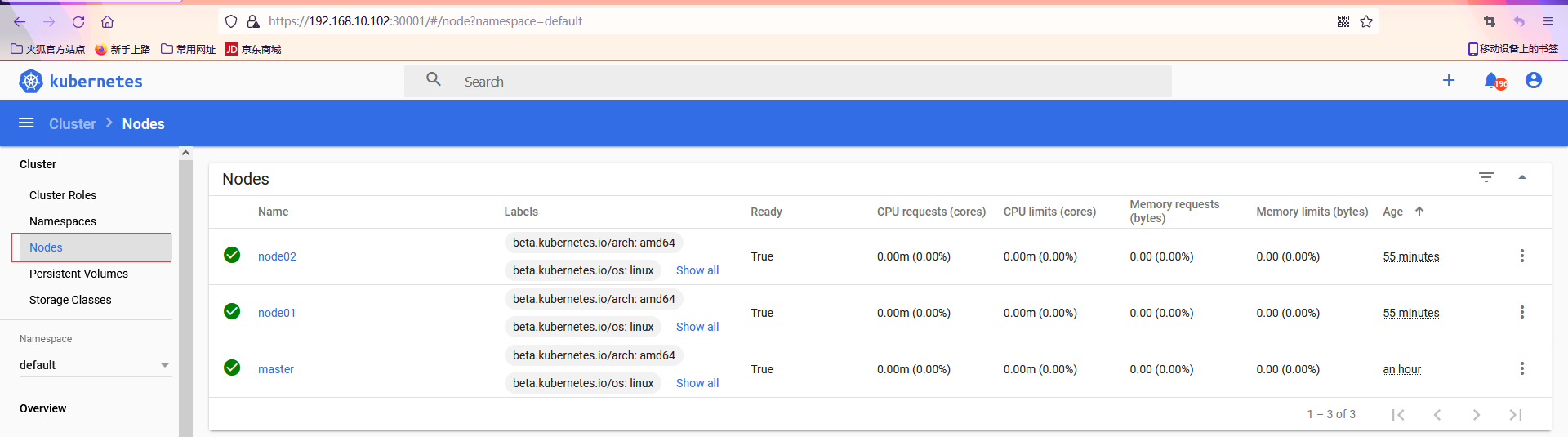

Check which node each Pod runs on, then open the web UI:

dashboard-metrics-scraper collects metrics on its node; kubernetes-dashboard serves the web management UI.

[root@master ~]# kubectl get pod -n kubernetes-dashboard -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dashboard-metrics-scraper-6c554969c6-r5kmx 1/1 Running 0 2m35s 10.244.1.3 node01 <none> <none>

kubernetes-dashboard-56c5f95c6b-j7xgb 1/1 Running 0 2m35s 10.244.2.2 node02 <none> <none>

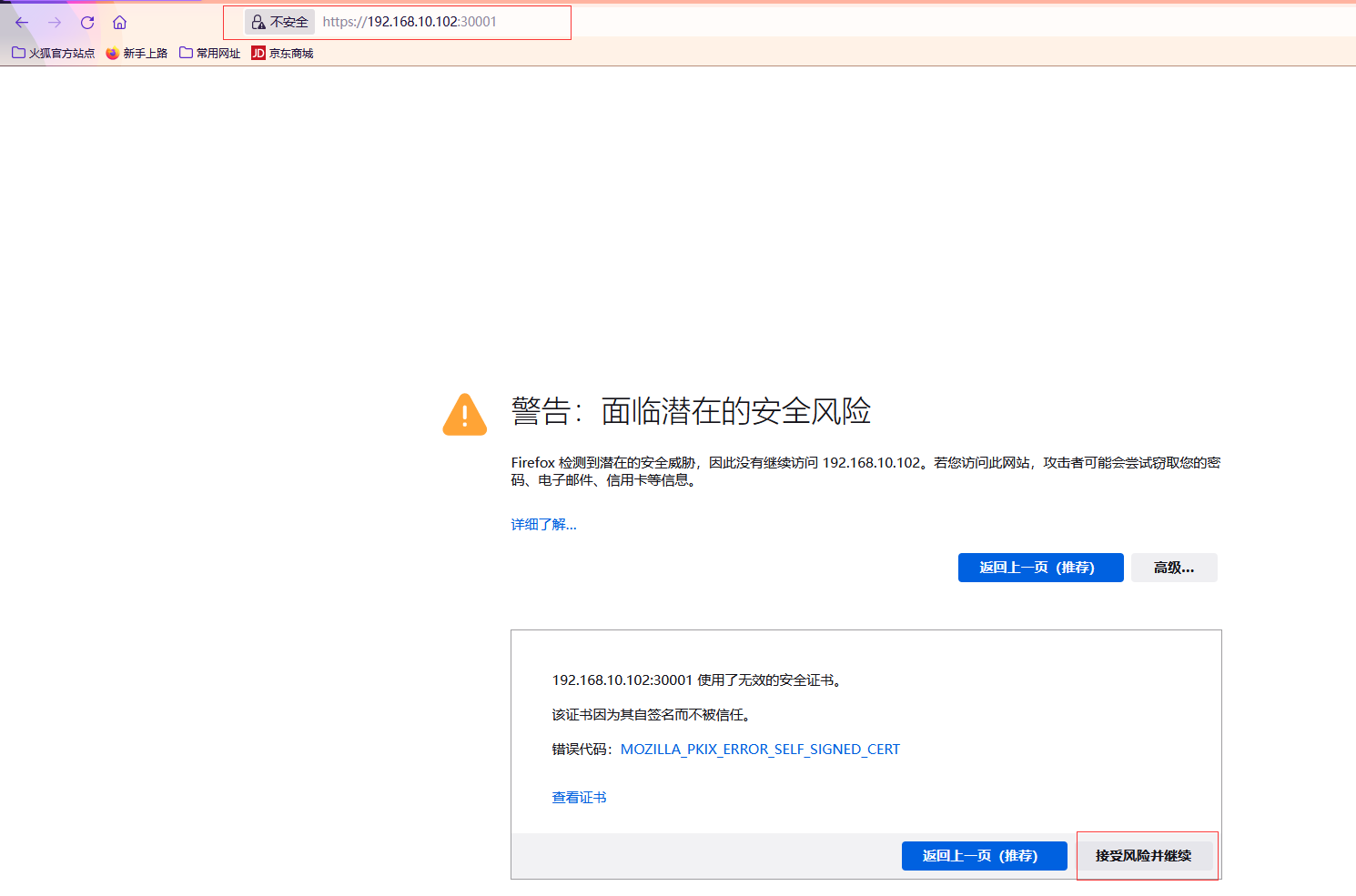

Open: https://192.168.10.102:30001/

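Firefox is recommended; other browsers may refuse to open the page because the certificate is not trusted. To create a trusted certificate, see: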

https://blog.csdn.net/shenyuanhaojie/article/details/121951326?spm=1001.2014.3001.5501

https://blog.csdn.net/weixin_40228200/article/details/124677472

openssl genrsa -out dashboard.key 2048

openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=<<<<<IP>>>>>'

openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt

kubectl delete secret kubernetes-dashboard-certs -n kubernetes-dashboard

kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard

kubectl delete pod kubernetes-dashboard-5b489d6456-s5nb5 -n kubernetes-dashboard    # replace with your dashboard Pod name from kubectl get pods -n kubernetes-dashboard


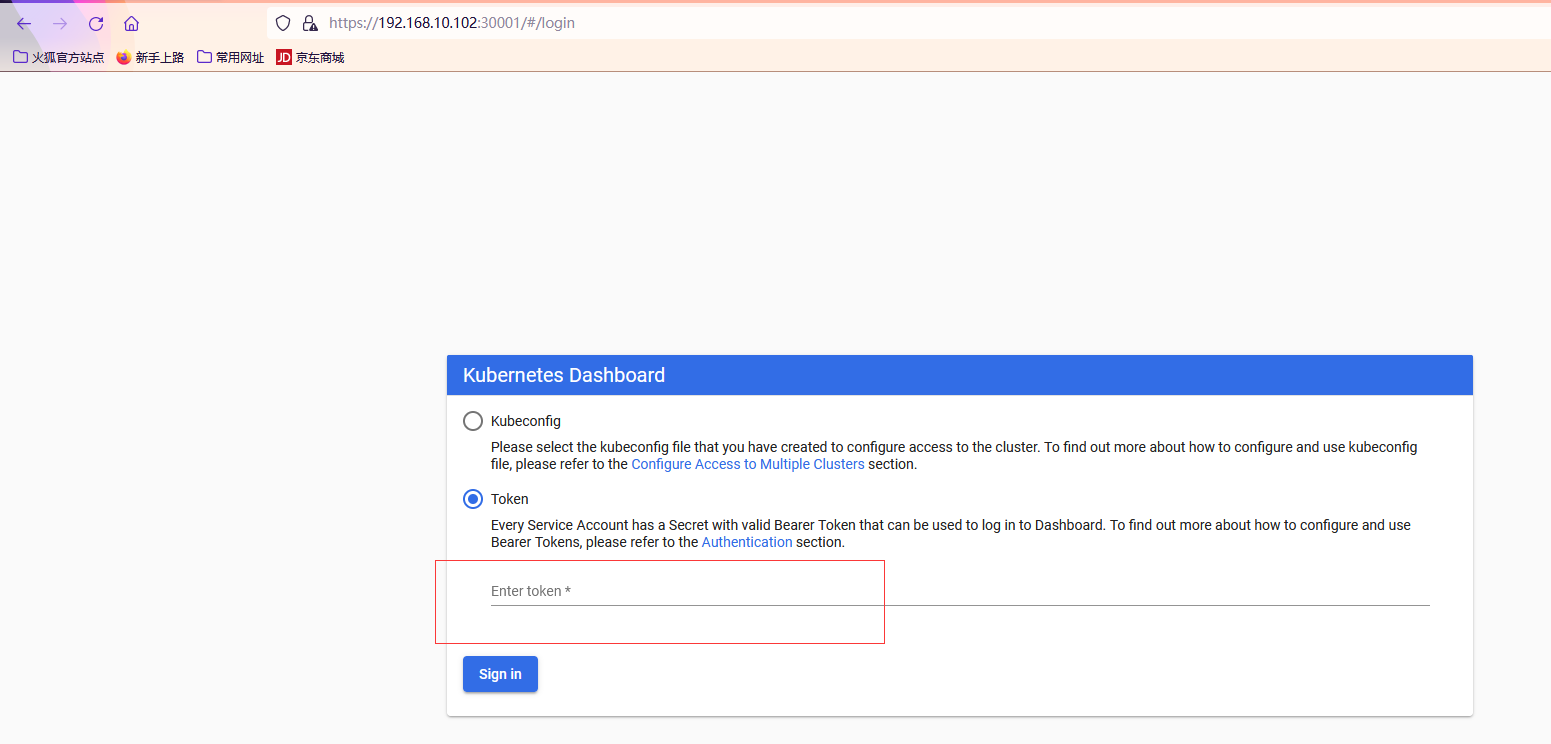

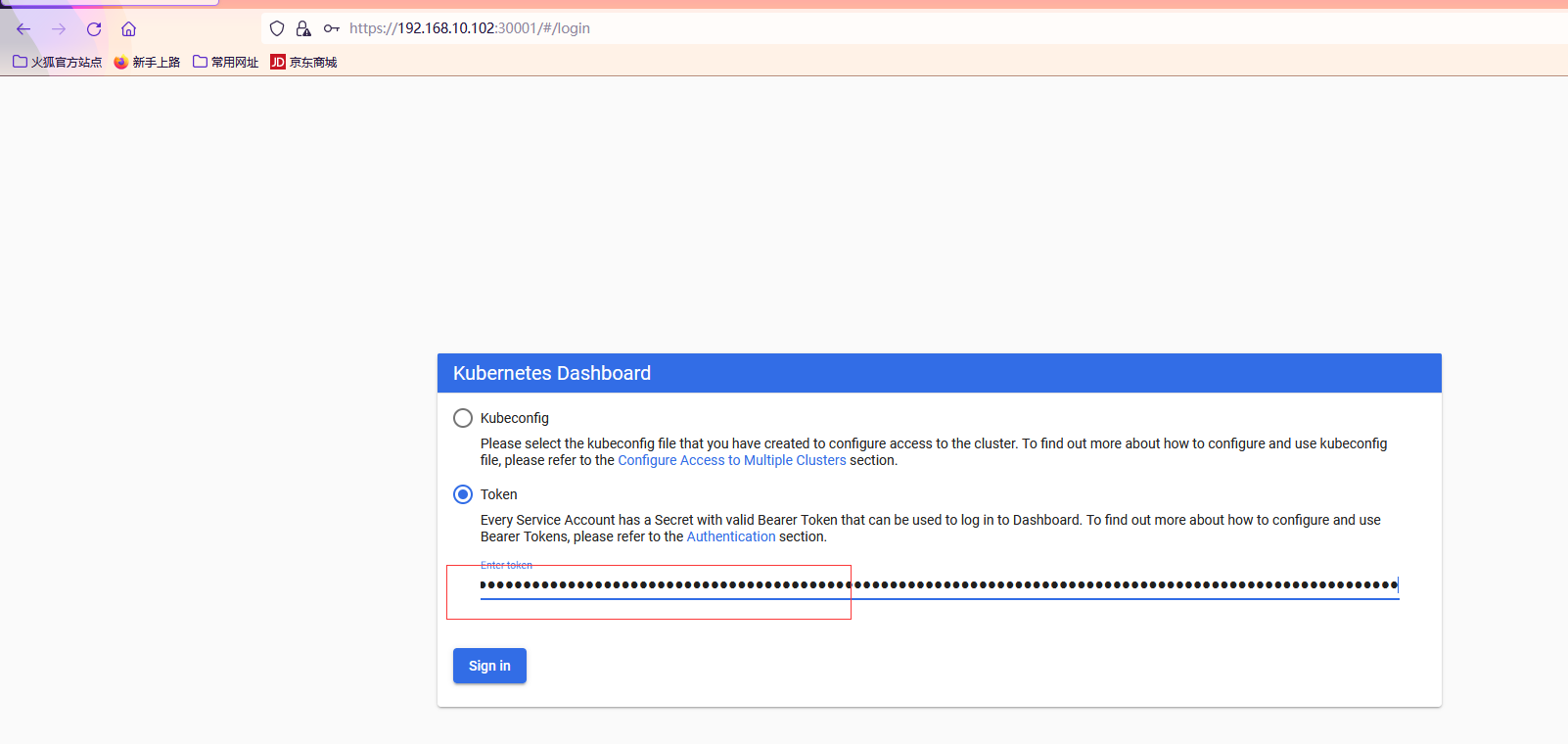

We log in with a token; generate one on the master:

[root@master ~]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

[root@master ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

[root@master ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

......

......

Pick one of the tokens.

High-privilege token:

kubectl get secret -n kube-system |grep admin|awk '{print $1}'

kubectl describe secret <<<secret name>>> -n kube-system|grep '^token'|awk '{print $2}'


Process summary

### Install Docker, kubeadm and kubelet

1. Add the Docker repo

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

2. Install Docker

yum install -y docker-ce

systemctl start docker

systemctl enable docker

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://jqqwsp8f.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

3. Enable on boot

systemctl enable docker && systemctl start docker

Check the version:

docker --version

4. Install kubeadm, kubelet and kubectl

# Configure the package repo

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Local name mapping in /etc/hosts

vim /etc/hosts

192.168.226.128 master

192.168.226.129 node1

192.168.226.130 node2

(save and quit with :wq)

# Pin the version

yum install -y kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0

rpm -qa | grep kube

# Enable on boot

systemctl enable kubelet

# Disable swap

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

###### Run on: master

mkdir k8s && cd k8s

# --apiserver-advertise-address is the master's local IP
kubeadm init \
--apiserver-advertise-address=192.168.226.128 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version v1.15.0 \
--service-cidr=10.1.0.0/16 \
--pod-network-cidr=10.244.0.0/16

# Use the kubectl tool

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

### Record the kubeadm join xxxxxxxxx command (used to add the nodes)

kubeadm join 192.168.226.128:6443 --token vvbp4o.91yfaklznloczfnb \
--discovery-token-ca-cert-hash sha256:ace39b8db9d1c40fe31b85ff2923eedbe16d6587491eca10488fa9c31041faea

# Install the Pod network plugin (flannel)

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml

# Check component status and node status (wait a moment)

kubectl get cs

kubectl get nodes

##### Run on: node

# Pull the flannel image with docker

# 0.11.0 is very old; do not use it with Kubernetes 1.22.x or later

docker pull lizhenliang/flannel:v0.11.0-amd64

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

# Join the node with kubeadm join (bootstrap tokens expire, after 24 hours by default; list them with kubeadm token list)

kubeadm join 192.168.226.128:6443 --token ld7odd.egdzg4z9h37dvumc \
--discovery-token-ca-cert-hash sha256:8e904682e6c1d670cf8b5524b3e03d1e5e5cb4156984f87414f093dc80e1fb23

# If something goes wrong, reset (on the node)

kubeadm reset

# Re-create the kubectl config (on the master, after initializing again)

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

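The old configuration above must be removed before kubeadm init can be run again (kubeadm reset does not delete $HOME/.kube/config).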

# Check node status on the master: all three should be Ready

kubectl get nodes

# Label the nodes

kubectl label node node1 node-role.kubernetes.io/node=node

kubectl label node node2 node-role.kubernetes.io/node=node

# Check Pod status with kubectl get pods -n kube-system; "1/1 Running" means healthy

kubectl get pods -n kube-system

#### Regenerate the token

# If the token has expired or been lost, first create a new one

kubeadm token create

# List the tokens

kubeadm token list | awk -F" " '{print $1}' |tail -n 1

# Then compute the hash of the CA public key

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

# Substitute the new token and sha256 hash into the join command:

kubeadm join 192.168.226.128:6443 --token ld7odd.egdzg4z9h37dvumc \
--discovery-token-ca-cert-hash sha256:8e904682e6c1d670cf8b5524b3e03d1e5e5cb4156984f87414f093dc80e1fb23
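The manual steps above can be wrapped in a small helper that validates the pieces before printing the join command. In this sketch, `kubeadm_join_cmd` is hypothetical. The token pattern `[a-z0-9]{6}.[a-z0-9]{16}` is kubeadm's bootstrap-token format, and the hash is the 64 hex characters printed by the openssl pipeline.

```shell
# Hypothetical helper: validate a bootstrap token and CA hash, then print the
# matching kubeadm join command.
# $1: API server endpoint, $2: token, $3: hex SHA-256 of the CA public key
kubeadm_join_cmd() {
  # Bootstrap tokens look like abcdef.0123456789abcdef
  printf '%s\n' "$2" | grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}' || { echo "bad token: $2" >&2; return 1; }
  # The openssl pipeline yields 64 lowercase hex characters
  printf '%s\n' "$3" | grep -Eqx '[0-9a-f]{64}' || { echo "bad hash: $3" >&2; return 1; }
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' "$1" "$2" "$3"
}
```

Usage: `kubeadm_join_cmd 192.168.226.128:6443 "$(kubeadm token list | awk 'NR > 1 {print $1}' | tail -n 1)" "$(openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //')"`.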
