PyTorch 1.0 Hands-On Tutorial 3: CIFAR-10 Classification with ResNet, Visualizing Training with visdom

A person's ideals and ambitions are usually proportional to their abilities.

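I have been using the PyTorch deep learning framework a lot lately and wanted to build something with it, but that requires understanding some of the framework's basics first. In this post I implement a ResNet in PyTorch to classify the CIFAR-10 dataset. CIFAR-10 contains 60,000 color images of 32×32 pixels (three RGB channels) in 10 classes, with 6,000 images per class; the classes include airplane, bird, cat, and dog.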

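Note that if you use ResNet18, ResNet34, and so on directly from torchvision.models, the final feature map ends up too small, because CIFAR-10 images are only 32×32: those networks downsample by a factor of 32, so a 32×32 input shrinks to 1×1. The ResNet structure therefore has to be designed separately. Other datasets such as ImageNet, whose images are typically fed in at 224×224, do not run into this problem.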
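The size problem can be checked with the usual output-size formula for convolution and pooling layers, out = floor((in + 2·padding − kernel) / stride) + 1. The sketch below is only an illustration: the helpers `conv_out` and `trace` are written for this post, and the (kernel, stride, padding) list for the stock ImageNet ResNet-18/34 is an assumption filled in from the standard architecture (7×7 stride-2 stem, 3×3 stride-2 max pool, then three stride-2 stages), not read from the torchvision source.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def trace(size, layers):
    """Apply a list of (kernel, stride, padding) layers and record each size."""
    sizes = [size]
    for kernel, stride, padding in layers:
        size = conv_out(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


# Assumed downsampling path of the stock ImageNet ResNet-18/34:
# 7x7 stem conv, 3x3 max pool, then the first conv of layer2, layer3, layer4.
imagenet_resnet = [(7, 2, 3), (3, 2, 1), (3, 2, 1), (3, 2, 1), (3, 2, 1)]

# Downsampling path of the CIFAR network in this post:
# 3x3 stem conv (stride 1), then the first conv of layer2 and of layer3.
cifar_resnet = [(3, 1, 1), (3, 2, 1), (3, 2, 1)]

print(trace(224, imagenet_resnet))  # [224, 112, 56, 28, 14, 7]
print(trace(32, imagenet_resnet))   # [32, 16, 8, 4, 2, 1]
print(trace(32, cifar_resnet))      # [32, 32, 16, 8]
```

With a 224×224 input the stock network ends at 7×7, while a 32×32 input is already down to 1×1, which is too small for a fixed 7×7 pooling window. The network in this post stops at 8×8, which is exactly what `nn.AvgPool2d(8)` expects.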

1. Environment:

- Python 3.6.8

- Windows 10

- GTX 1060

- CUDA 9.0 + cuDNN 7.4 + VS2017

- torch 1.0.1

- visdom 0.1.8.8

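2. The steps for the CIFAR-10 exercise are:

- Load and preprocess the CIFAR-10 dataset with torchvision

- Define the network

- Define the loss function and the optimizer

- Train the network: compute the loss, clear the gradients, backpropagate, and update the network parameters

- Test the network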
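The training step in the list above maps onto a handful of PyTorch calls. As a minimal sketch on made-up data (random tensors stand in for CIFAR-10 images and a single linear layer stands in for the ResNet; neither comes from the actual experiment), the loop looks roughly like this:

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# Stand-ins for the real pieces (assumptions for illustration only).
inputs = torch.randn(8, 3, 32, 32)    # a batch of 8 fake CIFAR-sized images
labels = torch.randint(0, 10, (8,))   # 8 fake class labels in [0, 10)
model = nn.Linear(3 * 32 * 32, 10)    # toy model instead of the ResNet

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

losses = []
for step in range(20):
    outputs = model(inputs.view(inputs.size(0), -1))  # forward pass
    loss = criterion(outputs, labels)                 # compute the loss
    optimizer.zero_grad()                             # clear old gradients
    loss.backward()                                   # backpropagate
    optimizer.step()                                  # update the parameters
    losses.append(loss.item())

print('first loss: %.3f, last loss: %.3f' % (losses[0], losses[-1]))
```

Because the same small batch is fitted repeatedly, the loss should fall from the first step to the last; on the real dataset each step sees a different batch, so the curve is noisier.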

3. Code

```python
import torch
import torch.nn as nn
import torch.optim as optim
import visdom
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

# Requires a running visdom server (start it with `python -m visdom.server`).
vis = visdom.Visdom()

batch_size = 100
lr = 0.001
momentum = 0.9
epochs = 100

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')


def conv3x3(in_channels, out_channels, stride=1):
    """3x3 convolution with padding 1, so stride 1 keeps the spatial size."""
    return nn.Conv2d(in_channels, out_channels, kernel_size=3,
                     stride=stride, padding=1, bias=False)


class ResidualBlock(nn.Module):
    # The shortcut attribute is spelled `shotcut` (sic) so that print(model)
    # matches the structure listed in section 5.
    def __init__(self, in_channels, out_channels, stride=1, shotcut=None):
        super(ResidualBlock, self).__init__()
        self.conv1 = conv3x3(in_channels, out_channels, stride)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)

        self.conv2 = conv3x3(out_channels, out_channels)
        self.bn2 = nn.BatchNorm2d(out_channels)
        self.shotcut = shotcut

    def forward(self, x):
        residual = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        # Project the input when the main path changes its shape.
        if self.shotcut is not None:
            residual = self.shotcut(x)
        out += residual
        out = self.relu(out)
        return out


class ResNet(nn.Module):
    def __init__(self, block, layer, num_classes=10):
        super(ResNet, self).__init__()
        self.in_channels = 16
        self.conv = conv3x3(3, 16)
        self.bn = nn.BatchNorm2d(16)
        self.relu = nn.ReLU(inplace=True)

        self.layer1 = self.make_layer(block, 16, layer[0])
        self.layer2 = self.make_layer(block, 32, layer[1], 2)
        self.layer3 = self.make_layer(block, 64, layer[2], 2)
        self.avg_pool = nn.AvgPool2d(8)  # 8x8 feature map -> 1x1
        self.fc = nn.Linear(64, num_classes)

    def make_layer(self, block, out_channels, blocks, stride=1):
        shotcut = None
        # A projection is needed whenever the resolution or width changes.
        if stride != 1 or self.in_channels != out_channels:
            shotcut = nn.Sequential(
                nn.Conv2d(self.in_channels, out_channels, kernel_size=3,
                          stride=stride, padding=1),
                nn.BatchNorm2d(out_channels))

        layers = [block(self.in_channels, out_channels, stride, shotcut)]
        for _ in range(1, blocks):
            layers.append(block(out_channels, out_channels))
        self.in_channels = out_channels
        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        x = self.relu(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.avg_pool(x)
        x = x.view(x.size(0), -1)
        x = self.fc(x)
        return x


# Standardize the data (these are the ImageNet channel means and stds).
data_tf = transforms.Compose(
    [transforms.ToTensor(),
     transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

# download=False expects the data in ./datacifar/; use True on the first run.
train_dataset = datasets.CIFAR10(root='./datacifar/', train=True,
                                 transform=data_tf, download=False)
test_dataset = datasets.CIFAR10(root='./datacifar/', train=False,
                                transform=data_tf, download=False)
print('Training set size:', len(train_dataset), tuple(train_dataset[0][0].shape))
print('Test set size:', len(test_dataset), tuple(test_dataset[0][0].shape))

# Build the data iterators
train_loader = DataLoader(dataset=train_dataset, batch_size=batch_size,
                          shuffle=True)
test_loader = DataLoader(dataset=test_dataset, batch_size=batch_size,
                         shuffle=False)
# print(train_loader.dataset) gives output such as the following (apparently
# captured from a run that used mean/std of 0.5 rather than the values above):
#   Dataset CIFAR10
#       Number of datapoints: 50000
#       Split: train
#       Root Location: ./datacifar/
#       Transforms (if any): Compose(
#           ToTensor()
#           Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
#       )
#       Target Transforms (if any): None

model = ResNet(ResidualBlock, [3, 3, 3], 10).to(device)

criterion = nn.CrossEntropyLoss()  # loss function
optimizer = optim.SGD(model.parameters(), lr=lr, momentum=momentum)
print(model)

if __name__ == '__main__':
    global_step = 0
    for epoch in range(epochs):
        model.train()  # eval() is set below, so switch back every epoch
        for i, (inputs, labels) in enumerate(train_loader):
            inputs, labels = inputs.to(device), labels.to(device)

            outputs = model(inputs)
            loss = criterion(outputs, labels)

            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            if i % 100 == 99:
                print('epoch:%d | batch: %d | loss:%.03f'
                      % (epoch + 1, i + 1, loss.item()))
                vis.line(X=[global_step], Y=[loss.item()], win='loss',
                         opts=dict(title='train loss'), update='append')
                global_step += 1

        # Evaluate on the test set
        model.eval()  # switch the model to evaluation mode
        correct = 0
        total = 0
        with torch.no_grad():  # no gradients are needed for evaluation
            for images, labels in test_loader:
                images, labels = images.to(device), labels.to(device)
                outputs = model(images)
                # predicted holds the index of the largest logit per image
                _, predicted = torch.max(outputs, 1)
                total += labels.size(0)
                correct += (predicted == labels).sum().item()
        print('epoch: %d | test acc: %.4f' % (epoch + 1, correct / total))
```
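The evaluation loop relies on `torch.max(output, 1)` returning the index of the largest score in each row, which is then compared with the labels. A small sketch with made-up logits (4 images, 3 classes; the numbers are hypothetical and not taken from the trained model) shows the mechanics:

```python
import torch

# Hypothetical logits for a batch of 4 images and 3 classes.
logits = torch.tensor([[2.0, 0.5, 0.1],
                       [0.2, 0.3, 1.5],
                       [0.9, 1.1, 0.4],
                       [1.2, 0.7, 0.3]])
labels = torch.tensor([0, 2, 0, 0])

# torch.max along dim 1 returns (max values, indices of the max values).
values, predicted = torch.max(logits, 1)

# Count matching predictions and divide by the batch size.
correct = (predicted == labels).sum().item()
accuracy = correct / labels.size(0)

print(predicted.tolist())  # [0, 2, 1, 0]
print(accuracy)            # 0.75
```

Only the third image is misclassified here (predicted class 1, label 0), so 3 of 4 predictions are correct.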

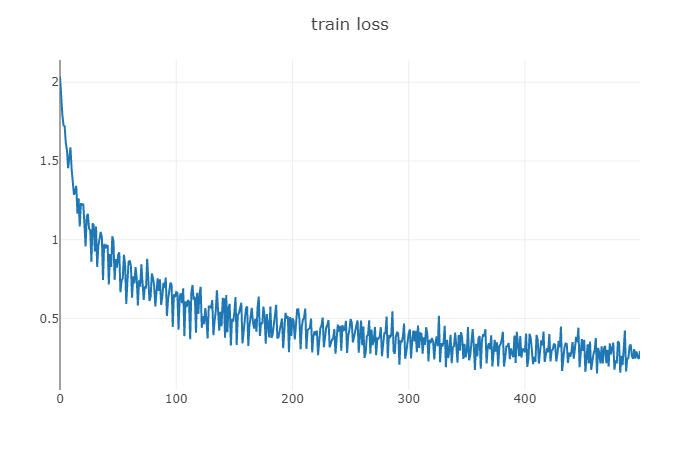

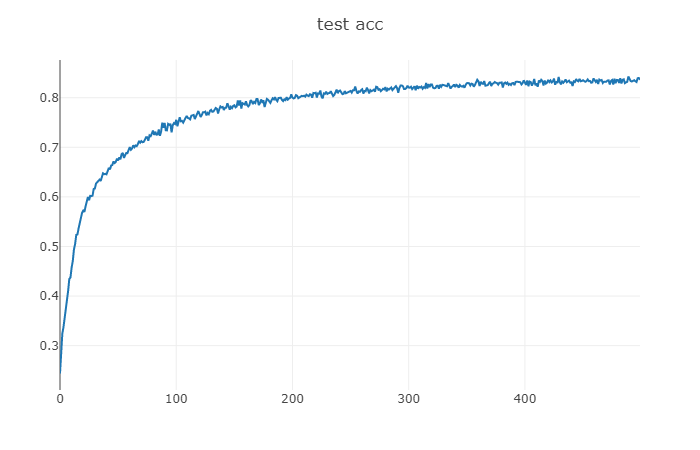

4. Results

Loss: epoch:100 | batch: 500 | loss:0.294

Test accuracy: epoch: 100 test acc: 0.8363

5. Network structure

```
ResNet(
  (conv): Conv2d(3, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
  (bn): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (relu): ReLU(inplace)
  (layer1): Sequential(
    (0): ResidualBlock(
      (conv1): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): ResidualBlock(
      (conv1): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (2): ResidualBlock(
      (conv1): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(16, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer2): Sequential(
    (0): ResidualBlock(
      (conv1): Conv2d(16, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (shotcut): Sequential(
        (0): Conv2d(16, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
        (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): ResidualBlock(
      (conv1): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (2): ResidualBlock(
      (conv1): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer3): Sequential(
    (0): ResidualBlock(
      (conv1): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (shotcut): Sequential(
        (0): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
        (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): ResidualBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (2): ResidualBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (avg_pool): AvgPool2d(kernel_size=8, stride=8, padding=0)
  (fc): Linear(in_features=64, out_features=10, bias=True)
)
```
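The printed structure is enough to estimate how small this network is. The sketch below tallies the learnable parameters layer by layer; the helper functions are written for this post (they are not part of PyTorch), and BatchNorm running statistics are left out because they are buffers rather than trained parameters.

```python
def conv_params(c_in, c_out, kernel=3, bias=False):
    """Weights (and optional biases) of a Conv2d layer."""
    return c_in * c_out * kernel * kernel + (c_out if bias else 0)


def bn_params(channels):
    """Learnable scale and shift of a BatchNorm2d layer (affine=True)."""
    return 2 * channels


def block_params(c_in, c_out, with_shortcut):
    """One ResidualBlock: two conv+bn pairs, plus the optional shortcut."""
    total = conv_params(c_in, c_out) + bn_params(c_out)
    total += conv_params(c_out, c_out) + bn_params(c_out)
    if with_shortcut:
        # The printed shortcut conv has no `bias=False`, so it carries a bias.
        total += conv_params(c_in, c_out, bias=True) + bn_params(c_out)
    return total


def stage_params(c_in, c_out, blocks=3):
    """One of layer1/layer2/layer3: only the first block changes channels."""
    first = block_params(c_in, c_out, with_shortcut=(c_in != c_out))
    rest = (blocks - 1) * block_params(c_out, c_out, with_shortcut=False)
    return first + rest


stem = conv_params(3, 16) + bn_params(16)
stages = [stage_params(16, 16), stage_params(16, 32), stage_params(32, 64)]
fc = 64 * 10 + 10  # Linear(in_features=64, out_features=10, bias=True)

total = stem + sum(stages) + fc
print(stem, stages, fc, total)  # 464 [14016, 55776, 222144] 650 293050
```

Under these assumptions the model has roughly 0.29 million learnable parameters, with about three quarters of them in `layer3`.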