Common Oozie scheduling demos

Preface

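There are many workflow schedulers to choose from, such as crontab, Azkaban, and Oozie:

- crontab: the scheduler built into Linux. It has no web UI, which makes jobs hard to monitor and manage.

- Azkaban: an open-source project configured with key/value pairs. It is easy to use and has a web UI.

- Oozie: an Apache project configured with XML files. It is somewhat harder to use, has a web UI for viewing jobs, can be operated through Hue, and is commonly used to schedule Hadoop-related jobs.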

Overview

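Apache Oozie is a workflow scheduling engine that runs on the Hadoop platform. It schedules and manages Hadoop jobs such as shell scripts, MapReduce, Pig, Hive, Sqoop, and Spark. A workflow is made up of individual actions (think of each one as a MapReduce job or a shell command). How does Oozie decide when each action runs and in what order? It arranges the actions as a directed acyclic graph (DAG), the structure familiar from data-structures courses. The whole workflow is defined in hPDL (Hadoop Process Definition Language), an XML-based language. Take MapReduce jobs as an example: each job becomes one action node in that graph.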
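To make the DAG idea concrete, here is a minimal Python sketch (Python is only our illustration language; Oozie does not ship this code). It reads the "to" attributes of start, ok, error, case, default and join elements plus the "start" attribute of fork paths, then orders the nodes with Kahn's topological sort and reports a cycle if there is one. The workflow text is a hypothetical, trimmed example whose actions omit their shell bodies.

```python
import xml.etree.ElementTree as ET
from collections import deque

# Hypothetical, trimmed workflow: real actions would also contain a <shell> element.
WORKFLOW = """
<workflow-app xmlns="uri:oozie:workflow:0.4" name="demo">
  <start to="a"/>
  <action name="a"><ok to="b"/><error to="fail"/></action>
  <action name="b"><ok to="end"/><error to="fail"/></action>
  <kill name="fail"><message>failed</message></kill>
  <end name="end"/>
</workflow-app>
"""


def local(tag):
    """Drop the '{namespace}' prefix that ElementTree puts on tag names."""
    return tag.split("}")[-1]


def successors(xml_text):
    """Map each node name to the node names it can transition to."""
    graph = {}
    for node in ET.fromstring(xml_text):
        name = "start" if local(node.tag) == "start" else node.get("name")
        if name is None:  # sections such as <global> are not nodes
            continue
        targets = graph.setdefault(name, [])
        for el in node.iter():  # iter() includes the node itself
            if el.get("to"):  # start, ok, error, case, default, join
                targets.append(el.get("to"))
            elif local(el.tag) == "path" and el.get("start"):  # fork branches
                targets.append(el.get("start"))
    return graph


def topological_order(graph):
    """Kahn's algorithm; raises ValueError if the transitions contain a cycle."""
    nodes = set(graph) | {t for ts in graph.values() for t in ts}
    indegree = dict.fromkeys(nodes, 0)
    for ts in graph.values():
        for t in ts:
            indegree[t] += 1
    ready = deque(sorted(n for n in nodes if indegree[n] == 0))
    order = []
    while ready:
        n = ready.popleft()
        order.append(n)
        for t in graph.get(n, []):
            indegree[t] -= 1
            if indegree[t] == 0:
                ready.append(t)
    if len(order) != len(nodes):
        raise ValueError("workflow transitions contain a cycle")
    return order


print(topological_order(successors(WORKFLOW)))
```

Oozie itself requires workflow definitions to be a strict DAG and fails the deployment of a definition containing a cycle, so a transition that loops back to an earlier node is rejected rather than run in a loop.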

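Oozie consists of three main parts: Workflow, Coordinator, and Bundle.

- Workflow: the units of work we need to run (actions) plus the control nodes that connect them, executed as a pipeline.

- Coordinator: think of it as the coordinator and timer for workflows. It starts workflow jobs on a schedule or when their input data becomes available.

- Bundle: a bundle of coordinators, so that several coordinators can be managed together as one unit.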

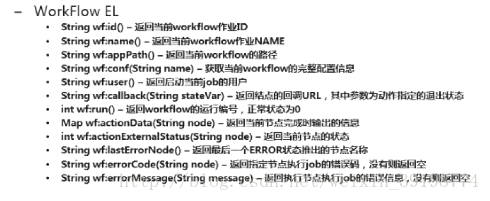

An Oozie job is submitted starting from job.properties. The file holds key/value pairs, and the Workflow, Coordinator, and Bundle definitions read those values through EL expressions such as ${nameNode}.
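How a value such as ${nameNode} inside another property gets filled in can be sketched in a few lines of Python. This is not Oozie's EL evaluator: it is a simplified stand-in that only expands plain ${name} references between job.properties entries and leaves EL functions such as ${wf:id()} untouched.

```python
import re

# ${name} where name looks like a property key; EL functions such as ${wf:id()} do not match.
_VAR = re.compile(r"\$\{([A-Za-z_][\w.]*)\}")


def resolve(props):
    """Expand ${key} references between job.properties entries."""
    resolved = {}

    def value_of(key, seen):
        if key in resolved:
            return resolved[key]
        if key in seen:
            raise ValueError(f"circular reference involving {key!r}")
        raw = props[key]  # KeyError for an undefined property
        value = _VAR.sub(lambda m: value_of(m.group(1), seen | {key}), raw)
        resolved[key] = value
        return value

    for key in props:
        value_of(key, frozenset())
    return resolved


props = {
    "nameNode": "hdfs://node1:8020",
    "oozie.wf.application.path": "${nameNode}/user/oozie/examples/apps/shell/demo01",
}
print(resolve(props)["oozie.wf.application.path"])
```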

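Nodes:

Oozie nodes come in two kinds: flow control nodes and action nodes. A node is simply a set of XML tags. The two kinds are:

1. Flow control nodes
   - `<start />`: defines where the workflow starts
   - `<end />`: defines where the workflow ends
   - `<decision />`: implements switch-style branching, used together with `<switch>`, `<case />` and `<default />`
   - `<kill />`: the node a failed step jumps to; it ends the workflow as KILLED, optionally with an error message
   - `<fork />`: splits execution into paths that run concurrently
   - `<join />`: waits until all paths started by the matching fork have finished (used together with fork)

2. Action nodes (`<action>`)
   - do the actual work, for example shell, MapReduce, Hive, Sqoop, or Spark actions, or `<sub-workflow>`, which runs a child workflow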
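The fork/join pair can be pictured with a local Python analogy: start every branch at once, then wait until all of them have finished. Oozie itself launches forked actions on the cluster; the thread pool and the branch names below are only illustrative.

```python
from concurrent.futures import ThreadPoolExecutor


def fork_join(branches):
    """Start every branch concurrently (fork) and wait for all of them (join).

    branches maps a branch name to a zero-argument callable. If a branch raises,
    the exception propagates when its result is collected, roughly like a failed
    action taking its error transition instead of reaching the join.
    """
    with ThreadPoolExecutor(max_workers=max(1, len(branches))) as pool:
        futures = {name: pool.submit(fn) for name, fn in branches.items()}
        return {name: future.result() for name, future in futures.items()}


print(fork_join({"import-a": lambda: "a done", "import-b": lambda: "b done"}))
```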

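Installing Oozie

Our cluster is a CDH distribution that already had Oozie installed, so installation is not covered here. If you need to install Oozie, see these guides: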

http://blog.51cto.com/tianxingzhe/1649707

https://blog.csdn.net/fine_weather/article/details/53666149

https://www.cnblogs.com/ilinuxer/p/6804742.html

https://blog.csdn.net/weixin_39198774/article/details/79412726

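Common commands

We submit jobs with the Oozie client (jobs can also be submitted through Hue). Some commonly used commands follow. node6 is our Oozie server and 11000 is its port; every job command starts with oozie job followed by the Oozie server address (-oozie http://node6:11000/oozie).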

```shell
# Submit a job. -config points to the job's job.properties file. -submit uploads the
# job to the server and returns a job ID, but does not run the job
oozie job -oozie http://node6:11000/oozie/ -config /home/xccm/oozie/examples/apps/shell/job.properties -submit

# Start a submitted job. 0000000-180927111227906-oozie-oozi-W is the job ID returned
# by -submit; every job has a unique ID
oozie job -oozie http://node6:11000/oozie/ -start 0000000-180927111227906-oozie-oozi-W

# Run a job: -run = -submit + -start
oozie job -oozie http://node6:11000/oozie/ -config /home/xccm/oozie/examples/apps/shell/job.properties -run

# Show job information, including the status of every action
oozie job -oozie http://node6:11000/oozie -info 0000000-180927111227906-oozie-oozi-W

# Show the job log, including the output and log messages of each action
oozie job -oozie http://node6:11000/oozie -log 0000003-180927111227906-oozie-oozi-W

# Show the status of one action (jobId@actionName), here the action named process-select
oozie job -oozie http://node6:11000/oozie -info 0000007-180927111227906-oozie-oozi-W@process-select

# Check workflow.xml for syntax errors
oozie validate -oozie http://node6:11000/oozie workflow.xml
```
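Job IDs like the ones above can also be taken apart programmatically. This Python sketch (function names are ours) splits jobId@actionName, classifies the job by its trailing -W (workflow), -C (coordinator) or -B (bundle), and builds an info URL assuming Oozie's Web Services API path /v1/job/<id>?show=info; check the Web Services API page for your Oozie version before relying on that path.

```python
from urllib.parse import quote

JOB_TYPES = {"W": "workflow", "C": "coordinator", "B": "bundle"}


def parse_job_id(job_id):
    """Split 'jobId@actionName' and classify the job by its -W/-C/-B suffix."""
    base, _, action = job_id.partition("@")
    kind = JOB_TYPES.get(base.rsplit("-", 1)[-1])
    if kind is None:
        raise ValueError(f"unrecognised Oozie job id: {job_id!r}")
    return base, kind, action or None


def info_url(oozie_url, job_id):
    """Info URL, assuming the Web Services API path /v1/job/<id>?show=info."""
    return f"{oozie_url.rstrip('/')}/v1/job/{quote(job_id, safe='@')}?show=info"


print(parse_job_id("0000007-180927111227906-oozie-oozi-W@process-select"))
print(info_url("http://node6:11000/oozie/", "0000000-180927111227906-oozie-oozi-W"))
```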

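Writing the demos

Note: Oozie actions run on YARN, so we must configure the YARN ResourceManager address (jobTracker). The workflow and coordinator definitions are stored on HDFS, so we must also set the HDFS address (nameNode). Jobs can be placed in different queues for scheduling; here we use the default queue. The following properties set these values: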

```properties
nameNode=hdfs://node1:8020
jobTracker=node1:8032
queueName=default
examplesRoot=examples
```
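Before submitting, you could check that a job.properties file actually defines these cluster settings. This Python sketch (names are ours) parses only the simple key=value subset used in this article and lists the missing keys.

```python
REQUIRED_KEYS = ("nameNode", "jobTracker", "queueName")


def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and '#'/'!' comments.

    Only the subset used in this article is handled: no ':' separators, escapes
    or line continuations, and whitespace around keys and values is trimmed.
    """
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a key=value line: {line!r}")
        props[key.strip()] = value.strip()
    return props


def missing_cluster_keys(props):
    """Names of the required cluster settings that are absent."""
    return [key for key in REQUIRED_KEYS if key not in props]


sample = """
#namenode address
nameNode=hdfs://node1:8020
#resource manager address
jobTracker=node1:8032
"""
props = parse_properties(sample)
print(props["jobTracker"], missing_cluster_keys(props))
```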

demo01:

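This job runs hdfs commands to create the /tmp/test/input directory on HDFS and then an empty _SUCCESS file inside it (the usual marker that a task has finished), so a successful run leaves /tmp/test/input/_SUCCESS on HDFS. If creating either the directory or the file fails, the action's error transition sends the workflow to the kill node named fail. After that, the process-select decision node checks these marker files and creates /tmp/test/output and /tmp/test/output/_SUCCESS in the same way. oozie.wf.application.path gives the HDFS directory of the workflow; by default Oozie looks for a file named workflow.xml there. To use a different file name, include the file name at the end of the path: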

job.properties

```properties
#namenode address
nameNode=hdfs://node1:8020
#resource manager address
jobTracker=node1:8032
#job queue name
queueName=default
examplesRoot=examples
#workflow job path
oozie.wf.application.path=${nameNode}/user/oozie/examples/apps/shell/demo01
```

workflow.xml

```xml
<workflow-app xmlns="uri:oozie:workflow:0.4" name="shell-workflow">
    <start to="shell-run-input"/>
    <action name="shell-run-input">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>hdfs</exec>
            <argument>dfs</argument>
            <argument>-mkdir</argument>
            <argument>-p</argument>
            <argument>/tmp/test/input</argument>
            <capture-output/>
        </shell>
        <ok to="shell-input-touchz"/>
        <error to="fail"/>
    </action>
    <action name="shell-input-touchz">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>hdfs</exec>
            <argument>dfs</argument>
            <argument>-touchz</argument>
            <argument>/tmp/test/input/_SUCCESS</argument>
            <capture-output/>
        </shell>
        <ok to="process-select"/>
        <error to="fail"/>
    </action>
    <decision name="process-select">
        <switch>
            <!--no input folder-->
            <!--
            <case to="shell-run-input">
                ${fs:exists(concat(concat(nameNode,"/tmp/test/input"),"/_SUCCESS"))=="false"}
            </case>
            -->
            <case to="exception-input">
                ${fs:exists(concat(concat(nameNode,"/tmp/test/input"),"/_SUCCESS"))=="false"}
            </case>
            <!--not run application-->
            <case to="shell-run-output">
                ${fs:exists(concat(nameNode,"/tmp/test/output"))=="false"}
            </case>
            <!--run application fail-->
            <case to="exception-output">
                ${fs:exists(concat(concat(nameNode,"/tmp/test/output"),"/_FAIL"))}
            </case>
            <!--application is running-->
            <!--
            <case to="shell-wait-output">
                ${fs:exists(concat(concat(nameNode,"/tmp/test/output"),"/_SUCCESS"))=="false"}
            </case>
            -->
            <case to="end">
                ${fs:exists(concat(concat(nameNode,"/tmp/test/output"),"/_SUCCESS"))}
            </case>
            <!--undefined operation-->
            <default to="exception-output"/>
        </switch>
    </decision>
    <action name="shell-run-output">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>hdfs</exec>
            <argument>dfs</argument>
            <argument>-mkdir</argument>
            <argument>-p</argument>
            <argument>/tmp/test/output</argument>
            <capture-output/>
        </shell>
        <ok to="shell-output-touchz"/>
        <error to="fail"/>
    </action>
    <action name="shell-output-touchz">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>hdfs</exec>
            <argument>dfs</argument>
            <argument>-touchz</argument>
            <argument>/tmp/test/output/_SUCCESS</argument>
            <capture-output/>
        </shell>
        <ok to="end"/>
        <error to="fail"/>
    </action>
    <kill name="exception-input">
        <message>exception input</message>
    </kill>
    <kill name="exception-output">
        <message>exception output</message>
    </kill>
    <kill name="fail">
        <message>fail , now kill</message>
    </kill>
    <end name="end"/>
</workflow-app>
```
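The process-select decision can be traced with a small Python sketch. A set of path strings stands in for HDFS and for fs:exists() (an assumption for illustration only), and the cases are checked in document order with the first match winning, as a switch does. It checks for /tmp/test/output/_FAIL, the marker the exception-output case is meant to test.

```python
NAME_NODE = "hdfs://node1:8020"


def process_select(existing):
    """Pick the next node the way the process-select decision does.

    existing is a set of full HDFS paths standing in for fs:exists(); the
    cases are checked in document order and the first true one wins.
    """
    def exists(path):
        return NAME_NODE + path in existing

    cases = [
        ("exception-input", not exists("/tmp/test/input/_SUCCESS")),
        ("shell-run-output", not exists("/tmp/test/output")),
        ("exception-output", exists("/tmp/test/output/_FAIL")),
        ("end", exists("/tmp/test/output/_SUCCESS")),
    ]
    for target, matched in cases:
        if matched:
            return target
    return "exception-output"  # <default to="exception-output"/>


print(process_select({NAME_NODE + "/tmp/test/input/_SUCCESS"}))
```

A first run, right after shell-input-touchz has created the input marker, therefore continues to shell-run-output.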

Job DAG:

demo02

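In this demo Oozie calls an external shell script. The script is deliberately simple and only creates a file on HDFS; in practice it can do anything, but a file on HDFS is easy to check. I put the script and workflow.xml in the same HDFS directory and name the script in workflow.xml with a file element. The script creates a directory under /tmp named after the current timestamp, writes the timestamp into a file there, and uploads that file to <savePath>/<workflow job ID>/ on HDFS.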

job.properties

```properties
###########################################################################
# call extends shell file
###########################################################################
#namenode address
nameNode=hdfs://node1:8020
#resource manager address
jobTracker=node1:8032
#job queue name
queueName=default
examplesRoot=examples
#workflow job path
oozie.wf.application.path=${nameNode}/user/oozie/examples/apps/shell/demo02
#exec shell
EXEC=create_conf.sh
#save configuration path
savePath=/user/oozie/examples/apps/conf
#save configuration file name
filename=oozie-test.conf
```

workflow.xml

```xml
<workflow-app xmlns="uri:oozie:workflow:0.4" name="shell-extends-workflow">
    <start to="shell-node"/>
    <action name="shell-node">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>${EXEC}</exec>
            <argument>${savePath}</argument>
            <argument>${wf:id()}</argument>
            <argument>${filename}</argument>
            <file>${EXEC}#${EXEC}</file>
        </shell>
        <ok to="end"/>
        <error to="fail"/>
    </action>
    <kill name="fail">
        <message>Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>
    <end name="end"/>
</workflow-app>
```

create_conf.sh

```bash
#!/bin/bash
# $1 = HDFS save path, $2 = workflow job ID, $3 = file name
dir_name="$1/$2"
hdfs dfs -mkdir -p "$dir_name"
# current Unix timestamp in seconds
timeStamp=$(date +%s)
mkdir -p "/tmp/$timeStamp"
echo "now timestamp : $timeStamp" >> "/tmp/$timeStamp/$3"
hdfs dfs -put -f "/tmp/$timeStamp/$3" "$dir_name/$3"
```

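Here I use Oozie's built-in EL functions: wf:id() returns the current workflow job ID, and wf:errorMessage(wf:lastErrorNode()) in the kill node returns the error message of the last failed node. For more EL functions, see the official Oozie documentation.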
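To see what the shell action receives, this Python sketch (helper names are ours) splits a file value such as ${EXEC}#${EXEC} into the script path and the symlink name created in the action's working directory, and builds the HDFS path create_conf.sh writes to from its three arguments. The resolution rules in the docstring reflect our reading of the Oozie documentation and are assumptions; the workflow ID is made up.

```python
import posixpath


def parse_file_element(value, app_path):
    """Split a <file> value 'path#link' into (path, symlink name).

    Assumptions: a relative path is resolved against the workflow application
    directory, and without '#' the link name is the file's base name.
    """
    path, _, link = value.partition("#")
    if "://" not in path and not path.startswith("/"):
        path = posixpath.join(app_path, path)
    return path, link or posixpath.basename(path)


def conf_target(save_path, wf_id, filename):
    """HDFS path create_conf.sh writes to: $1/$2/$3."""
    return posixpath.join(save_path, wf_id, filename)


app = "hdfs://node1:8020/user/oozie/examples/apps/shell/demo02"
print(parse_file_element("create_conf.sh#create_conf.sh", app))
print(conf_target("/user/oozie/examples/apps/conf",
                  "0000001-180927111227906-oozie-oozi-W", "oozie-test.conf"))
```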

Job DAG:

demo03

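A few points need attention here. Times are given with a GMT+0800 offset, because by default Oozie processes times in UTC (GMT), which is 8 hours behind Beijing time. The coordinator schema version is 0.4 and the workflow schema version is 0.5. In CDH we add two settings (Cloudera Manager -> Oozie -> Configuration -> Category -> Advanced -> Oozie Server Advanced Configuration Snippet (Safety Valve) for oozie-site.xml): oozie.processing.timezone=GMT+0800 and oozie.service.coord.check.maximum.frequency=false. The second setting is needed because this demo runs every minute, and while the check is enabled Oozie rejects coordinators whose frequency is faster than 5 minutes. A schedule can run every N minutes, hours, days, or months, or follow a custom cron-like expression. If the times shown in the web UI are still 8 hours off, set the time zone there to CST (Asia/Shanghai); see https://blog.csdn.net/abysscarry/article/details/82156686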
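The start and end values in the job.properties below carry an explicit +0800 offset. This Python sketch (the helper name is ours) converts such a value to UTC, the zone Oozie processes times in by default, which makes the 8-hour gap visible.

```python
from datetime import datetime, timezone


def to_utc(oozie_time):
    """Convert an Oozie-style 'YYYY-MM-DDTHH:MM+0800' timestamp to UTC ('...Z')."""
    local = datetime.strptime(oozie_time, "%Y-%m-%dT%H:%M%z")
    return local.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%MZ")


print(to_utc("2015-01-01T00:10+0800"))  # 2014-12-31T16:10Z
```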

job.properties

```properties
# Cluster parameters
# NameNode address
nameNode=hdfs://node1:8020
# ResourceManager address
jobTracker=node1:8032
# Oozie queue; usually left unchanged
queueName=default

# Oozie
# HDFS path of coordinator.xml
oozie.coord.application.path=${nameNode}/user/oozie/examples/apps/shell/demo03
# HDFS path of workflow.xml
workflowAppUri=${nameNode}/user/oozie/examples/apps/shell/demo03
# workflow name
workflowName=workflow
# start time of the schedule
start=2015-01-01T00:10+0800
# end time of the schedule
end=2020-12-31T23:10+0800
```

coordinator.xml

```xml
<coordinator-app name="demo03-coordinator" frequency="${coord:minutes(1)}"
                 start="${start}" end="${end}" timezone="GMT+0800"
                 xmlns="uri:oozie:coordinator:0.4">
    <action>
        <workflow>
            <app-path>${workflowAppUri}</app-path>
            <configuration>
                <property>
                    <name>nameNode</name>
                    <value>${nameNode}</value>
                </property>
                <property>
                    <name>jobTracker</name>
                    <value>${jobTracker}</value>
                </property>
                <property>
                    <name>queueName</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
        </workflow>
    </action>
</coordinator-app>
```
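With frequency ${coord:minutes(1)} the coordinator materializes one workflow run per minute. This Python sketch (names are ours) lists the first few nominal times, assuming the window is [start, end) and that times are fixed offsets from start, and flags frequencies that Oozie's oozie.service.coord.check.maximum.frequency check rejects (faster than 5 minutes) while it is enabled.

```python
from datetime import datetime, timedelta


def nominal_times(start, end, every_minutes, limit):
    """First `limit` nominal times of a fixed-minute coordinator in [start, end)."""
    times, current = [], start
    step = timedelta(minutes=every_minutes)
    while current < end and len(times) < limit:
        times.append(current)
        current += step
    return times


def frequency_check_blocks(every_minutes, check_enabled=True):
    """True when the maximum-frequency check would reject the coordinator."""
    return check_enabled and every_minutes < 5


start = datetime(2015, 1, 1, 0, 10)  # start=2015-01-01T00:10+0800, offset dropped
end = datetime(2020, 12, 31, 23, 10)
print([t.strftime("%H:%M") for t in nominal_times(start, end, 1, 3)])
print(frequency_check_blocks(1), frequency_check_blocks(1, check_enabled=False))
```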

workflow.xml

```xml
<workflow-app xmlns="uri:oozie:workflow:0.5" name="demo03-workflow">
    <start to="shell-run"/>
    <action name="shell-run">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>hdfs</exec>
            <argument>dfs</argument>
            <argument>-touchz</argument>
            <argument>/tmp/test.tmp</argument>
        </shell>
        <ok to="end"/>
        <error to="fail"/>
    </action>
    <kill name="fail">
        <message>Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>
    <end name="end"/>
</workflow-app>
```

Job DAG:

demo04

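A scheduled multi-step job: a monthly coordinator runs a workflow of three shell actions (run-cost, create-conf, run-sale) in sequence.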

job.properties

```properties
###########################################################################
# call extends shell file
###########################################################################
#namenode address
nameNode=hdfs://node1:8020
#resource manager address
jobTracker=node1:8032
#job queue name
queueName=default
examplesRoot=examples
#exec shell
EXEC_COST=runCost.sh
EXEC_CREATE_CONF=createConf.sh
EXEC_SALE=runSale.sh
#local path where createConf.sh saves the configuration file
savePath=/tmp
#city code
cityCode=510100
#HDFS path of coordinator.xml
oozie.coord.application.path=${nameNode}/user/oozie/apps/pointvalue/shell/510100
#HDFS path of workflow.xml
workflowAppUri=${nameNode}/user/oozie/apps/pointvalue/shell/510100
#workflow name
workflowName=workflow
#start time of the schedule
start=2015-01-01T00:10+0800
#end time of the schedule
end=2020-12-31T23:10+0800
```

coordinator.xml

```xml
<coordinator-app name="pointvalue-510100-coordinator" frequency="${coord:months(1)}"
                 start="${start}" end="${end}" timezone="GMT+0800"
                 xmlns="uri:oozie:coordinator:0.4">
    <action>
        <workflow>
            <app-path>${workflowAppUri}</app-path>
            <configuration>
                <property>
                    <name>nameNode</name>
                    <value>${nameNode}</value>
                </property>
                <property>
                    <name>jobTracker</name>
                    <value>${jobTracker}</value>
                </property>
                <property>
                    <name>queueName</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
        </workflow>
    </action>
</coordinator-app>
```
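This coordinator fires once per calendar month. This Python sketch (helper names are ours; how Oozie clamps days at month ends is assumed rather than confirmed) lists the first nominal times from start=2015-01-01T00:10+0800. Each run's scripts then process the previous month.

```python
import calendar
from datetime import datetime


def add_months(moment, n):
    """Shift a datetime by n calendar months, clamping the day to the month length."""
    index = moment.month - 1 + n
    year, month = moment.year + index // 12, index % 12 + 1
    day = min(moment.day, calendar.monthrange(year, month)[1])
    return moment.replace(year=year, month=month, day=day)


def monthly_runs(start, count):
    """First `count` nominal times of a monthly schedule, each offset from start."""
    return [add_months(start, i) for i in range(count)]


for run in monthly_runs(datetime(2015, 1, 1, 0, 10), 3):
    print(run.isoformat())
```

Computing every run as an offset from start, instead of adding one month to the previous run, keeps a clamped day (for example the 31st becoming the 28th) from drifting into later months.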

workflow.xml

```xml
<workflow-app xmlns="uri:oozie:workflow:0.5" name="pointvalue-510100-workflow">
    <start to="run-cost"/>
    <action name="run-cost">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>${EXEC_COST}</exec>
            <argument>${nameNode}</argument>
            <argument>${cityCode}</argument>
            <argument>${wf:id()}</argument>
            <file>${EXEC_COST}#${EXEC_COST}</file>
        </shell>
        <ok to="create-conf"/>
        <error to="fail"/>
    </action>
    <action name="create-conf">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>${EXEC_CREATE_CONF}</exec>
            <argument>${nameNode}</argument>
            <argument>${savePath}</argument>
            <argument>${cityCode}</argument>
            <argument>${wf:id()}</argument>
            <file>${EXEC_CREATE_CONF}#${EXEC_CREATE_CONF}</file>
        </shell>
        <ok to="run-sale"/>
        <error to="fail"/>
    </action>
    <action name="run-sale">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
            <exec>${EXEC_SALE}</exec>
            <argument>${nameNode}</argument>
            <argument>${cityCode}</argument>
            <argument>${wf:id()}</argument>
            <file>${EXEC_SALE}#${EXEC_SALE}</file>
        </shell>
        <ok to="end"/>
        <error to="fail"/>
    </action>
    <kill name="fail">
        <message>Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>
    <end name="end"/>
</workflow-app>
```

runCost.sh

```bash
#!/bin/sh
#set name node address
namenode=$1
#set shell local save path (not used by this script)
#savelocalconfpath=$2
#set city code
citycode=$2

#previous calendar month, computed with GNU date so that January rolls back to
#December of the previous year and months 08/09 are not parsed as octal numbers
firstday=$(date +%Y-%m-01)
year=$(date -d "$firstday -1 month" +%Y)
premonth=$(date -d "$firstday -1 month" +%m)
lastday=$(date -d "$firstday -1 day" +%d)
beginTime=$(date -d "$year-$premonth-01 00:00:00" +%s)
endTime=$(date -d "$year-$premonth-$lastday 23:59:59" +%s)
monthstr="$year$premonth"
datestr="$monthstr"

hdfsPath="$namenode/etl/dm1/point_value"
hdfsjarpath="$hdfsPath/jar/v1.0.1/point-value.jar"
hdfsprojectcostpath="$hdfsPath/project_cost/$monthstr/$citycode/"
hdfsprojectdevicepath="$hdfsPath/project_device/$monthstr/$citycode/"
hdfsoutputpath="$hdfsPath/cost/$monthstr/$citycode"

echo "------cost--path-------------"
echo "$hdfsjarpath"
echo "$hdfsprojectcostpath"
echo "$hdfsprojectdevicepath"
echo "$hdfsoutputpath"
echo "------cost--path-------------"

spark-submit --class com.xinchao.bigdata.pointvalue.PointCost \
    --master yarn-cluster \
    --num-executors 4 \
    --driver-memory 4g \
    --executor-memory 4g \
    --executor-cores 4 \
    "$hdfsjarpath" "$hdfsprojectcostpath" "$hdfsprojectdevicepath" "$hdfsoutputpath"
```

createConf.sh

```bash
#!/bin/sh
#set name node address
namenode=$1
#set shell local save path
savelocalconfpath=$2
#set city code
citycode=$3

#previous calendar month, computed with GNU date so that January rolls back to
#December of the previous year and months 08/09 are not parsed as octal numbers
firstday=$(date +%Y-%m-01)
year=$(date -d "$firstday -1 month" +%Y)
premonth=$(date -d "$firstday -1 month" +%m)
lastday=$(date -d "$firstday -1 day" +%d)
beginTime=$(date -d "$year-$premonth-01 00:00:00" +%s)
endTime=$(date -d "$year-$premonth-$lastday 23:59:59" +%s)
monthstr="$year$premonth"
datestr="$monthstr"

prefix="pointvalue"
suffix="properties"
namestring="$datestr-$citycode"
filename="$prefix-$namestring.$suffix"
localconfpath="$savelocalconfpath/$filename"
appName="PointValue"
runmode="cluster"
#hdfs path
hdfsPath="$namenode/etl/dm1/point_value"
#sale path
salePath="$hdfsPath/original/$monthstr/$citycode/"
#plan path
planPath="$hdfsPath/plan/$monthstr/$citycode/"
#plan device path
planDevicePath="$hdfsPath/plan_device/$monthstr/$citycode/"
#point cost path
costPath="$hdfsPath/cost/$monthstr/$citycode/part-*"
#all device path
devicePath="$hdfsPath/device/$monthstr/$citycode/"
#device day status path
statusPath="$hdfsPath/device_status/$monthstr/$citycode/"
#quota(output) path
outPath="$hdfsPath/quota/$monthstr/$citycode"
#point value run properties and conf
hdfsconfpath="$hdfsPath/conf/$monthstr/$citycode/"
#set enable save temp file.true will save,false not save
enableSaveTmpFile="true"
#temp file path
tmppath="$hdfsPath/tmp/"
#sale temp file path
saletmppath="$hdfsPath/tmp-sale/$monthstr/$citycode/"
#occupy temp file path
occupytmppath="$hdfsPath/tmp-occupy/$monthstr/$citycode/"
srcSplitMark="\\\\s+"
outSplitMark=","

#delete old local conf file
rm -rf "$localconfpath"
#spark job name
echo "bigdata.pointvalue.job.appname=$appName-$namestring" >> "$localconfpath"
#job mode:local or cluster,default cluster
echo "bigdata.pointvalue.job.mode=$runmode" >> "$localconfpath"
#sale file path
echo "bigdata.pointvalue.path.in.sale=$salePath" >> "$localconfpath"
#plan file path
echo "bigdata.pointvalue.path.in.plan=$planPath" >> "$localconfpath"
#plan and device map file path
echo "bigdata.pointvalue.path.in.plan-device=$planDevicePath" >> "$localconfpath"
#cost result file path
echo "bigdata.pointvalue.path.in.cost=$costPath" >> "$localconfpath"
#all device table file path
echo "bigdata.pointvalue.path.in.device=$devicePath" >> "$localconfpath"
#device status file path
echo "bigdata.pointvalue.path.in.status=$statusPath" >> "$localconfpath"
#output path
echo "bigdata.pointvalue.path.out=$outPath" >> "$localconfpath"
#whether to save temp files
echo "bigdata.pointvalue.tmp.save.enable=$enableSaveTmpFile" >> "$localconfpath"
#tmp file path
echo "bigdata.pointvalue.path.tmp=$tmppath" >> "$localconfpath"
#sale tmp file path
echo "bigdata.pointvalue.path.tmp.sale=$saletmppath" >> "$localconfpath"
#occupy tmp file path
echo "bigdata.pointvalue.path.tmp.occupy=$occupytmppath" >> "$localconfpath"
#data begin time
echo "bigdata.pointvalue.filter.time.begin=$beginTime" >> "$localconfpath"
#data end time
echo "bigdata.pointvalue.filter.time.end=$endTime" >> "$localconfpath"
#src split mark string
echo "bigdata.pointvalue.mark.split.src=$srcSplitMark" >> "$localconfpath"
#out split mark string
echo "bigdata.pointvalue.mark.split.out=$outSplitMark" >> "$localconfpath"

hdfs dfs -mkdir -p "$hdfsconfpath"
hdfs dfs -put -f "$localconfpath" "$hdfsconfpath"
rm -rf "$localconfpath"
```
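All three demo04 scripts start by working out the previous calendar month. The same calculation can be checked in isolation with this Python sketch (the function name is ours, and the fixed +08:00 zone is an assumption standing in for the server's local time zone, which date uses in the scripts). It returns the YYYYMM string plus the epoch seconds of the first and last second of that month, and handles January and months such as 08 and 09.

```python
from datetime import date, datetime, time, timedelta, timezone

BEIJING = timezone(timedelta(hours=8))  # assumption: the servers run on UTC+8


def previous_month_window(today):
    """(monthstr, beginTime, endTime) for the calendar month before `today`."""
    last_prev = today.replace(day=1) - timedelta(days=1)  # last day of previous month
    first_prev = last_prev.replace(day=1)
    begin = datetime.combine(first_prev, time(0, 0, 0), BEIJING)
    end = datetime.combine(last_prev, time(23, 59, 59), BEIJING)
    return first_prev.strftime("%Y%m"), int(begin.timestamp()), int(end.timestamp())


print(previous_month_window(date(2019, 1, 15))[0])  # 201812
```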

runSale.sh

```bash
#!/bin/sh
#set name node address
namenode=$1
#set shell local save path (not used by this script)
#savelocalconfpath=$2
#set city code
citycode=$2

#previous calendar month, computed with GNU date so that January rolls back to
#December of the previous year and months 08/09 are not parsed as octal numbers
firstday=$(date +%Y-%m-01)
year=$(date -d "$firstday -1 month" +%Y)
premonth=$(date -d "$firstday -1 month" +%m)
lastday=$(date -d "$firstday -1 day" +%d)
beginTime=$(date -d "$year-$premonth-01 00:00:00" +%s)
endTime=$(date -d "$year-$premonth-$lastday 23:59:59" +%s)
monthstr="$year$premonth"
datestr="$monthstr"

prefix="pointvalue"
suffix="properties"
namestring="$datestr-$citycode"
conffilename="$prefix-$namestring.$suffix"
hdfsPath="$namenode/etl/dm1/point_value"
hdfsconfpath="$hdfsPath/conf/$monthstr/$citycode/$conffilename"
hdfsjarpath="$hdfsPath/jar/v1.0.1/point-value.jar"

echo "------sale--path-------------"
echo "$hdfsconfpath"
echo "$hdfsjarpath"
echo "$conffilename"
echo "------sale--path-------------"

spark-submit --class com.xinchao.bigdata.pointvalue.PointSale \
    --master yarn-cluster --num-executors 4 \
    --driver-memory 4g --executor-memory 4g --executor-cores 4 \
    --files "$hdfsconfpath" \
    "$hdfsjarpath" "$conffilename"
```

References

http://oozie.apache.org/

https://blog.csdn.net/oracle8090/article/details/54666543

https://blog.csdn.net/u011026329/article/details/79173624

https://blog.csdn.net/mafuli007/article/details/17071519

https://blog.csdn.net/weixin_39198774/article/details/79412726

http://www.mamicode.com/info-detail-1861407.html

https://www.cnblogs.com/cenzhongman/p/7259226.html


https://blog.csdn.net/abysscarry/article/details/82156686

https://blog.csdn.net/xiao_jun_0820/article/details/40370783