An Introduction to the Hortonworks Hadoop Ecosystem

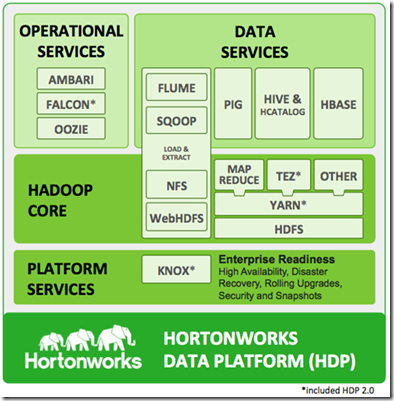

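As a pioneer in the Apache Hadoop 2.0 community, Hortonworks has built its own Hadoop ecosystem. It includes HDFS for data storage, the YARN resource management framework, computation models such as MapReduce and Tez, and the data platform services Pig, Hive & HCatalog, and HBase. Data stored in HDFS is imported and exported with Flume and Sqoop. Ambari handles cluster monitoring, Falcon handles data lifecycle management, and Oozie handles job scheduling. This article briefly introduces the concepts behind each system. Most of these systems are open source through Apache, so readers can download and try them.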

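The architecture of the Hortonworks Hadoop ecosystem is shown in Figure 1.

Figure 1. Architecture of the Hortonworks Hadoop ecosystem

HDFS, YARN, MapReduce, and Tez are not covered further here.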

1. HDP Hortonworks Data Platform

The Hortonworks Data Platform, abbreviated as HDP.

2. Apache™ Accumulo

Apache™ Accumulo is a high-performance data storage and retrieval system with cell-level access control. It is a scalable implementation of Google’s Bigtable design that works on top of Apache Hadoop® and Apache ZooKeeper.

3. Apache™ Flume

Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of streaming data into the Hadoop Distributed File System (HDFS). It has a simple and flexible architecture based on streaming data flows, and it is robust and fault tolerant, with tunable reliability mechanisms for failover and recovery.

4. Apache™ HBase

Apache™ HBase is a non-relational (NoSQL) database that runs on top of the Hadoop® Distributed File System (HDFS). It is columnar and provides fault-tolerant storage and quick access to large quantities of sparse data. It also adds transactional capabilities to Hadoop, allowing users to perform updates, inserts, and deletes.
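To make the "sparse, columnar data with updates, inserts, and deletes" idea more concrete, here is a toy in-memory model. It is only a sketch and not the HBase client API: the class name `SparseTable`, its methods, and the `family:qualifier` column strings are assumptions chosen for illustration.

```python
import time
from collections import defaultdict


class SparseTable:
    """Toy in-memory model of a sparse, column-oriented table (not the HBase API)."""

    def __init__(self):
        # row key -> "family:qualifier" -> list of (timestamp, value), newest first
        self._rows = defaultdict(dict)

    def put(self, row, column, value, ts=None):
        """Insert or update a cell by adding a newer version of its value."""
        ts = time.time_ns() if ts is None else ts
        versions = self._rows[row].setdefault(column, [])
        versions.append((ts, value))
        versions.sort(key=lambda v: v[0], reverse=True)

    def get(self, row, column):
        """Return the newest value of a cell, or None if the cell is absent."""
        versions = self._rows.get(row, {}).get(column)
        return versions[0][1] if versions else None

    def delete(self, row, column):
        """Remove a cell; rows and cells that were never written cost nothing."""
        self._rows.get(row, {}).pop(column, None)


table = SparseTable()
table.put("user1", "info:name", "Ada", ts=1)
table.put("user1", "info:name", "Ada L.", ts=2)            # an update is a newer version
table.put("user2", "info:email", "bob@example.com", ts=1)  # rows need not share columns
table.delete("user2", "info:email")
```

Only the cells that were actually written are stored, which is what makes sparse data cheap in this kind of layout.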

5. Apache™ HCatalog

Apache™ HCatalog is a table and storage management layer for Hadoop that enables users with different data processing tools (Apache Pig, Apache MapReduce, and Apache Hive) to more easily read and write data on the grid. HCatalog’s table abstraction presents users with a relational view of data in the Hadoop Distributed File System (HDFS) and ensures that users need not worry about where or in what format their data is stored. HCatalog displays data from RCFile format, text files, or sequence files in a tabular view. It also provides REST APIs so that external systems can access these tables’ metadata.

6. Apache Hive

Apache Hive is a data warehouse infrastructure built on top of Apache™ Hadoop® for providing data summarization, ad-hoc query, and analysis of large datasets. It provides a mechanism to project structure onto the data in Hadoop and to query that data using a SQL-like language called HiveQL (HQL). Hive eases integration between Hadoop and tools for business intelligence and visualization.

7. Apache™ Mahout

Apache™ Mahout is a library of scalable machine-learning algorithms, implemented on top of Apache Hadoop® and using the MapReduce paradigm. Machine learning is a discipline of artificial intelligence focused on enabling machines to learn without being explicitly programmed, and it is commonly used to improve future performance based on previous outcomes. Once big data is stored on the Hadoop Distributed File System (HDFS), Mahout provides the data science tools to automatically find meaningful patterns in those big data sets. The Apache Mahout project aims to make it faster and easier to turn big data into big information.

8. Apache™ Pig

Apache™ Pig allows you to write complex MapReduce transformations using a simple scripting language. Pig Latin (the language) defines a set of transformations on a data set, such as aggregate, join, and sort. Pig translates the Pig Latin script into MapReduce so that it can be executed within Hadoop®. Pig Latin is sometimes extended using UDFs (User Defined Functions), which the user can write in Java or a scripting language and then call directly from Pig Latin.
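The translation from a script into MapReduce can be pictured with a small example. The sketch below is plain Python, not Pig's actual compiler output; it only mimics the map, shuffle, and reduce steps that a group-and-count script would roughly correspond to. The sample data and the function names `map_phase`, `shuffle`, and `reduce_phase` are assumptions for illustration.

```python
from collections import defaultdict

# A Pig Latin script of roughly this shape is the kind of input Pig would translate:
#   grouped = GROUP visits BY user;
#   counts  = FOREACH grouped GENERATE group, COUNT(visits);
#   ranked  = ORDER counts BY $1 DESC;


def map_phase(records):
    """Map: emit one (key, 1) pair per input record."""
    for user, _url in records:
        yield user, 1


def shuffle(pairs):
    """Shuffle: collect all values that share a key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: aggregate the values of each key."""
    return {key: sum(values) for key, values in groups.items()}


visits = [("alice", "/a"), ("bob", "/b"), ("alice", "/c")]
counts = reduce_phase(shuffle(map_phase(visits)))
ranked = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)
```

The three commented Pig Latin lines express the same computation as the three Python functions, which is the convenience the paragraph above describes.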

9. Apache Sqoop

Apache Sqoop is a tool designed for efficiently transferring bulk data between Apache Hadoop and structured datastores such as relational databases. Sqoop imports data from external structured datastores into HDFS or related systems like Hive and HBase. Sqoop can also be used to extract data from Hadoop and export it to external structured datastores such as relational databases and enterprise data warehouses. Sqoop works with relational databases such as Teradata, Netezza, Oracle, MySQL, PostgreSQL, and HSQLDB.
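As a rough illustration of an import from a relational database into HDFS, the sketch below assembles a `sqoop import` command line. The helper `sqoop_import_command`, the JDBC URL, the table name, the user, and the target directory are all hypothetical, and password options are deliberately left out. The command is only built, not executed.

```python
import shlex


def sqoop_import_command(jdbc_url, table, target_dir, username, num_mappers=4):
    """Build the argument list for a basic `sqoop import` (hypothetical helper)."""
    return [
        "sqoop", "import",
        "--connect", jdbc_url,              # JDBC connection string of the source database
        "--username", username,
        "--table", table,                   # source table to import
        "--target-dir", target_dir,         # destination directory in HDFS
        "--num-mappers", str(num_mappers),  # number of parallel map tasks
    ]


cmd = sqoop_import_command(
    "jdbc:mysql://db.example.com/sales",  # placeholder host and database
    "orders",
    "/data/sales/orders",
    "etl_user",
)
command_line = shlex.join(cmd)
```

Passing `cmd` to `subprocess.run` on a machine with Sqoop installed would be one way to launch it; an export in the other direction uses `sqoop export` with a similar set of options.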

10. Apache Ambari

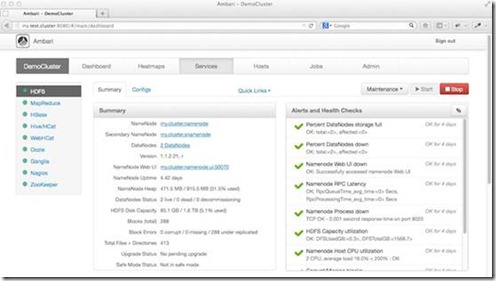

Apache Ambari is a 100-percent open source operational framework for provisioning, managing, and monitoring Apache Hadoop clusters. It is also the project chosen as the management component of the Hortonworks Data Platform. Ambari supports Hadoop MapReduce, HDFS, HBase, Pig, Hive, HCatalog, and ZooKeeper. It includes an intuitive collection of operator tools and a robust set of APIs that hide the complexity of Hadoop, simplifying the operation of clusters.

10.1 Manage a Hadoop cluster

Ambari provides tools to simplify cluster management. The Web interface allows you to start/stop/test Hadoop services, change configurations and manage ongoing growth of your cluster.

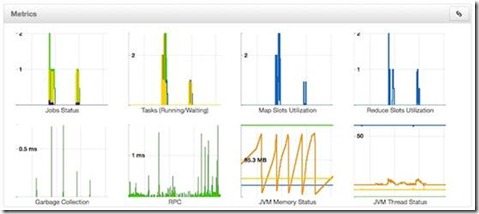

10.2 Monitor a Hadoop cluster

Gain instant insight into the health of your cluster. Ambari pre-configures alerts for watching Hadoop services and visualizes cluster operational data in a simple Web interface.

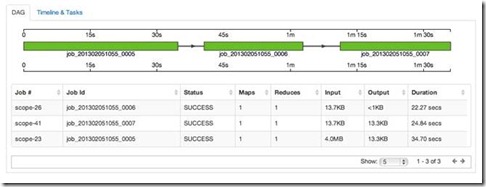

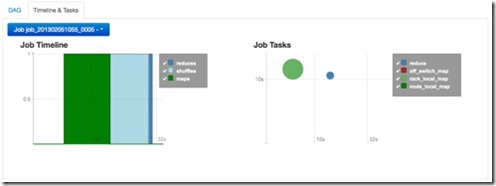

Ambari also includes job diagnostic tools that visualize job interdependencies and show task timelines, which helps when troubleshooting the performance of past job executions.

10.3 Integrate Hadoop with other applications

Ambari provides a RESTful API that enables integration with existing tools, such as Microsoft System Center and Teradata Viewpoint. Ambari also leverages standard technologies and protocols with Nagios and Ganglia for deeper customization.
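A minimal sketch of how an external tool might prepare a call to the RESTful API follows. It assumes the API is served under `/api/v1` on the Ambari server with HTTP basic authentication; the host name, port, credentials, and the helper name `ambari_request` are placeholders. The request is only constructed here, not sent.

```python
import base64
import urllib.request


def ambari_request(base_url, path, user, password):
    """Build (but do not send) a GET request for an Ambari REST resource."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v1/{path.lstrip('/')}",
        headers={
            "Authorization": f"Basic {token}",
            # Assumption: Ambari expects this header on modifying requests;
            # it is included here so the same helper shape can be reused.
            "X-Requested-By": "ambari",
        },
        method="GET",
    )


# Placeholder server and credentials, for illustration only.
req = ambari_request("http://ambari.example.com:8080", "clusters", "admin", "admin")
# urllib.request.urlopen(req) would send it and return a JSON response.
```

Because the interface is plain HTTP and JSON, a monitoring or management tool can poll it without any Hadoop-specific client library.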

11. Apache™ Falcon

Apache™ Falcon is a data management framework for simplifying data lifecycle management and processing pipelines on Apache Hadoop®. It enables users to configure, manage, and orchestrate data motion, pipeline processing, disaster recovery, and data retention workflows. Instead of hard-coding complex data lifecycle capabilities, Hadoop applications can rely on the Apache Falcon framework for these functions, which simplifies data management for anyone building applications on Hadoop.

12. Apache™ Oozie

Apache™ Oozie is a Java Web application used to schedule Apache Hadoop jobs. Oozie combines multiple jobs sequentially into one logical unit of work. It is integrated with the Hadoop stack and supports Hadoop jobs for Apache MapReduce, Apache Pig, Apache Hive, and Apache Sqoop. It can also be used to schedule jobs specific to a system, like Java programs or shell scripts.
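The idea of combining several jobs sequentially into one logical unit of work can be sketched in a few lines. This is a toy model, not Oozie's workflow format or API: the function `run_workflow`, the action names, and the result dictionary are assumptions for illustration. Each action runs only if the previous one succeeded, and the first failure ends the whole unit.

```python
def run_workflow(actions):
    """Run (name, callable) actions in order and stop at the first failure."""
    completed = []
    for name, action in actions:
        try:
            action()
        except Exception as exc:
            # One failed job fails the whole logical unit of work.
            return {"status": "KILLED", "completed": completed,
                    "failed": name, "error": str(exc)}
        completed.append(name)
    return {"status": "SUCCEEDED", "completed": completed,
            "failed": None, "error": None}


def failing_job():
    raise RuntimeError("hive query failed")


ok = run_workflow([("import", lambda: None), ("transform", lambda: None)])
bad = run_workflow([("import", lambda: None),
                    ("aggregate", failing_job),
                    ("export", lambda: None)])
```

In the second run the `export` step never starts, which is the behaviour one usually wants from a chain of dependent jobs.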

13. Apache ZooKeeper

Apache ZooKeeper provides operational services for a Hadoop cluster. ZooKeeper provides a distributed configuration service, a synchronization service, and a naming registry for distributed systems. Distributed applications use ZooKeeper to store and mediate updates to important configuration information.
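One way to picture "mediating updates" is a store where every write must name the version it expects to replace. The sketch below is a single-process toy, not the ZooKeeper client API: `ConfigStore`, `BadVersionError`, and the configuration path are assumptions for illustration.

```python
class BadVersionError(Exception):
    """Raised when a writer's expected version is stale."""


class ConfigStore:
    """Toy versioned key-value store keyed by path (not the ZooKeeper API)."""

    def __init__(self):
        self._nodes = {}  # path -> (value, version)

    def create(self, path, value):
        if path in self._nodes:
            raise KeyError(f"{path} already exists")
        self._nodes[path] = (value, 0)

    def get(self, path):
        """Return (value, version) for a path."""
        return self._nodes[path]

    def set(self, path, value, expected_version):
        """Write only if nobody else has updated the node since it was read."""
        _, version = self._nodes[path]
        if version != expected_version:
            raise BadVersionError(
                f"{path}: expected version {expected_version}, found {version}")
        self._nodes[path] = (value, version + 1)
        return version + 1


store = ConfigStore()
store.create("/app/config/batch_size", "100")
_, seen = store.get("/app/config/batch_size")      # two writers both read version 0
store.set("/app/config/batch_size", "200", seen)   # the first writer wins
try:
    store.set("/app/config/batch_size", "300", seen)  # the second writer is stale
    conflict = False
except BadVersionError:
    conflict = True
```

The stale writer has to re-read the value before retrying, so concurrent updates to shared configuration cannot silently overwrite each other.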

14. Knox

The Knox Gateway (“Knox”) is a system that provides a single point of authentication and access for Apache™ Hadoop® services in a cluster. The goal of the project is to simplify Hadoop security for users who access the cluster data and execute jobs, and for operators who control access and manage the cluster. Knox runs as a server (or cluster of servers) that serves one or more Hadoop clusters.