Flink| CDC

CDC

CDC是Change Data Capture(变更数据获取)的简称。核心思想是,监测并捕获数据库的变动(包括数据或数据表的插入、更新以及删除等),将这些变更按发生的顺序完整记录下来,写入到消息中间件中以

供其他服务进行订阅及消费。

CDC主要分为基于查询和基于Binlog两种方式,这两种之间的区别:

|

|

基于查询的CDC |

基于Binlog的CDC |

|

开源产品 |

Sqoop、Kafka JDBC Source |

Canal、Maxwell、Debezium |

|

执行模式 |

Batch |

Streaming |

|

是否可以捕获所有数据变化 |

否 |

是 |

|

延迟性 |

高延迟 |

低延迟 |

|

是否增加数据库压力 |

是 |

否 |

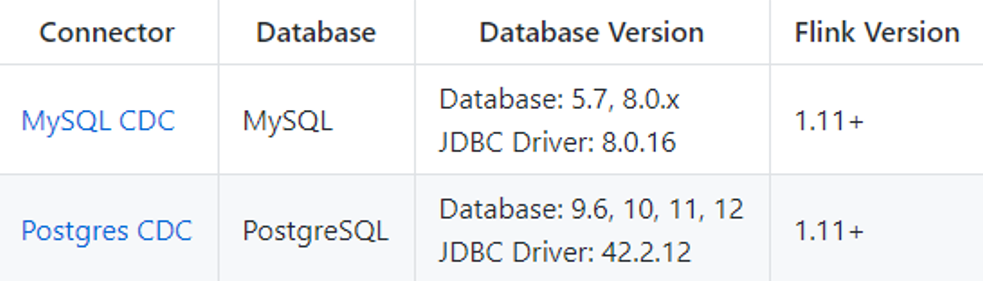

Flink社区开发了 flink-cdc-connectors 组件,这是一个可以直接从 MySQL、PostgreSQL 等数据库直接读取全量数据和增量变更数据的 source 组件。目前也已开源,开源地址:

https://github.com/ververica/flink-cdc-connectors

https://ververica.github.io/flink-cdc-connectors/master/

Caused by: org.apache.flink.table.api.ValidationException: Currently Flink MySql CDC connector only supports MySql whose version is larger or equal to 5.7, but actual is 5.6.

Mysql的配置

修改my.cnf配置

[kris@hadoop101 ~]$ sudo vim /etc/my.cnf [mysqld] max_allowed_packet=1024M server_id=1 log-bin=master binlog_format=row binlog-do-db=gmall binlog-do-db=test #添加test库

重启mysql

sudo service mysql start Starting MySQL [确定]

或(mysql版本)

sudo service mysqld start

查看mysql的binlog文件

kris@hadoop101 ~]$ cd /var/lib/mysql/

[kris@hadoop101 mysql]$ ll

总用量 178740

-rw-rw---- 1 mysql mysql 1076 4月 18 2021 mysql-bin.000095

-rw-rw---- 1 mysql mysql 143 5月 9 2021 mysql-bin.000096

-rw-rw---- 1 mysql mysql 846 7月 26 2021 mysql-bin.000097

-rw-rw---- 1 mysql mysql 143 11月 3 08:26 mysql-bin.000098

-rw-rw---- 1 mysql mysql 143 2月 4 22:05 mysql-bin.000099

-rw-rw---- 1 mysql mysql 120 2月 6 11:21 mysql-bin.000100

-rw-rw---- 1 mysql mysql 1900 2月 6 11:21 mysql-bin.index

srwxrwxrwx 1 mysql mysql 0 2月 6 11:21 mysql.sock

drwx------ 2 mysql mysql 4096 5月 17 2019 online_edu

drwx------ 2 mysql mysql 4096 3月 15 2019 performance_schema

-rw-r--r-- 1 root root 125 3月 15 2019 RPM_UPGRADE_HISTORY

-rw-r--r-- 1 mysql mysql 125 3月 15 2019 RPM_UPGRADE_MARKER-LAST

drwx------ 2 mysql mysql 4096 4月 19 2019 sparkmall

drwxr-xr-x 2 mysql mysql 4096 4月 26 2020 test

案例

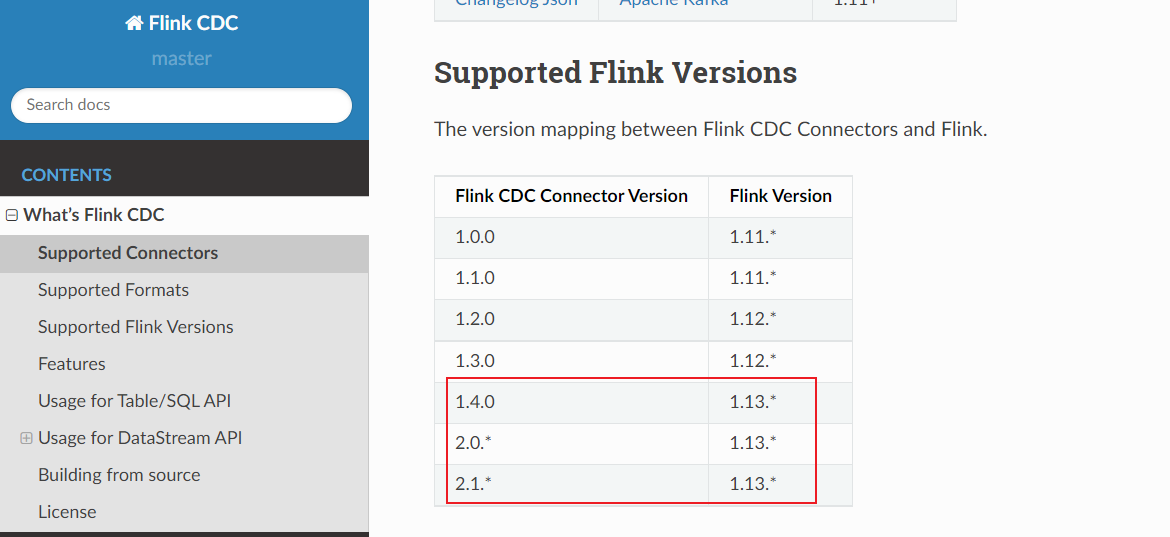

Maven 依赖:

<properties>

<flink-version>1.13.0</flink-version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink-version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.12</artifactId>

<version>${flink-version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.12</artifactId>

<version>${flink-version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.1.3</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.49</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner-blink_2.12</artifactId>

<version>${flink-version}</version>

</dependency>

<dependency>

<groupId>com.ververica</groupId>

<artifactId>flink-connector-mysql-cdc</artifactId>

<version>2.0.0</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.75</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-assembly-plugin</artifactId>

<version>3.0.0</version>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>8</source>

<target>8</target>

</configuration>

</plugin>

</plugins>

</build>

DataStream方式的应用

测试代码

package com.stream.flinkcdc;

import com.ververica.cdc.connectors.mysql.MySqlSource;

import com.ververica.cdc.connectors.mysql.table.StartupOptions;

import com.ververica.cdc.debezium.DebeziumSourceFunction;

import com.ververica.cdc.debezium.StringDebeziumDeserializationSchema;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

/**

* DataStream方式的应用

*/

public class FlinkCDC {

public static void main(String[] args) throws Exception {

//1.获取Flink 执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

DebeziumSourceFunction<String> sourceFunction = MySqlSource.<String>builder()

.hostname("hadoop101")

.port(3306)

.username("root")

.password("123456")

.databaseList("test")

.tableList("test.student_info") //库名.表名 不加读取当前库下所有表

.deserializer(new StringDebeziumDeserializationSchema())

.startupOptions(StartupOptions.initial()) //initial 这种模式先初始化全部读取表中的数据, 再去读取最新的binlog文件

//latest() 这种模式是只会读取最新binlog的数据,不会读历史数据。

.build();

DataStreamSource<String> mysqlDS = env.addSource(sourceFunction);

//5. 打印数据

mysqlDS.print();

//6. 执行任务

env.execute();

}

}

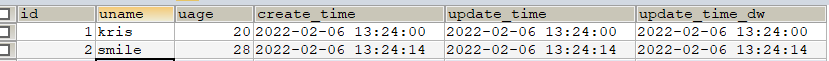

initial初始化,将表中的数据全部打印出,op = r

SourceRecord{sourcePartition={server=mysql_binlog_source}, sourceOffset={ts_sec=1644126182, file=master.000001, pos=154, snapshot=true}} ConnectRecord{topic='mysql_binlog_source.test.student_info', kafkaPartition=null, key=Struct{id=1}, keySchema=Schema{mysql_binlog_source.test.student_info.Key:STRUCT}, value=Struct{after=Struct{id=1,uname=kris,uage=20,create_time=2022-02-06T19:24:00Z,update_time=2022-02-06T19:24:00Z,update_time_dw=2022-02-06T19:24:00Z},source=Struct{version=1.5.2.Final,connector=mysql,name=mysql_binlog_source,ts_ms=1644126182017,snapshot=true,db=test,table=student_info,server_id=0,file=master.000001,pos=154,row=0},op=r,ts_ms=1644126182026}, valueSchema=Schema{mysql_binlog_source.test.student_info.Envelope:STRUCT}, timestamp=null, headers=ConnectHeaders(headers=)}

SourceRecord{sourcePartition={server=mysql_binlog_source}, sourceOffset={ts_sec=1644126182, file=master.000001, pos=154}} ConnectRecord{topic='mysql_binlog_source.test.student_info', kafkaPartition=null, key=Struct{id=2}, keySchema=Schema{mysql_binlog_source.test.student_info.Key:STRUCT}, value=Struct{after=Struct{id=2,uname=smile,uage=18,create_time=2022-02-06T19:24:14Z,update_time=2022-02-06T19:24:14Z,update_time_dw=2022-02-06T19:24:14Z},source=Struct{version=1.5.2.Final,connector=mysql,name=mysql_binlog_source,ts_ms=1644126182036,snapshot=last,db=test,table=student_info,server_id=0,file=master.000001,pos=154,row=0},op=r,ts_ms=1644126182037}, valueSchema=Schema{mysql_binlog_source.test.student_info.Envelope:STRUCT}, timestamp=null, headers=ConnectHeaders(headers=)}

新增 op = c

SourceRecord{sourcePartition={server=mysql_binlog_source}, sourceOffset={transaction_id=null, ts_sec=1644126223, file=master.000001, pos=219, row=1, server_id=1, event=2}} ConnectRecord{topic='mysql_binlog_source.test.student_info', kafkaPartition=null, key=Struct{id=3}, keySchema=Schema{mysql_binlog_source.test.student_info.Key:STRUCT}, value=Struct{after=Struct{id=3,uname=CC,uage=18,create_time=2022-02-06T05:43:43Z,update_time=2022-02-06T05:43:43Z,update_time_dw=2022-02-06T05:43:43Z},source=Struct{version=1.5.2.Final,connector=mysql,name=mysql_binlog_source,ts_ms=1644126223000,db=test,table=student_info,server_id=1,file=master.000001,pos=356,row=0},op=c,ts_ms=1644126223433}, valueSchema=Schema{mysql_binlog_source.test.student_info.Envelope:STRUCT}, timestamp=null, headers=ConnectHeaders(headers=)}

修改 op = u

SourceRecord{sourcePartition={server=mysql_binlog_source}, sourceOffset={transaction_id=null, ts_sec=1644126435, file=master.000001, pos=511, row=1, server_id=1, event=2}} ConnectRecord{topic='mysql_binlog_source.test.student_info', kafkaPartition=null, key=Struct{id=2}, keySchema=Schema{mysql_binlog_source.test.student_info.Key:STRUCT}, value=Struct{before=Struct{id=2,uname=smile,uage=18,create_time=2022-02-06T05:24:14Z,update_time=2022-02-06T05:24:14Z,update_time_dw=2022-02-06T05:24:14Z},after=Struct{id=2,uname=smile,uage=28,create_time=2022-02-06T05:24:14Z,update_time=2022-02-06T05:24:14Z,update_time_dw=2022-02-06T05:24:14Z},source=Struct{version=1.5.2.Final,connector=mysql,name=mysql_binlog_source,ts_ms=1644126435000,db=test,table=student_info,server_id=1,file=master.000001,pos=648,row=0},op=u,ts_ms=1644126435149}, valueSchema=Schema{mysql_binlog_source.test.student_info.Envelope:STRUCT}, timestamp=null, headers=ConnectHeaders(headers=)}

删除 op = d

SourceRecord{sourcePartition={server=mysql_binlog_source}, sourceOffset={transaction_id=null, ts_sec=1644126510, file=master.000001, pos=834, row=1, server_id=1, event=2}} ConnectRecord{topic='mysql_binlog_source.test.student_info', kafkaPartition=null, key=Struct{id=3}, keySchema=Schema{mysql_binlog_source.test.student_info.Key:STRUCT}, value=Struct{before=Struct{id=3,uname=CC,uage=18,create_time=2022-02-06T05:43:43Z,update_time=2022-02-06T05:43:43Z,update_time_dw=2022-02-06T05:43:43Z},source=Struct{version=1.5.2.Final,connector=mysql,name=mysql_binlog_source,ts_ms=1644126510000,db=test,table=student_info,server_id=1,file=master.000001,pos=971,row=0},op=d,ts_ms=1644126510055}, valueSchema=Schema{mysql_binlog_source.test.student_info.Envelope:STRUCT}, timestamp=null, headers=ConnectHeaders(headers=)}

问题所在 查看mysql中时间和 同步回的时间,initial相差了6h; op in (c, u, d)时相差8h ;

增加flink 的checkpoint 的配置

// Flink-CDC将读取binlog的位置信息以状态的方式保存在CK,如果想要做到断点续传,需要从Checkpoint或者Savepoint启动程序

env.enableCheckpointing(5000L); //开启Checkpoint,每隔5秒钟做一次CK

env.getCheckpointConfig().setCheckpointTimeout(10000);

env.getCheckpointConfig().setMaxConcurrentCheckpoints(1); //最大并发的 checkpoint

env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE); //指定CK的一致性语义

env.getCheckpointConfig().enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);//设置任务关闭的时候保留最后一次CK数据

env.setRestartStrategy(RestartStrategies.fixedDelayRestart(3, 2000L)); //指定从CK自动重启策略

env.setStateBackend(new FsStateBackend("hdfs:hadoop101:8020/flinkCDC")); //状态后端

System.setProperty("HADOOP_USER_NAME", "kris"); //设置访问HDFS的用户名

详细代码如下:

package com.stream.flinkcdc;

import com.ververica.cdc.connectors.mysql.MySqlSource;

import com.ververica.cdc.connectors.mysql.table.StartupOptions;

import com.ververica.cdc.debezium.DebeziumSourceFunction;

import com.ververica.cdc.debezium.StringDebeziumDeserializationSchema;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;

import org.apache.flink.runtime.state.filesystem.FsStateBackend;

import org.apache.flink.streaming.api.CheckpointingMode;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.CheckpointConfig;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

/**

* DataStream方式的应用

*/

public class FlinkCDC {

public static void main(String[] args) throws Exception {

//1.获取Flink 执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

//2. 开启CK

// Flink-CDC将读取binlog的位置信息以状态的方式保存在CK,如果想要做到断点续传,需要从Checkpoint或者Savepoint启动程序

env.enableCheckpointing(5000L); //开启Checkpoint,每隔5秒钟做一次CK

env.getCheckpointConfig().setCheckpointTimeout(10000);

env.getCheckpointConfig().setMaxConcurrentCheckpoints(1); //最大并发的 checkpoint

env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE); //指定CK的一致性语义

env.getCheckpointConfig().enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);//设置任务关闭的时候保留最后一次CK数据

env.setRestartStrategy(RestartStrategies.fixedDelayRestart(3, 2000L)); //指定从CK自动重启策略

env.setStateBackend(new FsStateBackend("hdfs:hadoop101:8020/flinkCDC")); //状态后端

System.setProperty("HADOOP_USER_NAME", "kris"); //设置访问HDFS的用户名

//3.通过FlinkCDC构建SourceFunction

// 创建Flink-MySQL-CDC的Source

//initial (default): Performs an initial snapshot on the monitored database tables upon first startup, and continue to read the latest binlog.

//latest-offset: Never to perform snapshot on the monitored database tables upon first startup, just read from the end of the binlog which means only have the changes since the connector was started.

//timestamp: Never to perform snapshot on the monitored database tables upon first startup, and directly read binlog from the specified timestamp. The consumer will traverse the binlog from the beginning and ignore change events whose timestamp is smaller than the specified timestamp.

//specific-offset: Never to perform snapshot on the monitored database tables upon first startup, and directly read binlog from the specified offset.

DebeziumSourceFunction<String> sourceFunction = MySqlSource.<String>builder()

.hostname("hadoop101")

.port(3306)

.username("root")

.password("123456")

.databaseList("test")

.tableList("test.student_info") //库名.表名 不加读取当前库下所有表

.deserializer(new StringDebeziumDeserializationSchema())

.startupOptions(StartupOptions.initial()) //initial 这种模式先初始化全部读取表中的数据, 再去读取最新的binlog文件

.build();

DataStreamSource<String> mysqlDS = env.addSource(sourceFunction);

//4. 打印数据

mysqlDS.print();

//5. 执行任务

env.execute();

}

}

① 启动Hadoop、 zookeeper、 Flink_standalone模式 yarn模式

② 启动flincdc 程序

3个Task Manager,每个Task Manager有2个slot,一共有6个Task Slots

bin/flink run -m hadoop101:8081 -c com.stream.flinkcdc.FlinkCDC ./flink-1.0-SNAPSHOT-jar-with-dependencies.jar

③ 在MySQL表中添加、修改或者删除数据

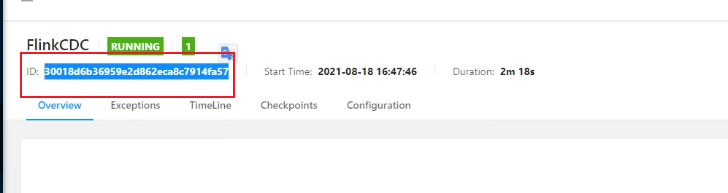

④ 给当前的Flink程序创建Savepoint(测试从当前的savepoint的位置重启)

bin/flink savepoint JobId hdfs://hadoop101:8020/flink/save

bin/flink savepoint 30018d6b36959e2d862eca8c7914fa57 hdfs://hadoop101:8020/flinkcdc/save Savepoint completed. Path:hdfs://hadoop101:8020/flinkcdc/save/savepoint-30018d-b5b60b62399f You can resume your program from this savepoint with the run command.

⑤ 在MySQL表中添加、修改或者删除数据

⑥ 关闭程序以后从Savepoint重启程序

bin/flink run \

-m hadoop101:8081 \

-s hdfs://hadoop101:8020/flink/save/savepoint-30018d-b5b60b62399f \

-c com.stream.flinkcdc.FlinkCDC \

./flink-1.0-SNAPSHOT-jar-with-dependencies.jar

可以看到initial之后,去读取最新的bin-log信息,当前打印的还是mysql中最新的数据。

FlinkSQL方式的应用

① 不需要反序列化器,因为它会根据创建的表格进行解析;

② initial初始化没有了改为ddl中的配置;

③ 这种方式一次只能读取一张表,不能像dataStream样读取库下的所有表;

④ cdc 2.0的dataStream方式可以兼容flink1.12,flinksql这种方式只能flink1.13

代码:

package com.stream.flinkcdc;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

/**

* FlinkSQL方式的应用

*

*/

public class FlinkSQLCDC {

public static void main(String[] args) throws Exception {

//1.创建执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

//2.创建FlinkSQL DDL模式构建CDC表

tableEnv.executeSql("CREATE TABLE student_info (" +

" id INT primary key," +

" uname STRING," +

" uage INT," +

" create_time STRING," +

" update_time STRING," +

" update_time_dw STRING" +

") WITH (" +

" 'connector' = 'mysql-cdc'," +

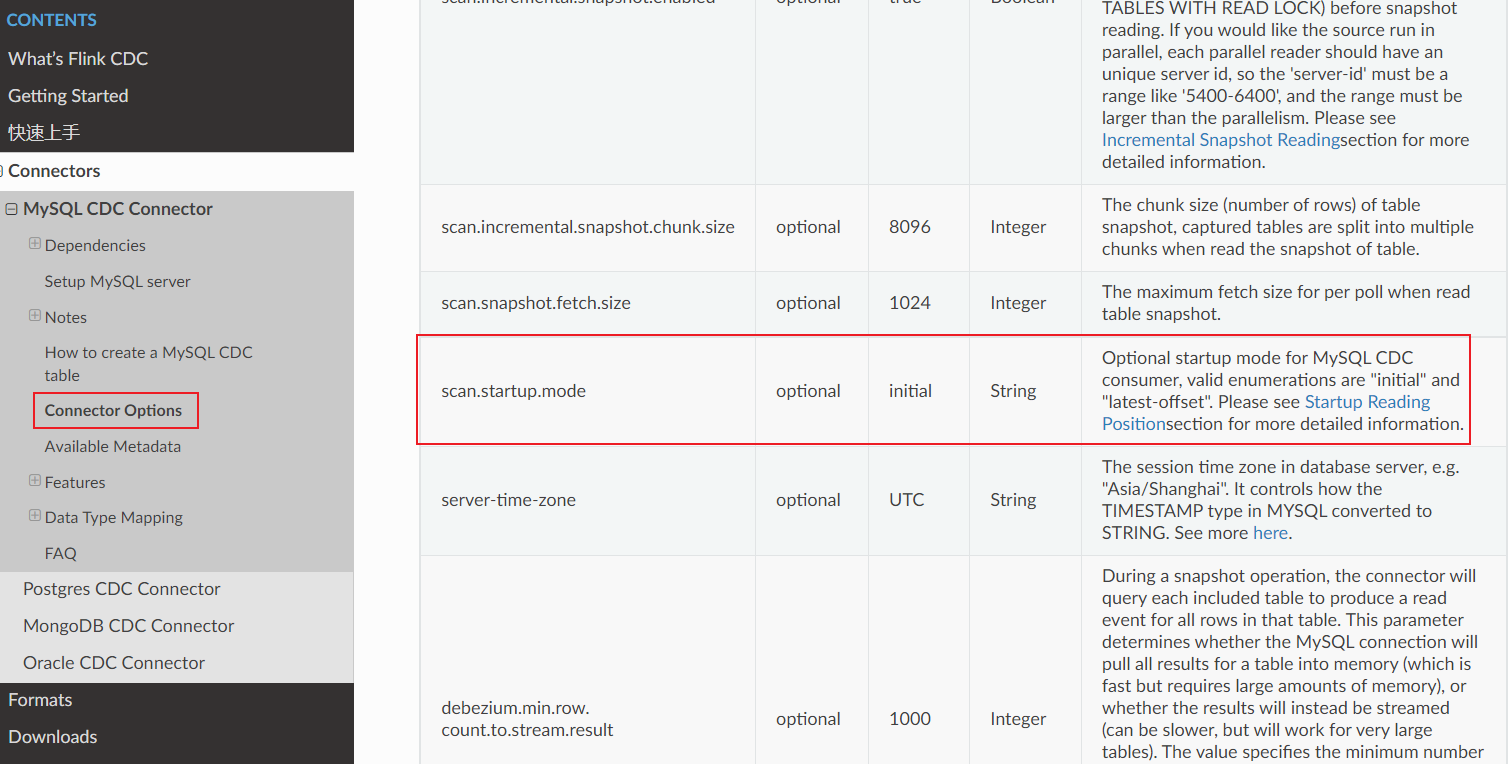

" 'scan.startup.mode' = 'latest-offset', " + // initial初始化历史所有的数据;

" 'hostname' = 'hadoop101'," +

" 'port' = '3306'," +

" 'username' = 'root'," +

" 'password' = '123456'," +

" 'database-name' = 'test'," +

" 'table-name' = 'student_info'" +

")");

//3. 查询数据并转换为流输出

//tableEnv.executeSql("select * from student_info").print();

Table table = tableEnv.sqlQuery("select * from student_info");

DataStream<Tuple2<Boolean, Row>> retractStream = tableEnv.toRetractStream(table, Row.class);

retractStream.print();

//4. 启动

env.execute("FlinkSQL CDC");

}

}

测试如下:

"'scan.startup.mode' = 'initial', " initial模式只会初始化历史数据,不会监控最新的bin-log变更:

(true,+I[2, smile, 28, 2022-02-06T13:24:14Z, 2022-02-06T13:24:14Z, 2022-02-06T13:24:14Z])

(true,+I[1, kris, 20, 2022-02-06T13:24:00Z, 2022-02-06T13:24:00Z, 2022-02-06T13:24:00Z])

"'scan.startup.mode' = 'latest-offset', 这种模式只监控最新的变更:

变更 -U

(false,-U[3, DD, 20, 2022-02-06T09:28:08Z, 2022-02-06T09:28:08Z, 2022-02-06T09:28:08Z])

(true,+U[3, DD, 18, 2022-02-06T09:28:08Z, 2022-02-06T09:28:08Z, 2022-02-06T09:28:08Z])

新增 +I

(true,+I[4, EE, 22, 2022-02-06T09:31:30Z, 2022-02-06T09:31:30Z, 2022-02-06T09:31:30Z])

删除 -D

(false,-D[3, DD, 18, 2022-02-06T09:28:08Z, 2022-02-06T09:28:08Z, 2022-02-06T09:28:08Z])

自定义反序列化器

代码如下:

/* 自定义反序列化器 */ public static class CustomerDeserializationSchema implements DebeziumDeserializationSchema<String> { /** * { * "db":"", * "tableName":"", * "before":{"id":"1001","name":""...}, * "after":{"id":"1001","name":""...}, * "op":"" * } */ @Override public void deserialize(SourceRecord sourceRecord, Collector<String> collector) throws Exception { //创建JSON对象用于封装结果数据 JSONObject result = new JSONObject(); //获取库名&表名 String topic = sourceRecord.topic(); String[] fields = topic.split("\\."); result.put("db", fields[1]); result.put("tableName", fields[2]); //获取before数据 Struct value = (Struct) sourceRecord.value(); Struct before = value.getStruct("before"); JSONObject beforeJson = new JSONObject(); if (before != null) { //获取列信息 Schema schema = before.schema(); List<Field> fieldList = schema.fields(); for (Field field : fieldList) { beforeJson.put(field.name(), before.get(field)); } } result.put("before", beforeJson); //获取after数据 Struct after = value.getStruct("after"); JSONObject afterJson = new JSONObject(); if (after != null) { //获取列信息 Schema schema = after.schema(); List<Field> fieldList = schema.fields(); for (Field field : fieldList) { afterJson.put(field.name(), after.get(field)); } } result.put("after", afterJson); //获取操作类型 Envelope.Operation operation = Envelope.operationFor(sourceRecord); result.put("op", operation); //输出数据 collector.collect(result.toJSONString()); } @Override public TypeInformation<String> getProducedType() { return BasicTypeInfo.STRING_TYPE_INFO; } }

package com.stream.flinkcdc; import com.alibaba.fastjson.JSONObject; import com.ververica.cdc.connectors.mysql.MySqlSource; import com.ververica.cdc.connectors.mysql.table.StartupOptions; import com.ververica.cdc.debezium.DebeziumDeserializationSchema; import com.ververica.cdc.debezium.DebeziumSourceFunction; import io.debezium.data.Envelope; import org.apache.flink.api.common.typeinfo.BasicTypeInfo; import org.apache.flink.api.common.typeinfo.TypeInformation; import org.apache.flink.streaming.api.datastream.DataStreamSource; import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment; import org.apache.flink.util.Collector; import org.apache.kafka.connect.data.Field; import org.apache.kafka.connect.data.Schema; import org.apache.kafka.connect.data.Struct; import org.apache.kafka.connect.source.SourceRecord; import java.util.List; import java.util.Properties; /** * 自定义反序列化器 */ public class CDCWithCustomerSchema { public static void main(String[] args) throws Exception { //1.获取Flink 执行环境 StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(); env.setParallelism(1); //1.1 开启CK // env.enableCheckpointing(5000); // env.getCheckpointConfig().setCheckpointTimeout(10000); // env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE); // env.getCheckpointConfig().setMaxConcurrentCheckpoints(1); // // env.setStateBackend(new FsStateBackend("hdfs://hadoop101:8020/cdc-test/ck")); //2.通过FlinkCDC构建SourceFunction DebeziumSourceFunction<String> sourceFunction = MySqlSource.<String>builder() .hostname("hadoop101") .port(3306) .username("root") .password("123456") .databaseList("test") .tableList("test.student_info") .deserializer(new CustomerDeserializationSchema()) .startupOptions(StartupOptions.initial()) .build(); DataStreamSource<String> dataStreamSource = env.addSource(sourceFunction); //3.数据打印 dataStreamSource.print(); //4.启动任务 env.execute("FlinkCDC"); } /* 自定义反序列化器 */ public static class CustomerDeserializationSchema implements DebeziumDeserializationSchema<String> { /** * { * "db":"", * "tableName":"", * "before":{"id":"1001","name":""...}, * "after":{"id":"1001","name":""...}, * "op":"" * } */ @Override public void deserialize(SourceRecord sourceRecord, Collector<String> collector) throws Exception { //创建JSON对象用于封装结果数据 JSONObject result = new JSONObject(); //获取库名&表名 String topic = sourceRecord.topic(); String[] fields = topic.split("\\."); result.put("db", fields[1]); result.put("tableName", fields[2]); //获取before数据 Struct value = (Struct) sourceRecord.value(); Struct before = value.getStruct("before"); JSONObject beforeJson = new JSONObject(); if (before != null) { //获取列信息 Schema schema = before.schema(); List<Field> fieldList = schema.fields(); for (Field field : fieldList) { beforeJson.put(field.name(), before.get(field)); } } result.put("before", beforeJson); //获取after数据 Struct after = value.getStruct("after"); JSONObject afterJson = new JSONObject(); if (after != null) { //获取列信息 Schema schema = after.schema(); List<Field> fieldList = schema.fields(); for (Field field : fieldList) { afterJson.put(field.name(), after.get(field)); } } result.put("after", afterJson); //获取操作类型 Envelope.Operation operation = Envelope.operationFor(sourceRecord); result.put("op", operation); //输出数据 collector.collect(result.toJSONString()); } @Override public TypeInformation<String> getProducedType() { return BasicTypeInfo.STRING_TYPE_INFO; } } }

测试:

StartupOptions.initial() 模式:

信息: Connected to hadoop101:3306 at master.000001/3155 (sid:5498, cid:31)

{"op":"READ","before":{},"after":{"update_time":"2022-02-06T19:24:00Z","uname":"kris","create_time":"2022-02-06T19:24:00Z","id":1,"uage":20,"update_time_dw":"2022-02-06T19:24:00Z"},"db":"test","tableName":"student_info"}

{"op":"READ","before":{},"after":{"update_time":"2022-02-06T19:24:14Z","uname":"smile","create_time":"2022-02-06T19:24:14Z","id":2,"uage":28,"update_time_dw":"2022-02-06T19:24:14Z"},"db":"test","tableName":"student_info"}

{"op":"READ","before":{},"after":{"update_time":"2022-02-06T23:31:30Z","uname":"EE","create_time":"2022-02-06T23:31:30Z","id":4,"uage":22,"update_time_dw":"2022-02-06T23:31:30Z"},"db":"test","tableName":"student_info"}

{"op":"CREATE","before":{},"after":{"update_time":"2022-02-06T09:46:37Z","uname":"DD","create_time":"2022-02-06T09:46:37Z","id":3,"uage":17,"update_time_dw":"2022-02-06T09:46:37Z"},"db":"test","tableName":"student_info"}

{"op":"UPDATE","before":{"update_time":"2022-02-06T09:46:37Z","uname":"DD","create_time":"2022-02-06T09:46:37Z","id":3,"uage":17,"update_time_dw":"2022-02-06T09:46:37Z"},"after":{"update_time":"2022-02-06T09:46:37Z","uname":"DD","create_time":"2022-02-06T09:46:37Z","id":3,"uage":27,"update_time_dw":"2022-02-06T09:46:37Z"},"db":"test","tableName":"student_info"}

{"op":"DELETE","before":{"update_time":"2022-02-06T09:31:30Z","uname":"EE","create_time":"2022-02-06T09:31:30Z","id":4,"uage":22,"update_time_dw":"2022-02-06T09:31:30Z"},"after":{},"db":"test","tableName":"student_info"}

DataStreamAPI和Flinksql方式的区别

DataStreamAPI可以在Flink1.12/1.13 用,sql的方式只能在1.13用;

DataStreamAPI支持多库多表,sql只能单表;

DataStreamAPI需要自定义反序列化,sql不需要反序列化;

FlinkCDC2.0

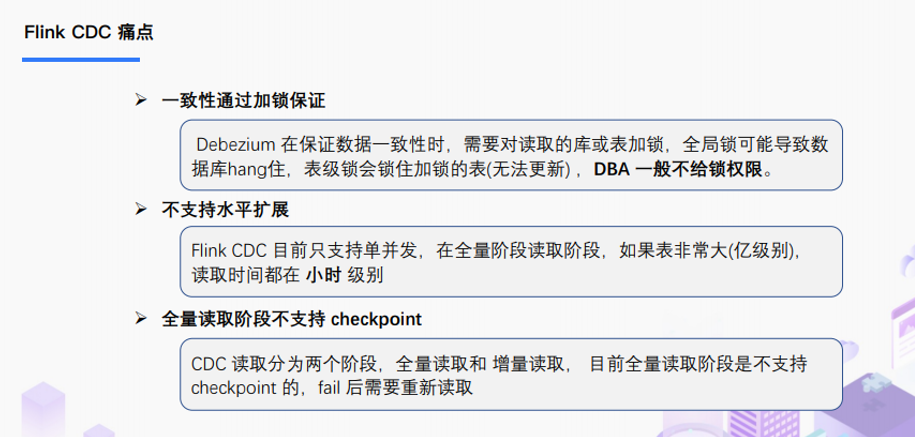

1.X的痛点

数据初始化时,先要查询所有的表,需要去加锁;

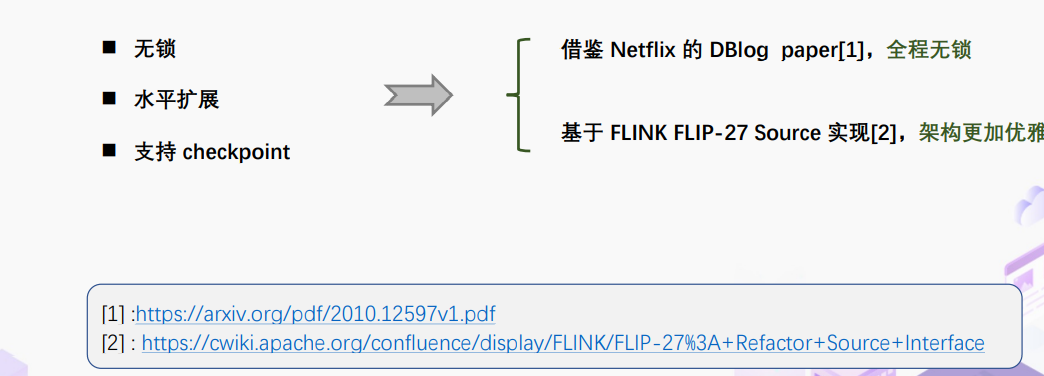

2.0的设计

DBLog: A Watermark Based Change-Data-Capture Framework (arxiv.org)

FLIP-27: Refactor Source Interface - Apache Flink - Apache Software Foundation

概述

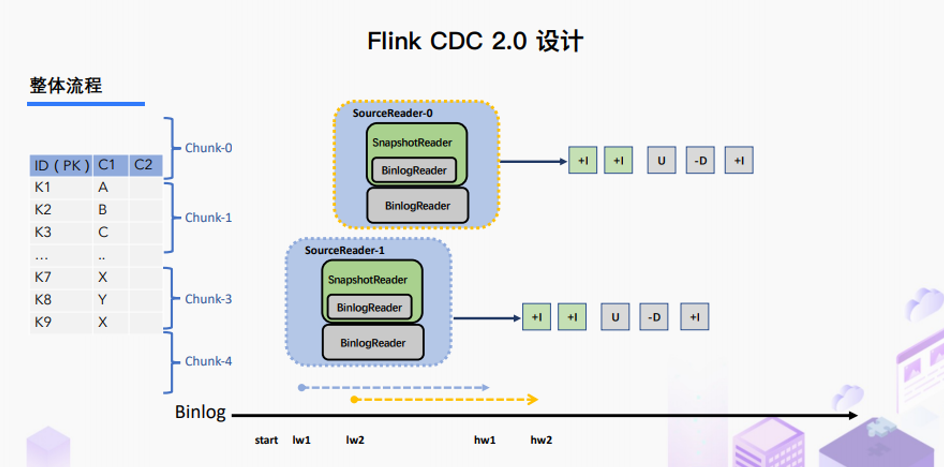

在对于有主键的表做初始化模式,整体的流程主要分为5个阶段:

- 1.Chunk切分;2.Chunk分配;(实现并行读取数据&CheckPoint)

- 3.Chunk读取;(实现无锁读取)

- 4.Chunk汇报;

- 5.Chunk分配。

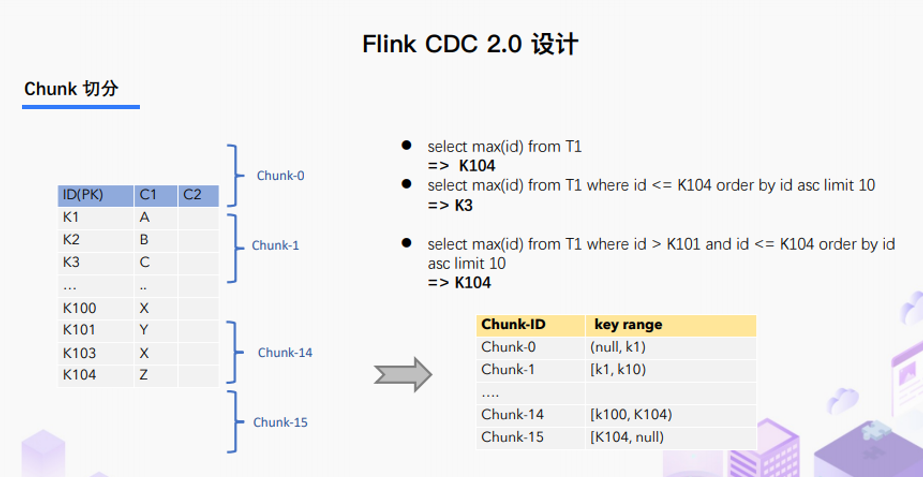

① Chunk切分

根据Netflix DBlog的论文中的无锁算法原理,对于目标表按照主键进行数据分片,设置每个切片的区间为左闭右开或者左开右闭来保证数据的连续性。

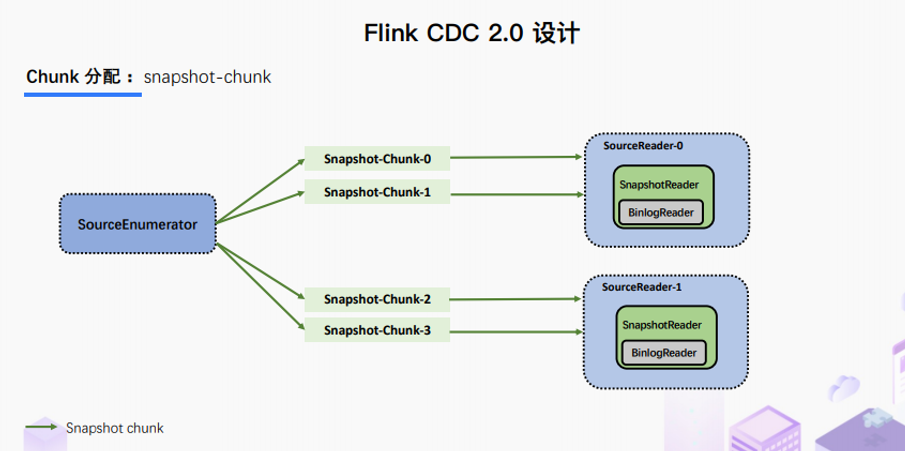

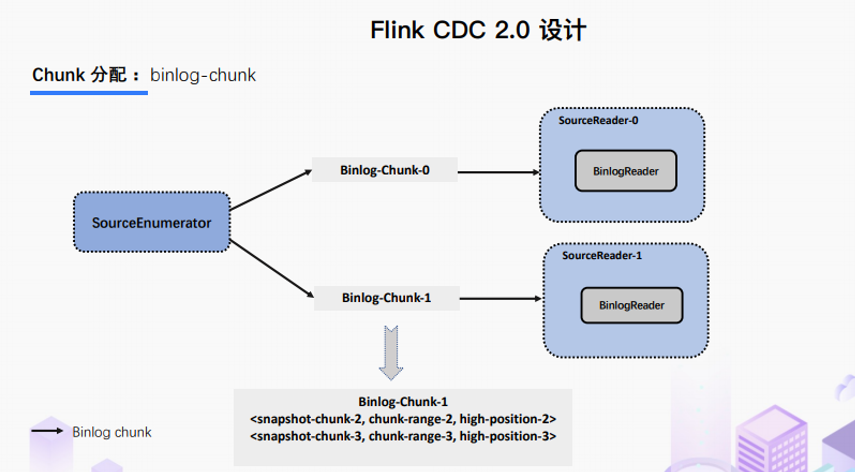

② Chunk分配

将划分好的Chunk分发给多个 SourceReader,每个SourceReader读取表中的一部分数据,实现了并行读取的目标。

同时在每个Chunk读取的时候可以单独做CheckPoint,某个Chunk读取失败只需要单独执行该Chunk的任务,而不需要像1.x中失败了只能从头读取。

若每个SourceReader保证了数据一致性,则全表就保证了数据一致性。

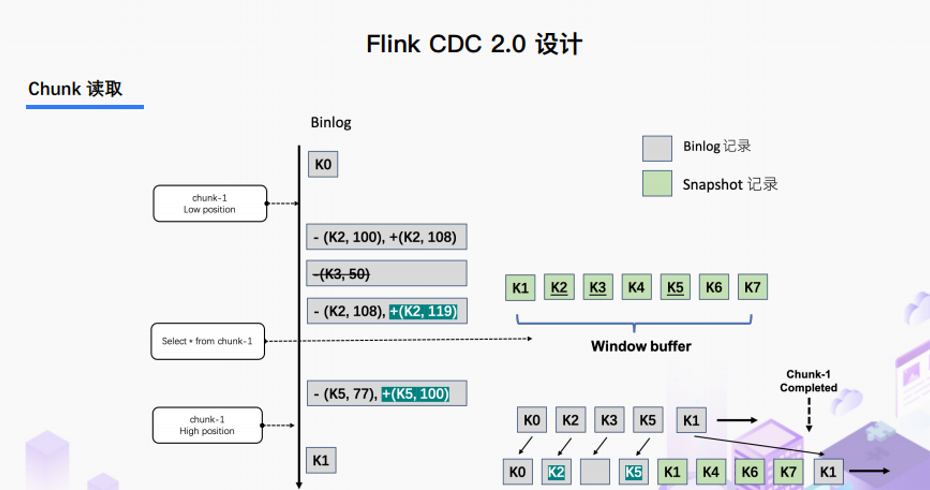

③ Chunk读取

读取可以分为5个阶段

1)SourceReader读取表数据之前先记录当前的Binlog位置信息记为低位点;

2)SourceReader将自身区间内的数据查询出来并放置在buffer中;

3)查询完成之后记录当前的Binlog位置信息记为高位点;

4)在增量部分消费从低位点到高位点的Binlog;

5)根据主键,对buffer中的数据进行修正并输出。

通过以上5个阶段可以保证每个Chunk最终的输出就是在高位点时该Chunk中最新的数据,但是目前只是做到了保证单个Chunk中的数据一致性。

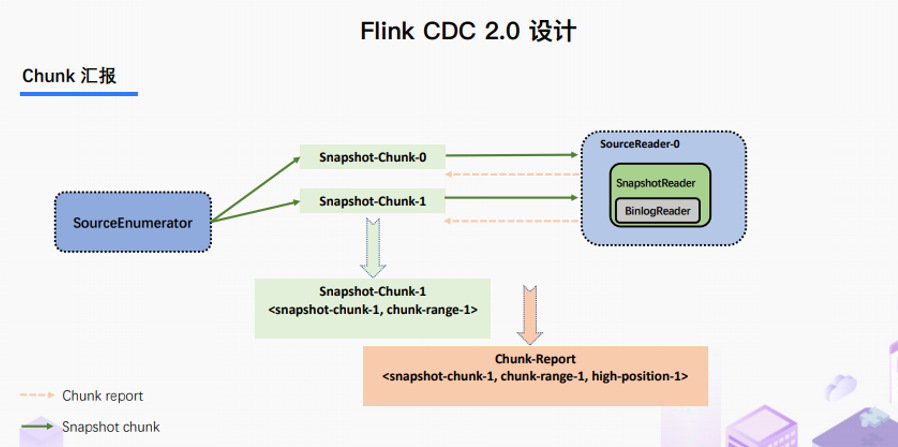

④Chunk汇报

在Snapshot Chunk读取完成之后,有一个汇报的流程,如上图所示,即SourceReader需要将Snapshot Chunk完成信息汇报给SourceEnumerator。

⑤ Chunk分配

FlinkCDC是支持全量+增量数据同步的,在SourceEnumerator接收到所有的Snapshot Chunk完成信息之后,还有一个消费增量数据(Binlog)的任务,此时是通过下发Binlog Chunk给任意一个SourceReader进

行单并发读取来实现的。

核心原理分析

Binlog Chunk中开始读取位置源码

MySqlHybridSplitAssigner

private MySqlBinlogSplit createBinlogSplit() { final List<MySqlSnapshotSplit> assignedSnapshotSplit = snapshotSplitAssigner.getAssignedSplits().values().stream() .sorted(Comparator.comparing(MySqlSplit::splitId)) .collect(Collectors.toList()); Map<String, BinlogOffset> splitFinishedOffsets = snapshotSplitAssigner.getSplitFinishedOffsets(); final List<FinishedSnapshotSplitInfo> finishedSnapshotSplitInfos = new ArrayList<>(); final Map<TableId, TableChanges.TableChange> tableSchemas = new HashMap<>(); BinlogOffset minBinlogOffset = BinlogOffset.INITIAL_OFFSET; for (MySqlSnapshotSplit split : assignedSnapshotSplit) { // find the min binlog offset BinlogOffset binlogOffset = splitFinishedOffsets.get(split.splitId()); if (binlogOffset.compareTo(minBinlogOffset) < 0) { minBinlogOffset = binlogOffset; } finishedSnapshotSplitInfos.add( new FinishedSnapshotSplitInfo( split.getTableId(), split.splitId(), split.getSplitStart(), split.getSplitEnd(), binlogOffset)); tableSchemas.putAll(split.getTableSchemas()); } final MySqlSnapshotSplit lastSnapshotSplit = assignedSnapshotSplit.get(assignedSnapshotSplit.size() - 1).asSnapshotSplit(); return new MySqlBinlogSplit( BINLOG_SPLIT_ID, lastSnapshotSplit.getSplitKeyType(), minBinlogOffset, BinlogOffset.NO_STOPPING_OFFSET, finishedSnapshotSplitInfos, tableSchemas); }

读取低位点到高位点之间的Binlog

BinlogSplitReader

/** * Returns the record should emit or not. * * <p>The watermark signal algorithm is the binlog split reader only sends the binlog event that * belongs to its finished snapshot splits. For each snapshot split, the binlog event is valid * since the offset is after its high watermark. * * <pre> E.g: the data input is : * snapshot-split-0 info : [0, 1024) highWatermark0 * snapshot-split-1 info : [1024, 2048) highWatermark1 * the data output is: * only the binlog event belong to [0, 1024) and offset is after highWatermark0 should send, * only the binlog event belong to [1024, 2048) and offset is after highWatermark1 should send. * </pre> */ private boolean shouldEmit(SourceRecord sourceRecord) { if (isDataChangeRecord(sourceRecord)) { TableId tableId = getTableId(sourceRecord); BinlogOffset position = getBinlogPosition(sourceRecord); // aligned, all snapshot splits of the table has reached max highWatermark if (position.isAtOrBefore(maxSplitHighWatermarkMap.get(tableId))) { return true; } Object[] key = getSplitKey( currentBinlogSplit.getSplitKeyType(), sourceRecord, statefulTaskContext.getSchemaNameAdjuster()); for (FinishedSnapshotSplitInfo splitInfo : finishedSplitsInfo.get(tableId)) { if (RecordUtils.splitKeyRangeContains( key, splitInfo.getSplitStart(), splitInfo.getSplitEnd()) && position.isAtOrBefore(splitInfo.getHighWatermark())) { return true; } } // not in the monitored splits scope, do not emit return false; } // always send the schema change event and signal event // we need record them to state of Flink return true; }

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· AI与.NET技术实操系列:向量存储与相似性搜索在 .NET 中的实现

· 基于Microsoft.Extensions.AI核心库实现RAG应用

· Linux系列:如何用heaptrack跟踪.NET程序的非托管内存泄露

· 开发者必知的日志记录最佳实践

· SQL Server 2025 AI相关能力初探

· winform 绘制太阳,地球,月球 运作规律

· 震惊!C++程序真的从main开始吗?99%的程序员都答错了

· 【硬核科普】Trae如何「偷看」你的代码?零基础破解AI编程运行原理

· AI与.NET技术实操系列(五):向量存储与相似性搜索在 .NET 中的实现

· 超详细:普通电脑也行Windows部署deepseek R1训练数据并当服务器共享给他人

2020-07-26 数据结构-07| 堆

2020-07-26 数据结构-06| 字典树Trie| 并查集Disjoint Set