Flink project setup in IntelliJ IDEA: a practical walkthrough

Reference article: https://www.cnblogs.com/wyh-study/p/12901186.html

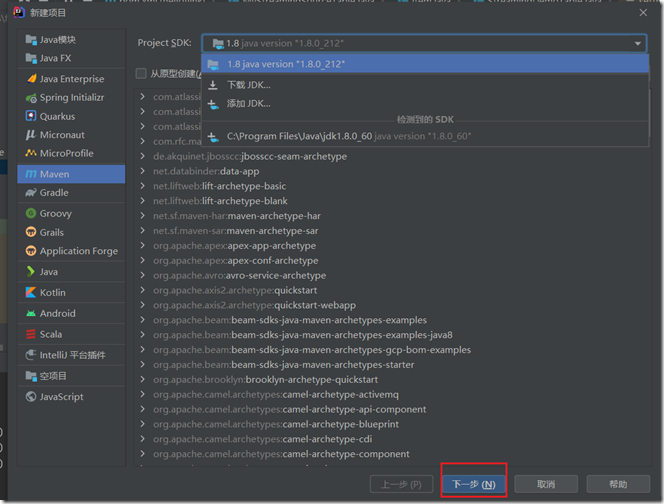

Create a new Flink project

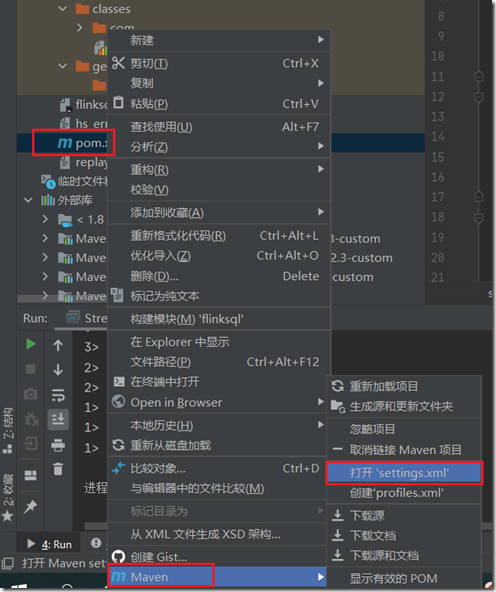

Set the Maven mirror: right-click pom.xml -> Maven -> settings.xml

Configure settings.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"
              xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
              xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">
      <mirrors>
        <mirror>
          <id>ali maven</id>
          <name>aliyun maven</name>
          <url>https://maven.aliyun.com/repository/public/</url>
          <mirrorOf>central</mirrorOf>
        </mirror>
        <mirror>
          <id>ui</id>
          <mirrorOf>central</mirrorOf>
          <name>Human Readable Name for this Mirror.</name>
          <url>http://uk.maven.org/maven2/</url>
        </mirror>
        <mirror>
          <id>ibiblio</id>
          <mirrorOf>central</mirrorOf>
          <name>Human Readable Name for this Mirror.</name>
          <url>http://mirrors.ibiblio.org/pub/mirrors/maven2/</url>
        </mirror>
        <mirror>
          <id>jboss-public-repository-group</id>
          <mirrorOf>central</mirrorOf>
          <name>JBoss Public Repository Group</name>
          <url>http://repository.jboss.org/nexus/content/groups/public</url>
        </mirror>
        <!-- Put slower mirrors later in the list -->
        <mirror>
          <id>CN</id>
          <name>OSChina Central</name>
          <url>http://maven.oschina.net/content/groups/public/</url>
          <mirrorOf>central</mirrorOf>
        </mirror>
        <mirror>
          <id>net-cn</id>
          <mirrorOf>central</mirrorOf>
          <name>Human Readable Name for this Mirror.</name>
          <url>http://maven.net.cn/content/groups/public/</url>
        </mirror>
        <mirror>
          <id>JBossJBPM</id>
          <mirrorOf>central</mirrorOf>
          <name>JBossJBPM Repository</name>
          <url>https://repository.jboss.org/nexus/content/repositories/releases/</url>
        </mirror>
      </mirrors>
    </settings>
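Maven's mirror documentation states that a repository can have at most one mirror. When several `<mirror>` entries all declare `<mirrorOf>central</mirrorOf>`, the first one in the file should therefore be the one that is used, and the later entries are not tried as fallbacks. The sketch below illustrates that first-match rule in plain Java. It is not Maven's actual code: the `MirrorPick` class and its methods are made up for this example, and wildcard patterns in `mirrorOf` are ignored.

```java
import java.util.Arrays;
import java.util.List;

/** Illustration only: first-match mirror selection, ignoring wildcard patterns. */
class MirrorPick {

    /** Hypothetical stand-in for one <mirror> entry in settings.xml. */
    static final class Mirror {
        final String id;
        final String mirrorOf;

        Mirror(String id, String mirrorOf) {
            this.id = id;
            this.mirrorOf = mirrorOf;
        }
    }

    /** Returns the id of the first mirror declared for repoId, or null if none matches. */
    static String firstMatch(List<Mirror> mirrors, String repoId) {
        for (Mirror m : mirrors) {
            if (m.mirrorOf.equals(repoId)) {
                return m.id;
            }
        }
        return null;
    }

    /** The first three mirrors from the settings.xml, in declaration order. */
    static List<Mirror> sampleMirrors() {
        return Arrays.asList(
                new Mirror("ali maven", "central"),
                new Mirror("ui", "central"),
                new Mirror("ibiblio", "central"));
    }

    public static void main(String[] args) {
        // Prints the id of the mirror that would serve requests for "central".
        System.out.println(firstMatch(sampleMirrors(), "central"));
    }
}
```

If that reading is right, ordering the entries only decides which single mirror is used, so keeping just the fastest reachable one is probably enough.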

Configure pom.xml

    <!--
    Licensed to the Apache Software Foundation (ASF) under one
    or more contributor license agreements.  See the NOTICE file
    distributed with this work for additional information
    regarding copyright ownership.  The ASF licenses this file
    to you under the Apache License, Version 2.0 (the
    "License"); you may not use this file except in compliance
    with the License.  You may obtain a copy of the License at

      http://www.apache.org/licenses/LICENSE-2.0

    Unless required by applicable law or agreed to in writing,
    software distributed under the License is distributed on an
    "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
    KIND, either express or implied.  See the License for the
    specific language governing permissions and limitations
    under the License.
    -->
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>

      <groupId>org.example</groupId>
      <artifactId>helloflink</artifactId>
      <version>1.0-SNAPSHOT</version>

      <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <flink.version>1.12.0</flink.version>
        <flink.table.version>2.12</flink.table.version>
        <java.version>1.8</java.version>
        <scala.version>2.12</scala.version>
        <scala.compat.version>2.12</scala.compat.version>
        <scala.binary.version>2.12</scala.binary.version>
        <maven.compiler.source>${java.version}</maven.compiler.source>
        <maven.compiler.target>${java.version}</maven.compiler.target>
        <log4j.version>2.12.1</log4j.version>
      </properties>

      <repositories>
        <repository>
          <id>apache.snapshots</id>
          <name>Apache Development Snapshot Repository</name>
          <url>https://repository.apache.org/content/repositories/snapshots/</url>
          <releases>
            <enabled>false</enabled>
          </releases>
          <snapshots>
            <enabled>true</enabled>
          </snapshots>
        </repository>
      </repositories>

      <dependencies>
        <!-- Apache Flink dependencies -->
        <!-- These dependencies are provided, because they should not be packaged into the JAR file. -->
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-java</artifactId>
          <version>${flink.version}</version>
          <!--<scope>provided</scope>-->
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-streaming-java_${scala.binary.version}</artifactId>
          <version>${flink.version}</version>
          <!--<scope>provided</scope>-->
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-streaming-scala_${scala.binary.version}</artifactId>
          <version>${flink.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-clients_${scala.binary.version}</artifactId>
          <version>${flink.version}</version>
          <!--<scope>provided</scope>-->
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-table-api-java-bridge_${flink.table.version}</artifactId>
          <version>${flink.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-table-planner-blink_${flink.table.version}</artifactId>
          <version>${flink.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-table-planner_${flink.table.version}</artifactId>
          <version>${flink.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-table-api-scala-bridge_${flink.table.version}</artifactId>
          <version>${flink.version}</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-table-common</artifactId>
          <version>${flink.version}</version>
        </dependency>
        <!-- NOTE: system scope with a machine-specific path; adjust or remove it on other machines. -->
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-table_2.12</artifactId>
          <version>1.8.0</version>
          <scope>system</scope>
          <systemPath>C:\Program Files\flink-1.8.1\opt\flink-table_2.12-1.8.1.jar</systemPath>
        </dependency>

        <!-- Add connector dependencies here. They must be in the default scope (compile). -->
        <!-- Example:
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-connector-kafka_${scala.binary.version}</artifactId>
          <version>${flink.version}</version>
        </dependency>
        -->

        <!-- Add logging framework, to produce console output when running in the IDE. -->
        <!-- These dependencies are excluded from the application JAR by default. -->
        <dependency>
          <groupId>org.apache.logging.log4j</groupId>
          <artifactId>log4j-slf4j-impl</artifactId>
          <version>${log4j.version}</version>
          <scope>runtime</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.logging.log4j</groupId>
          <artifactId>log4j-api</artifactId>
          <version>${log4j.version}</version>
          <scope>runtime</scope>
        </dependency>
        <dependency>
          <groupId>org.apache.logging.log4j</groupId>
          <artifactId>log4j-core</artifactId>
          <version>${log4j.version}</version>
          <scope>runtime</scope>
        </dependency>

        <!-- NOTE: the entries below repeat artifacts already declared above, with different
             SNAPSHOT versions and Scala suffixes. They look like leftovers from IDE quick-fixes;
             Maven warns about duplicate declarations, so they are probably best removed. -->
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-streaming-java_2.12</artifactId>
          <version>1.12-SNAPSHOT</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-table-api-java-bridge_2.11</artifactId>
          <version>1.13-SNAPSHOT</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-table-api-java-bridge_2.11</artifactId>
          <version>1.10-SNAPSHOT</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-table-uber-blink_2.11</artifactId>
          <version>1.13-SNAPSHOT</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-streaming-java_2.10</artifactId>
          <version>1.1-SNAPSHOT</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-streaming-java_2.10</artifactId>
          <version>1.2-SNAPSHOT</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-streaming-java_2.10</artifactId>
          <version>1.3-SNAPSHOT</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-streaming-java_2.10</artifactId>
          <version>1.4-SNAPSHOT</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-streaming-java_2.11</artifactId>
          <version>1.4-SNAPSHOT</version>
        </dependency>
      </dependencies>

      <build>
        <plugins>
          <!-- Java Compiler -->
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>3.1</version>
            <configuration>
              <source>${java.version}</source>
              <target>${java.version}</target>
            </configuration>
          </plugin>

          <!-- We use the maven-shade plugin to create a fat jar that contains all necessary dependencies. -->
          <!-- Change the value of <mainClass>...</mainClass> if your program entry point changes. -->
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-shade-plugin</artifactId>
            <version>3.1.1</version>
            <executions>
              <!-- Run shade goal on package phase -->
              <execution>
                <phase>package</phase>
                <goals>
                  <goal>shade</goal>
                </goals>
                <configuration>
                  <artifactSet>
                    <excludes>
                      <exclude>org.apache.flink:force-shading</exclude>
                      <exclude>com.google.code.findbugs:jsr305</exclude>
                      <exclude>org.slf4j:*</exclude>
                      <exclude>org.apache.logging.log4j:*</exclude>
                    </excludes>
                  </artifactSet>
                  <filters>
                    <filter>
                      <!-- Do not copy the signatures in the META-INF folder.
                           Otherwise, this might cause SecurityExceptions when using the JAR. -->
                      <artifact>*:*</artifact>
                      <excludes>
                        <exclude>META-INF/*.SF</exclude>
                        <exclude>META-INF/*.DSA</exclude>
                        <exclude>META-INF/*.RSA</exclude>
                      </excludes>
                    </filter>
                  </filters>
                  <transformers>
                    <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                      <mainClass>org.example.StreamingJob</mainClass>
                    </transformer>
                  </transformers>
                </configuration>
              </execution>
            </executions>
          </plugin>
        </plugins>

        <pluginManagement>
          <plugins>
            <!-- This improves the out-of-the-box experience in Eclipse by resolving some warnings. -->
            <plugin>
              <groupId>org.eclipse.m2e</groupId>
              <artifactId>lifecycle-mapping</artifactId>
              <version>1.0.0</version>
              <configuration>
                <lifecycleMappingMetadata>
                  <pluginExecutions>
                    <pluginExecution>
                      <pluginExecutionFilter>
                        <groupId>org.apache.maven.plugins</groupId>
                        <artifactId>maven-shade-plugin</artifactId>
                        <versionRange>[3.1.1,)</versionRange>
                        <goals>
                          <goal>shade</goal>
                        </goals>
                      </pluginExecutionFilter>
                      <action>
                        <ignore/>
                      </action>
                    </pluginExecution>
                    <pluginExecution>
                      <pluginExecutionFilter>
                        <groupId>org.apache.maven.plugins</groupId>
                        <artifactId>maven-compiler-plugin</artifactId>
                        <versionRange>[3.1,)</versionRange>
                        <goals>
                          <goal>testCompile</goal>
                          <goal>compile</goal>
                        </goals>
                      </pluginExecutionFilter>
                      <action>
                        <ignore/>
                      </action>
                    </pluginExecution>
                  </pluginExecutions>
                </lifecycleMappingMetadata>
              </configuration>
            </plugin>
          </plugins>
        </pluginManagement>
      </build>
    </project>

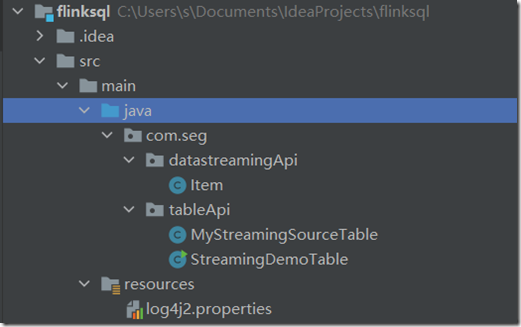

Create a new package

Item.java

    package com.seg.datastreamingApi;

    public class Item {

        public String name;
        public Integer id;

        public Item() {
        }

        public String getName() {
            return name;
        }

        public void setName(String name) {
            this.name = name;
        }

        public Integer getId() {
            return id;
        }

        public void setId(Integer id) {
            this.id = id;
        }

        @Override
        public String toString() {
            return "Item{" +
                    "name='" + name + '\'' +
                    ", id=" + id +
                    '}';
        }
    }

MyStreamingSourceTable.java

    package com.seg.tableApi;

    import org.apache.flink.streaming.api.functions.source.SourceFunction;
    import com.seg.datastreamingApi.Item;

    import java.util.ArrayList;
    import java.util.Random;

    class MyStreamingSourceTable implements SourceFunction<Item> {

        // volatile: cancel() is called from a different thread than run()
        private volatile boolean isRunning = true;

        /**
         * Overrides run() to act as an unbounded source that keeps emitting items.
         *
         * @param ctx source context used to emit records
         * @throws Exception if the thread is interrupted while sleeping
         */
        @Override
        public void run(SourceContext<Item> ctx) throws Exception {
            while (isRunning) {
                Item item = generateItem();
                ctx.collect(item);
                // emit one record per second
                Thread.sleep(1000);
            }
        }

        @Override
        public void cancel() {
            isRunning = false;
        }

        // Generates one random product record.
        private Item generateItem() {
            int i = new Random().nextInt(1000);
            ArrayList<String> list = new ArrayList<>();
            list.add("HAT");
            list.add("TIE");
            list.add("SHOE");
            Item item = new Item();
            item.setName(list.get(new Random().nextInt(3)));
            item.setId(i);
            return item;
        }
    }
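The `isRunning` flag in `MyStreamingSourceTable` is a cooperative cancel pattern: `run()` loops while the flag is set, and `cancel()`, called from another thread, clears it. The following is a minimal plain-Java sketch of that pattern with no Flink dependency. The `CancellableLoop` class and its `runAndCancel` helper are hypothetical names used only for this illustration.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Plain-Java stand-in for a source loop that is stopped through a shared flag. */
class CancellableLoop implements Runnable {

    // volatile so that the write in cancel() becomes visible to the thread executing run()
    private volatile boolean running = true;
    private final AtomicInteger emitted = new AtomicInteger();

    @Override
    public void run() {
        while (running) {
            emitted.incrementAndGet(); // stands in for ctx.collect(item)
            try {
                Thread.sleep(10);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
        }
    }

    void cancel() {
        running = false;
    }

    int emittedCount() {
        return emitted.get();
    }

    /** Runs the loop for roughly the given time, cancels it, and reports whether it stopped. */
    static boolean runAndCancel(long millis) {
        CancellableLoop loop = new CancellableLoop();
        Thread worker = new Thread(loop);
        worker.start();
        try {
            Thread.sleep(millis);
            loop.cancel();
            worker.join(2000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !worker.isAlive() && loop.emittedCount() > 0;
    }

    public static void main(String[] args) {
        System.out.println("stopped after cancel: " + runAndCancel(200));
    }
}
```

Without `volatile`, the looping thread is not guaranteed to observe the update, which is why the flag is usually declared that way.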

StreamingDemoTable.java

    package com.seg.tableApi;

    import org.apache.flink.api.common.functions.MapFunction;
    import org.apache.flink.api.common.typeinfo.TypeHint;
    import org.apache.flink.api.common.typeinfo.TypeInformation;
    import org.apache.flink.api.java.tuple.Tuple4;
    import org.apache.flink.streaming.api.datastream.DataStream;
    import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.table.api.EnvironmentSettings;
    import org.apache.flink.table.api.Table;
    import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;
    import com.seg.datastreamingApi.Item;

    public class StreamingDemoTable {

        public static void main(String[] args) throws Exception {
            // The Blink planner adds some SQL optimizations, such as deduplication and Top-N
            EnvironmentSettings bsSettings = EnvironmentSettings.newInstance()
                    .useBlinkPlanner()
                    .inStreamingMode()
                    .build();
            // Stream execution environment
            StreamExecutionEnvironment bsEnv = StreamExecutionEnvironment.getExecutionEnvironment();
            // Table/SQL environment on top of the stream environment
            StreamTableEnvironment bsTableEnv = StreamTableEnvironment.create(bsEnv, bsSettings);

            SingleOutputStreamOperator<Item> source = bsEnv
                    .addSource(new MyStreamingSourceTable())
                    .map(new MapFunction<Item, Item>() {
                        @Override
                        public Item map(Item item) throws Exception {
                            return item;
                        }
                    });

            // Split the source into two streams: even ids and odd ids
            DataStream<Item> evenSelect = source.filter((x -> x.getId() % 2 == 0));
            DataStream<Item> oddSelect = source.filter((x -> x.getId() % 2 != 0));

            // Register both streams as temporary views in the Flink table environment
            bsTableEnv.createTemporaryView("evenTable", evenSelect, "name,id");
            bsTableEnv.createTemporaryView("oddTable", oddSelect, "name,id");

            Table queryTable = bsTableEnv.sqlQuery(
                    "select a.id,a.name,b.id,b.name from evenTable as a join oddTable as b on a.name=b.name");
            queryTable.printSchema();

            bsTableEnv.toRetractStream(queryTable,
                    TypeInformation.of(new TypeHint<Tuple4<Integer, String, Integer, String>>() {})).print();

            bsEnv.execute("streaming sql job");
        }
    }
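To see what the SQL join in `StreamingDemoTable` computes, it can help to run the same logic over a small fixed list. The sketch below uses plain Java and no Flink. It pairs every even-id item with every odd-id item of the same name, which is the result the `evenTable`/`oddTable` join converges to for a bounded input. The `JoinSketch` and `Row` names and the sample data are assumptions made for this illustration, and the real job produces its results incrementally as a retract stream rather than in one pass.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Illustration only: the even/odd join on name, evaluated over a fixed list. */
class JoinSketch {

    /** Minimal stand-in for the Item POJO. */
    static final class Row {
        final String name;
        final int id;

        Row(String name, int id) {
            this.name = name;
            this.id = id;
        }
    }

    /** Returns one "evenId,name,oddId,name" line per matching pair. */
    static List<String> joinEvenOdd(List<Row> rows) {
        List<Row> even = new ArrayList<>();
        List<Row> odd = new ArrayList<>();
        for (Row r : rows) {
            if (r.id % 2 == 0) {
                even.add(r);
            } else {
                odd.add(r);
            }
        }
        List<String> out = new ArrayList<>();
        for (Row a : even) {
            for (Row b : odd) {
                if (a.name.equals(b.name)) {
                    out.add(a.id + "," + a.name + "," + b.id + "," + b.name);
                }
            }
        }
        return out;
    }

    /** Made-up sample input: three HAT items and one TIE item. */
    static List<Row> sampleRows() {
        return Arrays.asList(
                new Row("HAT", 2), new Row("HAT", 7), new Row("TIE", 4), new Row("HAT", 9));
    }

    public static void main(String[] args) {
        for (String line : joinEvenOdd(sampleRows())) {
            System.out.println(line);
        }
    }
}
```

With this sample input the even `HAT` item (id 2) matches both odd `HAT` items (ids 7 and 9), while the `TIE` item has no odd partner and produces no output row.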

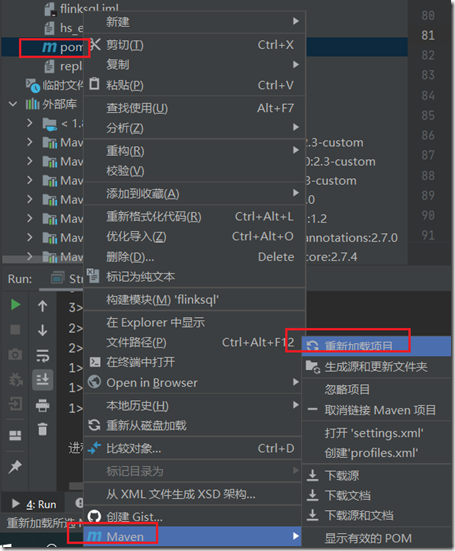

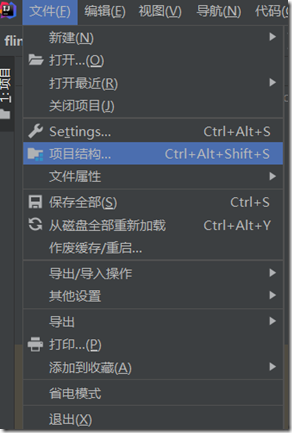

Reload the pom file so that Maven resolves the dependencies:

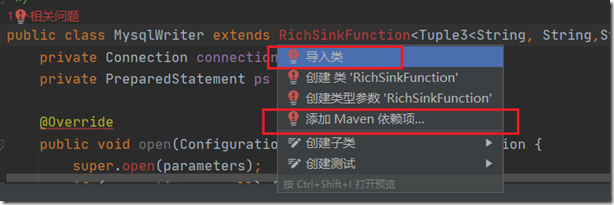

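If the code shows unresolved dependencies in red, choose the Maven option in the quick-fix menu to add the dependency to the pom file automatically:

If an "import class" option is offered, the dependency is already available, so prefer importing the class.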

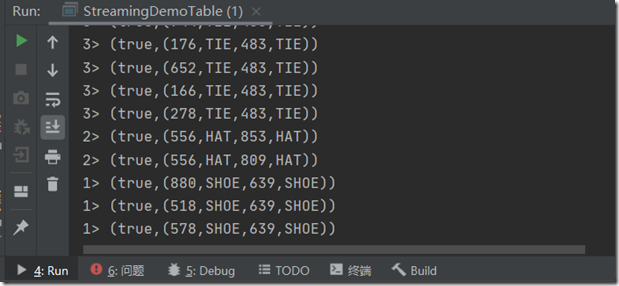

Screenshot of a run (Shift+F10):

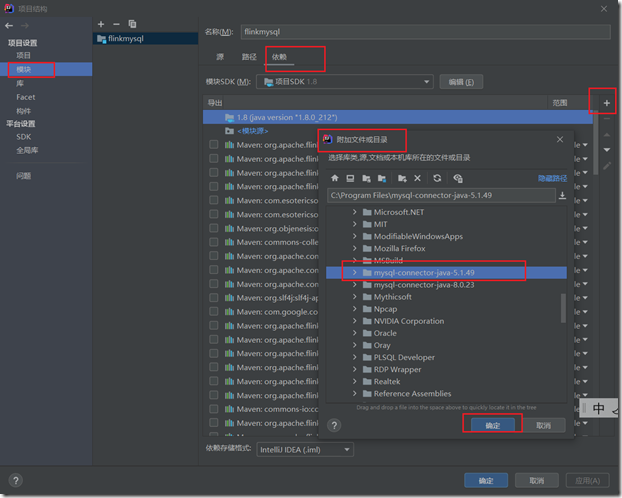

Example 2: Flink with MySQL

Reference article: https://blog.csdn.net/allensandy/article/details/106668432

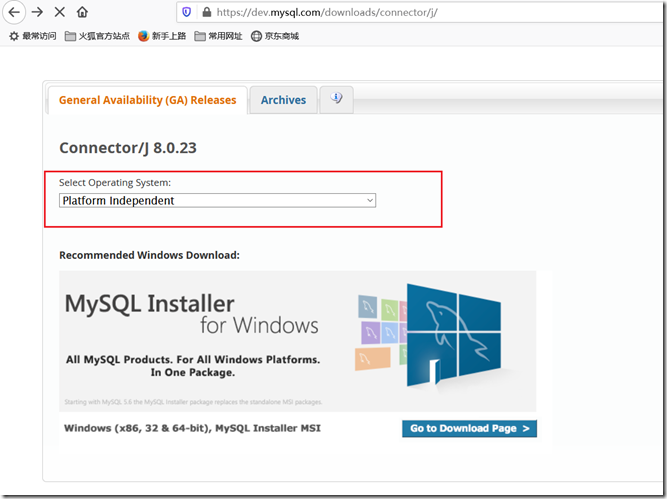

First, download the MySQL connector (Connector/J).

Which release to use depends on your MySQL Server and JDK versions; see https://blog.csdn.net/dylgs314118/article/details/102677942

| Connector/J version | Driver Type | JDBC version | MySQL Server version | Status |
| --- | --- | --- | --- | --- |
| 5.1 | 4 | 3.0, 4.0, 4.1, 4.2 | 4.1, 5.0, 5.1, 5.5, 5.6, 5.7 | Recommended version |
| 5.0 | 4 | 3.0 | 4.1, 5.0 | Released version |
| 3.1 | 4 | 3.0 | 4.1, 5.0 | Obsolete |
| 3.0 | 4 | 3.0 | 3.x, 4.1 | Obsolete |

| Connector/J version | JDBC version | MySQL Server version | JRE Supported | JDK Required for Compilation | Status |
| --- | --- | --- | --- | --- | --- |
| 6.0 | 4.2 | 5.5, 5.6, 5.7 | 1.8.x | 1.8.x | Developer Milestone |
| 5.1 | 3.0, 4.0, 4.1, 4.2 | 4.1, 5.0, 5.1, 5.5, 5.6*, 5.7* | 1.5.x, 1.6.x, 1.7.x, 1.8.x* | 1.5.x and 1.8.x | Recommended version |
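The Connector/J version also affects the driver class name passed to `Class.forName`. The 5.1 line uses `com.mysql.jdbc.Driver`, while newer lines (8.0, and to the best of my knowledge 6.0 as well) use `com.mysql.cj.jdbc.Driver`. The sketch below picks the name from a version string. `DriverNames` is a hypothetical helper, and the cut-over at major version 6 is an assumption to check against the release notes of the connector you download.

```java
/** Illustration only: choose the JDBC driver class name from a Connector/J version string. */
class DriverNames {

    static final String LEGACY_DRIVER = "com.mysql.jdbc.Driver";
    static final String CJ_DRIVER = "com.mysql.cj.jdbc.Driver";

    /** Expects a version such as "5.1.49" or "8.0.33"; only the major number is inspected. */
    static String driverClassFor(String connectorVersion) {
        String majorPart = connectorVersion.trim().split("\\.")[0];
        int major = Integer.parseInt(majorPart);
        // Assumption: the "cj" package name applies from the 6.0 line onward.
        return major >= 6 ? CJ_DRIVER : LEGACY_DRIVER;
    }

    public static void main(String[] args) {
        System.out.println("5.1.49 -> " + driverClassFor("5.1.49"));
        System.out.println("8.0.33 -> " + driverClassFor("8.0.33"));
    }
}
```

The code in this example loads `com.mysql.jdbc.Driver`, which matches a 5.1 connector.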

MysqlReader.java

    package com.seg.flink.db;

    import org.apache.flink.api.java.tuple.Tuple3;
    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.streaming.api.functions.source.RichSourceFunction;

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.PreparedStatement;
    import java.sql.ResultSet;

    public class MysqlReader extends RichSourceFunction<Tuple3<String, String, String>> {

        private Connection connection = null;
        private PreparedStatement ps = null;
        // volatile: cancel() is called from a different thread than run()
        private volatile boolean isRunning = true;

        // Opens the database connection and prepares the query.
        @Override
        public void open(Configuration parameters) throws Exception {
            super.open(parameters);
            Class.forName("com.mysql.jdbc.Driver"); // load the JDBC driver
            connection = DriverManager.getConnection(
                    "jdbc:mysql://10.0.144.186:3306/instrumentdb", "root", "123"); // open the connection
            ps = connection.prepareStatement("select id,tm,answer,analysis from lfdt");
        }

        @Override
        public void run(SourceContext<Tuple3<String, String, String>> sourceContext) throws Exception {
            try (ResultSet resultSet = ps.executeQuery()) {
                while (isRunning && resultSet.next()) {
                    Tuple3<String, String, String> tuple = new Tuple3<>();
                    // Read by column name so the tuple lines up with the
                    // (tm, answer, analysis) columns that MysqlWriter inserts;
                    // reading positions 1..3 would emit (id, tm, answer) instead.
                    tuple.setFields(
                            resultSet.getString("tm"),
                            resultSet.getString("answer"),
                            resultSet.getString("analysis"));
                    sourceContext.collect(tuple);
                }
            }
        }

        @Override
        public void cancel() {
            isRunning = false;
        }

        // Releases JDBC resources: statement first, then connection.
        @Override
        public void close() throws Exception {
            if (ps != null) {
                ps.close();
            }
            if (connection != null) {
                connection.close();
            }
            super.close();
        }
    }
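`MysqlWriter` binds the tuple fields `f0`, `f1`, `f2` positionally to the columns `(tm, answer, analysis)`, so it matters which three columns the reader puts into the tuple. The query selects four columns starting with `id`, and taking the first three by position therefore shifts every value one column to the left. The sketch below shows the difference on one in-memory row. `ColumnMapping` and its sample values are made up for this illustration, and a `LinkedHashMap` stands in for a JDBC `ResultSet`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Illustration only: positional versus by-name column mapping for one row. */
class ColumnMapping {

    /** Takes the first three selected columns, in SELECT order. */
    static List<String> firstThree(Map<String, String> row) {
        return new ArrayList<>(new ArrayList<>(row.values()).subList(0, 3));
    }

    /** Looks the three target columns up by name, independent of SELECT order. */
    static List<String> byName(Map<String, String> row) {
        return Arrays.asList(row.get("tm"), row.get("answer"), row.get("analysis"));
    }

    /** One row as returned by "select id,tm,answer,analysis", with placeholder values. */
    static Map<String, String> sampleRow() {
        Map<String, String> row = new LinkedHashMap<>();
        row.put("id", "41");
        row.put("tm", "question text");
        row.put("answer", "B");
        row.put("analysis", "explanation");
        return row;
    }

    public static void main(String[] args) {
        System.out.println("positional: " + firstThree(sampleRow()));
        System.out.println("by name:    " + byName(sampleRow()));
    }
}
```

The positional variant would write the `id` into `tm` and the question into `answer`, so reading by name (or selecting only the three needed columns) keeps reader and writer aligned.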

MysqlWriter.java

    package com.seg.flink.db;

    import org.apache.flink.api.java.tuple.Tuple3;
    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.PreparedStatement;

    /**
     * @author steven
     */
    public class MysqlWriter extends RichSinkFunction<Tuple3<String, String, String>> {

        private Connection connection = null;
        private PreparedStatement ps = null;

        // Opens the database connection and prepares the insert statement.
        @Override
        public void open(Configuration parameters) throws Exception {
            super.open(parameters);
            if (connection == null) {
                Class.forName("com.mysql.jdbc.Driver"); // load the JDBC driver
                connection = DriverManager.getConnection(
                        "jdbc:mysql://10.0.144.186:3306/instrumentdb", "root", "123"); // open the connection
            }
            ps = connection.prepareStatement("insert into lfdt(tm,answer,analysis) values (?,?,?)");
        }

        @Override
        public void invoke(Tuple3<String, String, String> value, Context context) throws Exception {
            // Write each record emitted by MysqlReader
            try {
                ps.setString(1, value.f0);
                ps.setString(2, value.f1);
                ps.setString(3, value.f2);
                ps.executeUpdate();
            } catch (Exception e) {
                e.printStackTrace();
            }
        }

        // Releases JDBC resources: statement first, then connection.
        @Override
        public void close() throws Exception {
            if (ps != null) {
                ps.close();
            }
            if (connection != null) {
                connection.close();
            }
            super.close();
        }
    }

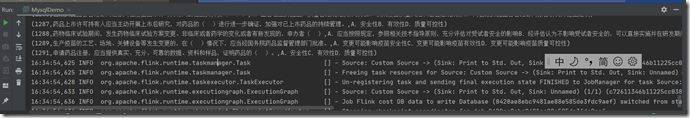

MysqlDemo.java

    package com.seg.flink.db;

    import org.apache.flink.api.java.tuple.Tuple3;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

    public class MysqlDemo {

        public static void main(String[] args) throws Exception {
            // Alternative: submit to a remote cluster instead of running locally
            // final StreamExecutionEnvironment env = StreamExecutionEnvironment.createRemoteEnvironment("node01", 8032); //, "D:\\flink-steven\\target\\flink-0.0.1-SNAPSHOT.jar");
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
            env.setParallelism(1);

            DataStreamSource<Tuple3<String, String, String>> dataStream = env.addSource(new MysqlReader());
            dataStream.print();
            dataStream.addSink(new MysqlWriter());

            env.execute("Flink cost DB data to write Database");
        }
    }

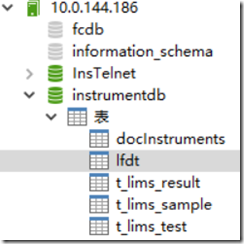

MySQL table structure

    DROP TABLE IF EXISTS `lfdt`;

    CREATE TABLE `lfdt` (
      `id` int(11) NOT NULL AUTO_INCREMENT,
      `tm` varchar(1000) NOT NULL,
      `answer` varchar(500) NOT NULL,
      `analysis` varchar(1000) NOT NULL,
      PRIMARY KEY (`id`)
    ) ENGINE=MyISAM AUTO_INCREMENT=2543 DEFAULT CHARSET=utf8;

    INSERT INTO `lfdt` VALUES (41, '《疫苗管理法》规定,应对重大突发公共卫生事件急需的疫苗或者国务院卫生健康主管部门认定急需的其他疫苗经,评估( ),国务院药品监督管理部门可以附条件批准疫苗注册申请。', 'B.获益大于风险的', '出题依据:《疫苗管理法》第二十条第一款 应对重大突发公共卫生事件急需的疫苗或者国务院卫生健康主管部门认定急需的其他疫苗,经评估获益大于风险的,国务院药品监督管理部门可以附条件批准疫苗注册申请。');

    INSERT INTO `lfdt` VALUES (42, '药品生产企业应当建立药品出厂放行规程,药品符合标准、条件的,经( )签字后方可出厂放行。', 'C.质量受权人', '出题依据:《药品生产监督管理办法》第三十七条第一款 药品生产企业应当建立药品出厂放行规程,明确出厂放行的标准、条件,并对药品质量检验结果、关键生产记录和偏差控制情况进行审核,对药品进行质量检验。符合标准、条件的,经质量受权人签字后方可出厂放行。');

Screenshot of a run: