[MapReduce_1] 运行 Word Count 示例程序

0. 说明

MapReduce 实现 Word Count 示意图 && Word Count 代码编写

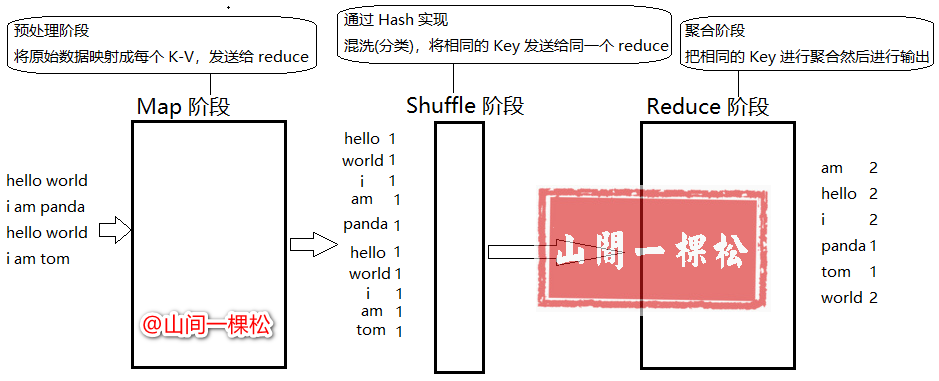

1. MapReduce 实现 Word Count 示意图

1. Map:预处理阶段,将原始数据映射成每个 K-V,发送给 reduce

2. Shuffle:混洗(分类),将相同的 Key发送给同一个 reduce

3. Reduce:聚合阶段,把相同的 Key 进行聚合然后进行输出

2. Word Count 代码编写

[2.1 WCMapper.java]

package hadoop.mr.wc; import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.LongWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Mapper; import java.io.IOException; /** * Mapper 程序 */ public class WCMapper extends Mapper<LongWritable, Text, Text, IntWritable> { /** * map 函数,被调用过程是通过 while 循环每行调用一次 */ @Override protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException { // 将 value 变为 String 格式 String line = value.toString(); // 将一行文本进行截串 String[] arr = line.split(" "); for (String word : arr) { context.write(new Text(word), new IntWritable(1)); } } }

[2.2 WCReducer.java]

package hadoop.mr.wc; import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Reducer; import java.io.IOException; /** * Reducer 类 */ public class WCReducer extends Reducer<Text, IntWritable, Text, IntWritable> { /** * 通过迭代所有的 key 进行聚合 */ @Override protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException { int sum = 0; for (IntWritable value : values) { sum += value.get(); } context.write(key,new IntWritable(sum)); } }

[2.3 WCApp.java]

package hadoop.mr.wc; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; /** * Word Count APP */ public class WCApp { public static void main(String[] args) throws Exception { // 初始化配置文件 Configuration conf = new Configuration(); // 仅在本地开发时使用 // conf.set("fs.defaultFS", "file:///"); // 通过配置文件初始化 job Job job = Job.getInstance(conf); // 设置 job 名称 job.setJobName("Word Count"); // job 入口函数类 job.setJarByClass(WCApp.class); // 设置 mapper 类 job.setMapperClass(WCMapper.class); // 设置 reducer 类 job.setReducerClass(WCReducer.class); // 设置 map 的输出 K-V 类型 job.setMapOutputKeyClass(Text.class); job.setMapOutputValueClass(IntWritable.class); // 设置 reduce 的输出 K-V 类型 job.setOutputKeyClass(Text.class); job.setOutputValueClass(IntWritable.class); // 设置输入路径和输出路径 // Path pin = new Path("E:/test/wc/1.txt"); // Path pout = new Path("E:/test/wc/out"); Path pin = new Path(args[0]); Path pout = new Path(args[1]); FileInputFormat.addInputPath(job, pin); FileOutputFormat.setOutputPath(job, pout); // 执行 job job.waitForCompletion(true); } }

且将新火试新茶,诗酒趁年华。

浙公网安备 33010602011771号

浙公网安备 33010602011771号