rdd简单操作

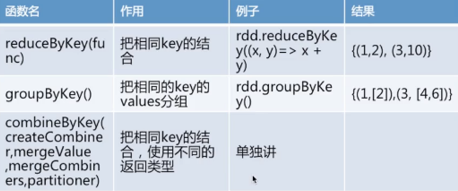

1.原始数据 Key value Transformations(example: ((1, 2), (3, 4), (3, 6)))

2. flatMap测试示例

object FlatMapTran {

//与map相似,区别是源rdd中的元素经map处理后只能生成一个元素,而原有的rdd中的元素经过flatmap处理后可以生成多个元素

def main(args: Array[String]) {

val spark = SparkSession.builder().appName("FlatMapTran").master("local[1]").getOrCreate()

val sc = spark.sparkContext;

val lines = sc.parallelize(Array("hi shao", "scala test", "good", "every"))

lines.foreach(println)

val line2 = lines.map(line => line.split(" "))

line2.foreach(println)

val line3 = lines.map(line => (line,1))

line3.foreach(println)

val line4=lines.flatMap(line => line.split(" "))

line4.foreach(println)

}

}

执行结果:

hi shao scala test good every [Ljava.lang.String;@129af42 [Ljava.lang.String;@1c9136 [Ljava.lang.String;@1927273 [Ljava.lang.String;@3b9611 (hi shao,1) (scala test,1) (good,1) (every,1) hi shao scala test good every

3.distinct、reducebykey、groupbykey

object RddDistinct {

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder().appName("FlatMapTran").master("local[1]").getOrCreate()

val sc = spark.sparkContext

//val datas=sc.parallelize(List(("g","23"),(1,"shao"),("haha","23"),("g","23")))

val datas=sc.parallelize(Array(("g","23"),(1,"shao"),("haha","23"),("g","23")))

datas.distinct().foreach(println(_))

/**结果:

* (haha,23)

(1,shao)

(g,23)

*/

datas.reduceByKey((x,y)=>x+y).foreach(println)

/**结果:

* (haha,23)

(1,shao)

(g,2323)

*/

datas.groupByKey().foreach(println(_))

/**结果:

* (haha,CompactBuffer(23))

(1,CompactBuffer(shao))

(g,CompactBuffer(23, 23))

*

*/

}

}

4.combineByKey(create Combiner, merge Value, merge Combiners, partitioner)

最常用的基于key的聚合函数,返回的类型可以与输入类型不一样许多基于key的聚合函数都用到了它,像 groupbykey0

遍历 partition中的元素,元素的key,要么之前见过的,要么不是。如果是新元素,使用我们提供的 createcombiner()函数如果是这个partition中已经存在的key,

就会使用 mergevalue()函数合计每个 partition的结果的时候,使用 merge Combiners()函数

object CombineByKeyTest { def main(args: Array[String]): Unit = { val spark = SparkSession.builder().appName("FlatMapTran").master("local[1]").getOrCreate() val sc = spark.sparkContext val scores=sc.parallelize(Array(("jack",99.0),("jack",80.0),("jack",85.0),("jack",89.0),("lily",95.0),("lily",87.0),("lily",87.0),("lily",77.0))) //combineByKey(create Combiner, mergevalue, merge Combiners, partitioner) //(创建合并器、合并值、合并合并合并器、分区器) val scores2=scores.combineByKey(score=>(1,score), (c1:(Int,Double),newScore)=>(c1._1+1,c1._2+newScore), (c1:(Int,Double),c2:(Int,Double))=>(c1._1+c2._1,c1._2+c2._2)) /** * 结果: * (lily,(4,346.0)) (jack,(4,353.0)) */ scores2.foreach(println(_)) scores2.map(score=>{ (score._1,score._2,score._2._2/score._2._1) }).foreach(println(_)) /** * 结果: * (lily,(4,346.0),86.5) (jack,(4,353.0),88.25) */ scores2.map{case (name,(num,totalScore))=>{ (name,num,totalScore,totalScore/num) }}.foreach(println(_)) /** * 结果: * (lily,4,346.0,86.5) (jack,4,353.0,88.25) */ } }