Kubernetes Component: Helm

1. Installing Helm

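Helm consists of the helm command-line client and the server-side component Tiller, and installing it is straightforward. Download the helm command-line tool to /usr/local/bin on master node node1 (it only needs to be installed on one of the master nodes):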

    wget https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz
    tar -zxvf helm-v2.11.0-linux-amd64.tar.gz
    cd linux-amd64/
    cp helm /usr/local/bin/
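The download steps above can be wrapped so that the version is chosen in one place. This is a minimal sketch and not part of the original notes: the function name `helm_tarball_url` is illustrative, and the URL pattern is generalized from the single v2.11.0 link above, so it is an assumption that other versions and platforms follow the same naming.

```shell
#!/bin/sh
# Build the download URL for a Helm v2 release tarball.
# Assumption: every release follows the naming of the v2.11.0 link above.
# $1: version (default v2.11.0), $2: OS (default linux), $3: arch (default amd64)
helm_tarball_url() {
    version="${1:-v2.11.0}"
    os="${2:-linux}"
    arch="${3:-amd64}"
    echo "https://storage.googleapis.com/kubernetes-helm/helm-${version}-${os}-${arch}.tar.gz"
}

# Usage (commented out so that sourcing this file downloads nothing):
# wget "$(helm_tarball_url v2.11.0)"
# tar -zxvf helm-v2.11.0-linux-amd64.tar.gz
# cp linux-amd64/helm /usr/local/bin/
```

Keeping the version as a parameter makes it easier to install a client that matches the Tiller version already running in the cluster.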

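Because the Kubernetes API server has RBAC enabled, Tiller needs its own service account, tiller, with a suitable role bound to it. See Role-based Access Control in the Helm documentation for details. For simplicity, this walkthrough binds the cluster's built-in ClusterRole cluster-admin to it. Create the file rbac-helm.yaml: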

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: tiller
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: tiller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
      - kind: ServiceAccount
        name: tiller
        namespace: kube-system

    [root@k8s-master ~]# kubectl apply -f rbac-helm.yaml
    serviceaccount/tiller created
    clusterrolebinding.rbac.authorization.k8s.io/tiller created

Next, deploy Tiller with helm:

    [root@k8s-master ~]# helm init --service-account tiller --skip-refresh
    Creating /root/.helm
    Creating /root/.helm/repository
    Creating /root/.helm/repository/cache
    Creating /root/.helm/repository/local
    Creating /root/.helm/plugins
    Creating /root/.helm/starters
    Creating /root/.helm/cache/archive
    Creating /root/.helm/repository/repositories.yaml
    Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com
    Adding local repo with URL: http://127.0.0.1:8879/charts
    $HELM_HOME has been configured at /root/.helm.
    Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.
    Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.
    To prevent this, run `helm init` with the --tiller-tls-verify flag.
    For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation
    Happy Helming!

By default, Tiller is deployed into the kube-system namespace of the cluster:

    [root@k8s-master ~]# kubectl get pod -n kube-system -l app=helm
    NAME                             READY   STATUS    RESTARTS   AGE
    tiller-deploy-6f6fd74b68-cvn8v   0/1     Running   0          36s

Note that this step needs network access to gcr.io and kubernetes-charts.storage.googleapis.com. If they are unreachable, point helm init at a Tiller image in a private registry instead:

    helm init --service-account tiller --tiller-image <your-docker-registry>/tiller:v2.11.0 --skip-refresh

2. Deploying Nginx Ingress with Helm

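The Nginx Ingress Controller is deployed on the Kubernetes edge nodes, that is, the nodes that have a public IP. To avoid a single point of failure, several edge nodes can be set up for high availability; for details see https://blog.frognew.com/2018/09/ingress-edge-node-ha-in-bare-metal-k8s.html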

My demo environment has only one machine, so a single edge node is used as the example.

k8s-master (192.168.100.3) doubles as the edge node here, so label it accordingly:

    [root@k8s-master ~]# kubectl label node k8s-master node-role.kubernetes.io/edge=
    node/k8s-master labeled
    [root@k8s-master ~]# kubectl get node
    NAME         STATUS   ROLES         AGE     VERSION
    k8s-master   Ready    edge,master   3d22h   v1.12.1

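The values file for the stable/nginx-ingress chart, ingress-nginx.yaml. The nodeSelector pins both pods to the edge node, and the toleration lets them run on the master despite its NoSchedule taint: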

    controller:
      service:
        externalIPs:
          - 192.168.100.3
      nodeSelector:
        node-role.kubernetes.io/edge: ''
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
    defaultBackend:
      nodeSelector:
        node-role.kubernetes.io/edge: ''
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule

    helm repo update
    helm install stable/nginx-ingress \
      -n nginx-ingress \
      --namespace ingress-nginx \
      -f ingress-nginx.yaml

    [root@k8s-master ~]# helm install stable/nginx-ingress \
    > -n nginx-ingress \
    > --namespace ingress-nginx \
    > -f ingress-nginx.yaml
    NAME: nginx-ingress
    LAST DEPLOYED: Mon Oct 29 13:45:01 2018
    NAMESPACE: ingress-nginx
    STATUS: DEPLOYED

    RESOURCES:
    ==> v1beta1/ClusterRoleBinding
    NAME           AGE
    nginx-ingress  1s

    ==> v1beta1/Role
    nginx-ingress  0s

    ==> v1/Service
    nginx-ingress-controller       0s
    nginx-ingress-default-backend  0s

    ==> v1/ServiceAccount
    nginx-ingress  1s

    ==> v1beta1/ClusterRole
    nginx-ingress  1s

    ==> v1beta1/RoleBinding
    nginx-ingress  0s

    ==> v1beta1/Deployment
    nginx-ingress-controller       0s
    nginx-ingress-default-backend  0s

    ==> v1/Pod(related)
    NAME                                            READY  STATUS             RESTARTS  AGE
    nginx-ingress-controller-8658f85fd-g5kbd        0/1    ContainerCreating  0         0s
    nginx-ingress-default-backend-684f76869d-rcjjv  0/1    ContainerCreating  0         0s

    ==> v1/ConfigMap
    NAME                      AGE
    nginx-ingress-controller  1s

    NOTES:
    The nginx-ingress controller has been installed.
    It may take a few minutes for the LoadBalancer IP to be available.
    You can watch the status by running 'kubectl --namespace ingress-nginx get services -o wide -w nginx-ingress-controller'

    An example Ingress that makes use of the controller:

      apiVersion: extensions/v1beta1
      kind: Ingress
      metadata:
        annotations:
          kubernetes.io/ingress.class: nginx
        name: example
        namespace: foo
      spec:
        rules:
          - host: www.example.com
            http:
              paths:
                - backend:
                    serviceName: exampleService
                    servicePort: 80
                  path: /
        # This section is only required if TLS is to be enabled for the Ingress
        tls:
          - hosts:
              - www.example.com
            secretName: example-tls

    If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

      apiVersion: v1
      kind: Secret
      metadata:
        name: example-tls
        namespace: foo
      data:
        tls.crt: <base64 encoded cert>
        tls.key: <base64 encoded key>
      type: kubernetes.io/tls

Check the status (pulling the images takes a while; use kubectl describe to see what a pod is waiting on):

    [root@k8s-master ~]# kubectl get pod -n ingress-nginx -o wide
    NAME                                             READY   STATUS              RESTARTS   AGE   IP            NODE         NOMINATED NODE
    nginx-ingress-controller-8658f85fd-g5kbd         0/1     ContainerCreating   0          31s   <none>        k8s-master   <none>
    nginx-ingress-default-backend-684f76869d-rcjjv   1/1     Running             0          31s   10.244.0.25   k8s-master   <none>

If a request to http://192.168.100.3 returns the default backend's response, the deployment is complete:

    [root@k8s-master ~]# curl http://192.168.100.3
    404 page not found

2.1 Adding the TLS Certificate to Kubernetes

Exposing an HTTPS service outside the cluster through Ingress requires an HTTPS certificate. Here the certificate and key for *.abc.com are added to Kubernetes.

The dashboard deployed later in the kube-system namespace uses this certificate, so the certificate secret is created in kube-system first.

Create a private demo certificate; I generated mine with a script:

    [root@k8s-master ~]# sh create_https_cert.sh
    Enter your domain [www.example.com]: k8s.abc.com
    Create server key...
    Generating RSA private key, 1024 bit long modulus
    ..............++++++
    ............................++++++
    e is 65537 (0x10001)
    Enter pass phrase for k8s.abc.com.key:
    Verifying - Enter pass phrase for k8s.abc.com.key:
    Create server certificate signing request...
    Enter pass phrase for k8s.abc.com.key:
    Remove password...
    Enter pass phrase for k8s.abc.com.origin.key:
    writing RSA key
    Sign SSL certificate...
    Signature ok
    subject=/C=US/ST=Mars/L=iTranswarp/O=iTranswarp/OU=iTranswarp/CN=k8s.abc.com
    Getting Private key
    TODO:
    Copy k8s.abc.com.crt to /etc/nginx/ssl/k8s.abc.com.crt
    Copy k8s.abc.com.key to /etc/nginx/ssl/k8s.abc.com.key
    Add configuration in nginx:
    server {
        ...
        listen 443 ssl;
        ssl_certificate     /etc/nginx/ssl/k8s.abc.com.crt;
        ssl_certificate_key /etc/nginx/ssl/k8s.abc.com.key;
    }
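The contents of create_https_cert.sh are not shown in these notes. As a rough stand-in, a self-signed demo certificate with the same file names could be produced with openssl directly. This is a sketch under assumptions, not the script used above: the function name `make_self_signed_cert` is hypothetical, the subject contains only the CN, and it deliberately uses a 2048-bit key with no passphrase rather than the 1024-bit key seen in the transcript.

```shell
#!/bin/sh
# Create an unencrypted 2048-bit RSA key and a self-signed certificate.
# $1: domain, used as the certificate CN and as the file name prefix
# $2: output directory (default: current directory)
# $3: validity in days (default: 365)
make_self_signed_cert() {
    domain="$1"
    out_dir="${2:-.}"
    days="${3:-365}"
    openssl req -x509 -newkey rsa:2048 -nodes \
        -keyout "${out_dir}/${domain}.key" \
        -out "${out_dir}/${domain}.crt" \
        -days "${days}" \
        -subj "/CN=${domain}"
}

# Usage (commented out so that sourcing this file creates nothing):
# make_self_signed_cert k8s.abc.com .
```

The resulting k8s.abc.com.crt and k8s.abc.com.key can be passed to kubectl create secret tls in the same way as the files from the script. Browsers will still warn about the certificate because it is self-signed.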

Add the certificate to Kubernetes:

    [root@k8s-master ~]# kubectl create secret tls dashboard-abc-com-tls-secret --cert=k8s.abc.com.crt --key=k8s.abc.com.key -n kube-system
    secret/dashboard-abc-com-tls-secret created

3. Deploying the Dashboard with Helm

The values file k8s-dashboard.yaml. Note that secretName must match the name of the TLS secret created in section 2.1:

    ingress:
      enabled: true
      hosts:
        - k8s.abc.com
      annotations:
        nginx.ingress.kubernetes.io/ssl-redirect: "true"
        nginx.ingress.kubernetes.io/secure-backends: "true"
      tls:
        - secretName: dashboard-abc-com-tls-secret
          hosts:
            - k8s.abc.com
    rbac:
      clusterAdminRole: true

    [root@k8s-master ~]# helm install stable/kubernetes-dashboard -n kubernetes-dashboard --namespace kube-system -f k8s-dashboard.yaml
    NAME: kubernetes-dashboard
    LAST DEPLOYED: Mon Oct 29 13:26:42 2018
    NAMESPACE: kube-system
    STATUS: DEPLOYED

    RESOURCES:
    ==> v1beta1/Deployment
    NAME                  AGE
    kubernetes-dashboard  0s

    ==> v1beta1/Ingress
    kubernetes-dashboard  0s

    ==> v1/Pod(related)
    NAME                                   READY  STATUS             RESTARTS  AGE
    kubernetes-dashboard-5746dd4544-4c5lw  0/1    ContainerCreating  0         0s

    ==> v1/Secret
    NAME                  AGE
    kubernetes-dashboard  0s

    ==> v1/ServiceAccount
    kubernetes-dashboard  0s

    ==> v1beta1/ClusterRoleBinding
    kubernetes-dashboard  0s

    ==> v1/Service
    kubernetes-dashboard  0s

    NOTES:
    *********************************************************************************
    *** PLEASE BE PATIENT: kubernetes-dashboard may take a few minutes to install ***
    *********************************************************************************
    From outside the cluster, the server URL(s) are:
      https://k8s.abc.com

Look up the login token:

    [root@k8s-master ~]# kubectl -n kube-system get secret | grep kubernetes-dashboard-token
    kubernetes-dashboard-token-grj4g   kubernetes.io/service-account-token   3   3m13s
    [root@k8s-master ~]# kubectl describe -n kube-system secret/kubernetes-dashboard-token-grj4g
    Name:         kubernetes-dashboard-token-grj4g
    Namespace:    kube-system
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: kubernetes-dashboard
                  kubernetes.io/service-account.uid: a1b4dd5d-db40-11e8-a4f2-000c29994344
    Type:  kubernetes.io/service-account-token
    Data
    ====
    ca.crt:     1025 bytes
    namespace:  11 bytes
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1ncmo0ZyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImExYjRkZDVkLWRiNDAtMTFlOC1hNGYyLTAwMGMyOTk5NDM0NCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.pgV7QmAg5nM17k2idbmtjbv_wVxVqZz63yXy0SLSrSvRSH2-hKcAjrYngXPP-0cQ_FUfl8IJcK3FnfotmSFxz5upO7bKCF_ztu-A8g-HF3SPpcT2XXtCoc-Jf0hzlJbZyLfeFRJnmotQ05tGHe5kOpfaT9uXCwMK7BxGmiLlutJeGb_8xDtWWqt_1Si5znHJFZoM50gRBqToeFxz5ccBseIhAMJiFD4WgzLixfRJuHF56dkEqNlfm6D_GupNMm8IMFk2PuA_u12TzB1xpX340cBS_S0HqdrQlh6cnUzON0pBDekSeeYgelIAGA-hJWlOoILdAoHs3WoFuTpePlhEUA
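Copying the token out of the kubectl describe output by hand is error-prone. A small helper can print only the token instead. This is a sketch rather than part of the original procedure: the function name `secret_token` and the `KUBECTL` override variable are hypothetical (the override exists only so the function can be exercised against a stub), and it assumes a `base64` that accepts `-d`. The value under `.data.token` is stored base64-encoded, which is why it is decoded here, whereas kubectl describe already shows it decoded.

```shell
#!/bin/sh
# Print the decoded bearer token stored in a service-account secret.
# $1: secret name
# $2: namespace (default: kube-system)
# KUBECTL: hypothetical override for the kubectl binary, useful for testing.
secret_token() {
    secret="$1"
    namespace="${2:-kube-system}"
    "${KUBECTL:-kubectl}" -n "${namespace}" get secret "${secret}" \
        -o jsonpath='{.data.token}' | base64 -d
}

# Usage (commented out because it needs a running cluster):
# secret_token kubernetes-dashboard-token-grj4g
```

The secret name carries a random suffix, so it still has to be looked up first with the grep command shown above.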

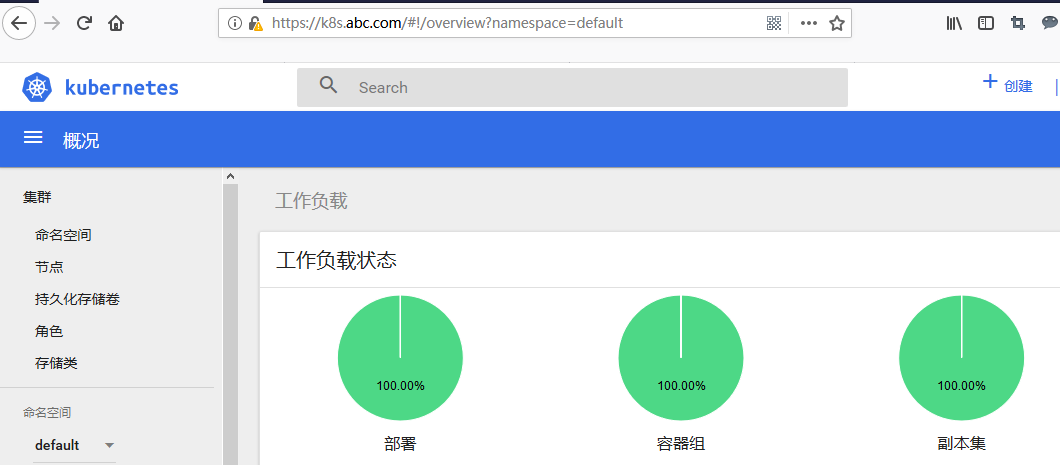

Add a hosts entry for k8s.abc.com on the client machine, open https://k8s.abc.com in Firefox, and log in with the token copied from above.

4. Helm Commands

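Uninstall a deployed release:

    helm del --purge kubernetes-dashboard

Check the status of a release:

    helm status kubernetes-dashboard

View a chart's default values (a custom YAML file passed with -f at install time overrides them, for example to change the image repository):

    helm inspect values stable/mysql

List installed releases (--all also shows deleted and failed ones):

    helm list

List the configured chart repositories:

    helm repo list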

Client and server version mismatch

    [root@stg-k8s-master ~]# helm install ./php
    Error: incompatible versions client[v2.12.1] server[v2.11.0]
    [root@stg-k8s-master ~]# helm version
    Client: &version.Version{SemVer:"v2.12.1", GitCommit:"02a47c7249b1fc6d8fd3b94e6b4babf9d818144e", GitTreeState:"clean"}
    Server: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}

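Run helm init --upgrade to bring Tiller up to the client's version. Until the new Tiller pod is ready, helm version reports an error for the server; once it is ready, both versions match: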

    [root@stg-k8s-master ~]# helm version
    Client: &version.Version{SemVer:"v2.12.1", GitCommit:"02a47c7249b1fc6d8fd3b94e6b4babf9d818144e", GitTreeState:"clean"}
    Error: could not find a ready tiller pod
    [root@stg-k8s-master ~]# helm version
    Client: &version.Version{SemVer:"v2.12.1", GitCommit:"02a47c7249b1fc6d8fd3b94e6b4babf9d818144e", GitTreeState:"clean"}
    Server: &version.Version{SemVer:"v2.12.1", GitCommit:"02a47c7249b1fc6d8fd3b94e6b4babf9d818144e", GitTreeState:"clean"}
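The mismatch can also be detected before an install fails. The sketch below parses helm version output in the format shown in the transcripts above and succeeds only when the Client and Server lines report the same SemVer. The function names `semver_of` and `helm_versions_match` are hypothetical helpers, and the parsing assumes the `SemVer:"..."` field appears exactly as in the output above.

```shell
#!/bin/sh
# Extract the SemVer field from one line of `helm version` output, e.g.
#   Client: &version.Version{SemVer:"v2.12.1", ...}  ->  v2.12.1
semver_of() {
    printf '%s\n' "$1" | sed -n 's/.*SemVer:"\([^"]*\)".*/\1/p'
}

# Read `helm version` output on stdin. Succeed only if a Client line and a
# Server line are both present and report the same SemVer.
helm_versions_match() {
    client=""
    server=""
    while IFS= read -r line; do
        case "$line" in
            Client:*) client="$(semver_of "$line")" ;;
            Server:*) server="$(semver_of "$line")" ;;
        esac
    done
    [ -n "$client" ] && [ "$client" = "$server" ]
}

# Usage (commented out because it needs helm and a running cluster):
# helm version 2>/dev/null | helm_versions_match || helm init --upgrade
# kubectl -n kube-system rollout status deployment/tiller-deploy
```

Waiting on the tiller-deploy rollout before retrying avoids the transient "could not find a ready tiller pod" error seen right after the upgrade.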