Building a k8s cluster from binary packages

环境信息:

|node-1 |10.2.0.4 |k8s master-1 |

|node-2 |10.2.0.5 |k8s master-2 |

|node-3 |10.2.0.6 |k8s master-3 |

|node-4 |10.2.0.7 |k8s slave-1 |

|node-5 |10.2.0.8 |k8s slave-2 |

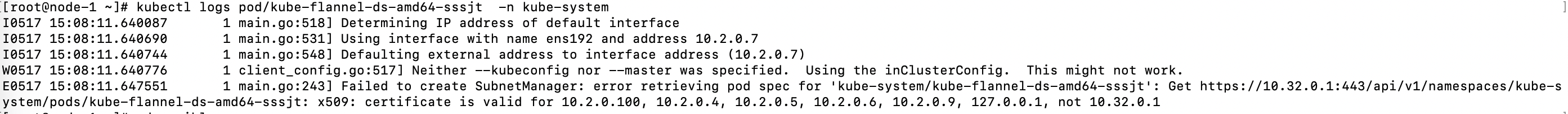

|node-6 |10.2.0.9 |k8s master vip|

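Deployment procedure:

1. System upgrade

k8s hits known bugs on older kernels, so upgrade the kernel first. Kernel 4.10 or later is recommended.

1.1 Download location

Packages on Baidu Netdisk: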

https://pan.baidu.com/s/1JtecfQoZISxN2EQRVrjKdg 6taj

1.2 Run the following commands to upgrade the kernel

Kernel version naming, for reference:

- Release types: mainline (ml), stable, and longterm (lt).
- Versions are written "A.B.C".
- A is the kernel version. It changes only when the code or the kernel concepts change significantly. It has changed three times: 1.0 in 1994, 2.0 in 1996, and 3.0 in 2011 (the 3.0 release did not bring a major conceptual change).
- B is the major revision. Under the traditional odd/even scheme, odd numbers were development releases and even numbers were stable releases.
- C is the minor revision. It changes whenever security patches, bug fixes, new features, or drivers are added.

# Check the current kernel and OS release
[root@k8s-node2 ~]# uname -r
3.10.0-1062.el7.x86_64
[root@k8s-node2 ~]# cat /etc/redhat-release
CentOS Linux release 7.7.1908 (Core)

# Import the ELRepo key, install the ELRepo release package, then install the mainline kernel
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
yum -y --enablerepo=elrepo-kernel install kernel-ml.x86_64 kernel-ml-devel.x86_64

# Run the same steps on all machines in bulk with ansible
ansible all -i hosts -m shell -a "rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org"
ansible all -i hosts -m shell -a "rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm"
ansible all -i hosts -m shell -a "yum -y --enablerepo=elrepo-kernel install kernel-ml.x86_64 kernel-ml-devel.x86_64" -f 5
ansible all -i hosts -m shell -a "cat /boot/grub2/grub.cfg |grep menuentry"
ansible all -i hosts -m shell -a "grub2-set-default 'CentOS Linux (5.6.11-1.el7.elrepo.x86_64) 7 (Core)'"
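The 4.10-or-later recommendation can be checked with a small shell helper that compares a kernel release string against a minimum. This is a minimal sketch rather than part of the original procedure: the function name `kernel_at_least` is hypothetical, and it assumes GNU `sort` with `-V` (version sort) is available.

```shell
# kernel_at_least RELEASE MINIMUM
# Succeeds (exit 0) when RELEASE, e.g. the output of `uname -r`, is >= MINIMUM.
# Hypothetical helper; assumes GNU sort with -V (version sort).
kernel_at_least() {
  release="${1%%-*}"   # drop the distro suffix: 3.10.0-1062.el7.x86_64 -> 3.10.0
  minimum="$2"
  lowest="$(printf '%s\n%s\n' "$minimum" "$release" | sort -V | head -n 1)"
  [ "$lowest" = "$minimum" ]
}

# Example: warn when the running kernel is older than 4.10.
if ! kernel_at_least "$(uname -r)" 4.10; then
  echo "kernel $(uname -r) is older than 4.10; upgrade before installing k8s"
fi
```

With the stock CentOS 7.7 kernel shown above (3.10.0-1062.el7.x86_64) the check fails; with the ELRepo kernel 5.6.11-1.el7.elrepo.x86_64 it passes.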

1.3 Enable IPVS support

# After confirming the kernel version, enable IPVS

$ uname -a

$ cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"

for kernel_module in \${ipvs_modules}; do

/sbin/modinfo -F filename \${kernel_module} > /dev/null 2>&1

if [ \$? -eq 0 ]; then

/sbin/modprobe \${kernel_module}

fi

done

EOF

$ chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs
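To confirm that the modules really loaded, the `lsmod` output can be compared against a required list. The sketch below is illustrative only: the function name `missing_ipvs_modules` is hypothetical, and the short list of modules it checks is an assumption, not a requirement stated in this guide.

```shell
# missing_ipvs_modules
# Reads `lsmod` output on stdin and prints each module from a short required
# list that is not loaded. The required list is an assumption for illustration.
missing_ipvs_modules() {
  loaded="$(awk 'NR > 1 { print $1 }')"   # first column, skipping the header row
  for module in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    printf '%s\n' "$loaded" | grep -qxF "$module" || echo "$module"
  done
}
```

Run it as `lsmod | missing_ipvs_modules`; empty output means every module in the list is loaded.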

1.4 Disable swap, SELinux, and firewalld

# Disable SELinux and firewalld

$ systemctl stop firewalld && systemctl disable firewalld

$ setenforce 0

$ sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

# Disable swap

$ swapoff -a

$ cp /etc/{fstab,fstab.bak}

$ cat /etc/fstab.bak | grep -v swap > /etc/fstab

# Set the kernel parameters for iptables and IP forwarding

$ echo """

vm.swappiness = 0

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

""" > /etc/sysctl.conf

$ sysctl -p
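Before moving on, it can help to confirm that the file really contains the four settings. A minimal sketch, assuming the file uses the `key = value` layout written above; the function name `check_sysctl_file` is hypothetical.

```shell
# check_sysctl_file FILE
# Prints each required key that is missing or not set to the expected value,
# and returns non-zero if any is wrong. Hypothetical helper for illustration.
check_sysctl_file() {
  file="$1"
  status=0
  for want in \
    "vm.swappiness=0" \
    "net.bridge.bridge-nf-call-iptables=1" \
    "net.bridge.bridge-nf-call-ip6tables=1" \
    "net.ipv4.ip_forward=1"
  do
    key="${want%%=*}"
    value="${want#*=}"
    # Strip spaces and tabs so "key = value" and "key=value" compare equal.
    if ! tr -d ' \t' < "$file" | grep -qxF "${key}=${value}"; then
      echo "missing or wrong: ${key} (expected ${value})"
      status=1
    fi
  done
  return "$status"
}
```

Usage: `check_sysctl_file /etc/sysctl.conf && echo "sysctl settings OK"`.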

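1.5 Configure hostname resolution and time synchronization (omitted)

1.6 Configure passwordless SSH between the hosts

# Configure node-1 so that it can log in to the other hosts without a password
[root@c720111 ~]# ssh-keygen
[root@c720111 ~]# ssh-copy-id root@$IP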

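2. Issue certificates

2.1 Set up the certificate tooling

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

# Rename the downloaded binaries, make them executable, and put them on PATH
mv cfssl_linux-amd64 cfssl
mv cfssljson_linux-amd64 cfssljson
chmod +x cfssl cfssljson
mv cfssl cfssljson /usr/local/bin/

Certificates will be issued for the following components: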

- admin user

- kubelet

- kube-controller-manager

- kube-proxy

- kube-scheduler

- kube-apiserver

2.2 Create the CA certificate

(1) Generate the CA certificate

CA signing configuration

$ cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": ["signing", "key encipherment", "server auth", "client auth"],

"expiry": "8760h"

}

}

}

}

EOF

CA certificate signing request

$ cat > ca-csr.json <<EOF

{

"CN": "Kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Shanghai",

"O": "xiodi",

"OU": "CA",

"ST": "Winterfell"

}

]

}

EOF

Generate the CA certificate

$ cfssl gencert -initca ca-csr.json | cfssljson -bare ca

# List the generated files
[root@c720111 tmp]# ls -al
-rw------- 1 root root 1675 Mar 23 11:04 ca-key.pem
-rw-r--r-- 1 root root 1314 Mar 23 11:04 ca.pem

(2) Generate the admin user certificate

Certificate signing request configuration:

$ cat > admin-csr.json <<EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "Westeros",

"L": "The North",

"O": "system:masters",

"OU": "Kubernetes The Hard Way",

"ST": "Winterfell"

}

]

}

EOF

Generate the admin user certificate and check the result:

# Generate the certificate
$ cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  admin-csr.json | cfssljson -bare admin

# List the generated files
[root@c720111 tmp]# ls
admin-key.pem admin.pem
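Every component CSR in this guide uses the same JSON and differs only in the "CN" and "O" fields, so the file could be produced by a small helper instead of a separate heredoc each time. This is a sketch under that assumption; the function name `write_csr` and its argument order are hypothetical.

```shell
# write_csr NAME CN ORG
# Writes NAME-csr.json with the key settings and name fields used in this guide.
# Hypothetical helper; the function name and argument order are illustrative.
write_csr() {
  name="$1"; cn="$2"; org="$3"
  cat > "${name}-csr.json" <<EOF
{
  "CN": "${cn}",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "Westeros",
      "L": "The North",
      "O": "${org}",
      "OU": "Kubernetes The Hard Way",
      "ST": "Winterfell"
    }
  ]
}
EOF
}
```

For example, `write_csr admin admin system:masters` writes an admin-csr.json equivalent to the one above, and `write_csr kube-proxy system:kube-proxy system:node-proxier` covers the kube-proxy request.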

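3. kubelet client certificates

3.1 Node authorization

Node authorization is a special-purpose authorization mode that specifically authorizes API requests made by kubelets.

Node authorization lets a kubelet perform these API operations:

- Read operations
  - services
  - endpoints
  - nodes
  - pods
  - secrets, configmaps, persistent volume claims, and persistent volumes related to pods bound to the kubelet's node
- Write operations
  - nodes and node status (enable the NodeRestriction admission plugin to limit a kubelet to modifying its own node)
  - pods and pod status (enable the NodeRestriction admission plugin to limit a kubelet to modifying pods bound to itself)
  - events
- Auth-related operations
  - read/write access to the certificatesigningrequests API for TLS bootstrapping
  - the ability to create tokenreviews and subjectaccessreviews for delegated authentication/authorization checks

To be authorized by the Node authorizer, a kubelet must use a credential that identifies it as a member of the `system:nodes` group with the username `system:node:<nodeName>`.

For details, see https://kubernetes.io/docs/reference/access-authn-authz/node/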
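The identity rule above maps directly onto the certificate subject: "O" must be `system:nodes` and "CN" must be `system:node:<nodeName>`. A minimal sketch of that check follows; the function name `is_node_identity` is hypothetical and it only inspects the two strings, not a real certificate.

```shell
# is_node_identity CN ORG
# Succeeds when the subject matches what the Node authorizer expects:
# organization "system:nodes" and common name "system:node:<nodeName>".
is_node_identity() {
  cn="$1"; org="$2"
  [ "$org" = "system:nodes" ] || return 1
  case "$cn" in
    system:node:?*) return 0 ;;   # requires a non-empty node name after the prefix
    *) return 1 ;;
  esac
}
```

For example, `is_node_identity "system:node:node-4" "system:nodes"` succeeds, while a subject with "O" set to `system:masters` does not.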

3.2 Run the following commands to generate the kubelet certificates

# Record the worker nodes (hostname and IP, matching the environment table)

$ vim workers.txt
node-4 10.2.0.7
node-5 10.2.0.8

# Create a script file with the following content

$ cat generate-kubelet-certificate.sh
#!/bin/bash
# Issue one client certificate per worker listed in workers.txt ("hostname ip" per line).
while read -r instance INTERNAL_IP rest; do
# Skip blank lines
[ -n "${instance}" ] || continue
cat > ${instance}-csr.json <<EOF
{
  "CN": "system:node:${instance}",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "Westeros",
      "L": "The North",
      "O": "system:nodes",
      "OU": "Kubernetes The Hard Way",
      "ST": "Winterfell"
    }
  ]
}
EOF
cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -hostname=${instance},${INTERNAL_IP} \
  -profile=kubernetes \
  ${instance}-csr.json | cfssljson -bare ${instance}
done < workers.txt

# Generate the certificates and list them

$ sh generate-kubelet-certificate.sh

$ ls node-*.pem
node-4-key.pem node-4.pem node-5-key.pem node-5.pem

4. Controller Manager client certificate

1. The kube-controller-manager certificate signing request:

$ cat > kube-controller-manager-csr.json <<EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "Westeros",

"L": "The North",

"O": "system:kube-controller-manager",

"OU": "Kubernetes The Hard Way",

"ST": "Winterfell"

}

]

}

EOF

2. Issue the certificate for kube-controller-manager

$ cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

# Verify the generated files

$ ls kube-controller-manager*

kube-controller-manager.csr kube-controller-manager-key.pem

kube-controller-manager-csr.json kube-controller-manager.pem

5. Kube Proxy client certificate

5.1 kube-proxy certificate signing request

$ cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "Westeros",

"L": "The North",

"O": "system:node-proxier",

"OU": "Kubernetes The Hard Way",

"ST": "Winterfell"

}

]

}

EOF

5.2 Issue the certificate for kube-proxy

$ cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  kube-proxy-csr.json | cfssljson -bare kube-proxy

# Verify the generated files
$ ls kube-proxy*
kube-proxy.csr kube-proxy-csr.json kube-proxy-key.pem kube-proxy.pem

6. Scheduler client certificate

6.1 kube-scheduler client certificate signing request

cat > kube-scheduler-csr.json <<EOF

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "Westeros",

"L": "The North",

"O": "system:kube-scheduler",

"OU": "Kubernetes The Hard Way",

"ST": "Winterfell"

}

]

}

EOF

6.2 Issue the certificate for kube-scheduler

$ cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  kube-scheduler-csr.json | cfssljson -bare kube-scheduler

# Verify the generated files
$ ls kube-scheduler*
kube-scheduler.csr kube-scheduler-csr.json kube-scheduler-key.pem kube-scheduler.pem

7. Kubernetes API certificate

The kube-apiserver certificate must list the following names:

- the hostnames of all controllers
- the IPs of all controllers
- the load balancer hostname
- the load balancer IP
- the kubernetes service (the default `kubernetes` service IP is 10.32.0.1, the first address in the service range)
- localhost

CERT_HOSTNAME=10.32.0.1,<master node 1 Private IP>,<master node 1 hostname>,<master node 2 Private IP>,<master node 2 hostname>,<master node 3 Private IP>,<master node 3 hostname>,<API load balancer Private IP>,<API load balancer hostname>,127.0.0.1,localhost,kubernetes.default

$ CERT_HOSTNAME=10.32.0.1,node-1,10.2.0.4,node-2,10.2.0.5,node-3,10.2.0.6,node-6,10.2.0.9,127.0.0.1,localhost,kubernetes.default
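Typing this list by hand is error-prone, so it could instead be assembled from a host list in the format of the controllers.txt file used later in this guide. The following is a sketch, not the author's method; the function name `build_cert_hostnames` is hypothetical, and the load balancer name and IP are passed in as arguments.

```shell
# build_cert_hostnames CONTROLLERS_FILE LB_NAME LB_IP
# CONTROLLERS_FILE holds one "hostname ip [user]" entry per line.
# Prints a comma-separated SAN list in the same order as the template above.
build_cert_hostnames() {
  file="$1"; lb_name="$2"; lb_ip="$3"
  # Emit "hostname,ip" for each controller, joined by commas.
  controllers="$(awk 'NF >= 2 { printf "%s%s,%s", sep, $1, $2; sep = "," }' "$file")"
  echo "10.32.0.1,${controllers},${lb_name},${lb_ip},127.0.0.1,localhost,kubernetes.default"
}
```

Usage: `CERT_HOSTNAME=$(build_cert_hostnames controllers.txt node-6 10.2.0.9)`, using the VIP entry from the environment table.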

7.1 Create the certificate signing request

$ cat > kubernetes-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "Westeros",

"L": "The North",

"O": "Kubernetes",

"OU": "Kubernetes The Hard Way",

"ST": "Winterfell"

}

]

}

EOF

7.2 Generate the Kubernetes API certificate

$ cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-hostname=${CERT_HOSTNAME} \

-profile=kubernetes \

kubernetes-csr.json | cfssljson -bare kubernetes

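7.3 List the certificate files

$ ls kubernetes*
kubernetes.csr kubernetes-csr.json kubernetes-key.pem kubernetes.pem

8. Service account key pair

The service account key pair is used to sign service account tokens. For more on service accounts, see https://kubernetes.io/docs/reference/access-authn-authz/service-accounts-admin/

8.1 Service account certificate signing request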

$ cat > service-account-csr.json <<EOF

{

"CN": "service-accounts",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "Westeros",

"L": "The North",

"O": "Kubernetes",

"OU": "Kubernetes The Hard Way",

"ST": "Winterfell"

}

]

}

EOF

8.2 Issue the certificate for the service account

$ cfssl gencert \
  -ca=ca.pem \
  -ca-key=ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  service-account-csr.json | cfssljson -bare service-account

# List the files issued for the service account
$ ls service-account*
service-account.csr service-account-csr.json service-account-key.pem service-account.pem

9. Copy the certificates to each node

$ cat workers.txt

node-4 10.2.0.7 root

node-5 10.2.0.8 root

$ cat controllers.txt

node-1 10.2.0.4 root

node-2 10.2.0.5 root

node-3 10.2.0.6 root

# Worker nodes

$ IFS=$'\n'

$ for line in `cat workers.txt`; do

instance=`echo $line | awk '{print $1}'`

user=`echo $line | awk '{print $3}'`

rsync -zvhe ssh ca.pem ${instance}-key.pem ${instance}.pem ${user}@${instance}:/root/

done

# Master

$ for instance in node-1 node-2 node-3; do

rsync -zvhe ssh ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem \

service-account-key.pem service-account.pem root@${instance}:/root/

done

4. Create kubeconfigs for the k8s components

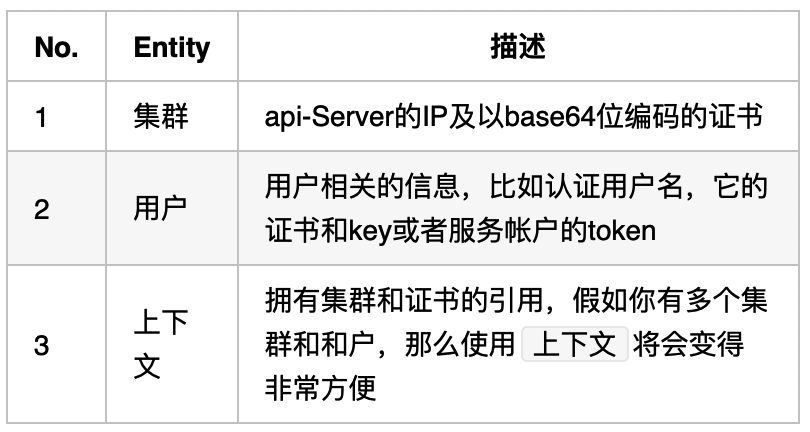

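A kubeconfig is used for authentication between Kubernetes components and between users and Kubernetes. A kubeconfig mainly contains three things: a cluster (API server address and CA certificate), a user (client credentials), and a context that pairs a cluster with a user.

In this section we generate kubeconfigs for the following components: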

1. kubelet kubeconfig

2. kube Proxy kubeconfig

3. Controller Manager kubeconfig

4. Kube Scheduler kubeconfig

5. admin user kubeconfig

4.1 Generate the kubelet kubeconfigs

The user in each kubeconfig should be system:node:<Worker_name>, and it must match the hostname used when generating the kubelet client certificate.

$ cat haproxy.txt

lb-1 10.2.0.9

$ chmod +x kubectl

$ cp kubectl /usr/local/bin/

$ KUBERNETES_PUBLIC_ADDRESS=`cat haproxy.txt | awk '{print $2}'`

$ IFS=$'\n'

$ for instance in node-4 node-5; do

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://${KUBERNETES_PUBLIC_ADDRESS}:6443 \

--kubeconfig=${instance}.kubeconfig

kubectl config set-credentials system:node:${instance} \

--client-certificate=${instance}.pem \

--client-key=${instance}-key.pem \

--embed-certs=true \

--kubeconfig=${instance}.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:node:${instance} \

--kubeconfig=${instance}.kubeconfig

kubectl config use-context default --kubeconfig=${instance}.kubeconfig

done

$ ls

node-4.kubeconfig node-5.kubeconfig

4.2 Generate the kube-proxy kubeconfig

$ KUBERNETES_PUBLIC_ADDRESS=`cat haproxy.txt | awk '{print $2}'`

$ {

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://${KUBERNETES_PUBLIC_ADDRESS}:6443 \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials system:kube-proxy \

--client-certificate=kube-proxy.pem \

--client-key=kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

}

$ ls kube-proxy.kubeconfig

kube-proxy.kubeconfig

4.3 Generate the kube-controller-manager kubeconfig

{

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=kube-controller-manager.pem \

--client-key=kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-controller-manager \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context default --kubeconfig=kube-controller-manager.kubeconfig

}

$ ls kube-controller-manager.kubeconfig

kube-controller-manager.kubeconfig

4.4 Generate the kube-scheduler kubeconfig

{

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \

--client-certificate=kube-scheduler.pem \

--client-key=kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-scheduler \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context default --kubeconfig=kube-scheduler.kubeconfig

}

4.5 Generate the admin kubeconfig

{

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=admin.kubeconfig

kubectl config set-credentials admin \

--client-certificate=admin.pem \

--client-key=admin-key.pem \

--embed-certs=true \

--kubeconfig=admin.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=admin \

--kubeconfig=admin.kubeconfig

kubectl config use-context default --kubeconfig=admin.kubeconfig

}
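The kubeconfig blocks above repeat the same four kubectl calls and differ only in the file name, the user, and the server URL. A helper along these lines could factor that out. This is a sketch: the function name `make_kubeconfig` is hypothetical, and it assumes ca.pem, NAME.pem, and NAME-key.pem are in the current directory.

```shell
# make_kubeconfig NAME USER SERVER
# Builds NAME.kubeconfig from ca.pem, NAME.pem and NAME-key.pem in the current
# directory, using the same four kubectl calls as the blocks above.
make_kubeconfig() {
  name="$1"; user="$2"; server="$3"
  out="${name}.kubeconfig"

  kubectl config set-cluster kubernetes-the-hard-way \
    --certificate-authority=ca.pem \
    --embed-certs=true \
    --server="${server}" \
    --kubeconfig="${out}" &&
  kubectl config set-credentials "${user}" \
    --client-certificate="${name}.pem" \
    --client-key="${name}-key.pem" \
    --embed-certs=true \
    --kubeconfig="${out}" &&
  kubectl config set-context default \
    --cluster=kubernetes-the-hard-way \
    --user="${user}" \
    --kubeconfig="${out}" &&
  kubectl config use-context default --kubeconfig="${out}"
}
```

For example, `make_kubeconfig admin admin https://127.0.0.1:6443` corresponds to section 4.5, and `make_kubeconfig kube-proxy system:kube-proxy https://10.2.0.9:6443` to section 4.2.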

4.6 Copy the kubeconfig files to each node

$ IFS=$'\n'

$ for instance in node-4 node-5; do

rsync -zvhe ssh ${instance}.kubeconfig kube-proxy.kubeconfig root@${instance}:~/

done

$ for instance in node-1 node-2 node-3; do

rsync -zvhe ssh admin.kubeconfig kube-controller-manager.kubeconfig kube-scheduler.kubeconfig root@${instance}:/root/

done

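5. Data encryption configuration

Kubernetes stores several kinds of data, including cluster state, application configuration, and secrets. Kubernetes supports encrypting cluster data at rest. In this section we generate an encryption key and an encryption config suitable for encrypting Kubernetes secrets.

1. The encryption key

ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

2. The encryption config file

cat > encryption-config.yaml <<EOF
kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: ${ENCRYPTION_KEY}
      - identity: {}
EOF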
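Because the key is generated and base64-encoded in one step, a quick sanity check is to decode it again and confirm that it is 32 bytes long, which is what `head -c 32` is meant to produce. A minimal sketch, assuming a `base64` that accepts `-d` for decoding; the variable name `key_bytes` is illustrative.

```shell
# Generate a key the same way as above, then confirm that it decodes back to
# exactly 32 bytes. Assumes a base64 implementation that supports -d.
ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)
key_bytes=$(printf '%s' "$ENCRYPTION_KEY" | base64 -d | wc -c | tr -d ' ')

if [ "$key_bytes" -ne 32 ]; then
  echo "unexpected key length: ${key_bytes} bytes" >&2
fi
```

If the check prints nothing, the value is safe to substitute into encryption-config.yaml.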

3. Copy it to the controller nodes

IFS=$'\n'

for instance in node-1 node-2 node-3; do

rsync -zvhe ssh encryption-config.yaml root@${instance}:~/

done

Summary: so far we have:

1. Generated the certificates
2. Generated the kubeconfig files
3. Copied the certificates and kubeconfigs to the nodes

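6. Deploy the etcd cluster

etcd is installed on the controller nodes, so run the following on the controller nodes:

1. Unpack etcd and copy the certificates into place

$ tar -xvf etcd-v3.3.5-linux-amd64.tar.gz
$ mv etcd-v3.3.5-linux-amd64/etcd* /usr/local/bin/
$ mkdir -p /etc/etcd /var/lib/etcd
$ cp ca.pem kubernetes-key.pem kubernetes.pem /etc/etcd/

# From node-1, copy the tarball to node-2 and node-3 and install it there
for i in 2 3; do
  scp etcd-v3.3.5-linux-amd64.tar.gz node-$i:/root/
  ssh node-$i "tar -xvf etcd-v3.3.5-linux-amd64.tar.gz && mv etcd-v3.3.5-linux-amd64/etcd* /usr/local/bin/"
done

2. Set the following environment variables; they are used to generate the etcd systemd unit file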

ETCD_NAME=`hostname`

INTERNAL_IP=`hostname -i`

INITIAL_CLUSTER=node-1=https://10.2.0.4:2380,node-2=https://10.2.0.5:2380,node-3=https://10.2.0.6:2380
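The `INITIAL_CLUSTER` value follows a fixed `name=https://ip:2380` pattern, so it could be derived from a host list instead of being typed by hand. The sketch below assumes a file in the format of controllers.txt ("hostname ip [user]" per line); the function name `build_initial_cluster` is hypothetical.

```shell
# build_initial_cluster FILE
# FILE holds one "hostname ip [user]" entry per line, in the format of controllers.txt.
# Prints name=https://ip:2380 pairs joined by commas, matching the value above.
build_initial_cluster() {
  awk 'NF >= 2 { printf "%s%s=https://%s:2380", sep, $1, $2; sep = "," }' "$1"
  echo
}
```

Usage: `INITIAL_CLUSTER=$(build_initial_cluster controllers.txt)`.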

3. Create the service unit file

mkdir -p /etc/etcd/
cp kubernetes-key.pem /etc/etcd/
cp kubernetes.pem /etc/etcd/
cp ca.pem /etc/etcd/

cat << EOF | sudo tee /etc/systemd/system/etcd.service

[Unit]

Description=etcd

Documentation=https://github.com/coreos

[Service]

ExecStart=/usr/local/bin/etcd \\

--name ${ETCD_NAME} \\

--cert-file=/etc/etcd/kubernetes.pem \\

--key-file=/etc/etcd/kubernetes-key.pem \\

--peer-cert-file=/etc/etcd/kubernetes.pem \\

--peer-key-file=/etc/etcd/kubernetes-key.pem \\

--trusted-ca-file=/etc/etcd/ca.pem \\

--peer-trusted-ca-file=/etc/etcd/ca.pem \\

--peer-client-cert-auth \\

--client-cert-auth \\

--initial-advertise-peer-urls https://${INTERNAL_IP}:2380 \\

--listen-peer-urls https://${INTERNAL_IP}:2380 \\

--listen-client-urls https://${INTERNAL_IP}:2379,https://127.0.0.1:2379 \\

--advertise-client-urls https://${INTERNAL_IP}:2379 \\

--initial-cluster-token etcd-cluster-0 \\

--initial-cluster ${INITIAL_CLUSTER} \\

--initial-cluster-state new \\

--data-dir=/var/lib/etcd

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

4. Start the etcd service

systemctl daemon-reload
systemctl enable etcd
systemctl start etcd

5. Once etcd is installed and configured, verify that it is running with the following command

ETCDCTL_API=3 etcdctl member list \
  --endpoints=https://127.0.0.1:2379 \
  --cacert=/etc/etcd/ca.pem \
  --cert=/etc/etcd/kubernetes.pem \
  --key=/etc/etcd/kubernetes-key.pem

7. Deploy the k8s master components

The control plane consists of:

- kube-apiserver
- kube-controller-manager
- kube-scheduler

7.1. Move the binaries into /usr/local/bin

$ chmod +x kube-apiserver kube-controller-manager kube-scheduler kubectl
$ mv kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/

1. Kubernetes API server configuration

1.1. Move the certificates into the Kubernetes directory

$ mkdir -p /var/lib/kubernetes/

$ mv ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem \

service-account-key.pem service-account.pem \

encryption-config.yaml /var/lib/kubernetes/

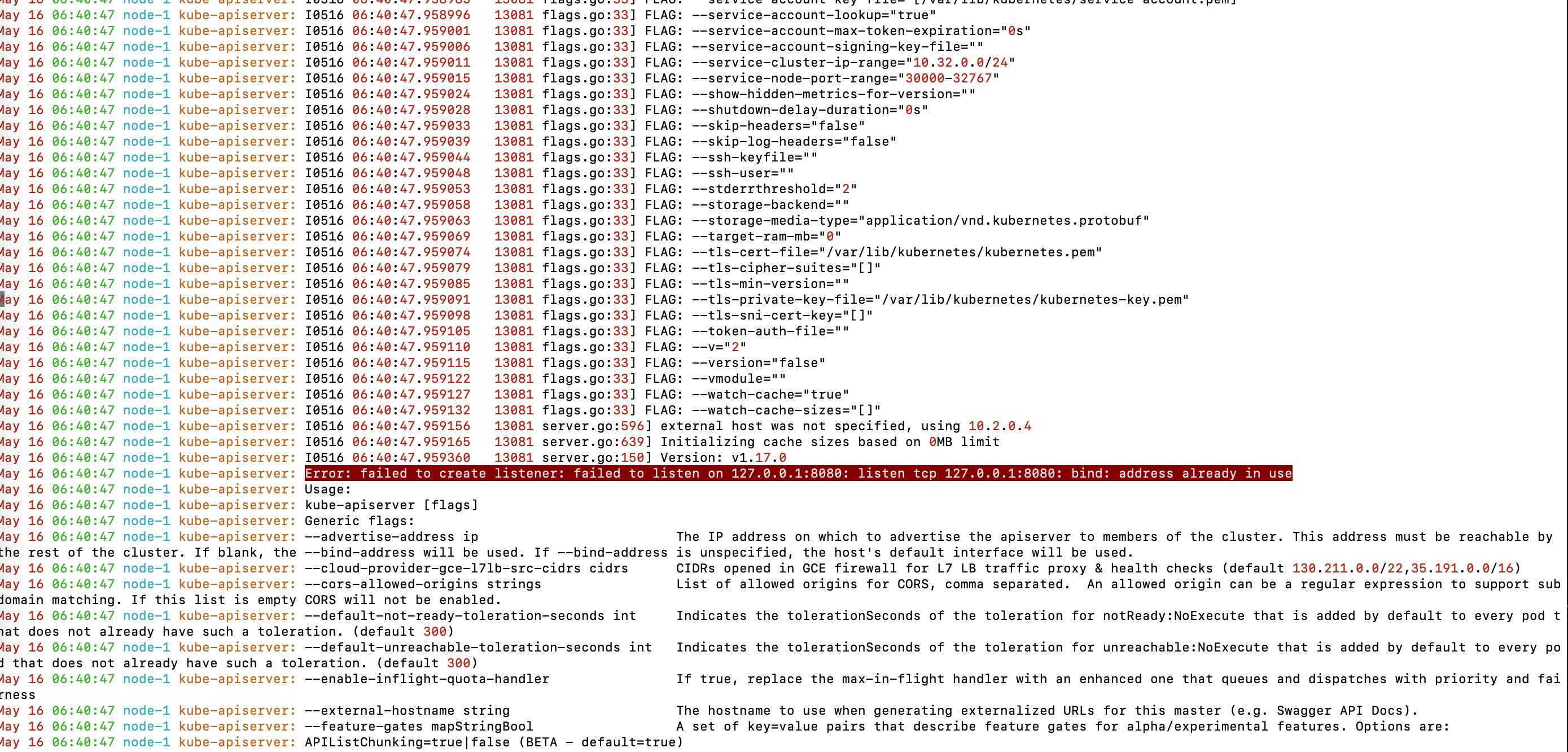

1.2. Create the kube-apiserver systemd unit file

# On controller nodes

$ CONTROLLER0_IP=10.2.0.4

$ CONTROLLER1_IP=10.2.0.5

$ CONTROLLER2_IP=10.2.0.6

$ INTERNAL_IP=`hostname -i`

$ cat << EOF | sudo tee /etc/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-apiserver \\

--advertise-address=${INTERNAL_IP} \\

--allow-privileged=true \\

--apiserver-count=3 \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/var/log/audit.log \\

--authorization-mode=Node,RBAC \\

--bind-address=0.0.0.0 \\

--client-ca-file=/var/lib/kubernetes/ca.pem \\

--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\

--etcd-cafile=/var/lib/kubernetes/ca.pem \\

--etcd-certfile=/var/lib/kubernetes/kubernetes.pem \\

--etcd-keyfile=/var/lib/kubernetes/kubernetes-key.pem \\

--etcd-servers=https://$CONTROLLER0_IP:2379,https://$CONTROLLER1_IP:2379,https://$CONTROLLER2_IP:2379 \\

--event-ttl=1h \\

--encryption-provider-config=/var/lib/kubernetes/encryption-config.yaml \\

--kubelet-certificate-authority=/var/lib/kubernetes/ca.pem \\

--kubelet-client-certificate=/var/lib/kubernetes/kubernetes.pem \\

--kubelet-client-key=/var/lib/kubernetes/kubernetes-key.pem \\

--kubelet-https=true \\

--runtime-config=api/all=true \\

--service-account-key-file=/var/lib/kubernetes/service-account.pem \\

--service-cluster-ip-range=10.32.0.0/24 \\

--service-node-port-range=30000-32767 \\

--tls-cert-file=/var/lib/kubernetes/kubernetes.pem \\

--tls-private-key-file=/var/lib/kubernetes/kubernetes-key.pem \\

--v=2 \\

--kubelet-preferred-address-types=InternalIP,InternalDNS,Hostname,ExternalIP,ExternalDNS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

2. Kubernetes Controller Manager configuration

2.1 Move the kubeconfig into the kubernetes directory

mv kube-controller-manager.kubeconfig /var/lib/kubernetes/

2.2. Create the kube-controller-manager systemd unit file

cat <<EOF | sudo tee /etc/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-controller-manager \\
  --address=0.0.0.0 \\
  --cluster-name=kubernetes \\
  --cluster-signing-cert-file=/var/lib/kubernetes/ca.pem \\
  --cluster-signing-key-file=/var/lib/kubernetes/ca-key.pem \\
  --kubeconfig=/var/lib/kubernetes/kube-controller-manager.kubeconfig \\
  --leader-elect=true \\
  --root-ca-file=/var/lib/kubernetes/ca.pem \\
  --service-account-private-key-file=/var/lib/kubernetes/service-account-key.pem \\
  --service-cluster-ip-range=10.32.0.0/24 \\
  --use-service-account-credentials=true \\
  --allocate-node-cidrs=true \\
  --cluster-cidr=10.100.0.0/16 \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

3. Kubernetes Scheduler configuration

3.1 Move the kube-scheduler kubeconfig into the kubernetes directory

mv kube-scheduler.kubeconfig /var/lib/kubernetes/

3.2. Create the kube-scheduler configuration file

$ mkdir -p /etc/kubernetes/config
$ cat <<EOF | tee /etc/kubernetes/config/kube-scheduler.yaml
apiVersion: kubescheduler.config.k8s.io/v1alpha1
kind: KubeSchedulerConfiguration
clientConnection:
  kubeconfig: "/var/lib/kubernetes/kube-scheduler.kubeconfig"
leaderElection:
  leaderElect: true
EOF

3.3 Create the kube-scheduler systemd unit file

$ cat <<EOF | tee /etc/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-scheduler \\
  --config=/etc/kubernetes/config/kube-scheduler.yaml \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

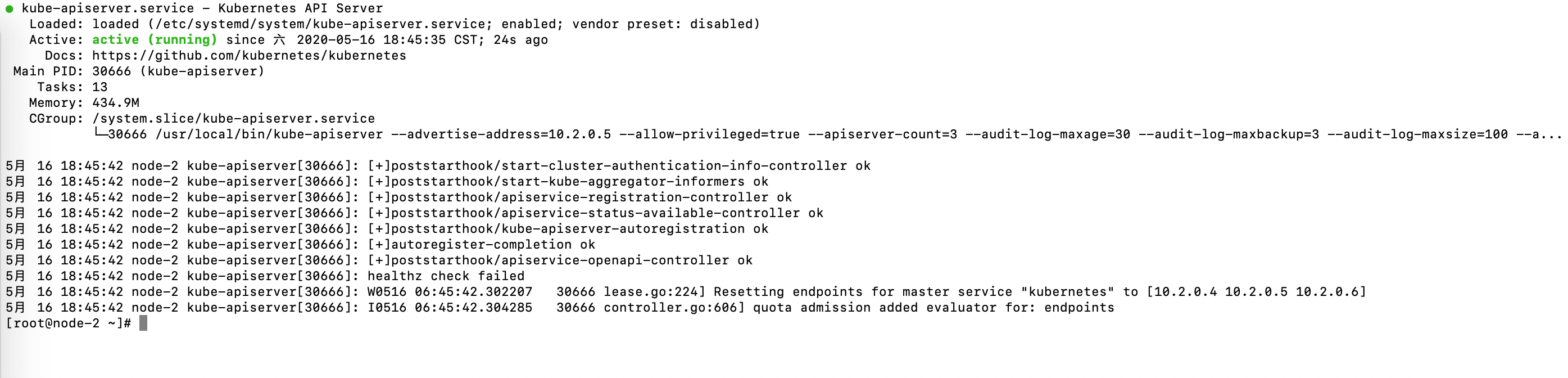

3.4. Start the services

$ systemctl daemon-reload
$ systemctl enable kube-apiserver kube-controller-manager kube-scheduler
$ systemctl start kube-apiserver kube-controller-manager kube-scheduler

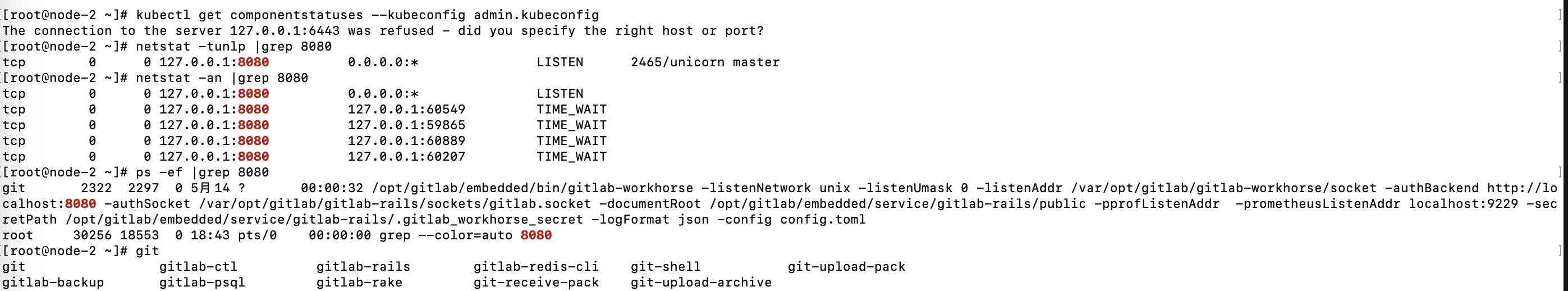

4. HTTP health checks

4.1 Check the status of each component with the following command

$ kubectl get componentstatuses --kubeconfig admin.kubeconfig

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}
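For scripted checks, the table above can be reduced to a pass/fail result by testing the STATUS column. This is a sketch that assumes the column layout shown above (a header row, then one component per row); the function name `all_components_healthy` is hypothetical.

```shell
# all_components_healthy
# Reads `kubectl get componentstatuses` output on stdin and succeeds only when
# at least one component row exists and every row reports Healthy in column 2.
all_components_healthy() {
  awk 'NR > 1 && NF > 0 { seen = 1; if ($2 != "Healthy") bad = 1 }
       END { if (seen && !bad) exit 0; exit 1 }'
}
```

Usage: `kubectl get componentstatuses --kubeconfig admin.kubeconfig | all_components_healthy && echo "control plane healthy"`.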

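5. Authorize access to the kubelet

Next, configure RBAC so that kube-apiserver can access the kubelet API on each worker node. Access to the kubelet API is needed to retrieve metrics and logs and to execute commands in Pods.

5.1. Create the `system:kube-apiserver-to-kubelet` ClusterRole and grant it permission to access the kubelet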

cat <<EOF | kubectl apply --kubeconfig admin.kubeconfig -f -

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:kube-apiserver-to-kubelet

rules:

- apiGroups:

- ""

resources:

- nodes/proxy

- nodes/stats

- nodes/log

- nodes/spec

- nodes/metrics

verbs:

- "*"

EOF

5.2. kube-apiserver authenticates to the kubelet as the `kubernetes` user, using the certificate given by `--kubelet-client-certificate`. See the kube-apiserver unit file defined above.

Bind the `system:kube-apiserver-to-kubelet` ClusterRole to the kubernetes user

cat <<EOF | kubectl apply --kubeconfig admin.kubeconfig -f -

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: system:kube-apiserver

namespace: ""

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:kube-apiserver-to-kubelet

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: User

name: kubernetes

EOF

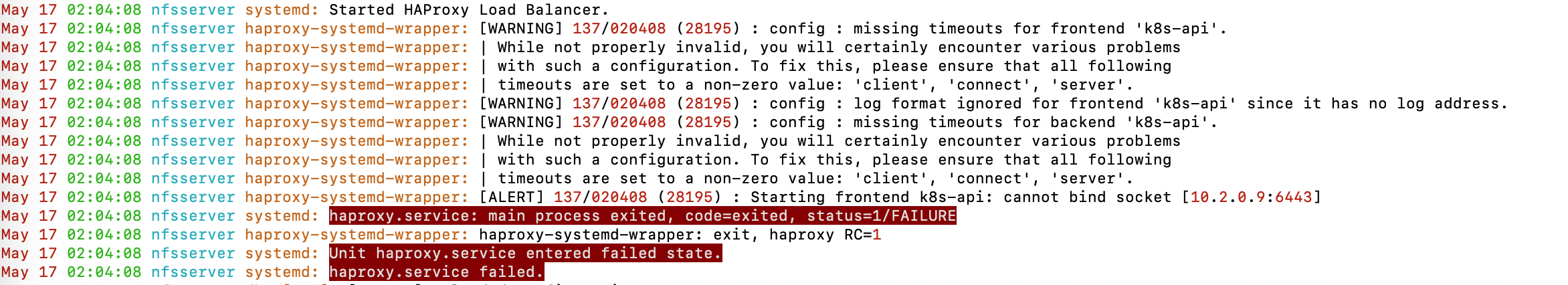

6. Kubernetes front-end load balancer

# Install haproxy

$ yum install haproxy -y

# The haproxy configuration is as follows:

$ cat /etc/haproxy/haproxy.cfg

frontend k8s-api

bind 10.2.0.9:6443

bind 10.2.0.9:443

mode tcp

option tcplog

default_backend k8s-api

backend k8s-api

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-api-1 10.2.0.4:6443 check

server k8s-api-2 10.2.0.5:6443 check

server k8s-api-3 10.2.0.6:6443 check

# Start the service

$ systemctl start haproxy

$ systemctl enable haproxy

# If everything is configured correctly, you should see output like this

$ curl --cacert ca.pem https://10.2.0.9:6443/version

{

"major": "1",

"minor": "17",

"gitVersion": "v1.17.0",

"gitCommit": "70132b0f130acc0bed193d9ba59dd186f0e634cf",

"gitTreeState": "clean",

"buildDate": "2019-12-07T21:12:17Z",

"goVersion": "go1.13.4",

"compiler": "gc",

"platform": "linux/amd64"

}

If haproxy fails to start, SELinux may be the cause; see https://stackoverflow.com/questions/34793885/haproxy-cannot-bind-socket-0-0-0-08888

error: unable to recognize "deployment.yaml": no matches for kind "Deployment" in version "extensions/v1beta1"

Solution: