【ROS】4.1 Turtlebot3仿真Waffle循线跟踪

原视频

本节内容较多,

请根据左侧目录针对性阅读。

一、准备工作

-

这一章我们先用gazebo仿真做,不使用真小车,使用的是Waffle模型。

需要下载的库gazebo-ros、turtlebot3_simulations。

如果下载过程中显示虚拟机又连不上网了,暂时切换成NAT模式。以后远程连真小车的时候再改回桥接。注意IP地址的变化。

新建工作空间

# 新建工作空间并初始化

mkdir -p ~/catkin_ws/src

cd ~/catkin_ws/src

catkin_init_workspace

cd ~/catkin_ws/

catkin_make

# 添加环境变量

source ~/catkin_ws/devel/setup.bash

source ~/.bashrc

功能包程序下载

cd ~/catkin_ws/src/ # 进自己的工作空间的源文件目录

git clone https://github.com/ROBOTIS-GIT/turtlebot3_simulations.git

# git clone https://gitee.com/YuChuXiang/turtlebot3_simulations.git

git clone https://github.com/ROBOTIS-GIT/hls_lfcd_lds_driver.git

# git clone https://gitee.com/qianlong_workspace/hls_lfcd_lds_driver.git

git clone https://github.com/ROBOTIS-GIT/turtlebot3.git

# git clone https://gitee.com/Walkerxrc/turtlebot3.git

git clone https://github.com/ROBOTIS-GIT/turtlebot3_msgs.git

# git clone https://gitee.com/sophtech/turtlebot3_msgs.git

cd ~/catkin_ws && catkin_make

注:最好别用注释里的,因为它更新不及时。

Gazebo下载安装(如果运行提示缺包漏包也重装一下)

注意版本替换(noetic->自己的版本)。

-y参数是默认yes,以免询问“是否继续下载……”

sudo apt-get install ros-noetic-gazebo-ros-pkgs ros-noetic-gazebo-ros-control -y

sudo apt-get install ros-noetic-turtlebot3-gazebo -y

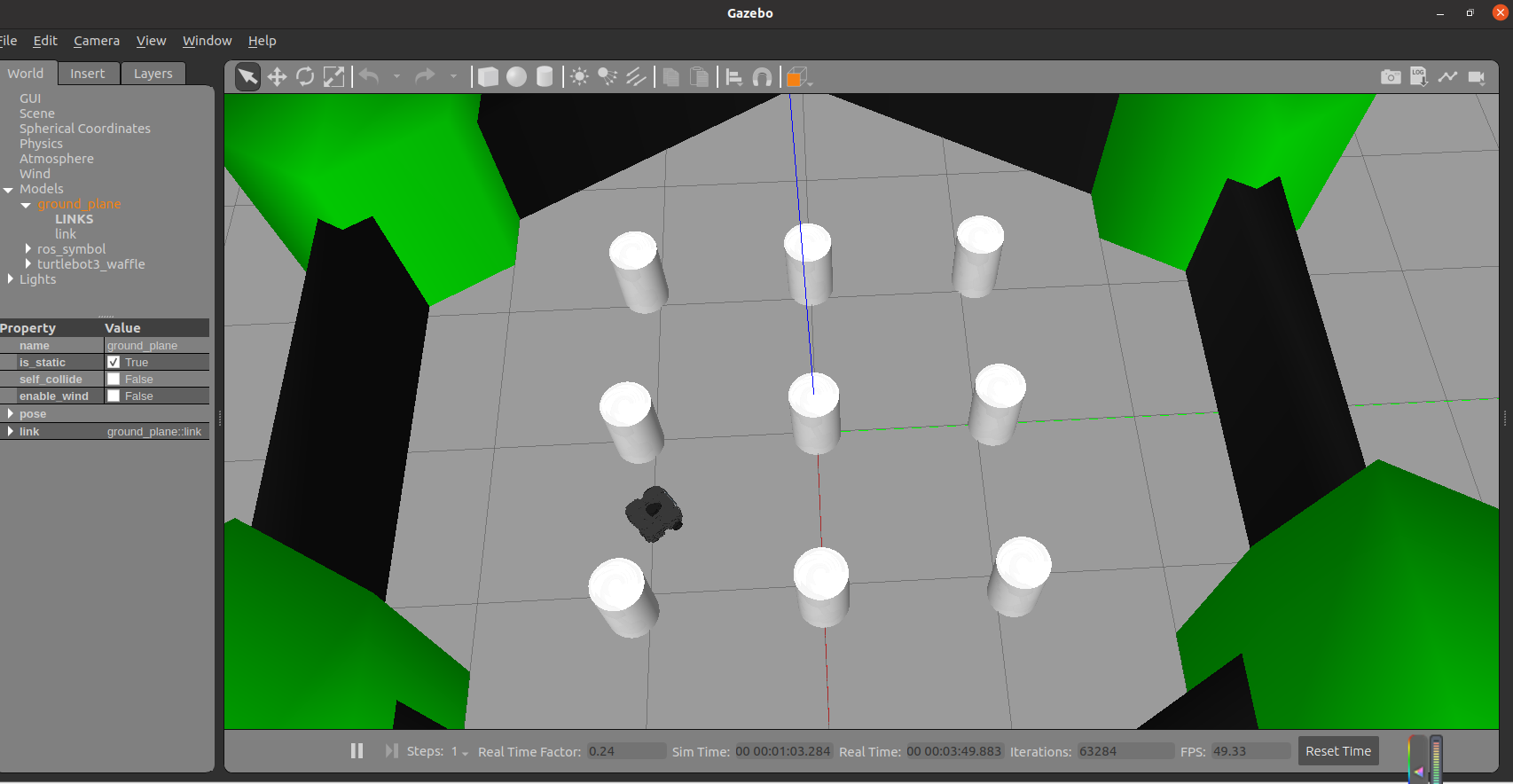

测试Gazebo是否安装成功

运行:

roslaunch turtlebot3_gazebo turtlebot3_world.launch

成功时显示:

二、测试功能包是否安装成功

1. 测试(如有异常请看2.Gazebo运行异常)

# 启动终端A

roslaunch turtlebot3_gazebo turtlebot3_world.launch

# 另起终端B

roslaunch turtlebot3_teleop turtlebot3_teleop_key.launch

# 上一条注:键盘控制小车。若gazebo中小车有移动,则说明控制程序正确

# 续注:验证完毕后,结束键盘控制进程(Ctrl+C)

# 在终端B中

roslaunch turtlebot3_gazebo turtlebot3_simulation.launch

# 上一条注:启动仿真模拟。若gazebo中小车自主移动,则说明模拟程序正确

# 续注:若gazebo卡住并在A中显示process has died,重复尝试两次。若仍然失败,考虑gazebo运行异常

# 在终端C中

rostopic list

# 上一条注:将会看到/camera/rgb/camera_info和/camera/rgb/image_raw

# 续注:若没有,则检测.bashrc文件中,模型是否为waffle

# 在终端C中

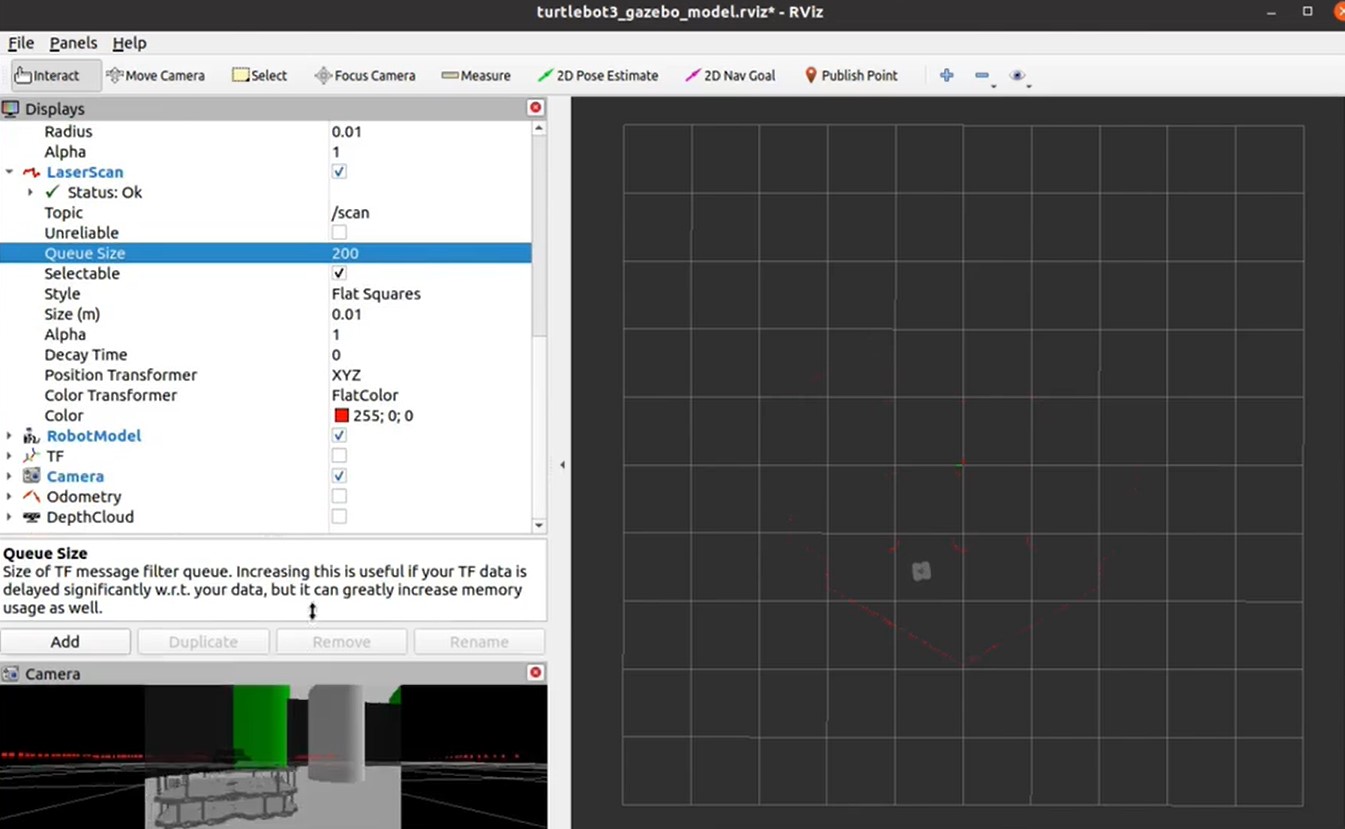

roslaunch turtlebot3_gazebo turtlebot3_gazebo_rviz.launch

# 上一条注:打开左侧Camera,等待良久后,能看到图二

# 续注:验证完毕后,结束终端B、C的进程(Ctrl+C)

图二

Gazebo运行异常时

gazebo 启动异常以及解决

问题1:RLException: Invalid tag: environment variable 'TURTLEBOT3_MODEL' is not set.

在.bashrc中添加一行配置:

export TURTLEBOT3_MODEL=waffle

意思是选择仿真的模型,为waffle。

问题2:gazebo黑屏

检查~/.gazebo下的models是否安装完成,如果没有安装完成,运行下面的代码。

# 预加载Gazebo模型以免过慢。

cd ~/.gazebo/

# 第一种方案(官网下载,速度较慢):

mkdir -p models

cd ~/.gazebo/models/

wget http://file.ncnynl.com/ros/gazebo_models.txt

wget -i gazebo_models.txt

ls model.tar.g* | xargs -n1 tar xzvf

# 第二种方案(下载现成的models):

git clone https://gitee.com/yltzdhbc/gazebo_models

mv gazebo_models models #将下载的文件夹改名为models

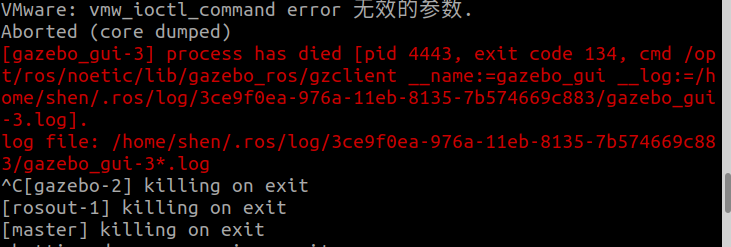

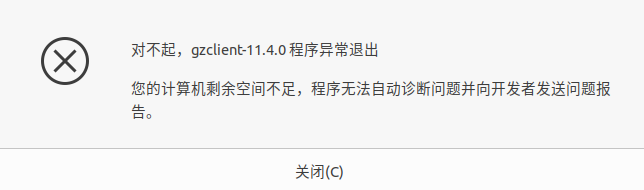

问题3:VMware: vmw_ioctl_command error Invalid argument(无效的参数)

解决:

echo "export SVGA_VGPU10=0" >> ~/.bashrc

source ~/.bashrc

问题4:[Err] [REST.cc:205] Error in REST request

解决:

sudo gedit ~/.ignition/fuel/config.yaml

# 将url : https://api.ignitionfuel.org使用 # 注释掉

# 再添加url: https://api.ignitionrobotics.org

问题5:启动时抛出异常:[gazebo-2] process has died [pid xxx, exit code 255, cmd.....

解决:

killall gzserver

killall gzclient

# 等待一会儿或者重启也行,这个问题仅仅是因为你上一个gazebo没关彻底,残留了点东西,导致新的打不开。

如果还有其他问题再做以下的事情:

除了Ubuntu20.04,尽量不要第一时间尝试更新,可能会越解决越糟糕!

# 添加源

sudo sh -c 'echo "deb http://packages.osrfoundation.org/gazebo/ubuntu-stable `lsb_release -cs` main" > /etc/apt/sources.list.d/gazebo-stable.list'

wget https://packages.osrfoundation.org/gazebo.key -O - | sudo apt-key add -

# 安装或更新

sudo apt-get update

sudo apt-get upgrade

sudo apt-get install gazebo11

# Ubuntu16.04对应gazebo7,Ubuntu18.04对应gazebo9,下同

sudo apt-get install libgazebo11-dev

三、导入程序并理解作用

直接git clone(也可以手动复制导入)

cd ~/catkin_ws/src

git clone https://gitee.com/shandianchengzi/learning_topic.git # 克隆我已经测试过的程序

cd ~/catkin_ws/ && catkin_make

chmod +x ~/catkin_ws/src/learning_topic/scripts/*.py # 将所有的python程序设置为允许执行的文件

理解程序

在你自己的功能包的src下面(比如我的功能包叫catkin_ws/src/learning_topic)

~/catkin_ws/src/learning_topic/scripts/ # 用于存放python脚本

~/catkin_ws/src/learning_topic/launch/ # 用于存放启动程序(.launch文件)

~/catkin_ws/src/learning_topic/worlds/ # 用于存放gazebo仿真模拟的地图模型(.worlds文件)

01follower.py

源代码

~/catkin_ws/src/learning_topic/scripts/目录下。

#!/usr/bin/env python3

# 若使用的是python2,将第一行改成python2

# 若使用的是python,将第一行改成python

# 之后的程序同理

import rospy

from sensor_msgs.msg import Image # 从传感器图片的库中导入Image数据类型

def image_callback(msg):

pass

rospy.init_node('follower') # 建立结点follower

image_sub = rospy.Subscriber('camera/rgb/image_raw', Image, image_callback)

# 以上括号内分别是 接受的topic名称, 数据类型, 触发的回调函数

rospy.spin()

运行方式

chmod +x 01follower.py

rosrun learning_topic 01follower.py

运行结果

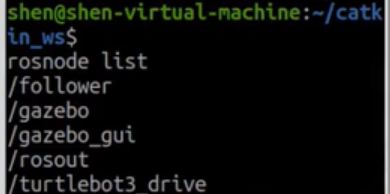

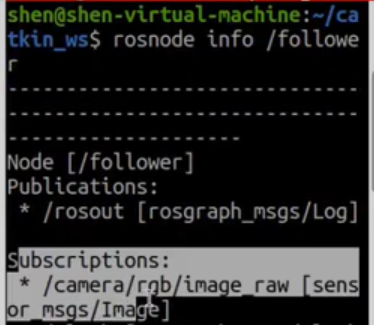

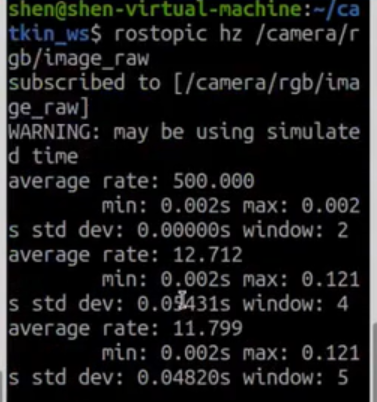

结果是生成了一个名叫'follower'的结点,它订阅了'camera/rgb/image_raw'话题。

可用下列指令检验:

rosnode list # 检查是否出现/follower结点

rosnode info /follower # 如果出现了/follower结点,用这个指令查看/follower结点的详细信息

rostopic hz /camera/rgb/image_raw # 不出意外的话,/follower结点订阅的话题是/camera/rgb/image_raw。用这个指令查看话题发布的情况。

检验结果应如下图所示:

02follower_opencv.py

源代码

~/catkin_ws/src/learning_topic/scripts/目录下。

#!/usr/bin/env python3

import rospy

from sensor_msgs.msg import Image

import cv2, cv_bridge # 导入opencv模块和协议模块

def image_callback(msg):

image = bridge.imgmsg_to_cv2(msg,desired_encoding="bgr8")

# 将ros_image通过蓝绿红8位色空间转换为OpenCV图像,结果返回给image,类参数bridge转换函数

if(image.all() == None):

print("Can't get your image, please check your code!")

else :

# print(image.size, image.shape) # 输出图像大小以及形状

cv2.namedWindow("showImage",cv2.WINDOW_NORMAL) # 建立名为showImage的窗口 窗口类为 cv2内置的NORMAL窗口

cv2.imshow("showImage",image[:,:,0]) # 在showImage中显示二维图像

cv2.waitKey(3) # waitkey()延时显示图像,在imshow之后,没有waitkey()函数图像将无法显示

rospy.init_node('follower',anonymous = True) # anonymous 同步选项 每公布一条消息就接受一个消息

bridge = cv_bridge.CvBridge() # 创建CvBridge对象

image_sub=rospy.Subscriber('/camera/rgb/image_raw',Image,image_callback)

# 以上括号内分别是 接受的topic名称, 数据类型, 触发的回调函数

rospy.spin()

运行结果

能看到一个名字叫做showImage的窗口(不是下图的窗口名)。

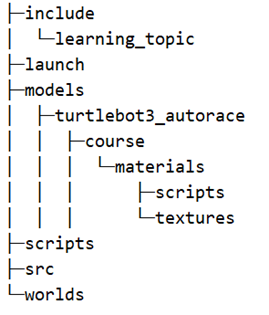

名为course的model

~/catkin_ws/src/learning_topic/models/目录下。

-

这个model可以在turtlebot3_simulations库中找到。

具体位置是turtlebot3_simulations/turtlebot3_gazebo/models/turtlebot3_autorace/course。

拷贝到自己的功能包下即可。

-

若想了解model的自定义机制,可以从model的文件组织,以及如何调用model入手。

文件组织的逻辑:参考gazebo中给地面添加纹理,写得很清晰。

文件的调用关系:参考0course.launch、course.world两个文件中的下列部分:

<!-- 0course.launch中 --> <env name="GAZEBO_RESOURCE_PATH" value="$(find learning_topic)/models/turtlebot3_autorace/ground_picture" /> <!-- course.world中 --> <!-- Our ground image --> <include> <uri>model://turtlebot3_autorace/course</uri> <pose> 0 0 0 0 0 -1.54</pose> </include>

course.world

~/catkin_ws/src/learning_topic/worlds/目录下。

<sdf version="1.4">

<world name="default">

<scene>

<ambient>0.4 0.4 0.4 1</ambient>

<background>0.7 0.7 0.7 1</background>

<shadows>true</shadows>

</scene>

<!-- A global light source -->

<include>

<uri>model://sun</uri>

</include>

<!-- A ground plane -->

<include>

<uri>model://ground_plane</uri>

</include>

<!-- Our ground image -->

<include>

<uri>model://turtlebot3_autorace/course</uri>

<pose> 0 0 0 0 0 -1.54</pose>

</include>

<physics type="ode">

<real_time_update_rate>1000.0</real_time_update_rate>

<max_step_size>0.001</max_step_size>

<real_time_factor>1</real_time_factor>

<ode>

<solver>

<type>quick</type>

<iters>150</iters>

<precon_iters>0</precon_iters>

<sor>1.400000</sor>

<use_dynamic_moi_rescaling>1</use_dynamic_moi_rescaling>

</solver>

<constraints>

<cfm>0.00001</cfm>

<erp>0.2</erp>

<contact_max_correcting_vel>2000.000000</contact_max_correcting_vel>

<contact_surface_layer>0.01000</contact_surface_layer>

</constraints>

</ode>

</physics>

</world>

</sdf>

0course.launch

源代码

这个程序需要根据你自己的功能包名字修改一些地方。

<arg name="world_name" value="$(find turtlebot3_gazebo)/worlds/empty.world"/>改成<arg name="world_name" value="$(find learning_topic)/worlds/course.world"/>。(改成自己的功能包的名字)

~/catkin_ws/src/learning_topic/launch/目录下。

<launch>

<!-- $(find learning_topic)/models/turtlebot3_autorace是你的model文件的路径 -->

<env name="GAZEBO_RESOURCE_PATH" value="$(find learning_topic)/models/turtlebot3_autorace/ground_picture" />

<arg name="model" default="$(env TURTLEBOT3_MODEL)" doc="model type [burger, waffle, waffle_pi]"/>

<arg name="x_pos" default="0.0"/>

<arg name="y_pos" default="0.0"/>

<arg name="z_pos" default="0.0"/>

<include file="$(find gazebo_ros)/launch/empty_world.launch">

<!-- $(find learning_topic)/worlds/course.world是你的world文件的路径 -->

<arg name="world_name" value="$(find learning_topic)/worlds/course.world"/>

<arg name="paused" value="false"/>

<arg name="use_sim_time" value="true"/>

<arg name="gui" value="true"/>

<arg name="headless" value="false"/>

<arg name="debug" value="false"/>

</include>

<param name="robot_description" command="$(find xacro)/xacro $(find turtlebot3_description)/urdf/turtlebot3_$(arg model).urdf.xacro" />

<!-- 增设一个状态发布结点robot_state_publisher -->

<node pkg="robot_state_publisher" type="robot_state_publisher" name="robot_state_publisher">

<param name="publish_frequency" type="double" value="30.0" />

</node>

<node pkg="gazebo_ros" type="spawn_model" name="spawn_urdf" args="-urdf -model turtlebot3_$(arg model) -x $(arg x_pos) -y $(arg y_pos) -z $(arg z_pos) -param robot_description" />

</launch>

可以和官方给的turtlebot3_empty_world.launch做对比。

<launch>

<arg name="model" default="$(env TURTLEBOT3_MODEL)" doc="model type [burger, waffle, waffle_pi]"/>

<arg name="x_pos" default="0.0"/>

<arg name="y_pos" default="0.0"/>

<arg name="z_pos" default="0.0"/>

<include file="$(find gazebo_ros)/launch/empty_world.launch">

<arg name="world_name" value="$(find turtlebot3_gazebo)/worlds/empty.world"/>

<arg name="paused" value="false"/>

<arg name="use_sim_time" value="true"/>

<arg name="gui" value="true"/>

<arg name="headless" value="false"/>

<arg name="debug" value="false"/>

</include>

<param name="robot_description" command="$(find xacro)/xacro --inorder $(find turtlebot3_description)/urdf/turtlebot3_$(arg model).urdf.xacro" />

<node pkg="gazebo_ros" type="spawn_model" name="spawn_urdf" args="-urdf -model turtlebot3_$(arg model) -x $(arg x_pos) -y $(arg y_pos) -z $(arg z_pos) -param robot_description" />

</launch>

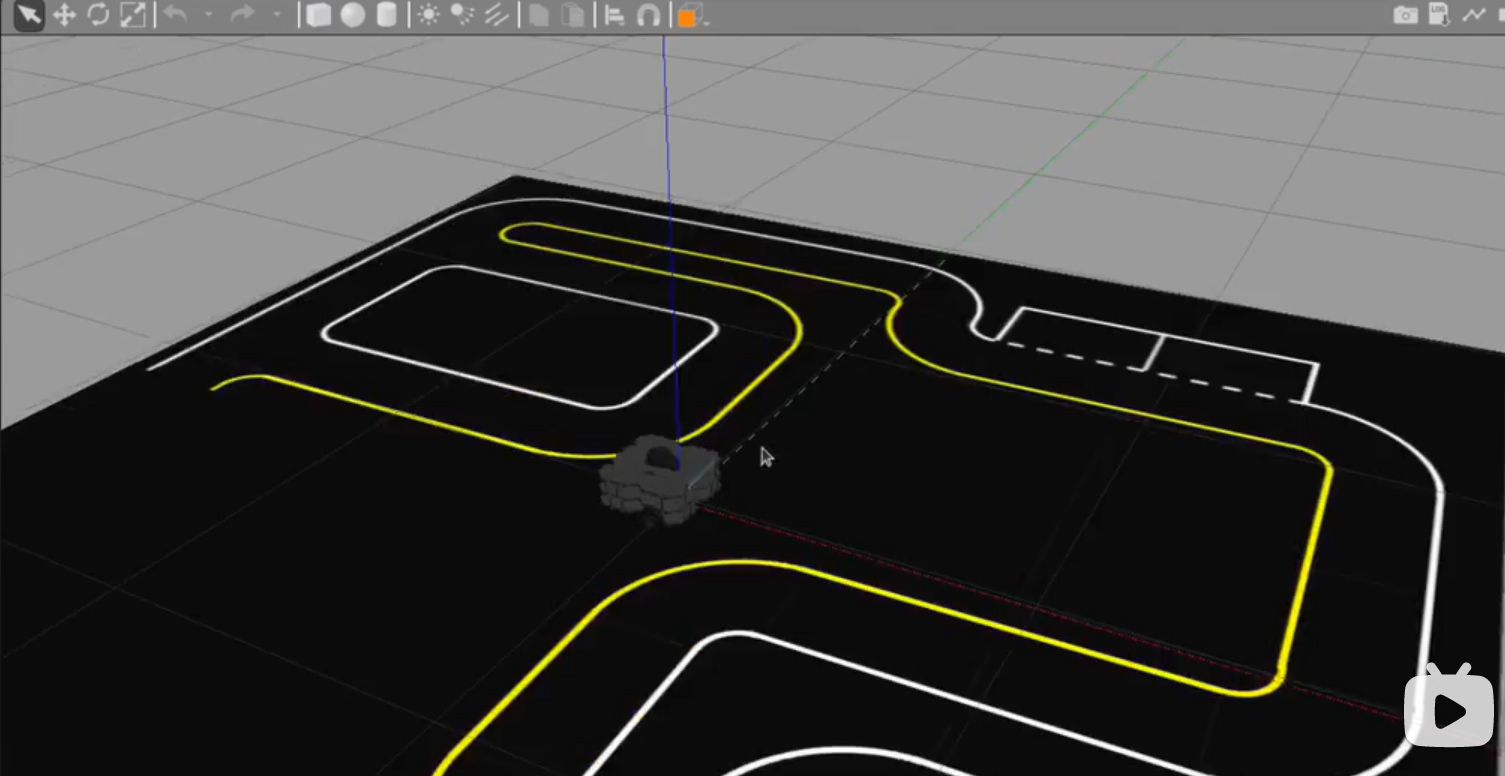

运行方式

理想的文件夹组织结构:

roslaunch learning_topic 0course.launch

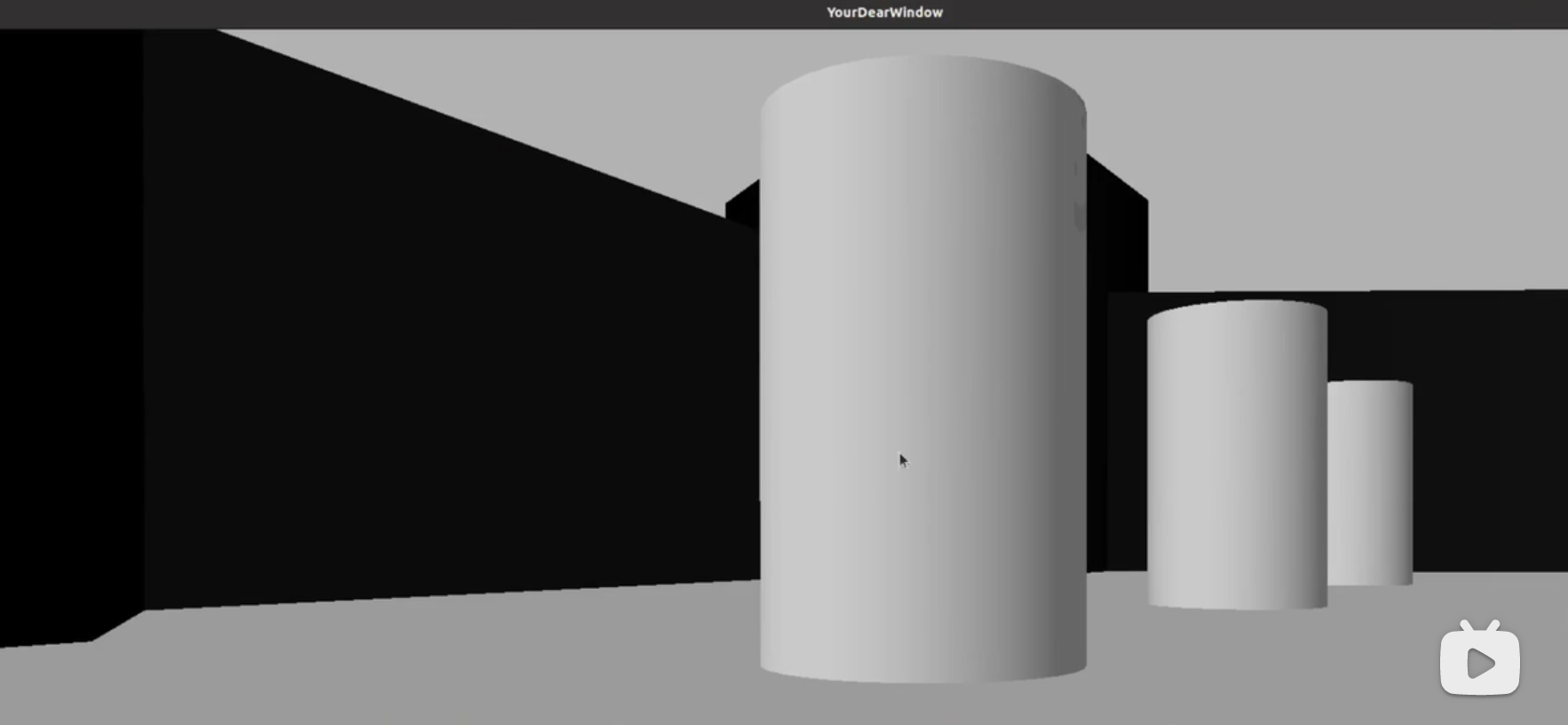

运行结果

03follower_color_filter.py

源代码

~/catkin_ws/src/learning_topic/scripts/目录下。

#!/usr/bin/env python3

# 若使用的是python3,将第一行改成python3

import rospy ,cv2, cv_bridge , numpy

from sensor_msgs.msg import Image

class Follower:

def __init__(self):

self.bridge = cv_bridge.CvBridge() #创建CvBridge对象

self.image_sub=rospy.Subscriber('/camera/rgb/image_raw',Image,self.image_callback)

# 函数变量分别为:接受节点 、 接受消息类型、 回调函数

def image_callback(self,msg):

image = self.bridge.imgmsg_to_cv2(msg)

if(image.all()!=None):

hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

# 进行消息-> rgb -> hsv格式变量 的两步转换

lower_yellow = numpy.array([20,43,46])

upper_yellow = numpy.array([90,255,255])

# 建立蒙版参量 参量使用指针格式(inRange函数的要求)

mask = cv2.inRange(hsv, lower_yellow, upper_yellow)

masked = cv2.bitwise_and(image,image,mask=mask)

# 使用蒙版进行二值化 bitwise

cv2.namedWindow("showYellowOnly",cv2.WINDOW_NORMAL)

cv2.imshow("showYellowOnly",mask) #进行显示

cv2.waitKey(3)

rospy.init_node('follower',anonymous = True)

follower = Follower()

rospy.spin()

运行结果

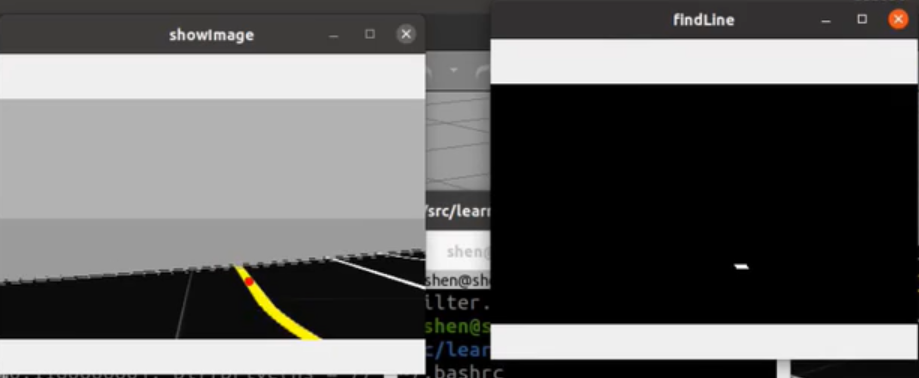

04follower_line_finder.py

源代码

~/catkin_ws/src/learning_topic/scripts/目录下。

#!/usr/bin/env python3

# 若使用的是python3,将第一行改成python3

import rospy ,cv2, cv_bridge , numpy

from sensor_msgs.msg import Image

class Follower:

def __init__(self):

self.bridge = cv_bridge.CvBridge()

self.image_sub = rospy.Subscriber(

'/camera/rgb/image_raw',

Image,

self.image_callback )

def image_callback(self,msg):

image = self.bridge.imgmsg_to_cv2(msg,

desired_encoding='bgr8')

hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

lower_yellow = numpy.array([20,43,46])

upper_yellow = numpy.array([90,255,255])

mask = cv2.inRange(hsv, lower_yellow, upper_yellow)

# 同Exp4-2相同 不多做介绍

h,w,d= image.shape

top = 3*h/4

bot = top + 20

# 在图像的下3/4处进行切片 注意:image纵向向下为x正向 横向向右为y正向

mask[int(0):int(top),:] = 0

mask[int(bot):int(h),:] = 0

M = cv2.moments(mask)

# moments(): Opencv中用于计算图像中心的函数类 参见Opencv官网

if M['m00']>0:

cx = int(M['m10']/M['m00'])

cy = int(M['m01']/M['m00'])

cv2.circle(image,(cx,cy),20,(0,0,255),-1)

# 对切片之后的图像计算中心,并标记小圆圈

cv2.namedWindow("showImage",cv2.WINDOW_NORMAL)

cv2.imshow("showImage",image)

cv2.namedWindow("findLine",cv2.WINDOW_NORMAL)

cv2.imshow("findLine",mask)

cv2.waitKey(3)

rospy.init_node('follower',anonymous = True)

follower = Follower()

rospy.spin()

运行结果

注意红点和白点,这是小车即将前往的方向。

05follower_proporation.py

源代码

~/catkin_ws/src/learning_topic/scripts/目录下。

#!/usr/bin/env python3

# 若使用的是python3,将第一行改成python3

import rospy ,cv2S, cv_bridge , numpy

from sensor_msgs.msg import Image

from geometry_msgs.msg import Twist

class Follower:

def __init__(self):

self.bridge = cv_bridge.CvBridge()

self.image_sub = rospy.Subscriber('/camera/rgb/image_raw',Image,self.image_callback)

self.cmd_vel_pub = rospy.Publisher('cmd_vel',Twist,queue_size =1) # 发布话题使小车运动

self.twist =Twist()

def image_callback(self,msg):

image = self.bridge.imgmsg_to_cv2(msg,desired_encoding='bgr8')

hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

lower_yellow = numpy.array([20,43,46])

upper_yellow = numpy.array([90,255,255])

mask = cv2.inRange(hsv, lower_yellow, upper_yellow)

h,w,d= image.shape

top = 5*h/6

bot = top + 20

mask[int(0):int(top),:] = 0

mask[int(bot):int(h),:] = 0

#cut the image to a blade

M = cv2.moments(mask)

#class MOMENTS

if M['m00']>0:

cx = int(M['m10']/M['m00'])

cy = int(M['m01']/M['m00'])

cv2.circle(image,(cx,cy),20,(0,0,255),-1)

err = cx-w/2

self.twist.linear.x = 0.15 # 小车的线速度不易设置太快,否则容易脱离跑道

self.twist.angular.z = -float(err)/1000 # 小车运动的角度

self.cmd_vel_pub.publish(self.twist)

cv2.namedWindow("showImage",cv2.WINDOW_NORMAL)

cv2.imshow("showImage",image)

cv2.waitKey(3)

rospy.init_node('follower',anonymous = True)

follower = Follower()

rospy.spin()

运行结果

小车自动沿着黄线前进。(窗口名字应叫做showImage)

order.txt

#-#-# 视频开始时,以下准备工作全部就绪 #-#-#

# sudo apt-get install ros-noetic-gazebo-ros-pkgs ros-noetic-gazebo-ros-control

# sudo apt-get install ros-noetic-turtlebot3-gazebo

# sudo apt-get install ros-noetic-image-view

# 预加载Gazebo模型以免过慢。

cd ~/.gazebo/

# 第一种方案(官网下载,速度较慢):

mkdir -p models

cd ~/.gazebo/models/

wget http://file.ncnynl.com/ros/gazebo_models.txt

wget -i gazebo_models.txt

ls model.tar.g* | xargs -n1 tar xzvf

# 第二种方案(下载现成的models):

git clone https://gitee.com/yltzdhbc/gazebo_models

mv gazebo_models models #将下载的文件夹改名为models

cd ~/catkin_ws/src/

# git clone https://github.com/ROBOTIS-GIT/turtlebot3_simulations.git

git clone https://gitee.com/zthcool/turtlebot3_simulations

# git clone https://github.com/ROBOTIS-GIT/hls_lfcd_lds_driver

git clone https://gitee.com/hhdang/hls_lfcd_lds_driver

# git clone https://github.com/ROBOTIS-GIT/turtlebot3.git

git clone https://gitee.com/zthcool/turtlebot3

# git clone https://github.com/ROBOTIS-GIT/turtlebot3_msgs

git clone https://gitee.com/mieximiemie/turtlebot3_msgs

cd ~/catkin_ws && catkin_make

# 上一条注:一定要等catkin_make执行完!!!不然之后找不到包的时候非常难受

# 未遇到问题时不建议更新

# 添加源

sudo sh -c 'echo "deb http://packages.osrfoundation.org/gazebo/ubuntu-stable `lsb_release -cs` main" > /etc/apt/sources.list.d/gazebo-stable.list'

wget https://packages.osrfoundation.org/gazebo.key -O - | sudo apt-key add -

# 安装或更新

sudo apt-get update

sudo apt-get upgrade

sudo apt-get install gazebo11

# Ubuntu16.04对应gazebo7,Ubuntu18.04对应gazebo9,下同

sudo apt-get install libgazebo11-dev

# 将小车模型改成waffle

gedit ~/.bashrc

# 将export TURTLEBOT3_MODEL=burger改成export TURTLEBOT3_MODEL=waffle

source ~/.bashrc

#-#-# 视频开始时,以上准备工作全部就绪 #-#-#

#-#-# 测试环境是否正确 #-#-#

# 启动终端A

roslaunch turtlebot3_gazebo turtlebot3_world.launch

# 上一条注:若gazebo正确,应该是能看到下图(见图一)

# 另起终端B

roslaunch turtlebot3_teleop turtlebot3_teleop_key.launch

# 上一条注:键盘控制小车。若gazebo中小车有移动,则说明控制程序正确

# 续注:验证完毕后,结束键盘控制进程(Ctrl+C)

# 在终端B中

roslaunch turtlebot3_gazebo turtlebot3_simulation.launch

# 上一条注:启动仿真模拟。若gazebo中小车自主移动,则说明模拟程序正确

# 续注:若gazebo卡住并在A中显示process has died,重复尝试两次。若仍然失败,考虑gazebo运行异常

# 在终端C中

rostopic list

# 上一条注:将会看到/camera/rgb/camera_info和/camera/rgb/image_raw

# 续注:若没有,则检测.bashrc文件中,模型是否为waffle

# 在终端C中

roslaunch turtlebot3_gazebo turtlebot3_gazebo_rviz.launch

# 上一条注:打开左侧Camera,如果等待良久后,能看到图像,说明你的仿真非常对

# 续注:验证完毕后,结束终端B、C的进程(Ctrl+C)

#-#-# 测试结束 #-#-#

#-#-# 测试的过程中我要演示一个卡了我一下午的坑 #-#-#

# 注意,出现模型压根加载不了的时候,终端不一定会提示VMware: vmw_ioctl_command error Invalid argument(无效的参数)

# 但是有幸等到进程自然结束的时候,就会出现这一行报错。并且还会提示剩余空间不足。

# 如果有兴趣可以自己去等一会儿试试,这里我不浪费时间了。

# 解决方式:

echo "export SVGA_VGPU10=0" >> ~/.bashrc

source ~/.bashrc

# 更多问题请参考《ROS|⑥Turtlebot3仿真Waffle循线跟踪》

# https://shandianchengzi.gitee.io/2021/04/04/ROS%E5%BE%AA%E7%BA%BF%E8%B7%9F%E8%B8%AA/

#-#-# 演示结束 #-#-#

#-#-# 正式开始循线跟踪 #-#-#

# 注:在windows下编辑文件,并直接拖动到linux虚拟机运行,可能会报错说/r不存在。

# 因为windows和linux的换行是不一样的,所以最好在linux中复制粘贴程序,或者直接拷贝我的程序包。

# 拷贝操作如下:

cd ~/catkin_ws/src

git clone https://gitee.com/shandianchengzi/learning_topic.git # 克隆我已经测试过的程序

cd ~/catkin_ws/ && catkin_make

chmod +x ~/catkin_ws/src/learning_topic/scripts/*.py # 将所有的python程序设置为允许执行的文件

# 下面所有的程序都放在learning_topic功能包下,

# 如果你们的在别的功能包,就把learning_topic改成自己的功能包的名字

---------------01follower.py Beg----------------

#!/usr/bin/env python3

# 若使用的是python2,将第一行改成python2

# 若使用的是python,将第一行改成python

# 之后的程序同理

import rospy

from sensor_msgs.msg import Image # 从传感器图片的库中导入Image数据类型

def image_callback(msg):

pass

rospy.init_node('follower') # 建立结点follower

image_sub = rospy.Subscriber('camera/rgb/image_raw', Image, image_callback)

# 以上括号内分别是 接受的topic名称, 数据类型, 触发的回调函数

rospy.spin()

---------------01follower.py End----------------

rosrun learning_topic 01follower.py # 运行learning_topic功能包中的01follower.py

rosnode list # 如果出现了/follower,恭喜你!!!

rosnode info /follower

rostopic hz /camera/rgb/image_raw # 能看到图片信息的反馈

# 终止01follower

---------------02follower_opencv.py Beg----------------

#!/usr/bin/env python3

import rospy

from sensor_msgs.msg import Image

import cv2, cv_bridge # 导入opencv模块和协议模块

def image_callback(msg):

image = bridge.imgmsg_to_cv2(msg,desired_encoding="bgr8")

# 将ros_image通过蓝绿红8位色空间转换为OpenCV图像,结果返回给image,类参数bridge转换函数

if(image.all() == None):

print("Can't get your image, please check your code!")

else :

# print(image.size, image.shape) # 输出图像大小以及形状

cv2.namedWindow("showImage",cv2.WINDOW_NORMAL) # 建立名为showImage的窗口 窗口类为 cv2内置的NORMAL窗口

cv2.imshow("showImage",image[:,:,0]) # 在showImage中显示二维图像

cv2.waitKey(3) # waitkey()延时显示图像,在imshow之后,没有waitkey()函数图像将无法显示

rospy.init_node('follower',anonymous = True) # anonymous 同步选项 每公布一条消息就接受一个消息

bridge = cv_bridge.CvBridge() # 创建CvBridge对象

image_sub=rospy.Subscriber('/camera/rgb/image_raw',Image,image_callback)

# 以上括号内分别是 接受的topic名称, 数据类型, 触发的回调函数

rospy.spin()

---------------02follower_opencv.py End----------------

roslaunch turtlebot3_gazebo turtlebot3_simulation.launch # 启动仿真模拟

rosrun learning_topic 02follower_opencv.py

# 可以看到showImage窗口,并看到图像在动

# 终止所有终端的进程

# 将turtlebot3_simulation/turtlebot3_gazebo/models全部拷贝到自己的功能包的models文件夹下

cp ~/catkin_ws/src/turtlebot3_simulation/turtlebot3_gazebo/models/* ~/catkin_ws/src/learning_topic/models/

---------------0course.launch Beg----------------

<launch>

<env name="GAZEBO_RESOURCE_PATH" value="$(find learning_topic)/models/turtlebot3_autorace/ground_picture" />

<arg name="model" default="$(env TURTLEBOT3_MODEL)" doc="model type [burger, waffle, waffle_pi]"/>

<arg name="x_pos" default="0.0"/>

<arg name="y_pos" default="0.0"/>

<arg name="z_pos" default="0.0"/>

<include file="$(find gazebo_ros)/launch/empty_world.launch">

<arg name="world_name" value="$(find learning_topic)/worlds/course.world"/>

<arg name="paused" value="false"/>

<arg name="use_sim_time" value="true"/>

<arg name="gui" value="true"/>

<arg name="headless" value="false"/>

<arg name="debug" value="false"/>

</include>

<param name="robot_description" command="$(find xacro)/xacro $(find turtlebot3_description)/urdf/turtlebot3_$(arg model).urdf.xacro" />

<node pkg="robot_state_publisher" type="robot_state_publisher" name="robot_state_publisher">

<param name="publish_frequency" type="double" value="30.0" />

</node>

<node pkg="gazebo_ros" type="spawn_model" name="spawn_urdf" args="-urdf -model turtlebot3_$(arg model) -x $(arg x_pos) -y $(arg y_pos) -z $(arg z_pos) -param robot_description" />

</launch>

---------------0course.launch End----------------

# 在自己的功能包下新建worlds文件夹,并自行设置0course.launch的内容

---------------course.world Beg----------------

<sdf version="1.4">

<world name="default">

<scene>

<ambient>0.4 0.4 0.4 1</ambient>

<background>0.7 0.7 0.7 1</background>

<shadows>true</shadows>

</scene>

<!-- A global light source -->

<include>

<uri>model://sun</uri>

</include>

<!-- A ground plane -->

<include>

<uri>model://ground_plane</uri>

</include>

<!-- Our ground image -->

<include>

<uri>model://turtlebot3_autorace/course</uri>

<pose> 0 0 0 0 0 -1.54</pose>

</include>

<physics type="ode">

<real_time_update_rate>1000.0</real_time_update_rate>

<max_step_size>0.001</max_step_size>

<real_time_factor>1</real_time_factor>

<ode>

<solver>

<type>quick</type>

<iters>150</iters>

<precon_iters>0</precon_iters>

<sor>1.400000</sor>

<use_dynamic_moi_rescaling>1</use_dynamic_moi_rescaling>

</solver>

<constraints>

<cfm>0.00001</cfm>

<erp>0.2</erp>

<contact_max_correcting_vel>2000.000000</contact_max_correcting_vel>

<contact_surface_layer>0.01000</contact_surface_layer>

</constraints>

</ode>

</physics>

</world>

</sdf>

---------------course.world End----------------

roslaunch learning_topic 0course.launch

---------------03follower_color_filter.py Beg----------------

#!/usr/bin/env python3

# 若使用的是python3,将第一行改成python3

import rospy ,cv2, cv_bridge , numpy

from sensor_msgs.msg import Image

# 导入模块

class Follower:

def __init__(self):

self.bridge = cv_bridge.CvBridge() #创建CvBridge对象

self.image_sub=rospy.Subscriber('/camera/rgb/image_raw',Image,self.image_callback)

# 函数变量分别为:接受节点 、 接受消息类型、 回调函数

def image_callback(self,msg):

image = self.bridge.imgmsg_to_cv2(msg)

if(image.all()!=None):

hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

# 进行消息-> rgb -> hsv格式变量 的两步转换

lower_yellow = numpy.array([20,43,46])

upper_yellow = numpy.array([90,255,255])

# 建立蒙版参量 参量使用指针格式(inRange函数的要求)

mask = cv2.inRange(hsv, lower_yellow, upper_yellow)

masked = cv2.bitwise_and(image,image,mask=mask)

# 使用蒙版进行二值化 bitwise

cv2.namedWindow("showYellowOnly",cv2.WINDOW_NORMAL)

cv2.imshow("showYellowOnly",mask) #进行显示

cv2.waitKey(3)

rospy.init_node('follower',anonymous = True)

follower = Follower()

rospy.spin()

---------------03follower_color_filter.py End----------------

---------------04follower_line_finder.py Beg----------------

#!/usr/bin/env python3

# 若使用的是python3,将第一行改成python3

import rospy ,cv2, cv_bridge , numpy

from sensor_msgs.msg import Image

class Follower:

def __init__(self):

self.bridge = cv_bridge.CvBridge()

self.image_sub = rospy.Subscriber(

'/camera/rgb/image_raw',

Image,

self.image_callback )

def image_callback(self,msg):

image = self.bridge.imgmsg_to_cv2(msg,

desired_encoding='bgr8')

hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

lower_yellow = numpy.array([20,43,46])

upper_yellow = numpy.array([90,255,255])

mask = cv2.inRange(hsv, lower_yellow, upper_yellow)

# 同Exp4-2相同 不多做介绍

h,w,d= image.shape

top = 3*h/4

bot = top + 20

# 在图像的下3/4处进行切片 注意:image纵向向下为x正向 横向向右为y正向

mask[int(0):int(top),:] = 0

mask[int(bot):int(h),:] = 0

M = cv2.moments(mask)

#moments(): Opencv中用于计算图像中心的函数类 参见Opencv官网

if M['m00']>0:

cx = int(M['m10']/M['m00'])

cy = int(M['m01']/M['m00'])

cv2.circle(image,(cx,cy),20,(0,0,255),-1)

# 对切片之后的图像计算中心,并标记小圆圈

cv2.namedWindow("showImage",cv2.WINDOW_NORMAL)

cv2.imshow("showImage",image)

cv2.namedWindow("findLine",cv2.WINDOW_NORMAL)

cv2.imshow("findLine",mask)

cv2.waitKey(3)

rospy.init_node('follower',anonymous = True)

follower = Follower()

rospy.spin()

---------------04follower_line_finder.py End----------------

---------------05follower_proporation.py Beg----------------

#!/usr/bin/env python3

# 若使用的是python3,将第一行改成python3

import rospy ,cv2, cv_bridge , numpy

from sensor_msgs.msg import Image

from geometry_msgs.msg import Twist

class Follower:

def __init__(self):

self.bridge = cv_bridge.CvBridge()

self.image_sub = rospy.Subscriber('/camera/rgb/image_raw',Image,self.image_callback)

self.cmd_vel_pub = rospy.Publisher('cmd_vel',Twist,queue_size =1)

self.twist =Twist()

def image_callback(self,msg):

image = self.bridge.imgmsg_to_cv2(msg,desired_encoding='bgr8')

hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

lower_yellow = numpy.array([20,43,46])

upper_yellow = numpy.array([90,255,255])

mask = cv2.inRange(hsv, lower_yellow, upper_yellow)

h,w,d= image.shape

top = 5*h/6

bot = top + 20

mask[int(0):int(top),:] = 0

mask[int(bot):int(h),:] = 0

#cut the image to a blade

M = cv2.moments(mask)

#class MOMENTS

if M['m00']>0:

cx = int(M['m10']/M['m00'])

cy = int(M['m01']/M['m00'])

cv2.circle(image,(cx,cy),20,(0,0,255),-1)

err = cx-w/2

self.twist.linear.x = 0.15 #(注:小车的速度不易设置太快,否则容易脱离跑道)

self.twist.angular.z = -float(err)/1000

self.cmd_vel_pub.publish(self.twist)

cv2.namedWindow("showImage",cv2.WINDOW_NORMAL)

cv2.imshow("showImage",image)

cv2.waitKey(3)

rospy.init_node('follower',anonymous = True)

follower = Follower()

rospy.spin()

---------------05follower_proporation.py End----------------

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 开发者必知的日志记录最佳实践

· SQL Server 2025 AI相关能力初探

· Linux系列:如何用 C#调用 C方法造成内存泄露

· AI与.NET技术实操系列(二):开始使用ML.NET

· 记一次.NET内存居高不下排查解决与启示

· Manus重磅发布:全球首款通用AI代理技术深度解析与实战指南

· 被坑几百块钱后,我竟然真的恢复了删除的微信聊天记录!

· 没有Manus邀请码?试试免邀请码的MGX或者开源的OpenManus吧

· 园子的第一款AI主题卫衣上架——"HELLO! HOW CAN I ASSIST YOU TODAY

· 【自荐】一款简洁、开源的在线白板工具 Drawnix