Applying a Centralized Log Management Platform, Day 04 (currently unused)

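4. Project implementation

4.1 Basic approach

Starting from the point where a request enters the system, write a log entry at each hop along the call chain, using whatever mechanism suits that hop, so that every stage of a user request is captured.

4.2 Front-end requests

4.2.1 Overview

Most projects today separate static and dynamic content, with static pages served by nginx. How should the request logs on nginx be collected?

One option is to write them to a log file, let filebeat collect the file, and forward the entries to kafka. Another is to use a lua script that sends them to kafka directly. This chapter takes the second approach to trace requests for static files.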

4.2.2 openresty

1) Official site

http://openresty.org/cn/

2) Download

https://openresty.org/download/openresty-1.15.8.2-win64.zip

3) Unzip, start, and test

4.2.3 lua-kafka

1) Download: https://github.com/doujiang24/lua-resty-kafka/archive/master.zip

2) Unzip it and copy the resty directory into lualib under the openresty installation directory

3) Modify the nginx configuration file

4) Test the log

location / {
    root html;
    index index.html index.htm;
    log_by_lua '
        local cjson = require "cjson"
        local producer = require "resty.kafka.producer"
        local broker_list = {
            { host = "39.98.133.153", port = 9103 },
        }
        local log_json = {}
        log_json["message"]="this is a test"
        log_json["from"]="nginx"
        log_json["rid"]="a"
        log_json["sid"]="b"
        log_json["tid"]="c"
        local message = cjson.encode(log_json);
        local bp = producer:new(broker_list, { producer_type = "async" })
        -- Send the log message. The second argument of send is the key, which kafka uses for routing:
        -- when the key is nil, data is written to the same partition for a period of time
        -- when a key is given, the message goes to the partition selected by the hash of the key
        local ok, err = bp:send("demo", nil, message)
        if not ok then
            ngx.log(ngx.ERR, "kafka send err:", err)
            return
        end
    ';
}
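The routing rule in the comments above (a given key always lands on the same partition) can be illustrated with a small sketch. It is written in Java, the language of the back-end services in this course, and is deliberately simplified: PartitionSketch and partitionFor are hypothetical names, and the hash used here is an assumption for illustration, not the one real kafka clients use (the official Java client, for instance, uses murmur2).

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/** Simplified illustration of key-based partition routing (not a real kafka partitioner). */
class PartitionSketch {

    /** Maps a key to a partition index in the range [0, numPartitions). */
    static int partitionFor(String key, int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        // Assumption: a plain array hash stands in for the client-specific hash function.
        int hash = Arrays.hashCode(key.getBytes(StandardCharsets.UTF_8));
        // Clear the sign bit so the modulo result is never negative.
        return (hash & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        // The same key is printed twice to show that it maps to the same partition.
        for (String key : new String[] {"rid-a", "rid-b", "rid-a"}) {
            System.out.println(key + " -> partition " + partitionFor(key, 3));
        }
    }
}
```

Using the rid as the key would keep all entries of one request on one partition, which preserves their relative order inside kafka, at the cost of a less even spread when a few keys dominate.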

Q&A:

Q: Why does the event carry two from values, nginx and kafka?

A: The logstash input appends its own from field through add_field. Compare the logstash configuration with its debug output:

kafka {
    bootstrap_servers => ["39.98.133.153:9103"]
    group_id => "logstash"
    topics => ["demo"]
    consumer_threads => 1
    decorate_events => true
    add_field => {"from" => "kafka"}
    codec => "json"
}

{
    "@version" => "1",
    "rid" => "a",
    "message" => "this is a test",
    "from" => [
        [0] "nginx",
        [1] "kafka"
    ],
    "@timestamp" => 2020-02-27T10:00:50.464Z,
    "tid" => "c",
    "sid" => "b"
}

4.2.4 Generating the rid

1) Strategy: the front-end nginx generates the request rid, which is then passed down through every stage of the request until it completes.

2) Method: a random string generated in lua is sufficient

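3) Define a lua function in lualib/resty/kafka/tools.lua

local tools = {}

-- Returns a random string of len characters drawn from A-Z, a-z and 0-9
function tools.getRandomStr(len)
    local randStr = ""
    for i = 1, len do
        -- draw the character class once, so each class is equally likely
        local kind = math.random(1, 3)
        local ch
        if kind == 1 then
            ch = string.char(math.random(0, 25) + 65)  -- A-Z
        elseif kind == 2 then
            ch = string.char(math.random(0, 25) + 97)  -- a-z
        else
            ch = math.random(0, 9)                     -- a single digit
        end
        randStr = randStr .. ch
    end
    return randStr
end

return tools

4) Modify nginx.conf. The random length can be tuned to the business; 10 is used here

local kafkatools = require "resty.kafka.tools"
log_json["rid"]=kafkatools.getRandomStr(10)

5) Reload with nginx -s reload, request port 80 and refresh a few times, then check in kibana that each request gets a different rid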

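4.2.5 Generating the tid

1) The tid is the terminal id, which distinguishes device types. It is commonly used when analysing traffic that comes from both phones and PCs

2) Strategy: read User-Agent from the request headers. The browser debug information looks like this:

3) The lua function

function tools.getDevice()
    local headers = ngx.req.get_headers()
    local userAgent = headers["User-Agent"]
    -- some clients send no User-Agent at all
    if not userAgent then
        return "unknown"
    end
    local mobile = {
        "iphone", "android", "touch", "ipad", "symbian", "htc", "palmos",
        "blackberry", "opera mini", "windows ce", "nokia", "fennec", "macintosh",
        "hiptop", "kindle", "mot", "webos", "samsung", "sonyericsson", "wap",
        "avantgo", "eudoraweb", "minimo", "netfront", "teleca", "windows nt"
    }
    userAgent = string.lower(userAgent)
    for i, v in ipairs(mobile) do
        -- plain-text search, so v is not interpreted as a lua pattern
        if string.find(userAgent, v, 1, true) then
            return v
        end
    end
    return userAgent
end

4) Set the tid by calling the function

log_json["tid"]=kafkatools.getDevice()

5) Verify with the chrome developer tools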

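4.2.6 ip and url

1) Use the lua script to obtain the real client ip and pass it downstream. The proxy server is the first gate a request passes through, so it is the first place where the client ip is available. The url can be read from the request

2) Add a function to tools

function tools.getClientIp()
    local headers = ngx.req.get_headers()
    local ip = headers["X-Real-IP"] or headers["X-Forwarded-For"]
        or ngx.var.remote_addr or "0.0.0.0"
    return ip
end

3) Modify the nginx configuration to add the ip information

log_json["ip"]=kafkatools.getClientIp()
log_json["message"]="nginx:"..(ngx.var.uri)

4) Verify in kibana

The sid, that is the currently logged-in user, is still missing. nginx cannot determine it on its own; it is covered later.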
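One detail of the forwarded ip is worth illustrating. When a request passes through several proxies, X-Forwarded-For may hold a comma-separated list, with the original client first by convention. The sketch below, in Java, picks the first usable entry and falls back to the socket address. ForwardedForSketch and firstClientIp are hypothetical names, not part of the course code, and the header is client-supplied, so the result should be treated as a hint rather than a verified address.

```java
/** Minimal sketch: pick the client ip from an X-Forwarded-For style header value. */
class ForwardedForSketch {

    /**
     * Returns the first non-empty entry of the header that is not "unknown",
     * or remoteAddr when the header is missing or holds no usable entry.
     */
    static String firstClientIp(String xForwardedFor, String remoteAddr) {
        if (xForwardedFor != null) {
            for (String part : xForwardedFor.split(",")) {
                String candidate = part.trim();
                if (!candidate.isEmpty() && !"unknown".equalsIgnoreCase(candidate)) {
                    return candidate;
                }
            }
        }
        return remoteAddr;
    }

    public static void main(String[] args) {
        System.out.println(firstClientIp("203.0.113.7, 10.0.0.1", "127.0.0.1"));
        System.out.println(firstClientIp(null, "127.0.0.1"));
    }
}
```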

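4.3 Microservice layer

4.3.1 Overview

The back-end services need to produce the same rid, tid and sid, together with the basic ip and url. On the java side this information is carried by HttpServletRequest. Once it has been read from the request object, the kafka integration built in the previous chapter can send the access record to the logging platform.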

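4.3.2 Proxy forwarding

1) Modify the nginx configuration file so that static files are served separately and api requests go to the back-end gateway. Pay attention to which headers are set and passed down

# add a location that forwards /api requests to the back end
location ^~ /api/ {
    proxy_pass http://127.0.0.1:8002;
}

2) Add an ApiController to web, with a /test request

@RestController
@RequestMapping("/api")
public class ApiController {

    @GetMapping("/test")
    public Object test(){
        return "this is a test";
    }
}

3) Test that requests entering through nginx are forwarded to the back end

4.3.3 The filter: the first gate

When a request reaches the back end, a filter or an interceptor is the first gate where the relevant information can be read and the call logged.

1) Add a filter to the utils project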

package com.itheima.logdemo.utils;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Configuration;
import org.springframework.core.annotation.Order;
import javax.servlet.*;
import javax.servlet.annotation.WebFilter;
import java.io.IOException;

@Configuration
@Order(1)
@WebFilter(filterName = "logFilter", urlPatterns = "/*")
public class LogFilter implements Filter {

    // Note: the from field of each log entry is the name of whichever app pulls in this filter
    @Value("${spring.application.name}")
    String appName;

    // Note: because utils logs through slf4j, it does not interfere with the logging of projects that depend on it
    private Logger logger = LoggerFactory.getLogger("kafka");

    @Override
    public void doFilter(ServletRequest request, ServletResponse response, FilterChain chain)
            throws IOException, ServletException {
        logger.info(new LogBean("rid","sid","tid",appName,"I am filter").toString());
        chain.doFilter(request, response);
    }
}

2) Add component scanning to the web startup class so that spring picks up the filter

@ComponentScan("com.itheima.logdemo")

3) Start web and check that the filter log reaches kibana

4.3.4 Generating the ids

The logs now flow into the collection pipeline, but each id is still a hard-coded string. How do we obtain real values? This section covers id generation.

1) Define a CommonUtils class under utils. As on the front end, the rid is a random string produced by a utility function

public static String getRandomStr(int len){

char[]

chars="abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789".toCharAr

ray();

char[] str = new char[len];

for (int i = 0; i < len; i++) {

str[i] = chars[RandomUtils.nextInt(0,61)];

}

2)tid,同样的⽅式,取

header

3)ip与url

return new String(str);

}

//测试:

public static void main(String[] args) {

for (int i = 0; i < 5; i++) {

System.out.println(getRandomStr(10));

}

}

public static String getDevice(String userAgent){

String[] terminals = {

"iphone", "android", "touch", "ipad", "symbian", "htc", "palmos",

"blackberry", "opera mini", "windows ce", "nokia", "fennec","macintosh",

"hiptop", "kindle", "mot", "webos", "samsung", "sonyericsson",

"wap", "avantgo", "eudoraweb", "minimo", "netfront", "teleca","windows nt"

};

userAgent = userAgent.toLowerCase();

for (String terminal : terminals) {

if (userAgent.indexOf(terminal) != -1){

return terminal;

}

}

return userAgent;

}

//LogBean中新增ip和url属性,添加get,set⽅法

//CommonUtils添加获取ip的⽅法

public static String getIpAddress(HttpServletRequest request) {

String ip = request.getHeader("x-forwarded-for");

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("Proxy-Client-IP");

}

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("WL-Proxy-Client-IP");

}

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("HTTP_CLIENT_IP");

}

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getHeader("HTTP_X_FORWARDED_FOR");

}

4)进⼊kibana测试采集情况

4.3.5 参数传递

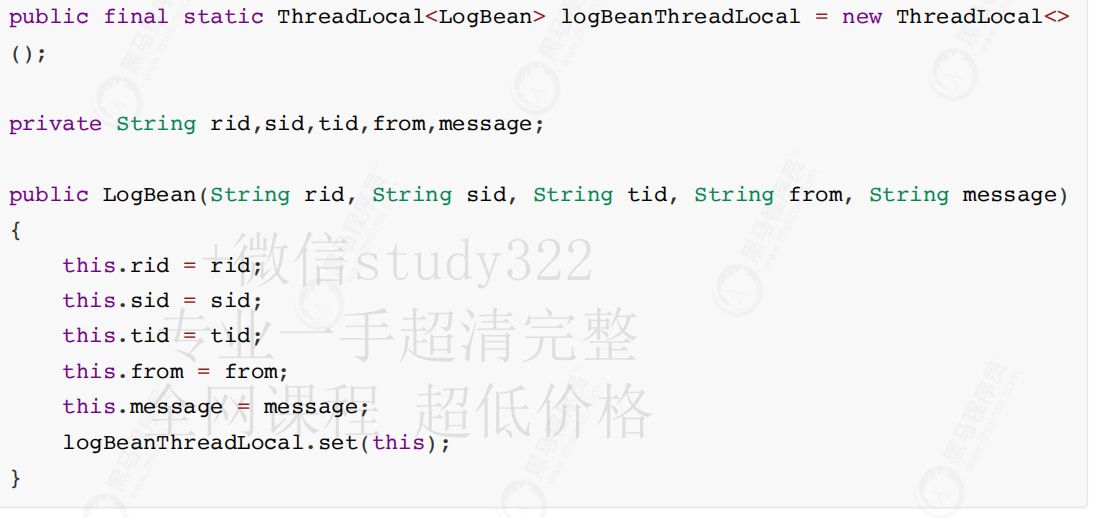

以上步骤,fifilter中⽣成了相关的id信息,如何传递到下⾯的⽅法中呢?答案就是threadlocal

1)修改LogBean的构造函数,⽣成的时候,将⾃⼰放⼊当前threadlocal,fifilter不需要改动

if (ip == null || ip.length() == 0 || "unknown".equalsIgnoreCase(ip)) {

ip = request.getRemoteAddr();

}

return ip;

}

//修改filter的构造,

url可以从request取到

@Override

public void

doFilter(ServletRequest request, ServletResponse response,

FilterChain chain) throws IOException,

ServletException

{

HttpServletRequest httpServletRequest = (HttpServletRequest) request;

LogBean logBean = new LogBean(CommonUtils.getRandomStr(10), "sid",

CommonUtils.getDevice(httpServletRequest.getHeader("User

Agent")),

appName, "I am filter");

logBean.setIp(CommonUtils.getIpAddress(httpServletRequest));

logBean.setUrl("java:" + httpServletRequest.getRequestURI());

logger.info(logBean.toString());

chain.doFilter(request, response);

}

public final static ThreadLocal<LogBean> logBeanThreadLocal = new ThreadLocal<>();

private String rid,sid,tid,from,message;

public LogBean(String rid, String sid, String tid, String from, String message) {
    this.rid = rid;
    this.sid = sid;
    this.tid = tid;
    this.from = from;
    this.message = message;
    logBeanThreadLocal.set(this);
}

2) Create ApiService

@Service
public class ApiService {

    private final static Logger logger = LoggerFactory.getLogger("kafka");

    public void test(){
        LogBean logBean = LogBean.logBeanThreadLocal.get();
        logBean.setMessage("I am service");
        logger.info(logBean.toString());
    }
}

3) Modify ApiController

@RestController
@RequestMapping("/api")
public class ApiController {

    private final static Logger logger = LoggerFactory.getLogger("kafka");

    @Autowired ApiService apiService;

    @GetMapping("/test")
    public Object test(){
        LogBean logBean = LogBean.logBeanThreadLocal.get();
        logBean.setMessage("I am Controller");
        logger.info(logBean.toString());
        apiService.test();
        return "this is a test";
    }
}

4) Request /api/test and check the collected logs in kibana. Within one request the rid and tid are identical, so the context was passed on. Two separate requests get different rids, while the tid stays the same because the terminal is the same.

4.3.6 Aspect

With the approach above, every method call needs hand-written logging. Is there a way to focus on business code without hard-coding the log statements?

1) Add the dependency to utils

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-aop</artifactId>
</dependency>

2) Define an annotation to mark methods

package com.itheima.logdemo.utils;

import java.lang.annotation.*;

@Target(ElementType.METHOD)
@Retention(RetentionPolicy.RUNTIME)
@Documented
public @interface LogInfo {
    String value() default "";
}

3) Define the aspect

package com.itheima.logdemo.utils;

import org.aspectj.lang.ProceedingJoinPoint;
import org.aspectj.lang.annotation.Around;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Pointcut;
import org.aspectj.lang.reflect.MethodSignature;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.stereotype.Component;

/**
 * Logging aspect
 */
@Aspect
@Component
public class LogAspect {

    private final static Logger logger = LoggerFactory.getLogger("kafka");

    @Pointcut("@annotation(com.itheima.logdemo.utils.LogInfo)")
    public void log() {}

    /**
     * Around advice
     */
    @Around(value = "log()")
    public Object around(ProceedingJoinPoint pjp) throws Throwable {
        MethodSignature signature = (MethodSignature) pjp.getSignature();
        String className = pjp.getTarget().getClass().getSimpleName();
        String methodName = signature.getName();
        LogBean logBean = LogBean.logBeanThreadLocal.get();
        logBean.setMessage("before "+className+"."+methodName);
        logger.info(logBean.toString());
        // run the method; an exception propagates to the caller instead of being swallowed
        Object o = pjp.proceed();
        logBean.setMessage("after "+className+"."+methodName);
        logger.info(logBean.toString());
        return o;
    }
}

4) Annotate the service method but not the controller, then request again

@LogInfo
public void test(){
    LogBean logBean = LogBean.logBeanThreadLocal.get();
    logBean.setMessage("I am service");
    try {
        Thread.sleep(500);
    } catch (InterruptedException e) {
        e.printStackTrace();
    }
    logger.info(logBean.toString());
}

5) Check the collected data in kibana
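To make the around advice less of a black box, the sketch below reproduces the same before/after pattern without spring, using a JDK dynamic proxy (one of the mechanisms spring AOP can use for interface-based beans). Everything in it is hypothetical and for illustration only: AroundSketch, Greeter, SimpleGreeter and withLogging are not part of the course code, and an in-memory list stands in for the kafka logger.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

/** Minimal sketch of around-style logging with a JDK dynamic proxy. */
class AroundSketch {

    interface Greeter {
        String greet(String name);
    }

    static class SimpleGreeter implements Greeter {
        @Override
        public String greet(String name) {
            return "hello " + name;
        }
    }

    /** Stands in for the kafka logger so the effect is easy to inspect. */
    static final List<String> LOG = new ArrayList<>();

    /** Wraps target so that every call through iface is logged before and after. */
    @SuppressWarnings("unchecked")
    static <T> T withLogging(T target, Class<T> iface) {
        InvocationHandler handler = (proxy, method, args) -> {
            String name = target.getClass().getSimpleName() + "." + method.getName();
            LOG.add("before " + name);
            try {
                return method.invoke(target, args);
            } catch (InvocationTargetException e) {
                // rethrow the original exception of the target method
                throw e.getCause();
            } finally {
                LOG.add("after " + name);
            }
        };
        return (T) Proxy.newProxyInstance(iface.getClassLoader(), new Class<?>[] {iface}, handler);
    }

    public static void main(String[] args) {
        Greeter greeter = withLogging(new SimpleGreeter(), Greeter.class);
        System.out.println(greeter.greet("zhangsan"));
        System.out.println(LOG);
    }
}
```

The business class contains no logging code at all; the wrapper decides what is recorded around each call, which is the same separation the @LogInfo annotation and the aspect provide.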

4.3.7 乱序问题

注意上⾯的结果,信息全了,但是为什么是乱序的呢?

原因:来⾃timestamp的值是logstash取出kafka的时间,因为消息通过kafka异步传送后,这个时间不

再能精确反映⽇志诞⽣的时间,也就⽆法保证顺序性,如何解决呢?

1)修改LogBean,再需要打印,也就是调toString时,将当前时间戳放进去

package com.itheima.logdemo.utils;

import com.alibaba.fastjson.JSON;

public class LogBean {

public final static ThreadLocal<LogBean> logBeanThreadLocal = new

ThreadLocal<>();

private String rid, sid, tid, from, message;

private long time;

public LogBean(String rid, String sid, String tid, String from, String

message) {

this.rid = rid;

this.sid = sid;

this.tid = tid;

this.from = from;

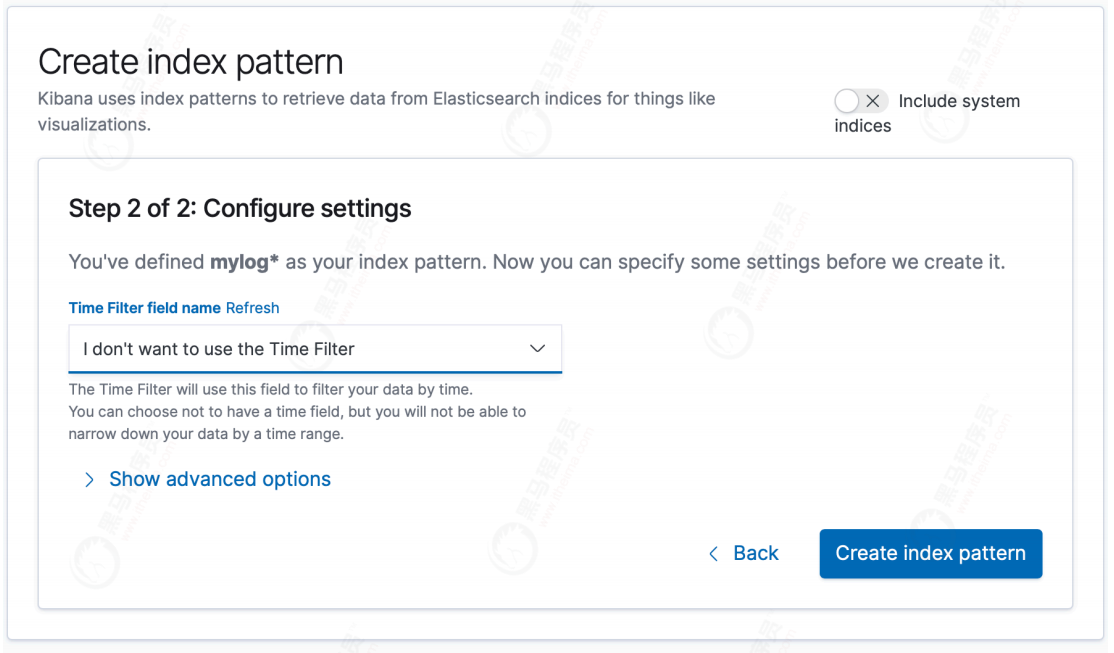

2)进⼊kibana重新创建⼀个索引,不再使⽤timestamp

3)kibana展示时,选time,并倒序

this.message = message;

logBeanThreadLocal.set(this);

}

//getters and setters ....

public long getTime() {

return

time;

}

public void

setTime(long time) {

this

.time = time;

}

@Override

public String toString() {

this.time = System.currentTimeMillis();

return JSON.toJSONString(this);

}

}

可以看到,message

严格按照时间顺序打印
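The effect of carrying a creation time in each entry can be shown with a small sketch: entries that arrive out of order are put back into emit order by sorting on their own time field. OrderingSketch, Event and messagesInEmitOrder are hypothetical names, and the timestamps are made-up values used only for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** Minimal sketch: restore emit order from a creation timestamp carried in each entry. */
class OrderingSketch {

    static final class Event {
        final long time;       // creation time in milliseconds, set by the producer
        final String message;

        Event(long time, String message) {
            this.time = time;
            this.message = message;
        }
    }

    /** Returns the messages sorted by creation time, regardless of arrival order. */
    static List<String> messagesInEmitOrder(List<Event> arrivalOrder) {
        List<Event> sorted = new ArrayList<>(arrivalOrder);
        // List.sort is stable, so entries with the same millisecond keep their arrival order
        sorted.sort(Comparator.comparingLong(e -> e.time));
        List<String> messages = new ArrayList<>();
        for (Event e : sorted) {
            messages.add(e.message);
        }
        return messages;
    }

    public static void main(String[] args) {
        List<Event> arrival = Arrays.asList(
                new Event(1003L, "after ApiService.test"),
                new Event(1001L, "I am filter"),
                new Event(1002L, "before ApiService.test"));
        System.out.println(messagesInEmitOrder(arrival));
    }
}
```

Millisecond resolution means two entries written within the same millisecond cannot be ordered by time alone, and clocks on different hosts may drift, so this ordering is only as good as the clocks that produce it.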

4.4 Cross-service calls

Problem: the threadlocal carries the log context within one application, but the project also makes calls across services, for example web calling the user microservice. How do the ids generated in the web module reach user? This chapter addresses that question.

4.4.1 Default RestTemplate

1) Modify the App class of the web module and add a restTemplate

@LoadBalanced
@Bean
RestTemplate getRestTemplate() {
    return new RestTemplate();
}

2) Change the service method to make a remote call

package com.itheima.logdemo.web;

import com.itheima.logdemo.utils.LogBean;
import com.itheima.logdemo.utils.LogInfo;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;
import org.springframework.web.client.RestTemplate;

@Service
public class ApiService {

    private final static Logger logger = LoggerFactory.getLogger("kafka");

    @Autowired
    RestTemplate restTemplate;

    @LogInfo
    public void test(){
        LogBean logBean = LogBean.logBeanThreadLocal.get();
        logBean.setMessage("I am service");
        logger.info(logBean.toString());
        logBean.setMessage("before call user");
        logger.info(logBean.toString());
        String res = restTemplate.getForObject("http://user/info", String.class);
        logBean.setMessage("after call user, res=" + res);
        logger.info(logBean.toString());
    }
}

3) Add a UserController with an info method to the user module

package com.itheima.logdemo.user;

import com.itheima.logdemo.utils.LogBean;
import com.itheima.logdemo.utils.LogInfo;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class UserController {

    private static final Logger logger = LoggerFactory.getLogger("kafka");

    @LogInfo
    @RequestMapping("/info")
    public String info(){
        LogBean logBean = LogBean.logBeanThreadLocal.get();
        logBean.setMessage("I am user.controller");
        logger.info(logBean.toString());
        return "zhangsan";
    }
}

4) Remember the component scan path on the App class of the user module

@ComponentScan("com.itheima.logdemo")

5) Request api/test on web and verify in kibana

Note: the rid and tid of user, as well as the ip, are regenerated by its own filter. We want them to be the same values as in web, which raises the question of how to pass trace information along with a remote call.

4.4.2 Custom RestTemplate

Problem: the trace started in one microservice is broken when the next microservice is called. How can it be followed?

1) Define MyRestTemplate, extending the default RestTemplate and overriding the doExecute method

@Override
protected <T> T doExecute(URI url, HttpMethod method, RequestCallback requestCallback,
        ResponseExtractor<T> responseExtractor) throws RestClientException {
    Assert.notNull(url, "URI is required");
    Assert.notNull(method, "HttpMethod is required");
    ClientHttpResponse response = null;
    T result;
    try {
        ClientHttpRequest request = this.createRequest(url, method);
        if (requestCallback != null) {
            requestCallback.doWithRequest(request);
        }
        // This is the key part: copy the trace fields of the current thread into the outgoing headers
        LogBean logBean = LogBean.logBeanThreadLocal.get();
        if (logBean != null) {
            HttpHeaders httpHeaders = request.getHeaders();
            httpHeaders.add("rid", logBean.getRid());
            httpHeaders.add("sid", logBean.getSid());
            httpHeaders.add("tid", logBean.getTid());
            httpHeaders.add("ip", logBean.getIp());
        }
        response = request.execute();
        this.handleResponse(url, method, response);
        result = responseExtractor != null ? responseExtractor.extractData(response) : null;
    } catch (IOException ex) {
        String resource = url.toString();
        String query = url.getRawQuery();
        resource = query != null ? resource.substring(0, resource.indexOf('?')) : resource;
        throw new ResourceAccessException("I/O error on " + method.name()
                + " request for \"" + resource + "\": " + ex.getMessage(), ex);
    } finally {
        if (response != null) {
            response.close();
        }
    }
    return result;
}

2) Modify the App startup class of web to return the custom RestTemplate

@LoadBalanced
@Bean
RestTemplate getRestTemplate() {
    // return new RestTemplate();
    return new MyRestTemplate();
}

3) Rework the filter so that header values take priority

@Override
public void doFilter(ServletRequest request, ServletResponse response, FilterChain chain)
        throws IOException, ServletException {
    HttpServletRequest httpServletRequest = (HttpServletRequest) request;
    String rid = StringUtils.defaultIfBlank(httpServletRequest.getHeader("rid"),
            CommonUtils.getRandomStr(10));
    String sid = StringUtils.defaultIfBlank(httpServletRequest.getHeader("sid"),
            CommonUtils.getRandomStr(10));
    String tid = StringUtils.defaultIfBlank(httpServletRequest.getHeader("tid"),
            CommonUtils.getDevice(httpServletRequest.getHeader("User-Agent")));
    String ip = StringUtils.defaultIfBlank(httpServletRequest.getHeader("ip"),
            CommonUtils.getIpAddress(httpServletRequest));
    LogBean logBean = new LogBean(rid, sid, tid, appName, "I am filter");
    logBean.setIp(ip);
    logBean.setUrl("java:" + httpServletRequest.getRequestURI());
    logger.info(logBean.toString());
    chain.doFilter(request, response);
}

4) Request again and check in kibana that the trace is passed on

Conclusion: the rid and the other trace fields are now propagated

4.5 Generating the sid

So far the trace is largely complete, but one special case remains: user login, which is where the sid comes in.

4.5.1 Login

1) Modify the user module and add a microservice endpoint that checks the user name and password, to simulate login validation

@LogInfo
@RequestMapping("/check")
public boolean check(@RequestParam("username") String username,
        @RequestParam("password") String password){
    // check username and password
    LogBean logBean = LogBean.logBeanThreadLocal.get();
    logBean.setMessage("correct user");
    logger.info(logBean.toString());
    return true;
}

2) Add a login request to web that simulates logging in, with web calling the user microservice

@LogInfo
@RequestMapping("/login")
public String login(HttpServletRequest request, HttpServletResponse response){
    String username = request.getParameter("username");
    String password = request.getParameter("password");
    boolean ok = apiService.login(username,password);
    if (ok){
        Cookie cookie = new Cookie("sid",username);
        // set the path so that every request can read the cookie
        cookie.setPath("/");
        response.addCookie(cookie);
        return "success";
    }else {
        return "error";
    }
}

@LogInfo
public boolean login(String username, String password) {
    // uri variables let RestTemplate encode the parameter values
    return restTemplate.getForObject("http://user/check?username={username}&password={password}",
            boolean.class, username, password);
}

4.5.2 Generating the sid on the back end

1) Modify the filter to read the sid from the cookie

@Override
public void doFilter(ServletRequest request, ServletResponse response, FilterChain chain)
        throws IOException, ServletException {
    HttpServletRequest httpServletRequest = (HttpServletRequest) request;
    String cookieVal = null;
    Cookie[] cookies = httpServletRequest.getCookies();
    if (cookies != null){
        for (Cookie cookie : cookies) {
            if ("sid".equals(cookie.getName())){
                cookieVal = cookie.getValue();
                break;
            }
        }
    }
    String rid = StringUtils.defaultIfBlank(httpServletRequest.getHeader("rid"),
            CommonUtils.getRandomStr(10));
    String sid = StringUtils.defaultIfBlank(httpServletRequest.getHeader("sid"), cookieVal);
    String tid = StringUtils.defaultIfBlank(httpServletRequest.getHeader("tid"),
            CommonUtils.getDevice(httpServletRequest.getHeader("User-Agent")));
    String ip = StringUtils.defaultIfBlank(httpServletRequest.getHeader("ip"),
            CommonUtils.getIpAddress(httpServletRequest));
    LogBean logBean = new LogBean(rid, sid, tid, appName, "I am filter");
    logBean.setIp(ip);
    logBean.setUrl("java:" + httpServletRequest.getRequestURI());
    logger.info(logBean.toString());
    chain.doFilter(request, response);
}

2) Call the login endpoint: http://localhost/api/login?username=zhangsan&password=123

3) After a successful login, request http://localhost/api/test again

4) Check the sid in kibana
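The filter stores a LogBean in a ThreadLocal for every request, and servlet containers typically serve requests from a pool of reused threads. The sketch below shows, under that assumption, why clearing the ThreadLocal in a finally block keeps one request's context from leaking into the next one handled by the same thread. ContextCleanupSketch, RID, handle and leftoverAfter are hypothetical names; a single-thread executor stands in for the container's thread pool.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Minimal sketch: clear per-request ThreadLocal state when the request ends. */
class ContextCleanupSketch {

    static final ThreadLocal<String> RID = new ThreadLocal<>();

    /** Simulates handling one request: set the context, do the work, always clean up. */
    static String handle(String rid) {
        RID.set(rid);
        try {
            return "handled " + RID.get();
        } finally {
            // without this, the next task on the same pooled thread would still see rid
            RID.remove();
        }
    }

    /** Handles a request on a pooled thread, then reports what a later task on that thread sees. */
    static String leftoverAfter(String rid) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            pool.submit(() -> handle(rid)).get();
            return pool.submit(() -> RID.get()).get();
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(handle("abc"));
        System.out.println("leftover: " + leftoverAfter("abc"));
    }
}
```

In the filter, the equivalent step would be to wrap chain.doFilter in try/finally and call remove on the ThreadLocal in the finally block.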

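4.5.3 The sid on the front-end nginx

With the cookie set and read by the filter as described in the previous section, the sid appears in the back-end trace. How do the front-end nginx and lua obtain it?

1) Modify the lua script

log_by_lua '
    -- load the required lua modules
    local cjson = require "cjson"
    local producer = require "resty.kafka.producer"
    local kafkatools = require "resty.kafka.tools"
    -- kafka broker address; the ip must match the host.name setting of kafka
    local broker_list = {
        { host = "39.98.133.153", port = 9103 },
    }
    -- collect the log fields in a table that is encoded as json
    local log_json = {}
    log_json["message"]="nginx:"..(ngx.var.uri)
    log_json["from"]="nginx"
    log_json["rid"]=kafkatools.getRandomStr(10)
    -- read the sid from the cookie
    log_json["sid"]=ngx.var.cookie_sid
    -- add the time field; ngx.now() returns seconds, so convert to milliseconds to match the java side
    ngx.update_time()
    log_json["time"]=math.floor(ngx.now() * 1000)
    log_json["tid"]=kafkatools.getDevice()
    log_json["ip"]=kafkatools.getClientIp()
    -- encode the table as a json string
    local message = cjson.encode(log_json);
    -- create the asynchronous kafka producer
    local bp = producer:new(broker_list, { producer_type = "async" })
    -- Send the log message. The second argument of send is the key, which kafka uses for routing:
    -- when the key is nil, data is written to the same partition for a period of time
    -- when a key is given, the message goes to the partition selected by the hash of the key
    local ok, err = bp:send("demo2", nil, message)
    if not ok then
        ngx.log(ngx.ERR, "kafka send err:", err)
        return
    end
';

2) Log in again. Note that the request must go through the address proxied by nginx: http://localhost/api/login?username=zhangsan&password=123

If the rest endpoint on the microservice port is requested directly, the cookie it sets belongs to a different domain, and its value cannot be read.

3) Request the index.html home page again

4) Check the collected data in kibana

4.5.4 End-to-end test

1) Clear all cookies

2) Create a login page login.html in nginx

<form action="/api/login">
    <input name="username"/>
    <input type="password" name="password"/>
    <button type="submit">submit</button>
</form>

3) Request index.html and verify that the sid is empty

4) Request login.html

5) Log in, then request index.html again and verify the sid

6) Request the back-end endpoint /api/test and verify the complete request trace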

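4.6 Summary

1. Front-end trace collection: lua + kafka

2. The microservice filter as the first gate

3. threadlocal for passing context within a service

4. Aspect plus annotation for automatic log output

5. The out-of-order problem

6. Propagation across services

7. The sid for user login

Appendix:

kafka can be configured with multiple topics. Giving each microservice its own topic can help performance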