I. Initialization

1. Code structure

main_informer.py:

exp = Exp(args) # set experiments

exp_basic.py:

class Exp_Informer(Exp_Basic):

class Exp_Basic(object):

def __init__(self, args):

self._build_model() # build the model; the _build_model() hook is declared in the parent class Exp_Basic

model = model_dict[self.args.model] # look up the model class used to create the model object
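The call chain above (the entry script creates the experiment, the parent constructor calls `_build_model()`, and the model class is looked up in `model_dict`) can be sketched without PyTorch. This is a minimal illustration, not the repository's code: `DummyInformer` and the dictionary key are hypothetical stand-ins, and only the names `Exp_Basic`, `Exp_Informer`, `_build_model`, and `model_dict` come from the notes.

```python
from types import SimpleNamespace


class DummyInformer:
    """Hypothetical stand-in for the real Informer model class."""

    def __init__(self, d_model):
        self.d_model = d_model


class Exp_Basic:
    def __init__(self, args):
        self.args = args
        # The parent constructor triggers model construction.
        self.model = self._build_model()

    def _build_model(self):
        # Subclasses are expected to provide the actual model.
        raise NotImplementedError


class Exp_Informer(Exp_Basic):
    def _build_model(self):
        # Map the model name from the arguments to a class, then instantiate it.
        model_dict = {"informer": DummyInformer}
        return model_dict[self.args.model](d_model=self.args.d_model)


args = SimpleNamespace(model="informer", d_model=512)
exp = Exp_Informer(args)
print(type(exp.model).__name__, exp.model.d_model)  # -> DummyInformer 512
```

Keeping the lookup in a dictionary means a new model variant only needs a new entry, while the constructor logic in the parent class stays unchanged.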

model.py:

class Informer(nn.Module):

# DataEmbedding: embedding step (value embedding, positional encoding, and temporal-feature encoding of the input sequence)

self.enc_embedding = DataEmbedding(...)

self.dec_embedding = DataEmbedding(...)

self.encoder = Encoder(...) # Encoder: create the encoder object, build its structure, and initialize it

self.decoder = Decoder(...) # Decoder: create the decoder object, build its structure, and initialize it

self.projection = nn.Linear(...)

2. Functional structure

(1) Embedding (DataEmbedding)

models (folder)

embed.py:

class DataEmbedding(nn.Module):

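self.value_embedding = TokenEmbedding(...) # value embedding (maps the input values to vectors)

self.position_embedding = PositionalEmbedding(...) # positional encoding

self.temporal_embedding = TemporalEmbedding(...) # temporal-feature encoding

self.dropout = nn.Dropout(p=dropout) # prevents or reduces overfitting; p=0.05, so each element is zeroed with probability 0.05 during training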
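To make the dropout comment concrete, here is a small pure-Python sketch of inverted dropout, the scheme `nn.Dropout` uses: during training each element is zeroed with probability p and the survivors are scaled by 1/(1-p); in evaluation mode the input passes through unchanged. The function `dropout` below is an illustration written for this note, not PyTorch's implementation.

```python
import random


def dropout(values, p=0.05, training=True, rng=random):
    """Inverted dropout on a list of floats (illustrative, not PyTorch's code)."""
    if not training or p == 0.0:
        return list(values)
    scale = 1.0 / (1.0 - p)
    # Each element is zeroed with probability p; survivors are scaled up.
    return [0.0 if rng.random() < p else v * scale for v in values]


rng = random.Random(0)
x = [1.0] * 10000
y = dropout(x, p=0.05, rng=rng)
zero_fraction = sum(1 for v in y if v == 0.0) / len(y)
print(round(zero_fraction, 3))          # close to 0.05
print(dropout(x[:3], training=False))   # -> [1.0, 1.0, 1.0]
```

The scaling keeps the expected value of each element the same in training and evaluation, which is why no rescaling is needed at inference time.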

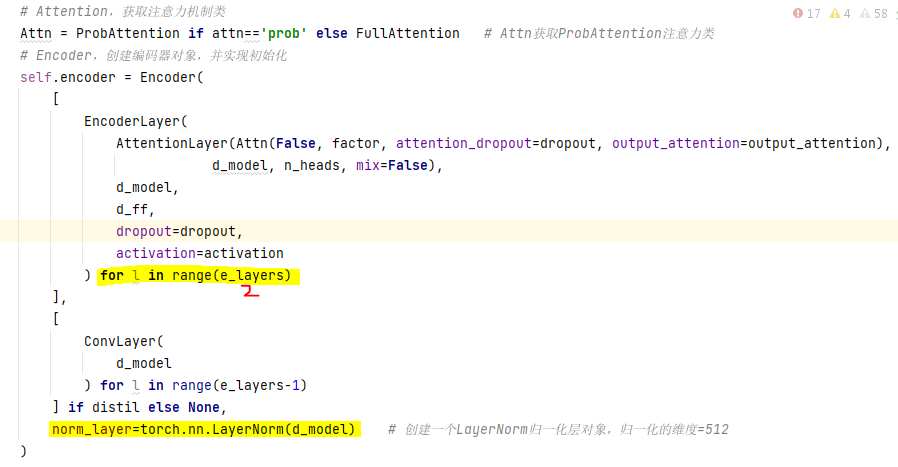

(2) Encoder

models (folder)

model.py:


attn.py:

class ProbAttention(nn.Module):

self.dropout = nn.Dropout(attention_dropout) # prevents or reduces overfitting; p=0.05, so each element is zeroed with probability 0.05 during training

class AttentionLayer(nn.Module):

# query: initialize a linear layer (in_features=512, out_features=512, bias=True)

self.query_projection = nn.Linear(...)

# key: initialize a linear layer (in_features=512, out_features=512, bias=True)

self.key_projection = nn.Linear(...)

# value: initialize a linear layer (in_features=512, out_features=512, bias=True)

self.value_projection = nn.Linear(...)

# out: initialize a linear layer (in_features=512, out_features=512, bias=True)

self.out_projection = nn.Linear(...)
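The role of the four projections can be illustrated with a small sketch. This is a simplified, hypothetical layer: the class name `SimpleAttentionLayer`, the head count `n_heads=8`, and the use of plain softmax attention as the inner attention are assumptions made for illustration; the notes only confirm the four 512-to-512 linear layers.

```python
import math
import torch
import torch.nn as nn


class SimpleAttentionLayer(nn.Module):
    """Hypothetical sketch: four projections around plain softmax attention."""

    def __init__(self, d_model=512, n_heads=8):
        super().__init__()
        self.n_heads = n_heads
        self.query_projection = nn.Linear(d_model, d_model)
        self.key_projection = nn.Linear(d_model, d_model)
        self.value_projection = nn.Linear(d_model, d_model)
        self.out_projection = nn.Linear(d_model, d_model)

    def forward(self, queries, keys, values):
        B, L, _ = queries.shape
        S = keys.shape[1]
        H = self.n_heads
        # Project, then split the d_model features into H heads.
        q = self.query_projection(queries).view(B, L, H, -1).transpose(1, 2)
        k = self.key_projection(keys).view(B, S, H, -1).transpose(1, 2)
        v = self.value_projection(values).view(B, S, H, -1).transpose(1, 2)
        # Scaled dot-product attention per head (stand-in for the inner attention).
        scores = q @ k.transpose(-2, -1) / math.sqrt(q.shape[-1])
        out = torch.softmax(scores, dim=-1) @ v  # (B, H, L, d_head)
        # Merge the heads back to d_model and apply the output projection.
        out = out.transpose(1, 2).reshape(B, L, -1)
        return self.out_projection(out)


layer = SimpleAttentionLayer()
x = torch.randn(2, 96, 512)  # (batch, length, d_model); sizes are example values
print(layer(x, x, x).shape)  # -> torch.Size([2, 96, 512])
```

Because the projections map 512 features to 512 features, the layer preserves the input shape, which is what allows it to be stacked and wrapped in residual connections.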

class EncoderLayer(nn.Module):

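# create a 1-D convolution layer (input channels=512, output channels=2048, kernel size=1)

self.conv1 = nn.Conv1d(...)

# create a 1-D convolution layer (input channels=2048, output channels=512, kernel size=1)

self.conv2 = nn.Conv1d(...)

self.norm1 = nn.LayerNorm(...) # create a layer-normalization layer; normalized dimension=512

self.norm2 = nn.LayerNorm(...) # create a layer-normalization layer; normalized dimension=512

self.dropout = nn.Dropout(...) # prevents or reduces overfitting; p=0.05, so each element is zeroed with probability 0.05 during training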
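As a quick sanity check on the sizes listed above, the number of trainable parameters in one EncoderLayer can be worked out by hand. The helper functions and the name `d_ff` are introduced here for illustration only; the count assumes the inner attention itself holds no parameters (it contains only a dropout), and that both LayerNorm layers use an elementwise affine transform.

```python
def linear_params(in_features, out_features, bias=True):
    return in_features * out_features + (out_features if bias else 0)


def conv1d_params(in_channels, out_channels, kernel_size, bias=True):
    return in_channels * out_channels * kernel_size + (out_channels if bias else 0)


def layernorm_params(dim):
    # One scale and one shift per normalized feature (elementwise_affine=True).
    return 2 * dim


d_model, d_ff = 512, 2048
attention = 4 * linear_params(d_model, d_model)  # query, key, value, out projections
conv1 = conv1d_params(d_model, d_ff, kernel_size=1)
conv2 = conv1d_params(d_ff, d_model, kernel_size=1)
norms = 2 * layernorm_params(d_model)            # norm1 and norm2
total = attention + conv1 + conv2 + norms
print(attention, conv1, conv2, norms, total)
# -> 1050624 1050624 1049088 2048 3152384
```

With a kernel size of 1, each convolution mixes channels at a single time step, so conv1 and conv2 together act as a position-wise feed-forward network that expands to 2048 channels and projects back to 512.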

encoder.py:

class ConvLayer(nn.Module):

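# create a 1-D convolution layer (input channels=512, output channels=512, kernel size=3, padding=1, padding mode=circular)

self.downConv = nn.Conv1d(...)

self.norm = nn.BatchNorm1d(...) # create a batch-normalization layer; number of features=512

self.activation = nn.ELU() # create the ELU activation function

self.maxPool = nn.MaxPool1d(...) # create a 1-D max-pooling layer (pooling window size=3, stride=2, padding=1)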
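The effect of ConvLayer on the sequence length follows from the standard output-length formula for 1-D convolution and pooling layers. The sketch below applies that formula to the settings listed above; the input lengths are example values chosen for illustration.

```python
def out_length(length, kernel_size, stride=1, padding=0, dilation=1):
    """Output length of a 1-D convolution or pooling layer."""
    return (length + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def conv_layer_length(length):
    # downConv: kernel_size=3, padding=1, stride=1 keeps the length unchanged.
    length = out_length(length, kernel_size=3, stride=1, padding=1)
    # BatchNorm1d and ELU act element-wise and do not change the length.
    # maxPool: kernel_size=3, stride=2, padding=1 roughly halves it.
    return out_length(length, kernel_size=3, stride=2, padding=1)


for seq_len in (96, 48, 25):
    print(seq_len, "->", conv_layer_length(seq_len))
# prints 96 -> 48, then 48 -> 24, then 25 -> 13
```

So the convolution leaves the length alone and the pooling step does the downsampling, halving the sequence between consecutive encoder layers.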

class Encoder(nn.Module):

# nn.ModuleList: a container that holds submodules and automatically registers each submodule's parameters with the network

self.attn_layers = nn.ModuleList(attn_layers)

self.conv_layers = nn.ModuleList(conv_layers)
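The difference between `nn.ModuleList` and a plain Python list is easy to check. In this minimal sketch the two small classes and the layer sizes are made up for the demonstration; the point is only that layers kept in a plain list are invisible to `parameters()`, so an optimizer would never update them.

```python
import torch.nn as nn


class WithPlainList(nn.Module):
    def __init__(self):
        super().__init__()
        # A plain Python list hides the layers from nn.Module bookkeeping.
        self.layers = [nn.Linear(4, 4) for _ in range(2)]


class WithModuleList(nn.Module):
    def __init__(self):
        super().__init__()
        # nn.ModuleList registers every layer as a submodule.
        self.layers = nn.ModuleList([nn.Linear(4, 4) for _ in range(2)])


plain_count = sum(p.numel() for p in WithPlainList().parameters())
registered_count = sum(p.numel() for p in WithModuleList().parameters())
print(plain_count, registered_count)  # -> 0 40
```

Registration also means the layers follow the parent module in calls such as `.to(device)`, `.eval()`, and `state_dict()`.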

Printing self.attn_layers and self.conv_layers gives:

ModuleList(

(0): EncoderLayer(

(attention): AttentionLayer(

(inner_attention): ProbAttention(

(dropout): Dropout(p=0.05, inplace=False)

)

(query_projection): Linear(in_features=512, out_features=512, bias=True)

(key_projection): Linear(in_features=512, out_features=512, bias=True)

(value_projection): Linear(in_features=512, out_features=512, bias=True)

(out_projection): Linear(in_features=512, out_features=512, bias=True)

)

(conv1): Conv1d(512, 2048, kernel_size=(1,), stride=(1,))

(conv2): Conv1d(2048, 512, kernel_size=(1,), stride=(1,))

(norm1): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

(norm2): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

(dropout): Dropout(p=0.05, inplace=False)

) # (0): EncoderLayer

(1): EncoderLayer(

(attention): AttentionLayer(

(inner_attention): ProbAttention(

(dropout): Dropout(p=0.05, inplace=False)

)

(query_projection): Linear(in_features=512, out_features=512, bias=True)

(key_projection): Linear(in_features=512, out_features=512, bias=True)

(value_projection): Linear(in_features=512, out_features=512, bias=True)

(out_projection): Linear(in_features=512, out_features=512, bias=True)

)

(conv1): Conv1d(512, 2048, kernel_size=(1,), stride=(1,))

(conv2): Conv1d(2048, 512, kernel_size=(1,), stride=(1,))

(norm1): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

(norm2): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

(dropout): Dropout(p=0.05, inplace=False)

) # (1): EncoderLayer

) # ModuleList

ModuleList(

(0): ConvLayer(

(downConv): Conv1d(512, 512, kernel_size=(3,), stride=(1,), padding=(1,), padding_mode=circular)

(norm): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(activation): ELU(alpha=1.0)

(maxPool): MaxPool1d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

) # (0): ConvLayer

) # ModuleList

norm_layer=torch.nn.LayerNorm(d_model) # create a LayerNorm layer; normalized dimension=512

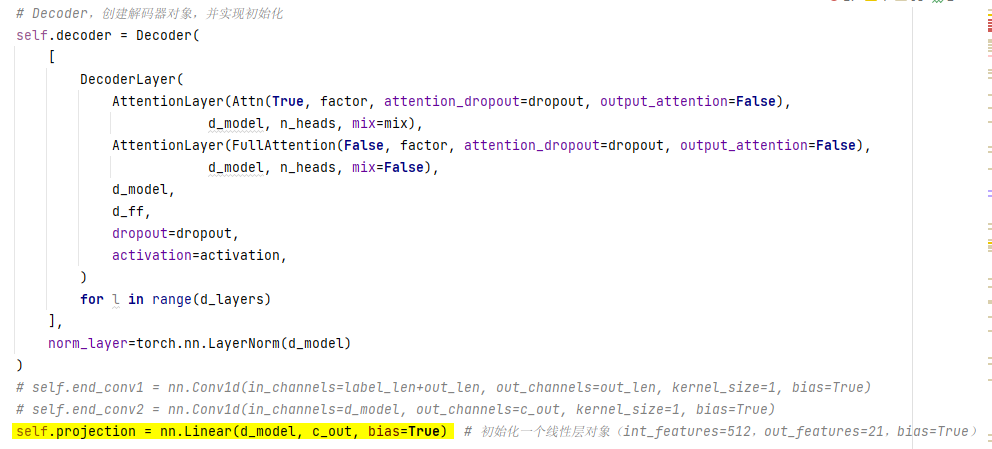

(3) Decoder

model.py:

Printing the decoder layers gives:

ModuleList(

(0): DecoderLayer(

(self_attention): AttentionLayer(

(inner_attention): ProbAttention(

(dropout): Dropout(p=0.05, inplace=False)

) # ProbAttention

(query_projection): Linear(in_features=512, out_features=512, bias=True)

(key_projection): Linear(in_features=512, out_features=512, bias=True)

(value_projection): Linear(in_features=512, out_features=512, bias=True)

(out_projection): Linear(in_features=512, out_features=512, bias=True)

) # (self_attention): AttentionLayer

(cross_attention): AttentionLayer(

(inner_attention): FullAttention(

(dropout): Dropout(p=0.05, inplace=False)

) # FullAttention

(query_projection): Linear(in_features=512, out_features=512, bias=True)

(key_projection): Linear(in_features=512, out_features=512, bias=True)

(value_projection): Linear(in_features=512, out_features=512, bias=True)

(out_projection): Linear(in_features=512, out_features=512, bias=True)

) # (cross_attention): AttentionLayer

(conv1): Conv1d(512, 2048, kernel_size=(1,), stride=(1,))

(conv2): Conv1d(2048, 512, kernel_size=(1,), stride=(1,))

(norm1): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

(norm2): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

(norm3): LayerNorm((512,), eps=1e-05, elementwise_affine=True)

(dropout): Dropout(p=0.05, inplace=False)

) # (0): DecoderLayer

) # ModuleList

norm_layer=torch.nn.LayerNorm(d_model) # create a LayerNorm layer; normalized dimension=512
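The printed structure shows which submodules a DecoderLayer holds but not how data flows through them. The sketch below is one plausible wiring, offered as an assumption rather than the repository's code: `nn.MultiheadAttention` stands in for both AttentionLayer variants (so neither ProbAttention nor a causal mask is reproduced), the GELU activation and the names `SketchDecoderLayer`, `d_ff`, and `n_heads` are illustrative choices, and `batch_first=True` needs a reasonably recent PyTorch version.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SketchDecoderLayer(nn.Module):
    """Hypothetical sketch; nn.MultiheadAttention stands in for AttentionLayer."""

    def __init__(self, d_model=512, d_ff=2048, n_heads=8, dropout=0.05):
        super().__init__()
        self.self_attention = nn.MultiheadAttention(d_model, n_heads, batch_first=True)
        self.cross_attention = nn.MultiheadAttention(d_model, n_heads, batch_first=True)
        self.conv1 = nn.Conv1d(d_model, d_ff, kernel_size=1)
        self.conv2 = nn.Conv1d(d_ff, d_model, kernel_size=1)
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.norm3 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x, cross):
        # 1) Self-attention over the decoder input, residual connection, norm1.
        x = self.norm1(x + self.dropout(self.self_attention(x, x, x)[0]))
        # 2) Cross-attention: queries from the decoder, keys/values from the encoder.
        x = self.norm2(x + self.dropout(self.cross_attention(x, cross, cross)[0]))
        # 3) Position-wise feed-forward via two kernel-size-1 convolutions.
        y = self.dropout(F.gelu(self.conv1(x.transpose(1, 2))))
        y = self.dropout(self.conv2(y).transpose(1, 2))
        return self.norm3(x + y)


layer = SketchDecoderLayer().eval()
dec_in = torch.randn(2, 72, 512)   # decoder input (length is an assumed example)
enc_out = torch.randn(2, 48, 512)  # encoder output (length is an assumed example)
print(layer(dec_in, enc_out).shape)  # -> torch.Size([2, 72, 512])
```

The three LayerNorm layers in the printout line up with the three sub-blocks here: one after self-attention, one after cross-attention, and one after the feed-forward part.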
