scrapy 爬取小说(解决章节错乱问题ing)

爬虫页面

import scrapy

from firstblood.items import FirstbloodItem

class FirstSpider(scrapy.Spider):

name = 'second'

# allowed_domains = ['www.xxx.com']

start_urls = ['https://www.zhenhunxiaoshuo.com/shapolang/']

def parse_detail(self,response):

# 回调函数接收item

item = response.meta['item']

page_detail = response.xpath('/html/body/section/div[1]/div/article//text()').extract()

page_detail = ''.join(page_detail)

item['page'] = page_detail

yield item

# print(page_detail)

def parse(self, response):

# //这个基本就是默认

li_list = response.xpath('//div[@class="excerpts-wrapper"]/div/article')

for li in li_list:

item = FirstbloodItem()

title = li.xpath('./a/text()')[0].extract()

detail_url = li.xpath('./a/@href').extract_first()

item['title'] = title

# print(title)

# print(detail_url)

# 手动对详情页发请求

# 请求传参

yield scrapy.Request(detail_url,callback = self.parse_detail,meta={'item':item})

settings页面打开管道存储

from itemadapter import ItemAdapter

import pymysql

class FirstbloodPipeline(object):

def process_item(self,item,spider):

print(item['title']) #这是刚才看了一下章节顺序

return item

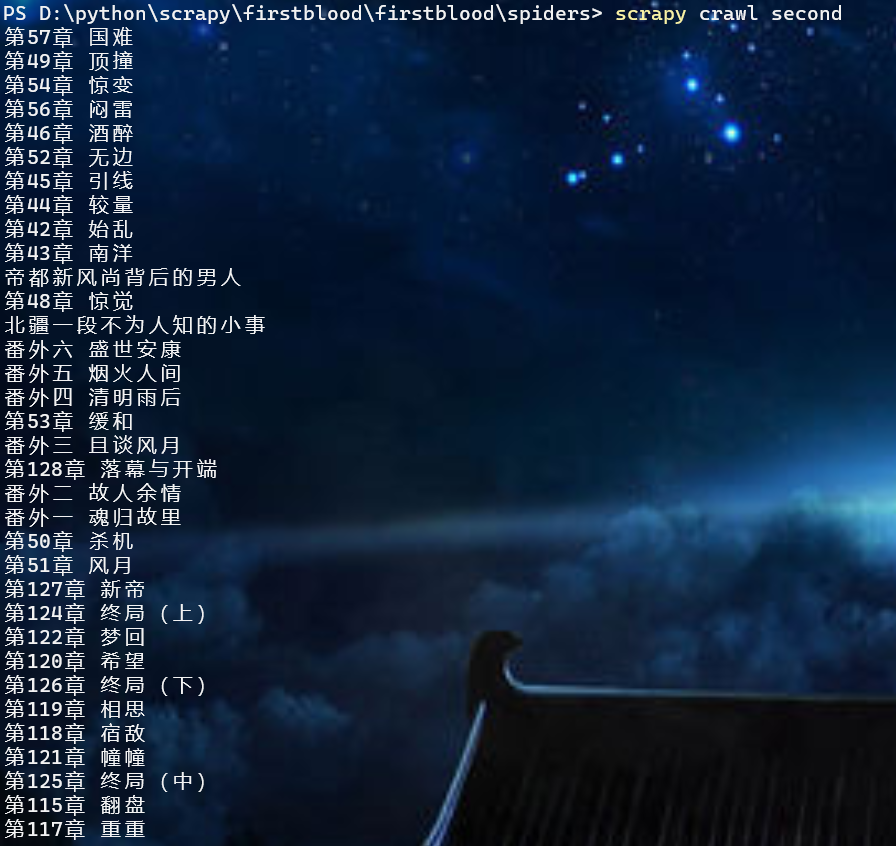

问题:章节错乱

原因好像是异步存储

很多小说章节前面都没有数字什么的,所以需要自己设定自增id

posted on

posted on