Python Web Scraping Basics

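1. robots.txt: a file in which a site states which paths web crawlers may and may not fetch. It is a gentlemen's agreement: compliance is voluntary and nothing technically enforces it.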
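A minimal sketch of checking a URL against robots.txt rules with the standard library's `urllib.robotparser`. The rules, the `my-crawler` user agent, and the `example.com` URLs are made up for illustration; a real crawler would load the site's actual file, for example with `set_url()` and `read()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, kept in memory so no network access is needed.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# /index.html is not covered by any Disallow rule; /private/ is.
print(is_allowed(ROBOTS_TXT, "my-crawler", "http://example.com/index.html"))
print(is_allowed(ROBOTS_TXT, "my-crawler", "http://example.com/private/a.html"))
```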

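2. import requests

# url is the address of the file to download
response = requests.get(url)

# 'wb' opens the file for binary writing, since an image is raw bytes
with open('girl.png', 'wb') as fp:
    fp.write(response.content)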
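The download step above could be wrapped in a small helper along these lines. This is a sketch, not a definitive implementation: `download_file` and its `fetch` parameter are hypothetical names, and `fetch` exists only so the function can be tried without network access. By default it uses `requests.get`, which requires the third-party `requests` package.

```python
def download_file(url, path, fetch=None):
    """Save the body of url to path and return the number of bytes written.

    fetch is a hypothetical hook: any callable that takes (url, timeout=...)
    and returns an object with .content and .raise_for_status().
    """
    if fetch is None:
        import requests  # third-party package: pip install requests
        fetch = requests.get
    response = fetch(url, timeout=10)
    # Raise an exception on 4xx/5xx instead of saving an error page as an image.
    response.raise_for_status()
    with open(path, "wb") as fp:
        fp.write(response.content)
    return len(response.content)
```

With `requests` installed, `download_file(url, "girl.png")` would save the response body to `girl.png`.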

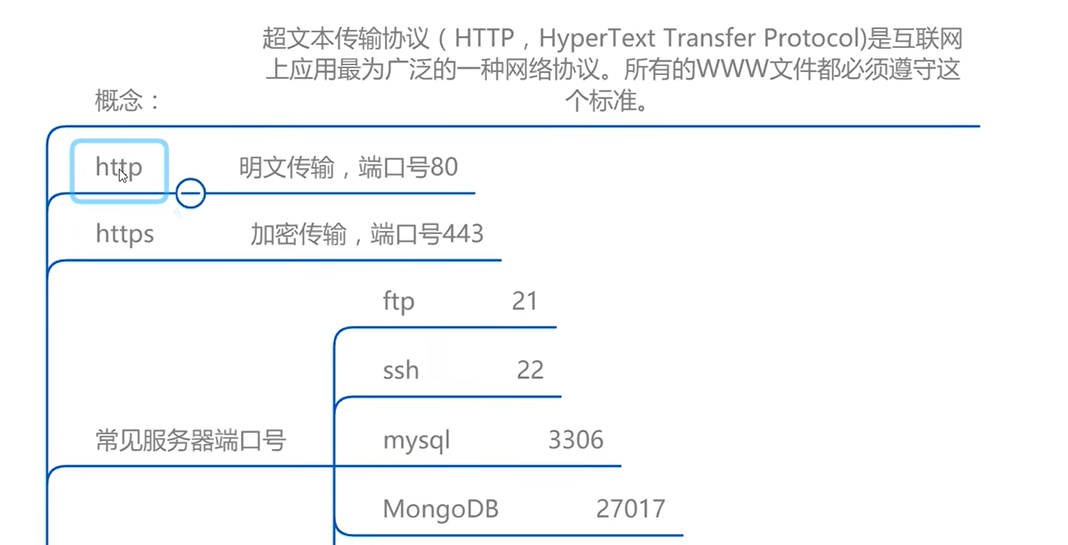

Common protocol ports:

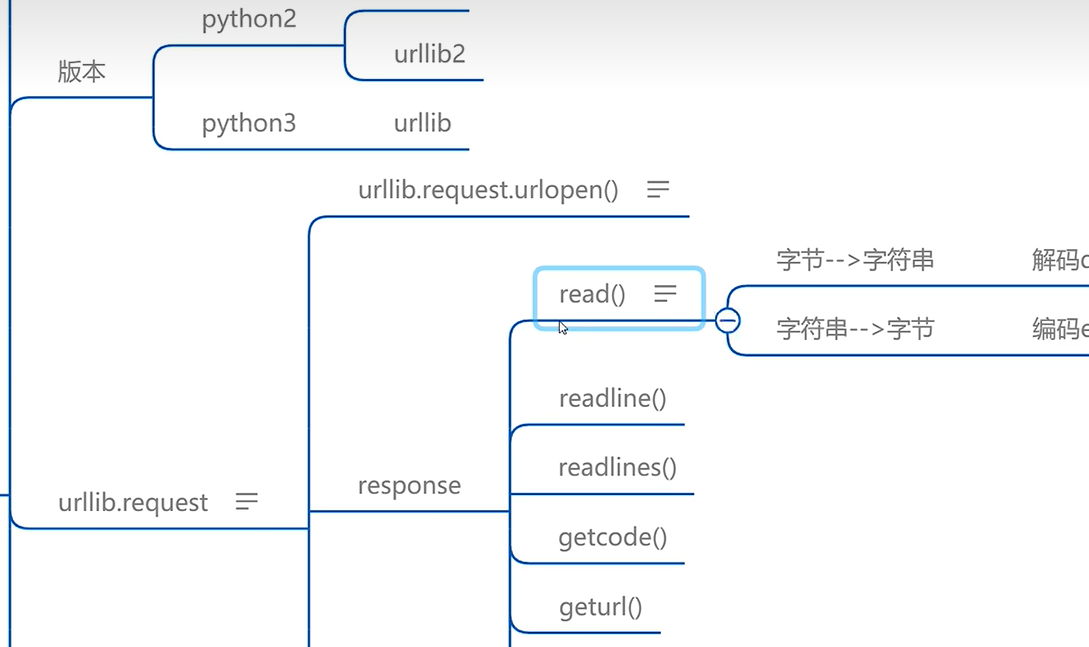

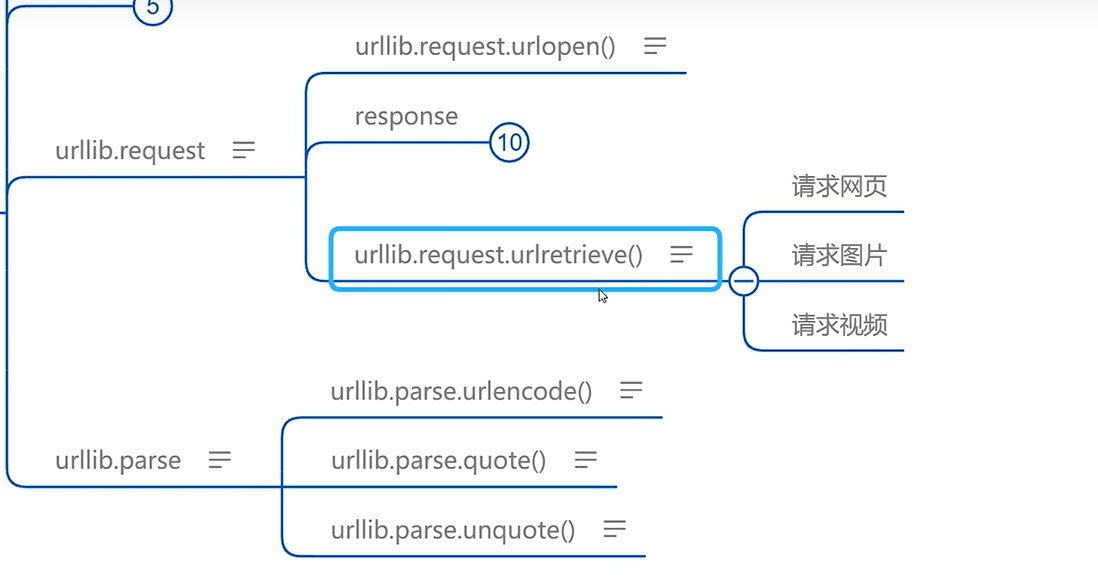
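The port tables are only available as images here, so the sketch below does not reproduce them. It assumes a handful of well-known default ports (HTTP 80, HTTPS 443, FTP 21, SSH 22, SMTP 25) and shows how the port a URL actually targets can be worked out. `DEFAULT_PORTS` and `effective_port` are illustrative names.

```python
from urllib.parse import urlsplit

# Well-known default ports for a few common protocols (not the full table).
DEFAULT_PORTS = {"http": 80, "https": 443, "ftp": 21, "ssh": 22, "smtp": 25}


def effective_port(url):
    """Return the explicit port in url, or the scheme's default, or None."""
    parts = urlsplit(url)
    if parts.port is not None:
        return parts.port
    return DEFAULT_PORTS.get(parts.scheme)


print(effective_port("http://www.baidu.com"))
print(effective_port("https://example.com:8443/path"))
```

This is why `http://www.baidu.com` needs no port in the URL: the client falls back to the scheme's default.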

from urllib import request

url="http://www.baidu.com"

response = request.urlopen(url)

# read() returns bytes, so decode (not encode) them to get text
print(response.read().decode("utf-8"))
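Hard-coding "utf-8" fails on pages served in another encoding. A slightly more defensive sketch reads the charset from the response's Content-Type header and falls back to UTF-8 only when none is declared. `fetch_text` and `fallback_charset` are illustrative names, not part of `urllib`.

```python
from urllib import request


def fetch_text(url, timeout=10, fallback_charset="utf-8"):
    """Fetch url and return its body decoded to str."""
    with request.urlopen(url, timeout=timeout) as response:
        raw = response.read()
        # get_content_charset() returns None if the header declares no charset.
        charset = response.headers.get_content_charset() or fallback_charset
    # errors="replace" keeps going if a few bytes do not match the charset.
    return raw.decode(charset, errors="replace")
```

For example, `print(fetch_text("http://www.baidu.com")[:200])` would print the first 200 characters of the page.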

