PyTorch notes

Finding matching torch and torchvision versions

https://github.com/pytorch/vision#installation

Checking which CUDA version torch was built with

>>> import torchvision

>>> torchvision.__version__

'0.10.0+cu102'

>>> import torch

>>> torch.__version__

'1.9.0'

>>> torch.version.cuda

'11.1'

>>>
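
The same checks can be collected into a small script. This is a minimal sketch, assuming torch is installed and treating torchvision as optional; `describe_install` is a hypothetical helper name. `torch.version.cuda` is `None` on CPU-only builds, while `torch.cuda.is_available()` reports whether a GPU can actually be used at runtime.

```python
import torch

def describe_install():
    """Collect version info about the local PyTorch install (hypothetical helper)."""
    info = {
        "torch": torch.__version__,
        # CUDA version torch was compiled against; None for CPU-only builds
        "built_with_cuda": torch.version.cuda,
        # whether a GPU can actually be used right now
        "cuda_available": torch.cuda.is_available(),
    }
    try:
        import torchvision
        info["torchvision"] = torchvision.__version__
    except ImportError:
        info["torchvision"] = None  # torchvision is not installed
    return info

print(describe_install())
```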

Tensor creation

# Create a tensor of a given shape filled with random values

input = torch.rand((64,64,3))

Creating a bool tensor

>>> mask = torch.zeros(3,4,dtype=torch.bool)

>>> mask

tensor([[False, False, False, False],

[False, False, False, False],

[False, False, False, False]])

tensor copy

"""

Copying tensors in torch

"""

a=torch.tensor([1])

b=a

a[0]=2

print('a={},b={}'.format(a,b))

# a and b are the same tensor

a=torch.tensor([1])

c = a * torch.ones(3)

# after the operation, a and c are two separate tensors

a[0]=2

print('a={},c={}'.format(a,c))

c[0]=5

print('a={},c={}'.format(a,c))

a=torch.tensor([1])

d=a.clone()

# a and d are two separate tensors

a[0]=2

print('a={},d={}'.format(a,d))

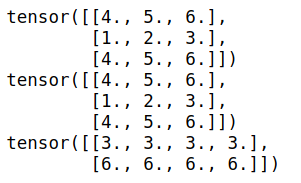
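
One way to check which of these cases share memory is to compare storage addresses. The following is a minimal sketch using `Tensor.data_ptr()`; the names `a`, `b`, `c`, `d` mirror the snippet above, and `e` (a view) is an extra case added here for contrast.

```python
import torch

a = torch.tensor([1])
b = a                    # plain assignment: another name for the same tensor
c = a * torch.ones(3)    # arithmetic result: a new tensor with its own memory
d = a.clone()            # explicit copy: new memory, same values
e = a.view(1, 1)         # view: a different tensor object over the same memory

print(b is a)                          # True
print(c.data_ptr() == a.data_ptr())    # False
print(d.data_ptr() == a.data_ptr())    # False
print(e.data_ptr() == a.data_ptr())    # True

a[0] = 2
# b and e follow the change; c and d keep the old value
print(b.tolist(), c.tolist(), d.tolist(), e.tolist())
```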

Tensor slice

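- Taking a [2,3] matrix as an example: indexing with a list of indices can produce a result of any shape; it does not have to be smaller than 2 rows by 3 columns.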

import torch

truths=torch.Tensor([[1,2,3],[4,5,6]])

# builds a new matrix with 3 rows (shape [3,3]), taking rows 1, 0 and 1 of the original matrix

print(truths[[1,0,1],:])

print(truths[[1,0,1]]) # same as truths[[1,0,1],:]

# builds a new matrix with 4 columns (shape [2,4]), taking column 2 of the original matrix four times

print(truths[:,[2,2,2,2]])

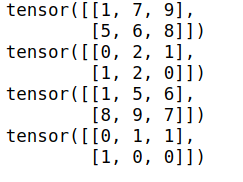

Output

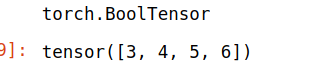
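
The resulting shapes can be checked directly. This is a small sketch; `torch.index_select` is brought in here only as an equivalent way to write the row selection, and it is not part of the original note.

```python
import torch

truths = torch.tensor([[1., 2., 3.], [4., 5., 6.]])

rows = truths[[1, 0, 1]]          # rows 1, 0, 1 -> shape [3, 3]
cols = truths[:, [2, 2, 2, 2]]    # column 2 four times -> shape [2, 4]
print(rows.shape, cols.shape)

# index_select expresses the same row selection with an explicit dim argument
idx = torch.tensor([1, 0, 1])
print(torch.equal(rows, torch.index_select(truths, 0, idx)))  # True
```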

- Indexing with a bool tensor

import torch

x = torch.tensor([[1,2,3],[4,5,6]])

index = x>2

print(index.type())

print(x[index])

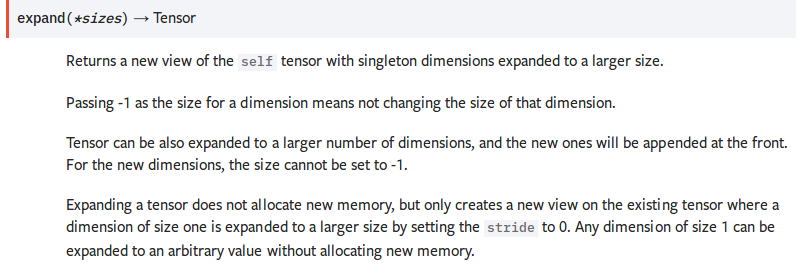
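
A bool mask always returns a flattened 1-D result, and the same mask can also be used for assignment. A minimal sketch, assuming the same `x` as above; the copy `y` is introduced here only so that `x` stays unchanged.

```python
import torch

x = torch.tensor([[1, 2, 3], [4, 5, 6]])
mask = x > 2                      # bool tensor with the same shape as x

selected = x[mask]                # 1-D tensor of the elements where mask is True
print(selected.tolist())          # [3, 4, 5, 6]
print(torch.equal(selected, torch.masked_select(x, mask)))  # True

y = x.clone()
y[mask] = 0                       # masked assignment, in place on the copy
print(y.tolist())                 # [[1, 2, 0], [0, 0, 0]]
```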

Tensor expand

Expanding a tensor does not allocate new memory, but only creates a new view on the existing tensor where a dimension of size one is expanded to a larger size by setting the stride to 0. Any dimension of size 1 can be expanded to an arbitrary value without allocating new memory.

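In short: no new memory is allocated. expand only creates a new view of the existing tensor, in which a dimension of size 1 is expanded to a larger size.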

>>> x = torch.tensor([[1], [2], [3]])

>>> x.size()

torch.Size([3, 1])

>>> x.expand(3, 4)

tensor([[ 1, 1, 1, 1],

[ 2, 2, 2, 2],

[ 3, 3, 3, 3]])

>>> x.expand(-1, 4) # -1 means not changing the size of that dimension

tensor([[ 1, 1, 1, 1],

[ 2, 2, 2, 2],

[ 3, 3, 3, 3]])
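
The "stride 0" claim can be observed directly. This sketch contrasts `expand` (a view) with `repeat` (a copy); the comparison with `repeat` is an addition for illustration.

```python
import torch

x = torch.tensor([[1], [2], [3]])
e = x.expand(3, 4)     # view: no data is copied
r = x.repeat(1, 4)     # copy: allocates a new 3x4 tensor

print(x.stride(), e.stride())          # (1, 1) (1, 0)
print(e.data_ptr() == x.data_ptr())    # True
print(r.data_ptr() == x.data_ptr())    # False
print(torch.equal(e, r))               # True

x[0, 0] = 10
print(e[0].tolist())   # [10, 10, 10, 10]  (the view sees the change)
print(r[0].tolist())   # [1, 1, 1, 1]      (the copy does not)
```

Because all four columns of the expanded view alias the same element, writing into an expanded tensor in place is best avoided; clone it first if it needs to be modified.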

gather

torch.gather(input, dim, index, out=None, sparse_grad=False) → Tensor

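That is, the subscript along dimension dim is replaced by the values in index. input is n-dimensional, so index must be n-dimensional too; the size of index along dim may differ from that of input (along every other dimension it must not exceed the size of input). The output has the same shape as index.

In other words, the data along dimension dim is looked up using index.

For example: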

import torch

t = torch.tensor([[1,2],[3,4]])

index=torch.tensor([[0,0],[1,0]])

torch.gather(t,1,index)

Output

tensor([[1, 1],

[4, 3]])

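gather(t,1,index) replaces the data along dimension 1 (the column direction). Which columns are picked? index is [[0,0],[1,0]]: for the first row, column 0 and column 0; for the second row, column 1 and column 0.

This gives tensor([[1, 1],[4, 3]]).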
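
The rule above, `out[i][j] = input[i][index[i][j]]` for dim=1, can be written as an explicit loop and compared against `torch.gather`. This is a reference sketch for 2-D tensors only; `gather_dim1` is a hypothetical helper name, and the `scores`/`labels` usage at the end is an illustrative assumption about a typical use.

```python
import torch

def gather_dim1(inp, index):
    """Reference loop for torch.gather(inp, 1, index) on 2-D tensors."""
    out = torch.empty_like(index, dtype=inp.dtype)
    for i in range(index.size(0)):
        for j in range(index.size(1)):
            out[i, j] = inp[i, index[i, j]]
    return out

t = torch.tensor([[1, 2], [3, 4]])
index = torch.tensor([[0, 0], [1, 0]])
print(gather_dim1(t, index).tolist())                                  # [[1, 1], [4, 3]]
print(torch.equal(gather_dim1(t, index), torch.gather(t, 1, index)))   # True

# typical use: pick one score per row, here the score of each row's label
scores = torch.tensor([[0.1, 0.7, 0.2], [0.8, 0.1, 0.1]])
labels = torch.tensor([1, 0])
picked = scores.gather(1, labels.unsqueeze(1)).squeeze(1)
print(picked)
```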

sum

Sums along dimension n. keepdim controls whether the number of dimensions is kept unchanged.

import torch

t = torch.tensor([[1,2],[3,4]])

a=torch.sum(t,0)

b=torch.sum(t,1,keepdim=True)

print(a.shape,b.shape)

print(a)

print(b)

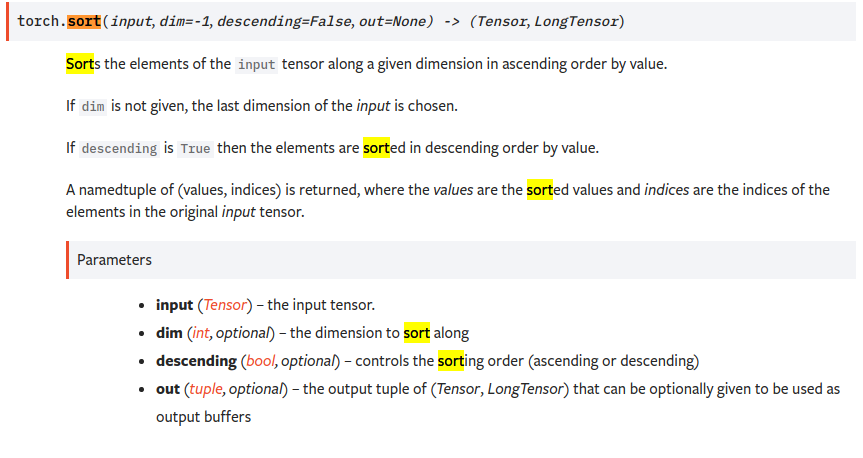
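
A common reason to pass keepdim=True is that the result then broadcasts against the original tensor. A minimal sketch, using a float version of the same matrix; the row normalization is an illustrative use added here.

```python
import torch

t = torch.tensor([[1., 2.], [3., 4.]])

col_sums = torch.sum(t, 0)                # shape [2]: one value per column
row_sums = torch.sum(t, 1, keepdim=True)  # shape [2, 1]: one value per row, dim kept

print(col_sums.tolist())   # [4.0, 6.0]
print(row_sums.tolist())   # [[3.0], [7.0]]

# with keepdim=True the [2, 1] result lines up with the rows of t,
# so this division makes every row sum to 1
normalized = t / row_sums
print(normalized.sum(1))
```

Without keepdim, the row sums would have shape [2] and would be broadcast along the last dimension, dividing each column rather than each row.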

sort

Sorts along dimension n.

import torch

t = torch.tensor([[1,9,7],[8,5,6]])

_sorted,_index = t.sort(1)

print(_sorted)

print(_index)

_sorted,_index = t.sort(0)

print(_sorted)

print(_index)

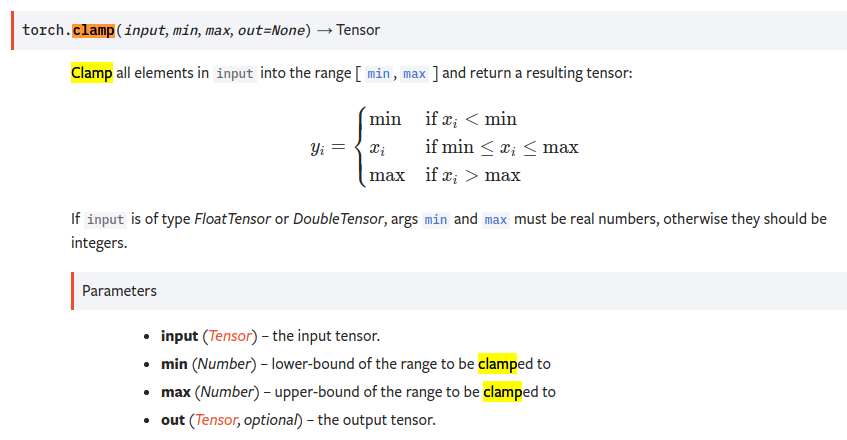

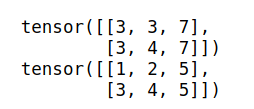
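
For reference, the values and indices for this tensor are spelled out in the sketch below. It also shows how the returned indices relate to the input through `gather`; the `descending=True` call is an extra illustration.

```python
import torch

t = torch.tensor([[1, 9, 7], [8, 5, 6]])

values, indices = t.sort(1)      # sort each row in ascending order
print(values.tolist())           # [[1, 7, 9], [5, 6, 8]]
print(indices.tolist())          # [[0, 2, 1], [1, 2, 0]]

# the indices say where each sorted value came from, so gather rebuilds the values
print(torch.equal(t.gather(1, indices), values))   # True

values0, indices0 = t.sort(0)    # sort each column
print(values0.tolist())          # [[1, 5, 6], [8, 9, 7]]
print(indices0.tolist())         # [[0, 1, 1], [1, 0, 0]]

desc, _ = t.sort(1, descending=True)
print(desc.tolist())             # [[9, 7, 1], [8, 6, 5]]
```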

clamp

import torch

t = torch.tensor([[1,2,7],[3,4,8]])

res = t.clamp(3,7) # values <3 become 3, values >7 become 7, values in between are unchanged

print(res)

res2 = torch.clamp(t,max=5) # every value greater than 5 becomes 5

print(res2)
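
The expected results for this tensor, plus the one-sided `min=` form, are written out below. The last lines are a sketch showing that a two-sided clamp matches an element-wise max followed by a min.

```python
import torch

t = torch.tensor([[1, 2, 7], [3, 4, 8]])

print(t.clamp(3, 7).tolist())            # [[3, 3, 7], [3, 4, 7]]
print(torch.clamp(t, max=5).tolist())    # [[1, 2, 5], [3, 4, 5]]
print(torch.clamp(t, min=3).tolist())    # [[3, 3, 7], [3, 4, 8]]

# clamp(lo, hi) matches an element-wise max against lo followed by a min against hi
lo, hi = torch.tensor(3), torch.tensor(7)
manual = torch.min(torch.max(t, lo), hi)
print(torch.equal(manual, t.clamp(3, 7)))  # True
```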

Various loss functions

https://blog.csdn.net/zhangxb35/article/details/72464152

Useful links:

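Tensor.repeat()

Repeats the tensor along the given dimensions; each argument is the number of copies along that dimension.

For example: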

>>> x=torch.tensor([1,2,3])

>>> x.shape

torch.Size([3])

>>> x.repeat(4,2)

tensor([[1, 2, 3, 1, 2, 3],

[1, 2, 3, 1, 2, 3],

[1, 2, 3, 1, 2, 3],

[1, 2, 3, 1, 2, 3]])

>>> x.repeat(4,2,1)

tensor([[[1, 2, 3],

[1, 2, 3]],

[[1, 2, 3],

[1, 2, 3]],

[[1, 2, 3],

[1, 2, 3]],

[[1, 2, 3],

[1, 2, 3]]])

This is effectively equivalent to:

>>> y=x.unsqueeze(0).unsqueeze(0)

>>> y.shape

torch.Size([1, 1, 3])

>>> y.repeat(4,2,1)

tensor([[[1, 2, 3],

[1, 2, 3]],

[[1, 2, 3],

[1, 2, 3]],

[[1, 2, 3],

[1, 2, 3]],

[[1, 2, 3],

[1, 2, 3]]])

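Because x starts with only one dimension, repeating it by (4,2,1) over three dimensions requires x to be extended to three dimensions first, i.e. to shape [1,1,3], and then repeated.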
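
The equivalence can be checked with `torch.equal`. A minimal sketch; the final comparison with `expand` is an addition showing that, when no independent copy is needed, a view gives the same values.

```python
import torch

x = torch.tensor([1, 2, 3])

r2 = x.repeat(4, 2)        # x is treated as shape [1, 3] -> result [4, 6]
r3 = x.repeat(4, 2, 1)     # x is treated as shape [1, 1, 3] -> result [4, 2, 3]
print(r2.shape, r3.shape)

# explicit version of the second call: add the leading dims first, then repeat
y = x.unsqueeze(0).unsqueeze(0)
print(torch.equal(r3, y.repeat(4, 2, 1)))      # True

# expand yields the same values as a view, without copying
print(torch.equal(r3, y.expand(4, 2, 3)))      # True
```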

torch.min

https://pytorch.org/docs/stable/generated/torch.minimum.html#torch.minimum

When two tensors of different shapes are compared, they are in effect first repeated (broadcast) to a common shape, and the comparison is then done element-wise.

For example:

>>> a=torch.tensor([[1,3,5],[2,6,4]])

>>> b=torch.tensor([[1,3],[2,4]])

>>> torch.min(a,b)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

RuntimeError: The size of tensor a (3) must match the size of tensor b (2) at non-singleton dimension 1

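This comparison fails because the shapes of a and b cannot be broadcast together: dimension 1 has sizes 3 and 2, and neither of them is 1.

Whereas: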

>>> a=torch.tensor([[1,3],[2,4]])

>>> b= torch.tensor([[1,2],[3,4],[5,6]])

>>> a=a.unsqueeze(0)

>>> b=b.unsqueeze(1)

>>> a.shape,b.shape

(torch.Size([1, 2, 2]), torch.Size([3, 1, 2]))

>>> c=torch.min(a,b)

>>> c

tensor([[[1, 2],

[1, 2]],

[[1, 3],

[2, 4]],

[[1, 3],

[2, 4]]])

a and b have different shapes, but wherever the sizes differ one of them is 1, so both are in effect expanded to [3,2,2] before being compared.

>>> a.shape,b.shape

(torch.Size([1, 2, 2]), torch.Size([3, 1, 2]))

>>> a=a.repeat(3,1,1)

>>> b=b.repeat(1,2,1)

>>> a.shape,b.shape

(torch.Size([3, 2, 2]), torch.Size([3, 2, 2]))

>>> torch.min(a,b)

tensor([[[1, 2],

[1, 2]],

[[1, 3],

[2, 4]],

[[1, 3],

[2, 4]]])

As you can see, the result is the same.

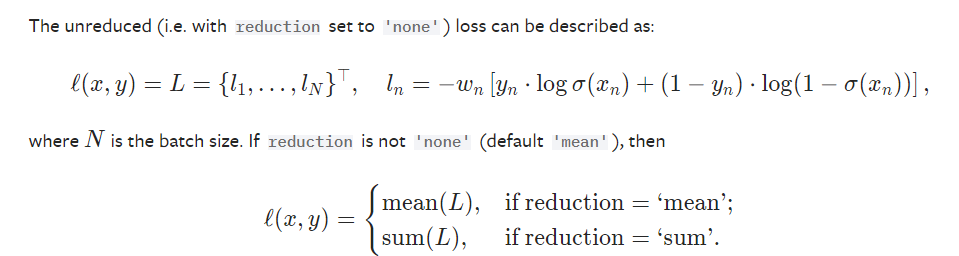
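
The implicit expansion can also be made visible with `torch.broadcast_tensors`, which returns both inputs expanded to the common shape. A minimal sketch using the same a and b; `torch.minimum` is the element-wise function that the linked documentation page describes.

```python
import torch

a = torch.tensor([[1, 3], [2, 4]]).unsqueeze(0)           # shape [1, 2, 2]
b = torch.tensor([[1, 2], [3, 4], [5, 6]]).unsqueeze(1)   # shape [3, 1, 2]

# both inputs expanded (as views) to the common shape [3, 2, 2]
a_b, b_b = torch.broadcast_tensors(a, b)
print(a_b.shape, b_b.shape)

c = torch.min(a, b)
print(torch.equal(c, torch.min(a_b, b_b)))    # True
print(torch.equal(c, torch.minimum(a, b)))    # True
```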

Loss functions

BCEWithLogitsLoss

a=torch.tensor([0.3,0.9,0.8])

gt=torch.tensor([0.,1.,0.])

mask=torch.tensor([False,True,False]) # bool mask: on a uint8 tensor ~ is a bitwise NOT, so ~mask would not select the complement

l=torch.nn.BCEWithLogitsLoss(reduction='sum')

l(a,gt)

and

pos=a[mask]

neg=a[~mask]

pos_gt=gt[mask]

neg_gt=gt[~mask]

l(pos,pos_gt)+l(neg,neg_gt)

give the same output: tensor(2.3666).
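
The reason the split works is that reduction='sum' simply adds up independent per-element losses. The sketch below writes the loss out by hand (sigmoid followed by binary cross-entropy) and then checks the split; the variable names `logits`, `per_elem` and `manual` are introduced here for illustration.

```python
import torch
import torch.nn.functional as F

logits = torch.tensor([0.3, 0.9, 0.8])
gt = torch.tensor([0., 1., 0.])

loss_fn = torch.nn.BCEWithLogitsLoss(reduction='sum')
total = loss_fn(logits, gt)

# the same value written out: sigmoid, then binary cross-entropy per element
p = torch.sigmoid(logits)
manual = -(gt * torch.log(p) + (1 - gt) * torch.log(1 - p)).sum()
print(torch.allclose(total, manual))    # True

# reduction='none' keeps one loss per element; with 'sum' the total is their sum,
# so it can be split over any partition of the elements
per_elem = F.binary_cross_entropy_with_logits(logits, gt, reduction='none')
mask = gt.bool()
print(torch.allclose(total, per_elem[mask].sum() + per_elem[~mask].sum()))  # True
```

With reduction='mean' the two halves would each be averaged over a different number of elements, so the same identity would not hold in general.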