Components for Deploying the Prometheus Platform on K8s

1. Components for deploying the Prometheus platform on K8s

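- prometheus-deployment.yaml # deploys Prometheus
- **prometheus-configmap.yaml** # Prometheus configuration file; mainly configures Kubernetes service discovery
- prometheus-rules.yaml # Prometheus alerting rules
- grafana.yaml # visualization dashboards
- node-exporter.yml # collects node resource metrics; deployed as a DaemonSet and declared as a scrape target for Prometheus
- kube-state-metrics.yaml # collects K8s resource metrics and is declared as a scrape target for Prometheus
- alertmanager-configmap.yaml # Alertmanager configuration file; sets the alert sender and recipients
- alertmanager-deployment.yaml # deploys the Alertmanager alerting component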
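The list above says that node-exporter and kube-state-metrics "declare" themselves to Prometheus. One common way to do this, assuming the scrape configuration keys on `prometheus.io/*` annotations (as the kubernetes-service-endpoints job in section 2 does), is to annotate the Service. The following is only a sketch: the Service name `demo-exporter`, its selector, and port 9100 are hypothetical placeholders, not taken from the actual manifests.

```shell
# Write a hypothetical Service manifest that opts in to scraping via annotations.
cat > demo-exporter-svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: demo-exporter            # hypothetical name
  namespace: ops
  annotations:
    prometheus.io/scrape: "true" # matched by the "keep" relabel rule
    prometheus.io/port: "9100"   # used to rewrite the scrape address
spec:
  selector:
    app: demo-exporter           # hypothetical label
  ports:
  - name: metrics
    port: 9100
    targetPort: 9100
EOF

# Once the cluster is reachable, the manifest could be applied with:
# kubectl apply -f demo-exporter-svc.yaml
```

Services without the `prometheus.io/scrape: "true"` annotation are dropped by the `keep` rule and never scraped.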

2. Example deployment

- Write the configuration files

```
[root@k8s-master prometheus]# cat prometheus-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: ops
data:
  prometheus.yml: |
    rule_files:
    - /etc/config/rules/*.rules

    scrape_configs:
    - job_name: prometheus
      static_configs:
      - targets:
        - localhost:9090

    - job_name: kubernetes-apiservers
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - action: keep
        regex: default;kubernetes;https
        source_labels:
        - __meta_kubernetes_namespace
        - __meta_kubernetes_service_name
        - __meta_kubernetes_endpoint_port_name
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

    - job_name: kubernetes-nodes-kubelet
      kubernetes_sd_configs:
      - role: node  # discover the nodes in the cluster
      relabel_configs:
      # Copy node labels: the part matched by (.+) becomes the new label name, the value is unchanged
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

    - job_name: kubernetes-nodes-cadvisor
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      # Copy node labels: the part matched by (.+) becomes the new label name, the value is unchanged
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      # The metrics are served at https://NodeIP:10250/metrics/cadvisor, so override the default metrics path
      - target_label: __metrics_path__
        replacement: /metrics/cadvisor
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

    - job_name: kubernetes-service-endpoints
      kubernetes_sd_configs:
      - role: endpoints  # discover Pods as targets through the Endpoints of each Service
      relabel_configs:
      # Skip Services that do not carry the prometheus.io/scrape annotation
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_scrape
      # Set the scrape scheme from the annotation
      - action: replace
        regex: (https?)
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_scheme
        target_label: __scheme__
      # Set the metrics URL path from the annotation
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_path
        target_label: __metrics_path__
      # Set the scrape address from the annotated port
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_service_annotation_prometheus_io_port
        target_label: __address__
      # Copy K8s labels: the part matched by (.+) becomes the new label name, the value is unchanged
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      # Add a namespace label
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      # Add a Service name label
      - action: replace
        source_labels:
        - __meta_kubernetes_service_name
        target_label: kubernetes_name

    - job_name: kubernetes-pods
      kubernetes_sd_configs:
      - role: pod  # discover all Pods as targets
      relabel_configs:
      # Skip Pods that do not carry the prometheus.io/scrape annotation
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_scrape
      # Set the metrics URL path from the annotation
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_path
        target_label: __metrics_path__
      # Set the scrape address from the annotated port
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_pod_annotation_prometheus_io_port
        target_label: __address__
      # Copy K8s labels: the part matched by (.+) becomes the new label name, the value is unchanged
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      # Add a namespace label
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      # Add a Pod name label
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: kubernetes_pod_name

    alerting:
      alertmanagers:
      - static_configs:
        - targets: ["alertmanager:80"]
```

```
[root@k8s-master prometheus]# cat prometheus-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: ops
  labels:
    k8s-app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: prometheus
  template:
    metadata:
      labels:
        k8s-app: prometheus
    spec:
      serviceAccountName: prometheus
      initContainers:
      - name: "init-chown-data"
        image: "busybox:latest"
        imagePullPolicy: "IfNotPresent"
        command: ["chown", "-R", "65534:65534", "/data"]
        volumeMounts:
        - name: prometheus-data
          mountPath: /data
          subPath: ""
      containers:
      - name: prometheus-server-configmap-reload
        image: "jimmidyson/configmap-reload:v0.1"
        imagePullPolicy: "IfNotPresent"
        args:
        - --volume-dir=/etc/config
        - --webhook-url=http://localhost:9090/-/reload
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
          readOnly: true
        resources:
          limits:
            cpu: 10m
            memory: 10Mi
          requests:
            cpu: 10m
            memory: 10Mi
      - name: prometheus-server
        image: "prom/prometheus:v2.20.0"
        imagePullPolicy: "IfNotPresent"
        args:
        - --config.file=/etc/config/prometheus.yml
        - --storage.tsdb.path=/data
        - --web.console.libraries=/etc/prometheus/console_libraries
        - --web.console.templates=/etc/prometheus/consoles
        - --web.enable-lifecycle
        ports:
        - containerPort: 9090
        readinessProbe:
          httpGet:
            path: /-/ready
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
        livenessProbe:
          httpGet:
            path: /-/healthy
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
        resources:
          limits:
            cpu: 500m
            memory: 1500Mi
          requests:
            cpu: 200m
            memory: 1000Mi
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
        - name: prometheus-data
          mountPath: /data
          subPath: ""
        - name: prometheus-rules
          mountPath: /etc/config/rules
      volumes:
      - name: config-volume
        configMap:
          name: prometheus-config
      - name: prometheus-rules
        configMap:
          name: prometheus-rules
      - name: prometheus-data
        persistentVolumeClaim:
          claimName: prometheus
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus
  namespace: ops
spec:
  storageClassName: "managed-nfs-storage"
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: ops
spec:
  type: NodePort
  ports:
  - name: http
    port: 9090
    protocol: TCP
    targetPort: 9090
    nodePort: 30090
  selector:
    k8s-app: prometheus
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: ops
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/metrics
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
- nonResourceURLs:
  - "/metrics"
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: ops
```

```
[root@k8s-master prometheus]# cat prometheus-rules.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rules
  namespace: ops
data:
  general.rules: |
    groups:
    - name: general.rules
      rules:
      - alert: InstanceDown
        expr: up == 0
        for: 1m
        labels:
          severity: error
        annotations:
          summary: "Instance {{ $labels.instance }} is down"
          description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute."
  node.rules: |
    groups:
    - name: node.rules
      rules:
      # Filesystem and memory metrics use the _bytes suffix of node_exporter 0.16+,
      # matching the node_cpu_seconds_total name already used by NodeCPUUsage.
      - alert: NodeFilesystemUsage
        expr: |
          100 - (node_filesystem_free_bytes{fstype=~"ext4|xfs"} / node_filesystem_size_bytes{fstype=~"ext4|xfs"} * 100) > 80
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }}: usage of partition {{ $labels.mountpoint }} is too high"
          description: "{{ $labels.instance }}: usage of partition {{ $labels.mountpoint }} is above 80% (current value: {{ $value }})"
      - alert: NodeMemoryUsage
        expr: |
          100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 80
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }}: memory usage is too high"
          description: "{{ $labels.instance }}: memory usage is above 80% (current value: {{ $value }})"
      - alert: NodeCPUUsage
        expr: |
          100 - (avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance) * 100) > 60
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }}: CPU usage is too high"
          description: "{{ $labels.instance }}: CPU usage is above 60% (current value: {{ $value }})"
      - alert: KubeNodeNotReady
        expr: |
          kube_node_status_condition{condition="Ready",status="true"} == 0
        for: 1m
        labels:
          severity: error
        annotations:
          message: '{{ $labels.node }} has not been Ready for more than 1 minute.'
  pod.rules: |
    groups:
    - name: pod.rules
      rules:
      - alert: PodCPUUsage
        expr: |
          sum(rate(container_cpu_usage_seconds_total{image!=""}[1m]) * 100) by (pod, namespace) > 80
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} CPU usage above 80% (current value: {{ $value }})"
      - alert: PodMemoryUsage
        expr: |
          sum(container_memory_rss{image!=""}) by(pod, namespace) /
          sum(container_spec_memory_limit_bytes{image!=""}) by(pod, namespace) * 100 != +inf > 80
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} memory usage above 80% (current value: {{ $value }})"
      - alert: PodNetworkReceive
        expr: |
          sum(rate(container_network_receive_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod,namespace) > 30000
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} inbound traffic above 30 MB/s (current value: {{ $value }} KB/s)"
      - alert: PodNetworkTransmit
        expr: |
          sum(rate(container_network_transmit_bytes_total{image!="",name=~"^k8s_.*"}[5m]) /1000) by (pod,namespace) > 30000
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} outbound traffic above 30 MB/s (current value: {{ $value }} KB/s)"
      - alert: PodRestart
        expr: |
          sum(changes(kube_pod_container_status_restarts_total[1m])) by (pod,namespace) > 0
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} restarted (current value: {{ $value }})"
      - alert: PodFailed
        expr: |
          sum(kube_pod_status_phase{phase="Failed"}) by (pod,namespace) > 0
        for: 5s
        labels:
          severity: error
        annotations:
          summary: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} is in phase Failed (current value: {{ $value }})"
      - alert: PodPending
        expr: |
          sum(kube_pod_status_phase{phase="Pending"}) by (pod,namespace) > 0
        for: 1m
        labels:
          severity: error
        annotations:
          summary: "Namespace: {{ $labels.namespace }} | Pod: {{ $labels.pod }} is in phase Pending (current value: {{ $value }})"
```
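The address-rewriting rule (`regex: ([^:]+)(?::\d+)?;(\d+)`, `replacement: $1:$2`) is the least obvious part of the scrape configuration, so here is a rough way to try it outside Prometheus. Prometheus joins the `source_labels` values with `;` and requires the regex to match the whole string. The sketch below emulates that with `sed -E`. Because `sed` has no non-capturing groups, the annotated port ends up in `\3` rather than `$2`. The function name `rewrite_address` and the sample IP are hypothetical.

```shell
# Emulate the __address__ relabel rule:
#   $1 = current __address__ (host or host:port)
#   $2 = value of the prometheus.io/port annotation (may be empty)
rewrite_address() {
  joined="$1;$2"   # Prometheus joins source_labels with ';'
  out="$(printf '%s\n' "$joined" | sed -nE 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/p')"
  # If the regex does not match, Prometheus leaves the target label unchanged.
  printf '%s\n' "${out:-$1}"
}

rewrite_address "10.244.1.7:8080" "9100"   # prints 10.244.1.7:9100
rewrite_address "10.244.1.7" "9100"        # prints 10.244.1.7:9100
rewrite_address "10.244.1.7:8080" ""       # prints 10.244.1.7:8080
```

In other words, the annotated port replaces whatever port service discovery found, and targets without the port annotation keep their discovered address.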

- Create the namespace

```
[root@k8s-master prometheus]# kubectl create namespace ops
namespace/ops created
```
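Running `kubectl create namespace` a second time fails because the namespace already exists. If this step may be repeated (for example from a script), one option is to render the namespace with a client-side dry run and pipe it to `kubectl apply`. The helper name `ensure_namespace` below is hypothetical; this is a sketch, not part of the original procedure.

```shell
# Create the namespace if it is missing; do nothing harmful if it already exists.
#   $1 = namespace name
ensure_namespace() {
  kubectl create namespace "$1" --dry-run=client -o yaml | kubectl apply -f -
}

# Example call, to be run against a reachable cluster:
# ensure_namespace ops
```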

- Deploy the Prometheus service

```
[root@k8s-master prometheus]# kubectl apply -f prometheus-configmap.yaml
configmap/prometheus-config created
[root@k8s-master prometheus]# kubectl apply -f prometheus-deployment.yaml
deployment.apps/prometheus created
persistentvolumeclaim/prometheus created
service/prometheus created
serviceaccount/prometheus created
clusterrole.rbac.authorization.k8s.io/prometheus created
clusterrolebinding.rbac.authorization.k8s.io/prometheus created
[root@k8s-master prometheus]# kubectl apply -f prometheus-rules.yaml
```
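The Deployment mounts the prometheus-rules ConfigMap as a volume, so the Pod cannot start until prometheus-rules.yaml has been applied as well. Rather than polling by hand, the rollout can be awaited with `kubectl rollout status`. A small sketch follows; the wrapper name `wait_for_prometheus` and the 120s default timeout are assumptions for illustration.

```shell
# Block until the prometheus Deployment in namespace ops is fully rolled out,
# or return non-zero once the timeout expires.
#   $1 = optional timeout (default: 120s)
wait_for_prometheus() {
  kubectl -n ops rollout status deployment/prometheus --timeout="${1:-120s}"
}

# Example call, after all three manifests have been applied:
# wait_for_prometheus 300s
```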

- Verify that the Prometheus service has started

```
[root@k8s-master prometheus]# kubectl get pods -n ops
NAME                          READY   STATUS    RESTARTS   AGE
prometheus-859dbbc5f7-rlsqp   2/2     Running   0          4h8m
```

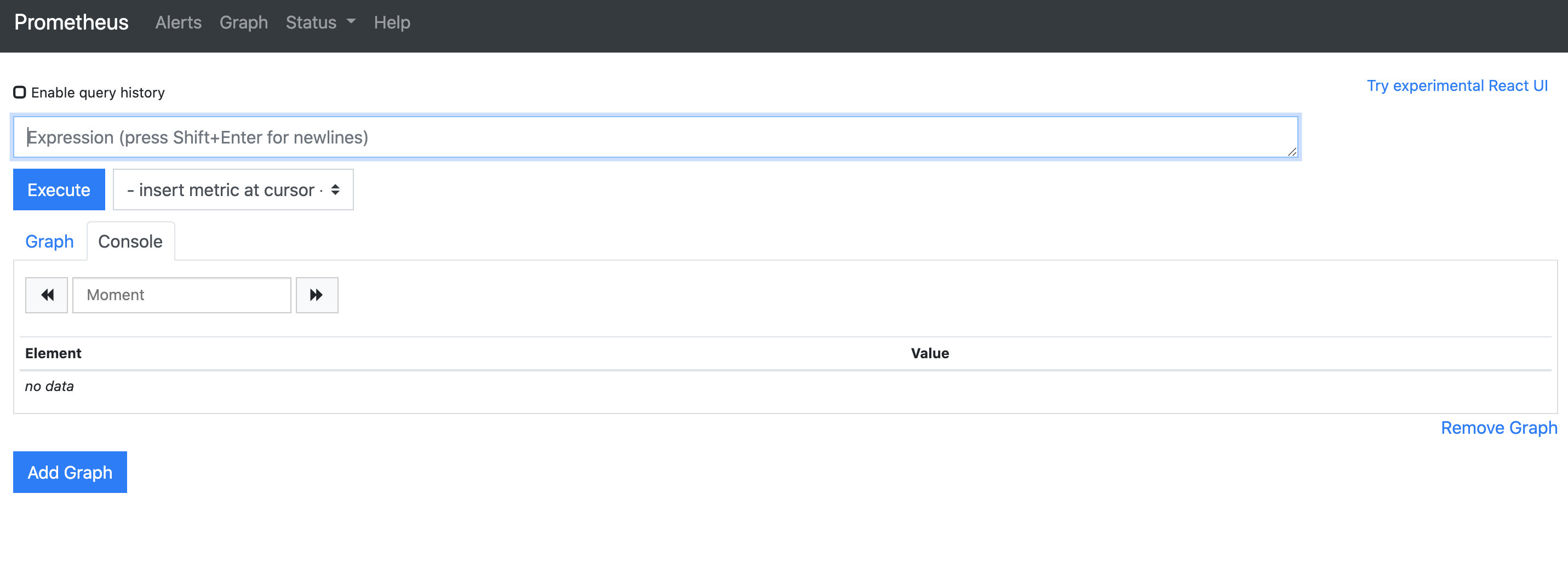

浏览器验证
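The same check can be made from a terminal. The Deployment's probes use the `/-/healthy` and `/-/ready` endpoints, and the Service exposes Prometheus on NodePort 30090, so both endpoints can be queried with `curl`. This is a sketch under the assumption that the node IP is reachable from where the command runs; the function name `check_prometheus` and the sample address are hypothetical.

```shell
# Query the health and readiness endpoints through the NodePort.
#   $1 = IP address of any cluster node
# Returns non-zero if either endpoint is unreachable or answers with an HTTP error.
check_prometheus() {
  base="http://$1:30090"
  curl -fsS --max-time 5 "$base/-/healthy" && curl -fsS --max-time 5 "$base/-/ready"
}

# Example call (192.0.2.10 is a placeholder address):
# check_prometheus 192.0.2.10
```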