k8s Service Load Balancing with IPVS

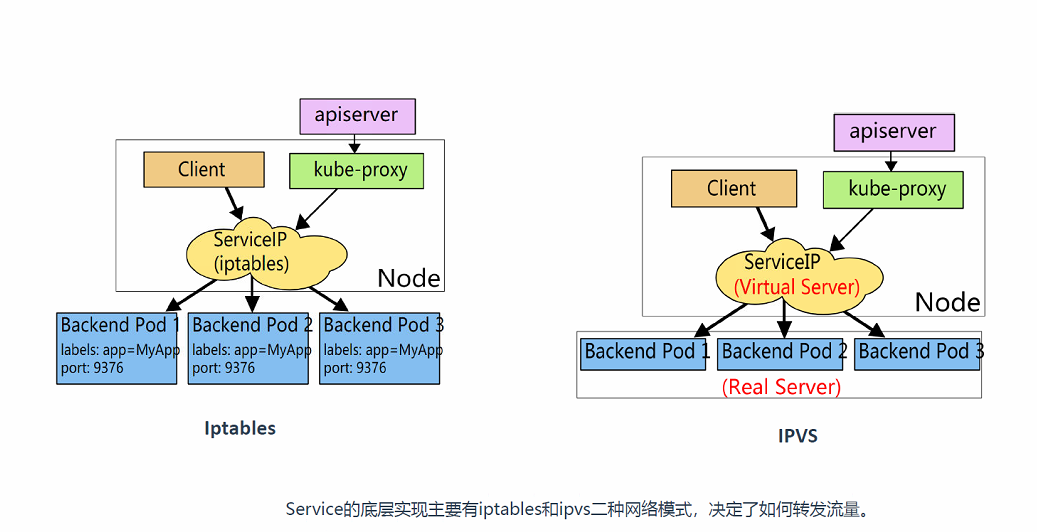

1. k8s Service proxy modes

- Workflow diagrams of the iptables and IPVS proxy modes

-

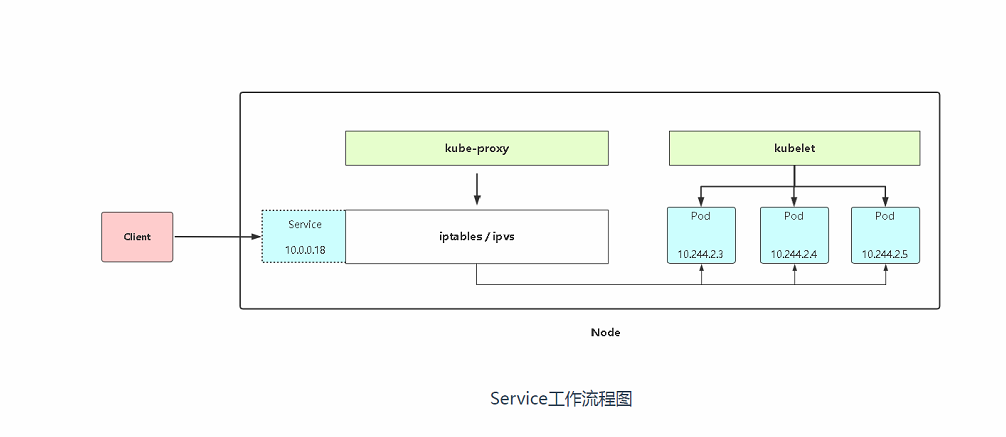

k8s-service工作流程图

![image]()

2. The IPVS proxy mode for k8s Services

- IPVS mode can be enabled in two ways, depending on how the cluster was deployed:

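- Switching to IPVS mode on a kubeadm-deployed cluster:

  ```
  # kubectl edit configmap kube-proxy -n kube-system
  ...
  mode: "ipvs"
  ...
  # kubectl delete pod kube-proxy-btz4p -n kube-system
  ```

  Notes:

  1. The kube-proxy configuration is stored as a ConfigMap.
  2. For the change to take effect on every node, the kube-proxy pod on each node must be recreated.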

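- Switching to IPVS mode on a cluster deployed from binaries:

  ```
  # vi kube-proxy-config.yml
  mode: ipvs
  ipvs:
    scheduler: "rr"
  # systemctl restart kube-proxy
  ```

  Note: depending on the guide you followed, the configuration file may have a different name.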
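The `scheduler: "rr"` setting selects round-robin scheduling for the IPVS virtual servers that kube-proxy creates. As a rough illustration only, the Python sketch below mimics the idea: each new connection goes to the next real server in a fixed circular order. It assumes every real server has weight 1 and ignores the overload and weight-0 checks that the kernel scheduler performs; the function name `round_robin` is hypothetical, and the three backend addresses are the real servers of the 10.100.222.42:80 virtual server from the `ipvsadm -L -n` output in section 3.

```python
from itertools import cycle
from typing import Iterator, List


def round_robin(real_servers: List[str]) -> Iterator[str]:
    """Return an endless iterator that hands out real servers in turn.

    Simplified model of the IPVS "rr" scheduler (assumption): every real
    server is treated as weight 1, and overload/weight-0 checks are ignored.
    """
    if not real_servers:
        # IPVS can hold a virtual server with no real servers, but then
        # there is nothing to schedule a connection to.
        raise ValueError("no real servers to schedule")
    return cycle(real_servers)


if __name__ == "__main__":
    # Real servers behind the 10.100.222.42:80 virtual server (section 3 output).
    backends = ["10.244.36.79:80", "10.244.36.83:80", "10.244.169.169:80"]
    scheduler = round_robin(backends)
    for conn in range(1, 7):
        # One scheduling decision per new connection, not per packet.
        print(f"connection {conn} -> {next(scheduler)}")
```

Running it sends connections 1 to 6 to 10.244.36.79:80, 10.244.36.83:80, 10.244.169.169:80, and then to the same three again. The real scheduler runs in the kernel and keeps its position per virtual server, so the rotation is tracked separately for each Service address.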

3. IPVS proxy mode for k8s Services: a worked example

- Switching to IPVS mode with the kubeadm method:

  ```
  [root@k8s-master service]# kubectl edit configmap kube-proxy -n kube-system
  ...
  mode: "ipvs"    # find the mode field and set it to ipvs
  ...
  ```

- The edit is not applied right away. To make it take effect on k8s-node1, delete that node's kube-proxy pod (kube-proxy-fhgbd); the kube-proxy DaemonSet recreates it as kube-proxy-g5d56, which starts with the new configuration:

  ```
  [root@k8s-master service]# kubectl get pods -n kube-system -o wide
  NAME                                       READY   STATUS    RESTARTS   AGE     IP               NODE         NOMINATED NODE   READINESS GATES
  calico-kube-controllers-5dc87d545c-nscfb   1/1     Running   3          7d22h   10.244.235.203   k8s-master   <none>           <none>
  calico-node-j6rhw                          1/1     Running   3          7d22h   192.168.0.201    k8s-master   <none>           <none>
  calico-node-n7d6s                          1/1     Running   3          7d22h   192.168.0.203    k8s-node2    <none>           <none>
  calico-node-x86s2                          1/1     Running   3          7d22h   192.168.0.202    k8s-node1    <none>           <none>
  coredns-6d56c8448f-hkgnk                   1/1     Running   4          8d      10.244.235.204   k8s-master   <none>           <none>
  coredns-6d56c8448f-jfbjs                   1/1     Running   3          8d      10.244.235.202   k8s-master   <none>           <none>
  etcd-k8s-master                            1/1     Running   4          8d      192.168.0.201    k8s-master   <none>           <none>
  kube-apiserver-k8s-master                  1/1     Running   9          8d      192.168.0.201    k8s-master   <none>           <none>
  kube-controller-manager-k8s-master         1/1     Running   9          8d      192.168.0.201    k8s-master   <none>           <none>
  kube-proxy-fhgbd                           1/1     Running   3          7d23h   192.168.0.202    k8s-node1    <none>           <none>
  kube-proxy-l7q4r                           1/1     Running   3          8d      192.168.0.201    k8s-master   <none>           <none>
  kube-proxy-qwpjp                           1/1     Running   3          7d23h   192.168.0.203    k8s-node2    <none>           <none>
  kube-scheduler-k8s-master                  1/1     Running   10         8d      192.168.0.201    k8s-master   <none>           <none>
  [root@k8s-master service]# kubectl delete pod kube-proxy-fhgbd -n kube-system
  pod "kube-proxy-fhgbd" deleted
  [root@k8s-master service]# kubectl get pods -n kube-system -o wide
  NAME                                       READY   STATUS    RESTARTS   AGE     IP               NODE         NOMINATED NODE   READINESS GATES
  calico-kube-controllers-5dc87d545c-nscfb   1/1     Running   3          7d22h   10.244.235.203   k8s-master   <none>           <none>
  calico-node-j6rhw                          1/1     Running   3          7d22h   192.168.0.201    k8s-master   <none>           <none>
  calico-node-n7d6s                          1/1     Running   3          7d22h   192.168.0.203    k8s-node2    <none>           <none>
  calico-node-x86s2                          1/1     Running   3          7d22h   192.168.0.202    k8s-node1    <none>           <none>
  coredns-6d56c8448f-hkgnk                   1/1     Running   4          8d      10.244.235.204   k8s-master   <none>           <none>
  coredns-6d56c8448f-jfbjs                   1/1     Running   3          8d      10.244.235.202   k8s-master   <none>           <none>
  etcd-k8s-master                            1/1     Running   4          8d      192.168.0.201    k8s-master   <none>           <none>
  kube-apiserver-k8s-master                  1/1     Running   9          8d      192.168.0.201    k8s-master   <none>           <none>
  kube-controller-manager-k8s-master         1/1     Running   9          8d      192.168.0.201    k8s-master   <none>           <none>
  kube-proxy-g5d56                           1/1     Running   0          30s     192.168.0.202    k8s-node1    <none>           <none>
  kube-proxy-l7q4r                           1/1     Running   3          8d      192.168.0.201    k8s-master   <none>           <none>
  kube-proxy-qwpjp                           1/1     Running   3          7d23h   192.168.0.203    k8s-node2    <none>           <none>
  kube-scheduler-k8s-master                  1/1     Running   10         8d      192.168.0.201    k8s-master   <none>           <none>
  ```

- Install ipvsadm, the IPVS inspection tool, on k8s-node1:

  ```
  [root@k8s-node1 ~]# yum install ipvsadm
  ```

- Check on k8s-node1 whether the change has taken effect:

  ```
  [root@k8s-node1 ~]# ipvsadm -L -n
  IP Virtual Server version 1.2.1 (size=4096)
  Prot LocalAddress:Port Scheduler Flags
    -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
  TCP  192.168.0.202:30001 rr
    -> 10.244.169.155:8443          Masq    1      0          0
  TCP  192.168.0.202:30009 rr
    -> 10.244.36.79:80              Masq    1      0          0
    -> 10.244.36.83:80              Masq    1      0          0
    -> 10.244.169.169:80            Masq    1      0          0
  TCP  10.96.0.1:443 rr
    -> 192.168.0.201:6443           Masq    1      0          0
  TCP  10.96.0.10:53 rr
    -> 10.244.235.202:53            Masq    1      0          0
    -> 10.244.235.204:53            Masq    1      0          0
  TCP  10.96.0.10:9153 rr
    -> 10.244.235.202:9153          Masq    1      0          0
    -> 10.244.235.204:9153          Masq    1      0          0
  TCP  10.97.234.249:443 rr
    -> 10.244.169.155:8443          Masq    1      0          0
  TCP  10.100.222.42:80 rr
    -> 10.244.36.79:80              Masq    1      0          0
    -> 10.244.36.83:80              Masq    1      0          0
    -> 10.244.169.169:80            Masq    1      0          0
  TCP  10.104.161.168:80 rr
  TCP  10.110.198.136:8000 rr
    -> 10.244.169.154:8000          Masq    1      0          0
  TCP  10.244.36.64:30001 rr
    -> 10.244.169.155:8443          Masq    1      0          0
  TCP  10.244.36.64:30009 rr
    -> 10.244.36.79:80              Masq    1      0          0
    -> 10.244.36.83:80              Masq    1      0          0
    -> 10.244.169.169:80            Masq    1      0          0
  TCP  127.0.0.1:30001 rr
    -> 10.244.169.155:8443          Masq    1      0          0
  TCP  127.0.0.1:30009 rr
    -> 10.244.36.79:80              Masq    1      0          0
    -> 10.244.36.83:80              Masq    1      0          0
    -> 10.244.169.169:80            Masq    1      0          0
  TCP  172.17.0.1:30001 rr
    -> 10.244.169.155:8443          Masq    1      0          0
  TCP  172.17.0.1:30009 rr
    -> 10.244.36.79:80              Masq    1      0          0
    -> 10.244.36.83:80              Masq    1      0          0
    -> 10.244.169.169:80            Masq    1      0          0
  UDP  10.96.0.10:53 rr
    -> 10.244.235.202:53            Masq    1      0          0
    -> 10.244.235.204:53            Masq    1      0          0
  ```

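- Note: the output shows that IPVS mode has already taken effect on k8s-node1. Each Service now appears as an IPVS virtual server using the rr scheduler, with its backend pods listed as real servers. This covers ClusterIPs such as 10.96.0.10:53 as well as NodePorts such as 30009, which is bound on every local address of the node (192.168.0.202, 10.244.36.64, 127.0.0.1 and 172.17.0.1).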
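To relate this output back to Kubernetes objects, it helps to read it as a table of virtual servers (Service ClusterIPs and NodePorts) and the real servers (pod IP:port endpoints) behind each one. The Python sketch below does that grouping for saved `ipvsadm -L -n` text. `parse_ipvsadm` and `SAMPLE` are hypothetical names, the sample lines are copied from the output above, and the regular expressions assume IPv4 addresses and protocol-based (not firewall-mark) virtual servers.

```python
import re
from typing import Dict, List, Optional

# Virtual server line, e.g. "TCP  10.96.0.10:53 rr" (IPv4 only, assumption).
VS_LINE = re.compile(r"^(TCP|UDP|SCTP)\s+(\d+\.\d+\.\d+\.\d+:\d+)\s+(\S+)")
# Real server line, e.g. "  -> 10.244.235.202:53    Masq    1      0          0".
RS_LINE = re.compile(r"^\s+->\s+(\d+\.\d+\.\d+\.\d+:\d+)\s+\S+\s+\d+")


def parse_ipvsadm(text: str) -> Dict[str, List[str]]:
    """Group `ipvsadm -L -n` output as {"PROTO VIP:PORT": [real servers]}."""
    services: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for line in text.splitlines():
        vs = VS_LINE.match(line)
        if vs:
            current = f"{vs.group(1)} {vs.group(2)}"
            services[current] = []  # stays empty if no real servers follow
            continue
        rs = RS_LINE.match(line)
        # The "-> RemoteAddress:Port" header has no IP, so it never matches.
        if rs and current is not None:
            services[current].append(rs.group(1))
    return services


SAMPLE = """\
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.96.0.10:53 rr
  -> 10.244.235.202:53            Masq    1      0          0
  -> 10.244.235.204:53            Masq    1      0          0
TCP  10.104.161.168:80 rr
UDP  10.96.0.10:53 rr
  -> 10.244.235.202:53            Masq    1      0          0
  -> 10.244.235.204:53            Masq    1      0          0
"""

if __name__ == "__main__":
    for service, backends in parse_ipvsadm(SAMPLE).items():
        print(service, "->", backends or "(no real servers)")
```

In the sample, TCP 10.104.161.168:80 comes back with an empty list: IPVS has a virtual server for that Service but no real servers behind it, which usually means the Service currently has no ready endpoints.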

Zhejiang Public Security Internet Filing No. 33010602011771 (浙公网安备 33010602011771号)
