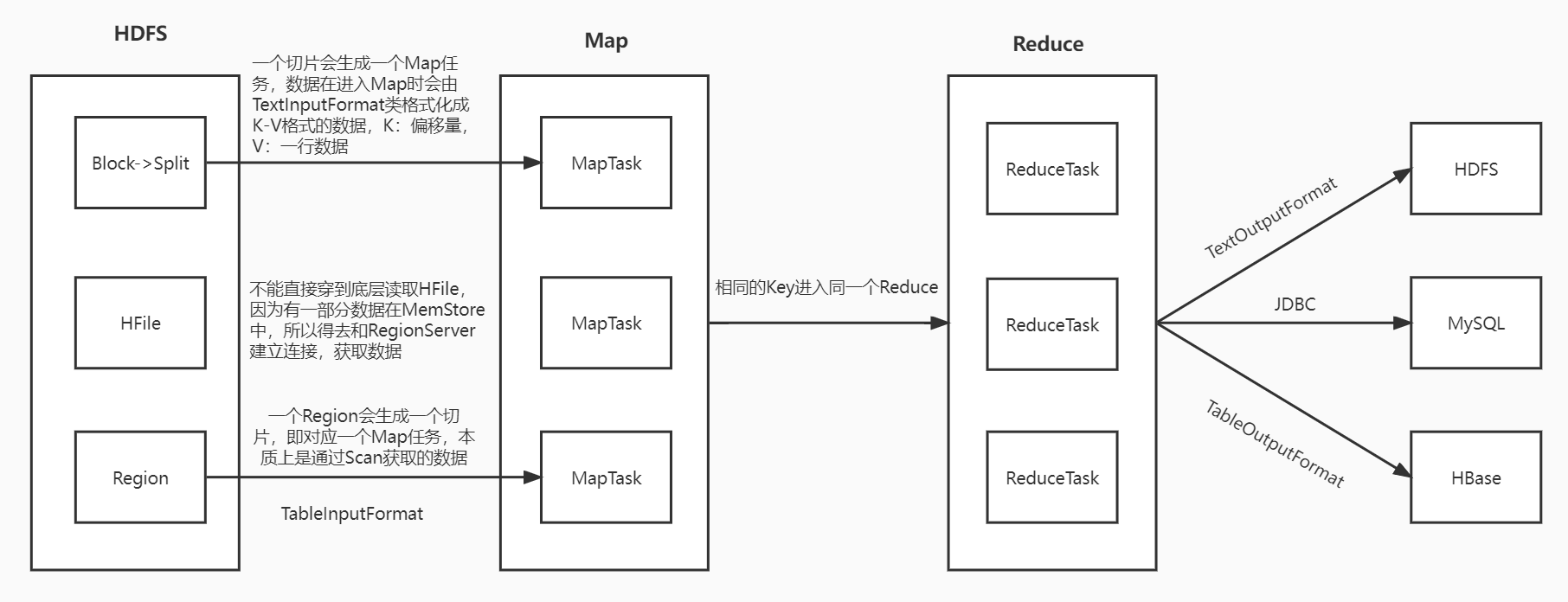

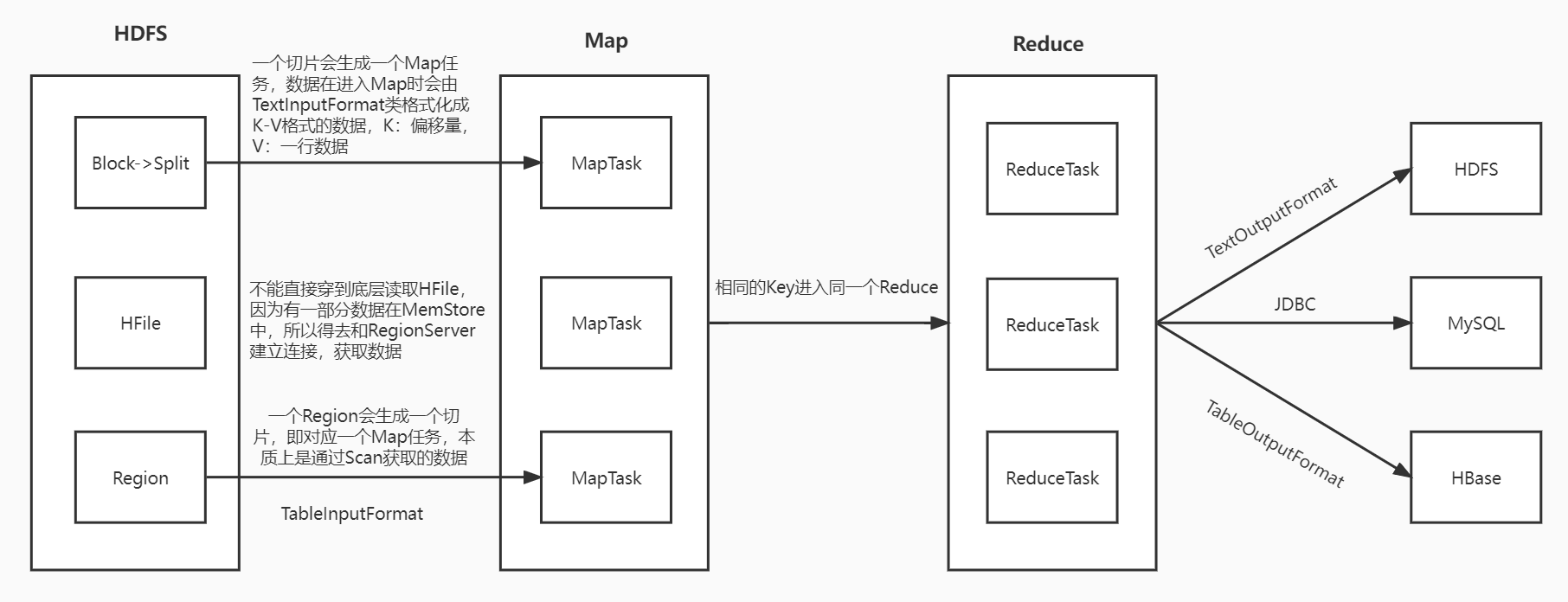

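Reading and Writing HBase with MapReduce: Architecture Diagram and Example Code

1. Architecture of MapReduce Reading and Writing HBase

2. Example: Reading HBase with MapReduce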

package com.shujia;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.io.ImmutableBytesWritable;

import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;

import org.apache.hadoop.hbase.mapreduce.TableMapper;

import org.apache.hadoop.hbase.util.Bytes;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class Demo05MRReadHBase {

public static class MRReadHBase extends TableMapper<Text, IntWritable> {

@Override

protected void map(ImmutableBytesWritable key, Result value, Mapper<ImmutableBytesWritable, Result, Text, IntWritable>.Context context) throws IOException, InterruptedException {

// Read the info:clazz cell; rows that lack it are skipped instead of failing on null.
byte[] clazz = value.getValue(Bytes.toBytes("info"), Bytes.toBytes("clazz"));

if (clazz != null) {

context.write(new Text(Bytes.toString(clazz)), new IntWritable(1));

}

}

}

public static class MyReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Reducer<Text, IntWritable, Text, IntWritable>.Context context) throws IOException, InterruptedException {

int cnt = 0;

for (IntWritable value : values) {

cnt += value.get();

}

context.write(key, new IntWritable(cnt));

}

}

public static void main(String[] args) throws IOException, InterruptedException, ClassNotFoundException {

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", "master:2181,node1:2181,node2:2181");

conf.set("fs.defaultFS", "hdfs://master:9000");

Job job = Job.getInstance(conf);

job.setJobName("Demo05MRReadHBase");

job.setJarByClass(Demo05MRReadHBase.class);

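// Configure the map side: input table, scan, mapper class and map output types.
TableMapReduceUtil.initTableMapperJob(

"stu", // source HBase table

new Scan(), // full-table scan

MRReadHBase.class,

Text.class, // map output key type

IntWritable.class, // map output value type

job

);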

job.setReducerClass(MyReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

// The job fails if the output directory already exists, so remove it first.
Path path = new Path("/MR/HBase/output/");

FileSystem fs = FileSystem.get(conf);

if (fs.exists(path)) {

fs.delete(path, true);

}

FileOutputFormat.setOutputPath(job, path);

// Propagate the job result as the process exit code.
System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}
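The job above is a word-count style aggregation: the mapper emits one `(clazz, 1)` pair per row and the reducer sums the ones for each key. The following is a minimal in-memory sketch of that logic using only the JDK. The class name `ClassCountSketch`, the method `countByClazz`, and the sample values are hypothetical, and skipping rows without a value is a choice made for the sketch.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory sketch of the job's logic; no Hadoop or HBase classes are involved.
class ClassCountSketch {

    // Map phase stand-in: every row contributes a (clazz, 1) pair.
    // Reduce phase stand-in: the 1s are summed per clazz key.
    static Map<String, Integer> countByClazz(List<String> clazzPerRow) {
        Map<String, Integer> counts = new TreeMap<>(); // keys kept in sorted order
        for (String clazz : clazzPerRow) {
            if (clazz == null) {
                continue; // row without an info:clazz value
            }
            counts.merge(clazz, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Hypothetical info:clazz values, one per row of the "stu" table.
        List<String> rows = Arrays.asList("class-1", "class-2", "class-1", null, "class-1");
        // Print key and count separated by a tab, one pair per line.
        countByClazz(rows).forEach((clazz, cnt) -> System.out.println(clazz + "\t" + cnt));
    }
}
```

With the sample rows, `class-1` is counted three times and `class-2` once; the row without a value does not contribute.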

Steps to manually set up the dependencies Hadoop needs at runtime:

cd /usr/local/soft/hbase-1.4.6/lib/

export HADOOP_CLASSPATH="$HBASE_HOME/lib/*"

3. Example: Writing to HBase with MapReduce

package com.shujia;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.client.Mutation;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.io.ImmutableBytesWritable;

import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;

import org.apache.hadoop.hbase.mapreduce.TableMapper;

import org.apache.hadoop.hbase.mapreduce.TableReducer;

import org.apache.hadoop.hbase.util.Bytes;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class Demo06MRReadAndWriteHBase {

public static class MRReadHBase extends TableMapper<Text, IntWritable> {

@Override

protected void map(ImmutableBytesWritable key, Result value, Mapper<ImmutableBytesWritable, Result, Text, IntWritable>.Context context) throws IOException, InterruptedException {

// Read the info:gender cell; rows that lack it are skipped instead of failing on null.
byte[] gender = value.getValue(Bytes.toBytes("info"), Bytes.toBytes("gender"));

if (gender != null) {

context.write(new Text(Bytes.toString(gender)), new IntWritable(1));

}

}

}

public static class MRWriteHBase extends TableReducer<Text, IntWritable, NullWritable> {

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Reducer<Text, IntWritable, NullWritable, Mutation>.Context context) throws IOException, InterruptedException {

int cnt = 0;

for (IntWritable value : values) {

cnt += value.get();

}

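// Text.getBytes() returns the whole backing array, which can be longer than the key, so build the row key from the string.
Put put = new Put(Bytes.toBytes(key.toString()));

// Store the count as its decimal string in info:cnt.
put.addColumn(Bytes.toBytes("info"), Bytes.toBytes("cnt"), Bytes.toBytes(String.valueOf(cnt)));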

context.write(NullWritable.get(), put);

}

}

public static void main(String[] args) throws IOException, InterruptedException, ClassNotFoundException {

Configuration conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", "master:2181,node1:2181,node2:2181");

conf.set("fs.defaultFS", "hdfs://master:9000");

Job job = Job.getInstance(conf);

job.setJobName("Demo06MRReadAndWriteHBase");

job.setJarByClass(Demo06MRReadAndWriteHBase.class);

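// Configure the map side: input table, scan, mapper class and map output types.
TableMapReduceUtil.initTableMapperJob(

"stu", // source HBase table

new Scan(), // full-table scan

MRReadHBase.class,

Text.class, // map output key type

IntWritable.class, // map output value type

job

);

// Configure the reduce side: the reducer's Put objects are written to the target table.
TableMapReduceUtil.initTableReducerJob(

"stu_gender_cnt", // target table; it must already exist with column family "info"

MRWriteHBase.class,

job

);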

// Propagate the job result as the process exit code.
System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}
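Two byte-level details of the reducer are worth a closer look. First, Hadoop's `Text` reuses its backing array, and `getBytes()` returns that whole array, of which only the first `getLength()` bytes are valid, so a row key taken from it can carry stale trailing bytes. Second, the count is stored as the bytes of its decimal string rather than as a fixed-width integer. The sketch below illustrates both with the JDK only: `ReusableBuffer` is a hypothetical stand-in that mimics the buffer reuse, not the real `Text` class, and the key values are made up.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// JDK-only sketch; ReusableBuffer is a hypothetical stand-in for Hadoop's Text.
class ReducerBytesSketch {

    // Keeps one backing array across set() calls and only ever grows it.
    static class ReusableBuffer {
        private byte[] bytes = new byte[0];
        private int length;

        void set(String s) {
            byte[] src = s.getBytes(StandardCharsets.UTF_8);
            if (bytes.length < src.length) {
                bytes = new byte[src.length]; // grow only, never shrink
            }
            System.arraycopy(src, 0, bytes, 0, src.length);
            length = src.length;
        }

        byte[] getBytes() { return bytes; }  // whole backing array
        int getLength() { return length; }   // number of valid bytes

        // Copy that holds exactly the valid bytes.
        byte[] copyBytes() { return Arrays.copyOf(bytes, length); }
    }

    // Count as decimal text: variable width, one byte per digit.
    static byte[] countAsText(int cnt) {
        return String.valueOf(cnt).getBytes(StandardCharsets.UTF_8);
    }

    // Count as a fixed-width 4-byte big-endian int (an alternative encoding).
    static byte[] countAsBinary(int cnt) {
        return ByteBuffer.allocate(Integer.BYTES).putInt(cnt).array();
    }

    public static void main(String[] args) {
        ReusableBuffer key = new ReusableBuffer();
        key.set("female"); // hypothetical key values
        key.set("male");
        // The backing array still carries the tail of the previous key.
        System.out.println(new String(key.getBytes(), StandardCharsets.UTF_8));  // malele
        System.out.println(new String(key.copyBytes(), StandardCharsets.UTF_8)); // male

        System.out.println(countAsText(512).length);   // 3
        System.out.println(countAsBinary(512).length); // 4
    }
}
```

Whichever encoding is written, readers of the `stu_gender_cnt` table have to decode the `info:cnt` cell the same way; text values have to be parsed back into numbers before any arithmetic.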

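# Use a Maven plugin to bundle the dependencies into the JAR

# Add the following plugin configuration to the project's pom.xml, then reload the Maven project

<!-- Bundle dependencies into the JAR -->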

<build>

<plugins>

<!-- Java Compiler -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.1</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

<!-- Plugin that builds a JAR with dependencies -->

<plugin>

<artifactId>maven-assembly-plugin</artifactId>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

# From then on, packaging the project also bundles the required dependencies into the JAR
