20 python libs that might benefit.

from here, quotes:

Expanding Your Python Knowledge: Lesser-Known Libraries

The Python ecosystem is vast and far-reaching in both scope and depth. Starting out in this crazy, open-source forest is daunting, and even with years of experience, it still requires continual effort to keep up-to-date with the best libraries and techniques.

In this report we take a look at some of the lesser-known Python libraries and tools. Python itself already includes a huge number of high-quality libraries; collectively these are called the standard library. The standard library receives a lot of attention, but there are still some libraries within it that should be better known. We will start out by discussing several, extremely useful tools in the standard library that you may not know about.

We’re also going to discuss several exciting, lesser-known libraries from the third-party ecosystem. Many high-quality third-party libraries are already well-known, including Numpy and Scipy, Django, Flask, and Requests; you can easily learn more about these libraries by searching for information online. Rather than focusing on those standouts, this report is instead going to focus on several interesting libraries that are growing in popularity.

Let’s start by taking a look at the standard library.

The Standard Library

The libraries that tend to get all the attention are the ones heavily used for operating-system interaction, like sys, os, shutil, and to a slightly lesser extent, glob. This is understandable because most Python applications deal with input processing; however, the Python standard library is very rich and includes a bunch of additional functionality that many Python programmers take too long to discover. In this chapter we will mention a few libraries that every Python programmer should know very well.

collections

First up we have the collections module. If you’ve been working with Python for any length of time, it is very likely that you have made use of the this module; however, the batteries contained within are so important that we’ll go over them anyway, just in case.

collections.OrderedDict

collections.OrderedDict gives you a dict that will preserve the order in which items are added to it; note that this is not the same as a sorted order.1

The need for an ordered dict comes up surprisingly often. A common example is processing lines in a file where the lines (or something within them) maps to other data. A mapping is the right solution, and you often need to produce results in the same order in which the input data appeared. Here is a simple example of how the ordering changes with a normal dict:

>>>dict(zip(ascii_lowercase,range(4))){'a':0,'b':1,'c':2,'d':3}>>>dict(zip(ascii_lowercase,range(5))){'a':0,'b':1,'c':2,'d':3,'e':4}>>>dict(zip(ascii_lowercase,range(6))){'a':0,'b':1,'c':2,'d':3,'f':5,'e':4}>>>dict(zip(ascii_lowercase,range(7))){'a':0,'b':1,'c':2,'d':3,'g':6,'f':5,'e':4}

![1]()

-

See how the key "

f" now appears before the "e" key in the sequence of keys? They no longer appear in the order of insertion, due to how thedictinternals manage the assignment of hash entries.

The OrderedDict, however, retains the order in which items are inserted:

>>>fromcollectionsimportOrderedDict>>>OrderedDict(zip(ascii_lowercase,range(5)))OrderedDict([('a',0),('b',1),('c',2),('d',3),('e',4)])>>>OrderedDict(zip(ascii_lowercase,range(6)))OrderedDict([('a',0),('b',1),('c',2),('d',3),('e',4),('f',5)])>>>OrderedDict(zip(ascii_lowercase,range(7)))OrderedDict([('a',0),('b',1),('c',2),('d',3),('e',4),('f',5),('g',6)])

Warning

OrderedDict: Beware creation with keyword arguments

There is an unfortunate catch with OrderedDict you need to be aware of: it doesn’t work when you create the OrderedDict with keyword arguments, a very common Python idiom:

>>>collections.OrderedDict(a=1,b=2,c=3)OrderedDict([('b',2),('a',1),('c',3)])

This seems like a bug, but as explained in the documentation, it happens because the keyword arguments are first processed as a normal dict before they are passed on to the OrderedDict.

collections.defaultdict

collections.defaultdict is another special-case dictionary: it allows you to specify a default value for all new keys.

Here’s a common example:

>>>d=collections.defaultdict(list)>>>d['a'][]

![1]()

-

You didn’t create this item yet? No problem! Key lookups automatically create values using the function provided when creating the

defaultdictinstance.

By setting up the default value as the list constructor in the preceding example, you can avoid wordy code that looks like this:

d={}forkinkeydata:ifnotkind:d[k]=[]d[k].append(...)

The setdefault() method of a dict can be used in a somewhat similar way to initialize items with defaults, but defaultdict generally results in clearer code.2

In the preceding examples, we’re saying that every new element, by default, will be an empty list. If, instead, you wanted every new element to contain a dictionary, you might say defaultdict(dict).

collections.namedtuple

The next tool, collections.namedtuple, is magic in a bottle! Instead of working with this:

tup=(1,True,"red")

You get to work with this:

>>>fromcollectionsimportnamedtuple>>>A=namedtuple('A','count enabled color')>>>tup=A(count=1,enabled=True,color="red")>>>tup.count1>>>tup.enabledTrue>>>tup.color"red">>>tupA(count=1,enabled=True,color='red')

The best thing about namedtuple is that you can add it to existing code and use it to progressively replace tuples: it can appear anywhere a tuple is currently being used, without breaking existing code, and without using any extra resources beyond what plain tuples require. Using namedtupleincurs no extra runtime cost, and can make code much easier to read. The most common situation where a namedtuple is recommended is when a function returns multiple results, which are then unpacked into a tuple. Let’s look at an example of code that uses plain tuples, to see why such code can be problematic:

>>>deff():...return2,False,"blue">>>count,enabled,color=f()>>>tup=f()>>>enabled=tup[1]

![1]()

-

Simple function returning a tuple.

![2]()

-

When the function is evaluated, the results are unpacked into separate names.

![3]()

-

Worse, the caller might access values inside the returned tuple by index.

The problem with this approach is that this code is fragile to future changes. If the function changes (perhaps by changing the order of the returned items, or adding more items), the unpacking of the returned value will be incorrect. Instead, you can modify existing code to return a namedtuple instance:

>>>deff():...# Return a namedtuple!...returnA(2,False,"blue")>>>count,enabled,color=f()

You now also have the option of working with the returned namedtuple in the calling code:

>>>tup=f()>>>(tup.count)2

![1]()

-

Being able to use attributes to access data inside the tuple is much safer rather than relying on indexing alone; if future changes in the code added new fields to the

namedtuple, thetup.countwould continue to work.

The collections module has a few other tricks up its sleeve, and your time is well spent brushing up on the documentation. In addition to the classes shown here, there is also a Counter class for easily counting occurrences, a list-like container for efficiently appending and removing items from either end (deque), and several helper classes to make subclassing lists, dicts, and strings easier.

contextlib

A context manager is what you use with the with statement. A very common idiom in Python for working with file data demonstrates the context manager:

withopen('data.txt','r')asf:data=f.read()

This is good syntax because it simplifies the cleanup step where the file handle is closed. Using the context manager means that you don’t have to remember to do f.close() yourself: this will happen automatically when the with block exits.

You can use the contextmanager decorator from the contextlib library to benefit from this language feature in your own nefarious schemes. Here’s a creative demonstration where we create a new context manager to print out performance (timing) data.

This might be useful for quickly testing the time cost of code snippets, as shown in the following example. The numbered notes are intentionally not in numerical order in the code. Follow the notes in numerical order as shown following the code snippet.

fromtimeimportperf_counterfromarrayimportarrayfromcontextlibimportcontextmanager@contextmanagerdeftiming(label:str):t0=perf_counter()yieldlambda:(label,t1-t0)t1=perf_counter()withtiming('Array tests')astotal:withtiming('Array creation innermul')asinner:x=array('d',[0]*1000000)withtiming('Array creation outermul')asouter:x=array('d',[0])*1000000('Total [%s]:%.6fs'%total())('Timing [%s]:%.6fs'%inner())('Timing [%s]:%.6fs'%outer())

![1]()

-

The

arraymodule in the standard library has an unusual approach to initialization: you pass it an existing sequence, such as a large list, and it converts the data into the datatype of your array if possible; however, you can also create an array from a short sequence, after which you expand it to its full size. Have you ever wondered which is faster? In a moment, we’ll create a timing context manager to measure this and know for sure! ![2]()

-

The key step you need to do to make your own context manager is to use the

@contextmanagerdecorator. ![3]()

-

The section before the

yieldis where you can write code that must execute before the body of your context manager will run. Here we record the timestamp before the body will run. ![4]()

-

The

yieldis where execution is transferred to the body of your context manager; in our case, this is where our arrays get created. You can also return data: here I return a closure that will calculate the elapsed time when called. It’s a little clever but hopefully not excessively so: the final timet1is captured within the closure even though it will only be determined on the next line. ![5]()

-

After the

yield, we write the code that will be executed when the context manager finishes. For scenarios like file handling, this would be where you close them. In this example, this is where we record the final timet1. ![6]()

-

Here we try the alternative array-creation strategy: first, create the array and then increase size.

![7]()

-

For fun, we’ll use our awesome, new context manager to also measure the total time.

On my computer, this code produces this output:

Total [Array tests]: 0.064896 s

Timing [Array creation innermul]: 0.064195 s

Timing [Array creation outermul]: 0.000659 s

Quite surprisingly, the second method of producing a large array is around 100 times faster than the first. This means that it is much more efficient to create a small array, and then expand it, rather than to create an array entirely from a large list.

The point of this example is not to show the best way to create an array: rather, it is that the contextmanager decorator makes it exceptionally easy to create your own context manager, and context managers are a great way of providing a clean and safe means of managing before-and-after coding tasks.

concurrent.futures

The concurrent.futures module that was introduced in Python 3 provides a convenient way to manage pools of workers. If you have previously used the threading module in the Python standard library, you will have seen code like this before:

importthreadingdefwork():returnsum(xforxinrange(1000000))thread=threading.Thread(target=work)thread.start()thread.join()

This code is very clean with only one thread, but with many threads it can become quite tricky to deal with sharing work between them. Also, in this example the result of the sum is not obtained from the work function, simply to avoid all the extra code that would be required to do so. There are various techniques for obtaining the result of a work function, such as passing a queue to the function, or subclassing threading.Thread, but we’re not going discuss them any further, because the multiprocessingpackage provides a better method for using pools, and theconcurrent.futures module goes even further to simplify the interface. And, similar to multiprocessing, both thread-based pools and process-based pools have the same interface making it easy to switch between either thread-based or process-based approaches.

Here we have a trivial example using the ThreadPoolExecutor. We download the landing page of a plethora of popular social media sites, and, to keep the example simple, we print out the size of each. Note that in the results, we show only the first four to keep the output short.

fromconcurrent.futuresimportThreadPoolExecutorasExecutorurls="""google twitter facebook youtube pinterest tumblrinstagram reddit flickr meetup classmates microsoft applelinkedin xing renren disqus snapchat twoo whatsapp""".split()deffetch(url):fromurllibimportrequest,errortry:data=request.urlopen(url).read()return'{}: length {}'.format(url,len(data))excepterror.HTTPErrorase:return'{}: {}'.format(url,e)withExecutor(max_workers=4)asexe:template='http://www.{}.com'jobs=[exe.submit(fetch,template.format(u))foruinurls]results=[job.result()forjobinjobs]('\n'.join(results))

![1]()

-

Our work function,

fetch(), simply downloads the given URL. ![2]()

-

Yes, it is rather odd nowadays to see

urllibbecause the fantastic third-party library requests is a great choice for all your web-access needs. However,urllibstill exists and depending on your needs, may allow you to avoid an external dependency. ![3]()

-

We create a

ThreadPoolExecutorinstance, and here you can specify how many workers are required. ![4]()

-

Jobs are created, one for every URL in our considerable list. The executormanages the delivery of jobs to the four threads.

![5]()

-

This is a simple way of waiting for all the threads to return.

This produces the following output (I’ve shortened the number of results for brevity):

http://www.google.com: length 10560 http://www.twitter.com: length 268924 http://www.facebook.com: length 56667 http://www.youtube.com: length 437754 [snip]

Even though one job is created for every URL, we limit the number of active threads to only four using max_workers and the results are all captured in the results list as they become available. If you wanted to use processes instead of threads, all that needs to change is the first line, from this:

fromconcurrent.futuresimportThreadPoolExecutorasExecutor

To this:

fromconcurrent.futuresimportProcessPoolExecutorasExecutor

Of course, for this kind of application, which is limited by network latency, a thread-based pool is fine. It is with CPU-bound tasks that Python threads are problematic because of how thread safety has been in implemented inside the CPython3 runtime interpreter, and in these situations it is best to use a process-based pool instead.

The primary problem with using processes for parallelism is that each process is confined to its own memory space, which makes it difficult for multiple workers to chew on the same large chunk of data. There are ways to get around this, but in such situations threads provide a much simpler programming model. However, as we shall see in Cython, there is a third-party package called Cython that makes it very easy to circumvent this problem with threads.

logging

The logging module is very well known in the web development community, but is far less used in other domains, such as the scientific one; this is unfortunate, because even for general use, the logging module is far superior to the print() function. It doesn’t seem that way at first, because the print() function is so simple; however, once you initialize logging, it can look very similar. For instance, compare these two:

('This is output to the console')logger.debug('This is output to the console')

The huge advantage of the latter is that, with a single change to a setting on the logger instance, you can either show or hide all your debugging messages. This means you no longer have to go through the process of commenting and uncommenting your print() statements in order to show or hide them. logging also gives you a few different levels so that you can adjust the verbosity of output in your programs. Here’s an example of different levels:

logger.debug('This is for debugging. Very talkative!')logger.info('This is for normal chatter')logger.warning('Warnings should almost always be seen.')logger.error('You definitely want to see all errors!')logger.critical('Last message before a program crash!')

Another really neat trick is that when you use logging, writing messages during exception handling is a whole lot easier. You don’t have to deal with sys.exc_info() and the traceback module merely for printing out the exception message with a traceback. You can do this instead:

try:1/0except:logger.exception("Something failed:")

Just those four lines produces a full traceback in the output:

ERROR:root:Something failed: Traceback (most recent call last): File "logtb.py", line 5, in <module> 1/0 ZeroDivisionError: division by zero

Earlier I said that logging requires some setup. The documentation for the logging module is extensive and might seem overwhelming; here is a quick recipe to get you started:

# Top of the fileimportlogginglogger=logging.getLogger()# All your normal code goes heredefblah():return'blah'# Bottom of the fileif__name__=='__main__':logging.basicConfig(level=logging.DEBUG)

![1]()

-

Without arguments, the

getLogger()function returns the root logger, but it’s more common to create a named logger. If you’re just starting out, exploring with the root logger is fine. ![2]()

-

You need to call a

configmethod; otherwise, calls to the logger will not log anything. The config step is necessary.

In the preceding basicConfig() line, by changing only logging.DEBUG to, say, logging.WARNING, you can affect which messages get processed and which don’t.

Finally, there’s a neat trick you can use for easily changing the logging level on the command line. Python scripts that are directly executable usually have the startup code in a conditional block beginning with if __name__ == '__main__'. This is where command-line parameters are handled, e.g., using the argparse library in the Python standard library. We can create command-line arguments specifically for the logging level:

# Bottom of the fileif__name__=='__main__':fromargparseimportArgumentParserparser=ArgumentParser(description='My app which is mine')parser.add_argument('-ll','--loglevel',type=str,choices=['DEBUG','INFO','WARNING','ERROR','CRITICAL'],help='Set the logging level')args=parser.parseargs()logging.basicConfig(level=args.loglevel)

With this setup, if you call your program with

$ python main.py -ll DEBUG

it will run with the logging level set to DEBUG (so all logger messages will be shown), whereas if you run it with

$ python main.py -ll WARNING

it will run at the WARNING level for logging, so all INFO and DEBUG logger messages will be hidden.

There are many more features packed into the logging module, but I hope I’ve convinced you to consider using it instead of using print() for your next program. There is much more information about the logging module online, both in the official Python documentation and elsewhere in blogs and tutorials. The goal here is only to convince you that the loggingmodule is worth investigating, and getting started with it is easy to do.

sched

There is increasing interest in the creation of bots4 and other monitoring and automation applications. For these applications, a common requirement is to perform actions at specified times or specified intervals. This functionality is provided by the sched module in the standard library. There are already similar tools provided by operating systems, such as cron on Linux and Windows Task Scheduler, but with Python’s own schedmodule you can ignore these platform differences, as well as incorporate scheduled tasks into a program that might have many other functions.

The documentation for sched is rather terse, but hopefully these examples will get you started. The easy way to begin is to schedule a function to be executed after a specified delay (this is a complete example to make sure you can run it successfully):

importschedimporttimefromdatetimeimportdatetime,timedeltascheduler=sched.scheduler(timefunc=time.time)defsaytime():(time.ctime())scheduler.enter(10,priority=0,action=saytime)saytime()try:scheduler.run(blocking=True)exceptKeyboardInterrupt:('Stopped.')

![1]()

-

A scheduler instance is created.

![2]()

-

A work function is declared, in our case

saytime(), which simply prints out the current time. ![3]()

-

Note that we reschedule the function inside itself, with a ten-second delay.

![4]()

-

The scheduler is started with

run(blocking=True), and the execution point remains here until the program is terminated or CTRL-c is pressed.

There are a few annoying details about using sched: you have to passtimefunc=time.time as this isn’t set by default, and you have to supply a priority even when not required. However, overall, the sched module still provides a clean way to get cron-like behavior.

Working with delays can be frustrating if what you really want is for a task to execute at specific times. In addition to enter(), a sched instance also provides the enterabs() method with which you can trigger an event at a specific time. We can use that method to trigger a function, say, everywhole minute:

importschedimporttimefromdatetimeimportdatetime,timedeltascheduler=sched.scheduler(timefunc=time.time)defreschedule():new_target=datetime.now().replace(second=0,microsecond=0)new_target+=timedelta(minutes=1)scheduler.enterabs(new_target.timestamp(),priority=0,action=saytime)defsaytime():(time.ctime(),flush=True)reschedule()reschedule()try:scheduler.run(blocking=True)exceptKeyboardInterrupt:('Stopped.')

![1]()

-

Create a

schedulerinstance, as before. ![2]()

-

Get current time, but strip off seconds and microseconds to obtain a whole minute.

![3]()

-

The target time is exactly one minute ahead of the current whole minute.

![4]()

-

The

enterabs()method schedules the task.

This code produces the following output:

SatJun1818:14:002016SatJun1818:15:002016SatJun1818:16:002016SatJun1818:17:002016Stopped.

With the growing interest in "Internet of Things" applications, the built-in sched library provides a convenient way to manage repetitive tasks. Thedocumentation provides further information about how to cancel future tasks.

In the Wild

From here on we’ll look at some third-party Python libraries that you might not yet have discovered. There are thousands of excellent packages described at the Python guide.

There are quite a few guides similar to this report that you can find online. There could be as many "favorite Python libraries" lists as there are Python developers, and so the selection presented here is necessarily subjective. I spent a lot of time finding and testing various third-party libraries in order to find these hidden gems.

My selection criteria were that a library should be:

-

easy to use

-

easy to install

-

cross-platform

-

applicable to more than one domain

-

not yet super-popular, but likely to become so

-

the X factor

The last two items in that list bear further explanation.

Popularity is difficult to define exactly, since different libraries tend to get used to varying degrees within different Python communities. For instance, Numpy and Scipy are much more heavily used within the scientific Python community, while Django and Flask enjoy more attention in the web development community. Furthermore, the popularity of these libraries is such that everybody already knows about them. A candidate for this list might have been something like Dask, which seems poised to become an eventual successor to Numpy, but in the medium term it is likely to be mostly applicable to the scientific community, thus failing my applicability test.

The X factor means that really cool things are likely to be built with that Python library. Such a criterion is of course strongly subjective, but I hope, in making these selections, to inspire you to experiment and create something new!

Each of the following chapters describes a library that met all the criteria on my list, and which I think you’ll find useful in your everyday Python activities, no matter your specialization.

Easier Python Packaging with flit

flit is a tool that dramatically simplifies the process of submitting a Python package to the Python Package Index (PyPI). The traditional process begins by creating a setup.py file; simply figuring out how to do that requires a considerable amount of work even to understand what to do. In contrast, flit will create its config file interactively, and for typical simple packages you’ll be ready to upload to PyPI almost immediately. Let’s have a look: consider this simple package structure:

$tree.└──mypkg├──__init__.py└──main.py

![1]()

-

The package’s init file is a great place to add package information like documentation, version numbers, and author information.

After installing flit into your environment with pip install flit, you can run the interactive initializer, which will create your package configuration. It asks only five questions, most of which will have applicable defaults once you have made your first package with flit:

$ flit init Module name [mypkg]: Author [Caleb Hattingh]: Author email [caleb.hattingh@gmail.com]: Home page [https://github.com/cjrh/mypkg]: Choose a license 1. MIT 2. Apache 3. GPL 4. Skip - choose a license later Enter 1-4 [2]: 2 Written flit.ini; edit that file to add optional extra info.

The final line tells you that a flit.ini file was created. Let’s have a look at that:

$ cat flit.ini // [metadata] module = mypkg author = Caleb Hattingh author-email = caleb.hattingh@gmail.com home-page = https://github.com/cjrh/mypkg classifiers = License :: OSI Approved :: Apache Software License

It’s pretty much what we specified in the interactive flit init sequence. Before you can submit our package to the online PyPI, there are two more steps that you must complete. The first is to give your package a docstring. You add this to the mypkg/__init__.py file at the top using triple quotes ("""). The second is that you must add a line for the version to the same file. Your finished __init__.py file might look like this:

# file: __init__.py """ This is the documentation for the package. """__version__ = '1.0.0'

![1]()

-

This documentation will be used as your package description on PyPI.

![2]()

-

Likewise, the version tag within your package will also be reused for PyPI when the package is uploaded. These automatic integrations help to simplify the packaging process. It might not seem like much is gained, but experience with Python packaging will show that too many steps (even when they’re simple), when combined, can lead to a complex packaging experience.5

After filling in the basic description and the version, you are ready to build a wheel and upload it to PyPI:

$flitwheel--uploadCopyingpackagefile(s)frommypkgWritingmetadatafilesWritingtherecordoffilesWheelbuilt:dist/mypkg-1.0.0-py2.py3-none-any.whlUsingrepositoryathttps://pypi.python.org/pypiUploadingdist/mypkg-1.0.0-py2.py3-none-any.whl...StartingnewHTTPSconnection(1):pypi.python.orgUploadingforbidden;tryingtoregisteranduploadagainStartingnewHTTPSconnection(1):pypi.python.orgRegisteredmypkgwithPyPIUploadingdist/mypkg-1.0.0-py2.py3-none-any.whl...StartingnewHTTPSconnection(1):pypi.python.orgPackageisathttps://pypi.python.org/pypi/mypkg

And that’s it! Figure 1-1 shows our package on PyPI.

Figure 1-1. It’s alive! Our demo package on the PyPI.

Figure 1-1. It’s alive! Our demo package on the PyPI.

flit allows you to specify more options, but for simple packages what you see here can get you pretty far.

Command-Line Applications

If you’ve spent any time whatsoever developing Python code, you will surely have used several command-line applications. Some command-line programs seem much friendlier than others, and in this chapter we show two fantastic libraries that will make it easy for you to offer the best experience for users of your own command-line applications. coloramaallows you to use colors in your output, while begins makes it easy to provide a rich interface for specifying and processing command-line options.

colorama

Many of your desktop Python programs will only ever be used on the command line, so it makes sense to do whatever you can to improve the experience of your users as much as possible. The use of color can dramatically improve your user interface, and colorama makes it very easy to add splashes of color into your command-line applications.

Let’s begin with a simple example.

fromcoloramaimportinit,Fore,Back,Styleinit(autoreset=True)messages=['blah blah blah',(Fore.LIGHTYELLOW_EX+Style.BRIGHT+BACK.MAGENTA+'Alert!!!'),'blah blah blah']forminmessages:(m)

Notice in Figure 1-2 how the important message jumps right out at you? There are other packages like curses, blessings, and prompt-toolkitthat let you do a whole lot more with the terminal screen itself, but they also have a slightly steeper learning curve; with colorama the API is simple enough to be easy to remember.

Figure 1-2. Add color to your output messages with simple string concatenation.

Figure 1-2. Add color to your output messages with simple string concatenation.

The great thing about colorama is that it also works on Windows, in addition to Linux and Mac OS X. In the preceding example, we used theinit() function to enable automatic reset to default colors after each print(), but even when not required, init() should always be called (when your code runs on Windows, the init() call enables the mapping from ANSI color codes to the Windows color system).

fromcoloramaimportinitinit()

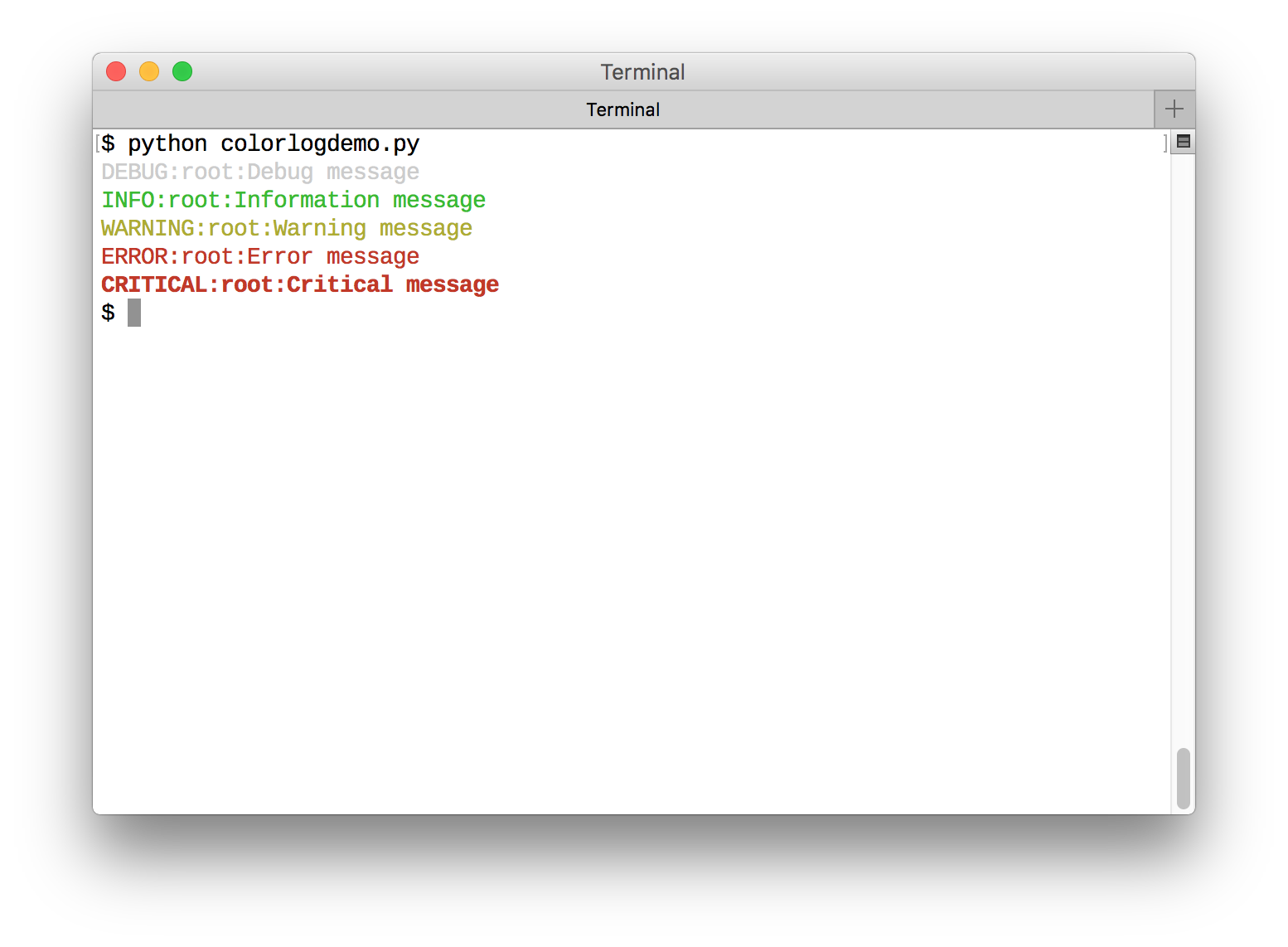

The preceding example is clear enough to follow, but I would be a sorry author if I—after having emphasized the benefits of the logging module—told you that the only way to get colors into your console was to use print(). As usual with Python, it turns out that the hard work has already been done for us. After installing the colorlog package,6 you can use colors in your log messages immediately:

importcolorloglogger=colorlog.getLogger()logger.setLevel(colorlog.colorlog.logging.DEBUG)handler=colorlog.StreamHandler()handler.setFormatter(colorlog.ColoredFormatter())logger.addHandler(handler)logger.debug("Debug message")logger.info("Information message")logger.warning("Warning message")logger.error("Error message")logger.critical("Critical message")

![1]()

-

Obtain a

loggerinstance, exactly as you would normally do. ![2]()

-

Set the logging level. You can also use the constants like

DEBUGandINFOfrom the logging module directly. ![3]()

-

Set the message formatter to be the

ColoredFormatterprovided by thecolorloglibrary.

This produces the output shown in Figure 1-3.

There are several other similar Python packages worth watching, such as the fabulous command-line beautifier, which would have made this list if it had been updated for Python 3 just a few weeks sooner!

Figure 1-3. Beautiful and automatic colors for your logging messages.

Figure 1-3. Beautiful and automatic colors for your logging messages.

begins

As far as user-interfaces go, most Python programs start out as command-line applications, and many remain so. It makes sense to offer your users the very best experience you can. For such programs, options are specified with command-line arguments, and the Python standard library offers theargparse library to help with that.

argparse is a robust, solid implementation for command-line processing, but it is somewhat verbose to use. For example, here we have an extremely simple script that will add two numbers passed on the command line:

importargparsedefmain(a,b):""" Short script to add two numbers """returna+bif__name__=='__main__':parser=argparse.ArgumentParser(description="Add two numbers")parser.add_argument('-a',help='First value',type=float,default=0)parser.add_argument('-b',help='Second value',type=float,default=0)args=parser.parse_args()(main(args.a,args.b))

As one would expect, help can be obtained by passing -h to this program:

$python argparsedemo.py -h usage: argparsedemo.py[-h][-a A][-b B]Add two numbers optional arguments: -h, --help show thishelpmessage andexit-a A First value -b B Second value

In contrast, the begins library takes a machete to the API of argparse and maximally exploits features of the Python language to simplify setting up the same command-line interface:

importbegin@begin.start(auto_convert=True)defmain(a:'First value'=0.0,b:'Second value'=0.0):""" Add two numbers """(a+b)

There is so much happening in so few lines, yet everything is still explicit:

-

Each parameter in the main function becomes a command-line argument.

-

The function annotations are exploited to provide an inline help description of each parameter.

-

The default value of each parameter (here,

0.0) is used both as a default value, as well as to indicate the required datatype (in this case, afloatnumber value). -

The

auto_convert=Trueis used to enforce type coercion from a string to the target parameter type. -

The docstring for the function now becomes the help documentation of the program itself.

Also, you may have noticed that this example lacks the usual if __name__ == '__main__' boilerplate: that’s because begins is smart enough to work with Python’s stack frames so that your target function becomes the starting point.

For completeness, here is the help for the begins-version, produced with -h:

$python beginsdemo.py -h usage: beginsdemo.py[-h][--a A][--b B]Add two numbers optional arguments: -h, --help show thishelpmessage andexit--a A, -a A First value(default: 0.0)--b B, -b B Second value(default: 0.0)

There are a bunch more tricks that begins makes available, and you are encouraged to read the documentation; for instance, if the parameters are words:

@begin.startdefmain(directory:'Target dir'):...

then both -d VALUE and --directory VALUE will work for specifying the value of the directory parameter on the command line. A sequence of positional arguments of unknown length is easily defined with unpacking:

#demo.py@begin.startdefmain(directory:'Target dir',*filters):...

When called with:

$pythondemo.py-d/home/usertmptempTEMP

the filters argument would be the list ['tmp', 'temp', 'TEMP'].

If that were all that begins supported, it would already be sufficient for the vast majority of simple programs; however, begins also provides support for subcommands:

importbegin@begin.subcommanddefstatus(compact:'Short or long format'=True):""" Report the current status. """ifcompact:('ok.')else:('Very well, thank-you.')@begin.subcommanddeffetch(url:'Data source'='http://google.com'):""" Fetch data from the URL. """('Work goes here')@begin.start(auto_convert=True)defmain(a:'First value'=0.0,b:'Second value'=0.0):""" Add two numbers """(a+b)

The main function is the same as before, but we’ve added two subcommands:

-

The first,

status, represents a subcommand that could be used to provide some kind of system status message (think "git status"). -

The second,

fetch, represents a subcommand for some kind of work function (think "git fetch").

Each subcommand has its own set of parameters, and the rules work in the same way as before with the main function. For instance, observe the updated help (obtained with the -h parameter) for the program:

$python beginssubdemo.py -h usage: beginssubdemo.py[-h][--a A][--b B]{fetch,status}... Add two numbers optional arguments: -h, --help show thishelpmessage andexit--a A, -a A First value(default: 0.0)--b B, -b B Second value(default: 0.0)Available subcommands:{fetch,status}fetch Fetch data from the URL. status Report the current status.

We still have the same documentation for the main program, but now additional help for the subcommands have been added. Note that the function docstrings for status and fetch have also been recycled into CLI help descriptions. Here is an example of how our program might be called:

$python beginssubdemo.py -a7-b7status --compact 14.0 ok.$python beginssubdemo.py -a7-b7status --no-compact 14.0 Very well, thank-you.

You can also see how boolean parameters get some special treatment: they evaluate True if present and False when no- is prefixed to the parameter name, as shown for the parameter compact.

begins has even more tricks up its sleeve, such as automatic handling forenvironment variables, config files, error handling, and logging, and I once again urge you to check out the project documentation.

If begins seems too extreme for you, there are a bunch of other tools for easing the creation of command-line interfaces. One particular option that has been growing in popularity is click.

Graphical User Interfaces

Python offers a wealth of options for creating graphical user interfaces (GUIs) including PyQt, wxPython, and tkinter, which is also available directly in the standard library. In this chapter we will describe two significant, but largely undiscovered, additions to the lineup. The first, pyqtgraph, is much more than simply a chart-plotting library, while pywebview gives you a full-featured web-technology interface for your desktop Python applications.

pyqtgraph

The most popular chart-plotting library in Python is matplotlib, but you may not yet have heard of the wonderful alternative, pyqtgraph. Pyqtgraph is not a one-to-one replacement for matplotlib; rather, it offers a different selection of features and in particular is excellent for real-time and interactive visualization.

It is very easy to get an idea of what pyqtgraph offers: simply run the built-in examples application:

$pip install pyqtgraph$python -m pyqtgraph.examples

This will install and run the examples browser. You can see a screenshot of one of the examples in Figure 1-4.

Figure 1-4. Interactive pyqtgraph window.

Figure 1-4. Interactive pyqtgraph window.

This example shows an array of interactive plots. You can’t tell from the screenshot, but when you run this example, the yellow chart on the right is a high-speed animation. Each plot, including the animated one (in yellow), can be panned and scaled in real-time with your cursor. If you’ve used Python GUI frameworks in the past, and in particular ones with free-form graphics, you would probably expect sluggish performance. This is not the case with pyqtgraph: because pyqtgraph uses, unsurprisingly, PyQt for the UI. This allows pyqtgraph itself to be a pure-python package, making installation and distribution easy.7

By default, the style configuration for graphs in pyqtgraph uses a black background with a white foreground. In Figure 1-4, I sneakily used a style configuration change to reverse the colors, since dark backgrounds look terrible in print. This information is also available in the documentation:

importpyqtgraphaspg# Switch to using white background and black foregroundpg.setConfigOption('background','w')pg.setConfigOption('foreground','k')

There is more on offer than simply plotting graphs: pyqtgraph also has features for 3D visualization, widget docking, and automatic data widgets with two-way binding. In Figure 1-5, the data-entry and manipulation widgets were generated automatically from a Numpy table array, and UI interaction with these widgets changes the graph automatically.

pyqtgraph is typically used as a direct visualizer, much like howmatplotlib is used, but with better interactivity. However, it is also quite easy to embed pyqtgraph into another separate PyQt application and the documentation for this is easy to follow. pyqtgraph also provides a few useful extras, like edit widgets that are units-aware (kilograms, meters and so on), and tree widgets that can be built automatically from (nested!) standard Python data structures like lists, dictionaries, and arrays.

The author of pyqtgraph is now working with the authors of other visualization packages on a new high-performance visualization package:vispy. Based on how useful pyqtgraph has been for me, I’ve no doubt that vispy is likely to become another indispensable tool for data visualization.

Figure 1-5. Another pyqtgraph example: the data fields and widgets on the left are automatically created from a Numpy table array.

Figure 1-5. Another pyqtgraph example: the data fields and widgets on the left are automatically created from a Numpy table array.

pywebview

There are a huge number of ways to make desktop GUI applications with Python, but in recent years the idea of using a browser-like interface as a desktop client interface has become popular. This approach is based around tools like cefpython (using the Chrome-embedded framework) andElectron, which has been used to build many popular tools such as the Atom text editor and Slack social messaging application.

Usually, such an approach involves bundling a browser engine with your application, but Pywebview gives you a one-line command to create a GUI window that wraps a system native "web view" window. By combining this with a Python web abb like Flask or Bottle, it becomes very easy to create a local application with a GUI, while also taking advantage of the latest GUI technologies that have been developed in the browser space. The benefit to using the system native browser widget is that you don’t have to distribute a potentially large application bundle to your users.

Let’s begin with an example. Since our application will be built up as a web-page template in HTML, it might be interesting to use a Python tool to build the HTML rather than writing it out by hand.

fromstringimportascii_lettersfromrandomimportchoice,randintimportwebviewimportdominatefromdominate.tagsimport*frombottleimportroute,run,templatebootstrap='https://maxcdn.bootstrapcdn.com/bootstrap/3.3.6/'bootswatch='https://maxcdn.bootstrapcdn.com/bootswatch/3.3.6/'doc=dominate.document()withdoc.head:link(rel='stylesheet',href=bootstrap+'css/bootstrap.min.css')link(rel='stylesheet',href=bootswatch+'paper/bootstrap.min.css')script(src='https://code.jquery.com/jquery-2.1.1.min.js')[script(src=bootstrap+'js/'+x)forxin['bootstrap.min.js','bootstrap-dropdown.js']]withdoc:withdiv(cls='container'):withh1():span(cls='glyphicon glyphicon-map-marker')span('My Heading')withdiv(cls='row'):withdiv(cls='col-sm-6'):p('{{body}}')withdiv(cls='col-sm-6'):p('Evaluate an expression:')input_(id='expression',type='text')button('Evaluate',cls='btn btn-primary')div(style='margin-top: 10px;')withdiv(cls='dropdown'):withbutton(cls="btn btn-default dropdown-toggle",type="button",data_toggle='dropdown'):span('Dropdown')span(cls='caret')items=('Action','Another action','Yet another action')ul((li(a(x,href='#'))forxinitems),cls='dropdown-menu')withdiv(cls='row'):h3('Progress:')withdiv(cls='progress'):withdiv(cls='progress-bar',role='progressbar',style='width: 60%;'):span('60%')withdiv(cls='row'):forvidin['4vuW6tQ0218','wZZ7oFKsKzY','NfnMJMkhDoQ']:withdiv(cls='col-sm-4'):withdiv(cls='embed-responsive embed-responsive-16by9'):iframe(cls='embed-responsive-item',src='https://www.youtube.com/embed/'+vid,frameborder='0')@route('/')defroot():word=lambda:''.join(choice(ascii_letters)foriinrange(randint(2,10)))nih_lorem=''.join(word()foriinrange(50))returntemplate(str(doc),body=nih_lorem)if__name__=='__main__':importthreadingthread=threading.Thread(target=run,kwargs=dict(host='localhost',port=8080),daemon=True)thread.start()webview.create_window("Not a browser, honest!","http://localhost:8080",width=800,height=600,resizable=False)

![1]()

-

webview, the star of the show! ![2]()

-

The other star of the show.

dominatelets you create HTML with a series of nested context handlers. ![3]()

-

The third star of the show! The

bottleframework provides a very simple interface for building a basic web app with templates and routing. ![4]()

-

Building up HTML in Python has the tremendous advantage of using all the syntax tools the language has to offer.

![5]()

-

By using Bootstrap, one of the oldest and most robust front-end frameworks, we get access to fun things like glyph icons.

![6]()

-

Template variables can still be entered into our HTML and substituted later using the web framework’s tools.

![7]()

-

To demonstrate that we really do have Bootstrap, here is a progress bar widget. With

pywebview, you can, of course, use any Bootstrap widget, and indeed any other tools that would normally be used in web browser user interfaces. ![8]()

-

I’ve embedded a few fun videos from YouTube.

Running this program produces a great-looking interface, as shown in Figure 1-6.

Figure 1-6. A beautiful and pleasing interface with very little effort.

Figure 1-6. A beautiful and pleasing interface with very little effort.

For this demonstration, I’ve used the Paper Bootstrap theme, but by changing a single word you can use an entirely different one! The Superhero Bootstrap theme is shown in Figure 1-7.

Figure 1-7. The same application, but using the Superhero theme from Bootswatch.

Figure 1-7. The same application, but using the Superhero theme from Bootswatch.

Note

In the preceding example we also used the dominate library, which is a handy utility for generating HTML procedurally. It’s not only for simple tasks: this demo shows how it can handle Bootstrap attributes and widgets quite successfully. dominate works with nested context managers to manage the scope of HTML tags, while HTML attributes are assigned with keyword arguments.

For the kind of application that Pywebview is aimed at, it would be very interesting to think about how dominate could be used to make an abstraction that completely hides browser technologies like HTML and JavaScript, and lets you create (e.g., button-click handlers) entirely in Python without worrying about the intermediate event processing within the JavaScript engine. This technique is already explored in existing tools like flexx.

System Tools

Python is heavily used to make tools that work closely with the operating system, and it should be no surprise to discover that there are excellent libraries waiting to be discovered. The psutil library gives you all the access to operating-system and process information you could possibly hope for, while the Watchdog library gives you hooks into system file-event notifications. Finally, we close out this chapter with a look at ptpython, which gives you a significantly enriched interactive Python prompt.

psutil

Psutil provides complete access to system information. Here’s a simple example of a basic report for CPU load sampled over 5 seconds:

importpsutilcpu=psutil.cpu_percent(interval=5,percpu=True)(cpu)

Output:

[21.4,1.2,18.0,1.4,15.6,1.8,17.4,1.6]

It produces one value for each logical CPU: on my computer, eight. The rest of the psutil API is as simple and clean as this example shows, and the API also provides access to memory, disk, and network information. It is very extensive.

There is also detailed information about processes. To demonstrate, here is a program that monitors its own memory consumption and throws in the towel when a limit is reached:

importpsutilimportos,sys,timepid=os.getpid()p=psutil.Process(pid)('Process info:')('name :',p.name())('exe :',p.exe())data=[]whileTrue:data+=list(range(100000))info=p.memory_full_info()# Convert to MBmemory=info.uss/1024/1024('Memory used: {:.2f} MB'.format(memory))ifmemory>40:('Memory too big! Exiting.')sys.exit()time.sleep(1)

![1]()

-

The

osmodule in the standard library provides our process ID (PID). ![2]()

-

psutilconstructs aProcess()object based on the PID. In this case the PID is our own, but it could be any running process on the system. ![3]()

-

This looks bad: an infinite loop and an ever-growing list!

Output:

Processinfo:name:Pythonexe:/usr/local/Cellar/.../PythonMemoryused:11.82MBMemoryused:14.91MBMemoryused:18.77MBMemoryused:22.63MBMemoryused:26.48MBMemoryused:30.34MBMemoryused:34.19MBMemoryused:38.05MBMemoryused:41.90MBMemorytoobig!Exiting.

![1]()

-

The full path has been shortened here to improve the appearance of the snippet. There are many more methods besides

nameandexeavailable onpsutil.Process()instances.

The type of memory shown here is the unique set size, which is the real memory released when that process terminates. The reporting of the unique set size is a new feature in version 4 of psutil, which also works on Windows.

There is an extensive set of process properties you can interrogate withpsutil, and I encourage you to read the documentation. Once you begin using psutil, you will discover more and more scenarios in which it can make sense to capture process properties. For example, it might be useful, depending on your application, to capture process information inside exception handlers so that your error logging can include information about CPU and memory load.

Watchdog

Watchdog is a high-quality, cross-platform library for receiving notifications of changes in the file system. Such file-system notifications is a fundamental requirement in many automation applications, and Watchdog handles all the low-level and cross-platform details of notifications for system events. And, the great thing about Watchdog is that it doesn’t use polling.

The problem with polling is that having many such processes running can sometimes consume more resources than you may be willing to part with, particularly on lower-spec systems like the Raspberry Pi. By using the native notification systems on each platform, the operating system tells you immediately when something has changed, rather than you having to ask. On Linux, the inotify API is used; on Mac OS X, either kqueue or FSEvents are used; and on Windows, the ReadDirectoryChangesW API is used. Watchdog allows you to write cross-platform code and not worry too much about how the sausage is made.

Watchdog has a mature API, but one way to get immediate use out of it is to use the included watchmedo command-line utility to run a shell command when something changes. Here are a few ideas for inspiration:

-

Compiling template and markup languages:

# Compile Jade template language into HTML$watchmedoshell-command\--patterns="*.jade"\--command='pyjade -c jinja "${watch_src_path}"'\--ignore-directories# Convert an asciidoc to HTML$watchmedoshell-command\--patterns="*.asciidoc"\--command='asciidoctor "${watch_src_path}"'\--ignore-directories

![1]()

-

Watchdog will substitute the name of the specific changed file using this template name.

-

Maintenance tasks, like backups or mirroring:

-

# Synchronize files to a server$watchmedo shell-command\--patterns="*"\--command='rsync -avz mydir/ host:/home/ubuntu"'

-

Convenience tasks, like automatically running formatters, linters, and tests:

# Automatically format Python source code to PEP8(!)$watchmedo shell-command\--patterns="*.py"\--command='pyfmt -i "${watch_src_path}"'\--ignore-directories

-

Calling API endpoints on the Web, or even sending emails and SMS messages:

# Mail a file every time it changes!$watchmedo shell-command\--patterns="*"\--command='cat "${watch_src_path}" | ↲mail -s "New version" me@domain.com'\--ignore-directories

The API of Watchdog as a library is fairly similar to the command-line interface introduced earlier. There are the usual quirks with thread-based programming that you have to be aware of, but the typical idioms are sufficient:

fromwatchdog.observersimportObserverfromwatchdog.eventsimport(PatternMatchingEventHandler,FileModifiedEvent,FileCreatedEvent)observer=Observer()classHandler(PatternMatchingEventHandler):defon_created(self,event:FileCreatedEvent):('File Created:',event.src_path)defon_modified(self,event:FileModifiedEvent):('File Modified:%s[%s]'%(event.src_path,event.event_type))observer.schedule(event_handler=Handler('*'),path='.')observer.daemon=Falseobserver.start()try:observer.join()exceptKeyboardInterrupt:('Stopped.')observer.stop()observer.join()

![1]()

-

Create an observer instance.

![2]()

-

You have to subclass one of the handler classes, and override the methods for events that you want to process. Here I’ve implemented handlers for creation and modification, but there are several other methods you can supply, as explained in the documentation.

![3]()

-

You schedule the event handler, and tell

watchdogwhat it should be watching. In this case I’ve asked for notifications on all files (*) n the current directory (.). ![4]()

-

Watchdogruns in a separate thread. By calling thejoin()method, you can force the program flow to block at this point.

With that code running, I cunningly executed a few of these:

$touch secrets.txt$touch secrets.txt$touch secrets.txt[etc]

This is the console output from the running Watchdog demo:

FileCreated:./secrets.txtFileModified:.[modified]FileModified:./secrets.txt[modified]FileModified:./secrets.txt[modified]FileModified:./secrets.txt[modified]FileModified:./secrets.txt[modified]Stopped.Processfinishedwithexitcode0

As we can see, Watchdog saw all the modifications I made to a file in the directory being watched.

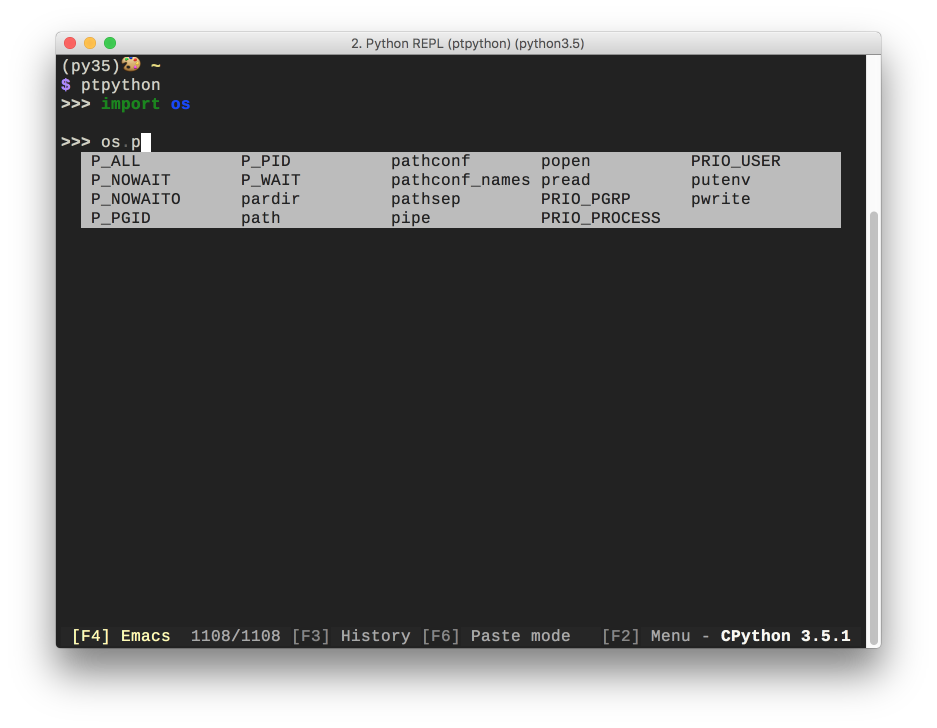

ptpython

ptpython is an alternative interpreter interface, offering a beautiful interactive Python experience. As such, ptpython is more like a tool than a library to include in your own projects, but what a tool! Figure 1-8 shows a screenshot showing the basic user interface.

When you need to work with blocks in the interpreter, like classes and functions, you will find that accessing the command history is much more convenient than the standard interpreter interface: when you scroll to a previous command, the entire block is shown, not only single lines, as shown in Figure 1-9.

Here, I retrieved the code for the function declaration by pressing the up arrow (or CTRL-p) and the entire block is shown, rather than having to scroll through lines separately.

The additional features include vi and Emacs keys, theming support, docstring hinting, and input validation, which checks a command (or block) for syntax errors before allowing evaluation. To see the full set of configurable options, press F2, as seen in Figure 1-10.

Figure 1-8. The code-completion suggestions pop up automatically as you type.

Figure 1-8. The code-completion suggestions pop up automatically as you type. Figure 1-9. When you scroll to previous entries, entire blocks are suggested (e.g., function f()), not only single lines.

Figure 1-9. When you scroll to previous entries, entire blocks are suggested (e.g., function f()), not only single lines. Figure 1-10. The settings menu accessible via F2.

Figure 1-10. The settings menu accessible via F2.

On the command line, ptpython offers highly productive features that are sure to enhance your workflow. I have found that ptpython is the fastest way to quickly test some syntax or a library call, without having to open an editor or integrated development environment (IDE). And if you prefer IPython, you’ll be glad to hear that ptpython includes an integration which you can launch with ptipython. This makes available the shell integration that IPython offers, as well as its wealth of magic commands.

Web APIs with hug

Python is famously used in a large number of web frameworks, and an extension of this area is the web services domain in which APIs are exposed for other users’ programs to consume. In this domain, Django REST framework and Flask are very popular choices, but you may not yet have heard of hug.

hug is a library that provides an extremely simple way to create Internet APIs for web services. By exploiting some of the language features of Python, much of the usual boilerplate normally required when creating APIs for web services is removed.

Here is a small web service that converts between the hex value of a color and its CSS3 name:

importhugimportwebcolors@hug.get()defhextoname(hex:hug.types.text):returnwebcolors.hex_to_name('#'+hex)@hug.get()defnametohex(name:hug.types.text):returnwebcolors.name_to_hex(name)

Note

We are also using the webcolors library here: your one-stop shop for converting web-safe colors between various formats like name, hex, and rgb. Don’t forget that you can also convert between rgb and other formats like hsl with the colorsys library that’s already included in the Python standard library!

In the hug example, we’ve done little more than wrap two functions from the webcolors package: one to convert from hex to name and one to do the opposite. It’s hard to believe at first, but those @hug.get decorators are all you need to start a basic API. The server is launched by using the included hug command-line tool (Figure 1-11).

Figure 1-11. Hug goes a long way to make you feel welcome.

Figure 1-11. Hug goes a long way to make you feel welcome.

And that’s it! We can immediately test our API. You could use a web browser for this, but it’s easy enough to use a tool like cURL. You call the URL endpoint with the correct parameters, and hug returns the answer:

$curlhttp://localhost:8000/hextoname?hex=ff0000"red"$curlhttp://localhost:8000/nametohex?name=lightskyblue"#87cefa"

![1]()

-

The function name was

hextoname, and the parameter name washex. Note that we don’t supply the hash,#, as part of the request data because it interferes with the parsing of the HTTP request. ![2]()

-

This API has a different endpoint function,

nametohex, and its parameter is calledname.

It’s quite impressive to get a live web API up with so little work. And the features don’t stop there: hug automatically generates API documentation, which is what you get by omitting an endpoint:

$curl http://localhost:8000/{"404":"The API call you tried to make was not defined. Here'sa definition of the API to help you get going :)","documentation":{"handlers":{"/hextoname":{"GET":{"outputs":{"format":"JSON (Javascript SerializedObject Notation)","content_type":"application/json"},"inputs":{"hex":{"type":"Basic text / string value"}}}},"/nametohex":{"GET":{"outputs":{"format":"JSON (Javascript SerializedObject Notation)","content_type":"application/json"},"inputs":{"name":{"type":"Basic text / string value"}}}}}}}

The types of the parameters we specified with hug.types.text are used not only for the documentation, but also for type conversions.

If these were all the features provided by hug, it would already be enough for many API tasks; however, one of hug’s best features is that it automatically handles versions. Consider the following example:

importhugimportinflectengine=inflect.engine()@hug.get(versions=1)defsingular(word:hug.types.text):""" Return the singular version of the word"""returnengine.singular_noun(word).lower()@hug.get(versions=1)defplural(word:hug.types.text):""" Return the plural version of the word"""returnengine.plural(word).lower()@hug.get(versions=2)defsingular(word:hug.types.text):""" Return the singular of word, preserving case """returnengine.singular_noun(word)@hug.get(versions=2)defplural(word:hug.types.text):""" Return the plural of word, preserving case """returnengine.plural(word)

![1]()

-

This new

hugAPI wraps theinflectpackage, which provides tools for word manipulation. ![2]()

-

The first version of the API returns lowercased results.

![3]()

-

The second, newer version of the same API returns the result as calculated by

inflect, without any alteration.

Note

We are also using the inflect library, which makes an enormous number of word transformations possible, including pluralization, gender transformation of pronouns, counting ("was no error" versus "were no errors"), correct article selection ("a," "an," and "the"), Roman numerals, and many more!

Imagine that we’ve created this web service to provide an API to transform words into their singular or plural version: one apple, many apples, and so on. For some reason that now escapes us, in the first release we lowercased the results before returning them:

$curlhttp://localhost:8000/v1/singular/?word=Silly%20Walks"silly walk"$curlhttp://localhost:8000/v1/plural?word=Crisis"crises"

It didn’t occur to us that some users may prefer to have the case of input words preserved: for example, if a word at the beginning of a sentence is being altered, it would be best to preserve the capitalization. Fortunately, the inflect library already does this by default, and hug provides versioning that allows us to provide a second version of the API (so as not to hurt existing users who may expect the lower-case transformation):

$curl http://localhost:8000/v2/singular/?word=Silly%20Walks"Silly Walk"$curl http://localhost:8000/v2/plural/?word=Crisis"Crises"

These API calls use version 2 of our web service. And finally, the documentation is also versioned, and for this example, you can see how the function docstrings are also incorporated as usage text:

$curlhttp://localhost:8000/v2/{"404":"The API call you tried to make was not defined. Here's a definition of the API to help you get going :)","documentation":{"version":2,"versions":[1,2],"handlers":{"/singular":{"GET":{"usage":" Return the singular of word, preserving case ","outputs":{"format":"JSON (Javascript Serialized Object Notation)","content_type":"application/json"},"inputs":{"word":{"type":"Basic text / string value"}}}},[snip...]

![1]()

-

Each version has separate documentation, and calling that version endpoint, i.e.,

v2, returns the documentation for that version. ![2]()

-

The docstring of each function is reused as the usage text for that API call.

hug has extensive documentation in which there are even more features to discover. With the exploding interest in internet-of-things applications, it is likely that simple and powerful libraries like hug will enjoy widespread popularity.

Dates and Times

Many Python users cite two major pain points: the first is packaging, and for this we covered flit in an earlier chapter. The second is working with dates and times. In this chapter we cover two libraries that will make an enormous difference in how you deal with temporal matters. arrow is a reimagined library for working with datetime objects in which timezones are always present, which helps to minimize a large class of errors that new Python programmers encounter frequently. parsedatetime is a library that lets your code parse natural-language inputs for dates and times, which can make it vastly easier for your users to provide such information.

arrow

Though opinions differ, there are those who have found the standard library’s datetime module confusing to use. The module documentation itself in the first few paragraphs explains why: it provides both naive and aware objects to represent dates and times. The naive ones are the source of confusion, because application developers increasingly find themselves working on projects in which timezones are critically important. The dramatic rise of cloud infrastructure and software-as-a-service applications have contributed to the reality that your application will frequently run in a different timezone (e.g., on a server) than where developers are located, and different again to where your users are located.

The naive datetime objects are the ones you typically see in demos and tutorials:

importdatetimedt=datetime.datetime.now()dt_utc=datetime.datetime.utcnow()difference=(dt-dt_utc).total_seconds()('Total difference:%.2fseconds'%difference)

This code could not be any simpler: we create two datetime objects, and calculate the difference. The problem is that we probably intended both now() and utcnow() to mean now as in "this moment in time," but perhaps in different timezones. When the difference is calculated, we get what seems an absurdly large result:

Totaldifference:36000.00seconds

The difference is, in fact, the timezone difference between my current location and UTC: +10 hours. This result is the worst kind of "incorrect" because it is, in fact, correct if timezones are not taken into account; and here lies the root of the problem: a misunderstanding of what the functions actually do. The now() and utcnow() functions do return the current time in local and UTC timezones, but unfortunately both results are naive datetime objects and therefore lack timezone data.

Note that it is possible to create datetime objects with timezone data attached, albeit in a subjectively cumbersome manner: you create an awaredatetime by setting the tzinfo attribute to the correct timezone. In our example, we could (and should!) have created a current datetime object in the following way, by passing the timezone into the now() function:

dt=datetime.datetime.now(tz=datetime.timezone.utc)

Of course, if you make this change on line 3 and try to rerun the code, the following error appears:

TypeError: can't subtract offset-naive and

offset-aware datetimes

This happens because even though the utcnow() function produces adatetime for the UTC timezone, the result is a naive datetime object, and Python prevents you from mixing naive and aware datetime objects. If you are suddenly scared to work with dates and times, that’s an understandable response, but I’d rather encourage you to instead be afraid of datetimes that lack timezone data. The TypeError just shown is the kind of error we really do want: it forces us to ensure that all our datetimeinstances are aware.

Naturally, to handle this in the general case requires some kind of database of timezones. Unfortunately, except for UTC, the Python standard library does not include timezone data; instead, the recommendation is to use a separately maintained library called pytz, and if you need to work with dates and times, I strongly encourage you to investigate that library in more detail.

Now we can finally delve into the main topic of this section, which isarrow: a third-party library that offers a much simpler API for working with dates and times. With arrow, everything has a timezone attached (and is thus "aware"):

importarrowt0=arrow.now()(t0)t1=arrow.utcnow()(t1)difference=(t0-t1).total_seconds()('Total difference:%.2fseconds'%difference)

Output:

2016-06-26T18:43:55.328561+10:00 2016-06-26T08:43:55.328630+00:00 Total difference: -0.00 seconds

As you can see, the now() function produces the current date and time with my local timezone attached (UTC+10), while the utcnow() function also produces the current date and time, but with the UTC timezone attached. As a consequence, the actual difference between the two times is as it should be: zero.

And the delightful arrow library just gets better from there. The objects themselves have convenient attributes and methods you would expect:

>>>t0=arrow.now()>>>t0<Arrow[2016-06-26T18:59:07.691803+10:00]>>>>t0.date()datetime.date(2016,6,26)>>>t0.time()datetime.time(18,59,7,691803)>>>t0.timestamp1466931547>>>t0.year2016>>>t0.month6>>>t0.day26>>>t0.datetimedatetime.datetime(2016,6,26,18,59,7,691803,tzinfo=tzlocal())

Note that the datetime produced from the same attribute correctly carries the essential timezone information.

There are several other features of the module that you can read more about in the documentation, but this last feature provides an appropriate segue to the next section:

>>>t0=arrow.now()>>>t0.humanize()'just now'>>>t0.humanize()'seconds ago'>>>t0=t0.replace(hours=-3,minutes=10)>>>t0.humanize()'2 hours ago'

The humanization even has built-in support for several locales!

>>> t0.humanize(locale='ko') '2시간 전' >>> t0.humanize(locale='ja') '2時間前' >>> t0.humanize(locale='hu') '2 órával ezelőtt' >>> t0.humanize(locale='de') 'vor 2 Stunden' >>> t0.humanize(locale='fr') 'il y a 2 heures' >>> t0.humanize(locale='el') '2 ώρες πριν' >>> t0.humanize(locale='hi') '2 घंटे पहले' >>> t0.humanize(locale='zh') '2小时前'

Tip

Alternative libraries for dates and times

There are several other excellent libraries for dealing with dates and times in Python, and it would be worth checking out both Delorean and the very new Pendulum library.

parsedatetime

parsedatetime is a wonderful library with a dedicated focus: parsing text into dates and times. As you’d expect, it can be obtained with pip install parsedatetime. The official documentation is very API-like, which makes it harder than it should be to get a quick overview of what the library offers, but you can get a pretty good idea of what’s available by browsing the extensive test suite.

The minimum you should expect of a datetime-parsing library is to handle the more common formats, and the following code sample demonstrates this:

importparsedatetimeaspdtcal=pdt.Calendar()examples=["2016-07-16","2016/07/16","2016-7-16","2016/7/16","07-16-2016","7-16-2016","7-16-16","7/16/16",]('{:30s}{:>30s}'.format('Input','Result'))('='*60)foreinexamples:dt,result=cal.parseDT(e)('{:<30s}{:>30}'.format('"'+e+'"',dt.ctime()))

This produces the following, and unsurprising, output:

InputResult============================================================"2016-07-16"Sat Jul1616:25:20 2016"2016/07/16"Sat Jul1616:25:20 2016"2016-7-16"Sat Jul1616:25:20 2016"2016/7/16"Sat Jul1616:25:20 2016"07-16-2016"Sat Jul1616:25:20 2016"7-16-2016"Sat Jul1616:25:20 2016"7-16-16"Sat Jul1616:25:20 2016"7/16/16"Sat Jul1616:25:20 2016

By default, if the year is given last, then month-day-year is assumed, and the library also conveniently handles the presence or absence of leading zeros, as well as whether hyphens (-) or slashes (/) are used as delimiters.

Significantly more impressive, however, is how parsedatetime handles more complicated, "natural language" inputs:

importparsedatetimeaspdtfromdatetimeimportdatetimecal=pdt.Calendar()examples=["19 November 1975","19 November 75","19 Nov 75","tomorrow","yesterday","10 minutes from now","the first of January, 2001","3 days ago","in four days' time","two weeks from now","three months ago","2 weeks and 3 days in the future",]('Now: {}'.format(datetime.now().ctime()),end='\n\n')('{:40s}{:>30s}'.format('Input','Result'))('='*70)foreinexamples:dt,result=cal.parseDT(e)('{:<40s}{:>30}'.format('"'+e+'"',dt.ctime()))

Incredibly, this all works just as you’d hope:

Now: Mon Jun2008:41:38 2016 InputResult================================================================"19 November 1975"Wed Nov1908:41:38 1975"19 November 75"Wed Nov1908:41:38 1975"19 Nov 75"Wed Nov1908:41:38 1975"tomorrow"Tue Jun2109:00:00 2016"yesterday"Sun Jun1909:00:00 2016"10 minutes from now"Mon Jun2008:51:38 2016"the first of January, 2001"Mon Jan108:41:38 2001"3 days ago"Fri Jun1708:41:38 2016"in four days' time"Fri Jun2408:41:38 2016"two weeks from now"Mon Jul408:41:38 2016"three months ago"Sun Mar2008:41:38 2016"2 weeks and 3 days in the future"Thu Jul708:41:38 2016

The urge to combine this with a speech-to-text package likeSpeechRecognition or watson-word-watcher (which provides confidence values per word) is almost irresistible, but of course you don’t need complex projects to make use of parsedatetime: even allowing a user to type in a friendly and natural description of a date or time interval might be much more convenient than the usual but frequently clumsy DateTimePicker widgets we’ve become accustomed to.

Note

Another library featuring excellent datetime parsing abilities is Chronyk.

General-Purpose Libraries

In this chapter we take a look at a few batteries that have not yet been included in the Python standard library, but which would make excellent additions.

General-purpose libraries are quite rare in the Python world because the standard library covers most areas sufficiently well that library authors usually focus on very specific areas. Here we discuss boltons (a play on the word builtins), which provides are large number of useful additions to the standard library. We also cover the Cython library, which provides facilities for both massively speeding up Python code, as well as bypassing Python’s famous global interpreter lock (GIL) to enable true multi-CPU multi-threading.

boltons

The boltons library is a general-purpose collection of Python modules that covers a wide range of situations you may encounter. The library is well-maintained and high-quality; it’s well worth adding to your toolset.

As a general-purpose library, boltons does not have a specific focus. Instead, it contains several smaller libraries that focus on specific areas. In this section I will describe a few of these libraries that boltons offers.

boltons.cacheutils

boltons.cacheutils provides tools for using a cache inside your code. Caches are very useful for saving the results of expensive operations and reusing those previously calculated results.

The functools module in the standard library already provides a decorator called lru_cache, which can be used to memoize calls: this means that the function remembers the parameters from previous calls, and when the same parameter values appear in a new call, the previous answer is returned directly, bypassing any calculation.

boltons provides similar caching functionality, but with a few convenient tweaks. Consider the following sample, in which we attempt to rewrite some lyrics from Taylor Swift’s 1989 juggernaut record. We will use tools from boltons.cacheutils to speed up processing time:

importjsonimportshelveimportatexitfromrandomimportchoicefromstringimportpunctuationfromvocabularyimportVocabularyasvbblank_space="""Nice to meet you, where you been?I could show you incredible thingsMagic, madness, heaven, sinSaw you there and I thoughtOh my God, look at that faceYou look like my next mistakeLove's a game, wanna play?New money, suit and tieI can read you like a magazineAin't it funny, rumors flyAnd I know you heard about meSo hey, let's be friendsI'm dying to see how this one endsGrab your passport and my handI can make the bad guys good for a weekend"""fromboltons.cacheutilsimportLRI,LRU,cached# Persistent LRU cache for the parts of speechcached_data=shelve.open('cached_data',writeback=True)atexit.register(cached_data.close)# Retrieve or create the "parts of speech" cachecache_POS=cached_data.setdefault('parts_of_speech',LRU(max_size=5000))@cached(cache_POS)defpart_of_speech(word):items=vb.part_of_speech(word.lower())ifitems:returnjson.loads(items)[0]['text']# Temporary LRI cache for word substitutionscache=LRI(max_size=30)@cached(cache)defsynonym(word):items=vb.synonym(word)ifitems:returnchoice(json.loads(items))['text']@cached(cache)defantonym(word):items=vb.antonym(word)ifitems:returnchoice(items['text'])forraw_wordinblank_space.strip().split(''):ifraw_word=='\n':(raw_word)continuealternate=raw_word# default is the original word.# Remove punctuationword=raw_word.translate({ord(x):Noneforxinpunctuation})ifpart_of_speech(word)in['noun','verb','adjective','adverb']:alternate=choice((synonym,antonym))(word)orraw_word(alternate,end='')

![1]()

-

Our code detects "parts of speech" in order to know which lyrics to change. Looking up words online is slow, so we create a small database using the

shelvemodule in the standard library to save the cache data between runs. ![2]()

-

We use the

atexitmodule, also in the standard library, to make sure that our "parts of speech" cache data will get saved when the program exits. ![3]()

-

Here we obtain the

LRUcache provided byboltons.cacheutilsthat we saved from a previous run. ![4]()

-

Here we use the

@cachedecorator provided byboltons.cacheutilsto enable caching of thepart_of_speech()function call. If thewordargument has been used in a previous call to this function, the answer will be obtained from the cache rather than a slow call to the Internet. ![5]()

-

For synonyms and antonyms, we used a different kind of cache, called a least recently inserted cache (this choice is explained later in this section). An LRI cache is not provided in the Python Standard Library.

![6]()

-

Here we restrict which kinds of words will be substituted.

Note

The excellent vocabulary package is used here to provide access to synonyms and antonyms. Install it with pip install vocabulary.

For brevity, I’ve included only the first verse and chorus. The plan is staggeringly unsophisticated: we’re going to simply swap words with either a synonym or antonym, and which is decided randomly! Iteration over the words is straightforward, but we obtain synonyms and antonyms using the vocabulary package, which internally calls APIs on the Internet to fetch the data. Naturally, this can be slow since the lookup is going to be performed for every word, and this is why a cache will be used. In fact, in this code sample we use two different kinds of caching strategies.

boltons.cacheutils offers two kinds of caches: the least recently used(LRU) version, which is the same as functools.lru_cache, and a simpler least recently inserted (LRI) version, which expires entries based on their insertion order.

In our code, we use an LRU cache to keep a record of the parts of speechlookups, and we even save this cache to disk so that it can be reused in successive runs.