From: http://balodeamit.blogspot.com/2013/10/receive-side-scaling-and-receive-packet.html

Lets cover some basic terminologies before we dig into Receive Side Scaling and Receive Packet Steering.

Network Interface Card: A network interface controller (NIC) (also known as a network interface card, network adapter) is an electronic device that connects a computer to a computer network/ Modern NIC usually comes up with speed of 1-10Gbps.

#Find your NIC speed

[root@machine1 ~]# ethtool eth0 | grep Speed

Speed: 1000Mb/s

Hardware Interrupt: Its a signal from a hardware device that is sent to the CPU when the device needs to perform an input or output operation. In other words, the device "interrupts" the CPU to tell it its attention. Once CPU is interrupted, it stops what its doing, and execute an interrupt service routine associated with that device.

Soft IRQ: This interrupt request is like hardware interrupt request but not as critical. Basically when packets arrive at NIC, an interrupt is generated to CPU so that it can stop whatever it doing, and acknowledge to NIC saying I am ready to serve you. This means taking data from NIC, copying it to kernel buffer, doing TCP/ IP processing and provide data to application stack. All this when done by interrupt request, could cause lot of latency on NIC and starvation of other devices for CPU. For this reason, the interrupt work is diving into 2 things. One where CPU will just acknowledge NIC saying I got it. At this point, the hardware interrupt will be completed and NIC will return back to what it was doing. Rest of the work of moving data up the TCP/ IP stack is put as backlog under CPU's poll queue as SoftIRQ.

Socket Buffer Pool: Its a region of RAM(kernel memory) allocated during boot up process to hold the packet data.

Rx Queues: This queue hold the socket descriptors for actual packets in socket buffer pool. These are mostly implemented as circular queues. When a packet first arrives at the network card, the device add the packet descriptor(reference) in matching Rx queue and its data into socket buffer. In modern NICs, there could be multiple queues possible which is also called as RSS (concept to distribute packet processing load across multiple processors).

Receive Side Scaling

When packets arrives at NIC, they are added to receive queue. Receive queue is assigned an IRQ number during device drive initialization and one of the available CPU processor is allocated to that receive queue. This processor is responsible for servicing IRQs interrupt service routine. Generally the data processing is also done by same processor which does ISR. Obviously, this works well, if your machine is single core. However today's machine are also multicores (MSP). In multicore system, we can see that this concept of data processing wont work well if you have large amount of network traffic. why? Because only single core is taking all responsibility of processing data. ISR routines are small so if they are being executed on single core does not make large difference in performance, but data processing and moving data up in TCP/ IP stack takes time. While this all is being done by single processor, all other processors are just idle.

Below shows "cat /proc/interrupts" output on centos5 2.6.18 kernel. Column1 shows IRQ 53 is used for "eth1-TxRx-0" mono queue. Output of "cat /proc/irq/53/smp_affinity" shows that queue was configured to send interrupts to CPU8.

Alright, so far mono queue should clear on how it keeps single processor busy while others are idle.

RSS to rescue. RSS allows to configure network card to have multiple receive and send queues. These queues are individually mapped to each CPU processor. When interrupts are generated for each queue, they are sent to mapped processor.

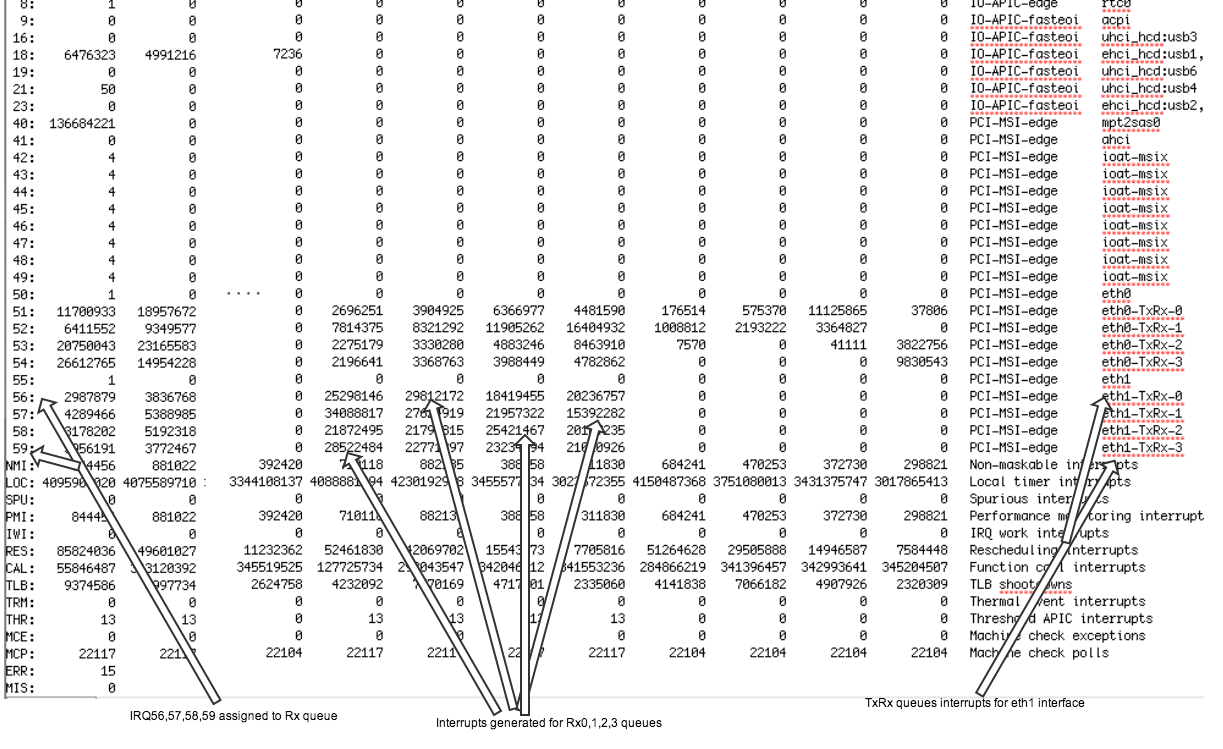

Below output shows that we have 4 receive queues ad 4 send transmit queues for eth1 interface. 56-59 are IRQ numbers assigned to those queues. Now packet processing load is being distributed amon 4 CPU processors (CPU8,9,10,11) achieving higher throughput and low latency.

RSS provides the benefits of parallel receive processing in multiprocessing environments. Receive Side Scaling is a NIC technology. It supports multiple receive queues and integrates a hashing function(distributes packets to different queues by Source and Destination IP and if applicable by TCP/UDP source and destination ports) in the NIC. The NIC computes a hash value for each incoming packet. Based on hash values, NIC assigns packets of the same data flow to a single queue and evenly distributes traffic flows across queues.

Below commands shows how to enable multiple queues on Intel igb driver

#Check Driver version

[root@machine1 ~]# ethtool -i eth1

driver: igb

version: 4.2.16

firmware-version: 2.5.5

#CPU Affinity before RSS for eth1 Rx queue:

[root@machine1 ~]$ cat /proc/interrupts | grep eth1-TxRx | awk '{print $1}' | cut -d":" -f 1 | xargs -n 1 -I {} cat /proc/irq/{}/smp_affinity

000100

#List all queues before RSS

[root@machine1 ~]# ls -l /sys/class/net/eth1/queues

total 0

drwxr-xr-x 2 root root 0 Sep 10 18:00 rx-0

drwxr-xr-x 2 root root 0 Oct 10 20:48 tx-0

[root@machine1 ~]# echo "options igb RSS=0,0" >>/etc/modprobe.d/igb.conf

#Reload igb driver and restart network

[root@machine1 ~]# /sbin/service network stop; sleep 2; /sbin/rmmod igb; sleep 2; /sbin/modprobe igb; sleep 2; /sbin/service network start;

Shutting down interface eth0: [ OK ]

Shutting down interface eth1: [ OK ]

Shutting down loopback interface: [ OK ]

Bringing up loopback interface: [ OK ]

Bringing up interface eth0:

Determining IP information for eth0... done.

[ OK ]

Bringing up interface eth1: [ OK ]

#List all queues after RSS

[root@machine1 ~]# ls -l /sys/class/net/eth1/queues

total 0

drwxr-xr-x 2 root root 0 Oct 11 00:34 rx-0

drwxr-xr-x 2 root root 0 Oct 11 00:34 rx-1

drwxr-xr-x 2 root root 0 Oct 11 00:34 rx-2

drwxr-xr-x 2 root root 0 Oct 11 00:34 rx-3

drwxr-xr-x 2 root root 0 Oct 11 00:34 tx-0

drwxr-xr-x 2 root root 0 Oct 11 00:34 tx-1

drwxr-xr-x 2 root root 0 Oct 11 00:34 tx-2

drwxr-xr-x 2 root root 0 Oct 11 00:34 tx-3

#CPU Affinity after RSS

[root@machine1 ~]# cat /proc/interrupts | grep eth1-TxRx | awk '{print $1}' | cut -d":" -f 1 | xargs -n 1 -I {} cat /proc/irq/{}/smp_affinity

000400

000008

000002

000001

Receive Packet Steering

Receive Packet Steering & Receive Side Scaling are pretty much the same concept except RSS is a hardware implementation of NIC and RPS is software implementation done for NICs which were monoqueue in nature. RPS enables a single NIC rx queue to have its receive softirq workload distributed among several CPUs. This helps prevent network traffic from being bottlenecked on a single NIC hardware queue. There is no real need of RPS if you have RSS working.

Below commands shows how to alter RPS values to distribute load across multiple CPU cores. Optimal settings for the CPU mask depend on architecture, network traffic, current CPU load, etc.

#There are only 2 queues present (1 rx queue and 1 tx queue)

[root@machine1 ~]# ls -l /sys/class/net/eth1/queues/

total 0

drwxr-xr-x 2 root root 0 Oct 14 19:00 rx-0

drwxr-xr-x 2 root root 0 Oct 15 00:15 tx-0

#Packet processing is done by single core CPU1

[root@machine1 ~]# cat /sys/class/net/eth1/queues/rx-0/rps_cpus

0001

#Distribute packet processing load to 15 CPU cores (CPU1-15) except CPU0

[root@machine1 ~]# echo fffe > /sys/class/net/eth1/queues/rx-0/rps_cpus

fffe

#Confirm output

[root@machine1 ~]# cat /sys/class/net/eth1/queues/rx-0/rps_cpus

fffe

Run following command to see output of how softirqs are being distributed across processors for receiving traffic.

[root@machine1 ~]# watch -d "cat /proc/softirqs | grep NET_RX"

Packet Flow

1) Packet arrival at NIC: NIC copies the data to socket buffer through an onboard DMA controller, and raises a hardware interrupt. Some NIC types also have a local memory which is mapped to host memory.

2) Copy data to socket buffer: Linux kernel maintains a pool of socket buffers. The socket buffer is the structure used to address and manage a packet over the entire time this packet is being processed in the kernel. When NIC recieves data, it creates a socket buffer structure and stores the payload data address in the variables of this structure. At each layer of TCP/ IP stack, headers are appended to this payload. The payload is copied only twice: once when it transits from the user address space to the kernel address space, and a second time when the packet data is passed to the network adapter.

3)Hardware interrupt & softIRQ: After copying data to socket buffer, NIC raises a hardware interrupt to indicate that an action needs to be taken by CPU on incoming packet. The processor's interrupt service routine then reads the Interrupt Status Register to determine what type of interrupt occurred and what action needs to be taken. It acknowledges the NIC interrupt. A hardware interrupt should be quick so the system isn't held up in interrupt handling. With the kernel now aware that a packet is available for processing on the receive queue the hardware interrupt is done, the hardware signal is un-asserted, and everything is ready for the next stage of packet processing. The next stage of packet processing is put in CPU's backlog queue as softIRQ so whenever it get chance, it will start processing and move the packet upto TCP/ IP stack.In case of monoqueues, the hardware interrupt generated is from single queue and same CPU is also responisble for processing softIRQ. If RPS is enabled on mono queue, the incoming packets are hashed, load is distributed across multiple CPU processors.In case of multi queues (RSS), hardware interrupt will go to matching CPU processor, and that processor will also be responsible for softIRQ processing.

.png)

.png)

.png)

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· go语言实现终端里的倒计时

· 如何编写易于单元测试的代码

· 10年+ .NET Coder 心语,封装的思维:从隐藏、稳定开始理解其本质意义

· .NET Core 中如何实现缓存的预热?

· 从 HTTP 原因短语缺失研究 HTTP/2 和 HTTP/3 的设计差异

· 周边上新:园子的第一款马克杯温暖上架

· 分享 3 个 .NET 开源的文件压缩处理库,助力快速实现文件压缩解压功能!

· Ollama——大语言模型本地部署的极速利器

· DeepSeek如何颠覆传统软件测试?测试工程师会被淘汰吗?

· 使用C#创建一个MCP客户端

2014-12-29 学习OpenStack之(5):在Mac上部署Juno版本OpenStack 四节点环境