Java 之 批量从hive导入mysql(代替sqoop工具)

一、pom

<dependencies> <!-- https://mvnrepository.com/artifact/org.apache.hive/hive-jdbc --> <dependency> <groupId>org.apache.hive</groupId> <artifactId>hive-jdbc</artifactId> <version>1.1.0</version> </dependency> <dependency> <groupId>org.apache.hadoop</groupId> <artifactId>hadoop-common</artifactId> <version>2.6.0</version> </dependency> <dependency> <groupId>org.mybatis</groupId> <artifactId>mybatis</artifactId> <version>3.4.6</version> </dependency> <dependency> <groupId>mysql</groupId> <artifactId>mysql-connector-java</artifactId> <version>5.1.38</version> </dependency> </dependencies>

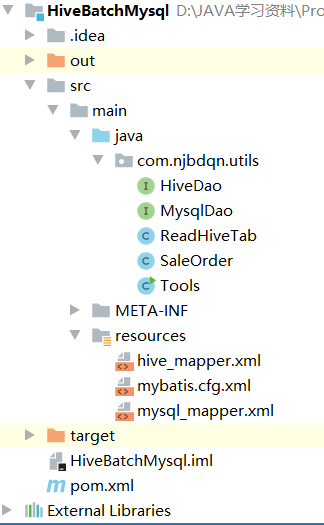

二、目录

三、配置类

mybatis.cfg

<?xml version="1.0" encoding="UTF-8" ?> <!DOCTYPE configuration PUBLIC "-//mybatis.org//DTD Config 3.0//EN" "http://mybatis.org/dtd/mybatis-3-config.dtd"> <configuration> <typeAliases> <typeAlias type="com.njbdqn.utils.SaleOrder" alias="so"/> </typeAliases> <environments default="cm"> <environment id="cm"> <transactionManager type="JDBC"></transactionManager> <dataSource type="POOLED"> <property name="driver" value="org.apache.hive.jdbc.HiveDriver"/> <property name="url" value="jdbc:hive2://192.168.56.111:10000/dm_sales_source"/> <property name="username" value="root"/> <property name="password" value="root"/> </dataSource> </environment> <environment id="ms"> <transactionManager type="JDBC"></transactionManager> <dataSource type="POOLED"> <property name="driver" value="com.mysql.jdbc.Driver"/> <property name="url" value="jdbc:mysql://192.168.56.111:3306/dmdb"/> <property name="username" value="root"/> <property name="password" value="root"/> </dataSource> </environment> </environments> <mappers> <mapper resource="hive_mapper.xml"></mapper> <mapper resource="mysql_mapper.xml"></mapper> </mappers> </configuration>

hive_mapper.xml

<?xml version="1.0" encoding="UTF-8" ?> <!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd"> <mapper namespace="com.njbdqn.utils.HiveDao"> <select id="findAll" resultType="so"> select customer_sk ,product_sk, d_date,recent_num,recent_amount,order_num,order_dailyamount from dm_sales_order_count </select> </mapper>

mysql_mapper.xml

<?xml version="1.0" encoding="UTF-8" ?> <!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd"> <mapper namespace="com.njbdqn.utils.MysqlDao"> <insert id="saveDate" parameterType="java.util.List"> insert into dm_sales_order_count values <foreach collection="list" item="ord" separator=","> (#{ord.d_date}, #{ord.customer_sk}, #{ord.product_sk}, #{ord.order_num}, #{ord.order_dailyamount}, #{ord.recent_amount}, #{ord.recent_num}) </foreach> </insert> </mapper>

三、代码

实体类:

public class SaleOrder { private String d_date ; private Integer customer_sk ; private Integer product_sk; private Long order_num ; private Double order_dailyamount ; private Double recent_amount ; private Long recent_num ;

HiveDao

public interface HiveDao { List<SaleOrder> findAll(); }

mysqlDao

public interface MysqlDao { void saveDate(List<SaleOrder> lst); }

ReadHiveTab(测试用的)

import com.sun.org.apache.regexp.internal.RE; import java.sql.*; import java.util.List; public class ReadHiveTab { public static <T>List<T> readTab(String dbname,String table){ try { Class.forName("org.apache.hive.jdbc.HiveDriver"); Connection con = DriverManager.getConnection("jdbc:hive2://192.168.56.111:10000/"+dbname,"root","root"); PreparedStatement pstat = con.prepareStatement("select * from "+table); ResultSet rs = pstat.executeQuery(); while (rs.next()){ System.out.println(rs.getInt("customer_sk")); } } catch (ClassNotFoundException e) { e.printStackTrace(); } catch (SQLException e) { e.printStackTrace(); } return null; } }

Tools(main方法):从hive到sql

package com.njbdqn.utils; import org.apache.ibatis.io.Resources; import org.apache.ibatis.session.SqlSession; import org.apache.ibatis.session.SqlSessionFactory; import org.apache.ibatis.session.SqlSessionFactoryBuilder; import java.io.Reader; import java.util.List; public class Tools { public static void main(String[] args) throws Exception { // ReadHiveTab.readTab("dm_sales_source","dm_sales_order_count"); Reader reader = Resources.getResourceAsReader("mybatis.cfg.xml"); SqlSessionFactory factory = new SqlSessionFactoryBuilder().build(reader,"cm"); SqlSession session = factory.openSession(); HiveDao hdao = session.getMapper(HiveDao.class); List<SaleOrder> lst = hdao.findAll(); session.close(); Reader r1 = Resources.getResourceAsReader("mybatis.cfg.xml"); SqlSessionFactory mf = new SqlSessionFactoryBuilder().build(r1, "ms"); SqlSession se = mf.openSession(); MysqlDao mdao = se.getMapper(MysqlDao.class); mdao.saveDate(lst); se.commit(); se.close(); } }

四、打胖包后运行(因为执行环境没有driver等)

java -jar HiveBatchMysql.jar

=> 验证mysql是否增加了数据

浙公网安备 33010602011771号

浙公网安备 33010602011771号