hbase 之如何用shell操作数据

一、使用hbase shell 导入数据

1. hive-hbase 通过hive导入(处理的)数据

hdfs存放数据,hive建立外部表tab1,建立外部表tab_hbase映射hbase中的某个表,最后insert into tab_hbase select XXX from tab1

例1:

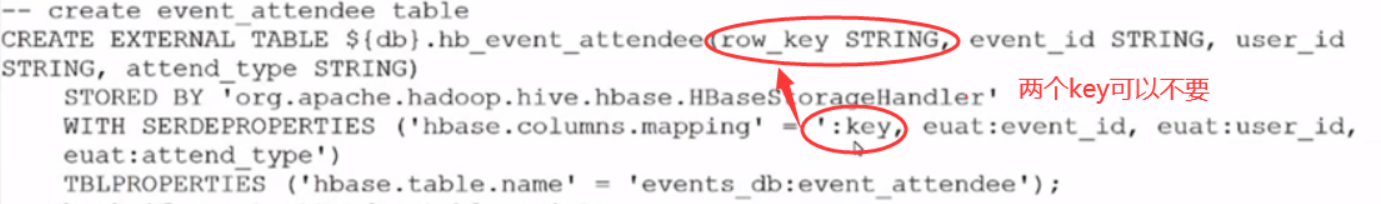

--hbase create_namespace "exam" create 'exam.spu','result' --hive create database spu_db ; use spu_db; drop table if exists ex_spu; create external table ex_spu( spu_id STRING, shop_id STRING, shop_name STRING, category_name STRING, spu_name STRING, spu_price double, spu_originprice double, month_sales INT, praise_num INT, spu_unit STRING, spu_desc STRING, spu_image STRING ) ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde' location '/app/data/exam' -- location中不可写*.csv(直接创建新文件夹) -- location路径中不可以有其他文件! tblproperties("skip.header.line.count"="1") -- 一定写在最后一行 -- 一定要转换成unix编码! DROP TABLE IF EXISTS ex_spu_hbase; create external table ex_spu_hbase( key string, sales double, praise int ) stored by 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' with serdeproperties("hbase.columns.mapping"=":key,result:sales,result:praise") tblproperties("hbase.table.name"="exam.spu"); -- mapping的顺序一定要和hive源数据表一致

(rowkey如果不要两个key都不要写)

导入处理后的数据:

insert into ex_spu_hbase select concat_ws('-',shop_id,shop_name) as key, sum(spu_price*month_sales) as sales, sum(praise_num) as praise from ex_spu group by shop_name,shop_id;

2. 通过Dimporttsv导入HDFS上(源)数据

create_namespace 'exam' create 'exam:mytest','info' hdfs dfs -put /opt/data/quizz/UserBehaviorTestHbase.csv /quizz/data hbase org.apache.hadoop.hbase.mapreduce.ImportTsv \ -Dimporttsv.separator="," \ -Dimporttsv.columns="HBASE_ROW_KEY,info:stuid,info:proid,info:level,info:score" \ exam:mytest /quizz/data/UserBehaviorTestHbase.csv scan 'exam:mytest', {COLUMNS => 'info'}

二、使用hbase shell 查看数据

scan:

-- 表前5

scan 'exam.spu',LIMIT => 5

-- hbase查列族 scan 'exam.analysis', {COLUMNS => 'question'} -- hbase查【列族-列】 -- scan 'hbase.meta', {COLUMNS => 'question:right'} -- hbase查多个【列族-列】 -- scan 'exam.analysis', {COLUMNS => ['question:right', 'accuracy:total_score'], LIMIT => 5}

其他:

三、用hbase shell删除表

disable 'event_db:users'

drop 'event_db:users'

四、快速统计行数

cnblogs.com/bigdatasafe/p/10954876.html

hbase org.apache.hadoop.hbase.mapreduce.RowCounter '表名'

浙公网安备 33010602011771号

浙公网安备 33010602011771号