1. SaltStack in Practice for Automated Operations


1.1 Environment

| Host | IP address |
| --- | --- |
| linux-node1 (master, server side) | 192.168.0.15 |
| linux-node2 (minion, client side) | 192.168.0.16 |

1.2 SaltStack's three operating modes

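| Mode | Description |
| --- | --- |
| Local | Runs locally on a single machine |
| Master/Minion | The traditional mode, with a server side (master) and an agent side (minion) |
| Salt SSH | Runs over SSH, without a minion agent |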

1.3 SaltStack's three main functions

● Remote execution

● Configuration management

● Cloud management

1.4 Preparing the base environment for installing SaltStack

[root@linux-node1 ~]# cat /etc/redhat-release ## check the OS release

CentOS release 6.7 (Final)

[root@linux-node1 ~]# uname -r ## check the kernel version

2.6.32-573.el6.x86_64

[root@linux-node1 ~]# getenforce ## check the SELinux status

Enforcing

[root@linux-node1 ~]# setenforce 0 ## switch SELinux to permissive mode (does not persist across reboots)

[root@linux-node1 ~]# getenforce

Permissive

[root@linux-node1 ~]# /etc/init.d/iptables stop ## stop the iptables firewall

[root@linux-node1 ~]# ifconfig eth0|awk -F '[: ]+' 'NR==2{print $4}' ## extract the IP address of eth0

192.168.0.15
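The awk filter splits the second line of the ifconfig output on runs of colons and spaces, then prints the fourth field. The Python sketch below does the same parsing. It assumes the CentOS 6 net-tools line format shown in `SAMPLE_LINE`, which is an illustrative line and was not captured from this host:

```python
import re

# Illustrative second line of `ifconfig eth0` on CentOS 6 (net-tools format);
# assumed for this sketch, not copied from the host above.
SAMPLE_LINE = "          inet addr:192.168.0.15  Bcast:192.168.0.255  Mask:255.255.255.0"


def extract_ip(ifconfig_line: str) -> str:
    """Mimic awk -F '[: ]+' '{print $4}' on a single line of text."""
    # The leading spaces yield an empty first field, as with awk's regex
    # field separator, so awk's $4 is index 3 in the Python list.
    fields = re.split(r"[: ]+", ifconfig_line)
    return fields[3]


if __name__ == "__main__":
    print(extract_ip(SAMPLE_LINE))  # prints 192.168.0.15
```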

[root@linux-node1 ~]# hostname ## show the host name

linux-node1.zhurui.com

[root@linux-node1 yum.repos.d]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-6.repo ## Salt is installed from the EPEL repository, so add it first

1.4 Installing Salt

Server side (master):

[root@linux-node1 yum.repos.d]# yum install -y salt-master salt-minion ## install the salt-master and salt-minion packages (node1 is also a minion)

[root@linux-node1 yum.repos.d]# chkconfig salt-master on ## start salt-master at boot

[root@linux-node1 yum.repos.d]# chkconfig salt-minion on ## start salt-minion at boot

[root@linux-node1 yum.repos.d]# /etc/init.d/salt-master start ## start salt-master

Starting salt-master daemon: [ OK ]

Before you start the salt-minion service, edit the minion configuration file so that it points to the master:

[root@linux-node1 yum.repos.d]# grep '^[a-z]' /etc/salt/minion

master: 192.168.0.15 ## address of the master host

[root@linux-node1 yum.repos.d]# cat /etc/hosts

192.168.0.15 linux-node1.zhurui.com linux-node1 ## make sure the host names resolve

192.168.0.16 linux-node2.zhurui.com linux-node2
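Salt identifies each minion by an ID. By default this ID comes from the host name, and here it is the FQDN, as the key names such as linux-node1.zhurui.com show later. For that reason these names should resolve. The sketch below parses hosts-file text and reports the address each name maps to. `parse_hosts` and the sample text are illustrative. A real lookup also depends on /etc/nsswitch.conf and DNS:

```python
# Sample text copied from the /etc/hosts entries above.
HOSTS_TEXT = """\
192.168.0.15 linux-node1.zhurui.com linux-node1
192.168.0.16 linux-node2.zhurui.com linux-node2
"""


def parse_hosts(text: str) -> dict:
    """Map each host name in hosts-file text to the first address listed for it."""
    mapping = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            mapping.setdefault(name, address)  # keep the first entry for a name
    return mapping


if __name__ == "__main__":
    hosts = parse_hosts(HOSTS_TEXT)
    for name in ("linux-node1.zhurui.com", "linux-node2.zhurui.com"):
        print(name, "->", hosts.get(name, "NOT FOUND"))
```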

Resolution check:

[root@linux-node1 yum.repos.d]# ping linux-node1.zhurui.com

PING linux-node1.zhurui.com (192.168.0.15) 56(84) bytes of data.

64 bytes from linux-node1.zhurui.com (192.168.0.15): icmp_seq=1 ttl=64 time=0.087 ms

64 bytes from linux-node1.zhurui.com (192.168.0.15): icmp_seq=2 ttl=64 time=0.060 ms

64 bytes from linux-node1.zhurui.com (192.168.0.15): icmp_seq=3 ttl=64 time=0.053 ms

64 bytes from linux-node1.zhurui.com (192.168.0.15): icmp_seq=4 ttl=64 time=0.060 ms

64 bytes from linux-node1.zhurui.com (192.168.0.15): icmp_seq=5 ttl=64 time=0.053 ms

64 bytes from linux-node1.zhurui.com (192.168.0.15): icmp_seq=6 ttl=64 time=0.052 ms

64 bytes from linux-node1.zhurui.com (192.168.0.15): icmp_seq=7 ttl=64 time=0.214 ms

64 bytes from linux-node1.zhurui.com (192.168.0.15): icmp_seq=8 ttl=64 time=0.061 ms

[root@linux-node1 yum.repos.d]# /etc/init.d/salt-minion start ## start the minion

Starting salt-minion daemon: [ OK ]

[root@linux-node1 yum.repos.d]#

Client side (minion):

[root@linux-node2 ~]# yum install -y salt-minion ## install the salt-minion package (the client-side package)

[root@linux-node2 ~]# chkconfig salt-minion on ## start salt-minion at boot

[root@linux-node2 ~]# grep '^[a-z]' /etc/salt/minion ## the minion points to the master host

master: 192.168.0.15

[root@linux-node2 ~]# /etc/init.d/salt-minion start ## then start the minion

Starting salt-minion daemon: [ OK ]

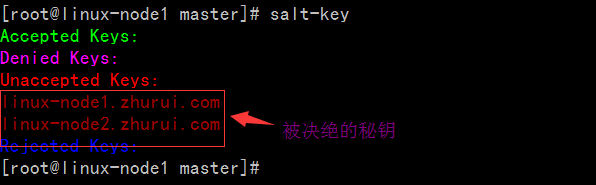

1.5 Salt key authentication

1.5.1 Before you run salt-key -a linux*, the /etc/salt/pki/master directory looks like this:

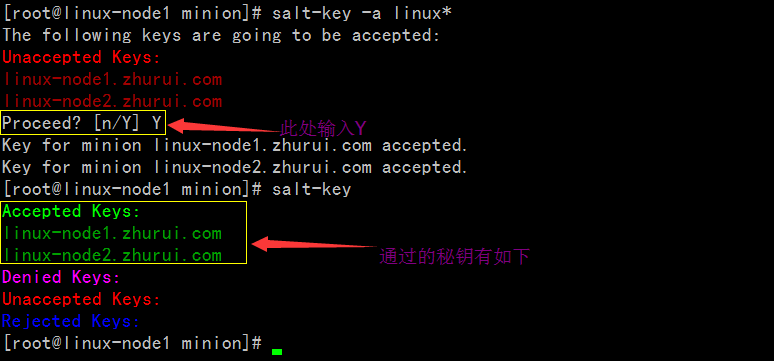

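1.5.2 Run salt-key -a linux* to accept the keys. The key files under minions_pre then move to the minions directory. Quote the pattern (salt-key -a 'linux*') so that the shell does not expand it against file names in the current directory.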

[root@linux-node1 minion]# salt-key -a linux*

The following keys are going to be accepted:

Unaccepted Keys:

linux-node1.zhurui.com

linux-node2.zhurui.com

Proceed? [n/Y] Y

Key for minion linux-node1.zhurui.com accepted.

Key for minion linux-node2.zhurui.com accepted.

[root@linux-node1 minion]# salt-key

Accepted Keys:

linux-node1.zhurui.com

linux-node2.zhurui.com

Denied Keys:

Unaccepted Keys:

Rejected Keys:

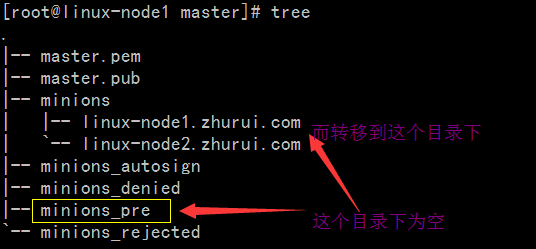
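`salt-key -a linux*` selects keys with a shell-style glob matched against minion IDs. Targeting uses the same kind of pattern (`salt 'linux-node1*' ...`). Salt's glob matching is built on Python's `fnmatch` module. The sketch below shows only the matching step, outside of Salt. The extra ID `web01` is made up to show a non-match:

```python
import fnmatch

# Pending key names; web01 is a made-up extra ID to show a non-match.
pending_keys = ["linux-node1.zhurui.com", "linux-node2.zhurui.com", "web01"]


def match_glob(names, pattern):
    """Return the names that match a shell-style glob such as 'linux*'."""
    # fnmatch.filter keeps the input order of the names.
    return fnmatch.filter(names, pattern)


if __name__ == "__main__":
    print(match_glob(pending_keys, "linux*"))
```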

1.5.3 The directory structure now looks like this:

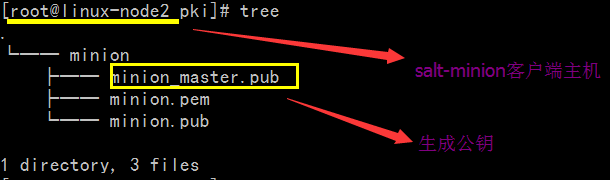

1.5.4 At the same time, the client saves the master's public key in its /etc/salt/pki/minion/ directory (as minion_master.pub).
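On disk, accepting a key amounts to moving the minion's public key file from pki/master/minions_pre to pki/master/minions. The sketch below reproduces only that file movement, in a temporary directory. It illustrates the effect and is not salt-key's implementation. `accept_key` is a hypothetical helper, and the key file content is a placeholder:

```python
import os
import shutil
import tempfile


def accept_key(pki_dir: str, minion_id: str) -> str:
    """Move one pending key file from minions_pre/ to minions/ (simplified)."""
    src = os.path.join(pki_dir, "minions_pre", minion_id)
    dst_dir = os.path.join(pki_dir, "minions")
    os.makedirs(dst_dir, exist_ok=True)
    dst = os.path.join(dst_dir, minion_id)
    shutil.move(src, dst)
    return dst


if __name__ == "__main__":
    # Build a throwaway pki/master-like tree; the file content is a placeholder.
    with tempfile.TemporaryDirectory() as pki:
        os.makedirs(os.path.join(pki, "minions_pre"))
        for mid in ("linux-node1.zhurui.com", "linux-node2.zhurui.com"):
            with open(os.path.join(pki, "minions_pre", mid), "w") as fh:
                fh.write("placeholder, not a real public key\n")
            accept_key(pki, mid)
        print("minions:", sorted(os.listdir(os.path.join(pki, "minions"))))
        print("minions_pre:", os.listdir(os.path.join(pki, "minions_pre")))
```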

1.6 Salt remote execution in detail

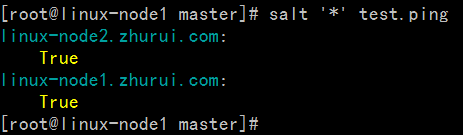

1.6.1 The salt '*' test.ping command

[root@linux-node1 master]# salt '*' test.ping ## in test.ping, test is a module and ping is a function within that module

linux-node2.zhurui.com:

True

linux-node1.zhurui.com:

True

[root@linux-node1 master]#
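`test.ping` follows Salt's module.function naming. The part before the dot is an execution module (`test`), and the part after it is a function in that module (`ping`). The toy dispatcher below only illustrates that naming convention. `FUNCTIONS` and `call` are hypothetical names, and nothing here talks to a minion:

```python
def ping() -> bool:
    """Stand-in for test.ping: returns True to show the minion responded."""
    return True


# Hypothetical registry keyed by "module.function", mirroring Salt's naming;
# Salt's real loader discovers execution modules dynamically instead.
FUNCTIONS = {"test.ping": ping}


def call(fun: str, *args):
    """Split 'module.function', check the format, and dispatch to the function."""
    module, _, name = fun.partition(".")
    if not module or not name:
        raise ValueError(f"expected 'module.function', got {fun!r}")
    return FUNCTIONS[fun](*args)


if __name__ == "__main__":
    print(call("test.ping"))
```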

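1.6.2 The salt '*' cmd.run 'uptime' command

cmd.run runs an arbitrary shell command (here uptime) on every targeted minion and returns each minion's output.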
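On a single host, the command-running part of cmd.run resembles the `subprocess` sketch below. The sketch returns the command's output as a string. `run_cmd` is a hypothetical helper. There is no targeting or master/minion transport here, and merging stderr into the output is a simplification chosen for the sketch:

```python
import subprocess


def run_cmd(cmd: str) -> str:
    """Run a shell command locally and return its trimmed output."""
    result = subprocess.run(
        cmd,
        shell=True,                 # run through /bin/sh so pipes and globs work
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,   # merge stderr into the output (simplification)
        universal_newlines=True,    # decode bytes to str
    )
    return result.stdout.strip()


if __name__ == "__main__":
    print(run_cmd("uptime"))
```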

1.7 SaltStack configuration management

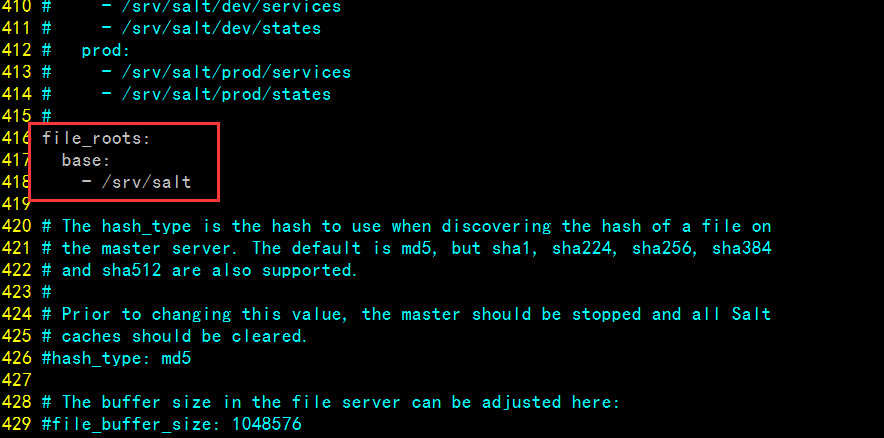

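1.7.1 Edit the configuration file /etc/salt/master and uncomment file_roots, which maps the base environment to /srv/salt:

file_roots:
  base:
    - /srv/salt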

1.7.2 Next, create /srv/salt and restart the master:

[root@linux-node1 master]# ls /srv/

[root@linux-node1 master]# mkdir /srv/salt

[root@linux-node1 master]# /etc/init.d/salt-master restart

Stopping salt-master daemon: [ OK ]

Starting salt-master daemon: [ OK ]

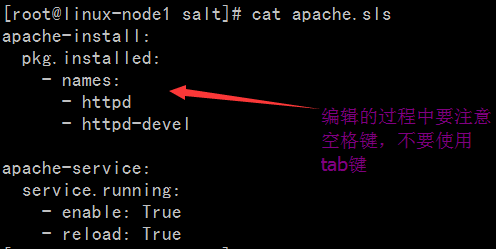

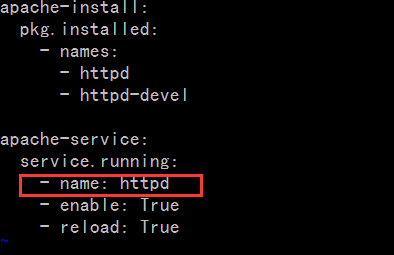
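With file_roots mapping the base environment to /srv/salt, Salt looks up the name given to state.sls as a file under that root. `apache` means apache.sls or apache/init.sls. Dots in a name stand for subdirectories, so web.nginx would be web/nginx.sls or web/nginx/init.sls. The sketch below shows that lookup for a single root. `resolve_sls` is a hypothetical helper; Salt's fileserver also handles multiple roots and environments:

```python
import os
import tempfile
from typing import Optional


def resolve_sls(root: str, name: str) -> Optional[str]:
    """Return the file that backs state `name` under a single file root, or None."""
    rel = name.replace(".", os.sep)  # web.nginx -> web/nginx
    for candidate in (rel + ".sls", os.path.join(rel, "init.sls")):
        path = os.path.join(root, candidate)
        if os.path.isfile(path):
            return path
    return None


if __name__ == "__main__":
    # Throwaway tree standing in for /srv/salt.
    with tempfile.TemporaryDirectory() as root:
        open(os.path.join(root, "apache.sls"), "w").close()
        print(resolve_sls(root, "apache"))
```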

[root@linux-node1 salt]# cat apache.sls ## create this file in /srv/salt/

[root@linux-node1 salt]# salt '*' state.sls apache ## then apply the apache state to all minions

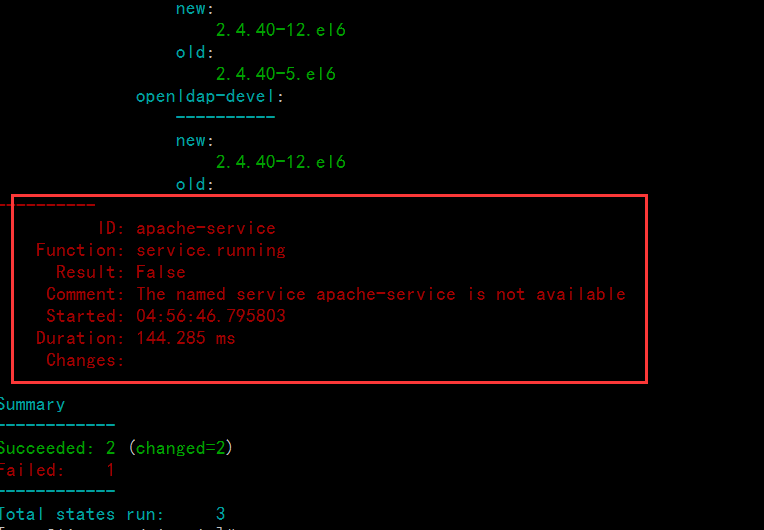

The following error then appears:

Edit apache.sls and add the following:

Finally, the run succeeds:

[root@linux-node1 salt]# salt '*' state.sls apache

linux-node2.zhurui.com:

----------

ID: apache-install

Function: pkg.installed

Name: httpd

Result: True

Comment: Package httpd is already installed.

Started: 22:38:52.954973

Duration: 1102.909 ms

Changes:

----------

ID: apache-install

Function: pkg.installed

Name: httpd-devel

Result: True

Comment: Package httpd-devel is already installed.

Started: 22:38:54.058190

Duration: 0.629 ms

Changes:

----------

ID: apache-service

Function: service.running

Name: httpd

Result: True

Comment: Service httpd has been enabled, and is running

Started: 22:38:54.059569

Duration: 1630.938 ms

Changes:

----------

httpd:

True

Summary

------------

Succeeded: 3 (changed=1)

Failed: 0

------------

Total states run: 3

linux-node1.zhurui.com:

----------

ID: apache-install

Function: pkg.installed

Name: httpd

Result: True

Comment: Package httpd is already installed.

Started: 05:01:17.491217

Duration: 1305.282 ms

Changes:

----------

ID: apache-install

Function: pkg.installed

Name: httpd-devel

Result: True

Comment: Package httpd-devel is already installed.

Started: 05:01:18.796746

Duration: 0.64 ms

Changes:

----------

ID: apache-service

Function: service.running

Name: httpd

Result: True

Comment: Service httpd has been enabled, and is running

Started: 05:01:18.798131

Duration: 1719.618 ms

Changes:

----------

httpd:

True

Summary

------------

Succeeded: 3 (changed=1)

Failed: 0

------------

Total states run: 3

[root@linux-node1 salt]#
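The apache.sls file itself appears only in screenshots. The output above still shows its structure. ID apache-install runs pkg.installed for httpd and httpd-devel, and ID apache-service runs service.running for httpd and enables it. From that, an equivalent state can be sketched with Salt's pure-Python `py` renderer. In that renderer the SLS file starts with `#!py` and defines a `run()` function that returns the state data. This is a reconstruction under those assumptions, not the author's file:

```python
#!py
# Hypothetical reconstruction of /srv/salt/apache.sls for Salt's py renderer,
# inferred from the state IDs, functions and names in the output above.


def run():
    """Return the state data structure the py renderer expects."""
    return {
        "apache-install": {
            # `names` expands into one pkg.installed state per package,
            # which matches the two apache-install entries in the output.
            "pkg.installed": [{"names": ["httpd", "httpd-devel"]}],
        },
        "apache-service": {
            # Keep httpd running and enable it at boot ("has been enabled").
            "service.running": [{"name": "httpd"}, {"enable": True}],
        },
    }
```

A YAML version of the file would describe the same dictionary, with apache-install and apache-service as its top-level keys.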

1.7.3 Verifying that SaltStack installed and started httpd

linux-node1:

[root@linux-node1 salt]# lsof -i:80 ## httpd is up and listening on port 80

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

httpd 7397 root 4u IPv6 46164 0t0 TCP *:http (LISTEN)

httpd 7399 apache 4u IPv6 46164 0t0 TCP *:http (LISTEN)

httpd 7400 apache 4u IPv6 46164 0t0 TCP *:http (LISTEN)

httpd 7401 apache 4u IPv6 46164 0t0 TCP *:http (LISTEN)

httpd 7403 apache 4u IPv6 46164 0t0 TCP *:http (LISTEN)

httpd 7404 apache 4u IPv6 46164 0t0 TCP *:http (LISTEN)

httpd 7405 apache 4u IPv6 46164 0t0 TCP *:http (LISTEN)

httpd 7406 apache 4u IPv6 46164 0t0 TCP *:http (LISTEN)

httpd 7407 apache 4u IPv6 46164 0t0 TCP *:http (LISTEN)

linux-node2:

[root@linux-node2 pki]# lsof -i:80

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

httpd 12895 root 4u IPv6 47532 0t0 TCP *:http (LISTEN)

httpd 12897 apache 4u IPv6 47532 0t0 TCP *:http (LISTEN)

httpd 12898 apache 4u IPv6 47532 0t0 TCP *:http (LISTEN)

httpd 12899 apache 4u IPv6 47532 0t0 TCP *:http (LISTEN)

httpd 12901 apache 4u IPv6 47532 0t0 TCP *:http (LISTEN)

httpd 12902 apache 4u IPv6 47532 0t0 TCP *:http (LISTEN)

httpd 12906 apache 4u IPv6 47532 0t0 TCP *:http (LISTEN)

httpd 12908 apache 4u IPv6 47532 0t0 TCP *:http (LISTEN)

httpd 12909 apache 4u IPv6 47532 0t0 TCP *:http (LISTEN)

[root@linux-node2 pki]#

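1.7.4 Using SaltStack state management (highstate)

state.highstate applies to each minion all the states that the top file (/srv/salt/top.sls) assigns to it.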

[root@linux-node1 salt]# salt '*' state.highstate

2.1 SaltStack's Grains data system

SaltStack has two data systems:

● Grains

● Pillar

2.1.1 Listing the available grains with the salt command

[root@linux-node1 salt]# salt 'linux-node1*' grains.ls

linux-node1.zhurui.com:

- SSDs
- biosreleasedate
- biosversion
- cpu_flags
- cpu_model
- cpuarch
- domain
- fqdn
- fqdn_ip4
- fqdn_ip6
- gpus
- host
- hwaddr_interfaces
- id
- init
- ip4_interfaces
- ip6_interfaces
- ip_interfaces
- ipv4
- ipv6
- kernel
- kernelrelease
- locale_info
- localhost
- lsb_distrib_codename
- lsb_distrib_id
- lsb_distrib_release
- machine_id
- manufacturer
- master
- mdadm
- mem_total
- nodename
- num_cpus
- num_gpus
- os
- os_family
- osarch
- oscodename
- osfinger
- osfullname
- osmajorrelease
- osrelease
- osrelease_info
- path
- productname
- ps
- pythonexecutable
- pythonpath
- pythonversion
- saltpath
- saltversion
- saltversioninfo
- selinux
- serialnumber
- server_id
- shell
- virtual
- zmqversion

[root@linux-node1 salt]#

2.1.2 Grain values (system information):

[root@linux-node1 salt]# salt 'linux-node1*' grains.items

linux-node1.zhurui.com:

----------

SSDs:

biosreleasedate: 07/31/2013

biosversion: 6.00

cpu_flags:

- fpu
- vme
- de
- pse
- tsc
- msr
- pae
- mce
- cx8
- apic
- sep
- mtrr
- pge
- mca
- cmov
- pat
- pse36
- clflush
- dts
- mmx
- fxsr
- sse
- sse2
- ss
- syscall
- nx
- rdtscp
- lm
- constant_tsc
- up
- arch_perfmon
- pebs
- bts
- xtopology
- tsc_reliable
- nonstop_tsc
- aperfmperf
- unfair_spinlock
- pni
- ssse3
- cx16
- sse4_1
- sse4_2
- x2apic
- popcnt
- hypervisor
- lahf_lm
- arat
- dts

cpu_model: Intel(R) Core(TM) i3 CPU M 380 @ 2.53GHz

cpuarch: x86_64

domain: zhurui.com

fqdn: linux-node1.zhurui.com

fqdn_ip4:

- 192.168.0.15

fqdn_ip6:

gpus:

|_

----------

model: SVGA II Adapter

vendor: unknown

host: linux-node1

hwaddr_interfaces:

----------

eth0: 00:0c:29:fc:ba:90

lo: 00:00:00:00:00:00

id: linux-node1.zhurui.com

init: upstart

ip4_interfaces:

----------

eth0:

- 192.168.0.15

lo:

- 127.0.0.1

ip6_interfaces:

----------

eth0:

- fe80::20c:29ff:fefc:ba90

lo:

- ::1

ip_interfaces:

----------

eth0:

- 192.168.0.15
- fe80::20c:29ff:fefc:ba90

lo:

- 127.0.0.1
- ::1

ipv4:

- 127.0.0.1
- 192.168.0.15

ipv6:

- ::1
- fe80::20c:29ff:fefc:ba90

kernel: Linux

kernelrelease: 2.6.32-573.el6.x86_64

locale_info:

----------

defaultencoding: UTF8

defaultlanguage: en_US

detectedencoding: UTF-8

localhost: linux-node1.zhurui.com

lsb_distrib_codename: Final

lsb_distrib_id: CentOS

lsb_distrib_release: 6.7

machine_id: da5383e82ce4b8d8a76b5a3e00000010

manufacturer: VMware, Inc.

master: 192.168.0.15

mdadm:

mem_total: 556

nodename: linux-node1.zhurui.com

num_cpus: 1

num_gpus: 1

os: CentOS

os_family: RedHat

osarch: x86_64

oscodename: Final

osfinger: CentOS-6

osfullname: CentOS

osmajorrelease: 6

osrelease: 6.7

osrelease_info:

- 6
- 7

path: /sbin:/usr/sbin:/bin:/usr/bin

productname: VMware Virtual Platform

ps: ps -efH

pythonexecutable: /usr/bin/python2.6

pythonpath:

- /usr/bin
- /usr/lib64/python26.zip
- /usr/lib64/python2.6
- /usr/lib64/python2.6/plat-linux2
- /usr/lib64/python2.6/lib-tk
- /usr/lib64/python2.6/lib-old
- /usr/lib64/python2.6/lib-dynload
- /usr/lib64/python2.6/site-packages
- /usr/lib64/python2.6/site-packages/gtk-2.0
- /usr/lib/python2.6/site-packages

pythonversion:

- 2
- 6
- 6
- final
- 0

saltpath: /usr/lib/python2.6/site-packages/salt

saltversion: 2015.5.10

saltversioninfo:

- 2015
- 5
- 10
- 0

selinux:

----------

enabled: True

enforced: Permissive

serialnumber: VMware-564d8f43912d3a99-eb c4 3b a9 34 fc ba 90

server_id: 295577080

shell: /bin/bash

virtual: VMware

zmqversion: 3.2.5

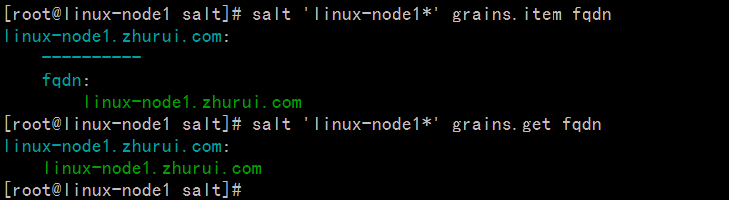
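Grains are facts that the minion gathers about itself when it starts. As a rough analogy, and not Salt's own collection code, Python's standard library can read a few similar values. The keys below reuse grain names from the listing above. The values come from whatever machine runs the sketch, and `collect_basic_grains` is a hypothetical name:

```python
import os
import platform
import socket


def collect_basic_grains() -> dict:
    """Gather a few grain-like facts about the machine running this script."""
    return {
        "kernel": platform.system(),          # e.g. Linux
        "kernelrelease": platform.release(),  # e.g. 2.6.32-573.el6.x86_64
        "cpuarch": platform.machine(),        # e.g. x86_64
        "fqdn": socket.getfqdn(),             # e.g. linux-node1.zhurui.com
        "host": socket.gethostname().split(".")[0],  # short host name
        "num_cpus": os.cpu_count(),
    }


if __name__ == "__main__":
    for key, value in sorted(collect_basic_grains().items()):
        print(f"{key}: {value}")
```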

2.1.3 System version information:

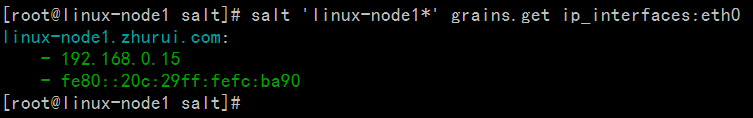

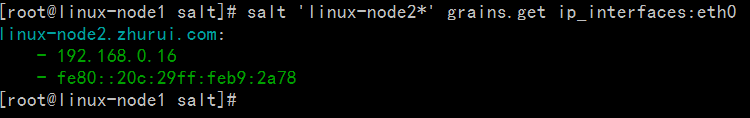

2.1.4 Viewing node1's eth0 IP addresses:

[root@linux-node1 salt]# salt 'linux-node1*' grains.get ip_interfaces:eth0 ## handy for collecting information

linux-node1.zhurui.com:

- 192.168.0.15

- fe80::20c:29ff:fefc:ba90

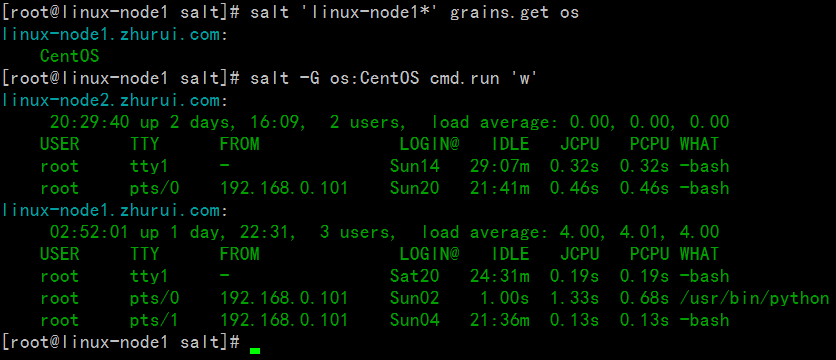
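`grains.get` takes a colon-delimited path, so `ip_interfaces:eth0` steps into the ip_interfaces dictionary and returns its eth0 entry. The minimal sketch below performs that lookup over plain dictionaries and uses part of the grains output above as sample data. `get_by_path` is a hypothetical helper and handles only nested dictionaries:

```python
def get_by_path(data, path, default=None, delimiter=":"):
    """Follow a delimiter-separated key path through nested dictionaries."""
    current = data
    for key in path.split(delimiter):
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current


# A subset of the grains shown above, used as sample data.
GRAINS = {
    "os": "CentOS",
    "ip_interfaces": {
        "eth0": ["192.168.0.15", "fe80::20c:29ff:fefc:ba90"],
        "lo": ["127.0.0.1", "::1"],
    },
}

if __name__ == "__main__":
    print(get_by_path(GRAINS, "ip_interfaces:eth0"))
```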

2.1.4 Collecting system information with Grains:

[root@linux-node1 salt]# salt 'linux-node1*' grains.get os

linux-node1.zhurui.com:

CentOS

[root@linux-node1 salt]# salt -G os:CentOS cmd.run 'w' ## -G targets minions by grain; w shows who is logged in

linux-node2.zhurui.com:

20:29:40 up 2 days, 16:09, 2 users, load average: 0.00, 0.00, 0.00

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

root tty1 - Sun14 29:07m 0.32s 0.32s -bash

root pts/0 192.168.0.101 Sun20 21:41m 0.46s 0.46s -bash

linux-node1.zhurui.com:

02:52:01 up 1 day, 22:31, 3 users, load average: 4.00, 4.01, 4.00

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

root tty1 - Sat20 24:31m 0.19s 0.19s -bash

root pts/0 192.168.0.101 Sun02 1.00s 1.33s 0.68s /usr/bin/python

root pts/1 192.168.0.101 Sun04 21:36m 0.13s 0.13s -bash

[root@linux-node1 salt]#

Screenshot:

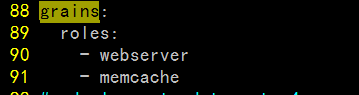
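With -G, salt compares a grain against the value after the colon instead of matching minion IDs. The value may contain glob characters. For a list-valued grain, such as the roles grain configured in the next step, a minion matches if any element matches. The sketch below approximates that rule with `fnmatch`. `grain_match` and the sample grains are illustrative, and Salt's own matcher handles more cases, such as nested keys:

```python
import fnmatch


def grain_match(grains: dict, expr: str) -> bool:
    """Approximate `salt -G 'key:pattern'` for a top-level grain."""
    key, sep, pattern = expr.partition(":")
    if not sep or key not in grains:
        return False
    value = grains[key]
    # A list grain (e.g. roles) matches when any element matches.
    values = value if isinstance(value, list) else [value]
    return any(fnmatch.fnmatchcase(str(v), pattern) for v in values)


# Sample grains modeled on this setup: only node1 gets the roles grain later on.
MINIONS = {
    "linux-node1.zhurui.com": {"os": "CentOS", "roles": ["webserver", "memcache"]},
    "linux-node2.zhurui.com": {"os": "CentOS"},
}

if __name__ == "__main__":
    for expr in ("os:CentOS", "roles:memcache"):
        print(expr, "->", [m for m, g in MINIONS.items() if grain_match(g, expr)])
```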

2.1.5 Using a grain match to run a command on the hosts with the memcache role

[root@linux-node1 salt]# vim /etc/salt/minion ## edit the minion config file and uncomment the following lines

grains:
  roles:
    - webserver
    - memcache


[root@linux-node1 salt]# /etc/init.d/salt-minion restart ## restart the minion so it loads the new grains

Stopping salt-minion daemon: [ OK ]

Starting salt-minion daemon: [ OK ]

[root@linux-node1 salt]#

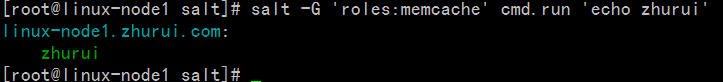

[root@linux-node1 salt]# salt -G 'roles:memcache' cmd.run 'echo zhurui' ## target the minions whose roles grain includes memcache and run the command there

linux-node1.zhurui.com:

zhurui

[root@linux-node1 salt]#


2.1.5 Grains can also be defined in a separate configuration file, /etc/salt/grains

[root@linux-node1 salt]# cat /etc/salt/grains

web: nginx

[root@linux-node1 salt]# /etc/init.d/salt-minion restart ## restart the service after changing the configuration

Stopping salt-minion daemon: [ OK ]

Starting salt-minion daemon: [ OK ]

[root@linux-node1 salt]#

[root@linux-node1 salt]# salt -G web:nginx cmd.run 'w' ## run w on the hosts whose web grain is nginx

linux-node1.zhurui.com:

03:31:07 up 1 day, 23:11, 3 users, load average: 4.11, 4.03, 4.01

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

root tty1 - Sat20 25:10m 0.19s 0.19s -bash

root pts/0 192.168.0.101 Sun02 0.00s 1.41s 0.63s /usr/bin/python

root pts/1 192.168.0.101 Sun04 22:15m 0.13s 0.13s -bash
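Grains can therefore come from several places on the same minion. Node1 now has the roles grain from /etc/salt/minion and the web grain from /etc/salt/grains, in addition to its core grains. The Salt grains documentation gives an order of evaluation: core grains, then /etc/salt/grains, then the grains section of /etc/salt/minion, then custom grain modules. Later sources override earlier ones. The merge below sketches that override rule with plain dictionaries. It is an illustration and not Salt's loader, it overrides only top-level keys, and all names and sample values are hypothetical:

```python
def merge_grains(*sources: dict) -> dict:
    """Combine grain sources in order; later sources override earlier ones."""
    merged = {}
    for source in sources:
        merged.update(source)  # top-level keys only, a simplification
    return merged


CORE = {"os": "CentOS", "host": "linux-node1"}      # sample core grains
GRAINS_FILE = {"web": "nginx"}                      # /etc/salt/grains
MINION_CONF = {"roles": ["webserver", "memcache"]}  # grains: in /etc/salt/minion

if __name__ == "__main__":
    print(merge_grains(CORE, GRAINS_FILE, MINION_CONF))
```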

Uses of grains:

1. Collecting low-level system information

2. Matching minions in remote execution

3. Matching minions in top.sls

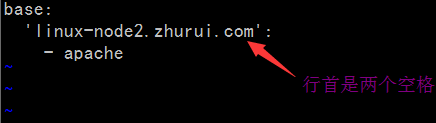

2.1.5 Grains can also be used to match minions in the /srv/salt/top.sls file

[root@linux-node1 salt]# cat /srv/salt/top.sls

base:
  'web:nginx':
    - match: grain
    - apache

[root@linux-node1 salt]#
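In a top file, each environment (base here) maps a target to a list of SLS names. By default a target is a glob against the minion ID. A `- match: grain` entry turns 'web:nginx' into a grain match instead, so only minions whose web grain is nginx receive the apache state. The simplified resolver below handles only glob and grain matching. `TOP`, `grain_matches` and `states_for` are hypothetical names:

```python
import fnmatch

# The /srv/salt/top.sls above, as the data structure its YAML describes.
TOP = {"base": {"web:nginx": [{"match": "grain"}, "apache"]}}


def grain_matches(grains: dict, expr: str) -> bool:
    """Approximate a 'key:pattern' grain match (list grains match on any element)."""
    key, _, pattern = expr.partition(":")
    if key not in grains:
        return False
    value = grains[key]
    values = value if isinstance(value, list) else [value]
    return any(fnmatch.fnmatchcase(str(v), pattern) for v in values)


def states_for(top: dict, env: str, minion_id: str, grains: dict) -> list:
    """Return the SLS names one environment of a top file assigns to a minion."""
    assigned = []
    for target, entries in top.get(env, {}).items():
        match_type = "glob"  # default: glob against the minion ID
        sls_names = []
        for entry in entries:
            if isinstance(entry, dict) and "match" in entry:
                match_type = entry["match"]
            else:
                sls_names.append(entry)
        if match_type == "glob":
            matched = fnmatch.fnmatchcase(minion_id, target)
        elif match_type == "grain":
            matched = grain_matches(grains, target)
        else:
            raise ValueError(f"match type {match_type!r} is not handled in this sketch")
        if matched:
            for name in sls_names:
                if name not in assigned:
                    assigned.append(name)
    return assigned


if __name__ == "__main__":
    print(states_for(TOP, "base", "linux-node1.zhurui.com", {"web": "nginx"}))
    print(states_for(TOP, "base", "linux-node2.zhurui.com", {"os": "CentOS"}))
```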

2.2 SaltStack's Pillar data system

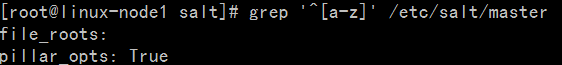

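2.2.1 First, enable pillar_opts in the master configuration file (around line 552)

Setting pillar_opts: True adds the master's own configuration to each minion's pillar data, under the master key. As a result, the pillar.items call below returns that configuration.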

[root@linux-node1 salt]# grep '^[a-z]' /etc/salt/master

file_roots:

pillar_opts: True

[root@linux-node1 salt]# /etc/init.d/salt-master restart ## restart the master

Stopping salt-master daemon: [ OK ]

Starting salt-master daemon: [ OK ]

[root@linux-node1 salt]# salt '*' pillar.items ## verify with this command


[root@linux-node1 salt]# grep '^[a-z]' /etc/salt/master

pillar_roots: ## uncomment these lines (around lines 529-531)
  base:
    - /srv/pillar


[root@linux-node1 salt]# mkdir /srv/pillar

[root@linux-node1 salt]# /etc/init.d/salt-master restart ## restart the master

Stopping salt-master daemon: [ OK ]

Starting salt-master daemon: [ OK ]

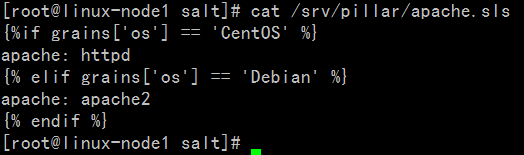

[root@linux-node1 salt]# vim /srv/pillar/apache.sls

[root@linux-node1 salt]# cat /srv/pillar/apache.sls

{% if grains['os'] == 'CentOS' %}

apache: httpd

{% elif grains['os'] == 'Debian' %}

apache: apache2

{% endif %}

[root@linux-node1 salt]#

Screenshot:

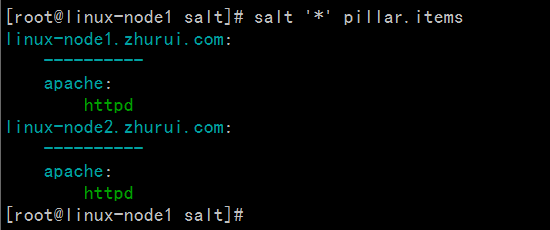
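The Jinja branch above picks the Apache package name from the os grain. The sketch below writes the same logic for Salt's `py` renderer, which exposes the minion's grains as the `__grains__` dictionary at render time. It is an equivalent sketch and not what the author used. The helper `apache_pillar` is hypothetical and exists so that the decision logic can be tested without Salt:

```python
#!py
# Hypothetical py-renderer equivalent of /srv/pillar/apache.sls.
# Salt injects the minion's grains as __grains__ when rendering this file;
# the name is not defined when the file is run outside Salt.


def apache_pillar(grains: dict) -> dict:
    """Pick the Apache package name for the minion's OS (empty for others)."""
    os_name = grains.get("os")
    if os_name == "CentOS":
        return {"apache": "httpd"}
    if os_name == "Debian":
        return {"apache": "apache2"}
    return {}  # like the Jinja version, no apache key for other systems


def run():
    """Entry point called by Salt's py renderer."""
    return apache_pillar(__grains__)  # noqa: F821 (provided by Salt at render time)
```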

Next, specify in /srv/pillar/top.sls which minions can see this data:

[root@linux-node1 salt]# cat /srv/pillar/top.sls

base:
  '*':
    - apache

[root@linux-node1 salt]# salt '*' pillar.items ## verify after making the changes

linux-node1.zhurui.com:

----------

apache:

httpd

linux-node2.zhurui.com:

----------

apache:

httpd

Screenshot:

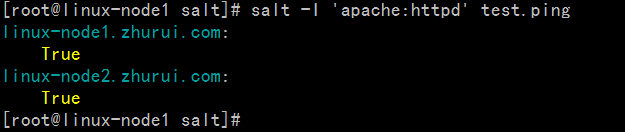

2.2.1 Targeting hosts with Pillar

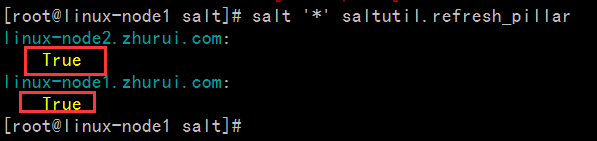

Fixing the error: refresh the pillar data on the minions, then target again:

[root@linux-node1 salt]# salt '*' saltutil.refresh_pillar ## refresh the pillar data

linux-node2.zhurui.com:

True

linux-node1.zhurui.com:

True

[root@linux-node1 salt]#


[root@linux-node1 salt]# salt -I 'apache:httpd' test.ping

linux-node1.zhurui.com:

True

linux-node2.zhurui.com:

True

[root@linux-node1 salt]#

2.3 How SaltStack's data systems differ

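| Name | Stored on | Data type | How data is collected/updated | Typical use |
| --- | --- | --- | --- | --- |
| Grains | minion | static | Collected when the minion starts; can also be refreshed with saltutil.sync_grains. | Stores basic minion data, e.g. for matching minions; the data itself can also serve asset management. |
| Pillar | master | dynamic | Defined on the master and assigned to specific minions; refreshed with saltutil.refresh_pillar. | Stores data designated by the master; only the assigned minions can see it, which suits sensitive data. |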

########## Today's grind is so that I won't have to keep grinding like this forever! ##########