Hive基本操作

在hive命令终端直接导入文本数据:

hive> LOAD DATA LOCAL INPATH '/home/simon/hive_test/a.txt' OVERWRITE INTO TABLE w_a;

查看特征:

hive> select A.usrid, A.age, B.time from w_a A join w_b B on A.usrid = B.usrid;

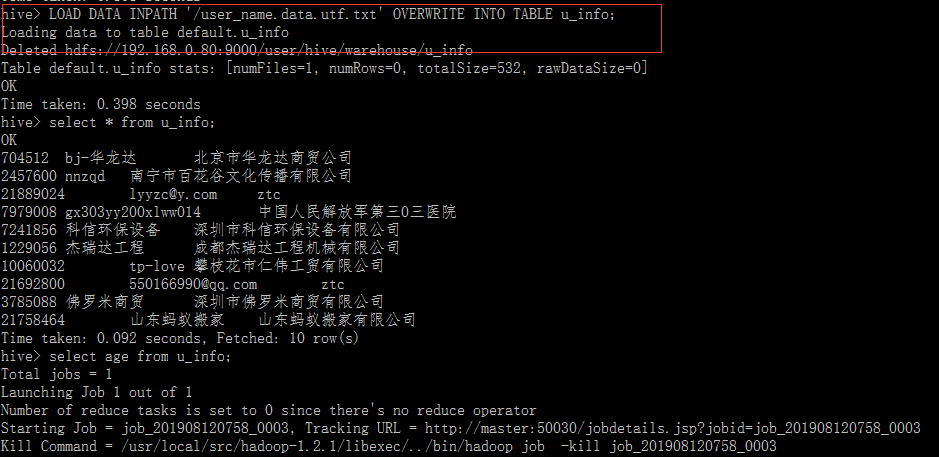

通过LOAD命令直接将hadoop文件系统上的文件导入,不加LOCAL

hive> LOAD DATA INPATH '/user_name.data.utf.txt' OVERWRITE INTO TABLE u_info;

hive数据导入另外一种方法:

hive> insert into table w_d select usrid, age from w_a limit 2;

从Hive导出数据到local本地

hive> insert overwrite local directory '/home/badou/hive_test/data/1.txt' select usrid, age from w_a;

从Hive导出数据到HDFS上

hive> insert overwrite directory '/hive_data' select usrid, age from w_a;

利用partition导入,查询数据

hive> load data local inpath '/home/badou/hive_test/p1.txt' into table p_t_2; Copying data from file:/home/badou/hive_test/p1.txt Copying file: file:/home/badou/hive_test/p1.txt Loading data to table default.p_t_2 Table default.p_t_2 stats: [numFiles=1, numRows=0, totalSize=72, rawDataSize=0] OK Time taken: 0.41 seconds hive> select * from p_t_2; OK user2 28 20170302 user4 30 20170302 user6 32 20170302 user8 34 20170302 Time taken: 0.081 seconds, Fetched: 4 row(s) hive> select * from p_t_2 where dt='20170302'; Total jobs = 1 Launching Job 1 out of 1 Number of reduce tasks is set to 0 since there's no reduce operator Starting Job = job_201908120758_0009, Tracking URL = http://master:50030/jobdetails.jsp?jobid=job_201908120758_0009 Kill Command = /usr/local/src/hadoop-1.2.1/libexec/../bin/hadoop job -kill job_201908120758_0009 Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0 2019-08-14 20:36:11,750 Stage-1 map = 0%, reduce = 0% 2019-08-14 20:36:20,899 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.98 sec 2019-08-14 20:36:26,020 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 2.98 sec MapReduce Total cumulative CPU time: 2 seconds 980 msec Ended Job = job_201908120758_0009 MapReduce Jobs Launched: Job 0: Map: 1 Cumulative CPU: 2.98 sec HDFS Read: 283 HDFS Write: 72 SUCCESS Total MapReduce CPU Time Spent: 2 seconds 980 msec OK user2 28 20170302 user4 30 20170302 user6 32 20170302 user8 34 20170302 Time taken: 21.554 seconds, Fetched: 4 row(s) hive>

创建UDF函数:

hive> create temporary function uppercase as 'com.badou.hive.udf.Uppercase'; OK Time taken: 0.097 seconds

########## 今天的苦逼是为了不这样一直苦逼下去!##########