大数据生态圈 —— 关于实时流处理的单节点伪分布式环境搭建

本文参考

本文主要参考imooc上关于Hadoop、hbase、spark等课程中关于环境搭建的例子,但是有些并没有说明为什么选择这个的版本,我们知道这些开源的技术发展很快,所以有必要搞清楚如何对它们进行版本选择

本文省略了环境变量的配置,基本都需要配置bin目录和sbin目录

大多数配置文件都在解压根目录的bin目录下,hadoop的配置文件在解压根目录的etc/hadoop/目录下

环境

centos7.7(主机名hadoop0001,用户名hadoop)+ scala 2.11.8 + Oracle jdk 1.8.0_241 + spark 2.2.0 + HBase1.3.6 + Hadoop 2.6.5 + ZooKeeper 3.4.14 + Kafka 0.8.2.1 + Flume 1.6.0

本文所用的安装包均为apache(更稳定的版本,可以到cloudera下载http://archive.cloudera.com/cdh5/cdh/5/)

semantic versioning(语义版本号)

语义版本号由五个部分组成主版本号、次版本号、补丁号、预发布版本号,例如现在spark的最新版本号为3.0.0 – preview2,其中3为主版本号,两个0依次为次版本号和补丁号,preview2为预发布版本号(另外的还有Alpha、Beta等),主版本号的递增往往不兼容旧版本;次版本号的递增,往往会有新增的功能,可能会带来API的变化,例如标记某个API为Deprecated,也不保证一定兼容旧版本;补丁号只负责修复bug,在主版本号和次版本号相同的情况下,补丁号版本越大,系统越可靠

Hadoop 2.6.5 环境搭建

下载地址:

http://archive.apache.org/dist/Hadoop/core/

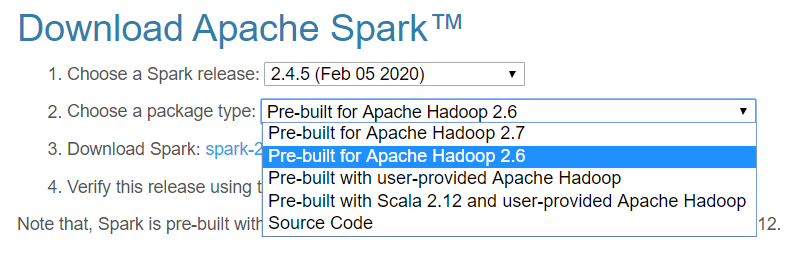

为什么选择Hadoop2.6.5版本?

目前spark最新稳定的版本为2.4.5,仍由Hadoop 2.6 或 2.7 版本编译,spark2.2.0也是如此,可以到spark archieve下载页面验证,这里就选择Hadoop 2.6 最新的补丁号 2.6.5版本

配置 core–default.xml

<configuration> <property> <name>fs.defaultFS</name> <value>hdfs://hadoop0001:9000</value> </property> <property> <name>hadoop.tmp.dir</name> <value>/home/hadoop/app/tmp/hadoop</value> </property> </configuration>

fs.defaultFS配置Hadoop的HDFS分布式文件系统的URI,这个URI也关系到后续Hbase的配置,在这里我的主机名为hadoop0001,端口号配置为9000

hadoop.tmp.dir配置Hadoop的缓存目录,默认存放在根目录的tmp文件夹下,路径和文件名为/tmp/hadoop-${user.name},因为在每次重启时/tmp目录内的内容会丢失,所以在这里我配置到了hadoop用户目录下自己创建的app/tmp/hadoop目录中

配置hdfs–site.xml

<configuration> <property> <name>dfs.replication</name> <value>1</value> </property> </configuration>

dfs.replication默认值为3,即文件默认有3份备份,在这里因为我们是单节点单台机器,所以更改为1

配置yarn-site.xml

<configuration> <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> <property> <name>yarn.resourcemanager.webapp.address</name> <value>hadoop0001:8088</value> </property> </configuration>

yarn.nodemanager.aux-services配置nodemanager的服务名

yarn.resourcemanager.webapp.address配置resourcemanager的网页URI,若只指定主机名,端口将随机分配

配置mapred-site.xml

<configuration> <property> <name>mapreduce.framework.name</name> <value>yarn</value> </property> </configuration>

mapreduce.framework.name配置执行MapReduce作业的框架,一般使用yarn

The runtime framework for executing MapReduce jobs. Can be one of local, classic or yarn.

配置slaves

hadoop0001

配置本机的主机名即可

配置hadoop-env.sh

export JAVA_HOME=/home/hadoop/app/jdk1.8.0_241

指定java8的路径

ZooKeeper 3.4.14 环境搭建

下载地址:

http://zookeeper.apache.org/releases.html

为什么选择ZooKeeper3.4.14版本?

ZooKeeper3.4的第一个版本最早发布于2011年,最新的补丁号版本为2019年发布的3.4.14,可见维护时间之长,个人认为有较好的稳定性

配置conf/zoo.cfg

# the directory where the snapshot is stored. # do not use /tmp for storage, /tmp here is just example sakes. dataDir=/home/hadoop/app/zookeeper-3.4.14/zkData/zoo_1 # the port at which the clients will connect clientPort=2181 server.1=hadoop0001:2889:3889 server.2=hadoop0001:2890:3890 server.3=hadoop0001:2891:3891

这里只列出了需要更改的配置项,将原文件复制三份,zoo_1.cfg,zoo_2.cfg,zoo_3.cfg,分别为他们配置不同的dataDir(zkData/zoo_1是自己新建的目录,另有zkData/zoo_2和zkData/zoo_3)和不同的clientPort(另有2182,2183端口),最后三行的URI在三个文件中都相同

Finally, note the two port numbers after each server name: " 2888" and "3888". Peers use the former port to connect to other peers. Such a connection is necessary so that peers can communicate, for example, to agree upon the order of updates. More specifically, a ZooKeeper server uses this port to connect followers to the leader. When a new leader arises, a follower opens a TCP connection to the leader using this port. Because the default leader election also uses TCP, we currently require another port for leader election. This is the second port in the server entry.

server.X中配置了两个端口,第一个端口用于ZooKeeper之间的通信,例如和leader(如果使用过Kafka,对leader会有一定的认识)进行TCP通信,第二个端口用于选举产生新的leader

配置myid

上一步在zookeeper-3.4.14的安装目录下新建了zkData存放数据的目录,在zkData/zoo_1, zkData/zoo_2 和zkData/zoo_3下都新建myid文件,文件内容分别为1,2,3(只有一个数字,该数字对应server.1,server.2,server.3)

The entries of the form server.X list the servers that make up the ZooKeeper service. When the server starts up, it knows which server it is by looking for the file myid in the data directory. That file has the contains the server number, in ASCII.

注意点:

Please be aware that setting up multiple servers on a single machine will not create any redundancy. If something were to happen which caused the machine to die, all of the zookeeper servers would be offline. Full redundancy requires that each server have its own machine. It must be a completely separate physical server. Multiple virtual machines on the same physical host are still vulnerable to the complete failure of that host.

在一个机器上配置多个ZooKeeper节点无法保证容错性,因为当这台机器宕机时,所有的ZooKeeper节点都会"死亡",真正的分布式要求一台机器对应一个ZooKeeper节点

HBase 1.3.6 环境搭建

下载地址:

https://hbase.apache.org/downloads.html

为什么选择HBase 1.3.6版本?

图片源自官网的Guide Reference https://hbase.apache.org/book.html

配置hbase-env.sh

export JAVA_HOME=/home/hadoop/app/jdk1.8.0_241

指定java8的安装路径

配置regionservers

hadoop0001

配置本机的主机名即可

配置hbase-site.xml

<configuration> <property> <name>hbase.rootdir</name> <value>hdfs://hadoop0001:9000/hbase</value> </property> <property> <name>hbase.zookeeper.quorum</name> <value>hadoop0001:2181,hadoop0001:2182,hadoop0001:2183</value> </property> <property> <name>hbase.cluster.distributed</name> <value>true</value> </property> <property> <name>hbase.tmp.dir</name> <value>/home/hadoop/app/tmp/hbase</value> </property> <property> <name>hbase.master.info.port</name> <value>60010</value> </property> </configuration>

hbase.rootdir配置HDFS的URI

hbase.zookeeper.quorum配置ZooKeeper集群

hbase.cluster.distributed配置为true,否则只会使用本地文件系统和自带的ZooKeeper,注意,默认值为false

Standalone mode is the default mode.In standalone mode, HBase does not use HDFS — it uses the local filesystem instead — and it runs all HBase daemons and a local ZooKeeper all up in the same JVM.

hbase.tmp.dir配置缓存目录

hbase.master.info.port配置网页监控页面的端口,默认端口为16010

Spark 2.2.0 配置

下载:

https://archive.apache.org/dist/spark/

为什么选择Spark 2.2.0 版本?

Spark版本牵涉到很多,例如上面所讲的Hadoop版本的选择就参考了Spark,另外Kafka和Flume版本的选择也受Spark的影响,在下面的环境配置中再作解释

Spark手动编译

尽管Spark官网给出了预编译版本,但是最好由我们自己进行手动编译,根据我们的生产环境制定我们自己的spark,这里可以参考官网教程和慕课上的一篇手记

http://spark.apache.org/docs/latest/building-spark.html

https://www.imooc.com/article/18419

编译的过程十分漫长,可能长达两到三个小时,需要从maven上拉取依赖jar包,要尽量保证网络的通畅

spark2.2.0默认使用scala2.11.8版本,若要更换,注意在编译时指定其它scala版本,后续的spark版本大多使用scala2.11.12版本,更多的具体信息可在pom.xml文件中查看

Flume 1.6.0环境搭建

下载:

http://flume.apache.org/releases/index.html

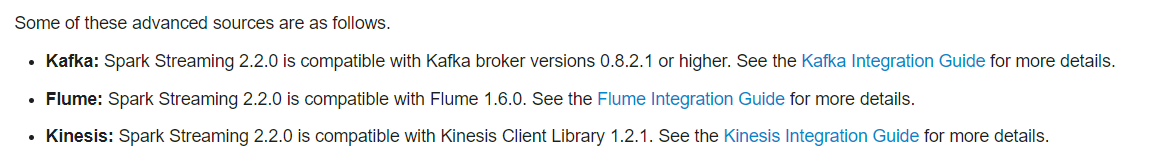

为什么选择Flume1.6.0版本?

Flume版本的选择受到Spark的影响,详见http://spark.apache.org/docs/2.2.0/streaming-programming-guide.html

配置flume-env.sh

export JAVA_HOME=/home/hadoop/app/jdk1.8.0_241

指定java8的安装路径

Kafka 0.8.2.1 环境搭建

下载:

http://kafka.apache.org/downloads

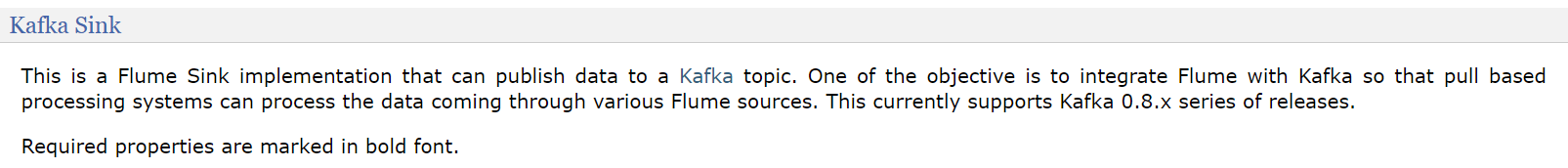

为什么选择Kafka0.8.2.1版本?

Flume版本的选择受到Spark和Flume共同的影响,详见 http://flume.apache.org/releases/content/1.6.0/FlumeUserGuide.html

配置server.properties

############################# Server Basics ############################# # The id of the broker. This must be set to a unique integer for each broker. broker.id=1 ############################# Socket Server Settings ############################# # The port the socket server listens on port=9092 # Hostname the broker will bind to. If not set, the server will bind to all interfaces host.name=hadoop0001 # Hostname the broker will advertise to producers and consumers. If not set, it uses the # value for "host.name" if configured. Otherwise, it will use the value returned from # java.net.InetAddress.getCanonicalHostName(). advertised.host.name=hadoop0001 # The port to publish to ZooKeeper for clients to use. If this is not set, # it will publish the same port that the broker binds to. advertised.port=9092 ############################# Log Basics ############################# # A comma seperated list of directories under which to store log files log.dirs=/home/hadoop/app/tmp/kafka/kafka_log_1 ############################# Zookeeper ############################# # Zookeeper connection string (see zookeeper docs for details). # This is a comma separated host:port pairs, each corresponding to a zk # server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002". # You can also append an optional chroot string to the urls to specify the # root directory for all kafka znodes. zookeeper.connect=hadoop0001:2181,hadoop0001:2182,hadoop0001:2183 ############################# topic ############################# delete.topic.enable = true

这里仅列出需要设置的配置项,可以创建多个这样的配置文件来保证容错性,注意每个配置文件的broker.id,port和advertised.port的值要不同

zookeeper.connect配置项配置ZooKeeper集群,一定要配置,否则会使用Kafka自带的ZooKeeper

delete.topic.enable设置为true时,可以实现完全删除某个topic,可配可不配

启动服务

各个服务的启动命令此处不做赘述

浙公网安备 33010602011771号

浙公网安备 33010602011771号