Deploying a Kafka Cluster on Kubernetes with Helm

This article deploys Kafka with the Bitnami Kafka Helm chart. The chart is widely used and easy to configure, which makes it a convenient way to get Kafka running on a Kubernetes cluster quickly.

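For a production environment, consider the following settings:

- Security protocol for external access: which of PLAINTEXT, SASL_PLAINTEXT, SASL_SSL, or SSL to use for encryption and authentication
- Persistence for data and logs
- How clients outside the Kubernetes cluster reach Kafka: is NodePort suitable, or is a LoadBalancer or Ingress needed?
- Whether to enable the built-in monitoring (Metrics)
- Whether to use Helm to generate resource manifests that kubectl can apply, for offline deployment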

Installing the Kafka cluster with Helm

Add the Helm repository

    helm repo add bitnami https://charts.bitnami.com/bitnami

Update the local chart index

    helm repo update bitnami

Run the install command. The release is named kafka, which is why the resources in the rest of this article are called kafka-controller-0, kafka-controller-headless, and so on.

    helm install kafka bitnami/kafka \
      --namespace app --create-namespace \
      --set replicaCount=3 \
      --set global.imageRegistry="xxx:10086" \
      --set global.defaultStorageClass="nfs-sc" \
      --set externalAccess.enabled=true \
      --set externalAccess.controller.service.type=NodePort \
      --set externalAccess.controller.service.nodePorts[0]='31211' \
      --set externalAccess.controller.service.nodePorts[1]='31212' \
      --set externalAccess.controller.service.nodePorts[2]='31213' \
      --set externalAccess.controller.service.useHostIPs=true
    # Not applied in this deployment, so the listeners keep SASL_PLAINTEXT:
    #   --set listeners.client.protocol=PLAINTEXT \
    #   --set listeners.external.protocol=PLAINTEXT
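The indexed `nodePorts[N]` flags in the command above have to match the number of brokers, which is easy to get wrong by hand. A minimal sketch, assuming a hypothetical helper named `helm_set_flags` (it is not part of Helm or of the Bitnami chart), that derives the `--set` arguments from a nested Python dict:

```python
def helm_set_flags(values, prefix=""):
    """Flatten a nested dict/list into Helm `--set key=value` arguments.

    Hypothetical helper for illustration only. Lists become indexed
    keys such as nodePorts[0], the same syntax the install command uses.
    """
    flags = []
    if isinstance(values, dict):
        for key, value in values.items():
            path = f"{prefix}.{key}" if prefix else key
            flags.extend(helm_set_flags(value, path))
    elif isinstance(values, list):
        for index, value in enumerate(values):
            flags.extend(helm_set_flags(value, f"{prefix}[{index}]"))
    else:
        # Render Python booleans as lowercase true/false
        text = str(values).lower() if isinstance(values, bool) else str(values)
        flags.extend(["--set", f"{prefix}={text}"])
    return flags


# External-access settings taken from the install command
external_access = {
    "externalAccess": {
        "enabled": True,
        "controller": {
            "service": {
                "type": "NodePort",
                "nodePorts": [31211, 31212, 31213],
                "useHostIPs": True,
            }
        },
    }
}

if __name__ == "__main__":
    print(" ".join(helm_set_flags(external_access)))
```

Adding a fourth broker then only means appending one port to the `nodePorts` list.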

Output after installation

    NAME: kafka
    LAST DEPLOYED: Mon Dec 30 09:35:13 2024
    NAMESPACE: app
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None
    NOTES:
    CHART NAME: kafka
    CHART VERSION: 31.1.1
    APP VERSION: 3.9.0

    Did you know there are enterprise versions of the Bitnami catalog? For enhanced secure software supply chain features, unlimited pulls from Docker, LTS support, or application customization, see Bitnami Premium or Tanzu Application Catalog. See https://www.arrow.com/globalecs/na/vendors/bitnami for more information.

    ** Please be patient while the chart is being deployed **

    Kafka can be accessed by consumers via port 9092 on the following DNS name from within your cluster:

        kafka.app.svc.cluster.local

    Each Kafka broker can be accessed by producers via port 9092 on the following DNS name(s) from within your cluster:

        kafka-controller-0.kafka-controller-headless.app.svc.cluster.local:9092
        kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9092
        kafka-controller-2.kafka-controller-headless.app.svc.cluster.local:9092

    The CLIENT listener for Kafka client connections from within your cluster have been configured with the following security settings:
        - SASL authentication

    To connect a client to your Kafka, you need to create the 'client.properties' configuration files with the content below:

    security.protocol=SASL_PLAINTEXT
    sasl.mechanism=SCRAM-SHA-256
    sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required \
        username="user1" \
        password="$(kubectl get secret kafka-user-passwords --namespace app -o jsonpath='{.data.client-passwords}' | base64 -d | cut -d , -f 1)";

    To create a pod that you can use as a Kafka client run the following commands:

        kubectl run kafka-client --restart='Never' --image docker.io/bitnami/kafka:3.9.0-debian-12-r4 --namespace app --command -- sleep infinity
        kubectl cp --namespace app /path/to/client.properties kafka-client:/tmp/client.properties
        kubectl exec --tty -i kafka-client --namespace app -- bash

        PRODUCER:
            kafka-console-producer.sh \
                --producer.config /tmp/client.properties \
                --bootstrap-server kafka.app.svc.cluster.local:9092 \
                --topic test

        CONSUMER:
            kafka-console-consumer.sh \
                --consumer.config /tmp/client.properties \
                --bootstrap-server kafka.app.svc.cluster.local:9092 \
                --topic test \
                --from-beginning

    To connect to your Kafka controller+broker nodes from outside the cluster, follow these instructions:

        Kafka brokers domain: You can get the external node IP from the Kafka configuration file with the following commands (Check the EXTERNAL listener)

            1. Obtain the pod name:

            kubectl get pods --namespace app -l "app.kubernetes.io/name=kafka,app.kubernetes.io/instance=kafka,app.kubernetes.io/component=kafka"

            2. Obtain pod configuration:

            kubectl exec -it KAFKA_POD -- cat /opt/bitnami/kafka/config/server.properties | grep advertised.listeners

        Kafka brokers port: You will have a different node port for each Kafka broker. You can get the list of configured node ports using the command below:

            echo "$(kubectl get svc --namespace app -l "app.kubernetes.io/name=kafka,app.kubernetes.io/instance=kafka,app.kubernetes.io/component=kafka,pod" -o jsonpath='{.items[*].spec.ports[0].nodePort}' | tr ' ' '\n')"

    The EXTERNAL listener for Kafka client connections from within your cluster have been configured with the following settings:
        - SASL authentication

    To connect a client to your Kafka, you need to create the 'client.properties' configuration files with the content below:

    security.protocol=SASL_PLAINTEXT
    sasl.mechanism=SCRAM-SHA-256
    sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required \
        username="user1" \
        password="$(kubectl get secret kafka-user-passwords --namespace app -o jsonpath='{.data.client-passwords}' | base64 -d | cut -d , -f 1)";

    WARNING: There are "resources" sections in the chart not set. Using "resourcesPreset" is not recommended for production. For production installations, please set the following values according to your workload needs:
      - controller.resources
    +info https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/
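The NOTES obtain the client password with `kubectl get secret ... | base64 -d | cut -d , -f 1`. The decoding step can be sketched in Python as follows. The function name `first_client_password` is an assumption made for illustration, and the sample value is the `client-passwords` entry of the `kafka-user-passwords` Secret from this deployment:

```python
import base64


def first_client_password(encoded):
    """Decode a base64 `client-passwords` value and return the first entry.

    Mirrors `base64 -d | cut -d , -f 1`: the Secret holds a
    comma-separated list with one password per client user.
    """
    decoded = base64.b64decode(encoded).decode("utf-8")
    return decoded.split(",")[0]


if __name__ == "__main__":
    # Value of .data.client-passwords in the kafka-user-passwords Secret
    print(first_client_password("ZnZJS3dURlY0Yw=="))
```

The result is the password of `user1`, the first (and here only) client user.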

Check the resources after installation

    [root@master-1 ~]# kubectl get svc,pod -n app
    NAME                                  TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
    service/kafka                         ClusterIP   10.0.206.158   <none>        9092/TCP,9095/TCP            39m
    service/kafka-controller-0-external   NodePort    10.0.40.112    <none>        9094:31211/TCP               39m
    service/kafka-controller-1-external   NodePort    10.0.101.43    <none>        9094:31212/TCP               39m
    service/kafka-controller-2-external   NodePort    10.0.59.143    <none>        9094:31213/TCP               39m
    service/kafka-controller-headless     ClusterIP   None           <none>        9094/TCP,9092/TCP,9093/TCP   39m

    NAME                           READY   STATUS    RESTARTS   AGE
    pod/backend-84885dc4fb-pzkjr   1/1     Running   8          4d16h
    pod/kafka-controller-0         1/1     Running   0          39m
    pod/kafka-controller-1         1/1     Running   0          39m
    pod/kafka-controller-2         1/1     Running   0          39m

Inspect the dynamically generated Kafka configuration file

    [root@master-1 ~]# kubectl exec -it -n app kafka-controller-0 -- cat /opt/bitnami/kafka/config/server.properties
    # Listeners configuration
    # Listeners
    listeners=CLIENT://:9092,INTERNAL://:9094,EXTERNAL://:9095,CONTROLLER://:9093
    # Advertised listener addresses
    advertised.listeners=CLIENT://kafka-controller-0.kafka-controller-headless.app.svc.cluster.local:9092,INTERNAL://kafka-controller-0.kafka-controller-headless.app.svc.cluster.local:9094,EXTERNAL://192.168.43.130:31211
    # Security protocol used by each listener
    listener.security.protocol.map=CLIENT:SASL_PLAINTEXT,INTERNAL:SASL_PLAINTEXT,CONTROLLER:SASL_PLAINTEXT,EXTERNAL:SASL_PLAINTEXT
    # KRaft process roles
    # Roles this node can take
    process.roles=controller,broker
    # Unique identifier of the node within the cluster
    node.id=0
    # Name of the controller listener
    controller.listener.names=CONTROLLER
    # Cluster nodes
    controller.quorum.voters=0@kafka-controller-0.kafka-controller-headless.app.svc.cluster.local:9093,1@kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9093,2@kafka-controller-2.kafka-controller-headless.app.svc.cluster.local:9093
    # Kraft Controller listener SASL settings
    sasl.mechanism.controller.protocol=PLAIN
    listener.name.controller.sasl.enabled.mechanisms=PLAIN
    listener.name.controller.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="controller_user" password="GiJMaJvHpA" user_controller_user="GiJMaJvHpA";
    # Kafka data logs directory
    log.dir=/bitnami/kafka/data
    # Kafka application logs directory
    logs.dir=/opt/bitnami/kafka/logs

    # Common Kafka Configuration

    sasl.enabled.mechanisms=PLAIN,SCRAM-SHA-256,SCRAM-SHA-512
    # Interbroker configuration
    inter.broker.listener.name=INTERNAL
    sasl.mechanism.inter.broker.protocol=PLAIN
    # Listeners SASL JAAS configuration
    listener.name.client.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required user_user1="fvIKwTFV4c";
    listener.name.client.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;
    listener.name.client.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;
    listener.name.internal.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="inter_broker_user" password="qbTBaq59N5" user_inter_broker_user="qbTBaq59N5" user_user1="fvIKwTFV4c";
    listener.name.internal.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="inter_broker_user" password="qbTBaq59N5";
    listener.name.internal.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="inter_broker_user" password="qbTBaq59N5";
    listener.name.external.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required user_user1="fvIKwTFV4c";
    listener.name.external.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;
    listener.name.external.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;
    # End of SASL JAAS configuration

    # Custom Kafka Configuration

Running the same command against kafka-controller-1 and kafka-controller-2 returns the same file, including the same credentials. Only node.id and advertised.listeners differ.

On kafka-controller-1:

    advertised.listeners=CLIENT://kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9092,INTERNAL://kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9094,EXTERNAL://192.168.43.131:31212
    node.id=1

On kafka-controller-2:

    advertised.listeners=CLIENT://kafka-controller-2.kafka-controller-headless.app.svc.cluster.local:9092,INTERNAL://kafka-controller-2.kafka-controller-headless.app.svc.cluster.local:9094,EXTERNAL://192.168.43.129:31213
    node.id=2
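Clients outside the cluster are told to connect to the EXTERNAL entry of `advertised.listeners`, so it is worth checking that this entry holds a node IP and the expected NodePort. A minimal sketch that splits the property value into its listeners; the function name `parse_advertised_listeners` is an assumption, and the sample string is the value reported by kafka-controller-0:

```python
def parse_advertised_listeners(value):
    """Map each listener name to its advertised (host, port) pair."""
    listeners = {}
    for entry in value.split(","):
        name, _, address = entry.partition("://")
        # rpartition keeps any dots or colons in the host part intact
        host, _, port = address.rpartition(":")
        listeners[name] = (host, int(port))
    return listeners


# advertised.listeners as reported by kafka-controller-0
NODE0 = (
    "CLIENT://kafka-controller-0.kafka-controller-headless.app.svc.cluster.local:9092,"
    "INTERNAL://kafka-controller-0.kafka-controller-headless.app.svc.cluster.local:9094,"
    "EXTERNAL://192.168.43.130:31211"
)

if __name__ == "__main__":
    for name, (host, port) in parse_advertised_listeners(NODE0).items():
        print(f"{name:9s} {host}:{port}")
```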

Configuration parameters explained

The annotated file below was taken from a deployment in the default namespace without external access, so it has no EXTERNAL listener and its passwords differ from the ones above.

    kubectl exec kafka-controller-1 -- cat /opt/bitnami/kafka/config/server.properties
    # Listeners configuration
    # Three listeners: 9092 for clients, 9094 for inter-broker communication and replication, 9093 for controller communication
    listeners=CLIENT://:9092,INTERNAL://:9094,CONTROLLER://:9093
    # Addresses this node advertises to clients and to other brokers
    advertised.listeners=CLIENT://kafka-controller-1.kafka-controller-headless.default.svc.cluster.local:9092,INTERNAL://kafka-controller-1.kafka-controller-headless.default.svc.cluster.local:9094
    listener.security.protocol.map=CLIENT:SASL_PLAINTEXT,INTERNAL:SASL_PLAINTEXT,CONTROLLER:SASL_PLAINTEXT
    # KRaft process roles
    # Each Kafka instance acts both as a broker (serving producer/consumer requests) and as a controller (managing cluster metadata)
    process.roles=controller,broker
    # Unique identifier of this node
    node.id=1
    # Name of the listener used for controller communication
    controller.listener.names=CONTROLLER
    # Cluster node list: the voters in the controller quorum, here a 3-node KRaft cluster
    controller.quorum.voters=0@kafka-controller-0.kafka-controller-headless.default.svc.cluster.local:9093,1@kafka-controller-1.kafka-controller-headless.default.svc.cluster.local:9093,2@kafka-controller-2.kafka-controller-headless.default.svc.cluster.local:9093
    # Kraft Controller listener SASL settings
    # Authentication and security: the controller listener uses the PLAIN mechanism
    sasl.mechanism.controller.protocol=PLAIN
    listener.name.controller.sasl.enabled.mechanisms=PLAIN
    listener.name.controller.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="controller_user" password="CzRtwrUu9j" user_controller_user="CzRtwrUu9j";
    # Kafka data logs directory
    log.dir=/bitnami/kafka/data
    # Kafka application logs directory
    logs.dir=/opt/bitnami/kafka/logs

    # Common Kafka Configuration
    # PLAIN and SCRAM-SHA authentication are enabled
    sasl.enabled.mechanisms=PLAIN,SCRAM-SHA-256,SCRAM-SHA-512
    # Interbroker configuration
    inter.broker.listener.name=INTERNAL
    sasl.mechanism.inter.broker.protocol=PLAIN
    # Listeners SASL JAAS configuration
    # Client listener (CLIENT)
    listener.name.client.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required user_user1="TqWBAZmAA4";
    listener.name.client.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;
    listener.name.client.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;
    # Internal listener, used for inter-broker communication: PLAIN
    listener.name.internal.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="inter_broker_user" password="5CrHWCrXPJ" user_inter_broker_user="5CrHWCrXPJ" user_user1="TqWBAZmAA4";
    # Internal listener: SCRAM-SHA
    listener.name.internal.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="inter_broker_user" password="5CrHWCrXPJ";
    listener.name.internal.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="inter_broker_user" password="5CrHWCrXPJ";
    # End of SASL JAAS configuration

    # Custom Kafka Configuration
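The `controller.quorum.voters` value packs each voter as `id@host:port`. A small sketch, with the assumed helper names `parse_quorum_voters` and `majority`, that unpacks the list and computes how many voters must be available for the KRaft quorum to make progress (a simple majority):

```python
def parse_quorum_voters(value):
    """Parse `id@host:port` entries into (node_id, host, port) tuples."""
    voters = []
    for entry in value.split(","):
        node_id, _, address = entry.partition("@")
        host, _, port = address.rpartition(":")
        voters.append((int(node_id), host, int(port)))
    return voters


def majority(voter_count):
    """Smallest number of voters that forms a majority."""
    return voter_count // 2 + 1


# controller.quorum.voters from the annotated file (default namespace)
VOTERS = (
    "0@kafka-controller-0.kafka-controller-headless.default.svc.cluster.local:9093,"
    "1@kafka-controller-1.kafka-controller-headless.default.svc.cluster.local:9093,"
    "2@kafka-controller-2.kafka-controller-headless.default.svc.cluster.local:9093"
)

if __name__ == "__main__":
    voters = parse_quorum_voters(VOTERS)
    print(f"{len(voters)} voters, majority is {majority(len(voters))}")
```

With three voters the majority is two, so the cluster tolerates the loss of one controller.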

Testing the connection

Producer client code:

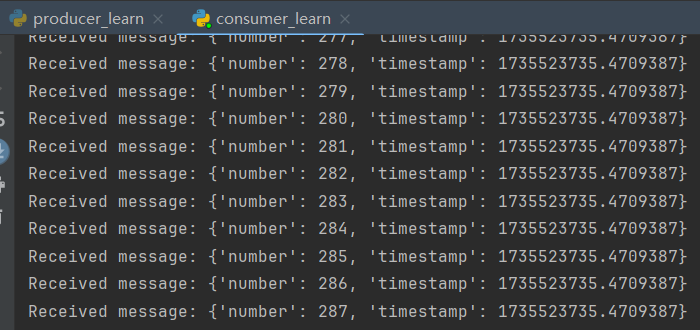

    # pip install kafka-python
    # pip install kafka-python-ng -i https://pypi.tuna.tsinghua.edu.cn/simple
    from kafka import KafkaProducer
    import json
    import time


    def json_serializer(data):
        """Encode a Python object as UTF-8 JSON bytes."""
        return json.dumps(data).encode('utf-8')


    producer = KafkaProducer(
        # External NodePort address of each broker
        bootstrap_servers=['192.168.43.129:31213', '192.168.43.130:31211', '192.168.43.131:31212'],
        # value_serializer=lambda v: json.dumps(v).encode('utf-8'),
        # key_serializer=lambda k: k.encode('utf-8') if k else None,
        sasl_plain_username='user1',
        sasl_plain_password='fvIKwTFV4c',
        sasl_mechanism='PLAIN',
        security_protocol='SASL_PLAINTEXT',
        value_serializer=json_serializer,
    )

    for i in range(1, 288):
        message = {'number': i, 'timestamp': time.time()}
        try:
            producer.send("test1", value=message)  # no key, so only the value is passed
            print(f"Sent message: {message}")
            # time.sleep(1)
        except Exception as e:
            print(f"Error sending message: {e}")

    # Wait until all buffered messages are delivered, then release the connection
    producer.flush()
    producer.close()

Consumer client code:

    from kafka import KafkaConsumer
    import json


    def json_deserializer(data):
        """Decode UTF-8 JSON bytes into a Python object."""
        return json.loads(data.decode('utf-8'))


    consumer = KafkaConsumer(
        'test1',
        bootstrap_servers=['192.168.43.129:31213', '192.168.43.130:31211', '192.168.43.131:31212'],
        auto_offset_reset='earliest',
        enable_auto_commit=True,  # commit offsets automatically
        group_id='test-group',
        # value_deserializer=lambda v: json.loads(v.decode('utf-8')),
        sasl_plain_username='user1',
        sasl_plain_password='fvIKwTFV4c',
        sasl_mechanism='PLAIN',
        security_protocol='SASL_PLAINTEXT',
        value_deserializer=json_deserializer,
    )

    try:
        for message in consumer:
            print(f"Received message: {message.value}")
    except Exception as e:
        print(f"Error consuming messages: {e}")

As shown, the producer sends messages and the consumer receives them.
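The chart's NOTES describe the connection in Java `client.properties` form, while the Python clients take keyword arguments such as `security_protocol` and `sasl_plain_username`. The following sketch translates one form into the other. The function name `kafka_python_kwargs` and the sample password are assumptions for illustration, and whether a given mechanism (for example SCRAM-SHA-256) is accepted depends on the installed kafka-python version:

```python
import re


def kafka_python_kwargs(properties_text):
    """Translate Java-style client properties into kafka-python keyword arguments."""
    # Join continuation lines that end with a backslash
    joined = re.sub(r"\\\s*\n\s*", " ", properties_text)
    props = {}
    for line in joined.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()

    kwargs = {
        "security_protocol": props["security.protocol"],
        "sasl_mechanism": props["sasl.mechanism"],
    }
    # Pull the credentials out of the JAAS login module options
    jaas = props.get("sasl.jaas.config", "")
    user = re.search(r'username="([^"]*)"', jaas)
    password = re.search(r'password="([^"]*)"', jaas)
    if user and password:
        kwargs["sasl_plain_username"] = user.group(1)
        kwargs["sasl_plain_password"] = password.group(1)
    return kwargs


# Same layout as the client.properties in the NOTES; the password is a placeholder
SAMPLE = """\
security.protocol=SASL_PLAINTEXT
sasl.mechanism=SCRAM-SHA-256
sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required \\
    username="user1" \\
    password="example-password";
"""

if __name__ == "__main__":
    print(kafka_python_kwargs(SAMPLE))
```

The resulting dict can be unpacked into the client constructor, for example `KafkaProducer(bootstrap_servers=[...], **kwargs)`.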

Exporting a manifest that kubectl can apply

helm get manifest -n app kafka >kafka-external.yaml
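The exported file is a multi-document YAML stream in which every document starts with a `# Source:` comment naming its template. A minimal standard-library sketch, assuming a hypothetical helper `summarize_manifest`, that lists which template produced which kind of resource:

```python
import re


def summarize_manifest(text):
    """Return (source template, kind) pairs for each document in a Helm manifest."""
    summary = []
    for document in re.split(r"^---\s*$", text, flags=re.MULTILINE):
        source = re.search(r"^# Source: (.+)$", document, flags=re.MULTILINE)
        # Only an unindented `kind:` is the resource kind of the document
        kind = re.search(r"^kind: (\S+)", document, flags=re.MULTILINE)
        if source and kind:
            summary.append((source.group(1).strip(), kind.group(1)))
    return summary


# Two shortened documents in the same layout as the exported manifest
SAMPLE = """\
---
# Source: kafka/templates/networkpolicy.yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: kafka
---
# Source: kafka/templates/svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: kafka
"""

if __name__ == "__main__":
    for source, kind in summarize_manifest(SAMPLE):
        print(f"{kind:15s} {source}")
```

To run it on the real export, read `kafka-external.yaml` into a string and pass that string to `summarize_manifest`.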

View the manifest

    ---
    # Source: kafka/templates/networkpolicy.yaml
    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: kafka
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
    spec:
      podSelector:
        matchLabels:
          app.kubernetes.io/instance: kafka
          app.kubernetes.io/name: kafka
      policyTypes:
        - Ingress
        - Egress
      egress:
        - {}
      ingress:
        # Allow client connections
        - ports:
            - port: 9092
            - port: 9094
            - port: 9093
            - port: 9095
    ---
    # Source: kafka/templates/broker/pdb.yaml
    apiVersion: policy/v1beta1
    kind: PodDisruptionBudget
    metadata:
      name: kafka-broker
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
        app.kubernetes.io/component: broker
        app.kubernetes.io/part-of: kafka
    spec:
      maxUnavailable: 1
      selector:
        matchLabels:
          app.kubernetes.io/instance: kafka
          app.kubernetes.io/name: kafka
          app.kubernetes.io/component: broker
          app.kubernetes.io/part-of: kafka
    ---
    # Source: kafka/templates/controller-eligible/pdb.yaml
    apiVersion: policy/v1beta1
    kind: PodDisruptionBudget
    metadata:
      name: kafka-controller
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
        app.kubernetes.io/component: controller-eligible
        app.kubernetes.io/part-of: kafka
    spec:
      maxUnavailable: 1
      selector:
        matchLabels:
          app.kubernetes.io/instance: kafka
          app.kubernetes.io/name: kafka
          app.kubernetes.io/component: controller-eligible
          app.kubernetes.io/part-of: kafka
    ---
    # Source: kafka/templates/provisioning/serviceaccount.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: kafka-provisioning
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
    automountServiceAccountToken: false
    ---
    # Source: kafka/templates/rbac/serviceaccount.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: kafka
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
        app.kubernetes.io/component: kafka
    automountServiceAccountToken: false
    ---
    # Source: kafka/templates/secrets.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: kafka-user-passwords
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
    type: Opaque
    data:
      client-passwords: "ZnZJS3dURlY0Yw=="
      system-user-password: "ZnZJS3dURlY0Yw=="
      inter-broker-password: "cWJUQmFxNTlONQ=="
      controller-password: "R2lKTWFKdkhwQQ=="
    ---
    # Source: kafka/templates/secrets.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: kafka-kraft-cluster-id
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
    type: Opaque
    data:
      kraft-cluster-id: "RzlOS0xlOUZuU1prZ0ZyRXFMdDgyZA=="
    ---
    # Source: kafka/templates/controller-eligible/configmap.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kafka-controller-configuration
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
        app.kubernetes.io/component: controller-eligible
        app.kubernetes.io/part-of: kafka
    data:
      server.properties: |-
        # Listeners configuration
        listeners=CLIENT://:9092,INTERNAL://:9094,EXTERNAL://:9095,CONTROLLER://:9093
        advertised.listeners=CLIENT://advertised-address-placeholder:9092,INTERNAL://advertised-address-placeholder:9094
        listener.security.protocol.map=CLIENT:SASL_PLAINTEXT,INTERNAL:SASL_PLAINTEXT,CONTROLLER:SASL_PLAINTEXT,EXTERNAL:SASL_PLAINTEXT
        # KRaft process roles
        process.roles=controller,broker
        #node.id=
        controller.listener.names=CONTROLLER
        controller.quorum.voters=0@kafka-controller-0.kafka-controller-headless.app.svc.cluster.local:9093,1@kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9093,2@kafka-controller-2.kafka-controller-headless.app.svc.cluster.local:9093
        # Kraft Controller listener SASL settings
        sasl.mechanism.controller.protocol=PLAIN
        listener.name.controller.sasl.enabled.mechanisms=PLAIN
        listener.name.controller.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="controller_user" password="controller-password-placeholder" user_controller_user="controller-password-placeholder";
        # Kafka data logs directory
        log.dir=/bitnami/kafka/data
        # Kafka application logs directory
        logs.dir=/opt/bitnami/kafka/logs

        # Common Kafka Configuration

        sasl.enabled.mechanisms=PLAIN,SCRAM-SHA-256,SCRAM-SHA-512
        # Interbroker configuration
        inter.broker.listener.name=INTERNAL
        sasl.mechanism.inter.broker.protocol=PLAIN
        # Listeners SASL JAAS configuration
        listener.name.client.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required user_user1="password-placeholder-0";
        listener.name.client.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;
        listener.name.client.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;
        listener.name.internal.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="inter_broker_user" password="interbroker-password-placeholder" user_inter_broker_user="interbroker-password-placeholder" user_user1="password-placeholder-0";
        listener.name.internal.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="inter_broker_user" password="interbroker-password-placeholder";
        listener.name.internal.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="inter_broker_user" password="interbroker-password-placeholder";
        listener.name.external.plain.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required user_user1="password-placeholder-0";
        listener.name.external.scram-sha-256.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;
        listener.name.external.scram-sha-512.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required;
        # End of SASL JAAS configuration

        # Custom Kafka Configuration
    ---
    # Source: kafka/templates/scripts-configmap.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kafka-scripts
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
    data:
      kafka-init.sh: |-
        #!/bin/bash

        set -o errexit
        set -o nounset
        set -o pipefail

        error(){
          local message="${1:?missing message}"
          echo "ERROR: ${message}"
          exit 1
        }

        retry_while() {
            local -r cmd="${1:?cmd is missing}"
            local -r retries="${2:-12}"
            local -r sleep_time="${3:-5}"
            local return_value=1

            read -r -a command <<< "$cmd"
            for ((i = 1 ; i <= retries ; i+=1 )); do
                "${command[@]}" && return_value=0 && break
                sleep "$sleep_time"
            done
            return $return_value
        }

        replace_in_file() {
            local filename="${1:?filename is required}"
            local match_regex="${2:?match regex is required}"
            local substitute_regex="${3:?substitute regex is required}"
            local posix_regex=${4:-true}

            local result

            # We should avoid using 'sed in-place' substitutions
            # 1) They are not compatible with files mounted from ConfigMap(s)
            # 2) We found incompatibility issues with Debian10 and "in-place" substitutions
            local -r del=$'\001' # Use a non-printable character as a 'sed' delimiter to avoid issues
            if [[ $posix_regex = true ]]; then
                result="$(sed -E "s${del}${match_regex}${del}${substitute_regex}${del}g" "$filename")"
            else
                result="$(sed "s${del}${match_regex}${del}${substitute_regex}${del}g" "$filename")"
            fi
            echo "$result" > "$filename"
        }

        kafka_conf_set() {
            local file="${1:?missing file}"
            local key="${2:?missing key}"
            local value="${3:?missing value}"

            # Check if the value was set before
            if grep -q "^[#\\s]*$key\s*=.*" "$file"; then
                # Update the existing key
                replace_in_file "$file" "^[#\\s]*${key}\s*=.*" "${key}=${value}" false
            else
                # Add a new key
                printf '\n%s=%s' "$key" "$value" >>"$file"
            fi
        }

        replace_placeholder() {
          local placeholder="${1:?missing placeholder value}"
          local password="${2:?missing password value}"
          local -r del=$'\001' # Use a non-printable character as a 'sed' delimiter to avoid issues with delimiter symbols in sed string
          sed -i "s${del}$placeholder${del}$password${del}g" "$KAFKA_CONFIG_FILE"
        }

        append_file_to_kafka_conf() {
            local file="${1:?missing source file}"
            local conf="${2:?missing kafka conf file}"

            cat "$1" >> "$2"
        }

        configure_external_access() {
          # Configure external hostname
          if [[ -f "/shared/external-host.txt" ]]; then
            host=$(cat "/shared/external-host.txt")
          elif [[ -n "${EXTERNAL_ACCESS_HOST:-}" ]]; then
            host="$EXTERNAL_ACCESS_HOST"
          elif [[ -n "${EXTERNAL_ACCESS_HOSTS_LIST:-}" ]]; then
            read -r -a hosts <<<"$(tr ',' ' ' <<<"${EXTERNAL_ACCESS_HOSTS_LIST}")"
            host="${hosts[$POD_ID]}"
          elif [[ "$EXTERNAL_ACCESS_HOST_USE_PUBLIC_IP" =~ ^(yes|true)$ ]]; then
            host=$(curl -s https://ipinfo.io/ip)
          else
            error "External access hostname not provided"
          fi

          # Configure external port
          if [[ -f "/shared/external-port.txt" ]]; then
            port=$(cat "/shared/external-port.txt")
          elif [[ -n "${EXTERNAL_ACCESS_PORT:-}" ]]; then
            if [[ "${EXTERNAL_ACCESS_PORT_AUTOINCREMENT:-}" =~ ^(yes|true)$ ]]; then
              port="$((EXTERNAL_ACCESS_PORT + POD_ID))"
            else
              port="$EXTERNAL_ACCESS_PORT"
            fi
          elif [[ -n "${EXTERNAL_ACCESS_PORTS_LIST:-}" ]]; then
            read -r -a ports <<<"$(tr ',' ' ' <<<"${EXTERNAL_ACCESS_PORTS_LIST}")"
            port="${ports[$POD_ID]}"
          else
            error "External access port not provided"
          fi
          # Configure Kafka advertised listeners
          sed -i -E "s|^(advertised\.listeners=\S+)$|\1,EXTERNAL://${host}:${port}|" "$KAFKA_CONFIG_FILE"
        }

        configure_kafka_sasl() {

          # Replace placeholders with passwords
          replace_placeholder "interbroker-password-placeholder" "$KAFKA_INTER_BROKER_PASSWORD"
          replace_placeholder "controller-password-placeholder" "$KAFKA_CONTROLLER_PASSWORD"
          read -r -a passwords <<<"$(tr ',;' ' ' <<<"${KAFKA_CLIENT_PASSWORDS:-}")"
          for ((i = 0; i < ${#passwords[@]}; i++)); do
              replace_placeholder "password-placeholder-${i}\"" "${passwords[i]}\""
          done
        }

        export KAFKA_CONFIG_FILE=/config/server.properties
        cp /configmaps/server.properties $KAFKA_CONFIG_FILE

        # Get pod ID and role, last and second last fields in the pod name respectively
        POD_ID=$(echo "$MY_POD_NAME" | rev | cut -d'-' -f 1 | rev)
        POD_ROLE=$(echo "$MY_POD_NAME" | rev | cut -d'-' -f 2 | rev)

        # Configure node.id and/or broker.id
        if [[ -f "/bitnami/kafka/data/meta.properties" ]]; then
            if grep -q "broker.id" /bitnami/kafka/data/meta.properties; then
              ID="$(grep "broker.id" /bitnami/kafka/data/meta.properties | awk -F '=' '{print $2}')"
              kafka_conf_set "$KAFKA_CONFIG_FILE" "node.id" "$ID"
            else
              ID="$(grep "node.id" /bitnami/kafka/data/meta.properties | awk -F '=' '{print $2}')"
              kafka_conf_set "$KAFKA_CONFIG_FILE" "node.id" "$ID"
            fi
        else
            ID=$((POD_ID + KAFKA_MIN_ID))
            kafka_conf_set "$KAFKA_CONFIG_FILE" "node.id" "$ID"
        fi
        replace_placeholder "advertised-address-placeholder" "${MY_POD_NAME}.kafka-${POD_ROLE}-headless.app.svc.cluster.local"
        if [[ "${EXTERNAL_ACCESS_ENABLED:-false}" =~ ^(yes|true)$ ]]; then
          configure_external_access
        fi
        configure_kafka_sasl
        if [ -f /secret-config/server-secret.properties ]; then
          append_file_to_kafka_conf /secret-config/server-secret.properties $KAFKA_CONFIG_FILE
        fi
    ---
    # Source: kafka/templates/controller-eligible/svc-external-access.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kafka-controller-0-external
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
        app.kubernetes.io/component: kafka
        pod: kafka-controller-0
    spec:
      type: NodePort
      publishNotReadyAddresses: false
      ports:
        - name: tcp-kafka
          port: 9094
          nodePort: 31211
          targetPort: external
      selector:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/name: kafka
        app.kubernetes.io/part-of: kafka
        app.kubernetes.io/component: controller-eligible
        statefulset.kubernetes.io/pod-name: kafka-controller-0
    ---
    # Source: kafka/templates/controller-eligible/svc-external-access.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kafka-controller-1-external
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
        app.kubernetes.io/component: kafka
        pod: kafka-controller-1
    spec:
      type: NodePort
      publishNotReadyAddresses: false
      ports:
        - name: tcp-kafka
          port: 9094
          nodePort: 31212
          targetPort: external
      selector:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/name: kafka
        app.kubernetes.io/part-of: kafka
        app.kubernetes.io/component: controller-eligible
        statefulset.kubernetes.io/pod-name: kafka-controller-1
    ---
    # Source: kafka/templates/controller-eligible/svc-external-access.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kafka-controller-2-external
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
        app.kubernetes.io/component: kafka
        pod: kafka-controller-2
    spec:
      type: NodePort
      publishNotReadyAddresses: false
      ports:
        - name: tcp-kafka
          port: 9094
          nodePort: 31213
          targetPort: external
      selector:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/name: kafka
        app.kubernetes.io/part-of: kafka
        app.kubernetes.io/component: controller-eligible
        statefulset.kubernetes.io/pod-name: kafka-controller-2
    ---
    # Source: kafka/templates/controller-eligible/svc-headless.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kafka-controller-headless
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
        app.kubernetes.io/component: controller-eligible
        app.kubernetes.io/part-of: kafka
    spec:
      type: ClusterIP
      clusterIP: None
      publishNotReadyAddresses: true
      ports:
        - name: tcp-interbroker
          port: 9094
          protocol: TCP
          targetPort: interbroker
        - name: tcp-client
          port: 9092
          protocol: TCP
          targetPort: client
        - name: tcp-controller
          protocol: TCP
          port: 9093
          targetPort: controller
      selector:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/name: kafka
        app.kubernetes.io/component: controller-eligible
        app.kubernetes.io/part-of: kafka
    ---
    # Source: kafka/templates/svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kafka
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
        app.kubernetes.io/component: kafka
    spec:
      type: ClusterIP
      sessionAffinity: None
      ports:
        - name: tcp-client
          port: 9092
          protocol: TCP
          targetPort: client
          nodePort: null
        - name: tcp-external
          port: 9095
          protocol: TCP
          targetPort: external
      selector:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/name: kafka
        app.kubernetes.io/part-of: kafka
    ---
    # Source: kafka/templates/controller-eligible/statefulset.yaml
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: kafka-controller
      namespace: "app"
      labels:
        app.kubernetes.io/instance: kafka
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: kafka
        app.kubernetes.io/version: 3.9.0
        helm.sh/chart: kafka-31.1.1
        app.kubernetes.io/component: controller-eligible
        app.kubernetes.io/part-of: kafka
    spec:
      podManagementPolicy:
Parallel replicas: 3 selector: matchLabels: app.kubernetes.io/instance: kafka app.kubernetes.io/name: kafka app.kubernetes.io/component: controller-eligible app.kubernetes.io/part-of: kafka serviceName: kafka-controller-headless updateStrategy: type: RollingUpdate template: metadata: labels: app.kubernetes.io/instance: kafka app.kubernetes.io/managed-by: Helm app.kubernetes.io/name: kafka app.kubernetes.io/version: 3.9.0 helm.sh/chart: kafka-31.1.1 app.kubernetes.io/component: controller-eligible app.kubernetes.io/part-of: kafka annotations: checksum/configuration: 92994ed94d5ac5c9fe9d5042cd90e5124603c72695daa231e789e36db6c2bb2f checksum/passwords-secret: b8e485a8832bfa206553705980b48e1a154dd8727da6ff66f930f4392594c5f0 spec: automountServiceAccountToken: false hostNetwork: false hostIPC: false affinity: podAffinity: podAntiAffinity: preferredDuringSchedulingIgnoredDuringExecution: - podAffinityTerm: labelSelector: matchLabels: app.kubernetes.io/instance: kafka app.kubernetes.io/name: kafka app.kubernetes.io/component: controller-eligible topologyKey: kubernetes.io/hostname weight: 1 nodeAffinity: securityContext: fsGroup: 1001 fsGroupChangePolicy: Always seccompProfile: type: RuntimeDefault supplementalGroups: [] sysctls: [] serviceAccountName: kafka enableServiceLinks: true initContainers: - name: kafka-init image: docker.io/bitnami/kafka:3.9.0-debian-12-r4 imagePullPolicy: IfNotPresent securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true runAsGroup: 1001 runAsNonRoot: true runAsUser: 1001 seLinuxOptions: {} resources: limits: {} requests: {} command: - /bin/bash args: - -ec - | /scripts/kafka-init.sh env: - name: BITNAMI_DEBUG value: "false" - name: MY_POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: KAFKA_VOLUME_DIR value: "/bitnami/kafka" - name: KAFKA_MIN_ID value: "0" - name: EXTERNAL_ACCESS_ENABLED value: "true" - name: HOST_IP valueFrom: fieldRef: fieldPath: status.hostIP - name: 
EXTERNAL_ACCESS_HOST value: "$(HOST_IP)" - name: EXTERNAL_ACCESS_PORTS_LIST value: "31211,31212,31213" - name: KAFKA_CLIENT_USERS value: "user1" - name: KAFKA_CLIENT_PASSWORDS valueFrom: secretKeyRef: name: kafka-user-passwords key: client-passwords - name: KAFKA_INTER_BROKER_USER value: "inter_broker_user" - name: KAFKA_INTER_BROKER_PASSWORD valueFrom: secretKeyRef: name: kafka-user-passwords key: inter-broker-password - name: KAFKA_CONTROLLER_USER value: "controller_user" - name: KAFKA_CONTROLLER_PASSWORD valueFrom: secretKeyRef: name: kafka-user-passwords key: controller-password volumeMounts: - name: data mountPath: /bitnami/kafka - name: kafka-config mountPath: /config - name: kafka-configmaps mountPath: /configmaps - name: kafka-secret-config mountPath: /secret-config - name: scripts mountPath: /scripts - name: tmp mountPath: /tmp containers: - name: kafka image: docker.io/bitnami/kafka:3.9.0-debian-12-r4 imagePullPolicy: "IfNotPresent" securityContext: allowPrivilegeEscalation: false capabilities: drop: - ALL readOnlyRootFilesystem: true runAsGroup: 1001 runAsNonRoot: true runAsUser: 1001 seLinuxOptions: {} env: - name: BITNAMI_DEBUG value: "false" - name: KAFKA_HEAP_OPTS value: "-Xmx1024m -Xms1024m" - name: KAFKA_KRAFT_CLUSTER_ID valueFrom: secretKeyRef: name: kafka-kraft-cluster-id key: kraft-cluster-id - name: KAFKA_KRAFT_BOOTSTRAP_SCRAM_USERS value: "true" - name: KAFKA_CLIENT_USERS value: "user1" - name: KAFKA_CLIENT_PASSWORDS valueFrom: secretKeyRef: name: kafka-user-passwords key: client-passwords - name: KAFKA_INTER_BROKER_USER value: "inter_broker_user" - name: KAFKA_INTER_BROKER_PASSWORD valueFrom: secretKeyRef: name: kafka-user-passwords key: inter-broker-password - name: KAFKA_CONTROLLER_USER value: "controller_user" - name: KAFKA_CONTROLLER_PASSWORD valueFrom: secretKeyRef: name: kafka-user-passwords key: controller-password ports: - name: controller containerPort: 9093 - name: client containerPort: 9092 - name: interbroker containerPort: 9094 - 
name: external containerPort: 9095 livenessProbe: failureThreshold: 3 initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 5 exec: command: - pgrep - -f - kafka readinessProbe: failureThreshold: 6 initialDelaySeconds: 5 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 5 tcpSocket: port: "controller" startupProbe: failureThreshold: 15 initialDelaySeconds: 30 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 tcpSocket: port: "controller" resources: limits: cpu: 750m ephemeral-storage: 2Gi memory: 768Mi requests: cpu: 500m ephemeral-storage: 50Mi memory: 512Mi volumeMounts: - name: data mountPath: /bitnami/kafka - name: logs mountPath: /opt/bitnami/kafka/logs - name: kafka-config mountPath: /opt/bitnami/kafka/config/server.properties subPath: server.properties - name: tmp mountPath: /tmp volumes: - name: kafka-configmaps configMap: name: kafka-controller-configuration - name: kafka-secret-config emptyDir: {} - name: kafka-config emptyDir: {} - name: tmp emptyDir: {} - name: scripts configMap: name: kafka-scripts defaultMode: 493 - name: logs emptyDir: {} volumeClaimTemplates: - apiVersion: v1 kind: PersistentVolumeClaim metadata: name: data spec: accessModes: - "ReadWriteOnce" resources: requests: storage: "8Gi"
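
The init script above builds each pod's external advertised listener from the pod ordinal: the host comes from EXTERNAL_ACCESS_HOST (the node IP here) and the port is the entry of EXTERNAL_ACCESS_PORTS_LIST at index POD_ID. The following is a minimal sketch of that lookup, not the chart's own code: the function name `external_listener` and the IP `192.168.1.10` are hypothetical, and it uses `cut` instead of the bash arrays used in kafka-init.sh.

```shell
# Sketch: derive the EXTERNAL advertised listener for one pod.
# external_listener and the sample IP are illustrative names/values only.
external_listener() (
    pod_name="${1:?missing pod name}"
    host="${2:?missing host}"
    ports_list="${3:?missing ports list}"

    # The pod ordinal is the last dash-separated field of the pod name
    pod_id="${pod_name##*-}"
    # Ordinal N maps to entry N of the comma-separated list (cut is 1-based)
    port="$(printf '%s' "$ports_list" | cut -d, -f "$((pod_id + 1))")"

    echo "EXTERNAL://${host}:${port}"
)

external_listener "kafka-controller-1" "192.168.1.10" "31211,31212,31213"
# prints: EXTERNAL://192.168.1.10:31212
```

With the values rendered in the StatefulSet above, kafka-controller-0, -1 and -2 would therefore advertise NodePorts 31211, 31212 and 31213 respectively, which matches the three per-pod external Services.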

Command to produce messages to Kafka

/opt/bitnami/kafka/bin/kafka-console-producer.sh \
  --broker-list kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9092 \
  --topic test \
  --producer-property security.protocol=SASL_PLAINTEXT \
  --producer-property sasl.mechanism=PLAIN \
  --producer-property sasl.jaas.config='org.apache.kafka.common.security.plain.PlainLoginModule required username="user1" password="TqWBAZmAA4";'

Command to consume messages

/opt/bitnami/kafka/bin/kafka-console-consumer.sh \
  --bootstrap-server kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9092 \
  --topic test1 \
  --group test-group1 \
  --consumer-property security.protocol=SASL_PLAINTEXT \
  --consumer-property sasl.mechanism=PLAIN \
  --consumer-property sasl.jaas.config='org.apache.kafka.common.security.plain.PlainLoginModule required username="user1" password="TqWBAZmAA4";' \
  --from-beginning

Create the client authentication file as instructed by the chart's install notes

To connect a client to your Kafka, you need to create the 'client.properties' configuration files with the content below:

security.protocol=SASL_PLAINTEXT
sasl.mechanism=SCRAM-SHA-256
sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required \
    username="user1" \
    password="$(kubectl get secret kafka-user-passwords --namespace app -o jsonpath='{.data.client-passwords}' | base64 -d | cut -d , -f 1)";
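
Note that the `$(kubectl ...)` expression in the notes is only expanded by a shell; pasted literally into a properties file it stays as plain text. One way to handle this is to resolve the password first and then write the file. The sketch below assumes a helper named `write_client_properties` (a hypothetical name, not part of the chart) and writes the JAAS entry on a single line.

```shell
# Sketch: write client.properties from an already-resolved user and password.
# write_client_properties is an illustrative helper, not provided by the chart.
write_client_properties() (
    user="${1:?missing user}"
    password="${2:?missing password}"
    out="${3:-client.properties}"

    # The file contains a credential, so keep it private to the current user
    umask 077
    cat >"$out" <<EOF
security.protocol=SASL_PLAINTEXT
sasl.mechanism=SCRAM-SHA-256
sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="${user}" password="${password}";
EOF
)

# Example usage (requires kubectl access to the cluster, so left commented out):
# password="$(kubectl get secret kafka-user-passwords --namespace app -o jsonpath='{.data.client-passwords}' | base64 -d | cut -d , -f 1)"
# write_client_properties user1 "$password" client.properties
```

The resulting file can then be passed to the Kafka CLI tools through `--command-config`, `--producer.config`, or `--consumer.config`.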

Create the file and copy it to the data directories of the other two brokers

cat client.properties
security.protocol=SASL_PLAINTEXT
sasl.mechanism=SCRAM-SHA-256
sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required \
    username="user1" \
    password="fvIKwTFV4c";
#password="$(kubectl get secret kafka-user-passwords --namespace app -o jsonpath='{.data.client-passwords}' | base64 -d | cut -d , -f 1)";

cp client.properties ../../app-data-kafka-controller-1-pvc-d1aee723-960d-4394-b393-58b0b7341267/data/
cp client.properties ../../app-data-kafka-controller-2-pvc-b542d73c-f2cb-4bd2-8bb7-9b6ff2da1905/data/

# List topics

/opt/bitnami/kafka/bin/kafka-topics.sh --bootstrap-server kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9092 --command-config /bitnami/kafka/data/client.properties --list
__consumer_offsets
test
test1

# Describe topics (test1 is passed without --topic here, and the output below covers every topic)
/opt/bitnami/kafka/bin/kafka-topics.sh --bootstrap-server kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9092 --command-config /bitnami/kafka/data/client.properties --describe test1
Topic: test TopicId: wj_p6enjS7-3RJb1ywGxog PartitionCount: 1 ReplicationFactor: 1 Configs:
    Topic: test Partition: 0 Leader: 0 Replicas: 0 Isr: 0 Elr: LastKnownElr:
Topic: test1 TopicId: ywdLzJMJQTi5nNoCHGnjCA PartitionCount: 1 ReplicationFactor: 1 Configs:
    Topic: test1 Partition: 0 Leader: 2 Replicas: 2 Isr: 2 Elr: LastKnownElr:
Topic: __consumer_offsets TopicId: NockRxS9Q9qTKeMG5MW6KA PartitionCount: 50 ReplicationFactor: 3 Configs: compression.type=producer,cleanup.policy=compact,segment.bytes=104857600
    Topic: __consumer_offsets Partition: 0 Leader: 2 Replicas: 2,0,1 Isr: 2,0,1 Elr: LastKnownElr:
    Topic: __consumer_offsets Partition: 1 Leader: 0 Replicas: 0,1,2 Isr: 0,1,2 Elr: LastKnownElr:
    Topic: __consumer_offsets Partition: 2 Leader: 1 Replicas: 1,2,0 Isr: 1,2,0 Elr: LastKnownElr:
    Topic: __consumer_offsets Partition: 3 Leader: 2 Replicas: 2,1,0 Isr: 2,1,0 Elr: LastKnownElr:
    ...
    Topic: __consumer_offsets Partition: 48 Leader: 2 Replicas: 2,0,1 Isr: 2,0,1 Elr: LastKnownElr:
    Topic: __consumer_offsets Partition: 49 Leader: 0 Replicas: 0,1,2 Isr: 0,1,2 Elr: LastKnownElr:
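
In the describe output, a partition is healthy when its Isr list is as long as its Replicas list. As a rough sketch of how that check could be scripted, the function below (the name `under_replicated` is hypothetical) reads describe output on stdin and prints every partition whose in-sync set is smaller than its replica set. It assumes the whitespace-separated `Key: value` layout shown above.

```shell
# Sketch: list partitions whose ISR is smaller than the replica set.
# under_replicated is an illustrative helper; it parses `--describe` output from stdin.
under_replicated() {
    awk '{
        topic = ""; part = ""; replicas = ""; isr = ""
        for (i = 1; i < NF; i++) {
            if ($i == "Topic:") topic = $(i + 1)
            else if ($i == "Partition:") part = $(i + 1)
            else if ($i == "Replicas:") replicas = $(i + 1)
            # An empty ISR is followed directly by the next key (e.g. "Elr:")
            else if ($i == "Isr:" && $(i + 1) !~ /:$/) isr = $(i + 1)
        }
        # Topic summary lines have no "Partition:" field, so skip them
        if (part == "") next
        n_rep = split(replicas, r, ",")
        n_isr = split(isr, s, ",")
        if (n_isr < n_rep) print topic, part, "replicas=" replicas, "isr=" isr
    }'
}

# Example usage (inside a broker pod, so left commented out):
# /opt/bitnami/kafka/bin/kafka-topics.sh --bootstrap-server kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9092 \
#   --command-config /bitnami/kafka/data/client.properties --describe | under_replicated
```

For the output shown above this prints nothing, since every Isr list matches its Replicas list. `kafka-topics.sh --describe` also accepts an `--under-replicated-partitions` flag that performs a similar filter on the broker side.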

# Produce messages
/opt/bitnami/kafka/bin/kafka-console-producer.sh \
  --broker-list kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9092 \
  --topic test \
  --producer-property security.protocol=SASL_PLAINTEXT \
  --producer-property sasl.mechanism=PLAIN \
  --producer-property sasl.jaas.config='org.apache.kafka.common.security.plain.PlainLoginModule required username="user1" password="TqWBAZmAA4";'

# Using the configuration file
/opt/bitnami/kafka/bin/kafka-console-producer.sh \
  --broker-list kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9092 \
  --topic test \
  --producer.config /bitnami/kafka/data/client.properties

# Consume messages
/opt/bitnami/kafka/bin/kafka-console-consumer.sh \
  --bootstrap-server kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9092 \
  --topic test1 \
  --group test-group1 \
  --consumer-property security.protocol=SASL_PLAINTEXT \
  --consumer-property sasl.mechanism=PLAIN \
  --consumer-property sasl.jaas.config='org.apache.kafka.common.security.plain.PlainLoginModule required username="user1" password="TqWBAZmAA4";' \
  --from-beginning

# Using the configuration file
/opt/bitnami/kafka/bin/kafka-console-consumer.sh \
  --bootstrap-server kafka-controller-1.kafka-controller-headless.app.svc.cluster.local:9092 \
  --topic test1 \
  --group test-group1 \
  --consumer.config /bitnami/kafka/data/client.properties \
  --from-beginning

The more I learn, the more I realize how little I know.