ELK: Writing Logstash Logs to Redis and Kafka

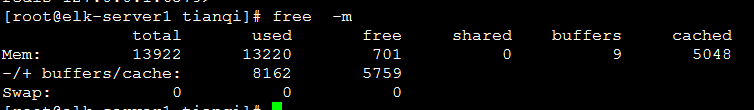

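Install Redis on a dedicated server and use it purely as a log cache. This suits scenarios where web servers produce large volumes of logs. Keep an eye on memory usage: in the example shown here, the server's memory is almost exhausted because Redis is holding a large amount of data that has not yet been read.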

Deploying Redis

    [root@linux-host2 ~]# cd /usr/local/src/
    [root@linux-host2 src]# wget http://download.redis.io/releases/redis-3.2.8.tar.gz
    [root@linux-host2 src]# tar xvf redis-3.2.8.tar.gz
    [root@linux-host2 src]# ln -sv /usr/local/src/redis-3.2.8 /usr/local/redis
    ‘/usr/local/redis’ -> ‘/usr/local/src/redis-3.2.8’
    [root@linux-host2 src]# cd /usr/local/redis/deps
    [root@linux-host2 deps]# yum install gcc
    [root@linux-host2 deps]# make geohash-int hiredis jemalloc linenoise lua
    [root@linux-host2 deps]# cd ..
    [root@linux-host2 redis]# make
    [root@linux-host2 redis]# vim redis.conf
    [root@linux-host2 redis]# grep "^[a-zA-Z]" redis.conf
    bind 0.0.0.0
    protected-mode yes
    port 6379
    tcp-backlog 511
    timeout 0
    tcp-keepalive 300
    daemonize yes
    supervised no
    pidfile /var/run/redis_6379.pid
    loglevel notice
    logfile ""
    databases 16
    save ""
    rdbcompression no   # whether to compress RDB files; Redis here only decouples services and absorbs peaks
    rdbchecksum no      # whether to checksum RDB files

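The Logstash instance on the Tomcat server collects the Tomcat access logs and writes them to the Redis server. A second Logstash instance then reads the data back out of Redis and writes it to the Elasticsearch server. The configurations that follow authenticate with the password 123456, so Redis needs a matching requirepass setting; that directive does not appear in the redis.conf listing above.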

Official documentation: https://www.elastic.co/guide/en/logstash/current/plugins-outputs-redis.html

1. Logstash collects logs and writes them to Redis

    input {
      file {
        path => "/usr/local/tomcat/logs/tomcat_access_log.*.log"
        type => "tomcat-accesslog-1512"
        start_position => "beginning"   # read each file from the beginning
        stat_interval => "2"            # interval (seconds) between checks for new log data
        codec => "json"                 # parse the JSON-formatted log lines
      }
      tcp {
        port => 7800                    # collect logs over TCP
        mode => "server"
        type => "tcplog-1512"
      }
    }

    output {
      if [type] == "tomcat-accesslog-1512" {
        redis {
          data_type => "list"
          key => "tomcat-accesslog-1512"
          host => "192.168.15.12"
          port => "6379"
          db => "0"
          password => "123456"
        }
      }
      if [type] == "tcplog-1512" {
        redis {
          data_type => "list"
          key => "tcplog-1512"
          host => "192.168.15.12"
          port => "6379"
          db => "1"                     # write different logs to different databases
          password => "123456"
        }
      }
    }

Verify that Redis contains the data
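One way to run this check from a script is to ask Redis for the length of the list that Logstash writes to. The following is a minimal sketch, assuming the third-party redis-py package is installed; the helper names `connect`, `pending_events`, and `peek_events` are hypothetical and not part of the original setup. The host, port, database, password, and key are taken from the Logstash output configuration above.

```python
def connect(host="192.168.15.12", port=6379, db=0, password="123456"):
    """Open a connection using the values from the Logstash redis output (assumed reachable)."""
    import redis  # third-party (pip install redis), imported lazily

    return redis.Redis(host=host, port=port, db=db, password=password,
                       decode_responses=True)


def pending_events(client, key):
    """Return how many events are still queued in the Redis list `key` (LLEN)."""
    return client.llen(key)


def peek_events(client, key, count=3):
    """Return up to `count` of the oldest queued events without removing them (LRANGE).

    With data_type => "list", Logstash appends with RPUSH, so index 0 is the oldest entry.
    """
    return client.lrange(key, 0, count - 1)


# Example usage against the server from this article:
#   client = connect(db=0)
#   print(pending_events(client, "tomcat-accesslog-1512"))
#   for raw in peek_events(client, "tomcat-accesslog-1512"):
#       print(raw)
```

A non-zero length for `tomcat-accesslog-1512` in db 0 (or `tcplog-1512` in db 1) suggests that the shipping Logstash instance is writing events and that nothing has consumed them yet.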

Logstash reads the data from Redis and writes it to the ES cluster

    input {
      redis {
        data_type => "list"
        key => "tomcat-accesslog-1512"
        host => "192.168.15.12"
        port => "6379"
        db => "0"
        password => "123456"
        codec => "json"                 # parse events as JSON
      }
      redis {
        data_type => "list"
        key => "tcplog-1512"
        host => "192.168.15.12"
        port => "6379"
        db => "1"
        password => "123456"
      }
    }

    output {
      if [type] == "tomcat-accesslog-1512" {
        elasticsearch {
          hosts => ["192.168.15.11:9200"]
          index => "logstash-tomcat1512-accesslog-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "tcplog-1512" {
        elasticsearch {
          hosts => ["192.168.15.11:9200"]
          index => "logstash-tcplog1512-%{+YYYY.MM.dd}"
        }
      }
    }
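The output section above routes each event by its `type` field and expands `%{+YYYY.MM.dd}` from the event's `@timestamp`, which Logstash keeps in UTC. The sketch below imitates that routing in plain Python to show which index a given event would land in. It is an illustration only, not how Logstash is implemented; `INDEX_PREFIX_BY_TYPE` and `target_index` are hypothetical names.

```python
from datetime import datetime, timezone

# Mirrors the two conditionals in the output section above.
INDEX_PREFIX_BY_TYPE = {
    "tomcat-accesslog-1512": "logstash-tomcat1512-accesslog-",
    "tcplog-1512": "logstash-tcplog1512-",
}


def target_index(event):
    """Return the Elasticsearch index name for `event`, or None if no conditional matches."""
    prefix = INDEX_PREFIX_BY_TYPE.get(event.get("type"))
    if prefix is None:
        return None  # no matching `if [type] == ...` branch, so the event is not indexed
    # Accept ISO 8601 timestamps with a trailing "Z" or an explicit offset.
    stamp = datetime.fromisoformat(event["@timestamp"].replace("Z", "+00:00"))
    # The date suffix is computed in UTC, not in the server's local time zone.
    return prefix + stamp.astimezone(timezone.utc).strftime("%Y.%m.%d")


print(target_index({"type": "tcplog-1512", "@timestamp": "2022-05-16T08:30:00.000Z"}))
```

Because the date comes from UTC, an event logged shortly after midnight local time on a server in UTC+8 still falls into the previous day's index.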

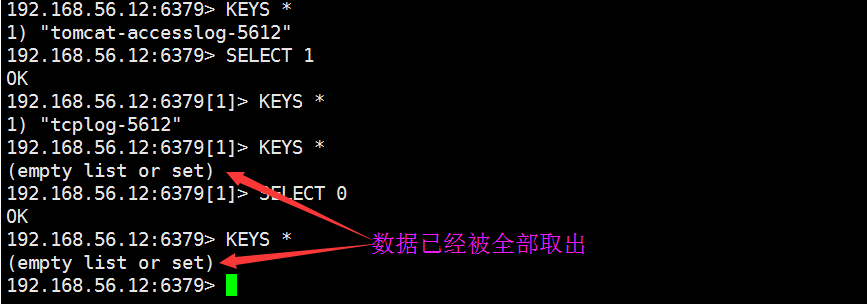

Verify that the data has been read out of Redis
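Once the consuming Logstash instance is running, the list should shrink to zero. A minimal polling sketch follows, assuming a client object that exposes `llen` in the way redis-py's `redis.Redis` does; `wait_until_drained` is a hypothetical helper, and the timeout and interval values are arbitrary.

```python
import time


def wait_until_drained(client, key, timeout=30.0, interval=1.0, sleep=time.sleep):
    """Poll LLEN on `key` until the list is empty.

    Returns True if the list drained within roughly `timeout` seconds of waiting,
    False otherwise. `sleep` is injectable so the loop can be exercised without delays.
    """
    waited = 0.0
    while True:
        if client.llen(key) == 0:
            return True
        if waited >= timeout:
            return False
        sleep(interval)
        waited += interval


# Example usage (requires redis-py and the server from this article):
#   import redis
#   client = redis.Redis(host="192.168.15.12", port=6379, db=0, password="123456")
#   print(wait_until_drained(client, "tomcat-accesslog-1512"))
```

If this returns False while the shipper keeps writing, the consumer is probably not keeping up or not connected, which is the situation that leads to the memory pressure described at the start of this article.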

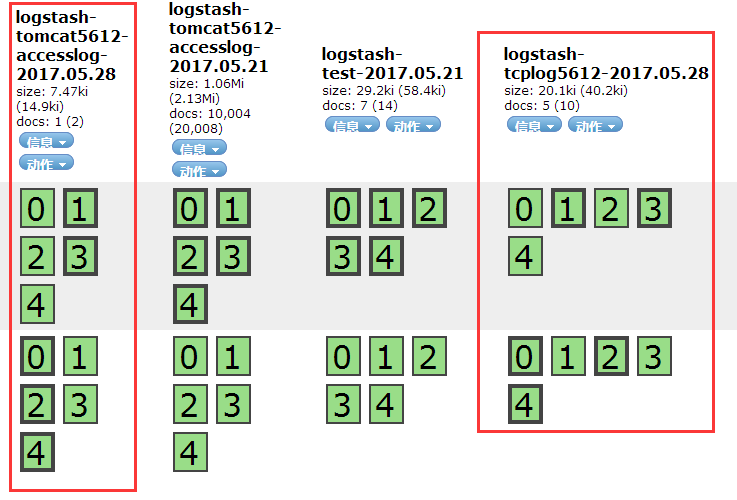

Verify the data in the head plugin

2. Logstash collects logs and writes them to Kafka

2.1 Configuration for collecting a single Nginx log file

    input {
      file {
        path => "/var/log/nginx/access.log"
        start_position => "beginning"
        type => "nginx-accesslog-1512"
        codec => "json"                 # the log lines are JSON-encoded
      }
    }

    output {
      if [type] == "nginx-accesslog-1512" {
        kafka {
          bootstrap_servers => "192.168.15.11:9092"   # Kafka server address
          topic_id => "nginx-accesslog-1512"          # topic to write to
          codec => "json"
        }
      }
    }
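Before wiring up the consuming side, it can help to confirm that events actually reach the topic. The sketch below reads a few messages from the beginning of the topic and decodes them as JSON. It assumes the third-party kafka-python package is installed; `decode_events` and `sample_topic` are hypothetical helper names, and the 5-second timeout is an arbitrary choice. The broker address and topic come from the configuration above.

```python
import json


def decode_events(raw_values):
    """Parse Kafka message values (bytes) that Logstash wrote with codec => "json"."""
    events = []
    for raw in raw_values:
        try:
            events.append(json.loads(raw.decode("utf-8")))
        except ValueError:
            # Covers both invalid UTF-8 and invalid JSON; skip such payloads.
            continue
    return events


def sample_topic(topic="nginx-accesslog-1512", servers="192.168.15.11:9092", limit=5):
    """Read up to `limit` messages from the start of `topic` and return them as dicts."""
    from kafka import KafkaConsumer  # third-party (pip install kafka-python), imported lazily

    consumer = KafkaConsumer(
        topic,
        bootstrap_servers=servers,
        auto_offset_reset="earliest",  # start from the oldest retained message
        enable_auto_commit=False,      # leave committed offsets untouched
        consumer_timeout_ms=5000,      # stop iterating after 5 s without new messages
    )
    try:
        values = []
        for message in consumer:
            values.append(message.value)
            if len(values) >= limit:
                break
        return decode_events(values)
    finally:
        consumer.close()


# Example usage (requires a reachable broker):
#   for event in sample_topic():
#       print(event.get("type"), event.get("@timestamp"))
```

Each decoded event should carry `type` set to `nginx-accesslog-1512`, which is the field the consuming Logstash instance uses to choose the index.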

Configure Logstash to read the logs from Kafka

    input {
      kafka {
        bootstrap_servers => "192.168.15.11:9092"
        topics => "nginx-accesslog-1512"
        codec => "json"
        consumer_threads => 1
        #decorate_events => true
      }
    }

    output {
      if [type] == "nginx-accesslog-1512" {
        elasticsearch {
          hosts => ["192.168.15.12:9200"]
          index => "nginx-accesslog-1512-%{+YYYY.MM.dd}"
        }
      }
    }

2.2 Using Logstash to collect multiple log files and write them to Kafka

    input {
      file {
        path => "/var/log/nginx/access.log"
        start_position => "beginning"
        type => "nginx-accesslog-1512"
        codec => "json"
      }
      file {
        path => "/var/log/messages"
        start_position => "beginning"
        type => "system-log-1512"
      }
    }

    output {
      if [type] == "nginx-accesslog-1512" {
        kafka {
          bootstrap_servers => "192.168.15.11:9092"
          topic_id => "nginx-accesslog-1512"
          batch_size => 5
          codec => "json"
        }
      }
      if [type] == "system-log-1512" {
        kafka {
          bootstrap_servers => "192.168.15.11:9092"
          topic_id => "system-log-1512"
          batch_size => 5
          codec => "json"               # use JSON encoding, because Logstash converts collected events to JSON
        }
      }
    }

Configure Logstash to read the Nginx and system logs from Kafka

    input {
      kafka {
        bootstrap_servers => "192.168.15.11:9092"
        topics => "nginx-accesslog-1512"
        codec => "json"
        consumer_threads => 1
        decorate_events => true
      }
      kafka {
        bootstrap_servers => "192.168.15.11:9092"
        topics => "system-log-1512"
        consumer_threads => 1
        decorate_events => true
        codec => "json"
      }
    }

    output {
      if [type] == "nginx-accesslog-1512" {
        elasticsearch {
          hosts => ["192.168.15.11:9200"]
          index => "nginx-accesslog-1512-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "system-log-1512" {
        elasticsearch {
          hosts => ["192.168.15.12:9200"]
          index => "system-log-1512-%{+YYYY.MM.dd}"
        }
      }
    }

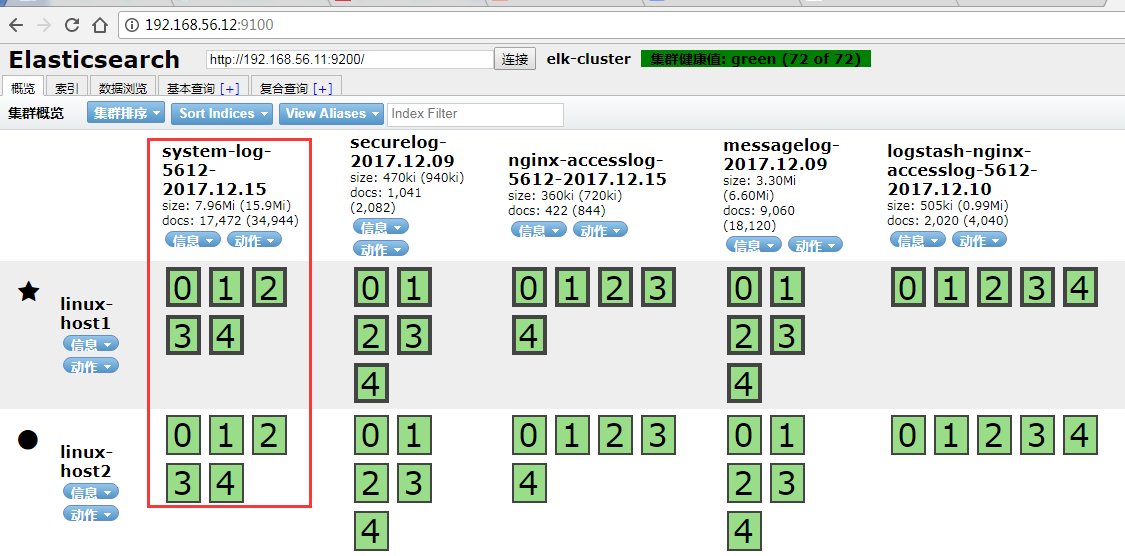

Verify the data in the head plugin

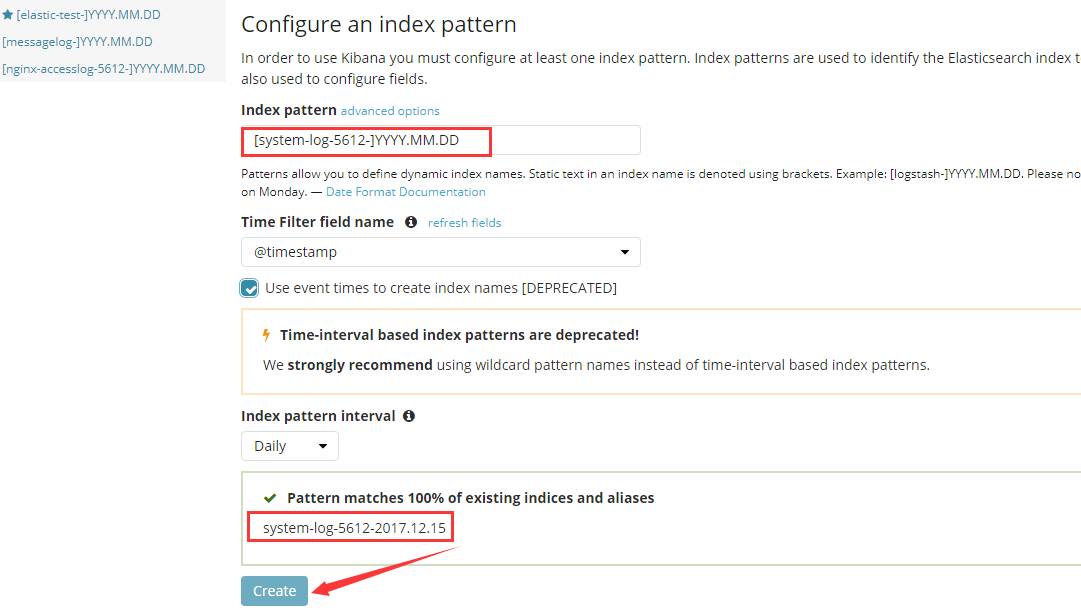

Add the index in Kibana
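Because the Elasticsearch outputs create one index per day, the index added in Kibana is normally a wildcard pattern such as `nginx-accesslog-1512-*` rather than a single index name. The sketch below shows which daily indices such a pattern would cover. It uses Python's `fnmatchcase` as a stand-in for Kibana's wildcard matching, which is an approximation; the index names in the example are made up for illustration, and `indices_for_pattern` is a hypothetical helper.

```python
from fnmatch import fnmatchcase


def indices_for_pattern(pattern, index_names):
    """Return the index names that a `*` wildcard pattern would select, sorted."""
    return sorted(name for name in index_names if fnmatchcase(name, pattern))


# Illustrative index names following the naming scheme used in this article.
existing = [
    "nginx-accesslog-1512-2022.05.15",
    "nginx-accesslog-1512-2022.05.16",
    "system-log-1512-2022.05.16",
]
print(indices_for_pattern("nginx-accesslog-1512-*", existing))
```

One pattern per log type (`nginx-accesslog-1512-*`, `system-log-1512-*`) keeps the two data sets separate in Kibana while still picking up each new daily index automatically.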

Verify the data in Kibana

This article comes from cnblogs (博客园), author: 不会跳舞的胖子. When reposting, please cite the original link: https://www.cnblogs.com/rtnb/p/16275960.html

Zhejiang Public Network Security Registration No. 33010602011771
